1、集群环境

Hadoop HA 集群规划

hadoop1 cluster1 nameNode HMaster

hadoop2 cluster1 nameNodeStandby ZooKeeper ResourceManager HMaster

hadoop3 cluster2 nameNode ZooKeeper

hadoop4 cluster2 nameNodeStandby ZooKeeper ResourceManagerStandBy

hadoop5 DataNode NodeManager HRegionServer

hadoop6 DataNode NodeManager HRegionServer

hadoop7 DataNode NodeManager HRegionServer

2、安装

版本:版本为hbase-0.99.2-bin.tar

解压配置环境变量

export HBASE_HOME=/home/hadoop/apps/hbase

export PATH=$PATH:$HBASE_HOME/bin

配置文件:在conf目录中加入core-site.xml,hdfs-site.xml文件,该文件保持和hadoop的配置文件一致

3、详细配置文件

core-site.xml 和当前hadoop集群的配置文件保持一致

1 <?xml version="1.0" encoding="UTF-8"?> 2 <?xml-stylesheet type="text/xsl" href="configuration.xsl"?> 3 <!-- 4 Licensed under the Apache License, Version 2.0 (the "License"); 5 you may not use this file except in compliance with the License. 6 You may obtain a copy of the License at 7 8 http://www.apache.org/licenses/LICENSE-2.0 9 10 Unless required by applicable law or agreed to in writing, software 11 distributed under the License is distributed on an "AS IS" BASIS, 12 WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. 13 See the License for the specific language governing permissions and 14 limitations under the License. See accompanying LICENSE file. 15 --> 16 17 <!-- Put site-specific property overrides in this file. --> 18 19 <configuration> 20 <property> 21 <name>fs.defaultFS</name> 22 <value>hdfs://cluster1</value> 23 </property> 24 <property> 25 <name>hadoop.tmp.dir</name> 26 <value>/home/hadoop/hadoop/tmp</value> 27 </property> 28 <property> 29 <name>ha.zookeeper.quorum</name> 30 <value>hadoop2:2181,hadoop3:2181,hadoop4:2181</value> 31 </property> 32 </configuration>

hdfs-site.xml和当前hadoop集群的配置文件保持一致

1 <?xml version="1.0" encoding="UTF-8"?> 2 <?xml-stylesheet type="text/xsl" href="configuration.xsl"?> 3 <!-- 4 Licensed under the Apache License, Version 2.0 (the "License"); 5 you may not use this file except in compliance with the License. 6 You may obtain a copy of the License at 7 8 http://www.apache.org/licenses/LICENSE-2.0 9 10 Unless required by applicable law or agreed to in writing, software 11 distributed under the License is distributed on an "AS IS" BASIS, 12 WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. 13 See the License for the specific language governing permissions and 14 limitations under the License. See accompanying LICENSE file. 15 --> 16 17 <!-- Put site-specific property overrides in this file. --> 18 19 <configuration> 20 21 <property> 22 <name>dfs.replication</name> 23 <value>3</value> 24 </property> 25 <!--指定DataNode存储block的副本数量。默认值是3个,我们现在有4个DataNode,该值不大于4即可。--> 26 <property> 27 <name>dfs.nameservices</name> 28 <value>cluster1,cluster2</value> 29 </property> 30 <!--使用federation时,使用了2个HDFS集群。这里抽象出两个NameService实际上就是给这2个HDFS集群起了个别名。名字可以随便起,相互不重复即可 --> 31 32 <!-- 以下是集群cluster1的配置信息--> 33 <property> 34 <name>dfs.ha.namenodes.cluster1</name> 35 <value>nn1,nn2</value> 36 </property> 37 <!-- 指定NameService是cluster1时的namenode有哪些,这里的值也是逻辑名称,名字随便起,相互不重复即可 --> 38 <property> 39 <name>dfs.namenode.rpc-address.cluster1.nn1</name> 40 <value>hadoop1:9000</value> 41 </property> 42 <!-- 指定hadoop1的RPC地址 --> 43 <property> 44 <name>dfs.namenode.http-address.cluster1.nn1</name> 45 <value>hadoop1:50070</value> 46 </property> 47 <!-- 指定hadoop1的http地址 --> 48 <property> 49 <name>dfs.namenode.rpc-address.cluster1.nn2</name> 50 <value>hadoop2:9000</value> 51 </property> 52 <!-- 指定hadoop2的RPC地址 --> 53 <property> 54 <name>dfs.namenode.http-address.cluster1.nn2</name> 55 <value>hadoop2:50070</value> 56 </property> 57 <!-- 指定hadoop2的http地址 --> 58 <property> 59 <name>dfs.namenode.shared.edits.dir</name> 60 <value>qjournal://hadoop2:8485;hadoop3:8485;hadoop4:8485/cluster1</value> 61 </property> 62 <!-- 指定cluster1的两个NameNode共享edits文件目录时,使用的JournalNode集群信息,在cluster1中配置此信息 --> 63 <property> 64 <name>dfs.ha.automatic-failover.enabled.cluster1</name> 65 <value>true</value> 66 </property> 67 <!-- 指定cluster1是否启动自动故障恢复,即当NameNode出故障时,是否自动切换到另一台NameNode --> 68 <property> 69 <name>dfs.client.failover.proxy.provider.cluster1</name> 70 <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value> 71 </property> 72 <!-- 指定cluster1出故障时,哪个实现类负责执行故障切换 --> 73 74 <!-- 以下是集群cluster2的配置信息--> 75 <property> 76 <name>dfs.ha.namenodes.cluster2</name> 77 <value>nn3,nn4</value> 78 </property> 79 <!-- 指定NameService是cluster2时的namenode有哪些,这里的值也是逻辑名称,名字随便起,相互不重复即可 --> 80 <property> 81 <name>dfs.namenode.rpc-address.cluster2.nn3</name> 82 <value>hadoop3:9000</value> 83 </property> 84 <!-- 指定hadoop3的RPC地址 --> 85 <property> 86 <name>dfs.namenode.http-address.cluster2.nn3</name> 87 <value>hadoop3:50070</value> 88 </property> 89 <!-- 指定hadoop3的http地址 --> 90 <property> 91 <name>dfs.namenode.rpc-address.cluster2.nn4</name> 92 <value>hadoop4:9000</value> 93 </property> 94 <!-- 指定hadoop4的RPC地址 --> 95 <property> 96 <name>dfs.namenode.http-address.cluster2.nn4</name> 97 <value>hadoop4:50070</value> 98 </property> 99 <!-- 指定hadoop4的http地址 --> 100 <!-- 101 <property> 102 <name>dfs.namenode.shared.edits.dir</name> 103 <value>qjournal://hadoop2:8485;hadoop3:8485;hadoop4:8485/cluster2</value> 104 </property> 105 --> 106 <!-- 指定cluster2的两个NameNode共享edits文件目录时,使用的JournalNode集群信息,在cluster2中配置此信息 --> 107 <property> 108 <name>dfs.ha.automatic-failover.enabled.cluster2</name> 109 <value>true</value> 110 </property> 111 <!-- 指定cluster2是否启动自动故障恢复,即当NameNode出故障时,是否自动切换到另一台NameNode --> 112 <property> 113 <name>dfs.client.failover.proxy.provider.cluster2</name> 114 <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value> 115 </property> 116 <!-- 指定cluster2出故障时,哪个实现类负责执行故障切换 --> 117 <property> 118 <name>dfs.journalnode.edits.dir</name> 119 <value>/home/hadoop/hadoop/jndir</value> 120 </property> 121 <!-- 指定JournalNode集群在对NameNode的目录进行共享时,自己存储数据的磁盘路径 --> 122 <property> 123 <name>dfs.datanode.data.dir</name> 124 <value>/home/hadoop/hadoop/datadir</value> 125 </property> 126 <property> 127 <name>dfs.namenode.name.dir</name> 128 <value>/home/hadoop/hadoop/namedir</value> 129 </property> 130 <property> 131 <name>dfs.ha.fencing.methods</name> 132 <value>sshfence</value> 133 </property> 134 <!-- 一旦需要NameNode切换,使用ssh方式进行操作 --> 135 <property> 136 <name>dfs.ha.fencing.ssh.private-key-files</name> 137 <value>/home/hadoop/.ssh/id_rsa</value> 138 </property> 139 <!-- 一旦需要NameNode切换,使用ssh方式进行操作 --> 140 </configuration>

hbase-env.sh

#

#/**

# * Copyright 2007 The Apache Software Foundation

# *

# * Licensed to the Apache Software Foundation (ASF) under one

# * or more contributor license agreements. See the NOTICE file

# * distributed with this work for additional information

# * regarding copyright ownership. The ASF licenses this file

# * to you under the Apache License, Version 2.0 (the

# * "License"); you may not use this file except in compliance

# * with the License. You may obtain a copy of the License at

# *

# * http://www.apache.org/licenses/LICENSE-2.0

# *

# * Unless required by applicable law or agreed to in writing, software

# * distributed under the License is distributed on an "AS IS" BASIS,

# * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# * See the License for the specific language governing permissions and

# * limitations under the License.

# */

# Set environment variables here.

# This script sets variables multiple times over the course of starting an hbase process,

# so try to keep things idempotent unless you want to take an even deeper look

# into the startup scripts (bin/hbase, etc.)

# The java implementation to use. Java 1.6 required.

export JAVA_HOME=/usr/local/jdk1.8.0_151

# Extra Java CLASSPATH elements. Optional.这行代码是错的,需要可以修改为下面的形式

#export HBASE_CLASSPATH=/home/hadoop/hbase/conf

export JAVA_CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

# The maximum amount of heap to use, in MB. Default is 1000.

# export HBASE_HEAPSIZE=1000

# Extra Java runtime options.

# Below are what we set by default. May only work with SUN JVM.

# For more on why as well as other possible settings,

# see http://wiki.apache.org/hadoop/PerformanceTuning

export HBASE_OPTS="-XX:+UseConcMarkSweepGC"

# Uncomment below to enable java garbage collection logging for the server-side processes

# this enables basic gc logging for the server processes to the .out file

# export SERVER_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps $HBASE_GC_OPTS"

# this enables gc logging using automatic GC log rolling. Only applies to jdk 1.6.0_34+ and 1.7.0_2+. Either use this set of options or the one above

# export SERVER_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=1 -XX:GCLogFileSize=512M $HBASE_GC_OPTS"

# Uncomment below to enable java garbage collection logging for the client processes in the .out file.

# export CLIENT_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps $HBASE_GC_OPTS"

# Uncomment below (along with above GC logging) to put GC information in its own logfile (will set HBASE_GC_OPTS).

# This applies to both the server and client GC options above

# export HBASE_USE_GC_LOGFILE=true

# Uncomment below if you intend to use the EXPERIMENTAL off heap cache.

# export HBASE_OPTS="$HBASE_OPTS -XX:MaxDirectMemorySize="

# Set hbase.offheapcache.percentage in hbase-site.xml to a nonzero value.

# Uncomment and adjust to enable JMX exporting

# See jmxremote.password and jmxremote.access in $JRE_HOME/lib/management to configure remote password access.

# More details at: http://java.sun.com/javase/6/docs/technotes/guides/management/agent.html

#

# export HBASE_JMX_BASE="-Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.authenticate=false"

# export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10101"

# export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10102"

# export HBASE_THRIFT_OPTS="$HBASE_THRIFT_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10103"

# export HBASE_ZOOKEEPER_OPTS="$HBASE_ZOOKEEPER_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10104"

# File naming hosts on which HRegionServers will run. $HBASE_HOME/conf/regionservers by default.

# export HBASE_REGIONSERVERS=${HBASE_HOME}/conf/regionservers

# File naming hosts on which backup HMaster will run. $HBASE_HOME/conf/backup-masters by default.

# export HBASE_BACKUP_MASTERS=${HBASE_HOME}/conf/backup-masters

# Extra ssh options. Empty by default.

# export HBASE_SSH_OPTS="-o ConnectTimeout=1 -o SendEnv=HBASE_CONF_DIR"

# Where log files are stored. $HBASE_HOME/logs by default.

# export HBASE_LOG_DIR=${HBASE_HOME}/logs

# Enable remote JDWP debugging of major HBase processes. Meant for Core Developers

# export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8070"

# export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8071"

# export HBASE_THRIFT_OPTS="$HBASE_THRIFT_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8072"

# export HBASE_ZOOKEEPER_OPTS="$HBASE_ZOOKEEPER_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8073"

# A string representing this instance of hbase. $USER by default.

# export HBASE_IDENT_STRING=$USER

# The scheduling priority for daemon processes. See 'man nice'.

# export HBASE_NICENESS=10

# The directory where pid files are stored. /tmp by default.

# export HBASE_PID_DIR=/var/hadoop/pids

# Seconds to sleep between slave commands. Unset by default. This

# can be useful in large clusters, where, e.g., slave rsyncs can

# otherwise arrive faster than the master can service them.

# export HBASE_SLAVE_SLEEP=0.1

# Tell HBase whether it should manage it's own instance of Zookeeper or not.

export HBASE_MANAGES_ZK=false

hbase-site.xml

<?xml version="1.0"?> <?xml-stylesheet type="text/xsl" href="configuration.xsl"?> <!-- /** * Copyright 2010 The Apache Software Foundation * * Licensed to the Apache Software Foundation (ASF) under one * or more contributor license agreements. See the NOTICE file * distributed with this work for additional information * regarding copyright ownership. The ASF licenses this file * to you under the Apache License, Version 2.0 (the * "License"); you may not use this file except in compliance * with the License. You may obtain a copy of the License at * * http://www.apache.org/licenses/LICENSE-2.0 * * Unless required by applicable law or agreed to in writing, software * distributed under the License is distributed on an "AS IS" BASIS, * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. * See the License for the specific language governing permissions and * limitations under the License. */ --> <configuration> <!--指定master--> <property> <name>hbase.master</name> <value>hadoop1:60000</value> </property> <property> <name>hbase.master.maxclockskew</name> <value>180000</value> </property> <!--指定hbase的路径,地址根据hdfs-site.xml的配置而定,当前是hadoop集群1(cluster1)的路径--> <property> <name>hbase.rootdir</name> <value>hdfs://cluster1/hbase</value> </property> <property> <name>hbase.cluster.distributed</name> <value>true</value> </property> <!--zoojeeper集群--> <property> <name>hbase.zookeeper.quorum</name> <value>hadoop2,hadoop3,hadoop4</value> </property> <!--zookeeper的data目录--> <property> <name>hbase.zookeeper.property.dataDir</name> <value>/home/hadoop/zookeeper/data</value> </property> </configuration>

regionservers文件指定 HRegionServer所在的机器

hadoop5

hadoop6

hadoop7

在一台机器上配置好hbase之后,然后使用scp命令复制到其他机器上(hadoop1,hadoop2,hadoop5,hadoop6,hadoop7)

4 启动hbase集群(前提是hadoop集群已经启动)

4.1 在hadoop1 上执行

start-hbase.sh

此时在hadoop1上会启动 Hmaster 在hadoop5,hadoop6,hadoop7 上会启动HRegionServer。

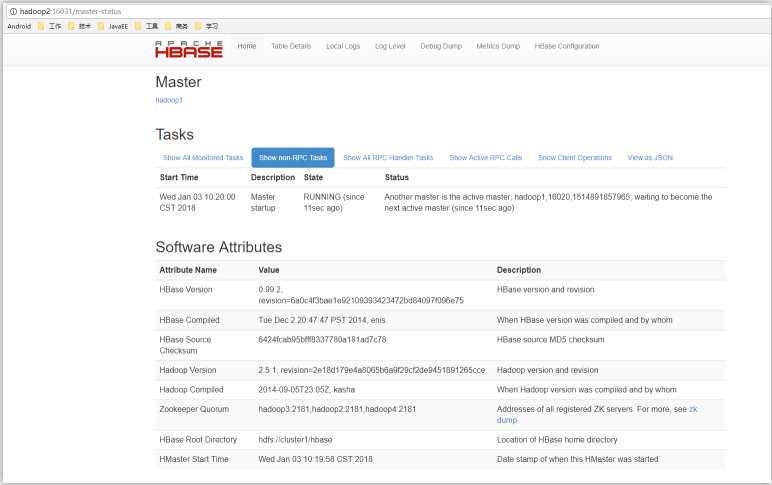

通过http://hadoop1:16030可以访问web页面如下:

4.2 启动备用master

在hadoop2 上执行:

local-master-backup.sh start 1

通过http://hadoop2:16031访问备用master

local-master-backup.sh start n :

该命令说明:n代表启动第几个备用master,对应的web访问地址的端口号也会变成1603n。