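I. Kubernetes upgrade overview

Kubernetes iterates quickly: the community ships a new minor release roughly every three to four months, and new features land with each one. To keep a cluster in step with upstream, it has to be upgraded regularly. The community has standardized cluster upgrades on the kubeadm tool, which keeps the procedure short and straightforward.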

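1. Basic process for upgrading a Kubernetes cluster

The basic process is as follows:

- Upgrade the primary control plane node: upgrade kube-apiserver, kube-controller-manager, kube-scheduler, etcd, and the other components on the management node;

- Upgrade the other control plane nodes: if the control plane is deployed for high availability, every control plane node must be upgraded as well;

- Upgrade the worker nodes: upgrade the container runtime (such as Docker), kubelet, and kube-proxy on each worker node.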

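Upgrades fall into two categories: patch upgrades and minor version upgrades. A patch upgrade stays within one minor version, for example 1.14.1 to 1.14.2, and may skip patch releases, for example 1.14.1 directly to 1.14.3. A minor version upgrade moves to the next minor version, for example 1.14.x to 1.15.x. This article upgrades a cluster from 1.18.6 to 1.19.16 offline. The following prerequisites must be met before upgrading:

- The current cluster must be running 1.18.x (or an earlier 1.19.x patch release): kubeadm only upgrades to a later patch release of the same minor version or to the next minor version;

- Swap is disabled;

- Data is backed up: the etcd data, plus important directories such as /etc/kubernetes and /var/lib/kubelet;

- Pods are restarted during the upgrade, so make sure applications use the RollingUpdate strategy to avoid business impact.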
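The swap prerequisite can be checked before starting. The following is a minimal sketch, assuming a Linux node where active swap areas are listed in /proc/swaps; the function name `swap_enabled` is a hypothetical helper, not part of kubeadm.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when the swaps table passed as $1
# (default: /proc/swaps) lists at least one active swap area.
swap_enabled() {
  # The first line of /proc/swaps is a header; every further line is an
  # active swap device or file.
  awk 'NR > 1 { n++ } END { exit (n > 0 ? 0 : 1) }' "${1:-/proc/swaps}"
}

if swap_enabled; then
  echo "swap is enabled: run 'swapoff -a' and remove swap entries from /etc/fstab"
else
  echo "swap is disabled"
fi
```

Run it on every node, since the kubelet on each node is affected by swap independently.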

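Note that an upgrade cannot skip a minor version. For example:

- 1.19.x → 1.20.y: allowed (consecutive minor versions)

- 1.19.x → 1.21.y: not allowed (skips 1.20)

- 1.21.x → 1.21.y: allowed (as long as y > x)

So to move across several minor versions, upgrade step by step, one minor version at a time.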
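The rule above can be expressed as a small check. This is only an illustrative sketch: `can_upgrade` is a hypothetical helper, and it assumes versions of the form vMAJOR.MINOR.PATCH with no pre-release suffix.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when moving from version $1 to version $2
# is either a later patch of the same minor version or the next minor
# version. The body runs in a subshell, so its variables stay local.
can_upgrade() (
  from="${1#v}"
  to="${2#v}"
  from_major="${from%%.*}"; from_rest="${from#*.}"
  from_minor="${from_rest%%.*}"; from_patch="${from_rest#*.}"
  to_major="${to%%.*}"; to_rest="${to#*.}"
  to_minor="${to_rest%%.*}"; to_patch="${to_rest#*.}"

  # Only upgrades within the same major version are considered.
  [ "$from_major" -eq "$to_major" ] || exit 1

  if [ "$to_minor" -eq "$from_minor" ]; then
    # Same minor version: the patch number must increase.
    [ "$to_patch" -gt "$from_patch" ]
  else
    # Different minor version: it must be exactly the next one.
    [ "$to_minor" -eq $((from_minor + 1)) ]
  fi
)

report() {
  if can_upgrade "$1" "$2"; then
    echo "$1 -> $2: allowed"
  else
    echo "$1 -> $2: not allowed"
  fi
}

report v1.18.6 v1.19.16
report v1.19.16 v1.21.0
report v1.14.1 v1.14.3
```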

2. Why upgrade the cluster

- New features

- Bugs in the current version

- Security vulnerabilities

II. Preparing for the Kubernetes 1.18.6 -> 1.19.16 upgrade

1. Download the Kubernetes source for the target version

wget https://github.com/kubernetes/kubernetes/archive/refs/tags/v1.19.16.tar.gz

Extract the archive and change into the kubernetes-1.19.16 directory:

tar -zxvf v1.19.16.tar.gz && cd kubernetes-1.19.16

2. Build kubeadm

make WHAT=cmd/kubeadm GOFLAGS=-v

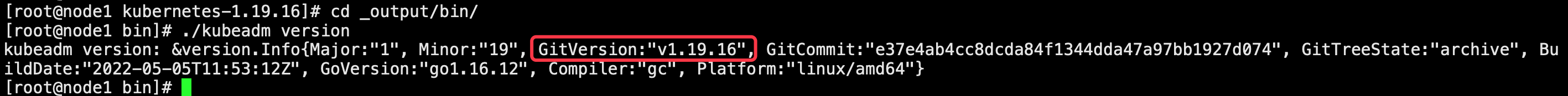

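Check the compiled kubeadm binary:

To change the validity period of the certificates that kubeadm issues, see: Extending kubeadm-issued certificates to 10 years

3. Build kubelet

GO111MODULE=on KUBE_GIT_TREE_STATE=clean KUBE_GIT_VERSION=v1.19.16 make kubelet GOFLAGS="-tags=nokmem"

Check the compiled kubelet binary:

4. Build kubectl

make WHAT=cmd/kubectl GOFLAGS=-v

Check the compiled kubectl binary: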
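One way to check all three binaries is to ask each one for its version and compare it with the target. The sketch below assumes the build wrote its output to `_output/bin` under the source directory (the usual location for a local `make` build, but verify it on your machine); `reports_expected_version` and `check_built_binaries` are hypothetical helpers.

```shell
#!/bin/sh
# Assumptions: target version and build output directory; adjust as needed.
EXPECTED_VERSION="${EXPECTED_VERSION:-v1.19.16}"
BIN_DIR="${BIN_DIR:-_output/bin}"

# Run the binary "$1" with the remaining arguments and succeed only if its
# output contains $EXPECTED_VERSION as a literal string.
reports_expected_version() {
  bin="$1"
  shift
  "$bin" "$@" 2>/dev/null | grep -qF "$EXPECTED_VERSION"
}

# Check kubeadm, kubelet, and kubectl in turn and report any mismatch.
check_built_binaries() {
  reports_expected_version "$BIN_DIR/kubeadm" version -o short ||
    echo "kubeadm does not report $EXPECTED_VERSION"
  reports_expected_version "$BIN_DIR/kubelet" --version ||
    echo "kubelet does not report $EXPECTED_VERSION"
  reports_expected_version "$BIN_DIR/kubectl" version --client --short ||
    echo "kubectl does not report $EXPECTED_VERSION"
}
```

Call `check_built_binaries` from the kubernetes-1.19.16 directory after the builds finish. A mistyped KUBE_GIT_VERSION in the kubelet build would show up here as a mismatch.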

5. Download the images for the target version and push them to the private image registry

Run kubeadm config images list to see the images required by the current kubeadm version:

    [root@m-master126 kubernetes_bak]# kubeadm config images list
    I0505 21:46:15.423822 22607 version.go:255] remote version is much newer: v1.24.0; falling back to: stable-1.19
    W0505 21:46:16.466441 22607 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]
    k8s.gcr.io/kube-apiserver:v1.19.16
    k8s.gcr.io/kube-controller-manager:v1.19.16
    k8s.gcr.io/kube-scheduler:v1.19.16
    k8s.gcr.io/kube-proxy:v1.19.16
    k8s.gcr.io/pause:3.2
    k8s.gcr.io/etcd:3.4.13-0
    k8s.gcr.io/coredns:1.7.0

Note: the image registry address and project were already specified when the cluster was installed, so simply push the images above to that project in the private registry; the detailed steps are omitted here (ignore the k8s.gcr.io prefix in the command output above). To change the registry address or the project, run the following command:

kubectl edit cm -n kube-system kubeadm-config
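Pushing the images usually comes down to pull, retag, and push. The following is a minimal sketch, assuming Docker is available on a machine that can reach both k8s.gcr.io and the private registry; the registry address `registry.example.com:443/k8s` and both function names are hypothetical placeholders.

```shell
#!/bin/sh
# Hypothetical private registry and project; replace with your own.
PRIVATE_REGISTRY="${PRIVATE_REGISTRY:-registry.example.com:443/k8s}"

# Map an upstream reference such as k8s.gcr.io/kube-proxy:v1.19.16 to the
# same image name and tag under $PRIVATE_REGISTRY.
private_image_name() {
  printf '%s/%s\n' "$PRIVATE_REGISTRY" "${1##*/}"
}

# Pull, retag, and push every image reference read from stdin
# (one reference per line).
mirror_images() {
  while read -r image; do
    [ -n "$image" ] || continue
    target="$(private_image_name "$image")"
    docker pull "$image" &&
      docker tag "$image" "$target" &&
      docker push "$target"
  done
}

# Usage:
#   kubeadm config images list --kubernetes-version v1.19.16 | mirror_images
```

The flat mapping is a simplification: the upgrade log later in this article shows CoreDNS under its own project path, so a layout like that would need an extra rule.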

6. Back up the etcd data of the cluster before upgrading

For detailed steps, see: Scheduled etcd data backup
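For a one-off snapshot before the upgrade, something like the following may be enough. It is a sketch under several assumptions that may not hold for your cluster: etcdctl (v3 API) is installed on the node, etcd listens on https://127.0.0.1:2379, and the client certificates sit in the kubeadm default directory /etc/kubernetes/pki/etcd. The backup directory and function names are hypothetical.

```shell
#!/bin/sh
# Assumptions: endpoint, certificate directory, and backup directory.
ETCD_ENDPOINT="${ETCD_ENDPOINT:-https://127.0.0.1:2379}"
ETCD_PKI="${ETCD_PKI:-/etc/kubernetes/pki/etcd}"
BACKUP_DIR="${BACKUP_DIR:-/var/backups/etcd}"

# Print the snapshot file path for the timestamp given as $1.
snapshot_path() {
  printf '%s/etcd-snapshot-%s.db\n' "$BACKUP_DIR" "$1"
}

# Save a snapshot and print its status so the backup can be sanity-checked.
backup_etcd() {
  snapshot="$(snapshot_path "$(date +%Y%m%d-%H%M%S)")"
  mkdir -p "$BACKUP_DIR" &&
    ETCDCTL_API=3 etcdctl \
      --endpoints="$ETCD_ENDPOINT" \
      --cacert="$ETCD_PKI/ca.crt" \
      --cert="$ETCD_PKI/server.crt" \
      --key="$ETCD_PKI/server.key" \
      snapshot save "$snapshot" &&
    ETCDCTL_API=3 etcdctl snapshot status "$snapshot" --write-out=table
}
```

Call `backup_etcd` on a node that runs etcd, and copy the resulting file off the node before continuing.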

III. Kubernetes 1.18.6 -> 1.19.16 upgrade steps

1. Upgrade the primary control plane node

1) Back up data on the primary control plane node

Back up the /etc/kubernetes/ directory:

tar -zcvf etc_kubernetes_2022_0505.tar.gz /etc/kubernetes/

Back up the /var/lib/kubelet/ directory:

tar -zcvf var_lib_kubelet_2022_0505.tar.gz /var/lib/kubelet/

Back up the kubeadm, kubelet, and kubectl binaries:

cp /usr/local/bin/kubeadm /usr/local/bin/kubeadm.old

cp /usr/local/bin/kubelet /usr/local/bin/kubelet.old

cp /usr/local/bin/kubectl /usr/local/bin/kubectl.old
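Because the same backups are repeated on every node, the tar commands can be wrapped in a small function. This is an illustrative sketch; `backup_dir` is a hypothetical helper that follows the naming pattern used above (for example etc_kubernetes_2022_0505.tar.gz) and expects an absolute source path.

```shell
#!/bin/sh
# Hypothetical helper: archive the absolute directory $1 into the directory
# $2 (default: current directory) with a date-stamped name, then print the
# archive path.
backup_dir() {
  src="${1%/}"
  dest="${2:-.}"
  # /etc/kubernetes -> etc_kubernetes
  name="$(printf '%s' "${src#/}" | tr '/' '_')"
  archive="$dest/${name}_$(date +%Y_%m%d).tar.gz"
  # Archive relative to / so the stored paths have no leading slash, then
  # list the archive once to confirm it is readable.
  tar -zcf "$archive" -C / "${src#/}" &&
    tar -tzf "$archive" >/dev/null &&
    printf '%s\n' "$archive"
}

# Usage:
#   backup_dir /etc/kubernetes /root/backup
#   backup_dir /var/lib/kubelet /root/backup
```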

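2) Replace kubeadm on the primary control plane node

Upload the compiled kubeadm binary to the /usr/local/bin/ directory.

3) Check the upgrade plan; kubeadm can show the upgrade plan for the current cluster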

    [root@m-master126 ~]# kubeadm upgrade plan
    [upgrade/config] Making sure the configuration is correct:
    [upgrade/config] Reading configuration from the cluster...
    [upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
    [preflight] Running pre-flight checks.
    [upgrade] Running cluster health checks
    [upgrade] Fetching available versions to upgrade to
    [upgrade/versions] Cluster version: v1.18.6
    [upgrade/versions] kubeadm version: v1.19.16
    W0505 21:07:13.750248 12476 version.go:103] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable.txt": Get "https://dl.k8s.io/release/stable.txt": context deadline exceeded (Client.Timeout exceeded while awaiting headers)
    W0505 21:07:13.750948 12476 version.go:104] falling back to the local client version: v1.19.16
    [upgrade/versions] Latest stable version: v1.19.16
    [upgrade/versions] Latest stable version: v1.19.16
    W0505 21:07:23.758371 12476 version.go:103] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.18.txt": Get "https://dl.k8s.io/release/stable-1.18.txt": context deadline exceeded (Client.Timeout exceeded while awaiting headers)
    W0505 21:07:23.758400 12476 version.go:104] falling back to the local client version: v1.19.16
    [upgrade/versions] Latest version in the v1.18 series: v1.19.16
    [upgrade/versions] Latest version in the v1.18 series: v1.19.16

    Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':
    COMPONENT   CURRENT       AVAILABLE
    kubelet     4 x v1.18.6   v1.19.16

    Upgrade to the latest version in the v1.18 series:

    COMPONENT                 CURRENT   AVAILABLE
    kube-apiserver            v1.18.6   v1.19.16
    kube-controller-manager   v1.18.6   v1.19.16
    kube-scheduler            v1.18.6   v1.19.16
    kube-proxy                v1.18.6   v1.19.16
    CoreDNS                   1.6.9     1.7.0

    You can now apply the upgrade by executing the following command:

        kubeadm upgrade apply v1.19.16

    _____________________________________________________________________

    The table below shows the current state of component configs as understood by this version of kubeadm.
    Configs that have a "yes" mark in the "MANUAL UPGRADE REQUIRED" column require manual config upgrade or
    resetting to kubeadm defaults before a successful upgrade can be performed. The version to manually
    upgrade to is denoted in the "PREFERRED VERSION" column.

    API GROUP                 CURRENT VERSION   PREFERRED VERSION   MANUAL UPGRADE REQUIRED
    kubeproxy.config.k8s.io   v1alpha1          v1alpha1            no
    kubelet.config.k8s.io     v1beta1           v1beta1             no
    _____________________________________________________________________

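This command checks whether the cluster can be upgraded and fetches the versions you can upgrade to. It also shows a table with the state of the component config versions.

Note:

kubeadm upgrade also automatically renews the certificates that kubeadm manages on the node. To skip certificate renewal, use the flag --certificate-renewal=false. For more information, see the certificate management guide.

4) Upgrade the components on the primary control plane node

kubeadm upgrade apply v1.19.16 --certificate-renewal=false --v=5

Note: this upgrade does not need to reissue certificates, so the --certificate-renewal=false option is added. Also make sure the images for the target version have already been pushed to the designated project in the private image registry.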

    [root@m-master126 kubernetes_bak]# kubeadm upgrade apply v1.19.16 --certificate-renewal=false --v=5
    I0505 22:03:33.208373 8754 apply.go:113] [upgrade/apply] verifying health of cluster
    I0505 22:03:33.208622 8754 apply.go:114] [upgrade/apply] retrieving configuration from cluster
    [upgrade/config] Making sure the configuration is correct:
    [upgrade/config] Reading configuration from the cluster...
    [upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
    W0505 22:03:33.239526 8754 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]
    I0505 22:03:33.312112 8754 common.go:168] running preflight checks
    [preflight] Running pre-flight checks.
    I0505 22:03:33.312151 8754 preflight.go:87] validating if there are any unsupported CoreDNS plugins in the Corefile
    I0505 22:03:33.322765 8754 preflight.go:113] validating if migration can be done for the current CoreDNS release.
    [upgrade] Running cluster health checks
    I0505 22:03:33.328598 8754 health.go:158] Creating Job "upgrade-health-check" in the namespace "kube-system"
    I0505 22:03:33.344471 8754 health.go:188] Job "upgrade-health-check" in the namespace "kube-system" is not yet complete, retrying
    I0505 22:03:34.416940 8754 health.go:188] Job "upgrade-health-check" in the namespace "kube-system" is not yet complete, retrying
    I0505 22:03:35.346623 8754 health.go:188] Job "upgrade-health-check" in the namespace "kube-system" is not yet complete, retrying
    I0505 22:03:36.347622 8754 health.go:195] Job "upgrade-health-check" in the namespace "kube-system" completed
    I0505 22:03:36.347655 8754 health.go:201] Deleting Job "upgrade-health-check" in the namespace "kube-system"
    I0505 22:03:36.362883 8754 apply.go:121] [upgrade/apply] validating requested and actual version
    I0505 22:03:36.362936 8754 apply.go:137] [upgrade/version] enforcing version skew policies
    [upgrade/version] You have chosen to change the cluster version to "v1.19.16"
    [upgrade/versions] Cluster version: v1.18.6
    [upgrade/versions] kubeadm version: v1.19.16
    [upgrade/confirm] Are you sure you want to proceed with the upgrade? [y/N]: y
    [upgrade/prepull] Pulling images required for setting up a Kubernetes cluster
    [upgrade/prepull] This might take a minute or two, depending on the speed of your internet connection
    [upgrade/prepull] You can also perform this action in beforehand using 'kubeadm config images pull'
    I0505 22:03:38.077681 8754 checks.go:839] image exists: ******:443/**/kube-apiserver:v1.19.16
    I0505 22:03:38.114688 8754 checks.go:839] image exists: ******:443/**/kube-controller-manager:v1.19.16
    I0505 22:03:38.149041 8754 checks.go:839] image exists: ******:443/**/kube-scheduler:v1.19.16
    I0505 22:03:38.182660 8754 checks.go:839] image exists: ******:443/**/kube-proxy:v1.19.16
    I0505 22:03:38.217020 8754 checks.go:839] image exists: ******:443/**/pause:3.2
    I0505 22:03:38.250097 8754 checks.go:839] image exists: ******:443/coredns/coredns:1.6.9
    I0505 22:03:38.250131 8754 apply.go:163] [upgrade/apply] performing upgrade
    [upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.19.16"...
    Static pod: kube-apiserver-m-master126 hash: cf2e8b11fd6311e4b4a75188bcafcbf2
    Static pod: kube-controller-manager-m-master126 hash: f21a611aa6f96a5540e12f8e074e3035
    Static pod: kube-scheduler-m-master126 hash: 56a16186baf8b51953cc2402e6465243
    [upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests248879628"
    I0505 22:03:38.261365 8754 manifests.go:42] [control-plane] creating static Pod files
    I0505 22:03:38.261379 8754 manifests.go:96] [control-plane] getting StaticPodSpecs
    I0505 22:03:38.262157 8754 manifests.go:109] [control-plane] adding volume "ca-certs" for component "kube-apiserver"
    I0505 22:03:38.262169 8754 manifests.go:109] [control-plane] adding volume "etc-pki" for component "kube-apiserver"
    I0505 22:03:38.262174 8754 manifests.go:109] [control-plane] adding volume "etcd-certs-0" for component "kube-apiserver"
    I0505 22:03:38.262178 8754 manifests.go:109] [control-plane] adding volume "k8s-certs" for component "kube-apiserver"
    I0505 22:03:38.266240 8754 manifests.go:135] [control-plane] wrote static Pod manifest for component "kube-apiserver" to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests248879628/kube-apiserver.yaml"
    I0505 22:03:38.266254 8754 manifests.go:109] [control-plane] adding volume "ca-certs" for component "kube-controller-manager"
    I0505 22:03:38.266260 8754 manifests.go:109] [control-plane] adding volume "etc-pki" for component "kube-controller-manager"
    I0505 22:03:38.266264 8754 manifests.go:109] [control-plane] adding volume "flexvolume-dir" for component "kube-controller-manager"
    I0505 22:03:38.266268 8754 manifests.go:109] [control-plane] adding volume "host-time" for component "kube-controller-manager"
    I0505 22:03:38.266273 8754 manifests.go:109] [control-plane] adding volume "k8s-certs" for component "kube-controller-manager"
    I0505 22:03:38.266315 8754 manifests.go:109] [control-plane] adding volume "kubeconfig" for component "kube-controller-manager"
    I0505 22:03:38.267104 8754 manifests.go:135] [control-plane] wrote static Pod manifest for component "kube-controller-manager" to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests248879628/kube-controller-manager.yaml"
    I0505 22:03:38.267117 8754 manifests.go:109] [control-plane] adding volume "kubeconfig" for component "kube-scheduler"
    I0505 22:03:38.267567 8754 manifests.go:135] [control-plane] wrote static Pod manifest for component "kube-scheduler" to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests248879628/kube-scheduler.yaml"
    [upgrade/staticpods] Preparing for "kube-apiserver" upgrade
    [upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2022-05-05-22-03-38/kube-apiserver.yaml"
    [upgrade/staticpods] Waiting for the kubelet to restart the component
    [upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)
    Static pod: kube-apiserver-m-master126 hash: cf2e8b11fd6311e4b4a75188bcafcbf2
    Static pod: kube-apiserver-m-master126 hash: cf2e8b11fd6311e4b4a75188bcafcbf2
    Static pod: kube-apiserver-m-master126 hash: cf2e8b11fd6311e4b4a75188bcafcbf2
    Static pod: kube-apiserver-m-master126 hash: 0bec5f4dfd3442da288036305e19c787
    [apiclient] Found 1 Pods for label selector component=kube-apiserver
    [upgrade/staticpods] Component "kube-apiserver" upgraded successfully!
    [upgrade/staticpods] Preparing for "kube-controller-manager" upgrade
    [upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2022-05-05-22-03-38/kube-controller-manager.yaml"
    [upgrade/staticpods] Waiting for the kubelet to restart the component
    [upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)
    Static pod: kube-controller-manager-m-master126 hash: f21a611aa6f96a5540e12f8e074e3035
    Static pod: kube-controller-manager-m-master126 hash: 6ccd91a4d27bfea3dffe8f2c6b0c3088
    [apiclient] Found 1 Pods for label selector component=kube-controller-manager
    [upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!
    [upgrade/staticpods] Preparing for "kube-scheduler" upgrade
    [upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2022-05-05-22-03-38/kube-scheduler.yaml"
    [upgrade/staticpods] Waiting for the kubelet to restart the component
    [upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)
    Static pod: kube-scheduler-m-master126 hash: 56a16186baf8b51953cc2402e6465243
    Static pod: kube-scheduler-m-master126 hash: 2ac1915cf7a77bd5c2616a0d509132f6
    [apiclient] Found 1 Pods for label selector component=kube-scheduler
    [upgrade/staticpods] Component "kube-scheduler" upgraded successfully!
    I0505 22:03:48.816976 8754 apply.go:169] [upgrade/postupgrade] upgrading RBAC rules and addons
    [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
    [kubelet] Creating a ConfigMap "kubelet-config-1.19" in namespace kube-system with the configuration for the kubelets in the cluster
    [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    I0505 22:03:48.843028 8754 patchnode.go:30] [patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "m-master126" as an annotation
    [bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes
    [bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
    [bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
    ......
    [upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.19.16". Enjoy!
    [upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.

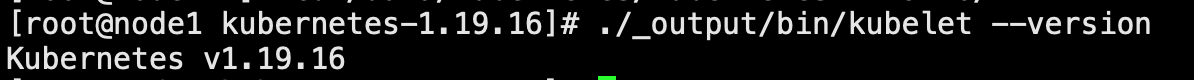

After the upgrade, check the Pods in kube-system; the image versions have been replaced. The following uses kube-apiserver as an example:

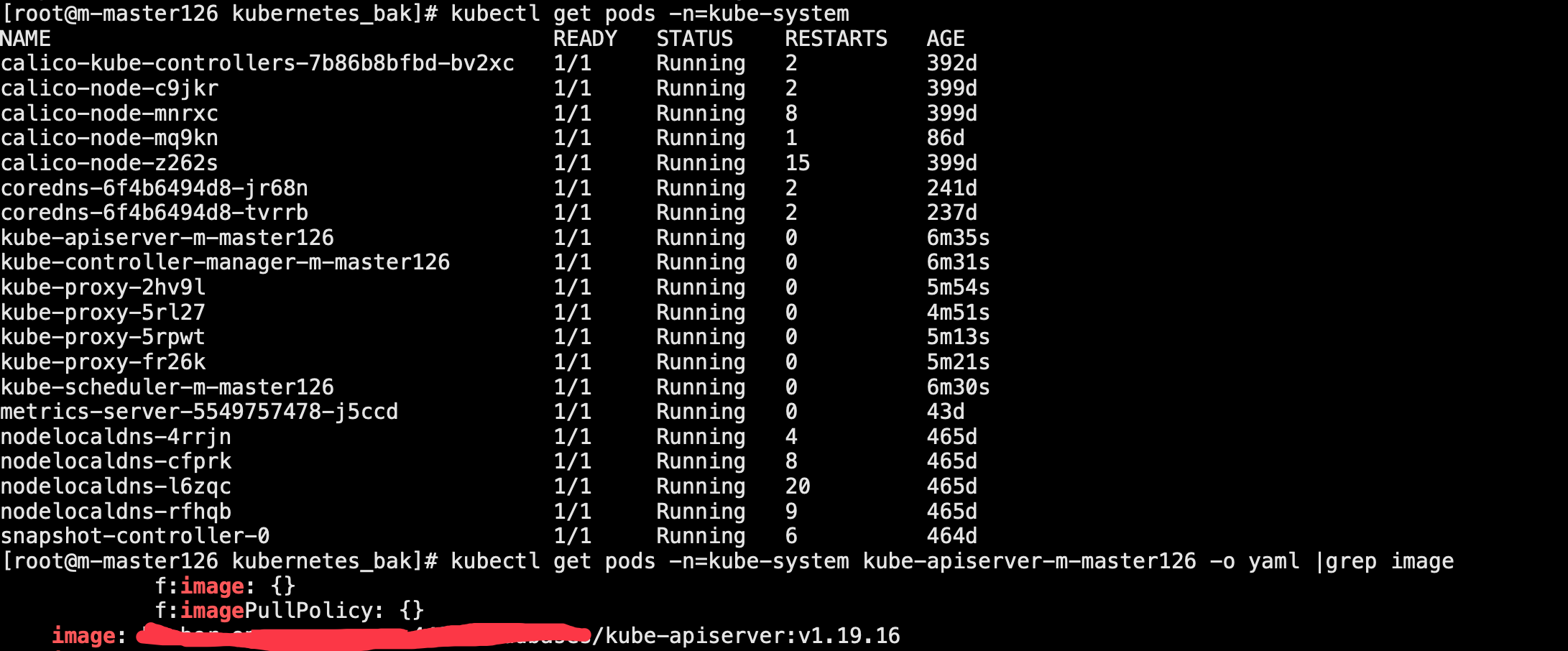

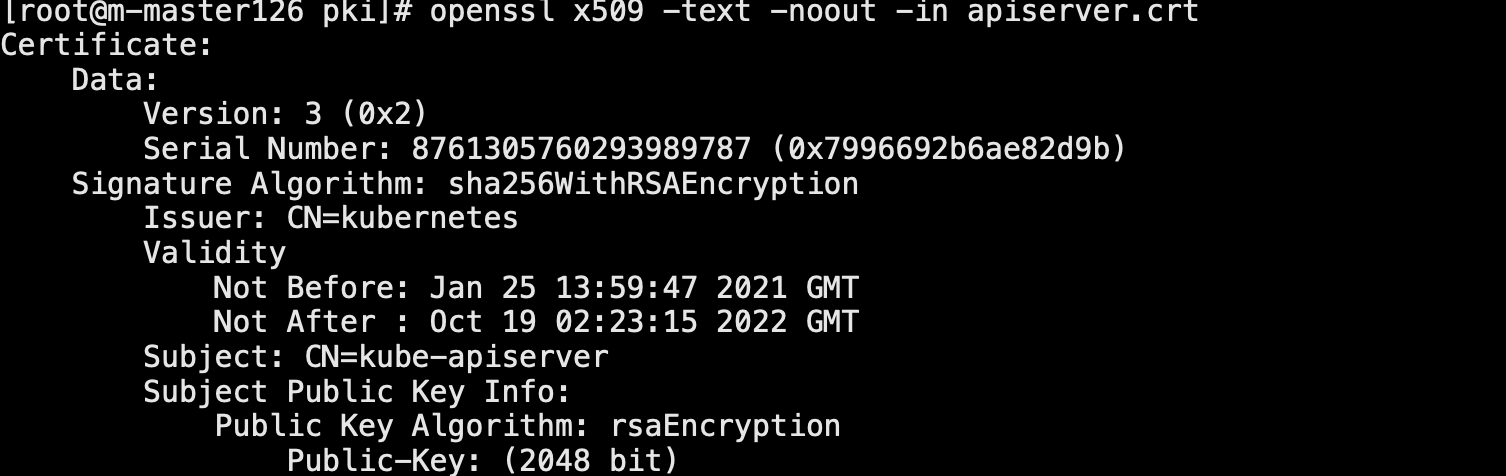

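In addition, because kubeadm was told not to reissue certificates when the primary control plane node was upgraded, use openssl to verify whether the certificate was reissued:

cd /etc/kubernetes/pki/ && openssl x509 -text -noout -in apiserver.crt

The output shows that the certificate was not reissued.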
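When only the validity window matters, printing just the start and end dates is easier to read than the full -text dump. A minimal sketch, assuming openssl is installed; `list_cert_dates` is a hypothetical helper and /etc/kubernetes/pki is the directory used in this article.

```shell
#!/bin/sh
# Hypothetical helper: print notBefore / notAfter for every .crt file in
# the directory given as $1 (default: /etc/kubernetes/pki).
list_cert_dates() {
  dir="${1:-/etc/kubernetes/pki}"
  for crt in "$dir"/*.crt; do
    # Skip the unexpanded pattern when the directory has no .crt files.
    [ -f "$crt" ] || continue
    echo "== $crt"
    openssl x509 -noout -startdate -enddate -in "$crt"
  done
}

# Usage on the control plane node:
#   list_cert_dates /etc/kubernetes/pki
```

If the notBefore date of apiserver.crt is still earlier than the upgrade time, the certificate was not reissued.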

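5) Replace kubelet on the primary control plane node

Upload the compiled kubelet and kubectl binaries to the /usr/local/bin/ directory. Stop the kubelet service before replacing the kubelet binary; once the binary has been replaced, start the new version of kubelet with the following commands:

systemctl daemon-reload

systemctl restart kubelet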
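The stop, replace, and start sequence can be scripted so that it runs the same way on every node. This is a sketch, not a definitive procedure: `run` and `replace_kubelet` are hypothetical helpers, and the install path /usr/local/bin/kubelet is the one used in this article. With DRY_RUN=1 the commands are only printed.

```shell
#!/bin/sh
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Replace the kubelet binary with the newly built one given as $1.
replace_kubelet() {
  new_kubelet="$1"
  run systemctl stop kubelet &&
    run install -m 0755 "$new_kubelet" /usr/local/bin/kubelet &&
    run systemctl daemon-reload &&
    run systemctl start kubelet &&
    run systemctl is-active kubelet
}

# Usage:
#   DRY_RUN=1 replace_kubelet /tmp/kubelet   # preview the commands
#   replace_kubelet /tmp/kubelet             # apply them
```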

6) 替换主控节点的kubectl

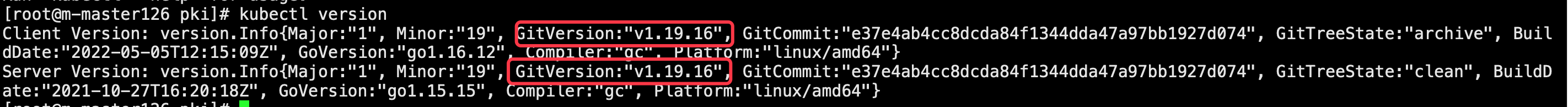

将编译好的kubectl二级制文件上传到/usr/local/bin/目录,通过kubectl version命令验证主控节点是否升级成功

至此,主控制节点版本升级完毕。

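2. Upgrade the other control plane nodes (skip this step for a cluster with a single control plane node)

The steps are the same as for the primary control plane node, but use:

sudo kubeadm upgrade node

instead of:

sudo kubeadm upgrade apply

In addition, there is no need to run kubeadm upgrade plan or to upgrade the CNI plugin.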

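3. Upgrade the worker nodes

1) Back up data on the worker node and replace kubeadm on the worker node

The steps are the same as for the primary control plane node and are not repeated here.

2) Put the node into maintenance mode and evict the applications running on it; everything except DaemonSet-managed Pods is moved to other nodes

Replace <node-to-drain> with the name of the worker node you are draining:

kubectl drain <node-to-drain> --ignore-daemonsets

The following drains the applications from node m-node131: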

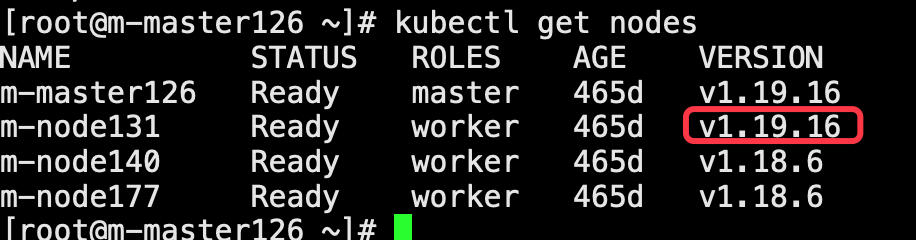

    [root@m-master126 ~]# kubectl get nodes
    NAME          STATUS   ROLES    AGE    VERSION
    m-master126   Ready    master   465d   v1.19.16
    m-node131     Ready    worker   465d   v1.18.6
    m-node140     Ready    worker   465d   v1.18.6
    m-node177     Ready    worker   465d   v1.18.6
    [root@m-master126 ~]# kubectl drain m-node131 --delete-local-data --force --ignore-daemonsets
    node/m-node131 already cordoned
    WARNING: ignoring DaemonSet-managed Pods: cloudbases-logging-system/fluent-bit-j7gwx, cloudbases-monitoring-system/node-exporter-xzp49, kube-system/calico-node-mnrxc, kube-system/kube-proxy-5rpwt, kube-system/nodelocaldns-rfhqb, velero/restic-xplbd
    .....

3) Upgrade the worker node

On a worker node, the following command upgrades the local kubelet configuration:

kubeadm upgrade node

    [root@m-node131 ~]# kubeadm upgrade node
    [upgrade] Reading configuration from the cluster...
    [upgrade] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
    W0505 23:06:09.841396 1140 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]
    [preflight] Running pre-flight checks
    [preflight] Skipping prepull. Not a control plane node.
    [upgrade] Skipping phase. Not a control plane node.
    [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    [upgrade] The configuration for this node was successfully updated!
    [upgrade] Now you should go ahead and upgrade the kubelet package using your package manager.

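4) Replace kubelet on the worker node

Upload the compiled kubelet and kubectl binaries to the /usr/local/bin/ directory. Stop the kubelet service before replacing the kubelet binary; once the binary has been replaced, start the new version of kubelet with the following commands:

systemctl daemon-reload

systemctl restart kubelet

5) Remove the unschedulable mark so that the worker node can be scheduled normally again

kubectl uncordon m-node131

6) Check whether the worker node was upgraded successfully

The kubectl get nodes output shows that node m-node131 is now running the upgraded Kubernetes version.

Note: the steps above use node m-node131 as the example. The other worker nodes are upgraded in exactly the same way, so the steps are not repeated here. Once all worker nodes have been upgraded, the whole Kubernetes cluster is upgraded.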
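To keep track of which nodes are still left, the VERSION column of kubectl get nodes can be filtered. A minimal sketch, assuming the default column layout shown earlier (NAME, STATUS, ROLES, AGE, VERSION); `nodes_needing_upgrade` is a hypothetical helper.

```shell
#!/bin/sh
# Assumption: the target version of this upgrade.
TARGET_VERSION="${TARGET_VERSION:-v1.19.16}"

# Read `kubectl get nodes --no-headers` output on stdin and print the name
# of every node whose VERSION column (5th field) differs from the target.
nodes_needing_upgrade() {
  awk -v target="$TARGET_VERSION" '$5 != target { print $1 }'
}

# Usage:
#   kubectl get nodes --no-headers | nodes_needing_upgrade
```

An empty result means every node reports the target version.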

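IV. How the upgrade works

1. What kubeadm upgrade apply does

- Checks that the cluster is in an upgradeable state: the API server is reachable, all nodes are in the Ready state, and the control plane components (cs) are healthy

- Enforces the version skew policies

- Makes sure the images required for the upgrade are already present or can be pulled

- Upgrades the control plane components, and rolls back to the previous state if any of them fails to come up

- Applies the new kube-dns (CoreDNS) and kube-proxy manifests and makes sure the required RBAC rules are in place

- Creates new certificate and key files for the API server and backs up the old files if they are due to expire within 180 days

2. What kubeadm upgrade node does

- Fetches the kubeadm ClusterConfiguration from the cluster and applies it

- Upgrades the kubelet configuration on the node; the kubelet binary itself still has to be replaced separately, as in the steps above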

Reference: https://cloud.tencent.com/developer/article/1505912

Reference: https://v1-20.docs.kubernetes.io/zh/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/