1. Common HDFS operations

1.1. Querying

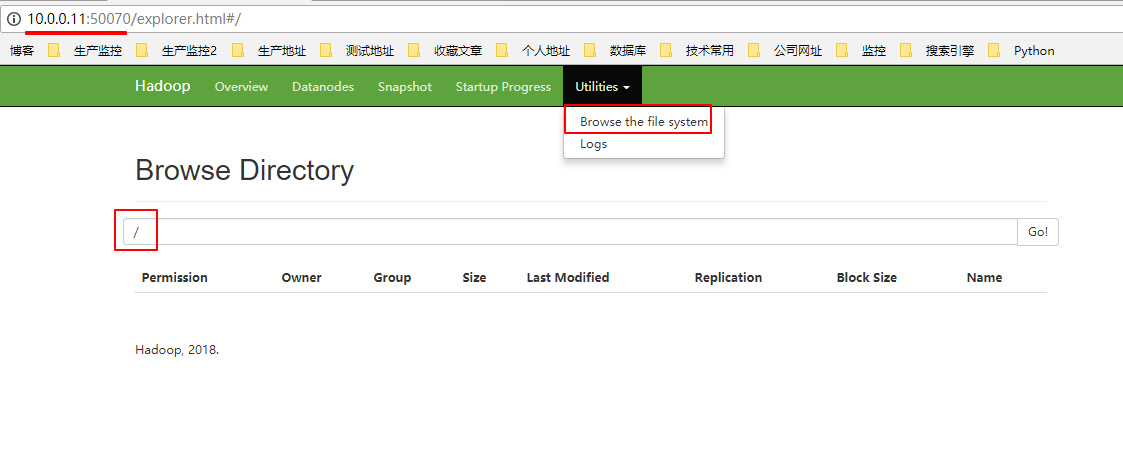

1.1.1. Querying from a browser

1.1.2. Querying from the command line

    [yun@mini04 bin]$ hadoop fs -ls /

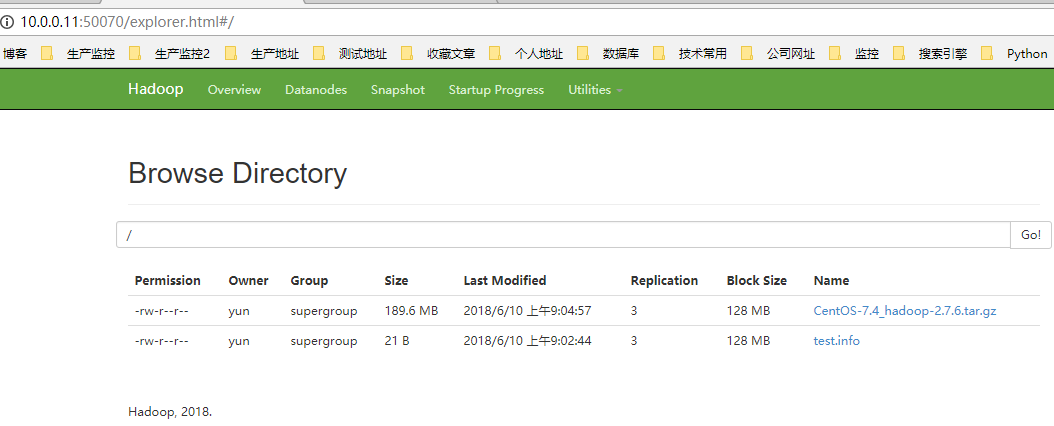

1.2. Uploading files

    [yun@mini05 zhangliang]$ cat test.info
    111
    222
    333
    444
    555

    [yun@mini05 zhangliang]$ hadoop fs -put test.info /  # upload the file
    [yun@mini05 software]$ ll -h
    total 641M
    -rw-r--r-- 1 yun yun 190M Jun 8 16:36 CentOS-7.4_hadoop-2.7.6.tar.gz
    [yun@mini05 software]$ hadoop fs -put CentOS-7.4_hadoop-2.7.6.tar.gz /  # upload the file
    [yun@mini05 software]$ hadoop fs -ls /
    Found 2 items
    -rw-r--r-- 3 yun supergroup 198811365 2018-06-10 09:04 /CentOS-7.4_hadoop-2.7.6.tar.gz
    -rw-r--r-- 3 yun supergroup 21 2018-06-10 09:02 /test.info
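Running the same `-put` a second time fails, because `hadoop fs -put` does not overwrite an existing destination unless `-f` is given. The sketch below shows one way to guard an upload with `hadoop fs -test -e`. The function name `put_if_absent` is made up for this illustration and is not part of Hadoop.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Hadoop): upload only when the
# destination path does not exist in HDFS yet.
put_if_absent() {
    local src=$1 dest=$2
    # `hadoop fs -test -e PATH` exits with 0 when PATH exists.
    if hadoop fs -test -e "$dest"; then
        echo "skip: $dest already exists in HDFS" >&2
    else
        hadoop fs -put "$src" "$dest"
    fi
}
```

For the upload above this would be called as `put_if_absent test.info /test.info`.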

1.2.1. Where the files are stored

    [yun@mini04 subdir0]$ pwd  # with the replication configured earlier, there are 3 identical copies of each file in total
    /app/hadoop/tmp/dfs/data/current/BP-925531343-10.0.0.11-1528537498201/current/finalized/subdir0/subdir0
    [yun@mini04 subdir0]$ ll -h  # files are split into 128M blocks by default
    total 192M
    -rw-rw-r-- 1 yun yun 21 Jun 10 09:02 blk_1073741825
    -rw-rw-r-- 1 yun yun 11 Jun 10 09:02 blk_1073741825_1001.meta
    -rw-rw-r-- 1 yun yun 128M Jun 10 09:04 blk_1073741826
    -rw-rw-r-- 1 yun yun 1.1M Jun 10 09:04 blk_1073741826_1002.meta
    -rw-rw-r-- 1 yun yun 62M Jun 10 09:04 blk_1073741827
    -rw-rw-r-- 1 yun yun 493K Jun 10 09:04 blk_1073741827_1003.meta
    [yun@mini04 subdir0]$ cat blk_1073741825
    111
    222
    333
    444
    555

    [yun@mini04 subdir0]$
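The block files in the listing can be cross-checked with a little arithmetic. This is a minimal sketch that assumes the block size is the 128 MiB default (the `dfs.blocksize` setting itself is not shown in this document) and uses the byte size that `hadoop fs -ls` reported for the tarball.

```shell
#!/usr/bin/env bash
file_bytes=198811365                 # size of the tarball as reported by `hadoop fs -ls /`
block_bytes=$((128 * 1024 * 1024))   # assumption: default dfs.blocksize of 128 MiB

full_blocks=$((file_bytes / block_bytes))        # blocks filled completely
last_block_bytes=$((file_bytes % block_bytes))   # bytes left for the final block
total_blocks=$((full_blocks + (last_block_bytes > 0 ? 1 : 0)))

echo "total blocks:     $total_blocks"
echo "last block bytes: $last_block_bytes"
```

The result is two blocks, one full 128 MiB block and a final block of 64593637 bytes (about 61.6 MiB). That is consistent with `blk_1073741826` and `blk_1073741827` above; the 62M shown for the second one is most likely just `ll -h` rounding up.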

1.2.2. Browser access

1.3. Downloading files

    [yun@mini04 zhangliang]$ pwd
    /app/software/zhangliang
    [yun@mini04 zhangliang]$ ll
    total 0
    [yun@mini04 zhangliang]$ hadoop fs -ls /
    Found 2 items
    -rw-r--r-- 3 yun supergroup 198811365 2018-06-10 09:04 /CentOS-7.4_hadoop-2.7.6.tar.gz
    -rw-r--r-- 3 yun supergroup 21 2018-06-10 09:02 /test.info
    [yun@mini04 zhangliang]$ hadoop fs -get /test.info
    [yun@mini04 zhangliang]$ hadoop fs -get /CentOS-7.4_hadoop-2.7.6.tar.gz
    [yun@mini04 zhangliang]$ ll
    total 194156
    -rw-r--r-- 1 yun yun 198811365 Jun 10 09:10 CentOS-7.4_hadoop-2.7.6.tar.gz
    -rw-r--r-- 1 yun yun 21 Jun 10 09:09 test.info
    [yun@mini04 zhangliang]$ cat test.info
    111
    222
    333
    444
    555

    [yun@mini04 zhangliang]$
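The transcript compares the sizes by eye (198811365 and 21 bytes on both sides). The same check can be scripted. The sketch below is a cheap sanity check rather than a real integrity check, and `verify_download` is a hypothetical name chosen for this example. It relies on `hadoop fs -stat %b`, which prints a file's size in bytes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare the size of an HDFS file with its local copy.
# Returns 0 when the sizes match, 1 when they differ, 2 when a size cannot be read.
verify_download() {
    local hdfs_path=$1 local_path=$2 remote_size local_size
    [ -f "$local_path" ] || return 2
    remote_size=$(hadoop fs -stat %b "$hdfs_path") || return 2
    # Arithmetic expansion strips any padding that `wc -c` may add.
    local_size=$(( $(wc -c < "$local_path") ))
    [ "$remote_size" -eq "$local_size" ]
}
```

A call such as `verify_download /test.info ./test.info && echo "sizes match"` would follow each `hadoop fs -get`.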

2. A simple example

2.1. Preparing the data

    [yun@mini05 zhangliang]$ pwd
    /app/software/zhangliang
    [yun@mini05 zhangliang]$ ll
    total 8
    -rw-rw-r-- 1 yun yun 33 Jun 10 09:43 test.info
    -rw-rw-r-- 1 yun yun 55 Jun 10 09:43 zhang.info
    [yun@mini05 zhangliang]$ cat test.info
    111
    222
    333
    444
    555
    333
    222
    222
    222

    [yun@mini05 zhangliang]$ cat zhang.info
    zxcvbnm
    asdfghjkl
    qwertyuiop
    qwertyuiop
    111
    qwertyuiop
    [yun@mini05 zhangliang]$ hadoop fs -mkdir -p /wordcount/input
    [yun@mini05 zhangliang]$ hadoop fs -put test.info zhang.info /wordcount/input
    [yun@mini05 zhangliang]$ hadoop fs -ls /wordcount/input
    Found 2 items
    -rw-r--r-- 3 yun supergroup 37 2018-06-10 09:45 /wordcount/input/test.info
    -rw-r--r-- 3 yun supergroup 55 2018-06-10 09:45 /wordcount/input/zhang.info

2.2. Running the analysis

    [yun@mini04 mapreduce]$ pwd
    /app/hadoop/share/hadoop/mapreduce
    [yun@mini04 mapreduce]$ ll
    total 5000
    -rw-r--r-- 1 yun yun 545657 Jun 8 16:36 hadoop-mapreduce-client-app-2.7.6.jar
    -rw-r--r-- 1 yun yun 777070 Jun 8 16:36 hadoop-mapreduce-client-common-2.7.6.jar
    -rw-r--r-- 1 yun yun 1558767 Jun 8 16:36 hadoop-mapreduce-client-core-2.7.6.jar
    -rw-r--r-- 1 yun yun 191881 Jun 8 16:36 hadoop-mapreduce-client-hs-2.7.6.jar
    -rw-r--r-- 1 yun yun 27830 Jun 8 16:36 hadoop-mapreduce-client-hs-plugins-2.7.6.jar
    -rw-r--r-- 1 yun yun 62954 Jun 8 16:36 hadoop-mapreduce-client-jobclient-2.7.6.jar
    -rw-r--r-- 1 yun yun 1561708 Jun 8 16:36 hadoop-mapreduce-client-jobclient-2.7.6-tests.jar
    -rw-r--r-- 1 yun yun 72054 Jun 8 16:36 hadoop-mapreduce-client-shuffle-2.7.6.jar
    -rw-r--r-- 1 yun yun 295697 Jun 8 16:36 hadoop-mapreduce-examples-2.7.6.jar
    drwxr-xr-x 2 yun yun 4096 Jun 8 16:36 lib
    drwxr-xr-x 2 yun yun 30 Jun 8 16:36 lib-examples
    drwxr-xr-x 2 yun yun 4096 Jun 8 16:36 sources
    [yun@mini04 mapreduce]$ hadoop jar hadoop-mapreduce-examples-2.7.6.jar wordcount /wordcount/input /wordcount/output
    18/06/10 09:46:09 INFO client.RMProxy: Connecting to ResourceManager at mini02/10.0.0.12:8032
    18/06/10 09:46:10 INFO input.FileInputFormat: Total input paths to process : 2
    18/06/10 09:46:10 INFO mapreduce.JobSubmitter: number of splits:2
    18/06/10 09:46:10 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1528589937101_0002
    18/06/10 09:46:10 INFO impl.YarnClientImpl: Submitted application application_1528589937101_0002
    18/06/10 09:46:10 INFO mapreduce.Job: The url to track the job: http://mini02:8088/proxy/application_1528589937101_0002/
    18/06/10 09:46:10 INFO mapreduce.Job: Running job: job_1528589937101_0002
    18/06/10 09:46:21 INFO mapreduce.Job: Job job_1528589937101_0002 running in uber mode : false
    18/06/10 09:46:21 INFO mapreduce.Job: map 0% reduce 0%
    18/06/10 09:46:30 INFO mapreduce.Job: map 100% reduce 0%
    18/06/10 09:46:36 INFO mapreduce.Job: map 100% reduce 100%
    18/06/10 09:46:37 INFO mapreduce.Job: Job job_1528589937101_0002 completed successfully
    18/06/10 09:46:37 INFO mapreduce.Job: Counters: 49
        File System Counters
            FILE: Number of bytes read=113
            FILE: Number of bytes written=368235
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=311
            HDFS: Number of bytes written=65
            HDFS: Number of read operations=9
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=2
        Job Counters
            Launched map tasks=2
            Launched reduce tasks=1
            Data-local map tasks=2
            Total time spent by all maps in occupied slots (ms)=12234
            Total time spent by all reduces in occupied slots (ms)=3651
            Total time spent by all map tasks (ms)=12234
            Total time spent by all reduce tasks (ms)=3651
            Total vcore-milliseconds taken by all map tasks=12234
            Total vcore-milliseconds taken by all reduce tasks=3651
            Total megabyte-milliseconds taken by all map tasks=12527616
            Total megabyte-milliseconds taken by all reduce tasks=3738624
        Map-Reduce Framework
            Map input records=16
            Map output records=15
            Map output bytes=151
            Map output materialized bytes=119
            Input split bytes=219
            Combine input records=15
            Combine output records=9
            Reduce input groups=8
            Reduce shuffle bytes=119
            Reduce input records=9
            Reduce output records=8
            Spilled Records=18
            Shuffled Maps =2
            Failed Shuffles=0
            Merged Map outputs=2
            GC time elapsed (ms)=308
            CPU time spent (ms)=2700
            Physical memory (bytes) snapshot=709656576
            Virtual memory (bytes) snapshot=6343041024
            Total committed heap usage (bytes)=473956352
        Shuffle Errors
            BAD_ID=0
            CONNECTION=0
            IO_ERROR=0
            WRONG_LENGTH=0
            WRONG_MAP=0
            WRONG_REDUCE=0
        File Input Format Counters
            Bytes Read=92
        File Output Format Counters
            Bytes Written=65
    [yun@mini05 zhangliang]$ hadoop fs -ls /wordcount/output
    Found 2 items
    -rw-r--r-- 3 yun supergroup 0 2018-06-10 09:46 /wordcount/output/_SUCCESS
    -rw-r--r-- 3 yun supergroup 65 2018-06-10 09:46 /wordcount/output/part-r-00000
    [yun@mini05 zhangliang]$ hadoop fs -cat /wordcount/output/part-r-00000
    111 2
    222 4
    333 2
    444 1
    555 1
    asdfghjkl 1
    qwertyuiop 3
    zxcvbnm 1
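For inputs this small, the job's result can be cross-checked locally without Hadoop. The sketch below recreates the two input files from section 2.1 in a temporary directory, counts whitespace-separated tokens with `awk`, and sorts them in byte order. It is a plain shell stand-in for the same counting logic, not a reimplementation of the MapReduce example itself.

```shell
#!/usr/bin/env bash
# Local cross-check of the wordcount result; no Hadoop involved.
workdir=$(mktemp -d)

# Same contents as the two input files shown in section 2.1
# (test.info ends with one empty line).
printf '%s\n' 111 222 333 444 555 333 222 222 222 '' > "$workdir/test.info"
printf '%s\n' zxcvbnm asdfghjkl qwertyuiop qwertyuiop 111 qwertyuiop > "$workdir/zhang.info"

# Count every whitespace-separated token, then sort the words in byte order.
counts=$(cat "$workdir"/*.info \
    | awk '{ for (i = 1; i <= NF; i++) seen[$i]++ }
           END { for (w in seen) printf "%s\t%d\n", w, seen[w] }' \
    | LC_ALL=C sort)

printf '%s\n' "$counts"
rm -rf "$workdir"
```

The eight word/count pairs it prints match the contents of `part-r-00000` above.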

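3. Example: developing a shell collection script

3.1. Requirements

The clickstream logs amount to 10 TB every day and sit on the business application servers. They need to be uploaded to the data warehouse (Hadoop HDFS) in near real time.

3.2. Requirements analysis

Files are usually uploaded around midnight: many kinds of business data have to be transferred at night, so the transfers are scheduled outside peak hours to reduce the load on the servers.

If pseudo-real-time upload is needed, upload on a schedule instead, for example once an hour.

3.3. Simulating web logs

Run a jar to simulate the web logs.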

    # Required directories
    # Simulated web server directory: /app/webservice
    # Simulated web log directory: /app/webservice/logs
    # Simulated staging directory for files awaiting upload: /app/webservice/logs/up2hdfs  # files are uploaded to HDFS from this directory
    [yun@mini01 webservice]$ pwd
    /app/webservice
    [yun@mini01 webservice]$ ll
    total 360
    drwxrwxr-x 3 yun yun 39 Jun 14 17:40 logs
    -rw-r--r-- 1 yun yun 367872 Jun 14 11:32 testlog.jar
    # Run the jar, which writes the logs
    [yun@mini01 webservice]$ java -jar testlog.jar &
    ……………………
    # Inspect the generated web logs
    [yun@mini01 logs]$ pwd
    /app/webservice/logs
    [yun@mini01 logs]$ ll -hrt
    total 460K
    -rw-rw-r-- 1 yun yun 11K Jun 14 17:28 access.log.7
    -rw-rw-r-- 1 yun yun 11K Jun 14 17:28 access.log.6
    -rw-rw-r-- 1 yun yun 11K Jun 14 17:28 access.log.5
    -rw-rw-r-- 1 yun yun 11K Jun 14 17:28 access.log.4
    -rw-rw-r-- 1 yun yun 11K Jun 14 17:29 access.log.3
    -rw-rw-r-- 1 yun yun 11K Jun 14 17:29 access.log.2
    -rw-rw-r-- 1 yun yun 11K Jun 14 17:29 access.log.1
    -rw-rw-r-- 1 yun yun 9.6K Jun 14 17:29 access.log

3.4. Running the shell script

    # Test the script
    [yun@mini01 hadoop]$ pwd
    /app/yunwei/hadoop
    [yun@mini01 hadoop]$ ll
    -rwxrwxr-x 1 yun yun 2657 Jun 14 17:27 uploadFile2Hdfs.sh
    [yun@mini01 hadoop]$ ./uploadFile2Hdfs.sh
    ………………
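The script itself is not reproduced in this post (it is in the repository linked in section 3.7). Judging only from the results shown in section 3.5, its flow appears to be: move the rotated logs into the staging directory with a timestamp suffix, upload each one with `hadoop fs -put`, mark uploaded files with `_DONE`, and write one line per file to a log. The sketch below illustrates that flow. It is not the author's `uploadFile2Hdfs.sh`; the function names `stage_logs` and `upload_staged` and the `failed` log line are assumptions made for this example.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of the upload flow; NOT the author's uploadFile2Hdfs.sh.

# stage_logs LOG_DIR STAGE_DIR STAMP
# Move rotated logs (access.log.1, access.log.2, ...) into the staging
# directory, appending _STAMP. The live access.log is left in place.
stage_logs() {
    local log_dir=$1 stage_dir=$2 stamp=$3 f
    mkdir -p "$stage_dir"
    for f in "$log_dir"/access.log.*; do
        [ -f "$f" ] || continue
        mv "$f" "$stage_dir/$(basename "$f")_$stamp"
    done
}

# upload_staged STAGE_DIR HDFS_DIR LOG_FILE
# Upload every staged file that is not yet marked _DONE, rename it on
# success, and append one line per file to LOG_FILE.
upload_staged() {
    local stage_dir=$1 hdfs_dir=$2 log_file=$3 f name
    hadoop fs -mkdir -p "$hdfs_dir" || return 1
    for f in "$stage_dir"/*; do
        [ -f "$f" ] || continue
        case $f in *_DONE) continue ;; esac   # already uploaded earlier
        name=$(basename "$f")
        if hadoop fs -put "$f" "$hdfs_dir/"; then
            mv "$f" "${f}_DONE"
            echo "$(date '+%Y-%m-%d %H:%M:%S') uploadFile2Hdfs.sh $name ok" >> "$log_file"
        else
            # Assumed format for failures; the original log only shows "ok" lines.
            echo "$(date '+%Y-%m-%d %H:%M:%S') uploadFile2Hdfs.sh $name failed" >> "$log_file"
        fi
    done
}
```

With the directories used in this example, a run would call `stage_logs /app/webservice/logs /app/webservice/logs/up2hdfs "$(date +%Y%m%d%H%M%S)"` followed by `upload_staged /app/webservice/logs/up2hdfs "/data/webservice/$(date +%Y%m%d)" /app/yunwei/hadoop/log/uploadFile2Hdfs.sh.log`. Leaving a file without the `_DONE` suffix when its upload fails means the next run picks it up again.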

3.5. Checking the results

3.5.1. Checking the script's results

Checking that the web logs were moved and uploaded

    # Check that the web logs were moved
    [yun@mini01 logs]$ pwd
    /app/webservice/logs
    [yun@mini01 logs]$ ll -hrt
    total 28K
    -rw-rw-r-- 1 yun yun 7.3K Jun 14 17:36 access.log  ### shows that the rotated logs have been moved
    drwxrwxr-x 2 yun yun 16K Jun 14 17:40 up2hdfs

    # Staging directory for files awaiting upload
    [yun@mini01 up2hdfs]$ pwd
    /app/webservice/logs/up2hdfs
    [yun@mini01 up2hdfs]$ ll -hrt
    -rw-rw-r-- 1 yun yun 11K Jun 14 17:35 access.log.7_20180614174001_DONE
    -rw-rw-r-- 1 yun yun 11K Jun 14 17:35 access.log.6_20180614174001_DONE
    -rw-rw-r-- 1 yun yun 11K Jun 14 17:35 access.log.5_20180614174001_DONE
    -rw-rw-r-- 1 yun yun 11K Jun 14 17:35 access.log.4_20180614174001_DONE
    -rw-rw-r-- 1 yun yun 11K Jun 14 17:35 access.log.3_20180614174001_DONE
    -rw-rw-r-- 1 yun yun 11K Jun 14 17:36 access.log.2_20180614174001_DONE
    -rw-rw-r-- 1 yun yun 11K Jun 14 17:36 access.log.1_20180614174001_DONE
    ## A file name ending in DONE means the file has been uploaded to HDFS

Checking the script log

    # View the shell script's log
    [yun@mini01 log]$ pwd
    /app/yunwei/hadoop/log
    [yun@mini01 log]$ ll
    total 28
    -rw-rw-r-- 1 yun yun 24938 Jun 14 17:40 uploadFile2Hdfs.sh.log
    [yun@mini01 log]$ cat uploadFile2Hdfs.sh.log
    2018-06-14 17:40:08 uploadFile2Hdfs.sh access.log.1_20180614174001 ok
    2018-06-14 17:40:10 uploadFile2Hdfs.sh access.log.2_20180614174001 ok
    2018-06-14 17:40:12 uploadFile2Hdfs.sh access.log.3_20180614174001 ok
    2018-06-14 17:40:14 uploadFile2Hdfs.sh access.log.4_20180614174001 ok
    2018-06-14 17:40:16 uploadFile2Hdfs.sh access.log.5_20180614174001 ok
    2018-06-14 17:40:18 uploadFile2Hdfs.sh access.log.6_20180614174001 ok
    2018-06-14 17:40:20 uploadFile2Hdfs.sh access.log.7_20180614174001 ok

3.5.2. Checking the uploaded files from the HDFS command line

    [yun@mini01 ~]$ hadoop fs -ls /data/webservice/20180614
    Found 7 items
    -rw-r--r-- 2 yun supergroup 10268 2018-06-14 17:08 /data/webservice/20180614/access.log.1_20180614174001
    -rw-r--r-- 2 yun supergroup 10268 2018-06-14 17:08 /data/webservice/20180614/access.log.2_20180614174001
    -rw-r--r-- 2 yun supergroup 10268 2018-06-14 17:08 /data/webservice/20180614/access.log.3_20180614174001
    -rw-r--r-- 2 yun supergroup 10268 2018-06-14 17:08 /data/webservice/20180614/access.log.4_20180614174001
    -rw-r--r-- 2 yun supergroup 10268 2018-06-14 17:08 /data/webservice/20180614/access.log.5_20180614174001
    -rw-r--r-- 2 yun supergroup 10268 2018-06-14 17:08 /data/webservice/20180614/access.log.6_20180614174001
    -rw-r--r-- 2 yun supergroup 10268 2018-06-14 17:08 /data/webservice/20180614/access.log.7_20180614174001

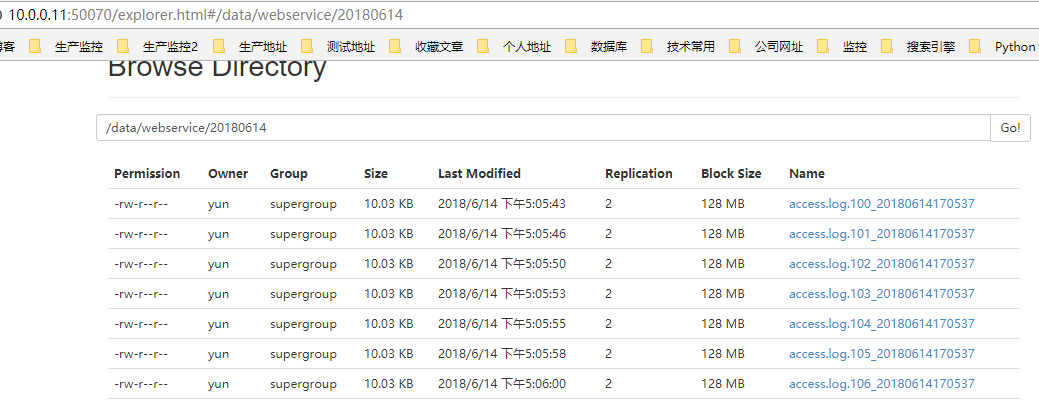

3.5.3. Checking in a browser

http://10.0.0.11:50070/explorer.html#/data/webservice/20180614 (path: /data/webservice/20180614)

3.6. Adding the script to cron

    [yun@mini01 hadoop]$ crontab -l

    # Upload web logs to HDFS once every hour
    00 */1 * * * /app/yunwei/hadoop/uploadFile2Hdfs.sh >/dev/null 2>&1

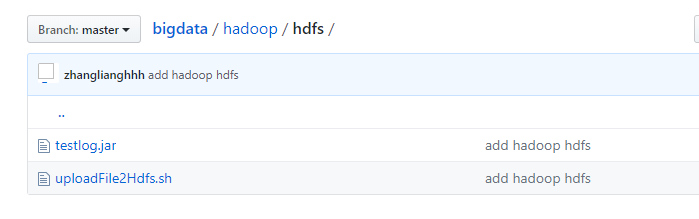

3.7. Related script and jar

They are on GitHub at the following path: https://github.com/zhanglianghhh/bigdata/tree/master/hadoop/hdfs