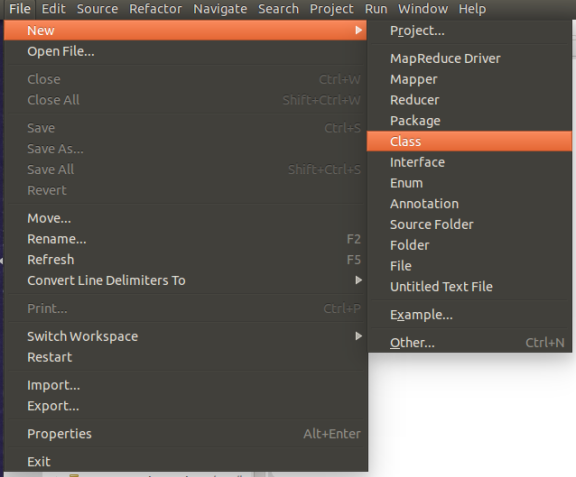

1.安装插件

-

下载插件hadoop-eclipse-plugin-2.6.0.jar并将其放到eclips安装目录->plugins(插件)文件夹下。然后启动eclipse。

-

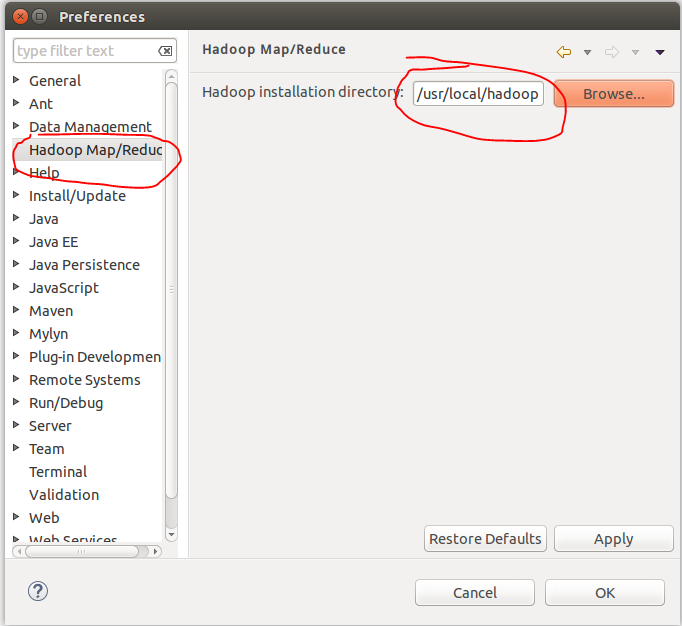

配置 hadoop 安装目录

-

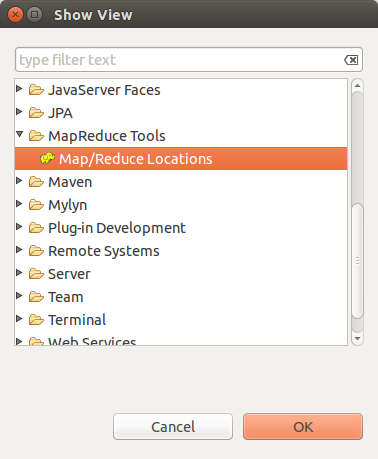

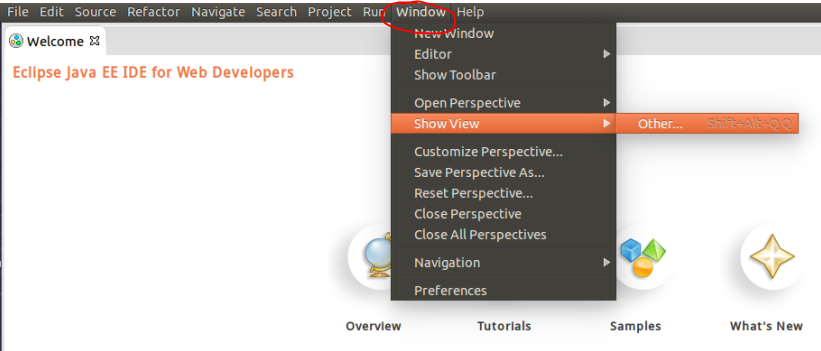

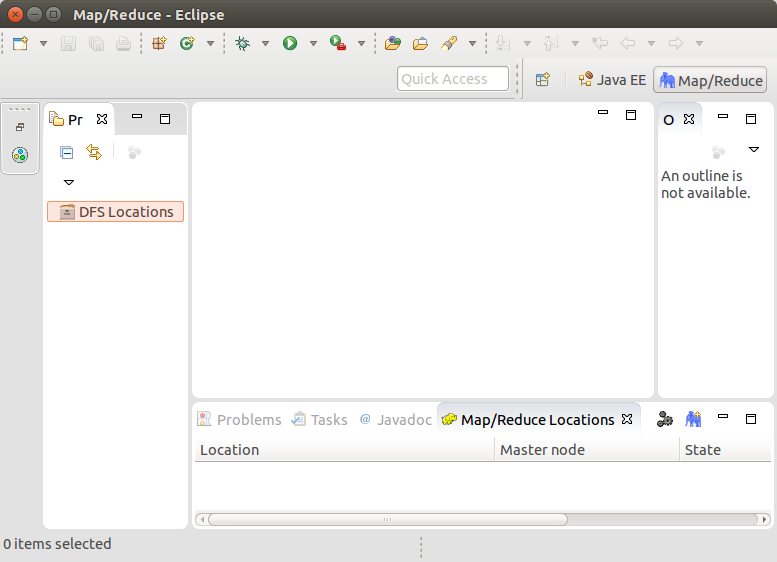

配置Map/Reduce 视图

- 点击"大象"

- 在“Map/Reduce Locations” Tab页 点击图标“大象”,选择“New Hadoop location…”,弹出对话框“New hadoop location…”。填写Location name和右边的Port:9000(与配置文件core-site.xml中的保持一致)。

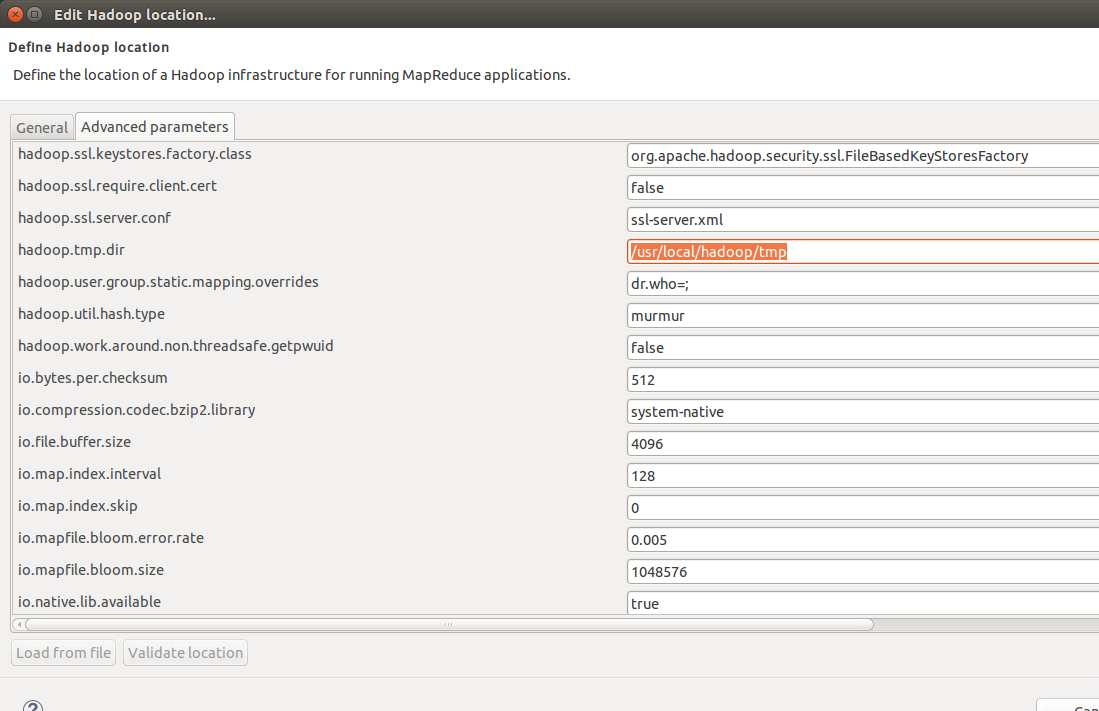

- 在Advanced paramenters中如下图所示找到hadoop.tmp.dir选项,与配置文件core-site.xml保持一致。

以及dfs.namenode.name.dir和dfs.datanode.data.dir与配置文件hdfs-site.xml保持一致

-

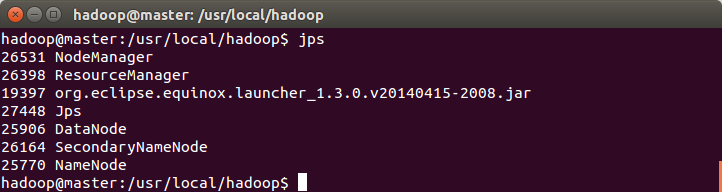

启动hadoop: sbin/start-all.sh 然后执行 jps。多一个org.eclipse.equinox.launcher...

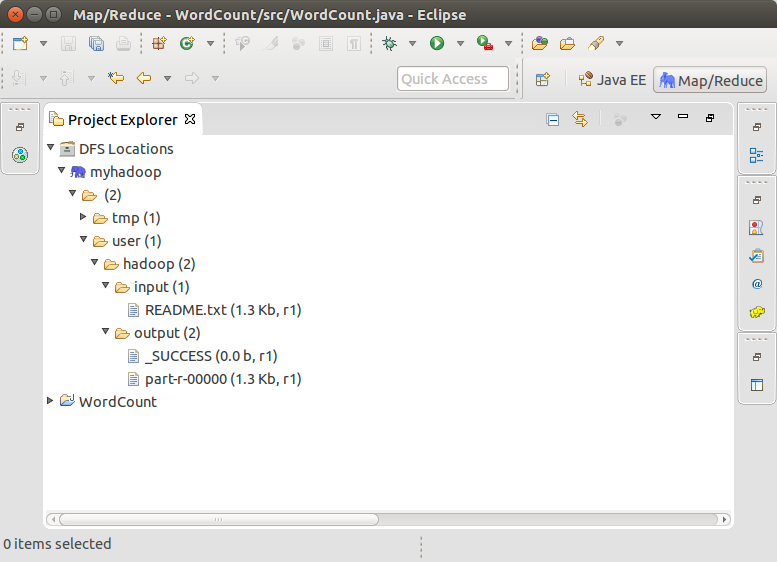

- 打开Project Explorer,查看HDFS文件系统。这是前篇文章中配置hadoop中的运行的结果。传送门

2.运行WordCount的例子

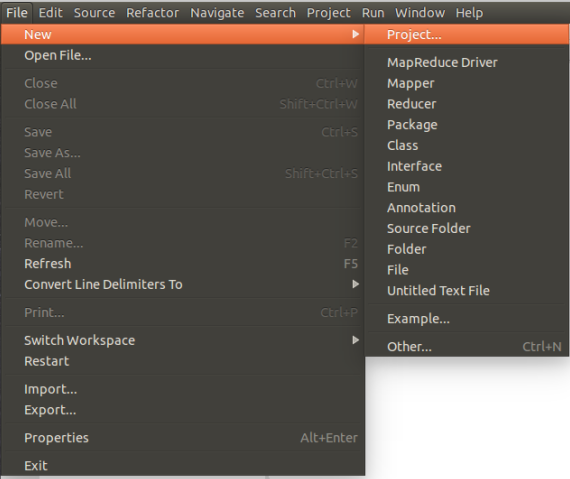

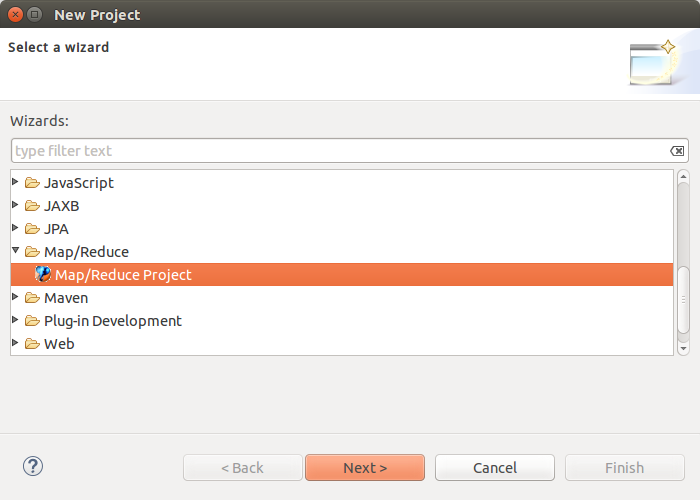

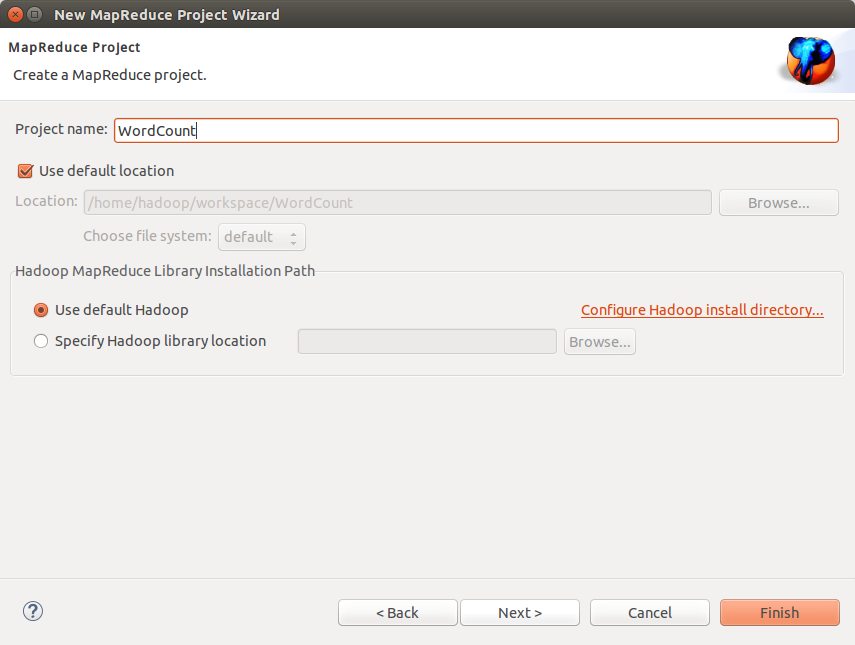

- 新建Map/Reduce任务

- 编写WordCount

import java.io.IOException;

import java.util.*;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.conf.*;

import org.apache.hadoop.io.*;

import org.apache.hadoop.mapred.*;

import org.apache.hadoop.util.*;

public class WordCount {

public static class Map extends MapReduceBase implements Mapper<LongWritable, Text, Text, IntWritable> {

private final static IntWritable one = new IntWritable(1);

private Text word = new Text();

public void map(LongWritable key, Text value, OutputCollector<Text, IntWritable> output, Reporter reporter) throws IOException {

String line = value.toString();

StringTokenizer tokenizer = new StringTokenizer(line);

while (tokenizer.hasMoreTokens()) {

word.set(tokenizer.nextToken());

output.collect(word, one);

}

}

}

public static class Reduce extends MapReduceBase implements Reducer<Text, IntWritable, Text, IntWritable> {

public void reduce(Text key, Iterator<IntWritable> values, OutputCollector<Text, IntWritable> output, Reporter reporter) throws IOException {

int sum = 0;

while (values.hasNext()) {

sum += values.next().get();

}

output.collect(key, new IntWritable(sum));

}

}

public static void main(String[] args) throws Exception {

JobConf conf = new JobConf(WordCount.class);

conf.setJobName("wordcount");

conf.setOutputKeyClass(Text.class);

conf.setOutputValueClass(IntWritable.class);

conf.setMapperClass(Map.class);

conf.setReducerClass(Reduce.class);

conf.setInputFormat(TextInputFormat.class);

conf.setOutputFormat(TextOutputFormat.class);

FileInputFormat.setInputPaths(conf, new Path(args[0]));

FileOutputFormat.setOutputPath(conf, new Path(args[1]));

JobClient.runJob(conf);

}

}

- 添加log4j.properties文件,很重要。

内容:

log4j.rootLogger=INFO, stdout log4j.appender.stdout=org.apache.log4j.ConsoleAppender log4j.appender.stdout.layout=org.apache.log4j.PatternLayout log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n log4j.appender.logfile=org.apache.log4j.FileAppender log4j.appender.logfile.File=target/spring.log log4j.appender.logfile.layout=org.apache.log4j.PatternLayout log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n

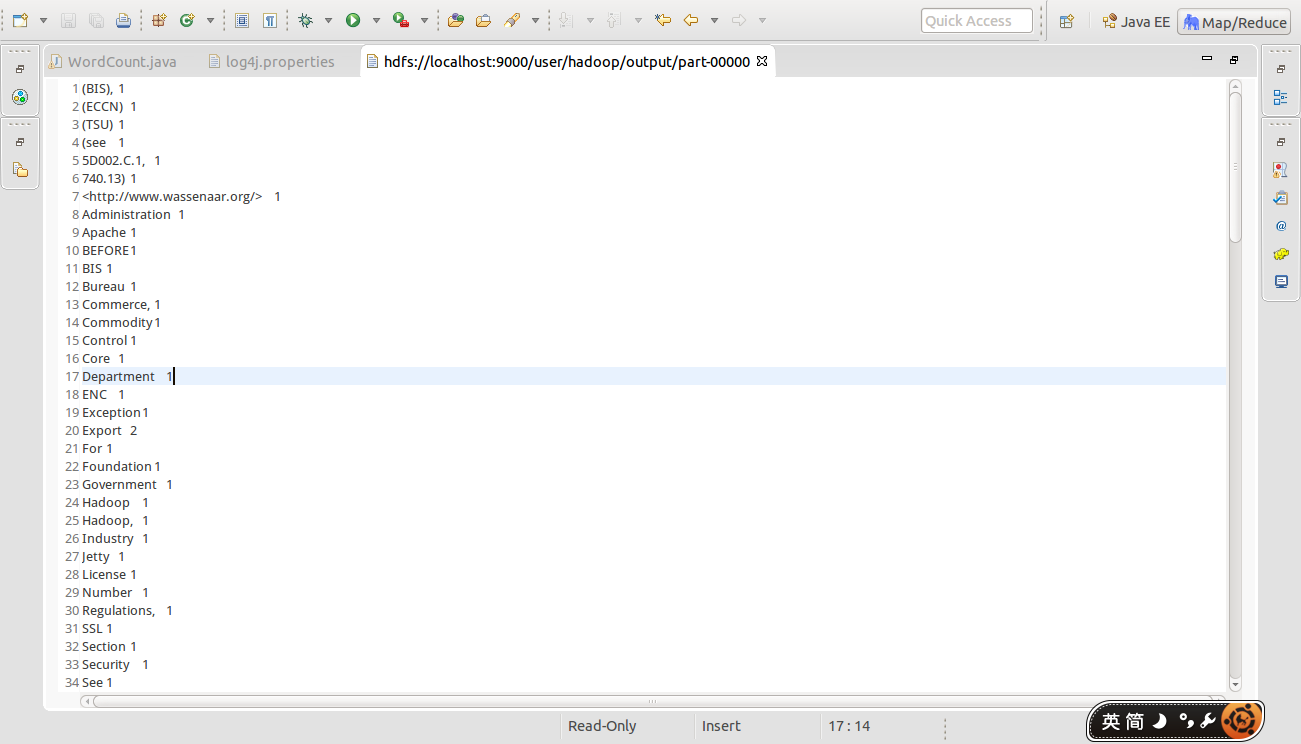

结果如下

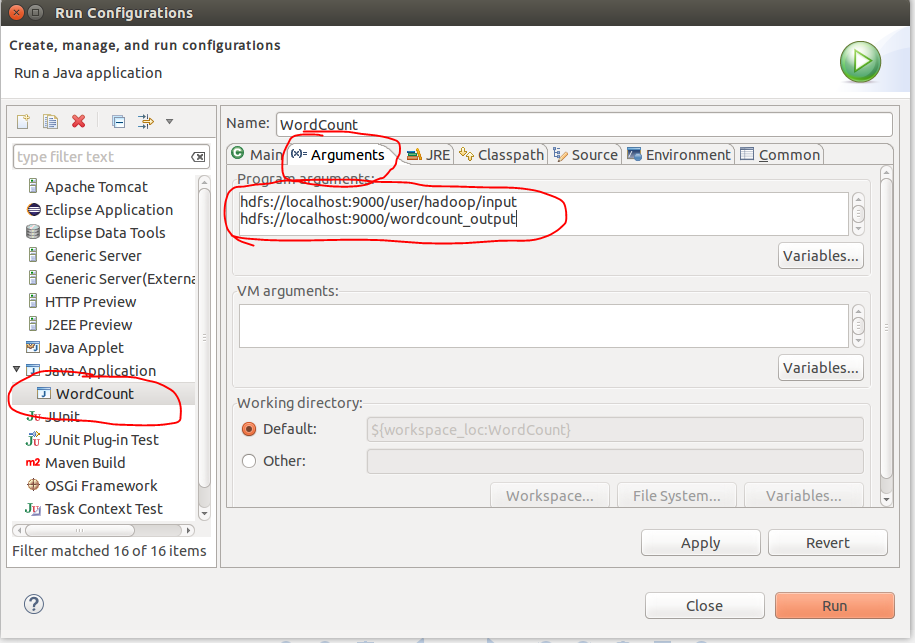

- 空白处右键,配置运行时参数

output写成hdfs://localhost:9000/user/hadoop/output 或者其他名,下面并没有写错。

注意:output文件夹在HDFS文件系统每次运行前必须重新删除,否则出错。或者写成其他名字亦可。

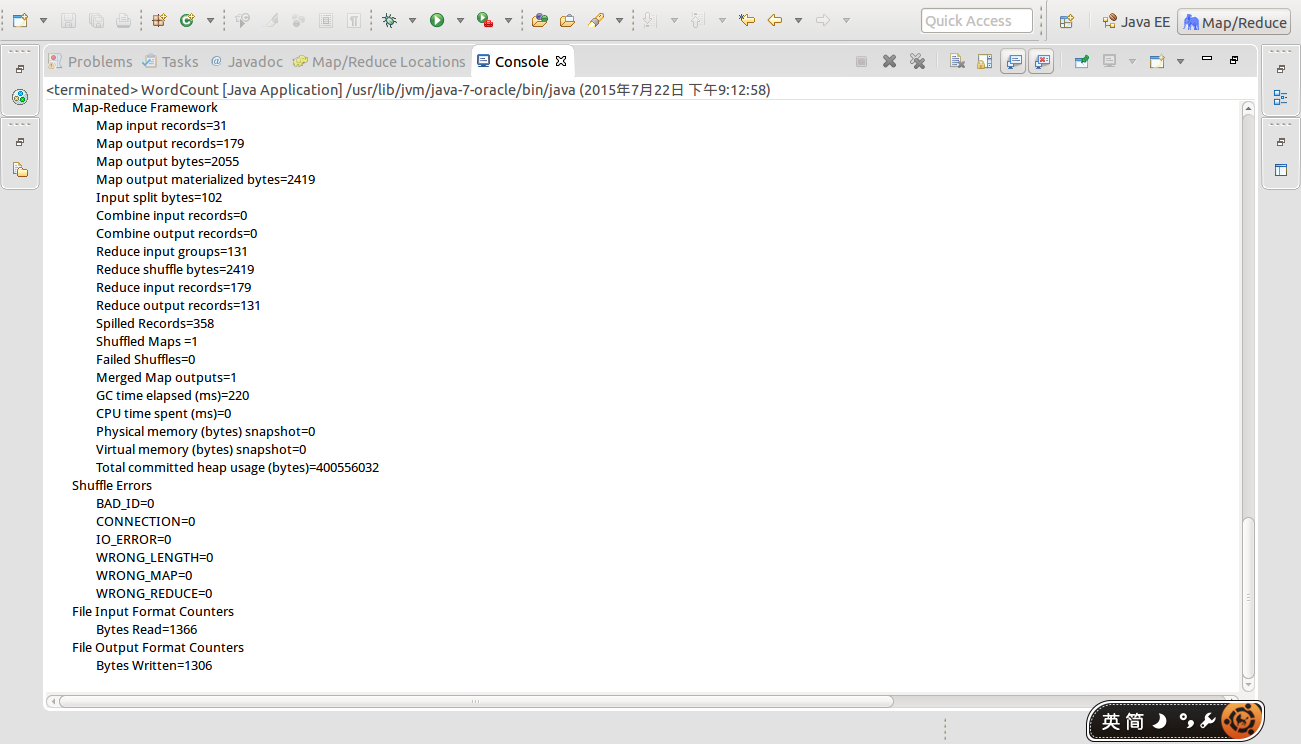

最后点Run运行。控制台输出

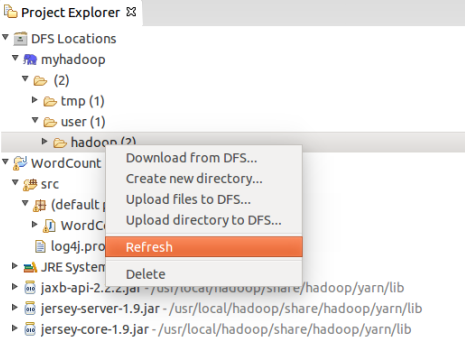

- Project Explorer反应并不及时,点击F5刷新或者:

- 最后查看结果,结果放在output文件夹中(与Run Configurations中配置的地址一致)