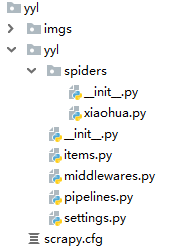

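# Downloading images from the Xiaohua (校花网) photo site: the same approach works for large files and for batches of video and audio files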

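Project workflow (`xx`, `hh` and `www.xx.com` are placeholders; the code below uses the project `yyl` and the spider name `xiaohua`):

```shell
scrapy startproject xx            # create the project
cd xx
scrapy genspider hh www.xx.com    # generate a spider skeleton
scrapy crawl hh                   # run the spider
```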

The spider, in `yyl/spiders/`:

```python
import scrapy
from yyl.items import YylItem


class ExampleSpider(scrapy.Spider):
    name = 'xiaohua'
    # allowed_domains = ['example.com']
    start_urls = ['http://www.521609.com/daxuemeinv/']

    def parse(self, response):
        li_lst = response.xpath('//*[@id="content"]/div[2]/div[2]/ul/li')
        for li in li_lst:
            src = li.xpath('./a/img/@src').extract_first()
            if not src:
                continue  # skip <li> elements that contain no image
            item = YylItem()  # instantiate the item
            # build the full image URL from the relative src attribute
            item['src'] = 'http://www.521609.com' + src
            # the pipeline takes care of downloading the URL; the same
            # mechanism works for videos, archives and other large files
            yield item
```
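The spider turns each relative `src` attribute into an absolute URL by prefixing the site root. A minimal stdlib sketch of that step, assuming hypothetical `src` values such as `/uploads/allimg/1.jpg`; it compares plain concatenation with `urllib.parse.urljoin` (the function Scrapy's `response.urljoin` builds on), which also copes with paths that are not root-relative or are already absolute:

```python
from urllib.parse import urljoin

PAGE_URL = 'http://www.521609.com/daxuemeinv/'
SITE_ROOT = 'http://www.521609.com'


def absolute_by_concat(src):
    # the approach used in the spider: prefix the site root to src
    return SITE_ROOT + src


def absolute_by_join(src):
    # resolve src against the page URL, the way a browser would
    return urljoin(PAGE_URL, src)


if __name__ == '__main__':
    # hypothetical src values: root-relative, page-relative, absolute
    for src in ['/uploads/allimg/1.jpg', 'thumb/2.jpg', 'http://cdn.example.com/3.jpg']:
        print(absolute_by_concat(src), '|', absolute_by_join(src))
```

Both give the same result for root-relative paths like the ones this site appears to use; only the joined version stays correct for the other two shapes.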

The item, in `yyl/items.py`:

```python
import scrapy


class YylItem(scrapy.Item):
    # define the fields for your item here like:
    src = scrapy.Field()  # absolute URL of the image to download
```

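The pipelines, in `yyl/pipelines.py` (`ImagesPipeline` requires the Pillow library, `pip install Pillow`):

```python
import scrapy
from scrapy.pipelines.images import ImagesPipeline


class YylPipeline:
    def process_item(self, item, spider):
        print(item)
        return item


# ImagesPipeline is Scrapy's built-in media pipeline for downloading images;
# its parent class FilesPipeline handles arbitrary files (video, archives, ...)
class ImgPipeline(ImagesPipeline):

    # issue the download request for the item's image URL
    def get_media_requests(self, item, info):
        yield scrapy.Request(url=item['src'])

    # choose the name (relative to IMAGES_STORE) of the downloaded file;
    # Scrapy 2.4+ also passes the source item as a keyword argument
    def file_path(self, request, response=None, info=None, *, item=None):
        url = request.url
        filename = url.split('/')[-1]
        return filename

    def item_completed(self, results, item, info):
        # results is a list of (success, info) tuples, e.g.
        # (True, {'url': ..., 'path': ..., 'checksum': ...})
        print(results)
        # same role as `return item` in process_item: hand the item
        # on to the next pipeline
        return item
```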
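The two hooks that shape the output of `ImgPipeline` are the file-name choice in `file_path` and the inspection of `results` in `item_completed`. A stdlib-only sketch of both, so the logic can be checked without running a crawl. The helper names `filename_from_url` and `successful_paths` are hypothetical, and the sample `results` list only imitates the `(success, info)` tuples Scrapy passes to `item_completed` (in a real crawl a failed entry holds a Twisted `Failure`, not a plain exception):

```python
import posixpath
from urllib.parse import urlsplit


def filename_from_url(url):
    # like url.split('/')[-1] in file_path, but ignores any ?query or #fragment
    path = urlsplit(url).path
    return posixpath.basename(path)


def successful_paths(results):
    # keep only the storage paths of downloads that succeeded
    return [info['path'] for ok, info in results if ok]


if __name__ == '__main__':
    # hypothetical sample imitating Scrapy's results list
    sample_results = [
        (True, {'url': 'http://www.521609.com/uploads/a.jpg',
                'path': 'a.jpg', 'checksum': 'placeholder'}),
        (False, Exception('download failed')),  # stand-in for a Failure
    ]
    print(filename_from_url('http://www.521609.com/uploads/a.jpg?v=2'))
    print(successful_paths(sample_results))
```

Naming files after the last URL segment is readable, but two different URLs ending in the same file name will overwrite each other under `IMAGES_STORE`; the default `file_path` avoids this by naming each file after a SHA1 hash of its URL.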

The settings, in `yyl/settings.py`:

```python
BOT_NAME = 'yyl'

SPIDER_MODULES = ['yyl.spiders']
NEWSPIDER_MODULE = 'yyl.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

# (the other commented-out settings generated by `scrapy startproject` are left at their defaults)

# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
# lower values run first: ImgPipeline (300) downloads, then YylPipeline (301) prints
ITEM_PIPELINES = {
    'yyl.pipelines.YylPipeline': 301,
    'yyl.pipelines.ImgPipeline': 300,
}

# directory where ImagesPipeline stores the downloaded files
IMAGES_STORE = './imgs'

# only log errors
LOG_LEVEL = 'ERROR'
```
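`ITEM_PIPELINES` maps each pipeline class to an order value, and Scrapy passes items through the pipelines from the lowest value to the highest. Here that means `ImgPipeline` (300) runs before `YylPipeline` (301): the image is downloaded first, and `ImgPipeline` then passes the item on so that `YylPipeline` prints it. A tiny sketch that derives that order from the same dictionary; it only illustrates the ordering rule and is not Scrapy's own code (`pipeline_order` is a hypothetical helper):

```python
ITEM_PIPELINES = {
    'yyl.pipelines.YylPipeline': 301,
    'yyl.pipelines.ImgPipeline': 300,
}


def pipeline_order(pipelines):
    # lower values run first; the key order in settings.py does not matter
    return [path for path, _ in sorted(pipelines.items(), key=lambda kv: kv[1])]


print(pipeline_order(ITEM_PIPELINES))
```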