1. Download and install Alertmanager

https://prometheus.io/download/

wget https://github.com/prometheus/alertmanager/releases/download/v0.16.0-alpha.0/alertmanager-0.16.0-alpha.0.linux-amd64.tar.gz
#Unpack
tar xf alertmanager-0.16.0-alpha.0.linux-amd64.tar.gz
mv alertmanager-0.16.0-alpha.0.linux-amd64 /usr/local/alertmanager
#Create the data directory
mkdir -p /data/alertmanager
#Create the service user
useradd prometheus
chown -R prometheus:prometheus /usr/local/alertmanager /data/alertmanager/
#Add the systemd unit
vim /usr/lib/systemd/system/alertmanager.service
[Unit]
Description=Alertmanager
After=network.target

[Service]
Type=simple
User=prometheus
ExecStart=/usr/local/alertmanager/alertmanager --config.file=/usr/local/alertmanager/alertmanager.yml --storage.path=/data/alertmanager
Restart=on-failure

[Install]
WantedBy=multi-user.target
#Start the service (reload systemd first so it picks up the new unit file)
systemctl daemon-reload
systemctl start alertmanager.service
#Check the listening port
[root@k8s-m alertmanger]# ss -lntp|grep 9093
LISTEN 0 128 :::9093 :::* users:(("alertmanager",pid=26298,fd=6))

Open it in a browser:

http://IP:9093
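Besides opening the page in a browser, the same check can be scripted. The following is a minimal sketch, assuming Alertmanager's `/-/healthy` endpoint is reachable at the address above; the function names `health_url` and `alertmanager_healthy` are made up for this example.

```python
import urllib.error
import urllib.request


def health_url(base_url: str) -> str:
    """Build the health endpoint URL from the Alertmanager base URL."""
    return base_url.rstrip("/") + "/-/healthy"


def alertmanager_healthy(base_url: str, timeout: float = 3.0) -> bool:
    """Return True if the health endpoint answers with HTTP 200."""
    try:
        with urllib.request.urlopen(health_url(base_url), timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure or a non-2xx answer.
        return False
```

Calling `alertmanager_healthy("http://IP:9093")` with the real address in place of `IP` should return `True` once the service is up.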

2. Set up monitoring alerts with Prometheus

Install Prometheus

https://www.cnblogs.com/zhangb8042/p/10204997.html

#Create a ConfigMap (it holds the alerting rules)

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config2
  namespace: kube-system
data:
  rules.yml: |
    groups:
    - name: test-rules
      rules:
      - alert: InstanceDown
        expr: up == 0
        for: 2m
        labels:
          team: node
        annotations:
          summary: "{{$labels.instance}}: has been down"
          description: "{{$labels.instance}}: job {{$labels.job}} has been down"
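The `for: 2m` clause in the InstanceDown rule means the alert does not fire on the first failed evaluation: it stays pending until `up == 0` has held for two minutes in a row. A rough sketch of that state handling, assuming evaluations every 15 seconds as configured later in this post; the class `AlertState` is a made-up name for illustration and not Prometheus code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AlertState:
    """Tracks one alert instance across rule evaluations."""
    hold_seconds: int                    # the rule's "for" duration
    active_since: Optional[int] = None   # time the expression first became true

    def evaluate(self, now: int, expr_true: bool) -> str:
        if not expr_true:
            # Any evaluation where the expression is false resets the timer.
            self.active_since = None
            return "inactive"
        if self.active_since is None:
            self.active_since = now
        if now - self.active_since >= self.hold_seconds:
            return "firing"
        return "pending"


if __name__ == "__main__":
    state = AlertState(hold_seconds=120)
    for t in range(0, 135, 15):
        print(t, state.evaluate(t, expr_true=True))
```

Only firing alerts are sent on to Alertmanager, so a target that recovers within the two minutes never produces a notification.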

Edit the downloaded prometheus.deploy.yml (mount the ConfigMap created above)

apiVersion: apps/v1beta2 # removed in Kubernetes 1.16; use apps/v1 on newer clusters
kind: Deployment
metadata:
  labels:
    name: prometheus-deployment
  name: prometheus
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
      - image: prom/prometheus:v2.0.0
        name: prometheus
        command:
        - "/bin/prometheus"
        args:
        - "--config.file=/etc/prometheus/prometheus.yml"
        - "--storage.tsdb.path=/prometheus"
        - "--storage.tsdb.retention=24h"
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: "/prometheus"
          name: data
        - mountPath: "/etc/prometheus"
          name: config-volume
        - mountPath: "/etc/rules"
          name: rules
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
          limits:
            cpu: 500m
            memory: 2500Mi
      serviceAccountName: prometheus
      volumes:
      - name: data
        emptyDir: {}
      - name: config-volume
        configMap:
          name: prometheus-config
      - name: rules
        configMap:
          name: prometheus-config2

#Edit the original ConfigMap

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-system
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
      scrape_timeout: 15s
      external_labels:
        monitor: 'codelab_monitor'
    alerting:
      alertmanagers:
      - static_configs:
        - targets: ["172.31.0.248:9093"] # Alertmanager address
    rule_files: # rule files are loaded at startup and on config reload; the rules are evaluated every evaluation_interval
    - "/etc/rules/rules.yml"
    scrape_configs:
    - job_name: 'prometheus' # job_name is attached to every scraped series as the job label, so it can be used in queries
      scrape_interval: 15s # scrape period; defaults to the global setting
      static_configs: # static targets
      - targets: ['localhost:9090'] # address that Prometheus scrapes

    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
    - job_name: 'kubernetes-nodes'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics
    - job_name: 'kubernetes-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name

    - job_name: 'kubernetes-services'
      kubernetes_sd_configs:
      - role: service
      metrics_path: /probe
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__address__]
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        target_label: kubernetes_name
    - job_name: 'kubernetes-ingresses'
      kubernetes_sd_configs:
      - role: ingress
      relabel_configs:
      - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
        regex: (.+);(.+);(.+)
        replacement: ${1}://${2}${3}
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_ingress_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_ingress_name]
        target_label: kubernetes_name

    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
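The `__address__` rewrite in the kubernetes-pods and kubernetes-service-endpoints jobs is the least obvious rule in this config. It joins the two source labels with `;`, matches the result against the whole pattern, and writes `$1:$2` back to `__address__`. Below is a small sketch of that behaviour, assuming the digit classes in the pattern are written as `\d`. Python's `re` stands in for the RE2 engine Prometheus uses, and `relabel_replace` is a made-up helper, not a Prometheus API.

```python
import re
from typing import Dict, List


def relabel_replace(labels: Dict[str, str], source_labels: List[str], regex: str,
                    replacement: str, target_label: str, separator: str = ";") -> Dict[str, str]:
    """Mimic a relabel rule with action: replace on a copy of the label set."""
    value = separator.join(labels.get(name, "") for name in source_labels)
    match = re.fullmatch(regex, value)   # relabel regexes match the whole value
    result = dict(labels)
    if match is None:
        return result                    # no match: labels stay unchanged
    # Translate $1 / ${1} references into Python's \g<1> form.
    template = re.sub(r"\$\{?(\d+)\}?", r"\\g<\1>", replacement)
    result[target_label] = match.expand(template)
    return result


if __name__ == "__main__":
    pod = {
        "__address__": "10.0.0.5:8080",
        "__meta_kubernetes_pod_annotation_prometheus_io_port": "9102",
    }
    rewritten = relabel_replace(
        pod,
        ["__address__", "__meta_kubernetes_pod_annotation_prometheus_io_port"],
        r"([^:]+)(?::\d+)?;(\d+)",
        "$1:$2",
        "__address__",
    )
    print(rewritten["__address__"])
```

If the port annotation is missing, the joined value ends in `;` with no digits after it, the pattern does not match, and the discovered address is left alone.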

#Re-apply all the manifests

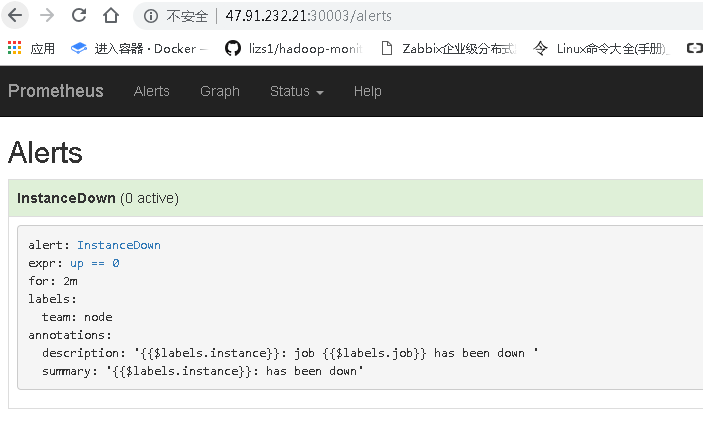

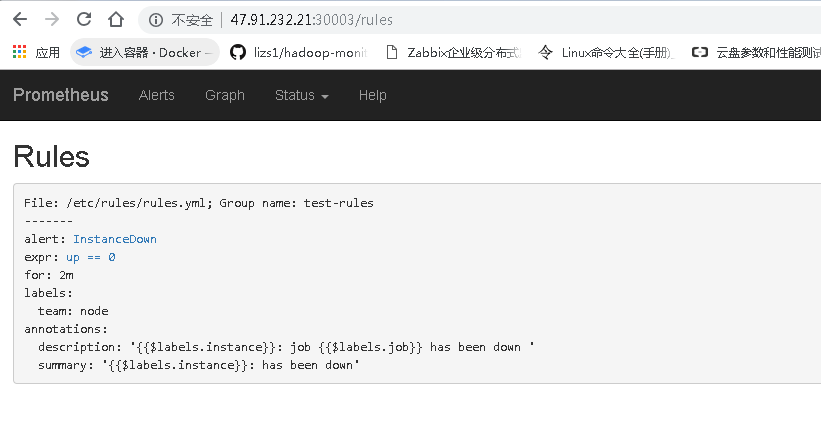

#Check the targets and alert rules on the Prometheus pages

#Configure Alertmanager notifications (I use email for now; WeChat and DingTalk are also supported)

Edit alertmanager.yml

[root@k8s-m prometheus]# cat /usr/local/alertmanager/alertmanager.yml

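global:
  resolve_timeout: 5m # an alert that has not been updated for this long is declared resolved; default 5m
  smtp_smarthost: 'smtp.163.com:25' # SMTP server (host:port)
  smtp_from: 'xxxx@163.com' # sender address
  smtp_auth_username: 'xxxx@163.com' # SMTP login
  smtp_auth_password: 'xxxxxxxxx' # SMTP password
route:
  group_by: ['alertname'] # labels used to group alerts
  group_wait: 10s # how long to wait before sending the first notification for a new group
  group_interval: 10s # how long to wait before notifying about new alerts added to an existing group
  repeat_interval: 1m # how long to wait before re-sending a notification for alerts that are still firing
  receiver: 'email' # default receiver; must match a name under receivers below
receivers:
- name: 'email' # receiver name
  email_configs: # email settings
  - to: '18305944341@163.com' # recipient address
inhibit_rules:
- source_match:
    severity: 'critical'
  target_match:
    severity: 'warning'
  equal: ['alertname', 'dev', 'instance']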
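The inhibit rule at the end of this file mutes a `warning` alert while a `critical` alert with the same `alertname`, `dev` and `instance` values is firing. A simplified sketch of that check follows, with alerts modelled as plain label dictionaries. `is_inhibited` is a hypothetical helper, not part of Alertmanager, and it treats a label missing on both sides as equal, which is how the Alertmanager documentation describes `equal`.

```python
from typing import Dict, Iterable, List

Labels = Dict[str, str]


def matches(alert: Labels, matchers: Labels) -> bool:
    """True if every matcher label has exactly the given value on the alert."""
    return all(alert.get(name) == value for name, value in matchers.items())


def is_inhibited(target: Labels, firing: Iterable[Labels], source_match: Labels,
                 target_match: Labels, equal: List[str]) -> bool:
    """Decide whether `target` is muted by any currently firing source alert."""
    if not matches(target, target_match):
        return False
    for source in firing:
        if source is target or not matches(source, source_match):
            continue
        # A missing label is compared as an empty string.
        if all(source.get(name, "") == target.get(name, "") for name in equal):
            return True
    return False


if __name__ == "__main__":
    critical = {"alertname": "InstanceDown", "instance": "node1", "severity": "critical"}
    warning = {"alertname": "InstanceDown", "instance": "node1", "severity": "warning"}
    print(is_inhibited(warning, [critical, warning],
                       {"severity": "critical"}, {"severity": "warning"},
                       ["alertname", "dev", "instance"]))
```

Note that the InstanceDown rule defined earlier sets only a `team` label and no `severity`, so this inhibit rule has no effect on it as written.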

#Restart Alertmanager

systemctl restart alertmanager.service

#Stop the kubelet on a node

#After a while, check Prometheus (the alert has fired)

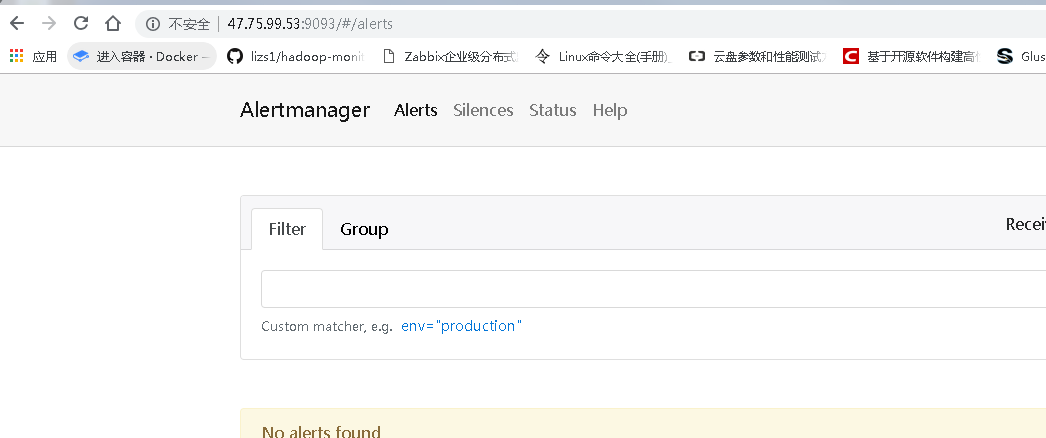

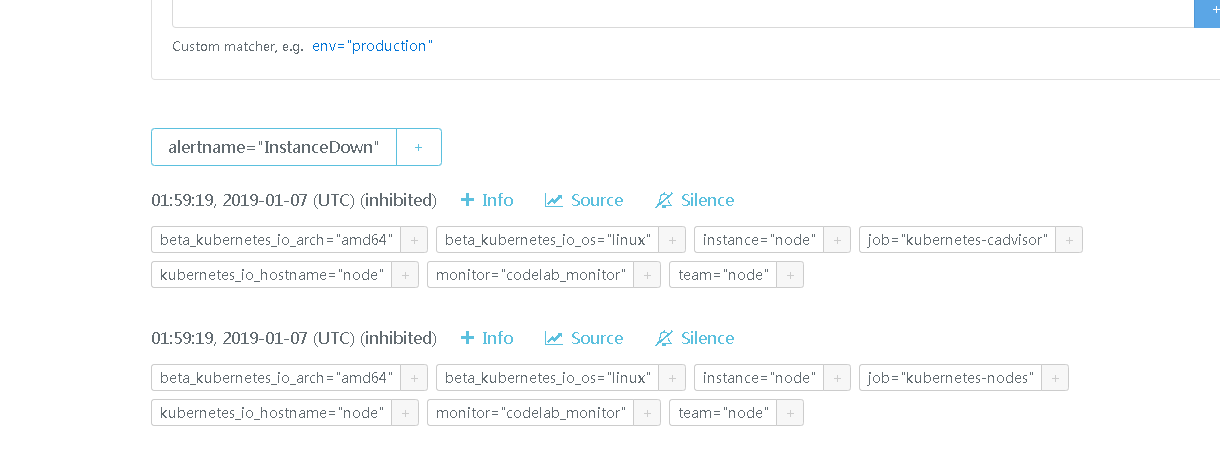

#Check Alertmanager

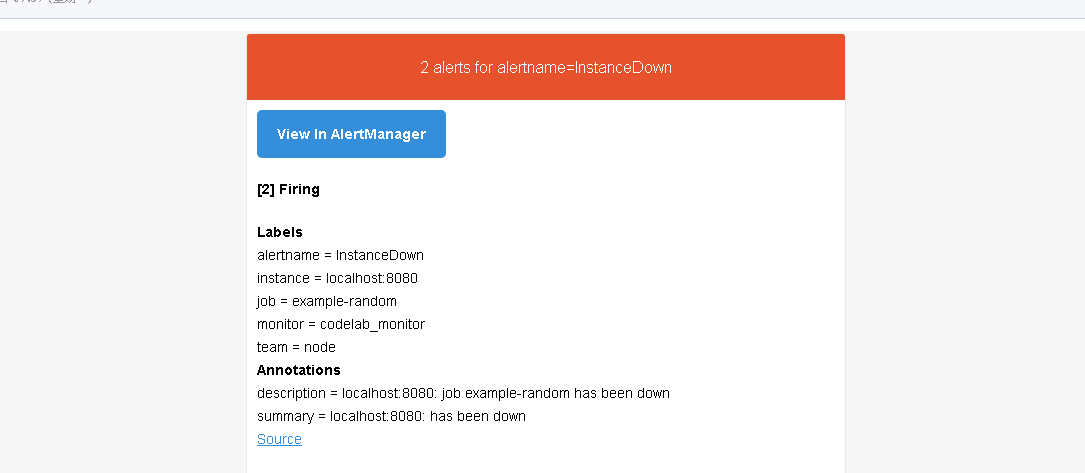

#Check the mailbox

#Configure DingTalk alerts

1. Download the prometheus-webhook-dingtalk plugin

wget https://github.com/timonwong/prometheus-webhook-dingtalk/releases/download/v0.3.0/prometheus-webhook-dingtalk-0.3.0.linux-amd64.tar.gz

tar xf prometheus-webhook-dingtalk-0.3.0.linux-amd64.tar.gz

mv prometheus-webhook-dingtalk-0.3.0.linux-amd64 /usr/local

ln -s /usr/local/prometheus-webhook-dingtalk-0.3.0.linux-amd64 /usr/local/prometheus-webhook-dingtalk

cp default.tmpl{,.default}

vim default.tmpl #Edit the alert template

{{ define "__subject" }}[{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing | len }}{{ end }}] {{ .GroupLabels.SortedPairs.Values | join " " }} {{ if gt (len .CommonLabels) (len .GroupLabels) }}({{ with .CommonLabels.Remove .GroupLabels.Names }}{{ .Values | join " " }}{{ end }}){{ end }}{{ end }}

{{ define "__alertmanagerURL" }}{{ .ExternalURL }}/#/alerts?receiver={{ .Receiver }}{{ end }}

{{ define "__text_alert_list" }}{{ range . }}

**Labels**

{{ range .Labels.SortedPairs }}> - {{ .Name }}: {{ .Value | markdown | html }}

{{ end }}

**Annotations**

{{ range .Annotations.SortedPairs }}> - {{ .Name }}: {{ .Value | markdown | html }}

{{ end }}

**Source:** [{{ .GeneratorURL }}]({{ .GeneratorURL }})

{{ end }}{{ end }}

{{ define "ding.link.title" }}{{ template "__subject" . }}{{ end }}

{{ define "ding.link.content" }}#### [{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing | len }}{{ end }}] **[{{ index .GroupLabels "alertname" }}]({{ template "__alertmanagerURL" . }})**

{{ template "__text_alert_list" .Alerts.Firing }}

{{ end }}

#Start prometheus-webhook-dingtalk

/usr/local/prometheus-webhook-dingtalk/prometheus-webhook-dingtalk --web.listen-address="0.0.0.0:8060" --ding.profile="ops_dingding=https://oapi.dingtalk.com/robot/send?access_token=xxxxxxxxxxxxx" #DingTalk robot webhook URL
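Before pointing Alertmanager at the bridge, it can help to post a hand-built notification to it and see whether a message reaches the DingTalk group. The sketch below builds a payload in the shape of Alertmanager's webhook format (version 4). It assumes the bridge accepts that format on `/dingtalk/ops_dingding/send`, the path used in the Alertmanager config in the next step. The helper names and the sample label values are invented for the example.

```python
import json
import urllib.request
from datetime import datetime, timezone
from typing import Any, Dict


def build_test_payload(alertname: str, instance: str, receiver: str = "webhook") -> Dict[str, Any]:
    """Build a single-alert notification shaped like an Alertmanager webhook body."""
    labels = {"alertname": alertname, "instance": instance, "team": "node"}
    annotations = {"summary": f"{instance}: has been down"}
    alert = {
        "status": "firing",
        "labels": labels,
        "annotations": annotations,
        "startsAt": datetime.now(timezone.utc).isoformat(),
        "endsAt": "0001-01-01T00:00:00Z",
        "generatorURL": "http://localhost:9090/graph",  # placeholder value
    }
    return {
        "version": "4",
        "groupKey": f'{{}}:{{alertname="{alertname}"}}',
        "status": "firing",
        "receiver": receiver,
        "groupLabels": {"alertname": alertname},
        "commonLabels": labels,
        "commonAnnotations": annotations,
        "externalURL": "http://localhost:9093",  # placeholder value
        "alerts": [alert],
    }


def post_payload(url: str, payload: Dict[str, Any], timeout: float = 5.0) -> int:
    """POST the payload as JSON and return the HTTP status code."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

With the bridge running, `post_payload("http://localhost:8060/dingtalk/ops_dingding/send", build_test_payload("InstanceDown", "node1:10250"))` would send one test message.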

#2. Edit alertmanager.yml

[root@k8s-m alertmanager]# cat alertmanager.yml

global:
  resolve_timeout: 5m
route:
  receiver: webhook
  group_wait: 30s
  group_interval: 5m
  repeat_interval: 4h
  group_by: [alertname]
  routes:
  - receiver: webhook
    group_wait: 10s
    match:
      team: node
receivers:
- name: webhook
  webhook_configs:
  - url: http://localhost:8060/dingtalk/ops_dingding/send
    send_resolved: true
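In this routing tree both the top-level route and the child route send to `webhook`. The only difference is that alerts labelled `team: node` use a shorter `group_wait`, because a child route inherits any setting it does not override. A simplified sketch of how a route is chosen, covering only equality matchers (`match`) and no `continue` handling; `resolve_route` is a hypothetical helper, not Alertmanager code.

```python
from typing import Any, Dict, Optional

INHERITED_KEYS = ("receiver", "group_by", "group_wait", "group_interval", "repeat_interval")


def resolve_route(labels: Dict[str, str], route: Dict[str, Any],
                  parent: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Return the effective settings of the deepest route that matches the alert."""
    effective = dict(parent or {})
    effective.update({key: route[key] for key in INHERITED_KEYS if key in route})
    for child in route.get("routes", []):
        matchers = child.get("match", {})
        if all(labels.get(name) == value for name, value in matchers.items()):
            # The first matching child wins and inherits the parent's settings.
            return resolve_route(labels, child, effective)
    return effective


ROUTE = {
    "receiver": "webhook",
    "group_wait": "30s",
    "group_interval": "5m",
    "repeat_interval": "4h",
    "group_by": ["alertname"],
    "routes": [
        {"receiver": "webhook", "group_wait": "10s", "match": {"team": "node"}},
    ],
}

if __name__ == "__main__":
    print(resolve_route({"alertname": "InstanceDown", "team": "node"}, ROUTE))
```

For the InstanceDown alert from this post, which carries `team: node`, the first notification goes out after 10 seconds; any other alert waits the default 30 seconds.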

#Restart Alertmanager

systemctl restart alertmanager.service

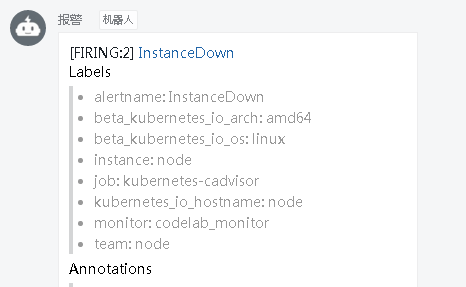

#Check the alert in DingTalk