| Hostname | IP | Installed services |
| --- | --- | --- |
| master | 192.168.30.130 | mfsmaster, mfsmetalogger |
| node-1 | 192.168.30.131 | chunkserver |
| node-2 | 192.168.30.132 | mfsclient |
| node-3 | 192.168.30.133 | chunkserver |

Install on master

    [root@master ~]# yum install -y rpm-build gcc gcc-c++ fuse-devel zlib-devel

Upload the source package:

    [root@master ~]# rz

Add the user that MFS runs as, then unpack, configure, and build:

    [root@master ~]# useradd -s /sbin/nologin mfs
    [root@master ~]# tar -xf mfs-1.6.27-5.tar.gz -C /usr/local/src/
    [root@master ~]# cd /usr/local/src/mfs-1.6.27/
    [root@master mfs-1.6.27]# ./configure --prefix=/usr/local/mfs \
    > --with-default-user=mfs \
    > --with-default-group=mfs
    [root@master mfs-1.6.27]# make -j 4 && make install
    [root@master mfs-1.6.27]# echo $?
    0
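
Before running `./configure` it can help to confirm that the build tools are actually on the PATH. The sketch below is an illustration and not part of the original walkthrough: `missing_cmds` is a hypothetical helper that prints every command name from its arguments that cannot be found.

```bash
# Hypothetical helper: print each named command that is not on the PATH.
missing_cmds() {
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
    done
}

# Example: check the tools the build above relies on.
# An empty result means everything was found.
# missing_cmds gcc g++ make tar
```

It only checks executables; development headers such as fuse-devel and zlib-devel still have to be verified through the package manager.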

Directory layout

    [root@master mfs-1.6.27]# cd /usr/local/mfs/
    [root@master mfs]# ll
    total 20
    drwxr-xr-x 2 root root 4096 Jun 4 14:56 bin    # client tools
    drwxr-xr-x 3 root root 4096 Jun 4 14:56 etc    # server configuration files
    drwxr-xr-x 2 root root 4096 Jun 4 14:56 sbin   # server daemons
    drwxr-xr-x 4 root root 4096 Jun 4 14:56 share  # man pages
    drwxr-xr-x 3 root root 4096 Jun 4 14:56 var    # metadata directory; can be moved elsewhere in the configuration file

Create the configuration files

    [root@master mfs]# cd /usr/local/mfs/etc/mfs/
    [root@master mfs]# ll
    total 28
    -rw-r--r-- 1 root root  531 Jun 4 14:56 mfschunkserver.cfg.dist
    -rw-r--r-- 1 root root 4060 Jun 4 14:56 mfsexports.cfg.dist
    -rw-r--r-- 1 root root   57 Jun 4 14:56 mfshdd.cfg.dist
    -rw-r--r-- 1 root root 1020 Jun 4 14:56 mfsmaster.cfg.dist
    -rw-r--r-- 1 root root  417 Jun 4 14:56 mfsmetalogger.cfg.dist
    -rw-r--r-- 1 root root  404 Jun 4 14:56 mfsmount.cfg.dist
    -rw-r--r-- 1 root root 1123 Jun 4 14:56 mfstopology.cfg.dist
    [root@master mfs]# cp mfsmaster.cfg.dist mfsmaster.cfg            # master configuration
    [root@master mfs]# cp mfsexports.cfg.dist mfsexports.cfg          # export (shared directory) configuration
    [root@master mfs]# cp mfsmetalogger.cfg.dist mfsmetalogger.cfg    # metadata logger configuration
    [root@master mfs]# cp /usr/local/mfs/var/mfs/metadata.mfs.empty /usr/local/mfs/var/mfs/metadata.mfs

A fresh master installation ships an empty metadata file named metadata.mfs.empty. The MooseFS master cannot run without metadata.mfs, so the empty file is copied into place. The file lives in /usr/local/mfs/var/mfs/ (it appears in the listing of that directory later on), not in etc/mfs, which is why the copy uses full paths.
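
The `cp NAME.cfg.dist NAME.cfg` step repeats on every node, so it can be expressed as a loop. This is a minimal sketch under the assumption that every `.cfg.dist` file in the directory should get a live copy; `activate_dist_configs` is a hypothetical name, and existing `.cfg` files are deliberately left untouched.

```bash
# Hypothetical helper: for every NAME.cfg.dist in a directory, create NAME.cfg
# unless it already exists, so edited configs are never overwritten.
activate_dist_configs() {
    dir=$1
    for dist in "$dir"/*.cfg.dist; do
        [ -e "$dist" ] || continue          # no *.cfg.dist files at all
        cfg=${dist%.dist}                   # strip the trailing ".dist"
        [ -e "$cfg" ] || cp -p "$dist" "$cfg"
    done
}

# Example: activate_dist_configs /usr/local/mfs/etc/mfs
```

Copying templates a node does not use (for example mfschunkserver.cfg on the master) is harmless, because each daemon reads only its own file.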

Start the service

    [root@master mfs]# chown -R mfs:mfs /usr/local/mfs/
    [root@master mfs]# /usr/local/mfs/sbin/mfsmaster start
    working directory: /usr/local/mfs/var/mfs
    lockfile created and locked
    initializing mfsmaster modules ...
    loading sessions ... file not found
    if it is not fresh installation then you have to restart all active mounts !!!
    exports file has been loaded
    mfstopology configuration file (/usr/local/mfs/etc/mfstopology.cfg) not found - using defaults
    loading metadata ...
    create new empty filesystemmetadata file has been loaded
    no charts data file - initializing empty charts
    master <-> metaloggers module: listen on *:9419
    master <-> chunkservers module: listen on *:9420
    main master server module: listen on *:9421
    mfsmaster daemon initialized properly

That is the output of the very first start. Once a sessions file and metadata from a previous run exist, a later start prints the following instead:

    [root@master mfs]# /usr/local/mfs/sbin/mfsmaster start
    working directory: /usr/local/mfs/var/mfs
    lockfile created and locked
    initializing mfsmaster modules ...
    loading sessions ... ok
    sessions file has been loaded
    exports file has been loaded
    mfstopology configuration file (/usr/local/mfs/etc/mfstopology.cfg) not found - using defaults
    loading metadata ...
    loading objects (files,directories,etc.) ... ok
    loading names ... ok
    loading deletion timestamps ... ok
    loading chunks data ... ok
    checking filesystem consistency ... ok
    connecting files and chunks ... ok
    all inodes: 1
    directory inodes: 1
    file inodes: 0
    chunks: 0
    metadata file has been loaded
    stats file has been loaded
    master <-> metaloggers module: listen on *:9419
    master <-> chunkservers module: listen on *:9420
    main master server module: listen on *:9421
    mfsmaster daemon initialized properly

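Check that the ports are listening

    [root@master ~]# netstat -antup | grep 942*
    tcp 0 0 0.0.0.0:9419        0.0.0.0:*             LISTEN      2502/mfsmaster
    tcp 0 0 0.0.0.0:9420        0.0.0.0:*             LISTEN      2502/mfsmaster
    tcp 0 0 0.0.0.0:9421        0.0.0.0:*             LISTEN      2502/mfsmaster
    tcp 0 0 0.0.0.0:3260        0.0.0.0:*             LISTEN      1942/tgtd
    tcp 0 0 192.168.30.130:3260 192.168.30.131:33414  ESTABLISHED 1942/tgtd
    tcp 0 0 :::3260             :::*                  LISTEN      1942/tgtd

Only the three mfsmaster lines matter here. As a regular expression, 942* matches any line containing 94, so the unrelated tgtd lines (PID 1942) are listed as well.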
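
A stricter filter avoids unrelated matches such as the tgtd lines. The sketch below assumes the column layout shown in the netstat output above (state in field 6, PID/program in field 7); `mfs_listen_ports` is a hypothetical helper name.

```bash
# Hypothetical helper: read `netstat -antup` output on stdin and print the
# local port of every socket that mfsmaster holds in LISTEN state.
mfs_listen_ports() {
    awk '$6 == "LISTEN" && $7 ~ /\/mfsmaster$/ {
        n = split($4, part, ":")   # local address looks like 0.0.0.0:9419
        print part[n]
    }'
}

# Usage (on the master): netstat -antup | mfs_listen_ports
```

Matching on the program name and the LISTEN state, instead of a bare number pattern, keeps PIDs and client ports from producing false hits.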

Start on boot, and stop the service

    [root@master ~]# echo "/usr/local/mfs/sbin/mfsmaster start" >> /etc/rc.local
    [root@master ~]# chmod +x /etc/rc.local
    [root@master ~]# /usr/local/mfs/sbin/mfsmaster stop
    sending SIGTERM to lock owner (pid:2502)
    waiting for termination ... terminated
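
Appending with `>>` adds a duplicate line every time the setup is repeated. A minimal sketch of an idempotent alternative follows; `append_once` is a hypothetical helper, not something MooseFS provides.

```bash
# Hypothetical helper: append a line to a file only if that exact line
# is not already present, so re-running the setup does not duplicate it.
append_once() {
    file=$1
    line=$2
    [ -f "$file" ] || : > "$file"
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Example: append_once /etc/rc.local "/usr/local/mfs/sbin/mfsmaster start"
```

`grep -x -F` compares whole lines literally, so paths containing dots or slashes are not misread as patterns.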

View the metadata and log directory

    [root@master ~]# ll /usr/local/mfs/var/mfs/
    total 764
    -rw-r----- 1 mfs mfs     95 Jun 4 15:48 metadata.mfs
    -rw-r----- 1 mfs mfs     95 Jun 4 15:33 metadata.mfs.back.1
    -rw-r--r-- 1 mfs mfs      8 Jun 4 14:56 metadata.mfs.empty
    -rw-r----- 1 mfs mfs     10 Jun 4 15:21 sessions.mfs
    -rw-r----- 1 mfs mfs 762516 Jun 4 15:48 stats.mfs

Configure the export permissions

    [root@master ~]# cd /usr/local/mfs/etc/mfs/
    [root@master mfs]# vim mfsexports.cfg
    # Allow "meta".
    *                 .    rw
    # add the following two lines below the line above
    192.168.30.131    /    rw,alldirs,maproot=0
    192.168.30.132    /    rw,alldirs,maproot=0
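
Each export entry has three whitespace-separated fields: client address, exported directory, and options. When several clients share the same options, the entries can be generated instead of typed. The sketch below is illustrative only, and `export_entries` is a hypothetical name.

```bash
# Hypothetical helper: print one mfsexports.cfg entry per client address,
# all sharing the same exported directory and option string.
export_entries() {
    dir=$1
    opts=$2
    shift 2
    for addr in "$@"; do
        printf '%s\t%s\t%s\n' "$addr" "$dir" "$opts"
    done
}

# Example, reproducing the two lines added above:
# export_entries / rw,alldirs,maproot=0 192.168.30.131 192.168.30.132
```

The output can be reviewed first and then appended to mfsexports.cfg; the master has to reload or restart before new entries take effect.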

Start the service

    [root@master mfs]# sh /etc/rc.local
    working directory: /usr/local/mfs/var/mfs
    lockfile created and locked
    initializing mfsmaster modules ...
    loading sessions ... ok
    sessions file has been loaded
    exports file has been loaded
    mfstopology configuration file (/usr/local/mfs/etc/mfstopology.cfg) not found - using defaults
    loading metadata ...
    loading objects (files,directories,etc.) ... ok
    loading names ... ok
    loading deletion timestamps ... ok
    loading chunks data ... ok
    checking filesystem consistency ... ok
    connecting files and chunks ... ok
    all inodes: 1
    directory inodes: 1
    file inodes: 0
    chunks: 0
    metadata file has been loaded
    stats file has been loaded
    master <-> metaloggers module: listen on *:9419
    master <-> chunkservers module: listen on *:9420
    main master server module: listen on *:9421
    mfsmaster daemon initialized properly

Configure the metadata logger (metalogger) on master

    [root@master src]# rm -rf mfs-1.6.27/
    [root@master src]# ls /root/
    anaconda-ks.cfg  install.log.syslog   Pictures
    Desktop          ip_forward~          Public
    Documents        ip_forwarz~          Templates
    Downloads        mfs-1.6.27-5.tar.gz  Videos
    install.log      Music
    [root@master src]# tar -xf /root/mfs-1.6.27-5.tar.gz -C /usr/local/src/
    [root@master src]# cd mfs-1.6.27/
    [root@master mfs-1.6.27]# ./configure \
    > --prefix=/usr/local/mfsmeta \
    > --with-default-user=mfs \
    > --with-default-group=mfs
    [root@master mfs-1.6.27]# make -j 4 && make install && echo $? && cd /usr/local/mfsmeta && ls
    .....
    0
    bin  etc  sbin  share  var
    [root@master mfsmeta]# pwd
    /usr/local/mfsmeta
    [root@master mfsmeta]# cd etc/mfs/
    [root@master mfs]# cp mfsmetalogger.cfg.dist mfsmetalogger.cfg
    [root@master mfs]# vim mfsmetalogger.cfg
    ..........
    MASTER_HOST = 192.168.30.130
    .......

Starting and stopping the service:

    [root@master mfs]# chown -R mfs:mfs /usr/local/mfsmeta/
    [root@master mfs]# /usr/local/mfsmeta/sbin/mfsmetalogger start
    working directory: /usr/local/mfsmeta/var/mfs
    lockfile created and locked
    initializing mfsmetalogger modules ...
    mfsmetalogger daemon initialized properly
    [root@master mfs]# echo "/usr/local/mfsmeta/sbin/mfsmetalogger start " >> /etc/rc.local    # start on boot
    [root@master mfs]# /usr/local/mfsmeta/sbin/mfsmetalogger stop
    sending SIGTERM to lock owner (pid:7535)
    waiting for termination ... terminated
    [root@master mfs]# /usr/local/mfsmeta/sbin/mfsmetalogger start
    working directory: /usr/local/mfsmeta/var/mfs
    lockfile created and locked
    initializing mfsmetalogger modules ...
    mfsmetalogger daemon initialized properly

Check the port:

    [root@master mfs]# lsof -i :9419
    COMMAND    PID USER   FD   TYPE DEVICE SIZE/OFF NODE NAME
    mfsmaster 2258  mfs    8u  IPv4  14653      0t0  TCP *:9419 (LISTEN)
    mfsmaster 2258  mfs   11u  IPv4  21223      0t0  TCP master:9419->master:44790 (ESTABLISHED)
    mfsmetalo 7542  mfs    8u  IPv4  21222      0t0  TCP master:44790->master:9419 (ESTABLISHED)
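
Editing `MASTER_HOST` by hand in vim works, but the same change is needed again on every chunk server. The following is a minimal sketch of a scripted edit, assuming the `KEY = value` format with optional leading `#` seen in these files. `set_cfg_key` is a hypothetical helper, and it assumes the value contains no `|`, `&`, or backslash characters.

```bash
# Hypothetical helper: set KEY = VALUE in a MooseFS-style config file.
# An existing line for the key (commented out or not) is replaced in place;
# if the key does not occur at all, a new line is appended.
set_cfg_key() {
    file=$1
    key=$2
    value=$3
    tmp=$(mktemp) || return 1
    if grep -Eq "^#?[[:space:]]*${key}[[:space:]]*=" "$file"; then
        sed -E "s|^#?[[:space:]]*${key}[[:space:]]*=.*|${key} = ${value}|" "$file" > "$tmp"
    else
        cat "$file" > "$tmp"
        printf '%s = %s\n' "$key" "$value" >> "$tmp"
    fi
    # Write back through the original file so owner and mode are preserved.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Example: set_cfg_key /usr/local/mfsmeta/etc/mfs/mfsmetalogger.cfg MASTER_HOST 192.168.30.130
```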

Install the data server (chunk server) on node-1. A chunk server stores data as chunks or fragments, each kept as a file on an ordinary file system, so complete files are never visible on the chunk server itself.

    [root@node-1 ~]# tar -xf mfs-1.6.27-5.tar.gz -C /usr/local/src/
    [root@node-1 ~]# cd /usr/local/src/mfs-1.6.27/
    [root@node-1 mfs-1.6.27]# useradd -s /sbin/nologin mfs
    [root@node-1 mfs-1.6.27]# ./configure --prefix=/usr/local/mfschunk --with-default-user=mfs --with-default-group=mfs
    [root@node-1 mfs-1.6.27]# make -j 4 && make install && echo $? && cd /usr/local/mfschunk
    ............
    0
    [root@node-1 mfschunk]# pwd
    /usr/local/mfschunk
    [root@node-1 mfschunk]# ls
    etc  sbin  share  var
    [root@node-1 mfschunk]# cd etc/mfs/

Create the configuration files and edit them:

    [root@node-1 mfs]# cp mfschunkserver.cfg.dist mfschunkserver.cfg
    [root@node-1 mfs]# cp mfshdd.cfg.dist mfshdd.cfg
    [root@node-1 mfs]# vim mfschunkserver.cfg
    # WORKING_USER = mfs                         # user the daemon runs as
    # WORKING_GROUP = mfs                        # group the daemon runs as
    # SYSLOG_IDENT = mfschunkserver              # identifier in syslog, used to pick out this service's log entries
    # LOCK_MEMORY = 0                            # whether to call mlockall() so the process is not swapped out (default 0)
    # NICE_LEVEL = -19                           # scheduling priority (default -19)
    # DATA_PATH = /usr/local/mfschunk/var/mfs    # data directory; holds three kinds of files (changelog, sessions, stats)
    # MASTER_RECONNECTION_DELAY = 5
    # BIND_HOST = *
    # name or address of the metadata server (host name or IP both work)
    MASTER_HOST = 192.168.30.130
    # default is 9420; the line may be uncommented
    MASTER_PORT = 9420
    # MASTER_TIMEOUT = 60
    # CSSERV_LISTEN_HOST = *
    # CSSERV_LISTEN_PORT = 9422                  # used for connections with other chunk servers, typically for replication
    # HDD_CONF_FILENAME = /usr/local/mfschunk/etc/mfs/mfshdd.cfg    # file that lists the disk space MFS may use
    # HDD_TEST_FREQ = 10
    # deprecated, to be removed in MooseFS 1.7
    # LOCK_FILE = /var/run/mfs/mfschunkserver.lock
    # BACK_LOGS = 50
    # CSSERV_TIMEOUT = 5

Define the MFS storage location. /tmp is used here as an example; in production this is normally a dedicated disk.

    [root@node-1 mfs]# vim mfshdd.cfg
    # mount points of HDD drives
    #
    #/mnt/hd1
    #/mnt/hd2
    #etc.
    /tmp

Start the service:

    [root@node-1 mfs]# chown -R mfs:mfs /usr/local/mfschunk/
    [root@node-1 mfs]# /usr/local/mfschunk/sbin/mfschunkserver start
    working directory: /usr/local/mfschunk/var/mfs
    lockfile created and locked
    initializing mfschunkserver modules ...
    hdd space manager: path to scan: /tmp/
    hdd space manager: start background hdd scanning (searching for available chunks)
    main server module: listen on *:9422
    no charts data file - initializing empty charts
    mfschunkserver daemon initialized properly
    [root@node-1 mfs]# echo "/usr/local/mfschunk/sbin/mfschunkserver start " >> /etc/rc.local
    [root@node-1 mfs]# chmod +x /etc/rc.local
    [root@node-1 mfs]# ls /tmp/    # nothing but chunk directories; not meant to be read by hand
    00  11  22  33  44  55  66  77  88  99  AA  BB  CC  DD  EE  FF
    01  12  23  34  45  56  67  78  89  9A  AB  BC  CD  DE  EF
    02  13  24  35  46  57  68  79  8A  9B  AC  BD  CE  DF  F0
    03  14  25  36  47  58  69  7A  8B  9C  AD  BE  CF  E0  F1
    04  15  26  37  48  59  6A  7B  8C  9D  AE  BF  D0  E1  F2
    05  16  27  38  49  5A  6B  7C  8D  9E  AF  C0  D1  E2  F3
    06  17  28  39  4A  5B  6C  7D  8E  9F  B0  C1  D2  E3  F4
    07  18  29  3A  4B  5C  6D  7E  8F  A0  B1  C2  D3  E4  F5
    08  19  2A  3B  4C  5D  6E  7F  90  A1  B2  C3  D4  E5  F6
    09  1A  2B  3C  4D  5E  6F  80  91  A2  B3  C4  D5  E6  F7
    0A  1B  2C  3D  4E  5F  70  81  92  A3  B4  C5  D6  E7  F8
    0B  1C  2D  3E  4F  60  71  82  93  A4  B5  C6  D7  E8  F9
    0C  1D  2E  3F  50  61  72  83  94  A5  B6  C7  D8  E9  FA
    0D  1E  2F  40  51  62  73  84  95  A6  B7  C8  D9  EA  FB
    0E  1F  30  41  52  63  74  85  96  A7  B8  C9  DA  EB  FC
    0F  20  31  42  53  64  75  86  97  A8  B9  CA  DB  EC  FD
    10  21  32  43  54  65  76  87  98  A9  BA  CB  DC  ED  FE

Stopping the service:

    [root@node-1 mfs]# /usr/local/mfschunk/sbin/mfschunkserver stop
    sending SIGTERM to lock owner (pid:7395)
    waiting for termination ... terminated

Configure the client on node-2

    [root@node-2 ~]# yum install -y rpm-build gcc gcc-c++ fuse-devel zlib-devel lrzsz
    [root@node-2 ~]# useradd -s /sbin/nologin mfs
    [root@node-2 ~]# rz
    [root@node-2 ~]# tar -xf mfs-1.6.27-5.tar.gz -C /usr/local/src/
    [root@node-2 ~]# cd /usr/local/src/mfs-1.6.27/
    [root@node-2 mfs-1.6.27]# ./configure --prefix=/usr/local/mfsclient \
    > --with-default-user=mfs \
    > --with-default-group=mfs \
    > --enable-mfsmount
    [root@node-2 mfs-1.6.27]# make -j 4 && make install && echo $? && cd /usr/local/mfsclient
    ......
    0
    [root@node-2 mfsclient]# pwd
    /usr/local/mfsclient
    [root@node-2 mfsclient]# cd bin/
    [root@node-2 bin]# ls
    mfsappendchunks  mfsfileinfo    mfsgettrashtime  mfsrgettrashtime  mfssetgoal
    mfscheckfile     mfsfilerepair  mfsmakesnapshot  mfsrsetgoal       mfssettrashtime
    mfsdeleattr      mfsgeteattr    mfsmount         mfsrsettrashtime  mfssnapshot
    mfsdirinfo       mfsgetgoal     mfsrgetgoal      mfsseteattr       mfstools
    [root@node-2 bin]# mkdir /mfs
    [root@node-2 bin]# lsmod | grep fuse
    fuse 73530 2

If the fuse module does not show up here, load it manually:

    [root@node-2 ~]# modprobe fuse

Make the mfsmount command available on the PATH:

    [root@node-2 bin]# ln -s /usr/local/mfsclient/bin/mfsmount /usr/bin/mfsmount

Test the mount:

    [root@node-2 ~]# mfsmount /mfs/ -H 192.168.30.130 -p
    MFS Password:
    mfsmaster accepted connection with parameters: read-write,restricted_ip ; root mapped to root:root
    [root@node-2 ~]# df -h
    Filesystem            Size  Used Avail Use% Mounted on
    /dev/mapper/vg_master-LogVol00
                           18G  4.2G   13G  25% /
    tmpfs                 2.0G   72K  2.0G   1% /dev/shm
    /dev/sda1             194M   35M  150M  19% /boot
    /dev/sr0              3.6G  3.6G     0 100% /media/cdrom
    192.168.30.130:9421    13G     0   13G   0% /mfs
    [root@node-2 ~]# echo "modprobe fuse" >> /etc/rc.local
    [root@node-2 ~]# echo "/usr/local/mfsclient/bin/mfsmount /mfs -H 192.168.30.130 -p" >> /etc/rc.local
    [root@node-2 ~]# chmod +x /etc/rc.local

Note that `-p` asks for the password interactively, so the rc.local entry may wait for input at boot once a password is configured on the master.
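
Running the mount line from rc.local a second time would try to mount over an existing mount. One way to guard against that is to check the mount table first. This is a sketch under the assumption that the table has the /proc/mounts layout (mount point in the second field); `is_mounted` is a hypothetical helper.

```bash
# Hypothetical helper: succeed if something is mounted at the given path.
# Reads a mounts table in /proc/mounts format (second field = mount point).
is_mounted() {
    mnt=${1%/}                     # tolerate a trailing slash, e.g. /mfs/
    [ -n "$mnt" ] || mnt=/
    table=${2:-/proc/mounts}
    awk -v mnt="$mnt" '$2 == mnt { found = 1 } END { exit !found }' "$table"
}

# Usage sketch, with the mount command from this walkthrough:
# is_mounted /mfs || /usr/local/mfsclient/bin/mfsmount /mfs -H 192.168.30.130 -p
```

The optional second argument exists only so the check can be tried against a saved copy of the mount table.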

Check the storage state on node-1

    [root@node-1 ~]# tree /tmp/
    /tmp/
    ├── 00
    ├── 01
    ├── 02
    ├── 03
    ├── 04
    ├── 05
    ├── 06
    ├── 07
    ├── 08
    ├── 09
    ├── 0A
    ..............
    265 directories, 63 files

On node-2, copy a file into the mount:

    [root@node-2 ~]# cp /etc/passwd /mfs/

Then look again on node-1:

    [root@node-1 ~]# tree /tmp/
    /tmp/
    ├── 00
    ├── 01
    │   └── chunk_0000000000000001_00000001.mfs
    ├── 02
    ├── 03
    ├── 04
    ├── 05
    ├── 06
    ├── 07
    ├── 08
    ├── 09
    .........
    265 directories, 64 files
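
Comparing two long `tree` listings by eye is error-prone; counting the chunk files gives the same information as a single number. A minimal sketch, where `count_chunks` is a hypothetical helper that relies on the `chunk_*.mfs` file names visible in the listing:

```bash
# Hypothetical helper: count chunk files (chunk_*.mfs) below a storage path.
count_chunks() {
    find "$1" -type f -name 'chunk_*.mfs' | wc -l | tr -d ' '
}

# Example: run before and after copying a file on the client.
# count_chunks /tmp
```

Unlike the file total printed by `tree`, this ignores unrelated files that happen to live in the same directory, which matters when /tmp is used as the storage path.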

The complete file is visible on node-2

    [root@node-2 ~]# ls /mfs/
    passwd

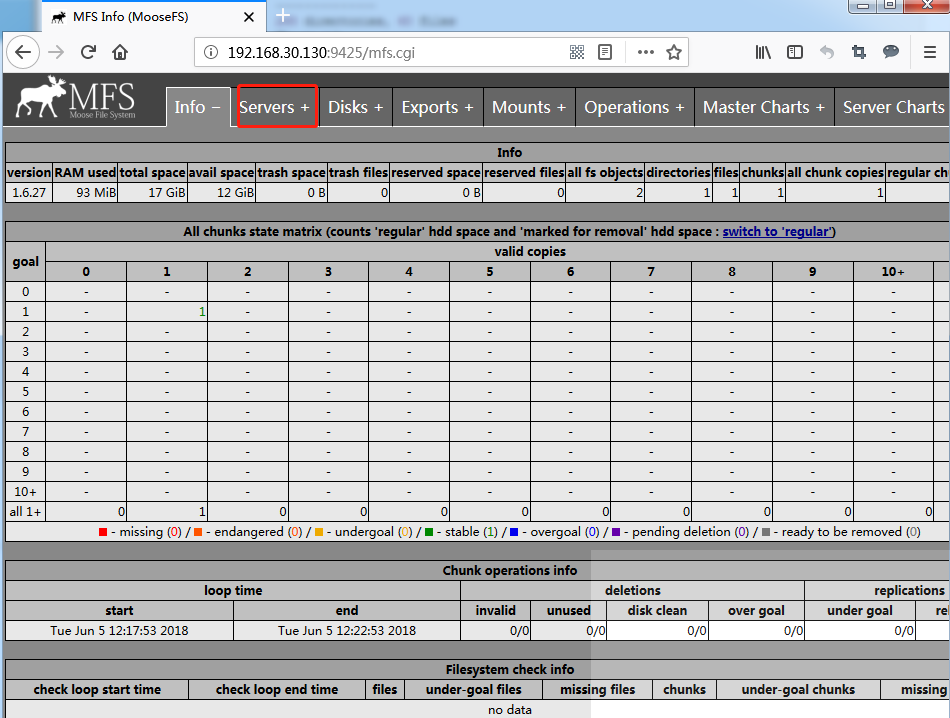

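Start the web monitor on master. It is mainly used to monitor the status of every MFS node, and it can be deployed on any machine.

    [root@master mfs]# /usr/local/mfs/sbin/mfscgiserv start
    lockfile created and locked
    starting simple cgi server (host: any , port: 9425 , rootpath: /usr/local/mfs/share/mfscgi)

With the server listening on port 9425 on all addresses, the page should be reachable from a browser at http://192.168.30.130:9425/.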

View the permissions

Configure a password, add another storage node, and set the number of copies

On master

    [root@master mfs]# cd /usr/local/mfs/etc/mfs
    [root@master mfs]# vim mfsexports.cfg
    # Allow everything but "meta".
    *                  /    rw,alldirs,maproot=0
    # Allow "meta".
    *                  .    rw
    192.168.30.131     /    rw,alldirs,maproot=0
    192.168.30.132     /    rw,alldirs,maproot=0,password=123456
    192.168.30.0/24    /    rw,alldirs,maproot=0

Add a chunk server on node-3:

    [root@node-3 ~]# yum install -y rpm-build gcc gcc-c++ fuse-devel zlib-devel lrzsz
    [root@node-3 ~]# useradd -s /sbin/nologin mfs
    [root@node-3 mfs-1.6.27]# ./configure --prefix=/usr/local/mfs --with-default-user=mfs --with-default-group=mfs --enable-mfsmount && echo $? && sleep 3 && make -j 4 && sleep 3 && make install && cd /usr/local/mfs
    [root@node-3 mfs]# pwd
    /usr/local/mfs
    [root@node-3 mfs]# ls
    bin  etc  sbin  share  var
    [root@node-3 mfs]# cd etc/mfs/
    [root@node-3 mfs]# cp mfschunkserver.cfg.dist mfschunkserver.cfg
    [root@node-3 mfs]# vim mfschunkserver.cfg
    ......
    MASTER_HOST = 192.168.30.130
    MASTER_PORT = 9420
    .......
    [root@node-3 mfs]# vim mfshdd.cfg
    # mount points of HDD drives
    #
    #/mnt/hd1
    #/mnt/hd2
    #etc.
    # add the following line
    /opt
    [root@node-3 mfs]# chown -R mfs:mfs /usr/local/mfs/
    [root@node-3 mfs]# chown -R mfs:mfs /opt/
    [root@node-3 mfs]# /usr/local/mfs/sbin/mfschunkserver start
    working directory: /usr/local/mfs/var/mfs
    lockfile created and locked
    initializing mfschunkserver modules ...
    hdd space manager: path to scan: /opt/
    hdd space manager: start background hdd scanning (searching for available chunks)
    main server module: listen on *:9422
    no charts data file - initializing empty charts
    mfschunkserver daemon initialized properly
    [root@node-3 mfs]# yum install -y tree

Restart mfsmaster (and the metalogger) on master

    [root@master ~]# /usr/local/mfs/sbin/mfsmaster stop
    sending SIGTERM to lock owner (pid:2258)
    waiting for termination ... terminated
    [root@master ~]# /usr/local/mfs/sbin/mfsmaster start
    working directory: /usr/local/mfs/var/mfs
    lockfile created and locked
    initializing mfsmaster modules ...
    loading sessions ... ok
    sessions file has been loaded
    exports file has been loaded
    mfstopology configuration file (/usr/local/mfs/etc/mfstopology.cfg) not found - using defaults
    loading metadata ...
    loading objects (files,directories,etc.) ... ok
    loading names ... ok
    loading deletion timestamps ... ok
    loading chunks data ... ok
    checking filesystem consistency ... ok
    connecting files and chunks ... ok
    all inodes: 2
    directory inodes: 1
    file inodes: 1
    chunks: 1
    metadata file has been loaded
    stats file has been loaded
    master <-> metaloggers module: listen on *:9419
    master <-> chunkservers module: listen on *:9420
    main master server module: listen on *:9421
    mfsmaster daemon initialized properly
    [root@master ~]# /usr/local/mfsmeta/sbin/mfsmetalogger stop
    sending SIGTERM to lock owner (pid:7614)
    waiting for termination ... terminated
    [root@master ~]# /usr/local/mfsmeta/sbin/mfsmetalogger start
    working directory: /usr/local/mfsmeta/var/mfs
    lockfile created and locked
    initializing mfsmetalogger modules ...
    mfsmetalogger daemon initialized properly

Write more data on node-2 as a test

Check the storage state on node-3

    [root@node-3 mfs]# tree /opt/
    /opt/
    ├── 00
    ├── 01
    ├── 02
    ├── 03
    │   └── chunk_0000000000000003_00000001.mfs
    ├── 04
    ├── 05
    │   └── chunk_0000000000000005_00000001.mfs
    ├── 06
    ├── 07
    │   └── chunk_0000000000000007_00000001.mfs
    ├── 08
    ├── 09
    ........

Check on node-1

    [root@node-1 ~]# tree /tmp/
    /tmp/
    ├── 00
    ├── 01
    │   └── chunk_0000000000000001_00000001.mfs
    ├── 02
    │   └── chunk_0000000000000002_00000001.mfs
    ├── 03
    ├── 04
    │   └── chunk_0000000000000004_00000001.mfs
    ├── 05
    ├── 06
    │   └── chunk_0000000000000006_00000001.mfs
    ├── 07
    ├── 08
    ├── 09
    ........

Compare the two listings: the new chunks are spread across the two chunk servers, and each chunk exists on only one of them.
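
In both listings every chunk file sits in the subdirectory named after the last two hex digits of its chunk id (chunk 3 in 03, chunk 4 in 04, and so on). Assuming that pattern holds in general, which is inferred from the listings and not stated in the original, the relative path of a chunk can be computed as sketched below. `chunk_relpath` is a hypothetical name.

```bash
# Hypothetical helper: build the relative path of a chunk file from its
# decimal chunk id and version, following the pattern seen in the listings:
#   <low byte of id, 2 hex digits>/chunk_<id, 16 hex digits>_<version, 8 hex digits>.mfs
chunk_relpath() {
    id=$1
    ver=$2
    printf '%02X/chunk_%016X_%08X.mfs\n' "$((id & 255))" "$id" "$ver"
}

# Example: chunk_relpath 3 1
```

The id and version correspond to the `id:` and `ver:` values that `mfsfileinfo` prints for a file, so the path can be used to find a given chunk on a chunk server.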

Set the number of copies

    [root@node-2 ~]# cd /usr/local/mfsclient/bin/
    [root@node-2 bin]# ./mfssetgoal -r 2 /mfs/
    /mfs/:
     inodes with goal changed:        8
     inodes with goal not changed:    0
     inodes with permission denied:   0
    [root@node-2 bin]# ./mfsfileinfo /mfs/initramfs-2.6.32-431.el6.x86_64.img    # not in effect yet: only one copy
    /mfs/initramfs-2.6.32-431.el6.x86_64.img:
        chunk 0: 0000000000000003_00000001 / (id:3 ver:1)
            copy 1: 192.168.30.133:9422

Cause:

The client had not been remounted. Unmount, mount again, and check once more:

    [root@node-2 ~]# umount /mfs/
    [root@node-2 ~]# mfsmount /mfs/ -H 192.168.30.130 -p
    MFS Password:    # enter 123456
    mfsmaster accepted connection with parameters: read-write,restricted_ip ; root mapped to root:root
    [root@node-2 ~]# df -h
    Filesystem            Size  Used Avail Use% Mounted on
    /dev/mapper/vg_master-LogVol00
                           18G  4.2G   13G  25% /
    tmpfs                 2.0G   72K  2.0G   1% /dev/shm
    /dev/sda1             194M   35M  150M  19% /boot
    /dev/sr0              3.6G  3.6G     0 100% /media/cdrom
    192.168.30.130:9421    25G   58M   25G   1% /mfs
    [root@node-2 ~]# cd /usr/local/mfsclient/bin/
    [root@node-2 bin]# ./mfssetgoal -r 2 /mfs/    # nothing changes here because the goal is already 2
    /mfs/:
     inodes with goal changed:        0
     inodes with goal not changed:    8
     inodes with permission denied:   0
    [root@node-2 bin]# ./mfsfileinfo /mfs/initramfs-2.6.32-431.el6.x86_64.img    # two copies of the data now exist
    /mfs/initramfs-2.6.32-431.el6.x86_64.img:
        chunk 0: 0000000000000003_00000001 / (id:3 ver:1)
            copy 1: 192.168.30.131:9422
            copy 2: 192.168.30.133:9422
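
Whether the goal has been reached can be read off the `mfsfileinfo` output by counting the `copy N:` lines under each chunk. The sketch below assumes the output layout shown above; `copies_per_chunk` is a hypothetical helper.

```bash
# Hypothetical helper: read `mfsfileinfo` output on stdin and print one line
# per chunk in the form "<chunk index> <number of copies>".
copies_per_chunk() {
    awk '$1 == "chunk" { idx = $2; sub(/:$/, "", idx); order[++n] = idx; copies[idx] = 0 }
         $1 == "copy"  { copies[idx]++ }
         END { for (i = 1; i <= n; i++) print order[i], copies[order[i]] }'
}

# Usage: ./mfsfileinfo /mfs/initramfs-2.6.32-431.el6.x86_64.img | copies_per_chunk
```

For the single-chunk file above, the first check would print `0 1` and the second `0 2`.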

Manually stop the chunk server on node-1

    [root@node-1 ~]# /usr/local/mfschunk/sbin/mfschunkserver stop
    sending SIGTERM to lock owner (pid:7829)
    waiting for termination ... terminated
    [root@node-2 ~]# cp /etc/group /mfs/    # the client can still write
    [root@node-2 ~]# ls /mfs/
    config-2.6.32-431.el6.x86_64           passwd
    group                                  symvers-2.6.32-431.el6.x86_64.gz
    initramfs-2.6.32-431.el6.x86_64.img    System.map-2.6.32-431.el6.x86_64
    initrd-2.6.32-431.el6.x86_64kdump.img  vmlinuz-2.6.32-431.el6.x86_64

Extra: set how long deleted files stay in the trash

    [root@node-2 bin]# cd
    [root@node-2 ~]# cd /usr/local/mfsclient/bin/
    [root@node-2 bin]# ls
    mfsappendchunks  mfsfileinfo    mfsgettrashtime  mfsrgettrashtime  mfssetgoal
    mfscheckfile     mfsfilerepair  mfsmakesnapshot  mfsrsetgoal       mfssettrashtime
    mfsdeleattr      mfsgeteattr    mfsmount         mfsrsettrashtime  mfssnapshot
    mfsdirinfo       mfsgetgoal     mfsrgetgoal      mfsseteattr       mfstools
    [root@node-2 bin]# ./mfsrsettrashtime 600 /mfs/
    deprecated tool - use "mfssettrashtime -r"    # this tool is deprecated
    /mfs/:
     inodes with trashtime changed:       9
     inodes with trashtime not changed:   0
     inodes with permission denied:       0
    [root@node-2 bin]# echo $?
    0    # the previous command succeeded

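The trash expiry time is given in seconds. A separately mounted MFSMETA file system contains a /trash directory, which holds deleted files that can still be restored, and /trash/undel/, which is used to recover them: moving a deleted file into /trash/undel restores it.

Besides trash and trash/undel, MFSMETA has a third directory, reserved. It holds files that have already been deleted but are still held open by other users. Once those users close the files, the entries in reserved are removed and the file data is deleted immediately. No operations can be performed in this directory.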
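
Because the trash time is expressed in seconds, longer retention periods are easy to mistype. The sketch below converts a short duration such as 10m or 1d into seconds. `to_seconds` is a hypothetical helper and does no input validation beyond the unit letter.

```bash
# Hypothetical helper: convert a duration such as 600, 10m, 2h or 1d to seconds.
to_seconds() {
    value=${1%[smhd]}              # numeric part
    unit=${1#"$value"}             # trailing unit letter, empty if none
    case $unit in
        ''|s) factor=1 ;;
        m)    factor=60 ;;
        h)    factor=3600 ;;
        d)    factor=86400 ;;
    esac
    echo "$((value * factor))"
}

# Usage sketch, with the non-deprecated form named in the tool's own warning:
# ./mfssettrashtime -r "$(to_seconds 10m)" /mfs/
```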

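MFS cluster startup and shutdown order

Startup order

1. Start the master server
2. Start the chunk servers
3. Start the metalogger
4. Start the clients and mount with mfsmount

MFS cluster shutdown order

1. Unmount the MFS file system on all clients
2. Stop the chunk servers
3. Stop the metalogger
4. Stop the master server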
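
The two orders can be written down as a small checklist script. This is a sketch that only prints the commands, using the hosts and install prefixes from this walkthrough; `cluster_steps` is a hypothetical name, and nothing is executed on any node.

```bash
# Print, in order, which command to run on which host for one phase.
# Paths are the install prefixes used in this walkthrough; the function
# only prints the steps, it does not run them.
cluster_steps() {
    case $1 in
        start)
            echo "master: /usr/local/mfs/sbin/mfsmaster start"
            echo "node-1: /usr/local/mfschunk/sbin/mfschunkserver start"
            echo "node-3: /usr/local/mfs/sbin/mfschunkserver start"
            echo "master: /usr/local/mfsmeta/sbin/mfsmetalogger start"
            echo "node-2: mfsmount /mfs -H 192.168.30.130 -p"
            ;;
        stop)
            echo "node-2: umount /mfs"
            echo "node-1: /usr/local/mfschunk/sbin/mfschunkserver stop"
            echo "node-3: /usr/local/mfs/sbin/mfschunkserver stop"
            echo "master: /usr/local/mfsmeta/sbin/mfsmetalogger stop"
            echo "master: /usr/local/mfs/sbin/mfsmaster stop"
            ;;
        *)
            echo "usage: cluster_steps start|stop" >&2
            return 1
            ;;
    esac
}

# Example: cluster_steps stop
```

Note that the shutdown list is not simply the startup list reversed: the chunk servers are stopped before the metalogger in both the original list and this sketch.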