Crawling CSDN with WebMagic

The pom.xml configuration is the same as before. The main code:

    import java.util.List;
    import java.util.concurrent.atomic.AtomicInteger;

    import us.codecraft.webmagic.Page;
    import us.codecraft.webmagic.Site;
    import us.codecraft.webmagic.Spider;
    import us.codecraft.webmagic.processor.PageProcessor;

    public class CsdnBlogProcessor implements PageProcessor {

        private static String username = "yixiao1874"; // CSDN username
        // Total number of articles crawled; atomic because the spider runs 5 threads
        private static AtomicInteger size = new AtomicInteger();

        // Site-level crawl settings, such as encoding, crawl interval and retry count
        private Site site = Site.me().setRetryTimes(3).setSleepTime(1000);

        @Override
        public void process(Page page) {
            if (!page.getUrl().regex("http://blog.csdn.net/" + username + "/article/details/\\d+").match()) {
                // List page: read the current page number
                String number = page.getHtml().xpath("//li[@class='page-item active']//a[@class='page-link']/text()").toString();
                if (number != null) {
                    // Find the link for (current page + 1), i.e. the next page, and queue it
                    String nextPageUrl = page.getHtml().links()
                            .regex("http://blog.csdn.net/" + username + "/article/list/" + (Integer.parseInt(number.trim()) + 1)).get();
                    if (nextPageUrl != null) {
                        page.addTargetRequest(nextPageUrl);
                    }
                }

                // Queue every article detail link found on this list page
                List<String> detailUrls = page.getHtml().xpath("//li[@class='blog-unit']//a/@href").all();
                for (String url : detailUrls) {
                    System.out.println(url);
                }
                page.addTargetRequests(detailUrls);
            } else {
                // Detail page: count the article and extract its fields
                size.incrementAndGet();
                CsdnBlog csdnBlog = new CsdnBlog();
                String path = page.getUrl().get();
                // The article id is the last path segment of the URL
                int id = Integer.parseInt(path.substring(path.lastIndexOf("/") + 1));
                String title = page.getHtml().xpath("//h1[@class='csdn_top']/text()").get();
                String date = page.getHtml().xpath("//div[@class='artical_tag']//span[@class='time']/text()").get();
                String copyright = page.getHtml().xpath("//div[@class='artical_tag']//span[@class='original']/text()").get();
                int view = Integer.parseInt(page.getHtml().xpath("//button[@class='btn-noborder']//span[@class='txt']/text()").get());
                csdnBlog.id(id).title(title).date(date).copyright(copyright).view(view);
                System.out.println(csdnBlog);
            }
        }

        @Override
        public Site getSite() {
            return site;
        }

        public static void main(String[] args) {
            // Start from the user's blog home page and run the spider with 5 threads
            Spider.create(new CsdnBlogProcessor())
                    .addUrl("http://blog.csdn.net/" + username)
                    .thread(5).run();
            System.out.println("Total number of articles: " + size.get());
        }
    }
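
The URL handling in `process` can be tried out without running a crawl. The following is a minimal sketch in plain Java, not part of WebMagic or of the code above: the class name `CsdnUrlRules`, its method names, and the article id `12345` are all hypothetical and chosen only for illustration. It uses `java.util.regex` with a full-string match and escaped dots, which may be stricter than the matching the crawler itself performs.

```java
import java.util.regex.Pattern;

// Hypothetical helper for illustration only; not part of WebMagic.
class CsdnUrlRules {
    private static final String USERNAME = "yixiao1874";

    // Article detail URLs end in /article/details/<digits>.
    private static final Pattern ARTICLE = Pattern.compile(
            "http://blog\\.csdn\\.net/" + USERNAME + "/article/details/\\d+");

    // True for article detail pages, false for the home page and list pages.
    static boolean isArticleUrl(String url) {
        return ARTICLE.matcher(url).matches();
    }

    // The article id is the last path segment of a detail URL.
    static int articleId(String url) {
        return Integer.parseInt(url.substring(url.lastIndexOf('/') + 1));
    }

    // URL of the list page that follows the given page number.
    static String nextListUrl(int currentPage) {
        return "http://blog.csdn.net/" + USERNAME + "/article/list/" + (currentPage + 1);
    }

    public static void main(String[] args) {
        // The id 12345 is a made-up example, not a real article.
        String detail = "http://blog.csdn.net/" + USERNAME + "/article/details/12345";
        String home = "http://blog.csdn.net/" + USERNAME;

        System.out.println(isArticleUrl(detail)); // true
        System.out.println(isArticleUrl(home));   // false
        System.out.println(articleId(detail));    // 12345
        System.out.println(nextListUrl(1));       // http://blog.csdn.net/yixiao1874/article/list/2
    }
}
```

Keeping these rules in small static methods makes it easy to check them when the site changes its URL scheme, before touching the XPath selectors.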

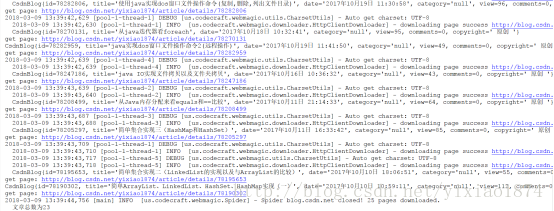

Console output after the crawl: