I. Preparation

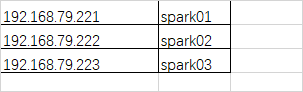

1. Prepare three virtual machines that can reach each other over the network, install the JDK on each, and set JAVA_HOME

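2. Configure /etc/hosts on every VM so the machines can resolve each other by hostname (spark01, spark02, spark03); the passwordless login setup relies on these names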
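As a rough illustration of the hosts step, the sketch below appends the three hostnames to a hosts file, skipping any name that is already present. The IP addresses are placeholders that do not come from the original setup, and the helper names `hosts_entries` and `add_hosts` are made up for this example.

```shell
# Hypothetical addresses: replace them with the real IPs of your VMs.
hosts_entries() {
  cat <<'EOF'
192.168.1.101 spark01
192.168.1.102 spark02
192.168.1.103 spark03
EOF
}

# Append each entry to the given hosts file unless the hostname is already listed.
add_hosts() {
  file="$1"
  hosts_entries | while read -r ip name; do
    grep -qw "$name" "$file" || echo "$ip $name" >> "$file"
  done
}

# Usage (as root, on each of the three machines):
#   add_hosts /etc/hosts
```

Because existing names are skipped, running the helper twice should not create duplicate entries.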

3. Set up passwordless SSH login between the three VMs

3.1 Run ssh-keygen -t rsa and press Enter at every prompt. This generates two files in the /root/.ssh/ directory: id_rsa (the private key) and id_rsa.pub (the public key)

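3.2 Append the id_rsa.pub of each of the three VMs to /root/.ssh/authorized_keys on every machine (cat id_rsa.pub >> /root/.ssh/authorized_keys). In the end, the authorized_keys file on all three servers has the following content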

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDOSBn4YN3B3s8LgP/FM5oVckeKBuLOClg1CEuc7Fk6iv6W38UxEm9L6ktetB4ykiUvZzuyyuhE3jV0PYsSe4lB/09k/IphR0wLN4vH4xM11CuHGHGNLNGe54l/YJM6QGIs/0pa1/dExPqDJVxK5ENzU1fJ2MV+pD/65P5j6WBNdRrNkvCq8EAqN8h/dBU06NsAB82YM8FgUDKHd1ufs803cnMEszlURjEian3QK+BVkBcacWGXru0keAtTHVEeouk+kEeR1EIhnjq1/T0ZD012OYZTak1I0gf0BVuSH2j3PG5/DFix+S8e27tnwKL/VJ+sV66U134vFbnNdvG2/3XH root@spark02

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCxW7QabrL4V19WMS7dCY4MFVSkz5nC2UbJK/cPdxMixZ7Mzwz2IBKY8PbYOojOnOqw+zR4JFw9oOyDGkvswv+WjM5IMilZo8P//fAQWeUyxjA3i0T0+kHHbJVV1s78f/tHtcXKzJBzm82c0TdFcISX7W8sGsLK0uC/iZfyx+ArwRPaDIc9xsvCMTr9h7sNY9f8UdDcJqJHLy6yGkBsIcIyJGcQaV8TAM1/uEpCcdA15RPf43kEkQGBLU8WrXj97c/p3DeYGaDhgS88LRm8R4JjrQMB8qyASQdK8y6FKx/og3cV+FxVlRLkURrdBcK64oxtmZs9zdt8Z7y+e46xDeY/ root@spark01

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDB2HcwQL2kf1VmA15zuDx0wOjXMrj7mwb1J3T7w0TAn6yC/SOP7H4wsZ8cibnvhq2cLfxb5wSofdFFL6kQbUZPH55s4CYlZRu9zN+PkY9harwHSauiFyEP2gNPXr3arUaBzgkuOJxPLGy/yLsefm2buDVPPbaPqyh144scjWmLvksKfHAMO+ia8MWov5RsM4ZBak3nBiuJuUvJHof5nzsXH01WgQVK1qSi4CMEuiXUY5crEGJr9Lq6u7NdNBz81m931Znu+vnTQ6PRztrdGwLWio2uX8HJKlOqGn/uPIOEK0pQ9lWc81Gs3I0D4TXICCVs69j4ImjLgNs7SQCgeVof root@spark03

With this in place, the three VMs can log in to each other without a password
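One possible way to script the key distribution and check the result is sketched below. It uses ssh-copy-id instead of the manual cat step described above, which is a substitution rather than the original method, and the function names `distribute_key` and `check_login` are invented for this example.

```shell
# Run on each of the three machines after ssh-keygen has created the key pair.
HOSTS="spark01 spark02 spark03"

# Copy this machine's public key to every host (asks for the root password once per host).
distribute_key() {
  for h in $HOSTS; do
    ssh-copy-id -i /root/.ssh/id_rsa.pub "root@$h"
  done
}

# Try a non-interactive login to every host and print its hostname.
# BatchMode=yes makes ssh fail instead of prompting for a password.
check_login() {
  for h in $HOSTS; do
    ssh -o BatchMode=yes "root@$h" hostname || echo "passwordless login to $h failed"
  done
}

# Usage:
#   distribute_key
#   check_login
```

If `check_login` prints the three hostnames without asking for a password, the setup is probably complete on that machine.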

II. Installing Spark

1. Upload and extract the installation package spark-2.4.4-bin-hadoop2.7.tgz

2. Rename the extracted directory

mv spark-2.4.4-bin-hadoop2.7 spark

3. Configure the Spark environment variables

vi /etc/profile

export SPARK_HOME=/root/spark

export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

source /etc/profile
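A quick sanity check after sourcing the profile might look like the following sketch. The function name `check_spark_env` is made up for this example; it only checks that SPARK_HOME is set and that spark-submit is executable under it.

```shell
# Report whether SPARK_HOME points at something that looks like a Spark installation.
check_spark_env() {
  if [ -z "${SPARK_HOME:-}" ]; then
    echo "SPARK_HOME is not set"
    return 1
  fi
  if [ ! -x "$SPARK_HOME/bin/spark-submit" ]; then
    echo "spark-submit not found under $SPARK_HOME"
    return 1
  fi
  echo "SPARK_HOME=$SPARK_HOME looks usable"
}

# Usage:
#   check_spark_env
```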

4. Edit the Spark configuration files

cd /root/spark/conf

cp slaves.template slaves

vim slaves

# Hostnames of the slave (worker) nodes

spark02

spark03

5. Configure the runtime environment

cp spark-env.sh.template spark-env.sh

5.1 Single-master configuration

# Configure the JDK

export JAVA_HOME=/root/jdk8

# SPARK_MASTER_HOST replaces SPARK_MASTER_IP, which is deprecated in Spark 2.x

export SPARK_MASTER_HOST=spark01

export SPARK_MASTER_PORT=7077

5.2 Multi-master configuration (HA)

# Configure the JDK

export JAVA_HOME=/root/jdk8

export SPARK_MASTER_PORT=7077

## With multiple masters, add the following ZooKeeper settings

export SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=mini1,mini2,mini3 -Dspark.deploy.zookeeper.dir=/spark"

6. Distribute the Spark directory to spark02 and spark03

scp -r spark root@spark02:/root/

scp -r spark root@spark03:/root/

7. Start the cluster (run from /root/spark on spark01)

sbin/start-all.sh
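To confirm that the daemons actually came up, one option is to look at the JVM processes with jps, which ships with the JDK. This is a minimal sketch, and the function name `spark_daemons` is invented for this example. With the slaves file above, spark01 would be expected to report Master, and spark02 and spark03 would be expected to report Worker.

```shell
# Print the Spark standalone daemons (Master / Worker) running on this host.
spark_daemons() {
  jps | awk '$2 == "Master" || $2 == "Worker" { print $2 }'
}

# Usage (on each node after start-all.sh):
#   spark_daemons
```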

Stop the cluster

sbin/stop-all.sh

-------------------------------

Testing the multi-master setup:

sbin/start-all.sh

Then start a second master on spark02

sbin/start-master.sh

The web UI of each master then shows that one is active (status ALIVE) and the other is on standby (status STANDBY)

http://spark01:8080/

http://spark02:8080/

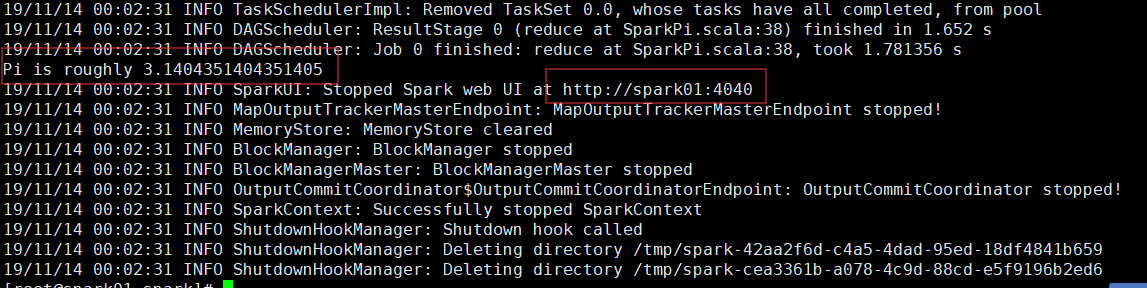
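The same check can probably be done from the command line. The sketch below assumes the master web UI also serves its state as JSON at /json/ with a "status" field; that endpoint and field name are assumptions to verify against your Spark version, and the function name `master_status` is made up for this example.

```shell
# Print the status (for example ALIVE or STANDBY) reported by the master UI on the given host.
# Assumption: http://<host>:8080/json/ returns JSON containing a "status" field.
master_status() {
  curl -s "http://$1:8080/json/" \
    | grep -o '"status" *: *"[A-Z_]*"' \
    | head -n 1 \
    | sed 's/.*"\([A-Z_]*\)"$/\1/'
}

# Usage:
#   master_status spark01
#   master_status spark02
```

Stopping the active master and re-running the check after a short wait is one way to watch the standby take over.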

8. Run a test job

./bin/run-example SparkPi 10

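If the job finishes without errors and prints a line starting with "Pi is roughly", the installation works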
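Unless a master is configured elsewhere, run-example is likely to run the job in local mode, so it may not exercise the cluster itself. A hedged sketch for submitting the same example to the standalone masters follows. The function name `submit_pi` is invented for this example, and the jar is matched with a glob because its exact file name depends on the Scala build of the package.

```shell
# Submit the SparkPi example to the given master URL.
submit_pi() {
  master="$1"
  "$SPARK_HOME"/bin/spark-submit \
    --class org.apache.spark.examples.SparkPi \
    --master "$master" \
    "$SPARK_HOME"/examples/jars/spark-examples_*.jar 10
}

# Usage, single master:
#   submit_pi spark://spark01:7077
# Usage, HA setup (list both masters so the job can fail over to the standby):
#   submit_pi spark://spark01:7077,spark02:7077
```

The submitted application should then show up under "Running Applications" or "Completed Applications" in the active master's web UI.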