Note: this article uses Elasticsearch 6.2.2.

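- 1) In cluster mode, the IK analyzer must be installed on every node, and the service must be restarted after the plugin is installed. If any machine is missing the analyzer when a mapping is created, the index may turn RED and will have to be deleted and recreated.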

| Hostname | IP |
| --- | --- |
| master | 192.168.0.120 |
| slave1 | 192.168.0.121 |
| slave2 | 192.168.0.122 |

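- 2) Elasticsearch's built-in analyzers handle Chinese poorly: they split Chinese text into single characters for full-text search, which does not give the desired results. Full-text search is everywhere and new words appear quickly on the internet, so IK is useful for its proper word segmentation and its support for custom dictionaries.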

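- 3) IK Analyzer is an open-source, lightweight Chinese word segmentation toolkit written in Java. Version 1.0 was released in December 2006, and it currently supports the latest ES 6.x releases.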

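- 4) IK provides two analyzers:

- ik_max_word: splits the text at the finest granularity, producing as many words as possible.

- ik_smart: splits the text at the coarsest granularity; text already claimed by one word is not reused by another.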

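- 5) This article downloads the IK source code and builds the plugin package in Eclipse. That way, if the IK analyzer ever needs to be modified, you can change the source, then package and install it yourself.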
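Once the plugin is installed (Step 3 below), the two analyzers from item 4 can be compared by sending the same text to Elasticsearch's `_analyze` API under each analyzer name. This is a minimal sketch, assuming the node at 192.168.0.120:9200 is reachable; the helper names `analyze_body` and `ik_analyze` are made up for illustration and are not part of IK.

```shell
# Hypothetical helpers for comparing the two IK analyzers.
ES_URL="${ES_URL:-http://192.168.0.120:9200}"   # master node from the host table

# Build the JSON body for the _analyze API.
# Assumes the text contains no double quotes or backslashes.
analyze_body() {
  printf '{"analyzer":"%s","text":"%s"}' "$1" "$2"
}

# Send the text to _analyze with the given analyzer and print the raw JSON response.
ik_analyze() {
  curl -s -XPOST "$ES_URL/_analyze" \
    -H 'Content-Type: application/json' \
    -d "$(analyze_body "$1" "$2")"
}

# Usage (needs a running cluster with IK installed):
#   ik_analyze ik_max_word "中国驻洛杉矶领事馆"
#   ik_analyze ik_smart    "中国驻洛杉矶领事馆"
```

With ik_max_word the response should list more, overlapping tokens than with ik_smart; the exact tokens depend on the dictionary in use.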

Step 1: Download the IK Chinese analyzer source code

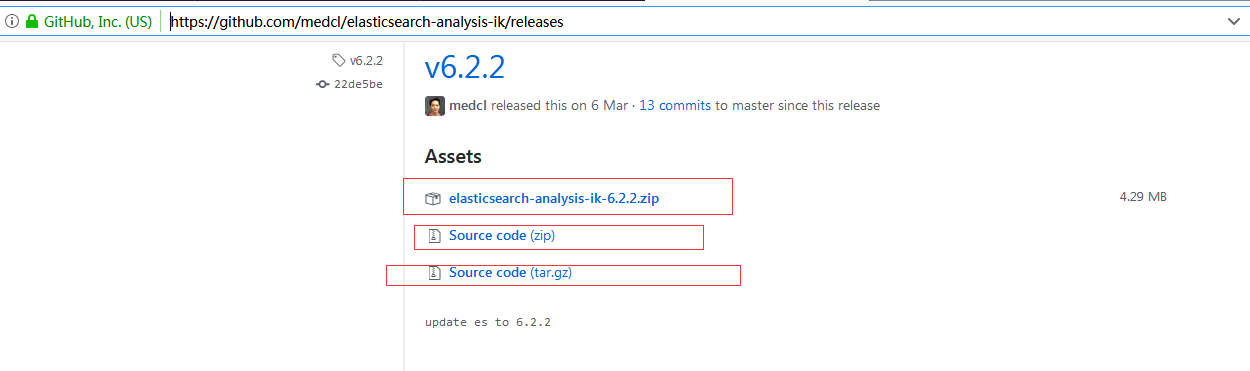

Search for "ik" on GitHub and find "medcl/elasticsearch-analysis-ik", then open https://github.com/medcl/elasticsearch-analysis-ik/releases and pick the version you need:

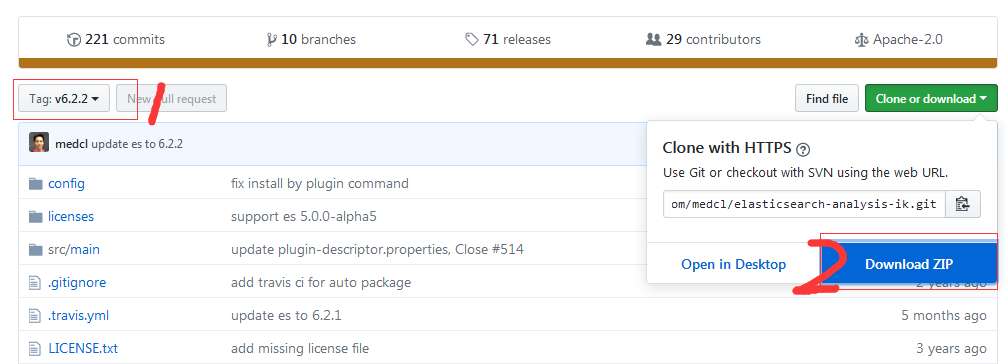

Or:

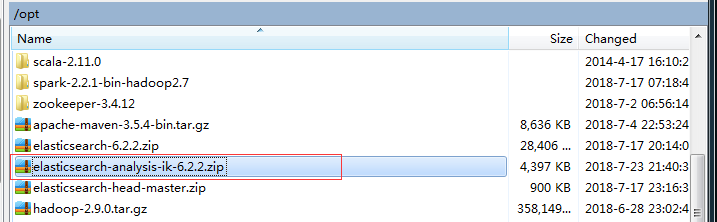

As shown above, select the version that matches your Elasticsearch version and download the zip package.

Step 2: Extract the downloaded zip package and open it in Eclipse

① Extract elasticsearch-analysis-ik-6.2.2.zip

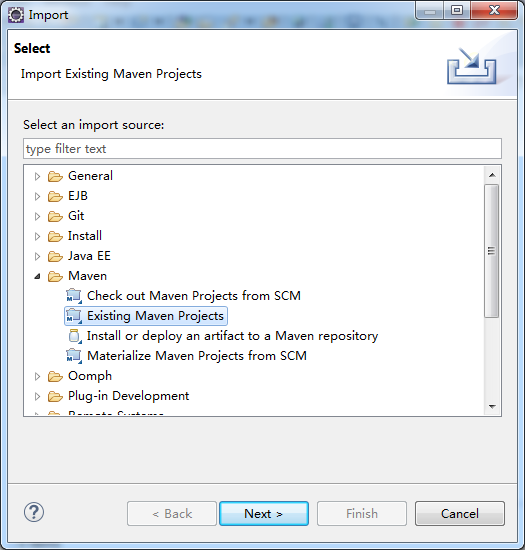

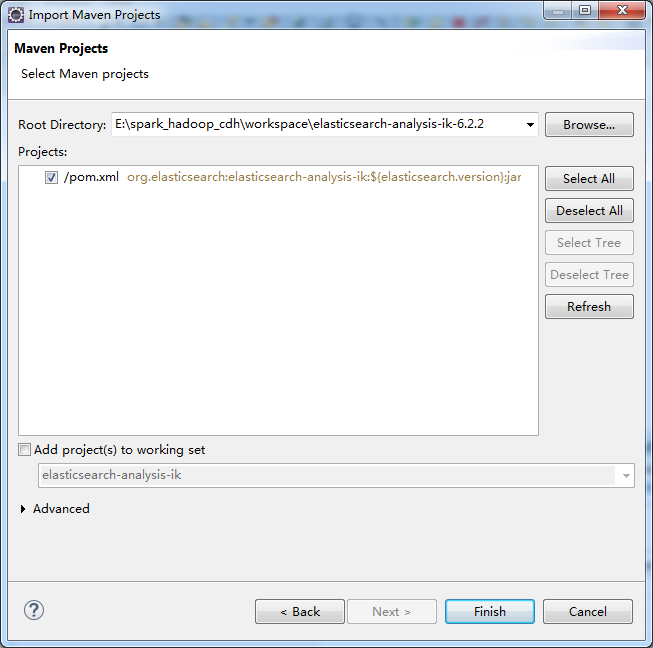

② Open Eclipse and import the Maven project

Click Next:

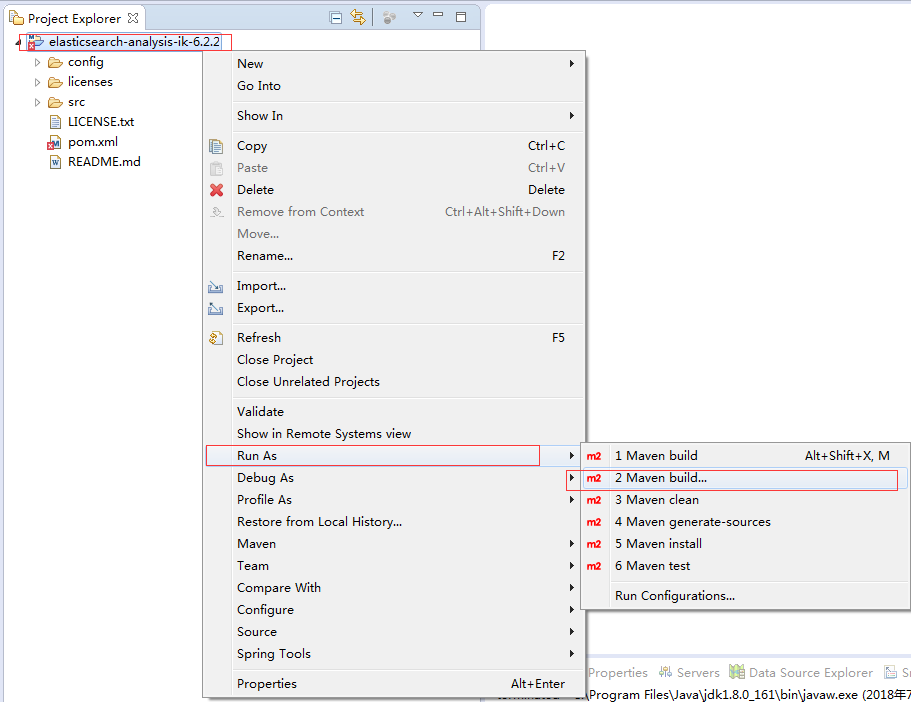

③ After the import, use "Maven build..." to build the package

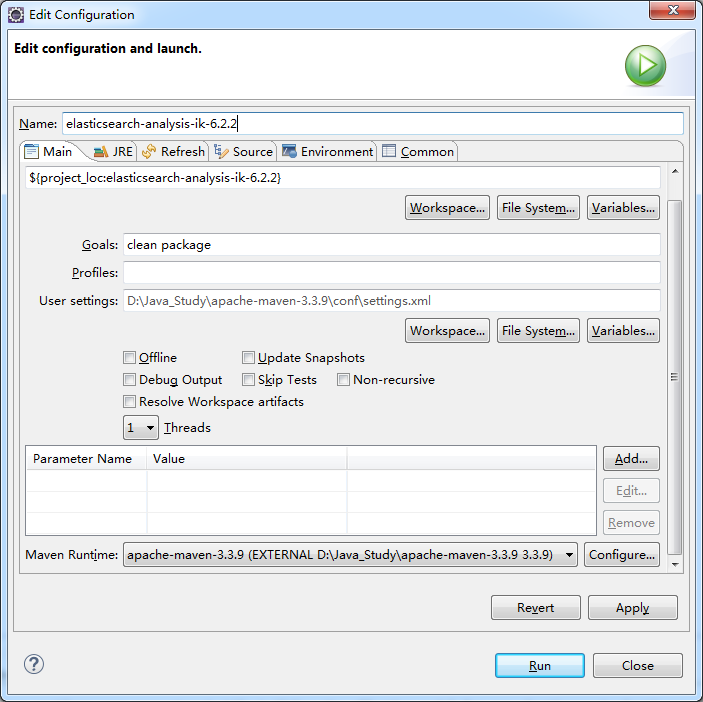

A dialog opens:

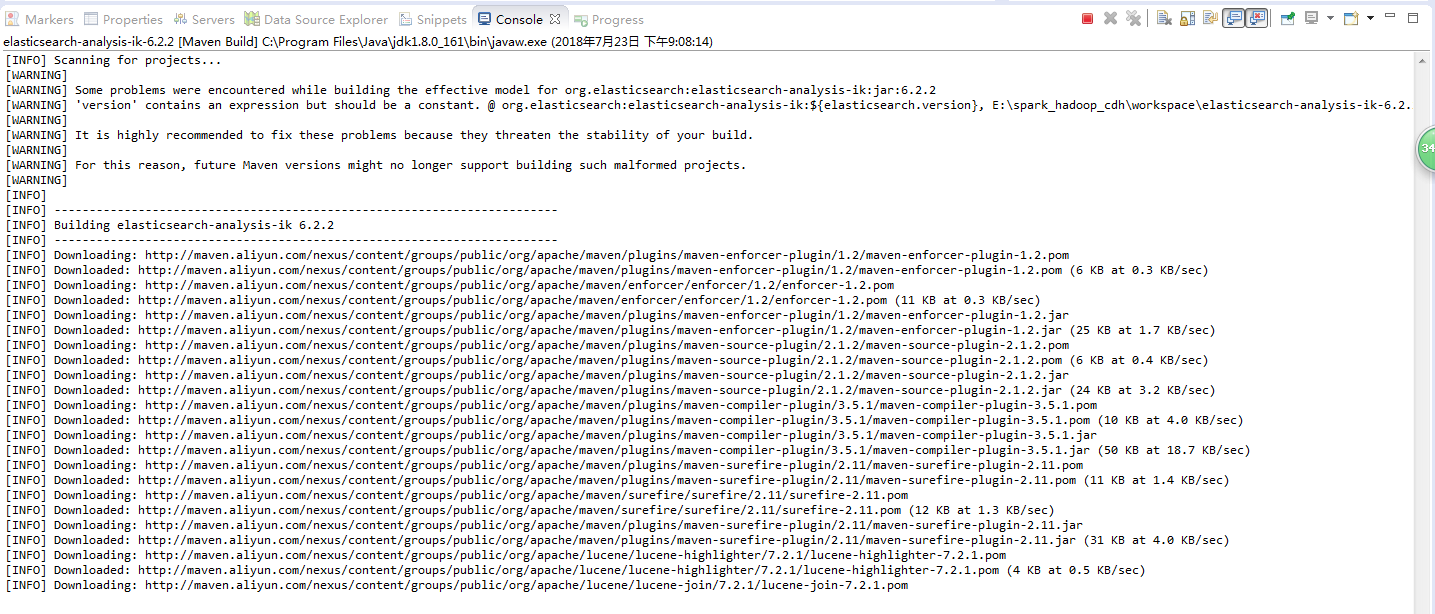

Click "Run" to start the build:

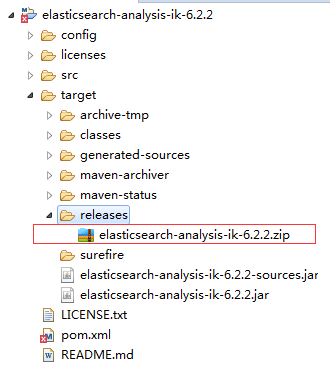

When the build finishes, right-click the target folder and choose Refresh.
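If Eclipse is not at hand, the same Maven build can probably be run from a terminal. The sketch below assumes Maven and a JDK are on the PATH and that the source was extracted to `$HOME/elasticsearch-analysis-ik-6.2.2` (a hypothetical location). The `target/releases/` output path is what I recall the project README describing for `mvn package`, so check it against your own checkout.

```shell
# Sketch: build the IK plugin from the command line instead of Eclipse.
# IK_VERSION and SRC_DIR are assumptions; adjust them to your extracted source tree.
IK_VERSION="${IK_VERSION:-6.2.2}"
SRC_DIR="${SRC_DIR:-$HOME/elasticsearch-analysis-ik-$IK_VERSION}"

# Path where the plugin zip is expected after the build (verify locally).
ik_package_path() {
  printf '%s/target/releases/elasticsearch-analysis-ik-%s.zip\n' "$SRC_DIR" "$IK_VERSION"
}

# Run the Maven build and report whether the expected zip exists.
build_ik() {
  (cd "$SRC_DIR" && mvn clean package) || return 1
  if [ -f "$(ik_package_path)" ]; then
    echo "built: $(ik_package_path)"
  else
    echo "build finished, but $(ik_package_path) was not found" >&2
    return 1
  fi
}

# Usage: build_ik
```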

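Step 3: Upload the package to each ES server and install it

Upload the built package (elasticsearch-analysis-ik-6.2.2.zip in the commands below) to /opt/ on the master server, then run the following commands to install it: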

```
[spark@master ~]$ cd /opt/
[spark@master opt]$ unzip elasticsearch-analysis-ik-6.2.2.zip -d /opt/elasticsearch-6.2.2/plugins/
Archive:  elasticsearch-analysis-ik-6.2.2.zip
   creating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/plugin-descriptor.properties
   creating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/config/
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/config/extra_main.dic
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/config/extra_single_word.dic
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/config/extra_single_word_full.dic
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/config/extra_single_word_low_freq.dic
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/config/extra_stopword.dic
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/config/IKAnalyzer.cfg.xml
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/config/main.dic
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/config/preposition.dic
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/config/quantifier.dic
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/config/stopword.dic
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/config/suffix.dic
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/config/surname.dic
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/elasticsearch-analysis-ik-6.2.2.jar
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/httpclient-4.5.2.jar
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/httpcore-4.4.4.jar
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/commons-logging-1.2.jar
  inflating: /opt/elasticsearch-6.2.2/plugins/elasticsearch/commons-codec-1.9.jar
[spark@master opt]$ cd /opt/elasticsearch-6.2.2/plugins/
[spark@master plugins]$ mv elasticsearch/ ik/
```

Install it the same way on slave1 and slave2; those steps are omitted here.
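For the omitted nodes, one way to avoid repeating the steps by hand is to generate the commands per node and review them before running. This is only a sketch: it assumes passwordless SSH as user `spark`, the same paths on every node, and that the zip unpacks into an `elasticsearch/` directory as it did on master. The function names are hypothetical.

```shell
# Sketch: repeat the master's install steps on the other nodes over SSH.
# Assumes passwordless SSH as user "spark" and identical paths on every node.
NODES="${NODES:-slave1 slave2}"
ES_HOME="${ES_HOME:-/opt/elasticsearch-6.2.2}"
PKG="${PKG:-/opt/elasticsearch-analysis-ik-6.2.2.zip}"

# Print the copy and install commands for one node (nothing is executed here).
install_cmds() {
  node="$1"
  echo "scp $PKG spark@$node:$PKG"
  echo "ssh spark@$node 'unzip -q $PKG -d $ES_HOME/plugins/ && mv $ES_HOME/plugins/elasticsearch $ES_HOME/plugins/ik'"
}

# Print the commands for every node listed in $NODES.
install_all() {
  for node in $NODES; do
    install_cmds "$node"
  done
}

# Usage: review the output of `install_all`, then run it with `install_all | sh`.
```

Printing the commands first keeps the sketch safe to try: nothing touches the remote machines until the output is piped to `sh`.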

Once installation is complete on all three servers (master, slave1 and slave2), restart Elasticsearch to load the IK analyzer.
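After the restart it is worth confirming that every node really loaded the plugin, since a node without it can leave an index RED, as noted at the top. The sketch below uses the `_cat/plugins` API and assumes the plugin is reported under a name containing `analysis-ik` (my understanding of the plugin descriptor; check the actual output on your cluster). `count_ik_nodes` and `check_ik_plugins` are hypothetical helper names.

```shell
# Sketch: confirm that every node reports the IK plugin after the restart.
ES_URL="${ES_URL:-http://192.168.0.120:9200}"

# Count lines of `_cat/plugins` output (read from stdin) that mention analysis-ik.
count_ik_nodes() {
  grep -c 'analysis-ik'
}

# Query the cluster and compare against the expected node count (3 in this setup).
check_ik_plugins() {
  expected="${1:-3}"
  found="$(curl -s "$ES_URL/_cat/plugins" | count_ik_nodes)"
  if [ "$found" -eq "$expected" ]; then
    echo "IK loaded on $found/$expected nodes"
  else
    echo "IK loaded on only $found/$expected nodes" >&2
    return 1
  fi
}

# Usage: check_ik_plugins 3
```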

Step 4: Test

1) Delete and recreate the index:

```
curl -XDELETE "http://192.168.0.120:9200/index"
curl -XPUT "http://192.168.0.120:9200/index"
```

2) Create a mapping on the index named index (Chinese word segmentation is applied to the 'content' field):

```
curl -XPOST "http://192.168.0.120:9200/index/fulltext/_mapping" -H 'Content-Type: application/json' -d'
{
    "properties": {
        "content": {
            "type": "text",
            "analyzer": "ik_max_word",
            "search_analyzer": "ik_max_word"
        }
    }
}'
```

3) First add four documents:

```
curl -XPOST "http://192.168.0.120:9200/index/fulltext/1" -H 'Content-Type: application/json' -d'
{"content":"美国留给伊拉克的是个烂摊子吗"}'
curl -XPOST "http://192.168.0.120:9200/index/fulltext/2" -H 'Content-Type: application/json' -d'
{"content":"公安部:各地校车将享最高路权"}'
curl -XPOST "http://192.168.0.120:9200/index/fulltext/3" -H 'Content-Type: application/json' -d'
{"content":"中韩渔警冲突调查:韩警平均每天扣1艘中国渔船"}'
curl -XPOST "http://192.168.0.120:9200/index/fulltext/4" -H 'Content-Type: application/json' -d'
{"content":"中国驻洛杉矶领事馆遭亚裔男子枪击 嫌犯已自首"}'
```

4) Run a search for "中国" with highlighting:

```
curl -XPOST "http://192.168.0.120:9200/index/fulltext/_search" -H 'Content-Type: application/json' -d'
{
    "query" : { "match" : { "content" : "中国" }},
    "highlight" : {
        "pre_tags" : ["<tag1>", "<tag2>"],
        "post_tags" : ["</tag1>", "</tag2>"],
        "fields" : {
            "content" : {}
        }
    }
}'
```

Response:

```
{
    "took": 133,
    "timed_out": false,
    "_shards": {
        "total": 5,
        "successful": 5,
        "skipped": 0,
        "failed": 0
    },
    "hits": {
        "total": 2,
        "max_score": 0.6489038,
        "hits": [
            {
                "_index": "index",
                "_type": "fulltext",
                "_id": "4",
                "_score": 0.6489038,
                "_source": {
                    "content": "中国驻洛杉矶领事馆遭亚裔男子枪击 嫌犯已自首"
                },
                "highlight": {
                    "content": [
                        "<tag1>中国</tag1>驻洛杉矶领事馆遭亚裔男子枪击 嫌犯已自首"
                    ]
                }
            },
            {
                "_index": "index",
                "_type": "fulltext",
                "_id": "3",
                "_score": 0.2876821,
                "_source": {
                    "content": "中韩渔警冲突调查:韩警平均每天扣1艘中国渔船"
                },
                "highlight": {
                    "content": [
                        "中韩渔警冲突调查:韩警平均每天扣1艘<tag1>中国</tag1>渔船"
                    ]
                }
            }
        ]
    }
}
```

5) Add three more documents:

```
curl -XPOST "http://192.168.0.120:9200/index/fulltext/5" -H 'Content-Type: application/json' -d'
{"content":"俄侦委:俄一辆卡车渡河时翻车 致2名中国游客遇难"}'
curl -XPOST "http://192.168.0.120:9200/index/fulltext/6" -H 'Content-Type: application/json' -d'
{"content":"韩国银行面向中国留学生推出微信支付服务"}'
curl -XPOST "http://192.168.0.120:9200/index/fulltext/7" -H 'Content-Type: application/json' -d'
{"content":"印媒:中国东北“锈带”在困境中反击"}'
```

6) Run the same search again:

```
curl -XPOST "http://192.168.0.120:9200/index/fulltext/_search" -H 'Content-Type: application/json' -d'
{
    "query" : { "match" : { "content" : "中国" }},
    "highlight" : {
        "pre_tags" : ["<tag1>", "<tag2>"],
        "post_tags" : ["</tag1>", "</tag2>"],
        "fields" : {
            "content" : {}
        }
    }
}'
```

Response:

```
{
    "took": 41,
    "timed_out": false,
    "_shards": {
        "total": 5,
        "successful": 5,
        "skipped": 0,
        "failed": 0
    },
    "hits": {
        "total": 5,
        "max_score": 0.6785375,
        "hits": [
            {
                "_index": "index",
                "_type": "fulltext",
                "_id": "7",
                "_score": 0.6785375,
                "_source": {
                    "content": "印媒:中国东北“锈带”在困境中反击"
                },
                "highlight": {
                    "content": [
                        "印媒:<tag1>中国</tag1>东北“锈带”在困境中反击"
                    ]
                }
            },
            {
                "_index": "index",
                "_type": "fulltext",
                "_id": "6",
                "_score": 0.47000363,
                "_source": {
                    "content": "韩国银行面向中国留学生推出微信支付服务"
                },
                "highlight": {
                    "content": [
                        "韩国银行面向<tag1>中国</tag1>留学生推出微信支付服务"
                    ]
                }
            },
            {
                "_index": "index",
                "_type": "fulltext",
                "_id": "4",
                "_score": 0.44000342,
                "_source": {
                    "content": "中国驻洛杉矶领事馆遭亚裔男子枪击 嫌犯已自首"
                },
                "highlight": {
                    "content": [
                        "<tag1>中国</tag1>驻洛杉矶领事馆遭亚裔男子枪击 嫌犯已自首"
                    ]
                }
            },
            {
                "_index": "index",
                "_type": "fulltext",
                "_id": "5",
                "_score": 0.2876821,
                "_source": {
                    "content": "俄侦委:俄一辆卡车渡河时翻车 致2名中国游客遇难"
                },
                "highlight": {
                    "content": [
                        "俄侦委:俄一辆卡车渡河时翻车 致2名<tag1>中国</tag1>游客遇难"
                    ]
                }
            },
            {
                "_index": "index",
                "_type": "fulltext",
                "_id": "3",
                "_score": 0.2876821,
                "_source": {
                    "content": "中韩渔警冲突调查:韩警平均每天扣1艘中国渔船"
                },
                "highlight": {
                    "content": [
                        "中韩渔警冲突调查:韩警平均每天扣1艘<tag1>中国</tag1>渔船"
                    ]
                }
            }
        ]
    }
}
```

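IK supports custom dictionaries. The configuration file is IKAnalyzer.cfg.xml (analysis-ik/IKAnalyzer.cfg.xml under the config folder; in this installation it is in /opt/elasticsearch-6.2.2/plugins/ik/config), and the dictionary files sit in the same directory. Several options can be configured, including ext_dict for custom dictionaries and ext_stopwords for stop-word dictionaries.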

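Hot updates are also supported: set remote_ext_dict to an HTTP URL that returns one word per line. The content must be UTF-8 without a BOM. When the dictionary changes, it is enough for either one of the response headers Last-Modified or ETag to change.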
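Before pointing remote_ext_dict at a server, it may help to check that the server actually returns at least one of those two headers. A minimal sketch follows; `DICT_URL` is a hypothetical address and the helper names are invented for illustration.

```shell
# Sketch: check which change-detection headers a remote dictionary URL returns.
# DICT_URL is a hypothetical address; replace it with your own dictionary endpoint.
DICT_URL="${DICT_URL:-http://192.168.0.120:8080/ik/custom.dic}"

# Extract a header value (case-insensitive name) from raw HTTP headers on stdin.
header_value() {
  tr -d '\r' | awk -F': ' -v name="$1" 'tolower($1) == tolower(name) { print $2; exit }'
}

# Fetch only the headers and print the two that the hot-update mechanism looks at.
show_dict_headers() {
  headers="$(curl -sI "$DICT_URL")"
  echo "Last-Modified: $(printf '%s\n' "$headers" | header_value Last-Modified)"
  echo "ETag: $(printf '%s\n' "$headers" | header_value ETag)"
}

# Usage: show_dict_headers
```

If both values come back empty, the server likely needs to be configured to send one of them before hot updates can be detected.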

```
[spark@master config]$ pwd
/opt/elasticsearch-6.2.2/plugins/ik/config
[spark@master config]$ more IKAnalyzer.cfg.xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">
<properties>
        <comment>IK Analyzer 扩展配置</comment>
        <!--用户可以在这里配置自己的扩展字典 -->
        <entry key="ext_dict"></entry>
        <!--用户可以在这里配置自己的扩展停止词字典-->
        <entry key="ext_stopwords"></entry>
        <!--用户可以在这里配置远程扩展字典 -->
        <!-- <entry key="remote_ext_dict">words_location</entry> -->
        <!--用户可以在这里配置远程扩展停止词字典-->
        <!-- <entry key="remote_ext_stopwords">words_location</entry> -->
</properties>
[spark@master config]$
```
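To try a local custom dictionary, a word list can be written next to this file and then referenced from the ext_dict entry. The sketch below also checks for a UTF-8 BOM, the same encoding pitfall mentioned for remote dictionaries (applying that check to local files is my own precaution). The file name `custom.dic`, the sample words and the helper names are hypothetical.

```shell
# Sketch: create a custom dictionary file and confirm it has no UTF-8 BOM.
# The file name custom.dic and the sample words are hypothetical.
IK_CONFIG="${IK_CONFIG:-/opt/elasticsearch-6.2.2/plugins/ik/config}"

# Return 0 if the file starts with the UTF-8 BOM bytes EF BB BF, else non-zero.
has_bom() {
  [ "$(head -c 3 "$1" | od -An -tx1 | tr -d ' \n')" = "efbbbf" ]
}

# Write one word per line, then fail if the resulting file starts with a BOM.
write_dict() {
  dict="$1"; shift
  printf '%s\n' "$@" > "$dict"
  if has_bom "$dict"; then
    echo "$dict starts with a BOM; re-save it as plain UTF-8" >&2
    return 1
  fi
}

# Usage: write_dict "$IK_CONFIG/custom.dic" "锈带" "微信支付"
```

After that, the entry would read `<entry key="ext_dict">custom.dic</entry>`. As far as I know the path is resolved relative to the config directory, and a restart is needed for a local dictionary to be picked up.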
