HeapSter介绍

用来统一收集和展示k8s集群中各个节点中cAdvisor上报的数据

HeapSter默认使用的是内存存储 无法持久化保存历史数据

需要单独安装InfluxDB来保存cAdvisor上报的数

kubelet中的cAdvisor插件负责收集各自主机和主机上运行Pod的资源数据

1.InfluxDB部署

apiVersion: apps/v1beta1 kind: Deployment metadata: name: monitoring-influxdb namespace: kube-system spec: replicas: 1 template: metadata: labels: task: monitoring k8s-app: influxdb spec: containers: - name: influxdb image: k8s.gcr.io/heapster-influxdb-amd64:v1.5.2 volumeMounts: - mountPath: /data name: influxdb-storage volumes: - name: influxdb-storage emptyDir: {} --- apiVersion: v1 kind: Service metadata: labels: task: monitoring # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons) # If you are NOT using this as an addon, you should comment out this line. kubernetes.io/cluster-service: 'true' kubernetes.io/name: monitoring-influxdb name: monitoring-influxdb namespace: kube-system spec: ports: - port: 8086 targetPort: 8086 selector: k8s-app: influxdb

2.部署heapster-rbac

kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: heapster roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: system:heapster subjects: - kind: ServiceAccount name: heapster namespace: kube-system

3.部署heapster

apiVersion: v1 kind: ServiceAccount metadata: name: heapster namespace: kube-system --- apiVersion: apps/v1beta1 kind: Deployment metadata: name: heapster namespace: kube-system spec: replicas: 1 template: metadata: labels: task: monitoring k8s-app: heapster spec: serviceAccountName: heapster containers: - name: heapster image: k8s.gcr.io/heapster-amd64:v1.5.4 imagePullPolicy: IfNotPresent command: - /heapster - --source=kubernetes:https://kubernetes.default imagePullPolicy: IfNotPresent command: - /heapster - --source=kubernetes:https://kubernetes.default - --sink=influxdb:http://monitoring-influxdb.kube-system.svc:8086 --- apiVersion: v1 kind: Service metadata: labels: task: monitoring # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons) # If you are NOT using this as an addon, you should comment out this line. kubernetes.io/cluster-service: 'true' kubernetes.io/name: Heapster name: heapster namespace: kube-system spec: ports: - port: 80 targetPort: 8082 selector: k8s-app: heapster

4.部署grafana

apiVersion: apps/v1beta1 kind: Deployment metadata: name: monitoring-grafana namespace: kube-system spec: replicas: 1 template: metadata: labels: task: monitoring k8s-app: grafana spec: containers: - name: grafana image: k8s.gcr.io/heapster-grafana-amd64:v5.0.4 ports: - containerPort: 3000 protocol: TCP volumeMounts: - mountPath: /etc/ssl/certs name: ca-certificates readOnly: true - mountPath: /var name: grafana-storage env: - name: INFLUXDB_HOST value: monitoring-influxdb - name: GF_SERVER_HTTP_PORT value: "3000" # The following env variables are required to make Grafana accessible via # the kubernetes api-server proxy. On production clusters, we recommend # removing these env variables, setup auth for grafana, and expose the grafana # service using a LoadBalancer or a public IP. - name: GF_AUTH_BASIC_ENABLED value: "false" - name: GF_AUTH_ANONYMOUS_ENABLED value: "true" - name: GF_AUTH_ANONYMOUS_ORG_ROLE value: Admin - name: GF_SERVER_ROOT_URL # If you're only using the API Server proxy, set this value instead: # value: /api/v1/namespaces/kube-system/services/monitoring-grafana/proxy value: / volumes: - name: ca-certificates hostPath: path: /etc/ssl/certs - name: grafana-storage emptyDir: {} --- apiVersion: v1 kind: Service metadata: labels: # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons) # If you are NOT using this as an addon, you should comment out this line. kubernetes.io/cluster-service: 'true' kubernetes.io/name: monitoring-grafana name: monitoring-grafana namespace: kube-system spec: # In a production setup, we recommend accessing Grafana through an external Loadbalancer # or through a public IP. # type: LoadBalancer # You could also use NodePort to expose the service at a randomly-generated port type: NodePort ports: - port: 80 targetPort: 3000 selector: k8s-app: grafana

说明:

HeapSter从k8s的v1.11版本开始已经不能使用 k8s已经全面转向以Prometheus为核心的新监控体系架构

Metrics-Server的部署

metrics-server是k8s中一个单独的插件 需要自己手动进行部署 它相当于一个自定义的API Server,k8s使用kube-aggregator聚合kube-apiserver和metric-server一起对外提供服务

kube-aggregator做为前端代理服务器

1.kube-apiserver 2.metrics-server 3.xxx-server

部署任何一个k8s插件的时候都必须要注意插件的发布版本必须和当前k8s集群的版本保持一致 否则可能会因为版本不兼容出现一些异常

下载最新k8s版本的插件

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons

https://github.com/kubernetes/kubernetes/blob/cluster/addons/metrics-server/metrics-server-deployment.yaml(和本地不兼容)

下载指定k8s版本的文件

https://github.com/kubernetes/kubernetes/blob/release-1.11/cluster/addons/metrics-server/metrics-server-deployment.yaml

所有需要的yaml文件

apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: metrics-server:system:auth-delegator labels: kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: system:auth-delegator subjects: - kind: ServiceAccount name: metrics-server namespace: kube-system

apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: name: system:metrics-server labels: kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile rules: - apiGroups: - "" resources: - pods - nodes - nodes/stats - namespaces verbs: - get - list - watch - apiGroups: - "extensions" resources: - deployments verbs: - get - list - update - watch --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: system:metrics-server labels: kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: system:metrics-server subjects: - kind: ServiceAccount name: metrics-server namespace: kube-system

apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding metadata: name: metrics-server-auth-reader namespace: kube-system labels: kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: extension-apiserver-authentication-reader subjects: - kind: ServiceAccount name: metrics-server namespace: kube-system

apiVersion: apiregistration.k8s.io/v1beta1 kind: APIService metadata: name: v1beta1.metrics.k8s.io labels: kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile spec: service: name: metrics-server namespace: kube-system group: metrics.k8s.io version: v1beta1 insecureSkipTLSVerify: true groupPriorityMinimum: 100 versionPriority: 100

apiVersion: v1 kind: Service metadata: name: metrics-server namespace: kube-system labels: addonmanager.kubernetes.io/mode: Reconcile kubernetes.io/cluster-service: "true" kubernetes.io/name: "Metrics-server" spec: selector: k8s-app: metrics-server ports: - port: 443 protocol: TCP targetPort: https

apiVersion: v1 kind: ServiceAccount metadata: name: metrics-server namespace: kube-system labels: kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile --- apiVersion: v1 kind: ConfigMap metadata: name: metrics-server-config namespace: kube-system labels: kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: EnsureExists data: NannyConfiguration: |- apiVersion: nannyconfig/v1alpha1 kind: NannyConfiguration --- apiVersion: extensions/v1beta1 kind: Deployment metadata: name: metrics-server-v0.2.1 namespace: kube-system labels: k8s-app: metrics-server kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile version: v0.2.1 spec: selector: matchLabels: k8s-app: metrics-server version: v0.2.1 name: metrics-server labels: k8s-app: metrics-server version: v0.2.1 annotations: scheduler.alpha.kubernetes.io/critical-pod: '' seccomp.security.alpha.kubernetes.io/pod: 'docker/default' spec: priorityClassName: system-cluster-critical serviceAccountName: metrics-server containers: - name: metrics-server image: k8s.gcr.io/metrics-server-amd64:v0.2.1 command: - /metrics-server ports: - containerPort: 443 name: https protocol: TCP - name: metrics-server-nanny image: k8s.gcr.io/addon-resizer:1.8.1 resources: limits: cpu: 100m memory: 300Mi requests: cpu: 5m memory: 50Mi env: - name: MY_POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: MY_POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: metrics-server-config-volume mountPath: /etc/config command: - /pod_nanny apiVersion: v1 kind: ServiceAccount metadata: name: metrics-server namespace: kube-system labels: kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile --- apiVersion: v1 kind: ConfigMap metadata: name: metrics-server-config namespace: kube-system labels: kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: EnsureExists data: NannyConfiguration: |- apiVersion: nannyconfig/v1alpha1 kind: NannyConfiguration --- apiVersion: extensions/v1beta1 kind: Deployment metadata: name: metrics-server-v0.2.1 namespace: kube-system labels: k8s-app: metrics-server kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile version: v0.2.1 spec: selector: matchLabels: k8s-app: metrics-server version: v0.2.1 name: metrics-server labels: k8s-app: metrics-server version: v0.2.1 annotations: scheduler.alpha.kubernetes.io/critical-pod: '' seccomp.security.alpha.kubernetes.io/pod: 'docker/default' spec: priorityClassName: system-cluster-critical serviceAccountName: metrics-server containers: - name: metrics-server image: k8s.gcr.io/metrics-server-amd64:v0.2.1 command: - /metrics-server - --source=kubernetes.summary_api:https://kubernetes.default?kubeletHttps=true&kubeletPort=10250&insecure=true ports: - containerPort: 443 name: https protocol: TCP - name: metrics-server-nanny image: k8s.gcr.io/addon-resizer:1.8.1 resources: limits: cpu: 100m memory: 300Mi requests: cpu: 5m memory: 50Mi env: - name: MY_POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: MY_POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: metrics-server-config-volume mountPath: /etc/config command: - /pod_nanny volumeMounts: - name: metrics-server-config-volume mountPath: /etc/config command: - /pod_nanny - --config-dir=/etc/config - --cpu=40m - --extra-cpu=0.5m - --memory=40Mi - --extra-memory=4Mi - --threshold=5 - --deployment=metrics-server-v0.2.1 - --container=metrics-server - --poll-period=300000 - --estimator=exponential volumes: - name: metrics-server-config-volume configMap: name: metrics-server-config tolerations: - key: "CriticalAddonsOnly" operator: "Exists"

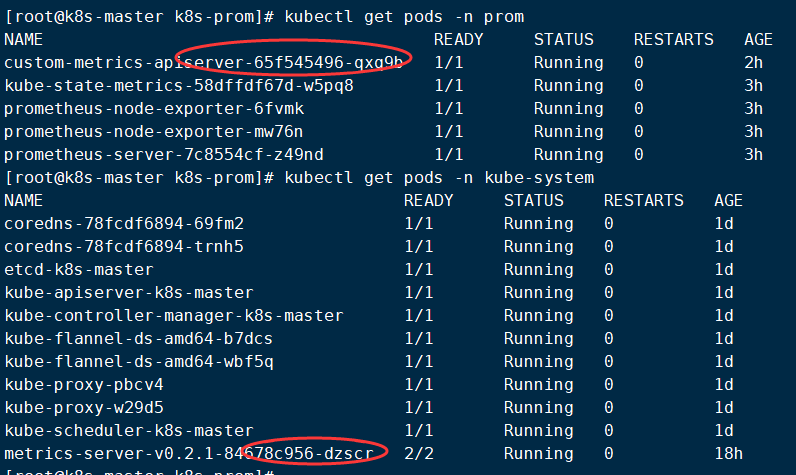

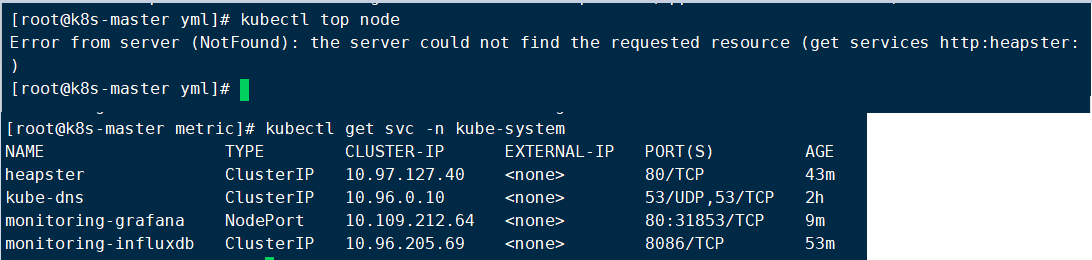

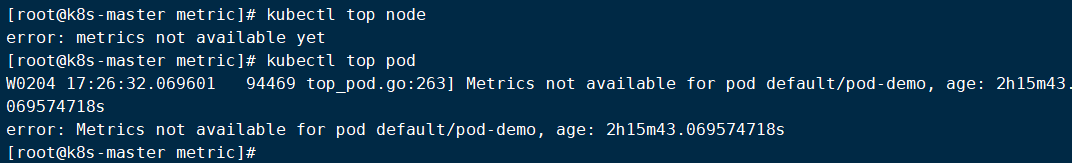

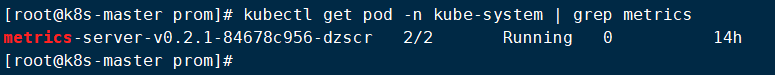

执行完以上文件后 稍微等待一段时间 执行以下命令查看metrics-server是否部署正常

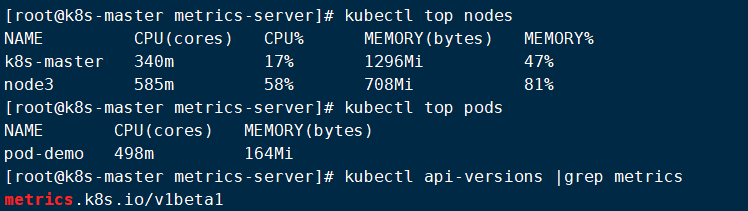

以下结果显示用户已经可以通过Metrics-server获取k8s中的核心监控指标(cpu和内存) 但是还没法获取用户的自定义监控指标

如果要收集自定义监控指标 必须要部署一个custom-metrics-apiserver的pod

metrics-server只能收集k8s的核心监控指标如:pod和node的CPU和内存的使用率

custom-metrics-apiserver能收集用户自定义的监控指标数据