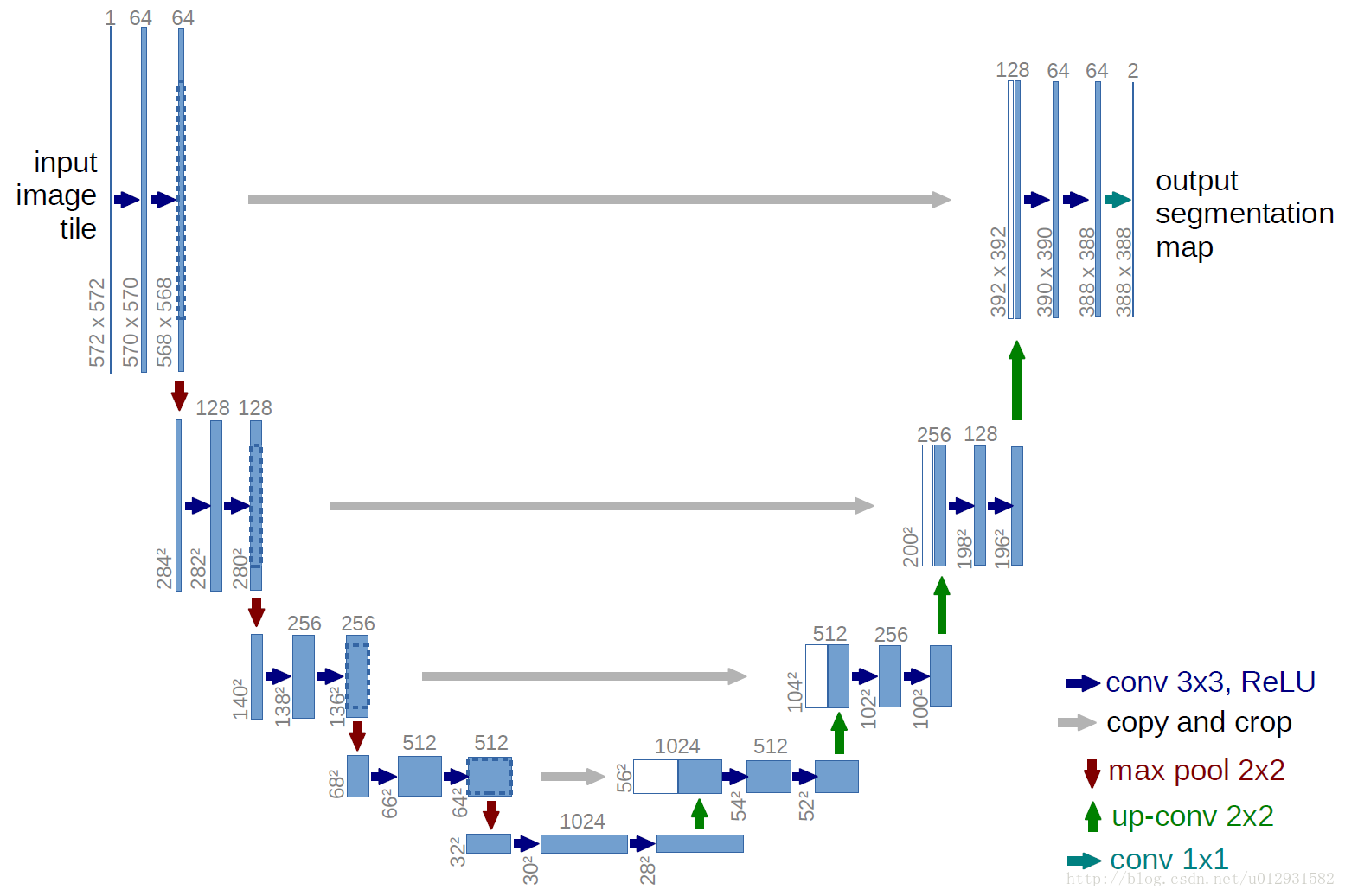

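U-Net builds on the main idea of FCN: it is a fully convolutional network that classifies an image pixel by pixel, and it performs well on image segmentation.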

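The network is named after its U-shaped structure. As the architecture diagram shows, it consists of a contracting path of convolution layers on the left and an expanding path of transposed-convolution layers on the right. The two sides are also connected: as the gray arrows indicate, each transposed-convolution step on the right concatenates the output of an earlier convolution stage on the left, which preserves more information.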
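The concatenation along the gray arrows can be illustrated with plain nested lists. This is only a sketch: `concat_channels` and the tiny 2x2 feature maps are made up for illustration, and a real implementation would use a framework layer rather than Python lists.

```python
def concat_channels(skip, up):
    """Concatenate two feature maps of shape [H][W][C] along the channel axis."""
    if len(skip) != len(up) or len(skip[0]) != len(up[0]):
        raise ValueError("spatial sizes must match before concatenation")
    return [
        [skip_px + up_px for skip_px, up_px in zip(skip_row, up_row)]
        for skip_row, up_row in zip(skip, up)
    ]


# Hypothetical 2x2 feature maps: 2 channels from the left side, 3 from the right side.
skip = [[[1, 1], [1, 1]], [[1, 1], [1, 1]]]
up = [[[0, 0, 0], [0, 0, 0]], [[0, 0, 0], [0, 0, 0]]]

merged = concat_channels(skip, up)
print(len(merged), len(merged[0]), len(merged[0][0]))  # 2 2 5
```

The spatial size is unchanged and only the channel count grows, which is why the two feature maps must have the same height and width before they are joined.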

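At the end of the network, the two feature maps are passed through a softmax function to obtain probability maps. The network outputs one feature map per class, and the final result is a set of per-class probability maps that give, for each pixel, the probability that it belongs to each class.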
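As a rough illustration of that last step, the sketch below applies a softmax across the class axis of a tiny score map. The helper names and the 1x2 two-class input are assumptions made for the example. Note that the Keras code in this post ends in a single sigmoid channel instead, which is the usual shortcut for two classes.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of class scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def probability_map(score_map):
    """Turn score_map[H][W][C] into per-pixel class probabilities."""
    return [[softmax(px) for px in row] for row in score_map]


# Hypothetical 1x2 image with 2 classes per pixel.
score_map = [[[2.0, 0.0], [0.0, 0.0]]]
probs = probability_map(score_map)

for px in probs[0]:
    print([round(p, 3) for p in px])
```

Each pixel's probabilities sum to 1, and taking the index of the largest value per pixel gives the predicted class.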

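U-Net was proposed mainly for medical image segmentation. Compared with FCN, it has the following advantages: 1. it is multi-scale; 2. it can handle very large input images.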

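The convolution path of U-Net goes from high resolution (shallow features) to low resolution (deep features).

The defining feature of U-Net is the concatenation performed during the deconvolution path, which combines shallow and deep features. For medical images, U-Net uses the deep features for localization and the shallow features for precise segmentation, which is why it appears in so many image segmentation tasks.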
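To make the resolution and channel bookkeeping concrete, here is a small sketch that traces feature-map sizes through the two paths. It assumes the settings of the model.py listing in this post (256x256 input, 64 filters at the first stage, four pooling steps, 'same' padding); `unet_shape_trace` is a hypothetical helper, not part of that code.

```python
def unet_shape_trace(size=256, base_filters=64, depth=4):
    """Return (stage, height_or_width, channels) for each stage of a U-shaped network."""
    trace = []
    skips = []
    filters = base_filters

    # Contracting path: each stage halves the resolution and doubles the filters.
    for level in range(1, depth + 1):
        trace.append((f"conv{level}", size, filters))
        skips.append((size, filters))
        size //= 2
        filters *= 2

    # Bottom of the U.
    trace.append((f"conv{depth + 1}", size, filters))

    # Expanding path: upsample, halve the filters, concatenate the matching skip.
    for level in range(depth + 2, 2 * depth + 2):
        skip_size, skip_filters = skips.pop()
        size *= 2
        filters //= 2
        if size != skip_size:
            raise ValueError("upsampled size does not match the skip connection")
        trace.append((f"merge{level}", size, skip_filters + filters))
        trace.append((f"conv{level}", size, filters))
    return trace


for stage, hw, channels in unet_shape_trace():
    print(f"{stage:>7}: {hw}x{hw}x{channels}")
```

The trace shows the deepest stage at 16x16 with 1024 channels, and each merge stage carrying twice the channels of the convolution that follows it, since half come from the shallow side and half from the deep side.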

An annotated walkthrough of part of its Keras implementation follows:

```python
from model import *
from data import *  # import everything defined in these two files

# os.environ["CUDA_VISIBLE_DEVICES"] = "0"


# Dictionary of the transforms used for data augmentation
data_gen_args = dict(rotation_range=0.2,
                     width_shift_range=0.05,
                     height_shift_range=0.05,
                     shear_range=0.05,
                     zoom_range=0.05,
                     horizontal_flip=True,
                     fill_mode='nearest')
myGene = trainGenerator(2, 'data/membrane/train', 'image', 'label', data_gen_args, save_to_dir=None)
# A generator that yields augmented data indefinitely, in batches of 2

model = unet()
model_checkpoint = ModelCheckpoint('unet_membrane.hdf5', monitor='loss', verbose=1, save_best_only=True)
# Callback: the first argument is the path the model is saved to; monitor is the
# quantity to watch (the training loss here, lower is better); save_best_only=True
# writes the file only when the monitored value improves. No validation set is used.

model.fit_generator(myGene, steps_per_epoch=300, epochs=1, callbacks=[model_checkpoint])
# steps_per_epoch is the number of batches per epoch, usually the number of
# training samples divided by batch_size.
# Training draws batches from the generator; images and labels both come from myGene.
testGene = testGenerator("data/membrane/test")
results = model.predict_generator(testGene, 30, verbose=1)
# 30 is steps: the total number of batches to draw from the generator before stopping.
# It is optional for a Sequence: if unspecified, len(generator) is used.
# The return value is a Numpy array of predictions.
saveResult("data/membrane/test", results)  # save the results
```

The data.py file:

```python
from __future__ import print_function
from keras.preprocessing.image import ImageDataGenerator
import numpy as np
import os
import glob
import skimage.io as io
import skimage.transform as trans

Sky = [128,128,128]
Building = [128,0,0]
Pole = [192,192,128]
Road = [128,64,128]
Pavement = [60,40,222]
Tree = [128,128,0]
SignSymbol = [192,128,128]
Fence = [64,64,128]
Car = [64,0,128]
Pedestrian = [64,64,0]
Bicyclist = [0,128,192]
Unlabelled = [0,0,0]

COLOR_DICT = np.array([Sky, Building, Pole, Road, Pavement,
                       Tree, SignSymbol, Fence, Car, Pedestrian, Bicyclist, Unlabelled])


def adjustData(img,mask,flag_multi_class,num_class):
    if(flag_multi_class):  # this program is not multi-class, so this branch is not used
        img = img / 255
        mask = mask[:,:,:,0] if(len(mask.shape) == 4) else mask[:,:,0]
        # Conditional expression: the value before "if" is used when the condition is true.
        # A batch from flow_from_directory has shape [batch_size, height, width, 1], which is
        # 4-D, so [:,:,:,0] drops the channel axis. The 3-D branch handles a single
        # [height, width, 1] mask, such as the ones read in geneTrainNpy.
        new_mask = np.zeros(mask.shape + (num_class,))
        # np.zeros takes a shape tuple; this adds a last axis of depth num_class
        # so that the classes can be one-hot encoded along it
        for i in range(num_class):
            #for one pixel in the image, find the class in mask and convert it into one-hot vector
            #index = np.where(mask == i)
            #index_mask = (index[0],index[1],index[2],np.zeros(len(index[0]),dtype = np.int64) + i) if (len(mask.shape) == 4) else (index[0],index[1],np.zeros(len(index[0]),dtype = np.int64) + i)
            #new_mask[index_mask] = 1
            new_mask[mask == i,i] = 1  # give each class of the flat mask its own layer
        new_mask = np.reshape(new_mask,(new_mask.shape[0],new_mask.shape[1]*new_mask.shape[2],new_mask.shape[3])) if flag_multi_class else np.reshape(new_mask,(new_mask.shape[0]*new_mask.shape[1],new_mask.shape[2]))
        mask = new_mask
    elif(np.max(img) > 1):
        img = img / 255
        mask = mask /255
        mask[mask > 0.5] = 1
        mask[mask <= 0.5] = 0
    return (img,mask)
# This function mainly normalizes the pixel values of the training images and labels


def trainGenerator(batch_size,train_path,image_folder,mask_folder,aug_dict,image_color_mode = "grayscale",
                    mask_color_mode = "grayscale",image_save_prefix  = "image",mask_save_prefix  = "mask",
                    flag_multi_class = False,num_class = 2,save_to_dir = None,target_size = (256,256),seed = 1):
    '''
    can generate image and mask at the same time
    use the same seed for image_datagen and mask_datagen to ensure the transformation for image and mask is the same
    if you want to visualize the results of generator, set save_to_dir = "your path"
    '''
    image_datagen = ImageDataGenerator(**aug_dict)
    mask_datagen = ImageDataGenerator(**aug_dict)
    image_generator = image_datagen.flow_from_directory(  # https://blog.csdn.net/nima1994/article/details/80626239
        train_path,  # path of the training data folder
        classes = [image_folder],  # class subfolder, i.e. which folder to augment
        class_mode = None,  # do not return labels
        color_mode = image_color_mode,  # grayscale, single channel
        target_size = target_size,  # size of the images after conversion
        batch_size = batch_size,  # number of images produced (transformed) per batch
        save_to_dir = save_to_dir,  # where to save the generated images
        save_prefix  = image_save_prefix,  # prefix of the generated images, only used when save_to_dir is set
        seed = seed)
    mask_generator = mask_datagen.flow_from_directory(
        train_path,
        classes = [mask_folder],
        class_mode = None,
        color_mode = mask_color_mode,
        target_size = target_size,
        batch_size = batch_size,
        save_to_dir = save_to_dir,
        save_prefix  = mask_save_prefix,
        seed = seed)
    train_generator = zip(image_generator, mask_generator)  # combine them into one generator
    for (img,mask) in train_generator:
        # batch_size is 2, so each step returns two images: img is an array of
        # 2 grayscale images with shape [2,256,256,1]
        img,mask = adjustData(img,mask,flag_multi_class,num_class)  # img is still [2,256,256,1]
        yield (img,mask)
        # yields two images and two labels at a time; for an introduction to yield see
        # https://blog.csdn.net/mieleizhi0522/article/details/82142856
        # yield turns this function into a generator
# This function mainly builds an augmenting image generator that can keep producing images later on


def testGenerator(test_path,num_image = 30,target_size = (256,256),flag_multi_class = False,as_gray = True):
    for i in range(num_image):
        img = io.imread(os.path.join(test_path,"%d.png"%i),as_gray = as_gray)
        img = img / 255
        img = trans.resize(img,target_size)
        img = np.reshape(img,img.shape+(1,)) if (not flag_multi_class) else img
        img = np.reshape(img,(1,)+img.shape)
        # add a channel axis and a batch axis so that the shape [1,256,256,1]
        # matches the layout of the training input [2,256,256,1]
        yield img

# This function mainly normalizes the test images so that their size and dimensions match the training images

def geneTrainNpy(image_path,mask_path,flag_multi_class = False,num_class = 2,image_prefix = "image",mask_prefix = "mask",image_as_gray = True,mask_as_gray = True):
    image_name_arr = glob.glob(os.path.join(image_path,"%s*.png"%image_prefix))
    # a file search: finds the files under a path that match the pattern https://blog.csdn.net/u010472607/article/details/76857493/
    image_arr = []
    mask_arr = []
    for index,item in enumerate(image_name_arr):  # enumerate yields (0,item0),(1,item1),(2,item2)
        img = io.imread(item,as_gray = image_as_gray)
        img = np.reshape(img,img.shape + (1,)) if image_as_gray else img
        mask = io.imread(item.replace(image_path,mask_path).replace(image_prefix,mask_prefix),as_gray = mask_as_gray)
        # look up the matching image with the mask prefix (the label image) under mask_path
        mask = np.reshape(mask,mask.shape + (1,)) if mask_as_gray else mask
        img,mask = adjustData(img,mask,flag_multi_class,num_class)
        image_arr.append(img)
        mask_arr.append(mask)
    image_arr = np.array(image_arr)
    mask_arr = np.array(mask_arr)  # convert to arrays
    return image_arr,mask_arr
# This function searches the training folder and the label folder for images, adds one axis to each,
# and returns them as arrays; it is for reading the data in the folders when no augmentation is used


def labelVisualize(num_class,color_dict,img):
    img = img[:,:,0] if len(img.shape) == 3 else img
    img_out = np.zeros(img.shape + (3,))
    # convert to RGB, because colors other than gray can only be shown in RGB space
    for i in range(num_class):
        img_out[img == i,:] = color_dict[i]
        # paint each class in its own color: color_dict[i] is the color of class i,
        # and img_out[img == i,:] selects the pixels of img_out where img equals i
    return img_out / 255

# This function colors the output after testing; it only matters for multi-class, not for two classes

def saveResult(save_path,npyfile,flag_multi_class = False,num_class = 2):
    for i,item in enumerate(npyfile):
        img = labelVisualize(num_class,COLOR_DICT,item) if flag_multi_class else item[:,:,0]
        # multi-class results are saved in color, two-class results in black and white
        io.imsave(os.path.join(save_path,"%d_predict.png"%i),img)
```

Below is model.py:

```python
import numpy as np
import os
import skimage.io as io
import skimage.transform as trans
from keras.models import *
from keras.layers import *
from keras.optimizers import *
from keras.callbacks import ModelCheckpoint, LearningRateScheduler
from keras import backend as keras


def unet(pretrained_weights = None,input_size = (256,256,1)):
    inputs = Input(input_size)
    conv1 = Conv2D(64, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(inputs)
    conv1 = Conv2D(64, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(conv1)
    pool1 = MaxPooling2D(pool_size=(2, 2))(conv1)
    conv2 = Conv2D(128, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(pool1)
    conv2 = Conv2D(128, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(conv2)
    pool2 = MaxPooling2D(pool_size=(2, 2))(conv2)
    conv3 = Conv2D(256, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(pool2)
    conv3 = Conv2D(256, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(conv3)
    pool3 = MaxPooling2D(pool_size=(2, 2))(conv3)
    conv4 = Conv2D(512, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(pool3)
    conv4 = Conv2D(512, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(conv4)
    drop4 = Dropout(0.5)(conv4)
    pool4 = MaxPooling2D(pool_size=(2, 2))(drop4)

    conv5 = Conv2D(1024, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(pool4)
    conv5 = Conv2D(1024, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(conv5)
    drop5 = Dropout(0.5)(conv5)

    # Upsampling followed by a convolution, used here in place of a transposed
    # convolution (a similar effect, though not the identical operation)
    up6 = Conv2D(512, 2, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(UpSampling2D(size = (2,2))(drop5))
    merge6 = concatenate([drop4,up6],axis=3)
    conv6 = Conv2D(512, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(merge6)
    conv6 = Conv2D(512, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(conv6)

    up7 = Conv2D(256, 2, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(UpSampling2D(size = (2,2))(conv6))
    merge7 = concatenate([conv3,up7],axis = 3)
    conv7 = Conv2D(256, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(merge7)
    conv7 = Conv2D(256, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(conv7)

    up8 = Conv2D(128, 2, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(UpSampling2D(size = (2,2))(conv7))
    merge8 = concatenate([conv2,up8],axis = 3)
    conv8 = Conv2D(128, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(merge8)
    conv8 = Conv2D(128, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(conv8)

    up9 = Conv2D(64, 2, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(UpSampling2D(size = (2,2))(conv8))
    merge9 = concatenate([conv1,up9],axis = 3)
    conv9 = Conv2D(64, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(merge9)
    conv9 = Conv2D(64, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(conv9)
    conv9 = Conv2D(2, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(conv9)
    # The sigmoid is needed: with its default settings the Keras 'binary_crossentropy'
    # loss expects probabilities and does not apply a sigmoid itself
    conv10 = Conv2D(1, 1, activation = 'sigmoid')(conv9)

    # Keras 2 uses the keyword names inputs/outputs (input/output are the old names)
    model = Model(inputs = inputs, outputs = conv10)

    # A model must be compiled before it is trained https://keras-cn.readthedocs.io/en/latest/getting_started/sequential_model/
    model.compile(optimizer = Adam(lr = 1e-4), loss = 'binary_crossentropy', metrics = ['accuracy'])
    # Binary cross-entropy is the loss being minimized; metrics such as accuracy
    # are only reported during training and do not drive the optimization
    #model.summary()

    if(pretrained_weights):
        model.load_weights(pretrained_weights)

    return model
```
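The role of the final sigmoid in `conv10` can be checked with a few lines of arithmetic. The sketch below is a hand-written stand-in, not the Keras implementation: it assumes a cross-entropy that takes probabilities and clips them to avoid `log(0)`, which is how a loss that expects probabilities typically behaves.

```python
import math


def sigmoid(z):
    """Map a raw score (logit) to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def binary_crossentropy(y_true, p, eps=1e-7):
    """Cross-entropy for one pixel; p is expected to be a probability."""
    p = min(max(p, eps), 1.0 - eps)  # clip so that log() stays finite
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1 - p))


logit = 2.0  # hypothetical raw output of the last convolution for one pixel
loss_on_probability = binary_crossentropy(1, sigmoid(logit))
loss_on_raw_logit = binary_crossentropy(1, logit)

print(loss_on_probability)  # a meaningful loss for a fairly confident, correct prediction
print(loss_on_raw_logit)    # the raw score is clipped to just below 1, so the loss nearly vanishes
```

Feeding the raw score into a probability-based loss silently clips it, so the network would stop receiving a useful gradient. Removing the sigmoid would therefore only make sense together with a loss that is explicitly told to expect logits.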