Reposted from https://www.cnblogs.com/leeSmall/p/9351343.html, for personal study only.

I. What is a forward proxy and what is a reverse proxy

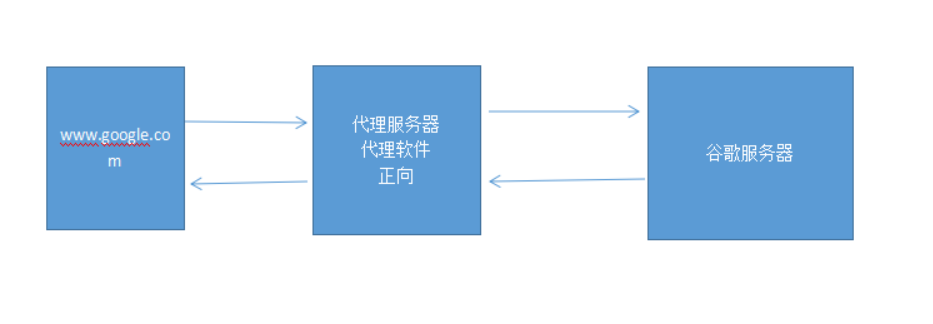

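1. A forward proxy is a server that sits between the client and the origin server. To fetch content from the origin server, the client sends a request to the proxy and names the target (the origin server); the proxy then forwards the request to the origin server and returns the content it receives to the client.

Example: accessing Google through a proxy server.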

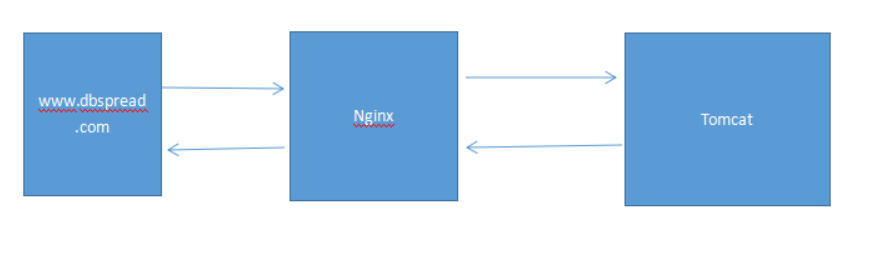

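2. A reverse proxy accepts connection requests from the Internet, forwards them to servers on the internal network, and returns the servers' responses to the Internet clients that made the requests. To the outside world, the proxy itself appears to be the server.

Example: a proxy in front of your own internal servers.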

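II. How Nginx works

Nginx consists of a core and modules. The core is deliberately small and simple and does very little work: it looks up the configuration file to map a client request to a location block (location is an Nginx configuration directive used for URL matching), and each directive configured in that location then invokes a different module to do the corresponding work.

Structurally, Nginx modules fall into core modules, base modules, and third-party modules:

Core modules: the HTTP, EVENT, and MAIL modules.

Base modules: the HTTP Access, HTTP FastCGI, HTTP Proxy, and HTTP Rewrite modules.

Third-party modules: the HTTP Upstream Request Hash, Notice, and HTTP Access Key modules.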

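Nginx's high concurrency comes from its use of the epoll model, which differs from traditional server architectures; epoll has been available since the Linux 2.6 kernel. Nginx uses epoll in an asynchronous, non-blocking way, whereas Apache has traditionally relied on the select model:

select: when choosing handles, select scans all of them. When some handle has an event, select has to walk through every handle to find out which ones have pending notifications, so the cost grows with the number of handles and efficiency is poor.

epoll: epoll does not scan to pick handles; it is event-driven. When an event arrives on a handle, that handle is reported immediately without walking the whole handle list, so efficiency is very high.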
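The event-driven behaviour can be observed from user code. The following is a minimal sketch, not nginx code: it uses Python's standard `selectors` module (which chooses epoll on Linux), and the function name `ready_indexes` and the socket-pair setup are assumptions made up for this illustration. Several idle sockets are registered, one receives data, and the selector reports only that one.

```python
import selectors
import socket


def ready_indexes(total=5, active=3):
    """Register `total` idle sockets, make one readable, and return
    the indexes that the selector reports as ready."""
    # DefaultSelector picks the most efficient mechanism available
    # (epoll on Linux, kqueue on BSD/macOS, select as a fallback).
    sel = selectors.DefaultSelector()
    pairs = [socket.socketpair() for _ in range(total)]
    try:
        for index, (reader, _writer) in enumerate(pairs):
            sel.register(reader, selectors.EVENT_READ, data=index)
        # Only one connection has data waiting.
        pairs[active][1].send(b"ping")
        # The selector hands back only the ready sockets; the caller
        # does not loop over the idle ones itself.
        return [key.data for key, _mask in sel.select(timeout=1)]
    finally:
        sel.close()
        for reader, writer in pairs:
            reader.close()
            writer.close()


if __name__ == "__main__":
    print(ready_indexes())
```

With the default arguments only the socket at index 3 is reported, regardless of how many idle sockets are registered alongside it.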

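1. What is Nginx

Nginx ("engine x") is a high-performance HTTP and reverse proxy server, and also an IMAP/POP3/SMTP proxy server. Nginx was developed by Igor Sysoev for Rambler.ru, the second most visited site in Russia; the first public version, 0.1.0, was released on October 4, 2004. Its source code is released under a BSD-like license, and it is known for its stability, rich feature set, sample configuration files, and low consumption of system resources.

Common web and application servers: Apache, Microsoft IIS, Tomcat, Lighttpd, Nginx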

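2. Nginx features and components

Features: Nginx uses little memory and handles concurrency well; in practice its concurrency does compare well with other web servers of the same type.

Components: Nginx consists of a core and modules; the core is deliberately small and simple and does very little work. The modules fall into the same three categories listed at the start of this section: core modules, base modules, and third-party modules.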

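3. Advantages of Nginx

1) Very good response performance under high concurrency; the officially quoted figure for Nginx serving static files is about 50,000 concurrent connections.

2) Very strong reverse-proxy performance (usable for load balancing).

3) Low memory and CPU usage (about 1/5 to 1/10 of Apache's).

4) Health checks for backend services (passive checks through max_fails and fail_timeout on upstream servers).

5) Supports PHP through FastCGI (plain CGI needs an external wrapper).

6) Configuration is concise and easy to learn.

Note: serving static files is Nginx's most important function.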

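4. Installing Nginx

4.1 Install the build environment first (gcc, g++, development libraries, and so on)

yum -y install make zlib zlib-devel gcc-c++ libtool openssl openssl-devel

4.2 Install PCRE first; PCRE gives nginx its rewrite capability

Check whether pcre is already installed on the system:

rpm -qa pcre    # no output means it is not installed; otherwise it is installed

rpm -e --nodeps pcre    # remove pcre

Install pcre: yum install pcre-devel pcre -y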

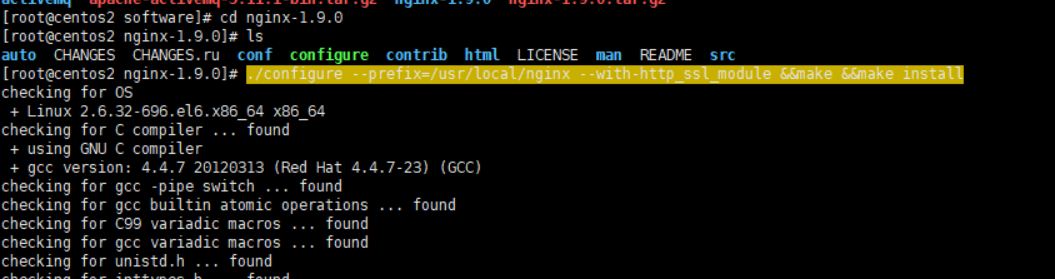

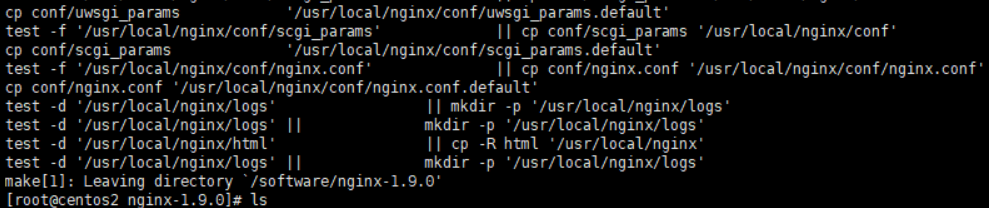

4.3 Install nginx

# Download the Nginx source package

cd /usr/local

wget http://nginx.org/download/nginx-1.9.0.tar.gz

# Extract the Nginx source package

tar -zxvf nginx-1.9.0.tar.gz

# Enter the extracted directory and build; the default install location is /usr/local/nginx

cd nginx-1.9.0

./configure --prefix=/usr/local/nginx --with-http_ssl_module && make && make install

# Nginx is now installed

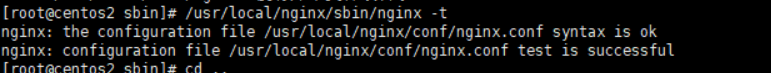

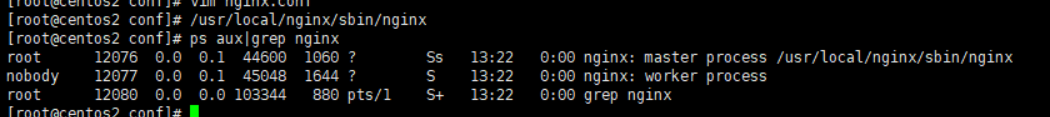

Check that the nginx configuration file is valid; it is correct if the test reports ok.

/usr/local/nginx/sbin/nginx -t

# Then start nginx by running:

/usr/local/nginx/sbin/nginx

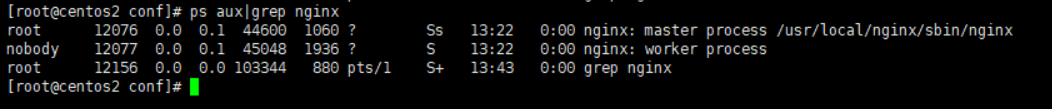

# Check that the process has started:

ps aux |grep nginx

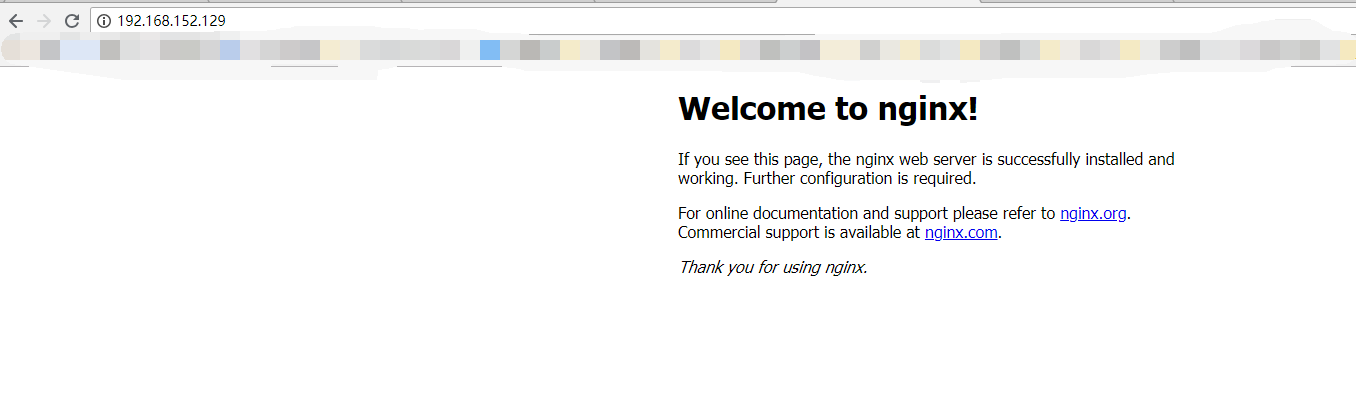

4.4 Access the page

4.5 Uninstall nginx

Simply delete the nginx files:

rm -rf /usr/local/nginx

III. Common Nginx commands and upgrading

1. Common Nginx commands

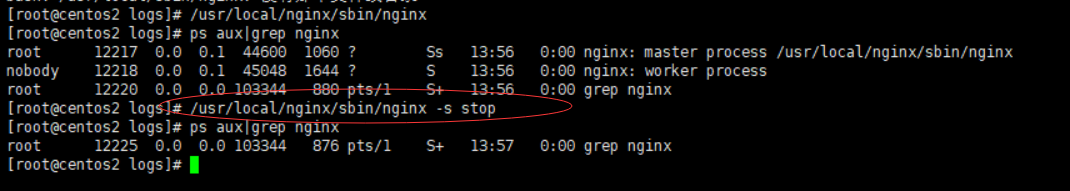

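View the nginx processes:

ps -ef | grep nginx    or    ps aux | grep nginx

Note: nginx runs as a master process plus worker processes.

Start nginx:

/usr/local/nginx/sbin/nginx

Note: the startup result shows nginx's master process and worker processes; the number of worker processes depends on the worker_processes setting in nginx.conf.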

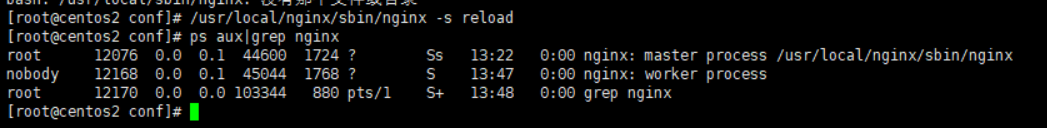

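Gracefully reload nginx:

kill -HUP `cat /var/run/nginx.pid`

or

/usr/local/nginx/sbin/nginx -s reload

The pid file path can be found in the nginx.conf configuration file.

A graceful reload restarts nginx without stopping it: the configuration file is reloaded, new worker processes are started, and the old worker processes are shut down gracefully.

Gracefully stop nginx:

kill -QUIT `cat /var/run/nginx.pid`

Quickly stop nginx:

kill -TERM `cat /var/run/nginx.pid`

or

kill -INT `cat /var/run/nginx.pid`

Gracefully stop the worker processes (mainly used for smooth upgrades):

kill -WINCH `cat /var/run/nginx.pid`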

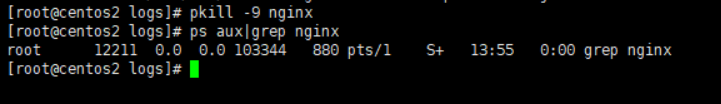

Force-stop nginx:

pkill -9 nginx

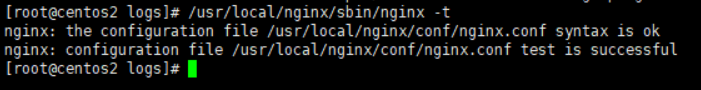

Check whether the changes to nginx.conf are valid:

nginx -t -c /etc/nginx/nginx.conf    or    /usr/local/nginx/sbin/nginx -t

Command to stop nginx:

/usr/local/nginx/sbin/nginx -s stop

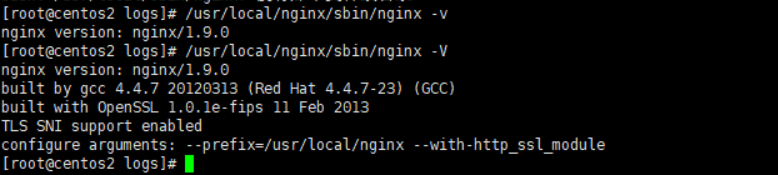

Show the nginx version:

/usr/local/nginx/sbin/nginx -v

Show the full nginx build configuration:

/usr/local/nginx/sbin/nginx -V
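The signal-based commands above all follow one pattern: read the master process id from the pid file, then send it a signal. As a rough sketch of that pattern (the names `NGINX_SIGNALS`, `read_pid`, and `signal_nginx` are hypothetical helpers invented for this example, and the default pid path is simply the one used in the commands above), the same thing can be written in Python:

```python
import os
import signal

# Signals used in the kill commands above, keyed by a descriptive
# (made-up) action name.
NGINX_SIGNALS = {
    "reload": signal.SIGHUP,          # reload config, start new workers
    "graceful_stop": signal.SIGQUIT,  # finish current requests, then exit
    "fast_stop": signal.SIGTERM,      # exit quickly
    "stop_workers": signal.SIGWINCH,  # gracefully stop worker processes
}


def read_pid(pid_file):
    """Return the process id stored in the pid file."""
    with open(pid_file) as handle:
        return int(handle.read().strip())


def signal_nginx(action, pid_file="/var/run/nginx.pid", send=os.kill):
    """Send the signal for `action` to the process named in `pid_file`.

    `send` defaults to os.kill; it is a parameter only so the sketch
    can be exercised without a running nginx.
    """
    pid = read_pid(pid_file)
    send(pid, NGINX_SIGNALS[action])
    return pid
```

For example, `signal_nginx("reload")` corresponds to the kill -HUP command shown earlier, assuming the pid file really is at /var/run/nginx.pid on your system.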

2. Upgrading Nginx

Download the required version of Nginx:

wget http://www.nginx.org/download/nginx-1.4.2.tar.gz

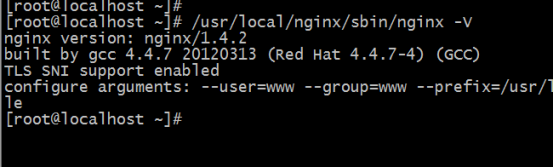

Get the configure options of the old nginx:

/usr/local/nginx/sbin/nginx -V

Compile the new version of nginx:

tar -xvf nginx-1.4.2.tar.gz

cd nginx-1.4.2

./configure --prefix=/usr/local/nginx --user=www --group=www --with-http_stub_status_module --with-http_ssl_module

make

Back up the old nginx executable and copy in the new one:

mv /usr/local/nginx/sbin/nginx /usr/local/nginx/sbin/nginx.old

cp objs/nginx /usr/local/nginx/sbin/

Test that the new version of nginx works:

/usr/local/nginx/sbin/nginx -t

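Smoothly restart to upgrade nginx:

kill -USR2 `cat /usr/local/nginx/logs/nginx.pid`

The old Nginx pid file is renamed to nginx.pid.oldbin. At this point the old and new nginx run side by side; wait a while for the old nginx to finish handling its requests, then carry out the steps below.

Gracefully shut down the old Nginx worker processes:

kill -WINCH `cat /usr/local/nginx/logs/nginx.pid.oldbin`

Decide whether to keep the new version of nginx:

kill -HUP `cat /usr/local/nginx/logs/nginx.pid.oldbin` ## roll back: the old master starts worker processes again without reloading the configuration file

kill -QUIT `cat /usr/local/nginx/logs/nginx.pid.oldbin` ## keep the upgrade: shut down the old nginx

Verify that the upgrade succeeded:

/usr/local/nginx/sbin/nginx -V ### the upgrade succeeded if the new version is displayed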

IV. Setting up Nginx load balancing

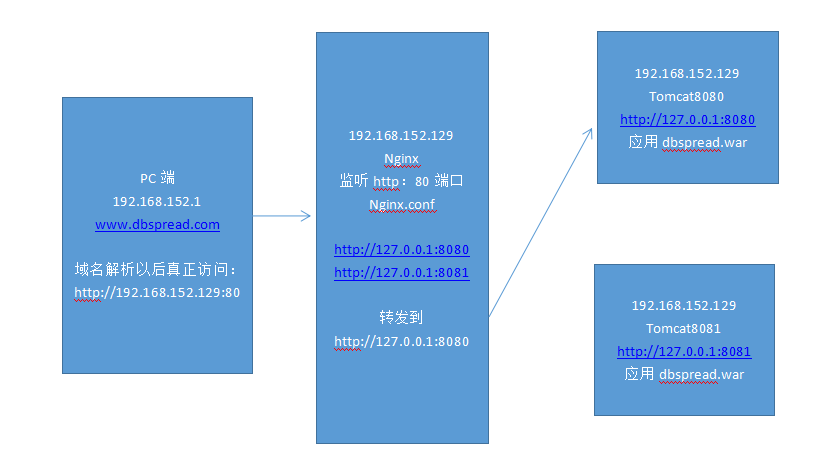

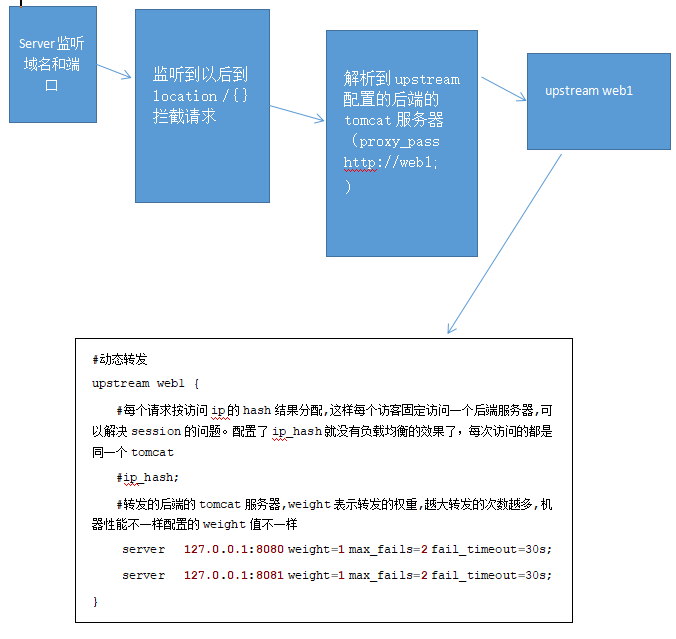

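1. Nginx load-balancing schematic

Note:

In nginx.conf, nginx listens on port 80 and is configured with the addresses of the backend Tomcats; requests arriving at the front end are then forwarded to the backend Tomcats.

2. Setup steps

2.1 Prepare two Tomcat instances on different ports, 8080 and 8081

server.xml for the 8080 instance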

<Server port="8005" shutdown="SHUTDOWN">

<Listener className="org.apache.catalina.startup.VersionLoggerListener" />

<!-- Security listener. Documentation at /docs/config/listeners.html

<Listener className="org.apache.catalina.security.SecurityListener" />

-->

<!--APR library loader. Documentation at /docs/apr.html -->

<Listener className="org.apache.catalina.core.AprLifecycleListener" SSLEngine="on" />

<!--Initialize Jasper prior to webapps are loaded. Documentation at /docs/jasper-howto.html -->

<Listener className="org.apache.catalina.core.JasperListener" />

<!-- Prevent memory leaks due to use of particular java/javax APIs-->

<Listener className="org.apache.catalina.core.JreMemoryLeakPreventionListener" />

<Listener className="org.apache.catalina.mbeans.GlobalResourcesLifecycleListener" />

<Listener className="org.apache.catalina.core.ThreadLocalLeakPreventionListener" />

<!-- Global JNDI resources

Documentation at /docs/jndi-resources-howto.html

-->

<GlobalNamingResources>

<!-- Editable user database that can also be used by

UserDatabaseRealm to authenticate users

-->

<Resource name="UserDatabase" auth="Container"

type="org.apache.catalina.UserDatabase"

description="User database that can be updated and saved"

factory="org.apache.catalina.users.MemoryUserDatabaseFactory"

pathname="conf/tomcat-users.xml" />

</GlobalNamingResources>

<!-- A "Service" is a collection of one or more "Connectors" that share

a single "Container" Note: A "Service" is not itself a "Container",

so you may not define subcomponents such as "Valves" at this level.

Documentation at /docs/config/service.html

-->

<Service name="Catalina">

<!--The connectors can use a shared executor, you can define one or more named thread pools-->

<!--

<Executor name="tomcatThreadPool" namePrefix="catalina-exec-"

maxThreads="150" minSpareThreads="4"/>

-->

<!-- A "Connector" represents an endpoint by which requests are received

and responses are returned. Documentation at :

Java HTTP Connector: /docs/config/http.html (blocking & non-blocking)

Java AJP Connector: /docs/config/ajp.html

APR (HTTP/AJP) Connector: /docs/apr.html

Define a non-SSL HTTP/1.1 Connector on port 8080

-->

<Connector port="8080" protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443" />

<!-- A "Connector" using the shared thread pool-->

<!--

<Connector executor="tomcatThreadPool"

port="8080" protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443" />

-->

<!-- Define a SSL HTTP/1.1 Connector on port 8443

This connector uses the BIO implementation that requires the JSSE

style configuration. When using the APR/native implementation, the

OpenSSL style configuration is required as described in the APR/native

documentation -->

<!--

<Connector port="8443" protocol="org.apache.coyote.http11.Http11Protocol"

maxThreads="150" SSLEnabled="true" scheme="https" secure="true"

clientAuth="false" sslProtocol="TLS" />

-->

<!-- Define an AJP 1.3 Connector on port 8009 -->

<Connector port="8009" protocol="AJP/1.3" redirectPort="8443" />

<!-- An Engine represents the entry point (within Catalina) that processes

every request. The Engine implementation for Tomcat stand alone

analyzes the HTTP headers included with the request, and passes them

on to the appropriate Host (virtual host).

Documentation at /docs/config/engine.html -->

<!-- You should set jvmRoute to support load-balancing via AJP ie :

<Engine name="Catalina" defaultHost="localhost" jvmRoute="jvm1">

-->

<Engine name="Catalina" defaultHost="localhost">

<!--For clustering, please take a look at documentation at:

/docs/cluster-howto.html (simple how to)

/docs/config/cluster.html (reference documentation) -->

<!--

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"/>

-->

<!-- Use the LockOutRealm to prevent attempts to guess user passwords

via a brute-force attack -->

<Realm className="org.apache.catalina.realm.LockOutRealm">

<!-- This Realm uses the UserDatabase configured in the global JNDI

resources under the key "UserDatabase". Any edits

that are performed against this UserDatabase are immediately

available for use by the Realm. -->

<Realm className="org.apache.catalina.realm.UserDatabaseRealm"

resourceName="UserDatabase"/>

</Realm>

<Host name="localhost" appBase="webapps"

unpackWARs="true" autoDeploy="true">

<!-- SingleSignOn valve, share authentication between web applications

Documentation at: /docs/config/valve.html -->

<!--

<Valve className="org.apache.catalina.authenticator.SingleSignOn" />

-->

<!-- Access log processes all example.

Documentation at: /docs/config/valve.html

Note: The pattern used is equivalent to using pattern="common" -->

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="localhost_access_log." suffix=".txt"

pattern="%h %l %u %t &quot;%r&quot; %s %b" />

</Host>

</Engine>

</Service>

</Server>

server.xml for the 8081 instance

<Server port="8006" shutdown="SHUTDOWN">

<Listener className="org.apache.catalina.startup.VersionLoggerListener" />

<!-- Security listener. Documentation at /docs/config/listeners.html

<Listener className="org.apache.catalina.security.SecurityListener" />

-->

<!--APR library loader. Documentation at /docs/apr.html -->

<Listener className="org.apache.catalina.core.AprLifecycleListener" SSLEngine="on" />

<!--Initialize Jasper prior to webapps are loaded. Documentation at /docs/jasper-howto.html -->

<Listener className="org.apache.catalina.core.JasperListener" />

<!-- Prevent memory leaks due to use of particular java/javax APIs-->

<Listener className="org.apache.catalina.core.JreMemoryLeakPreventionListener" />

<Listener className="org.apache.catalina.mbeans.GlobalResourcesLifecycleListener" />

<Listener className="org.apache.catalina.core.ThreadLocalLeakPreventionListener" />

<!-- Global JNDI resources

Documentation at /docs/jndi-resources-howto.html

-->

<GlobalNamingResources>

<!-- Editable user database that can also be used by

UserDatabaseRealm to authenticate users

-->

<Resource name="UserDatabase" auth="Container"

type="org.apache.catalina.UserDatabase"

description="User database that can be updated and saved"

factory="org.apache.catalina.users.MemoryUserDatabaseFactory"

pathname="conf/tomcat-users.xml" />

</GlobalNamingResources>

<!-- A "Service" is a collection of one or more "Connectors" that share

a single "Container" Note: A "Service" is not itself a "Container",

so you may not define subcomponents such as "Valves" at this level.

Documentation at /docs/config/service.html

-->

<Service name="Catalina">

<!--The connectors can use a shared executor, you can define one or more named thread pools-->

<!--

<Executor name="tomcatThreadPool" namePrefix="catalina-exec-"

maxThreads="150" minSpareThreads="4"/>

-->

<!-- A "Connector" represents an endpoint by which requests are received

and responses are returned. Documentation at :

Java HTTP Connector: /docs/config/http.html (blocking & non-blocking)

Java AJP Connector: /docs/config/ajp.html

APR (HTTP/AJP) Connector: /docs/apr.html

Define a non-SSL HTTP/1.1 Connector on port 8081

-->

<Connector port="8081" protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443" />

<!-- A "Connector" using the shared thread pool-->

<!--

<Connector executor="tomcatThreadPool"

port="8080" protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443" />

-->

<!-- Define a SSL HTTP/1.1 Connector on port 8443

This connector uses the BIO implementation that requires the JSSE

style configuration. When using the APR/native implementation, the

OpenSSL style configuration is required as described in the APR/native

documentation -->

<!--

<Connector port="8443" protocol="org.apache.coyote.http11.Http11Protocol"

maxThreads="150" SSLEnabled="true" scheme="https" secure="true"

clientAuth="false" sslProtocol="TLS" />

-->

<!-- Define an AJP 1.3 Connector on port 8009 -->

<Connector port="8010" protocol="AJP/1.3" redirectPort="8443" />

<!-- An Engine represents the entry point (within Catalina) that processes

every request. The Engine implementation for Tomcat stand alone

analyzes the HTTP headers included with the request, and passes them

on to the appropriate Host (virtual host).

Documentation at /docs/config/engine.html -->

<!-- You should set jvmRoute to support load-balancing via AJP ie :

<Engine name="Catalina" defaultHost="localhost" jvmRoute="jvm1">

-->

<Engine name="Catalina" defaultHost="localhost">

<!--For clustering, please take a look at documentation at:

/docs/cluster-howto.html (simple how to)

/docs/config/cluster.html (reference documentation) -->

<!--

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"/>

-->

<!-- Use the LockOutRealm to prevent attempts to guess user passwords

via a brute-force attack -->

<Realm className="org.apache.catalina.realm.LockOutRealm">

<!-- This Realm uses the UserDatabase configured in the global JNDI

resources under the key "UserDatabase". Any edits

that are performed against this UserDatabase are immediately

available for use by the Realm. -->

<Realm className="org.apache.catalina.realm.UserDatabaseRealm"

resourceName="UserDatabase"/>

</Realm>

<Host name="localhost" appBase="webapps"

unpackWARs="true" autoDeploy="true">

<!-- SingleSignOn valve, share authentication between web applications

Documentation at: /docs/config/valve.html -->

<!--

<Valve className="org.apache.catalina.authenticator.SingleSignOn" />

-->

<!-- Access log processes all example.

Documentation at: /docs/config/valve.html

Note: The pattern used is equivalent to using pattern="common" -->

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="localhost_access_log." suffix=".txt"

pattern="%h %l %u %t &quot;%r&quot; %s %b" />

</Host>

</Engine>

</Service>

</Server>

2.2 Put an index.html with different content in each Tomcat's ROOT directory, then start both Tomcats

tomcat-8080/webapps/ROOT

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<meta http-equiv="X-UA-Compatible" content="IE=edge,chrome=1">

<meta name="renderer" content="webkit">

<meta name="viewport" content="width=device-width, initial-scale=1, maximum-scale=1, user-scalable=no">

<title>test</title>

</head>

<body>

Welcome to the Tomcat on port 8080

</body>

</html>

tomcat-8081/webapps/ROOT

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<meta http-equiv="X-UA-Compatible" content="IE=edge,chrome=1">

<meta name="renderer" content="webkit">

<meta name="viewport" content="width=device-width, initial-scale=1, maximum-scale=1, user-scalable=no">

<title>test</title>

</head>

<body>

Welcome to the Tomcat on port 8081

</body>

</html>

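2.3 Edit the nginx.conf of the nginx installed earlier

user root root; # user and group that the nginx worker processes run as

worker_processes 4; # number of worker processes; usually set to match the number of CPU cores

worker_cpu_affinity 00000001 00000010 00000100 00001000; # CPU bitmasks: pin each worker process to its own CPU

#pid /usr/local/nginx/logs/nginx.pid

worker_rlimit_nofile 102400; # maximum number of files a worker process may open; this bounds nginx's concurrent connections

# Working mode and connection limits

events

{

use epoll; # I/O multiplexing; requires Linux kernel 2.6 or later and greatly improves nginx performance (check the kernel version with uname -a)

worker_connections 102400; # maximum connections per worker process; nginx's maximum connections = worker_connections * worker_processes; a machine with 1 GB of memory can typically hold roughly 100,000 open connections

multi_accept on; # accept as many new connections as possible at once; off by default

}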

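# Configuration section for handling HTTP requests

http

{

# Include mime.types, which defines many types. When the web server receives a request for a static resource, it looks up the file's extension in the MIME configuration to find the matching MIME type and sets the response Content-Type accordingly

include mime.types;

default_type application/octet-stream; # default type (a binary stream); it can sometimes be set to a text type instead, depending on whether the site publishes web pages or serves downloads

fastcgi_intercept_errors on; # intercept FastCGI responses with status 300 or higher so that error_page can handle them

charset utf-8;

server_names_hash_bucket_size 128; # bucket size of the hash table that stores server names

# Buffers for request headers. A buffer of client_header_buffer_size (4k here) is allocated first; if the request line or a header field does not fit, larger buffers are allocated according to large_client_header_buffers; if it still does not fit, nginx returns 414 (request line too long) or 400 (header too large)

client_header_buffer_size 4k;

large_client_header_buffers 4 32k;

client_max_body_size 300m; # maximum size of a client request body; set it according to your file-upload needs

# Whether nginx uses the sendfile() call to send files. Turn it on for ordinary file serving; when nginx is dedicated to heavy downloads it can be turned off to balance disk and network I/O and lower the system load

sendfile on;

#autoindex on; # enable directory listings, suitable for a download server

tcp_nopush on; # helps prevent network congestion

# Very important; set it to suit your situation. This is how long a client connection stays open: within 60 seconds the client can reuse it without reconnecting. Do not set it too long, or with, say, 10,000 requests all holding connections the server can be dragged down

keepalive_timeout 60;

tcp_nodelay on; # improves real-time responsiveness

client_body_buffer_size 512k; # buffer size for reading the client request body

proxy_connect_timeout 5; # timeout for nginx to connect to a backend server (proxy connect timeout)

proxy_read_timeout 60; # timeout waiting for the backend's response after connecting (proxy read timeout)

proxy_send_timeout 5; # timeout for sending the request to the backend server (proxy send timeout)

proxy_buffer_size 16k; # buffer for the first part of the backend response, which holds the response headers

proxy_buffers 4 64k; # proxy_buffers; a reasonable setting when pages average under 32k

proxy_busy_buffers_size 128k; # buffer size that may be busy sending to the client under high load

proxy_temp_file_write_size 128k; # maximum amount of data written to a temporary file at a time

gzip on; # nginx can compress static resources: if a resource is 10 MB and compresses to 2 MB, the browser downloads far less

gzip_min_length 1k;

gzip_buffers 4 16k;

gzip_http_version 1.1;

gzip_comp_level 2; # compression level from 1 to 9. Lower levels compress less but use less CPU: at 1 a 10 MB file might come out at 8 MB, while at 9 it might be only 2 MB. 2 is a common setting

gzip_types text/plain application/x-javascript text/css application/xml; # types to compress: text, js, css, and xml are all compressed

gzip_vary on; # adds a Vary: Accept-Encoding response header so that caching proxies handle compressed and uncompressed variants correctly

# Log format

log_format main '$remote_addr - $remote_user [$time_local] "$request" ' # client IP, remote user, local time, request line

'$status $body_bytes_sent "$http_referer" ' # status, bytes sent, Referer header

'"$http_user_agent" $request_time'; # client browser (user agent), request processing time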

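# Dynamic request forwarding

upstream web1 {

# Each request is assigned by a hash of the client IP, so a given visitor always reaches the same backend server, which solves the session problem. With ip_hash enabled you will not see requests alternate between backends when testing from one machine: every visit reaches the same Tomcat

#ip_hash;

# Backend Tomcat servers to forward to. weight is the forwarding weight: the larger it is, the more requests the server receives; give machines of different capacity different weights

server 127.0.0.1:8080 weight=1 max_fails=2 fail_timeout=30s;

server 127.0.0.1:8081 weight=1 max_fails=2 fail_timeout=30s;

}

upstream web2 {

server 127.0.0.1:8090 weight=1 max_fails=2 fail_timeout=30s;

server 127.0.0.1:8091 weight=1 max_fails=2 fail_timeout=30s;

}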

server {

listen 80; # listen on port 80

server_name www.dbspread.com; # domain name

index index.jsp index.html index.htm;

root /usr/local/nginx/html; # default site root directory

# Once listening, the slash (/) location catches requests and forwards them to the backend Tomcat servers

location /

{

# If a backend returns 502 or 504, times out, or otherwise errors, automatically pass the request to another server in the upstream pool for failover

proxy_next_upstream http_502 http_504 error timeout invalid_header;

proxy_set_header Host $host; # pass the requested host name to Tomcat in the Host header

proxy_set_header X-Real-IP $remote_addr; # pass the client's IP address in X-Real-IP so Tomcat can see the real client

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_pass http://web1; # forward to the upstream group web1

}

# location ~ .*\.(php|jsp|cgi|shtml)?$ # dynamic content: ~ is a regex match; requests ending in php, jsp, and so on take this block

# {

# proxy_set_header Host $host;

# proxy_set_header X-Real-IP $remote_addr;

# proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

# proxy_pass http://web2;

# }

# Static content: ~ is a regex match; requests ending in the extensions below take this block. A longer expires is not always better; choose it to suit how often the files change

location ~ .*\.(html|htm|gif|jpg|jpeg|bmp|png|ico|txt|js|css)$

{

root /var/local/static;

expires 30d;

}

# error_log levels are debug, info, notice, warn, error, crit; the default is error, which also suits production

# crit logs the least and debug logs the most

access_log /usr/local/logs/web2/access.log main;

error_log /usr/local/logs/web2/error.log crit;

}

}
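The commented-out ip_hash directive in upstream web1 is easier to reason about with a small model. The sketch below is not nginx's implementation: `zlib.crc32` stands in for nginx's internal hash function, and `BACKENDS` and `pick_backend` are names invented for this example. It keeps one documented property of ip_hash for IPv4 clients, namely that the first three octets of the address form the hash key, so the same client (and its /24 neighbours) always lands on the same backend.

```python
import zlib

# The two Tomcat backends from upstream web1.
BACKENDS = ["127.0.0.1:8080", "127.0.0.1:8081"]


def pick_backend(client_ip, backends=BACKENDS):
    """Choose a backend from a hash of the client's IPv4 address."""
    # Key on the first three octets, as ip_hash does for IPv4.
    key = ".".join(client_ip.split(".")[:3])
    # crc32 is only a stand-in hash chosen for this illustration.
    return backends[zlib.crc32(key.encode("ascii")) % len(backends)]


if __name__ == "__main__":
    for ip in ("192.168.152.1", "192.168.152.200", "10.0.0.7"):
        print(ip, "->", pick_backend(ip))
```

Because the choice depends only on the client address, repeated requests from one browser never alternate between the two Tomcats, which is why the walkthrough leaves ip_hash disabled when demonstrating load balancing.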

Note: how nginx parses the configuration file

2.4 Start nginx

/usr/local/nginx/sbin/nginx

2.5 Map the domain name in C:\Windows\System32\drivers\etc\hosts on the local machine

192.168.152.129 www.dbspread.com

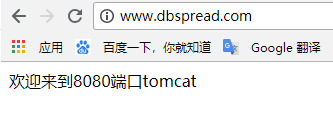

2.6 Enter www.dbspread.com in the browser's address bar to see the result

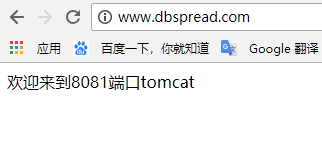

Result on the first visit:

Result after refreshing the page:
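With both servers in upstream web1 at weight=1 and ip_hash disabled, successive requests alternate between the two Tomcats, which is why a refresh shows the other page. The following is a minimal sketch of smooth weighted round-robin, the selection scheme nginx's default balancing is generally described as using; it is a model for illustration, not nginx source, and the function name and dictionary input are assumptions of this example.

```python
def smooth_weighted_round_robin(servers, count):
    """Return the order in which `count` requests are assigned.

    `servers` maps a server name to its configured weight.
    """
    current = {name: 0 for name in servers}
    total = sum(servers.values())
    picks = []
    for _ in range(count):
        # Every server gains its own weight on each round.
        for name, weight in servers.items():
            current[name] += weight
        # The server with the highest running score wins the request
        # (ties go to the server listed first).
        chosen = max(current, key=current.get)
        # The winner is pushed back by the total weight, so heavier
        # servers win more often without winning in long bursts.
        current[chosen] -= total
        picks.append(chosen)
    return picks


if __name__ == "__main__":
    equal = {"127.0.0.1:8080": 1, "127.0.0.1:8081": 1}
    print(smooth_weighted_round_robin(equal, 4))
```

With equal weights the picks simply alternate between 8080 and 8081. Raising one server's weight (for example to 5 against 1 and 1 in a three-server pool) makes it win five of every seven requests while the others are still interleaved.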