    # -*- coding: utf-8 -*-
    """
    Created on Thu Nov 1 17:51:28 2018

    @author: zhen
    """
    # Note: this script uses the TensorFlow 1.x API (placeholders, sessions).

    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    max_steps = 1000
    learning_rate = 0.001
    dropout = 0.9  # keep probability used during training
    data_dir = 'C:/Users/zhen/MNIST_data_bak/'
    log_dir = 'C:/Users/zhen/MNIST_log_bak/'

    mnist = input_data.read_data_sets(data_dir, one_hot=True)  # load the data with one-hot encoded labels
    sess = tf.InteractiveSession()  # create a session installed as the default session

    with tf.name_scope('input'):
        x = tf.placeholder(tf.float32, [None, 784], name='x-input')
        y_ = tf.placeholder(tf.float32, [None, 10], name='y-input')

    with tf.name_scope('input_reshape'):
        image_shaped_input = tf.reshape(x, [-1, 28, 28, 1])
        # Image summary built from a 4-D tensor; shows up to 10 images in TensorBoard
        tf.summary.image('input', image_shaped_input, 10)

    # Initialization helpers for the network parameters
    def weight_variable(shape):
        # Random values from a truncated normal distribution
        # (like tf.random_normal, but values far from the mean are re-drawn)
        initial = tf.truncated_normal(shape, stddev=0.1)
        return tf.Variable(initial)

    def bias_variable(shape):
        initial = tf.constant(0.1, shape=shape)
        return tf.Variable(initial)

    # Attach summary statistics to a Variable
    def variable_summaries(var):
        with tf.name_scope('summaries'):
            mean = tf.reduce_mean(var)  # mean
            tf.summary.scalar('mean', mean)  # scalar summary protocol buffer for visualization
            with tf.name_scope('stddev'):
                stddev = tf.sqrt(tf.reduce_mean(tf.square(var - mean)))
            tf.summary.scalar('stddev', stddev)
            tf.summary.scalar('max', tf.reduce_max(var))
            tf.summary.scalar('min', tf.reduce_min(var))
            tf.summary.histogram('histogram', var)  # histogram of the values

    # Build one fully connected layer of the MLP
    def nn_layer(input_tensor, input_dim, output_dim, layer_name, act=tf.nn.relu):
        with tf.name_scope(layer_name):
            with tf.name_scope('weights'):
                weights = weight_variable([input_dim, output_dim])
                variable_summaries(weights)
            with tf.name_scope('biases'):
                biases = bias_variable([output_dim])
                variable_summaries(biases)
            with tf.name_scope('Wx_plus_b'):
                preactivate = tf.matmul(input_tensor, weights) + biases  # matrix multiplication plus bias
                tf.summary.histogram('pre_activations', preactivate)
            activations = act(preactivate, name='activation')  # activation function (ReLU by default)
            tf.summary.histogram('activations', activations)
            return activations

    hidden1 = nn_layer(x, 784, 500, 'layer1')

    with tf.name_scope('dropout'):
        keep_prob = tf.placeholder(tf.float32)
        tf.summary.scalar('dropout_keep_probability', keep_prob)
        # Randomly drop some units during training to prevent or reduce overfitting
        dropped = tf.nn.dropout(hidden1, keep_prob)

    # Output layer: identity activation, so y holds the raw logits
    y = nn_layer(dropped, 500, 10, 'layer2', act=tf.identity)

    with tf.name_scope('cross_entropy'):
        diff = tf.nn.softmax_cross_entropy_with_logits(logits=y, labels=y_)  # per-example cross-entropy
        with tf.name_scope('total'):
            cross_entropy = tf.reduce_mean(diff)
    tf.summary.scalar('cross_entropy', cross_entropy)

    with tf.name_scope('train'):
        # Adam optimizer: adapts the step size using first- and second-moment estimates of the gradient
        train_step = tf.train.AdamOptimizer(learning_rate).minimize(cross_entropy)

    with tf.name_scope('accuracy'):
        with tf.name_scope('correct_prediction'):
            correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
        with tf.name_scope('accuracy'):
            accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
    tf.summary.scalar('accuracy', accuracy)

    # Merge all summaries defined above; summary ops are lazy and only run when evaluated by sess.run
    merged = tf.summary.merge_all()
    train_writer = tf.summary.FileWriter(log_dir + 'train', sess.graph)
    test_writer = tf.summary.FileWriter(log_dir + 'test')  # visualization data is stored in log files
    tf.global_variables_initializer().run()

    def feed_dict(train):
        if train:
            xs, ys = mnist.train.next_batch(100)
            k = dropout
        else:
            xs, ys = mnist.test.images, mnist.test.labels
            k = 1.0
        return {x: xs, y_: ys, keep_prob: k}

    saver = tf.train.Saver()
    for i in range(max_steps):
        if i % 100 == 0:
            # Every 100th step: evaluate on the test set and log the summaries
            summary, acc = sess.run([merged, accuracy], feed_dict=feed_dict(False))
            test_writer.add_summary(summary, i)
            print('Accuracy at step %s: %s' % (i, acc))
        else:
            if i % 100 == 99:
                # Steps 99, 199, ...: train with full tracing and save a checkpoint
                run_options = tf.RunOptions(trace_level=tf.RunOptions.FULL_TRACE)  # TensorFlow run options
                run_metadata = tf.RunMetadata()  # run metadata: records timing, memory usage, etc.
                summary, _ = sess.run([merged, train_step],
                                      feed_dict=feed_dict(True),
                                      options=run_options,
                                      run_metadata=run_metadata)
                train_writer.add_run_metadata(run_metadata, 'step%03d' % i)
                train_writer.add_summary(summary, i)
                saver.save(sess, log_dir + 'model.ckpt', i)
            else:
                summary, _ = sess.run([merged, train_step], feed_dict=feed_dict(True))
                train_writer.add_summary(summary, i)

    train_writer.close()
    test_writer.close()
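
The training loop in the script has three branches depending on the step number. As a rough illustration only, the pure-Python sketch below reproduces that schedule without TensorFlow; the function name `step_action` and its return labels are hypothetical and do not appear in the original script.

```python
def step_action(i, every=100):
    """Return which branch of the training loop handles step i."""
    if i % every == 0:
        return "evaluate"      # test-set accuracy, written by test_writer
    if i % every == every - 1:
        return "train+trace"   # training step with run metadata and a checkpoint
    return "train"             # ordinary training step, written by train_writer

# Count how often each branch runs over max_steps = 1000 iterations.
counts = {}
for i in range(1000):
    action = step_action(i)
    counts[action] = counts.get(action, 0) + 1

print(counts)
```

Note that the evaluation steps do not run `train_step`, so with these settings only 990 of the 1000 iterations actually update the weights.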

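After the program finishes, run the TensorBoard command in a DOS command window or a Linux terminal. Its `logdir` parameter is the directory where your program saves its log files (`log_dir` in the script above).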
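
The exact command is not preserved in this post. As a hedged sketch, the snippet below assembles a typical TensorBoard invocation from Python; the helper name `tensorboard_command` is hypothetical, and it assumes the `tensorboard` executable is on your `PATH` and that the default port 6006 is acceptable.

```python
def tensorboard_command(log_dir, port=6006):
    """Build the argument list for launching TensorBoard on log_dir."""
    return ["tensorboard", "--logdir", log_dir, "--port", str(port)]

cmd = tensorboard_command("C:/Users/zhen/MNIST_log_bak/")
print(" ".join(cmd))

# To launch it from Python instead of a terminal (blocks until interrupted):
# import subprocess
# subprocess.run(cmd)
```

Once TensorBoard is running, open the address it prints (by default on port 6006) in a browser.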

The result looks like this:

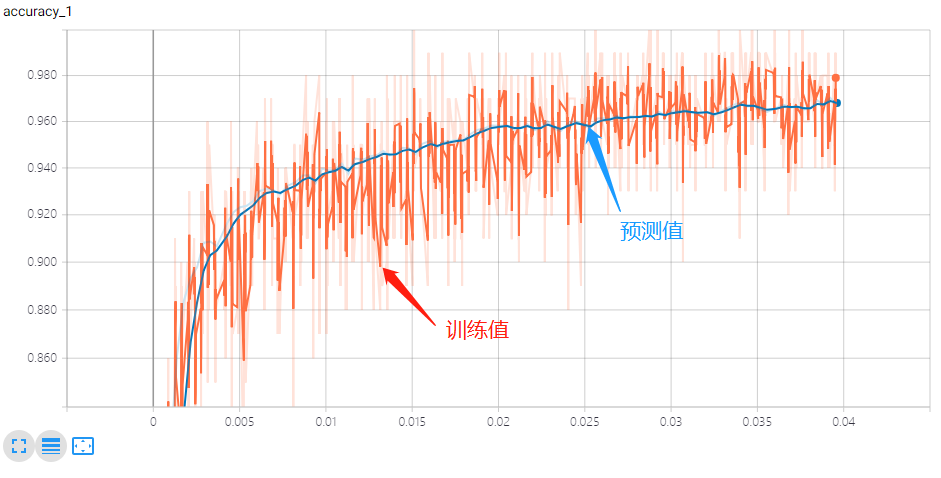

Results:

Single-layer neural network:

Two-layer neural network:

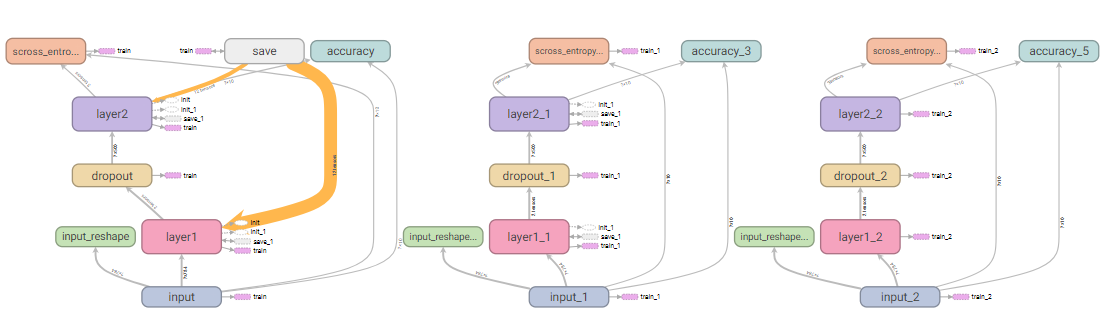

Neural network computation graph:

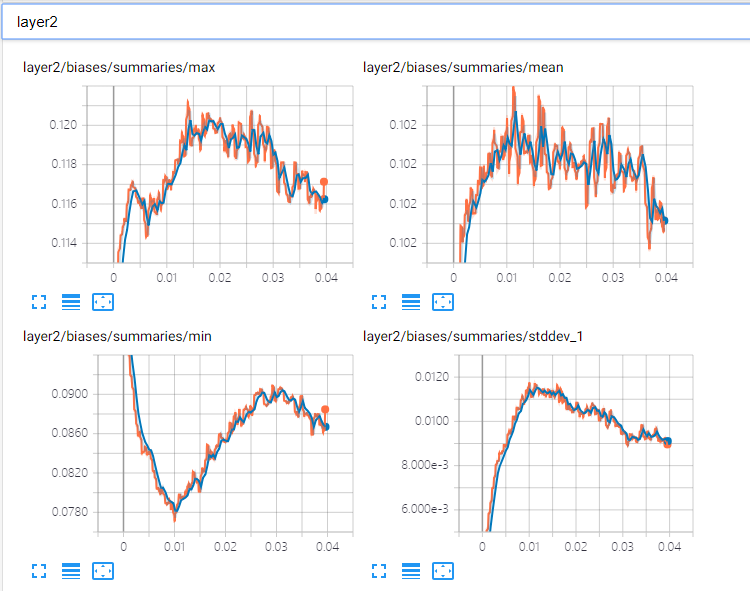

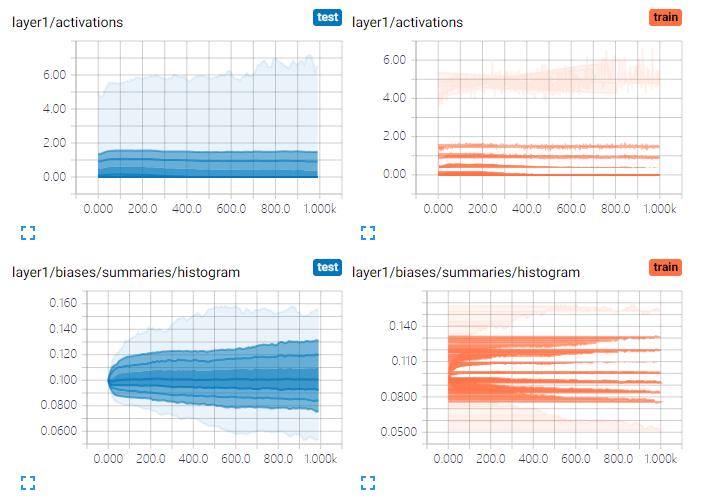

Distribution of neuron outputs:

Histogram of the data distributions:

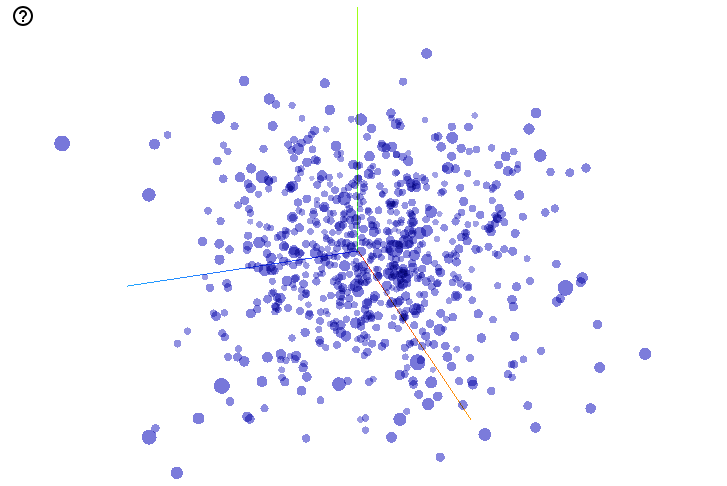
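
The scalar values logged alongside each histogram come from `variable_summaries` in the script. The sketch below mirrors those four statistics in plain Python on a small made-up list, to show that the standard deviation is the population form (dividing by n, not n - 1); the function name `variable_stats` and the sample values are illustrative assumptions.

```python
import math

def variable_stats(values):
    """Compute the same statistics that variable_summaries logs for a tensor."""
    n = len(values)
    mean = sum(values) / n
    # Population standard deviation: sqrt(mean((v - mean)^2))
    stddev = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    return {"mean": mean, "stddev": stddev, "max": max(values), "min": min(values)}

stats = variable_stats([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0])
print(stats)  # mean 5.0, stddev 2.0, max 9.0, min 2.0
```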

Data visualization: