```python
# -*- coding: utf-8 -*-
"""
Created on Mon Oct 22 17:23:49 2018

@author: zhen
"""
# Requires TensorFlow 1.x: tf.placeholder, tf.Session and the
# tensorflow.examples.tutorials.mnist helper are not available in TensorFlow 2.
import numpy as np
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

# Construction phase: build the graph
n_inputs = 28 * 28  # MNIST images are 28x28 pixels, flattened
n_hidden1 = 300
n_hidden2 = 100
n_outputs = 10

x = tf.placeholder(tf.float32, shape=(None, n_inputs), name='x')
y = tf.placeholder(tf.int64, shape=(None), name='y')

# Build the network: two hidden layers with ReLU activation, softmax on the output
def neuron_layer(x, n_neurons, name, activation=None):
    with tf.name_scope(name):
        # The second dimension of the input matrix is the number of input connections
        n_inputs = int(x.get_shape()[1])
        stddev = 2 / np.sqrt(n_inputs)
        init = tf.truncated_normal((n_inputs, n_neurons), stddev=stddev)
        w = tf.Variable(init, name='weights')
        b = tf.Variable(tf.zeros([n_neurons]), name='biases')
        z = tf.matmul(x, w) + b
        if activation == 'relu':
            return tf.nn.relu(z)
        else:
            return z

with tf.name_scope("dnn"):
    # Input layer -> first hidden layer
    hidden1 = neuron_layer(x, n_hidden1, "hidden1", activation="relu")
    # First hidden layer -> second hidden layer
    hidden2 = neuron_layer(hidden1, n_hidden2, "hidden2", activation="relu")
    # Second hidden layer -> output layer (raw logits; softmax is applied inside the loss)
    logits = neuron_layer(hidden2, n_outputs, "outputs")

with tf.name_scope("loss"):
    entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(labels=y, logits=logits)
    loss = tf.reduce_mean(entropy, name="loss")

learning_rate = 0.01

with tf.name_scope("train"):
    optimizer = tf.train.GradientDescentOptimizer(learning_rate)
    training_op = optimizer.minimize(loss)

with tf.name_scope("eval"):
    # A prediction counts as correct if the true label has the highest logit
    correct = tf.nn.in_top_k(logits, y, 1)
    accuracy = tf.reduce_mean(tf.cast(correct, tf.float32))

init = tf.global_variables_initializer()
saver = tf.train.Saver()

# Execution phase: run the graph
mnist = input_data.read_data_sets("C:/Users/zhen/MNIST_data_bak/")
n_epochs = 20
batch_size = 50

with tf.Session() as sess:
    init.run()
    for epoch in range(n_epochs):
        for iteration in range(mnist.train.num_examples // batch_size):
            x_batch, y_batch = mnist.train.next_batch(batch_size)
            sess.run(training_op, feed_dict={x: x_batch, y: y_batch})
        # Training accuracy on the last mini-batch; test accuracy on the full test set
        acc_train = accuracy.eval(feed_dict={x: x_batch, y: y_batch})
        acc_test = accuracy.eval(feed_dict={x: mnist.test.images, y: mnist.test.labels})
        print(epoch, "train accuracy:", acc_train, "test accuracy:", acc_test)
    save_path = saver.save(sess, "./my_dnn_model_final.ckpt")
```
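The graph above can be mirrored in NumPy as a minimal sketch of one forward pass through the same 784 → 300 → 100 → 10 architecture, including the loss and the accuracy metric. This is an illustration under stated assumptions, not the author's code. The helpers `truncated_normal`, `sparse_softmax_cross_entropy` and `accuracy` are hypothetical names. The sampler re-draws values beyond two standard deviations, which is the rule `tf.truncated_normal` follows. `in_top_k(..., 1)` is approximated by an `argmax` comparison, which differs only when logits tie. The input is a random batch rather than MNIST, and the weights are freshly initialized, so no training takes place.

```python
import numpy as np

rng = np.random.default_rng(42)

def truncated_normal(shape, stddev):
    """Hypothetical helper: N(0, stddev) samples, re-drawing any value
    farther than 2 * stddev from zero (the rule tf.truncated_normal uses)."""
    values = rng.normal(0.0, stddev, size=shape)
    out_of_range = np.abs(values) > 2 * stddev
    while out_of_range.any():
        values[out_of_range] = rng.normal(0.0, stddev, size=out_of_range.sum())
        out_of_range = np.abs(values) > 2 * stddev
    return values

def neuron_layer(x, n_neurons, activation=None):
    """Fully connected layer z = xW + b with the same init as the TF version."""
    n_inputs = x.shape[1]
    stddev = 2 / np.sqrt(n_inputs)
    w = truncated_normal((n_inputs, n_neurons), stddev)
    b = np.zeros(n_neurons)
    z = x @ w + b
    return np.maximum(z, 0.0) if activation == "relu" else z

def sparse_softmax_cross_entropy(labels, logits):
    """Hypothetical helper: per-example -log softmax(logits)[label].
    Subtracting the row max keeps exp() from overflowing."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels]

def accuracy(logits, labels):
    """Fraction of rows whose largest logit is at the true label
    (argmax stand-in for in_top_k with k=1; differs only on ties)."""
    return np.mean(np.argmax(logits, axis=1) == labels)

# Random stand-in for one mini-batch of 50 flattened 28x28 images
x_batch = rng.random((50, 28 * 28))
y_batch = rng.integers(0, 10, size=50)

hidden1 = neuron_layer(x_batch, 300, activation="relu")
hidden2 = neuron_layer(hidden1, 100, activation="relu")
logits = neuron_layer(hidden2, 10)  # raw logits, no softmax here
loss = sparse_softmax_cross_entropy(y_batch, logits).mean()
print(logits.shape, loss, accuracy(logits, y_batch))
```

Computing the log-softmax from row-max-shifted logits avoids overflow in `exp`. That is one reason the script feeds raw `logits` to a fused softmax-plus-cross-entropy op instead of applying softmax in the output layer.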

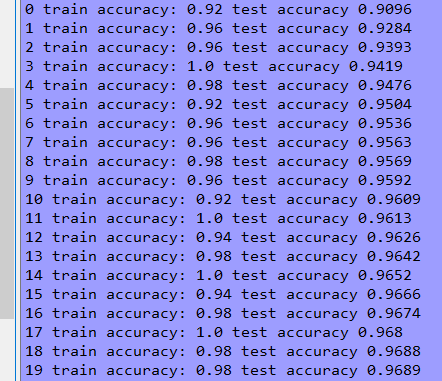

Result:

Analysis:

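The results show that both training and test accuracy are high overall. They fluctuate noticeably in the early epochs and gradually stabilize in later ones, which indicates that training converges. Note that the training accuracy is computed only on the last mini-batch of 50 samples in each epoch. It is therefore noisier than the test accuracy, which is measured on the full test set.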
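Because `acc_train` comes from a single 50-sample mini-batch while `acc_test` uses all 10,000 MNIST test images, the two numbers carry very different sampling noise. A rough way to quantify this is the binomial standard error `sqrt(p * (1 - p) / n)`, which assumes that per-image predictions behave like independent trials. The sketch below uses a hypothetical true accuracy `p = 0.98`, chosen for illustration and not taken from the results.

```python
import math

def accuracy_std_error(p, n):
    """Standard error of an accuracy estimate from n independent samples
    when the true accuracy is p (binomial proportion)."""
    return math.sqrt(p * (1 - p) / n)

p = 0.98  # hypothetical true accuracy, not a measured result
for n in (50, 10_000):
    print(n, round(accuracy_std_error(p, n), 4))
# prints:
# 50 0.0198
# 10000 0.0014
```

On 50 samples the accuracy can also only move in steps of 1/50 = 0.02. Under these assumptions, a swing of a couple of percentage points in `acc_train` between epochs is expected even after the model has settled.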