Installing third-party libraries


1. requests. Download link:

https://github.com/requests/requests

Installation:

Install with pip:

    pip3 install requests

or

    easy_install requests

(Neither method worked for me, because something was wrong with my cmd environment.)

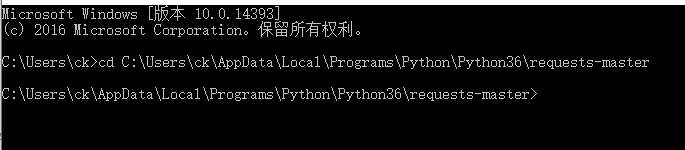

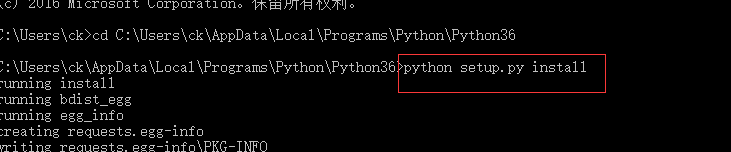

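I took a more brute-force approach: download the archive and extract it directly into the Python installation directory.

Then open cmd in the extracted directory

and run:

    python setup.py install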

2. BeautifulSoup

Extract the archive into the Python installation directory, then run these commands in cmd, in order:

    setup.py build

    setup.py install
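After either installation route, it helps to confirm that Python can actually find the packages. The snippet below is a minimal sketch using only the standard library; the import name `bs4` assumes that BeautifulSoup 4 was the version installed, which the text above does not state.

```python
import importlib.util


def is_installed(module_name):
    """Return True if `module_name` can be found on the import path."""
    return importlib.util.find_spec(module_name) is not None


# 'bs4' is the import name of BeautifulSoup 4 (an assumption about the
# version installed above); 'requests' is the import name of requests.
for name in ("requests", "bs4"):
    status = "installed" if is_installed(name) else "missing"
    print(name, status)
```

If a package is reported as missing, the install step most likely ran against a different Python interpreter than the one running this check.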

3. Let's start with a small example to get a feel for the library

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    request = requests.get('http://www.baidu.com')
    print('type(request)', type(request))
    print('request.status_code', request.status_code)
    print('request.encoding', request.encoding)
    print('request.cookies', request.cookies)
    print('request.text', request.text)

The code above requests the Baidu home page, then prints the type of the returned object, the status code, the encoding, the cookies, and the body.

The output is as follows:

    type(request) <class 'requests.models.Response'>
    request.status_code 200
    request.encoding ISO-8859-1
    request.cookies <RequestsCookieJar[<Cookie BDORZ=27315 for .baidu.com/>]>
    request.text <!DOCTYPE html>
    <!--STATUS OK--><html> <head><meta http-equiv=content-type content=text/html;charset=utf-8><meta http-equiv=X-UA-Compatible content=IE=Edge><meta content=always name=referrer><link rel=stylesheet type=text/css href=http://s1.bdstatic.com/r/www/cache/bdorz/baidu.min.css><title>百度一下,你就知道</title></head>
    <body link=#0000cc> <div id=wrapper> <div id=head> <div class=head_wrapper> <div class=s_form> <div class=s_form_wrapper>
    <div id=lg> <img hidefocus=true src=//www.baidu.com/img/bd_logo1.png width=270 height=129> </div>
    <form id=form name=f action=//www.baidu.com/s class=fm> <input type=hidden name=bdorz_come value=1> <input type=hidden name=ie value=utf-8> <input type=hidden name=f value=8> <input type=hidden name=rsv_bp value=1> <input type=hidden name=rsv_idx value=1> <input type=hidden name=tn value=baidu><span class="bg s_ipt_wr"><input id=kw name=wd class=s_ipt value maxlength=255 autocomplete=off autofocus></span><span class="bg s_btn_wr"><input type=submit id=su value=百度一下 class="bg s_btn"></span> </form> </div> </div>
    <div id=u1> <a href=http://news.baidu.com name=tj_trnews class=mnav>新闻</a> <a href=http://www.hao123.com name=tj_trhao123 class=mnav>hao123</a> <a href=http://map.baidu.com name=tj_trmap class=mnav>地图</a> <a href=http://v.baidu.com name=tj_trvideo class=mnav>视频</a> <a href=http://tieba.baidu.com name=tj_trtieba class=mnav>贴吧</a>
    <noscript> <a href=http://www.baidu.com/bdorz/login.gif?login&tpl=mn&u=http%3A%2F%2Fwww.baidu.com%2f%3fbdorz_come%3d1 name=tj_login class=lb>登录</a> </noscript>
    <script>document.write('<a href="http://www.baidu.com/bdorz/login.gif?login&tpl=mn&u='+ encodeURIComponent(window.location.href+ (window.location.search === "" ? "?" : "&")+ "bdorz_come=1")+ '" name="tj_login" class="lb">登录</a>');</script>
    <a href=//www.baidu.com/more/ name=tj_briicon class=bri style="display: block;">更多产品</a> </div> </div> </div>
    <div id=ftCon> <div id=ftConw> <p id=lh> <a href=http://home.baidu.com>关于百度</a> <a href=http://ir.baidu.com>About Baidu</a> </p> <p id=cp>©2017 Baidu <a href=http://www.baidu.com/duty/>使用百度前必读</a> <a href=http://jianyi.baidu.com/ class=cp-feedback>意见反馈</a> 京ICP证030173号 <img src=//www.baidu.com/img/gs.gif> </p> </div> </div> </div> </body> </html>
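The reported encoding is ISO-8859-1 even though the page's meta tag declares charset=utf-8. When requests guesses the charset this way, Chinese text in request.text can come out garbled. The sketch below reproduces the effect with plain strings, without any network access; the sample string is only an illustration.

```python
# coding: utf-8
title = '百度一下'

raw_bytes = title.encode('utf-8')           # bytes as the server sends them
garbled = raw_bytes.decode('iso-8859-1')    # result of a wrong charset guess
repaired = garbled.encode('iso-8859-1').decode('utf-8')

print(garbled == title)    # False: the text is mangled
print(repaired == title)   # True: re-decoding as UTF-8 restores it

# With requests, the usual fix is to override the encoding before reading
# the body, for example: request.encoding = 'utf-8'
```

Setting request.encoding before accessing request.text is generally simpler than repairing the string afterwards.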

Basic requests

The requests library provides a function for each of the common HTTP methods. For example:

    request = requests.get(url)
    request = requests.post(url)
    request = requests.put(url)
    request = requests.delete(url)
    request = requests.head(url)
    request = requests.options(url)
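To see what each of these calls would send without touching the network, a request can be built and prepared by hand. This is a small sketch, assuming an illustrative URL that does not appear in the original text; `requests.Request(...).prepare()` constructs the request but does not send it.

```python
import requests

url = 'http://httpbin.org/anything'  # illustrative URL (assumption)

prepared_requests = []
for method in ('GET', 'POST', 'PUT', 'DELETE', 'HEAD', 'OPTIONS'):
    # prepare() builds the final method and URL without sending anything
    prepared = requests.Request(method, url).prepare()
    prepared_requests.append(prepared)
    print(prepared.method, prepared.url)
```

The helper functions such as requests.get and requests.options are shortcuts over the generic requests.request(method, url) call.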

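GET requests

Basic GET request

The most basic GET request calls the get method directly; query-string parameters are passed as a dictionary through params.

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    params = {
        'key1': 'value1',
        'key2': 'value2'
    }
    request = requests.get('http://httpbin.org/get', params=params)
    print(request.url)

Output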

http://httpbin.org/get?key1=value1&key2=value2
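The way params is turned into a query string can be checked offline by preparing the request instead of sending it. The sketch below also shows two behaviors not covered above: a list value produces a repeated key, and a value of None is left out. The extra keys are made up for illustration.

```python
import requests

params = {
    'key1': 'value1',
    'key2': ['a', 'b'],   # a list becomes a repeated key
    'key3': None,         # None values are dropped from the query string
}
prepared = requests.Request('GET', 'http://httpbin.org/get', params=params).prepare()
query_url = prepared.url
print(query_url)
```

Values are also percent-encoded as needed, so there is no reason to build the query string by hand.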

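GET JSON

We can read the content of the server's response. Taking the GitHub timeline as an example:

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    request = requests.get('https://api.github.com/events')
    print('request.text', request.text)
    # json is a method and must be called with parentheses
    print('request.json()', request.json())

Output

    request.text [{"id":"5435287313","type":"PushEvent","actor":=...}]

request.json() prints the same events parsed into Python objects (a list of dictionaries). Note that json is a method: printing request.json without parentheses only shows <bound method Response.json of <Response [200]>>.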

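Getting the raw socket content

To get the raw socket response from the server, use request.raw. This requires setting stream=True in the initial request.

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    request = requests.get('https://api.github.com/events', stream=True)
    print('request.raw', request.raw)
    print('request.raw.read(10)', request.raw.read(10))

This returns the raw content of the response:

    request.raw <requests.packages.urllib3.response.HTTPResponse object at 0x106b5f9b0>
    request.raw.read(10) b'\x1f\x8b\x08\x00\x00\x00\x00\x00\x00\x03'

The bytes start with \x1f\x8b, the gzip signature: raw exposes the body as it arrived on the wire, before requests decompresses it.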

Adding headers

Pass a dictionary through the headers parameter to add request headers.

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    params = {
        'key1': 'value1',
        'key2': 'value2'
    }
    headers = {
        'content-type': 'application/json'
    }
    request = requests.get('http://httpbin.org/get', params=params, headers=headers)
    print(request.url)

Output

http://httpbin.org/get?key1=value1&key2=value2
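Printing request.url does not show whether the headers were applied. One way to inspect them, sketched below, is to prepare the request and read its headers attribute, which is case-insensitive. The User-Agent value is a made-up example, not something from the original text.

```python
import requests

headers = {
    'content-type': 'application/json',
    'User-Agent': 'my-crawler/0.1',   # hypothetical value for illustration
}
prepared = requests.Request('GET', 'http://httpbin.org/get', headers=headers).prepare()

# Header names are matched case-insensitively
print(prepared.headers['Content-Type'])
print(prepared.headers['user-agent'])
```

After a real request has been sent, the same information is available as request.request.headers.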

POST requests

A POST request usually carries some parameters. The most basic way to pass them is the data parameter, which sends the dictionary as form fields.

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    data = {
        'key1': 'value1',
        'key2': 'value2'
    }
    request = requests.post('http://httpbin.org/post', data=data)
    print('request.text', request.text)

Output

    request.text {
      "args": {},
      "data": "",
      "files": {},
      "form": {
        "key1": "value1",
        "key2": "value2"
      },
      "headers": {
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate",
        "Content-Length": "23",
        "Content-Type": "application/x-www-form-urlencoded",
        "Host": "httpbin.org",
        "User-Agent": "python-requests/2.13.0"
      },
      "json": null,
      "origin": "120.236.174.172",
      "url": "http://httpbin.org/post"
    }
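The Content-Type and Content-Length values echoed by httpbin can be reproduced locally. The following sketch prepares the same POST without sending it and prints the encoded body and the headers that requests derives from the data dictionary.

```python
import requests

data = {'key1': 'value1', 'key2': 'value2'}
prepared = requests.Request('POST', 'http://httpbin.org/post', data=data).prepare()

form_body = prepared.body
print(form_body)                            # the URL-encoded form fields
print(prepared.headers['Content-Type'])
print(prepared.headers['Content-Length'])
```

A dictionary passed as data is always form-encoded; the body is 23 characters long, which matches the Content-Length in the output above.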

POST JSON

Sometimes the data to send is not form data and the server expects JSON instead. In that case json.dumps() can serialize the dictionary before it is passed to data.

    #!/usr/bin/env python3
    # coding: utf-8
    import json
    import requests

    data = {
        'some': 'data'
    }
    request = requests.post('http://httpbin.org/post', data=json.dumps(data))
    print('request.text', request.text)

Output

    request.text {
      "args": {},
      "data": "{\"some\": \"data\"}",
      "files": {},
      "form": {},
      "headers": {
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate",
        "Content-Length": "16",
        "Host": "httpbin.org",
        "User-Agent": "python-requests/2.13.0"
      },
      "json": {
        "some": "data"
      },
      "origin": "120.236.174.172",
      "url": "http://httpbin.org/post"
    }

Since version 2.4.2, the dictionary can be passed directly through the json parameter and requests encodes it for you.

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    data = {
        'some': 'data'
    }
    # request = requests.post('http://httpbin.org/post', data=json.dumps(data))
    request = requests.post('http://httpbin.org/post', json=data)
    print('request.text', request.text)
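The two approaches produce the same body but differ in one header. As a rough comparison, the sketch below prepares both variants offline: with data=json.dumps(...) no Content-Type is set (as the httpbin output above also shows), while json= adds application/json automatically.

```python
import json
import requests

payload = {'some': 'data'}
url = 'http://httpbin.org/post'

with_data = requests.Request('POST', url, data=json.dumps(payload)).prepare()
with_json = requests.Request('POST', url, json=payload).prepare()

print(with_data.headers.get('Content-Type'))   # no Content-Type is set
print(with_json.headers.get('Content-Type'))   # set by requests
print(json.loads(with_json.body) == payload)   # body is the serialized dict
```

If a server insists on a JSON Content-Type, the json parameter is therefore the safer choice; with data, the header would have to be added by hand.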

Uploading files

To upload a file, use the files parameter.

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    # Open in binary mode; the with block closes the file after the upload
    with open('test.txt', 'rb') as f:
        files = {'file': f}
        request = requests.post('http://httpbin.org/post', files=files)
    print('request.text', request.text)

Output

    request.text {
      "args": {},
      "data": "",
      "files": {
        "file": "hello word! "
      },
      "form": {},
      "headers": {
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate",
        "Content-Length": "156",
        "Content-Type": "multipart/form-data; boundary=e76e934f387f4013a0cb03f0cc7f636d",
        "Host": "httpbin.org",
        "User-Agent": "python-requests/2.13.0"
      },
      "json": null,
      "origin": "120.236.174.172",
      "url": "http://httpbin.org/post"
    }
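The files parameter also accepts a tuple of (filename, file object, content type), which gives control over the name and MIME type sent to the server. The sketch below uses an in-memory file so it runs without test.txt on disk and without sending anything; the file content is an arbitrary example.

```python
import io
import requests

# In-memory stand-in for a file on disk (illustrative content)
file_obj = io.BytesIO(b'hello world!\n')
files = {'file': ('test.txt', file_obj, 'text/plain')}

prepared = requests.Request('POST', 'http://httpbin.org/post', files=files).prepare()
multipart_body = prepared.body

print(prepared.headers['Content-Type'])          # multipart/form-data; boundary=...
print(b'filename="test.txt"' in multipart_body)  # the filename travels in the body
```

The boundary value is generated per request, so it differs from the one in the output above.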

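Streaming uploads

requests supports streaming uploads, which lets you send large streams or files without first reading them into memory. To stream an upload, simply provide a file-like object as the request body.

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    with open('test.txt', 'rb') as f:
        request = requests.post('http://httpbin.org/post', data=f)
    print('request.text', request.text)

The file content arrives the same way, but it is sent as the raw request body rather than as a multipart form field.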

Cookies

If a response contains cookies, they can be read from its cookies attribute. The cookies parameter sends cookies to the server.

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    request = requests.get('http://httpbin.org/cookies')
    print('request.cookies', request.cookies)
    print('request.text', request.text)

    cookies = {
        'cookies_are': 'working'
    }
    request = requests.get('http://httpbin.org/cookies', cookies=cookies)
    print('request.cookies', request.cookies)
    print('request.text', request.text)

Output

    request.cookies <RequestsCookieJar[]>
    request.text {
      "cookies": {}
    }
    request.cookies <RequestsCookieJar[]>
    request.text {
      "cookies": {
        "cookies_are": "working"
      }
    }

Note that the attribute is request.cookies (on the response object), not requests.cookies, which is a module of the library and prints as <module 'requests.cookies' from '/usr/local/lib/python3.6/site-packages/requests/cookies.py'>. The jar is empty in both cases because http://httpbin.org/cookies only echoes the cookies it receives and does not set any.
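For finer control, cookies can be kept in a RequestsCookieJar, where each cookie is tied to a domain and path. The following sketch prepares requests offline and prints the resulting Cookie header; the second cookie and the example.org domain are made up to show that cookies for other domains are not sent.

```python
import requests

url = 'http://httpbin.org/cookies'

# A plain dictionary: every cookie is sent with the request
prepared = requests.Request('GET', url, cookies={'cookies_are': 'working'}).prepare()
dict_header = prepared.headers['Cookie']
print(dict_header)

# A cookie jar: only cookies matching the request's domain and path are sent
jar = requests.cookies.RequestsCookieJar()
jar.set('cookies_are', 'working', domain='httpbin.org', path='/cookies')
jar.set('other', 'value', domain='example.org', path='/')  # hypothetical cookie
prepared = requests.Request('GET', url, cookies=jar).prepare()
jar_header = prepared.headers['Cookie']
print(jar_header)
```

The jar can be passed to requests.get through the same cookies parameter as the dictionary.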

Timeouts

The timeout parameter sets how long, in seconds, requests waits for the server to connect and respond before giving up.

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    request = requests.get('http://www.google.com.hk', timeout=0.01)
    print(request.url)

If the request times out, an exception is raised:

    Traceback (most recent call last):
      File "/usr/local/lib/python3.6/site-packages/requests/packages/urllib3/connection.py", line 141, in _new_conn
        (self.host, self.port), self.timeout, **extra_kw)
      File "/usr/local/lib/python3.6/site-packages/requests/packages/urllib3/util/connection.py", line 83, in create_connection
        raise err
      File "/usr/local/lib/python3.6/site-packages/requests/packages/urllib3/util/connection.py", line 73, in create_connection
        sock.connect(sa)
    socket.timeout: timed out
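In a real script the timeout is usually caught rather than left to crash the program. The helper below is one possible sketch, not part of requests itself: the function name fetch and the default timeout values are assumptions chosen for illustration. A tuple timeout sets the connect and read limits separately.

```python
import requests


def fetch(url, timeout=(3.05, 10)):
    """Return the response, or None if the request fails or times out.

    `timeout` is (connect timeout, read timeout) in seconds; the default
    values here are arbitrary examples.
    """
    try:
        response = requests.get(url, timeout=timeout)
        response.raise_for_status()   # treat 4xx/5xx status codes as failures
        return response
    except requests.exceptions.Timeout:
        print('timed out:', url)
    except requests.exceptions.RequestException as exc:
        # Base class for all other requests errors (connection, invalid URL, ...)
        print('request failed:', exc)
    return None
```

Calling fetch('http://www.google.com.hk', timeout=0.01) would then print a message and return None instead of ending in the traceback shown above.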

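Session objects

In the examples so far, every call started from scratch, as if each request were made from a separate browser. The calls do not share a session, even when they target the same site. For example:

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    requests.get('http://httpbin.org/cookies/set/sessioncookie/123456789')
    request = requests.get('http://httpbin.org/cookies')
    print(request.text)

Output

    {
      "cookies": {}
    }

Clearly the two requests are not in the same session, so the cookie is not available. What if a site requires a persistent session? It is like browsing Taobao in one browser and moving between tabs: all tabs share the same session. requests.Session provides exactly this kind of long-lived session.

The solution is as follows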

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    # Create a long-lived session with requests.Session
    session = requests.Session()
    session.get('http://httpbin.org/cookies/set/sessioncookie/123456789')
    request = session.get('http://httpbin.org/cookies')
    print(request.text)

Output

    {
      "cookies": {
        "sessioncookie": "123456789"
      }
    }

The cookie is now retrieved successfully. That is what a session is for.
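What the session does behind the scenes can be observed offline. In the sketch below the cookie is stored in the session by hand, as a stand-in for the cookie that the /cookies/set/ URL would have set, and prepare_request shows the Cookie header the session would attach to the next request. The with block closes the session when done.

```python
import requests

with requests.Session() as session:
    # Stand-in for the cookie a server response would have stored in the session
    session.cookies.set('sessioncookie', '123456789')

    # prepare_request merges session state into the request without sending it
    prepared = session.prepare_request(
        requests.Request('GET', 'http://httpbin.org/cookies'))
    cookie_header = prepared.headers.get('Cookie')
    print(cookie_header)
```

A plain requests.get call has no such stored state, which is why the first example returned an empty cookies object.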

Since a session persists across requests, it can also hold configuration that applies to every request made through it.

When headers are set on the session and also on an individual request, both sets of headers are sent.

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    headers1 = {
        'test1': 'true'
    }
    headers2 = {
        'test2': 'true'
    }
    session = requests.Session()
    session.headers.update(headers1)
    request = session.get('http://httpbin.org/headers', headers=headers2)
    print(request.text)

Output

    {
      "headers": {
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate",
        "Host": "httpbin.org",
        "Test1": "true",
        "Test2": "true",
        "User-Agent": "python-requests/2.13.0"
      }
    }
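The merge can also go the other way: a session-level header can be dropped for a single request by setting it to None in that request's headers. The sketch below checks both cases offline with prepare_request, reusing the test1 and test2 header names from the example above.

```python
import requests

session = requests.Session()
session.headers.update({'test1': 'true'})
url = 'http://httpbin.org/headers'

# Request-level headers are merged with the session-level ones
both = session.prepare_request(
    requests.Request('GET', url, headers={'test2': 'true'}))

# A value of None removes the session-level header for this request only
without_test1 = session.prepare_request(
    requests.Request('GET', url, headers={'test1': None}))

print('test1' in both.headers, 'test2' in both.headers)
print('test1' in without_test1.headers)
session.close()
```

When the same header is set in both places, the request-level value takes precedence.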

SSL certificate verification

HTTPS sites are everywhere now. Requests can verify the SSL certificate of an HTTPS request, just like a web browser. To check a host's SSL certificate, use the verify parameter (True by default).

At the time of writing, the certificate of 12306 was invalid, so let's test against it.

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    request = requests.get('https://kyfw.12306.cn/otn', verify=True)
    print(request.text)

Output

    Traceback (most recent call last):
      File "/usr/local/lib/python3.6/site-packages/requests/packages/urllib3/contrib/pyopenssl.py", line 436, in wrap_socket
        cnx.do_handshake()
      File "/usr/local/lib/python3.6/site-packages/OpenSSL/SSL.py", line 1426, in do_handshake
        self._raise_ssl_error(self._ssl, result)
      File "/usr/local/lib/python3.6/site-packages/OpenSSL/SSL.py", line 1174, in _raise_ssl_error
        _raise_current_error()
      File "/usr/local/lib/python3.6/site-packages/OpenSSL/_util.py", line 48, in exception_from_error_queue
        raise exception_type(errors)
    OpenSSL.SSL.Error: [('SSL routines', 'ssl3_get_server_certificate', 'certificate verify failed')]

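To skip certificate verification, set verify to False.

Trying the same 12306 URL again with verification turned off:

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    # verify=False disables certificate checking; use it only for testing
    request = requests.get('https://kyfw.12306.cn/otn', verify=False)
    print(request.text)

The request succeeds (urllib3 prints an InsecureRequestWarning).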

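Proxies

To use a proxy, pass the proxies parameter to any request method; this configures the proxy for that single request. The dictionary maps a URL scheme to the address of the proxy.

    #!/usr/bin/env python3
    # coding: utf-8
    import requests

    # Key: scheme of the target URL; value: proxy URL
    # (append :port if the proxy does not listen on the default port)
    proxies = {
        'https': 'http://192.168.199.101'
    }
    request = requests.get('https://www.google.com.hk/', proxies=proxies)
    print(request.text)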
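Which keys requests accepts in the proxies dictionary is easy to get wrong. The sketch below uses select_proxy from requests.utils, a utility function rather than part of the main documented interface, to check offline which proxy would be chosen for a URL. Keys may be a scheme or scheme://hostname; a full URL with a trailing slash does not match.

```python
from requests.utils import select_proxy

url = 'https://www.google.com.hk/'
proxy = 'http://192.168.199.101'

by_scheme = select_proxy(url, {'https': proxy})
by_host = select_proxy(url, {'https://www.google.com.hk': proxy})
full_url_key = select_proxy(url, {'https://www.google.com.hk/': proxy})

print(by_scheme)      # proxy used for every https URL
print(by_host)        # proxy used for this host only
print(full_url_key)   # None: a key with a trailing slash never matches
```

When no key matches, the request is sent without the proxy and no error is raised, so a mistyped key can go unnoticed.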

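The above covers the most commonly used parameters of requests. For anything beyond that, see the API section of the official documentation.

Reference: http://blog.csdn.net/seanliu96/article/details/60511165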