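Hands-on Project: Umeng Project Overview and Environment Setup

Author: Yin Zhengjie

Copyright notice: This is an original work. Reproduction is prohibited; violators will be held legally liable.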

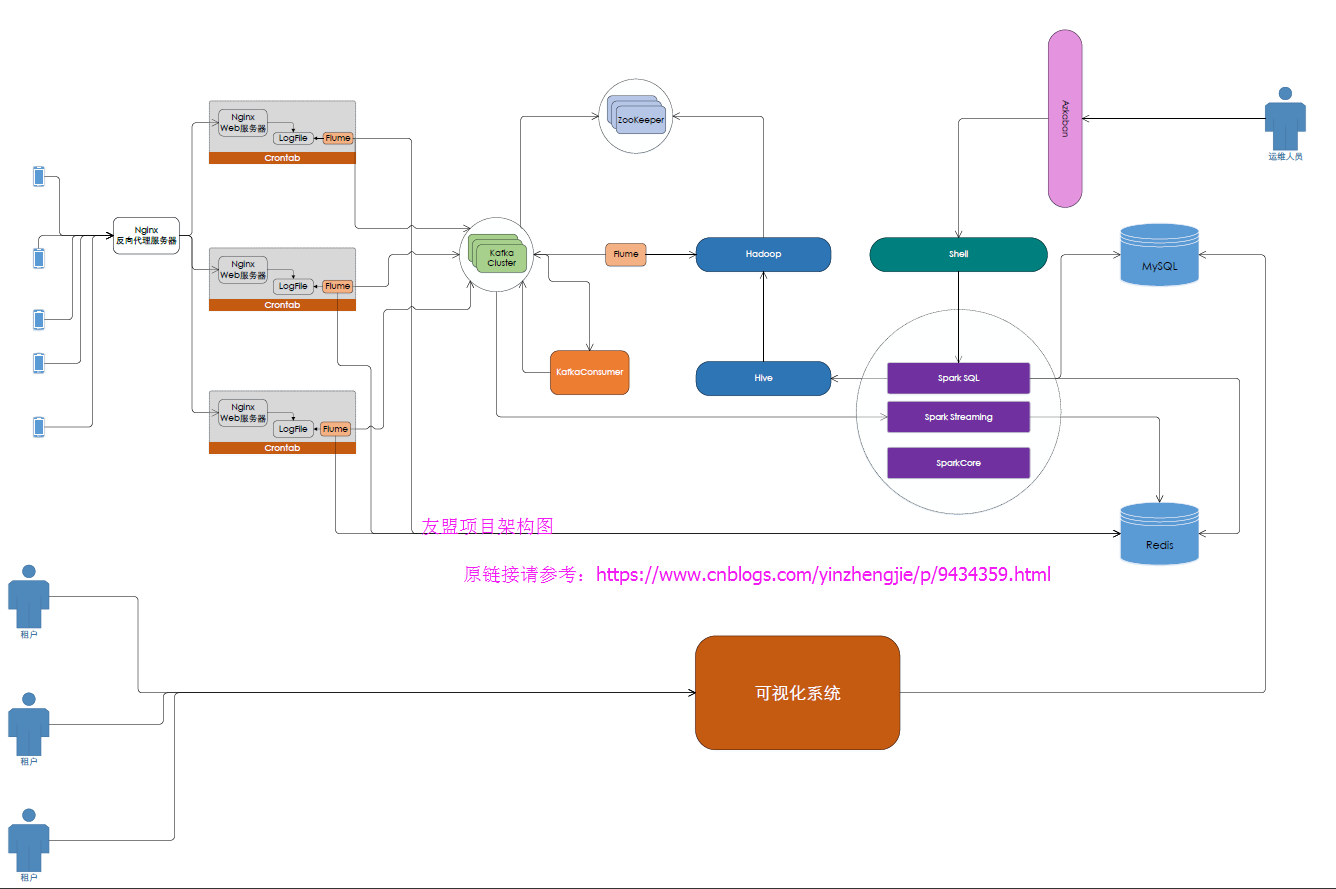

I. Project Architecture Overview

II. Environment Setup

1>. Set up the Nginx reverse proxy

Reference notes: https://www.cnblogs.com/yinzhengjie/p/9428404.html

2>. Start the Hadoop cluster

```
[yinzhengjie@s101 ~]$ start-dfs.sh
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
Starting namenodes on [s101 s105]
s101: starting namenode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-namenode-s101.out
s105: starting namenode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-namenode-s105.out
s104: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s104.out
s102: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s102.out
s103: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s103.out
Starting journal nodes [s102 s103 s104]
s102: starting journalnode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-journalnode-s102.out
s103: starting journalnode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-journalnode-s103.out
s104: starting journalnode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-journalnode-s104.out
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
Starting ZK Failover Controllers on NN hosts [s101 s105]
s101: starting zkfc, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-zkfc-s101.out
s105: starting zkfc, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-zkfc-s105.out
[yinzhengjie@s101 ~]$ 
[yinzhengjie@s101 ~]$ xcall.sh jps
============= s101 jps ============
25169 Application
27156 NameNode
27972 DFSZKFailoverController
28029 Jps
24542 ConsoleConsumer
Command executed successfully
============= s102 jps ============
8609 QuorumPeerMain
11345 Jps
11110 JournalNode
8999 Kafka
11036 DataNode
Command executed successfully
============= s103 jps ============
7444 Kafka
7753 JournalNode
7100 QuorumPeerMain
7676 DataNode
7951 Jps
Command executed successfully
============= s104 jps ============
6770 QuorumPeerMain
7109 Kafka
7336 DataNode
7610 Jps
7419 JournalNode
Command executed successfully
============= s105 jps ============
19397 NameNode
19255 DFSZKFailoverController
19535 Jps
Command executed successfully
[yinzhengjie@s101 ~]$ 
```
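Reading five `jps` listings by eye gets tedious. The sketch below is a hypothetical helper. `check_jps` is a name introduced here and is not part of the author's scripts. It reads `jps` output on stdin and reports any expected daemon that is missing. The daemon names come from the listing above.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original scripts): verify that the
# expected Java daemons appear in `jps` output read from stdin.
# Usage: ssh s102 jps | check_jps DataNode JournalNode
check_jps() {
  local out daemon missing=0
  out=$(cat)                       # capture the whole jps listing
  for daemon in "$@"; do
    # jps prints "<pid> <MainClassName>"; match the class name exactly,
    # so "NameNode" does not match "SecondaryNameNode"
    if ! grep -Eq "^[0-9]+[[:space:]]+${daemon}\$" <<<"$out"; then
      echo "missing: $daemon"
      missing=1
    fi
  done
  return "$missing"
}
```

For the HA layout shown above, the expected sets would be `NameNode DFSZKFailoverController` on s101 and s105, and `DataNode JournalNode` on s102 to s104. For example: `ssh s105 jps | check_jps NameNode DFSZKFailoverController`.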

Create the HDFS directory for the raw logs. It is the base of the Flume HDFS sink's `hdfs.path` configured later:

```
[yinzhengjie@s101 ~]$ hdfs dfs -mkdir -p /home/yinzhengjie/data/logs/umeng/raw-log
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
[yinzhengjie@s101 ~]$ 
[yinzhengjie@s101 ~]$ hdfs dfs -ls -R /home/yinzhengjie/data/logs
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
drwxr-xr-x - yinzhengjie supergroup 0 2018-08-06 23:36 /home/yinzhengjie/data/logs/umeng
drwxr-xr-x - yinzhengjie supergroup 0 2018-08-06 23:36 /home/yinzhengjie/data/logs/umeng/raw-log
[yinzhengjie@s101 ~]$ 
```

Then start YARN and check the daemons again:

```
[yinzhengjie@s101 ~]$ start-yarn.sh
starting yarn daemons
s101: starting resourcemanager, logging to /soft/hadoop-2.7.3/logs/yarn-yinzhengjie-resourcemanager-s101.out
s105: starting resourcemanager, logging to /soft/hadoop-2.7.3/logs/yarn-yinzhengjie-resourcemanager-s105.out
s102: starting nodemanager, logging to /soft/hadoop-2.7.3/logs/yarn-yinzhengjie-nodemanager-s102.out
s104: starting nodemanager, logging to /soft/hadoop-2.7.3/logs/yarn-yinzhengjie-nodemanager-s104.out
s103: starting nodemanager, logging to /soft/hadoop-2.7.3/logs/yarn-yinzhengjie-nodemanager-s103.out
[yinzhengjie@s101 ~]$ 
[yinzhengjie@s101 ~]$ xcall.sh jps
============= s101 jps ============
25169 Application
29281 ResourceManager
27156 NameNode
27972 DFSZKFailoverController
30103 Jps
28523 Application
24542 ConsoleConsumer
Command executed successfully
============= s102 jps ============
8609 QuorumPeerMain
11110 JournalNode
8999 Kafka
12343 Jps
11897 NodeManager
11036 DataNode
Command executed successfully
============= s103 jps ============
8369 Jps
7444 Kafka
7753 JournalNode
8091 NodeManager
7100 QuorumPeerMain
7676 DataNode
Command executed successfully
============= s104 jps ============
6770 QuorumPeerMain
7746 NodeManager
8018 Jps
7109 Kafka
7336 DataNode
7419 JournalNode
Command executed successfully
============= s105 jps ============
19956 NodeManager
19397 NameNode
20293 Jps
19255 DFSZKFailoverController
Command executed successfully
[yinzhengjie@s101 ~]$ 
```

3>. Kafka configuration

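```
[yinzhengjie@s101 ~]$ more `which xcall.sh`
#!/bin/bash
#@author :yinzhengjie
#blog:http://www.cnblogs.com/yinzhengjie
#EMAIL:y1053419035@qq.com

#Check whether the user passed any arguments
if [ $# -lt 1 ];then
        echo "Please pass a command to run"
        exit
fi

#Get the command entered by the user
cmd=$@

for (( i=101;i<=105;i++ ))
do
        #Switch the terminal text to green
        tput setaf 2
        echo ============= s$i $cmd ============
        #Switch the terminal text back to its original color (white/gray)
        tput setaf 7
        #Run the command on the remote host
        ssh s$i $cmd
        #Check whether the command succeeded
        if [ $? == 0 ];then
                echo "Command executed successfully"
        fi
done
[yinzhengjie@s101 ~]$ 
```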
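`xcall.sh` prints a message only when the remote command succeeds. The loop always ends with status 0, so a failure on one host is easy to miss. What follows is a hedged alternative sketch, not the author's script. It assumes the same `s101`..`s105` host names. `XCALL_HOSTS` and `XCALL_SSH` are hypothetical overrides introduced here. The second one exists mainly so the function can be exercised without a cluster.

```shell
#!/usr/bin/env bash
# Hypothetical variant of xcall.sh (a sketch, not the author's script).
# XCALL_HOSTS / XCALL_SSH are illustrative overrides introduced here.
xcall() {
  if [ $# -lt 1 ]; then
    echo "usage: xcall <command> [args...]" >&2
    return 2
  fi
  local hosts=${XCALL_HOSTS:-"s101 s102 s103 s104 s105"}
  local ssh_cmd=${XCALL_SSH:-ssh}
  local host failed=0
  for host in $hosts; do           # unquoted on purpose: split the host list
    echo "============= $host $* ============"
    if "$ssh_cmd" "$host" "$@"; then
      echo "$host: command succeeded"
    else
      echo "$host: command FAILED (exit $?)" >&2
      failed=$((failed + 1))
    fi
  done
  return "$failed"                 # 0 only if every host succeeded
}
```

The return value is the number of failing hosts. Callers can therefore write `xcall jps || echo "some hosts failed"`.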

```
[yinzhengjie@s101 ~]$ more `which xzk.sh`
#!/bin/bash
#@author :yinzhengjie
#blog:http://www.cnblogs.com/yinzhengjie
#EMAIL:y1053419035@qq.com

#Check whether the user passed exactly one argument
if [ $# -ne 1 ];then
        echo "Invalid argument, usage: $0 {start|stop|restart|status}"
        exit
fi

#Get the command entered by the user
cmd=$1

#Define the dispatch function
function zookeeperManger(){
        case $cmd in
        start)
                echo "Starting the service"
                remoteExecution start
                ;;
        stop)
                echo "Stopping the service"
                remoteExecution stop
                ;;
        restart)
                echo "Restarting the service"
                remoteExecution restart
                ;;
        status)
                echo "Checking the status"
                remoteExecution status
                ;;
        *)
                echo "Invalid argument, usage: $0 {start|stop|restart|status}"
                ;;
        esac
}

#Define the command executed on each remote host
function remoteExecution(){
        for (( i=102 ; i<=104 ; i++ )) ; do
                tput setaf 2
                echo ========== s$i zkServer.sh $1 ================
                tput setaf 9
                ssh s$i "source /etc/profile ; zkServer.sh $1"
        done
}

#Call the function
zookeeperManger
[yinzhengjie@s101 ~]$ 
```
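`xzk.sh status` runs `zkServer.sh status` on each of the three nodes. In a healthy three-node ensemble, exactly one node should report `Mode: leader` and the others `Mode: follower`. Here is a minimal sketch that summarizes that output. `zk_quorum_summary` is a hypothetical helper name, and the sketch assumes the ZooKeeper 3.4-style `Mode:` line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarize `zkServer.sh status` output read from stdin.
# Returns 0 only when exactly one node reports itself as leader.
zk_quorum_summary() {
  local line leaders=0 followers=0 other=0
  while IFS= read -r line; do
    case $line in
      "Mode: leader"*)   leaders=$((leaders + 1)) ;;
      "Mode: follower"*) followers=$((followers + 1)) ;;
      "Mode: "*)         other=$((other + 1)) ;;   # e.g. standalone
    esac
  done
  echo "leaders=$leaders followers=$followers other=$other"
  [ "$leaders" -eq 1 ]
}
```

Usage: `xzk.sh status 2>&1 | zk_quorum_summary`. A node whose server is not running prints no `Mode:` line, so it simply drops out of the counts.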

```
[yinzhengjie@s101 ~]$ xzk.sh start
Starting the service
========== s102 zkServer.sh start ================
ZooKeeper JMX enabled by default
Using config: /soft/zk/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
========== s103 zkServer.sh start ================
ZooKeeper JMX enabled by default
Using config: /soft/zk/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
========== s104 zkServer.sh start ================
ZooKeeper JMX enabled by default
Using config: /soft/zk/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[yinzhengjie@s101 ~]$ 
[yinzhengjie@s101 ~]$ xcall.sh jps
============= s101 jps ============
23771 Jps
Command executed successfully
============= s102 jps ============
8609 QuorumPeerMain
8639 Jps
Command executed successfully
============= s103 jps ============
7100 QuorumPeerMain
7135 Jps
Command executed successfully
============= s104 jps ============
6770 QuorumPeerMain
6799 Jps
Command executed successfully
============= s105 jps ============
18932 Jps
Command executed successfully
[yinzhengjie@s101 ~]$ 
```

```
[yinzhengjie@s101 ~]$ more `which xkafka.sh`
#!/bin/bash
#@author :yinzhengjie
#blog:http://www.cnblogs.com/yinzhengjie
#EMAIL:y1053419035@qq.com

#Check whether the user passed exactly one argument
if [ $# -ne 1 ];then
        echo "Invalid argument, usage: $0 {start|stop}"
        exit
fi

#Get the command entered by the user
cmd=$1

for (( i=102 ; i<=104 ; i++ )) ; do
        tput setaf 2
        echo ========== s$i $cmd ================
        tput setaf 9
        case $cmd in
        start)
                ssh s$i "source /etc/profile ; kafka-server-start.sh -daemon /soft/kafka/config/server.properties"
                echo s$i "service started"
                ;;
        stop)
                ssh s$i "source /etc/profile ; kafka-server-stop.sh"
                echo s$i "service stopped"
                ;;
        *)
                echo "Invalid argument, usage: $0 {start|stop}"
                exit
                ;;
        esac
done
[yinzhengjie@s101 ~]$ 
```
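`kafka-server-start.sh -daemon` returns as soon as the JVM has been forked into the background. The "service started" message therefore does not prove that the broker joined the cluster.

One way to check is to list the broker ids registered in ZooKeeper under `/brokers/ids` and count them. You could do this with the `zookeeper-shell.sh` tool shipped in Kafka's `bin` directory, though whether that fits your installation is an assumption. `count_broker_ids` is a hypothetical helper that parses the `[id, id, ...]` line of that listing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count broker ids in a ZooKeeper listing such as
# "[102, 103, 104]" read from stdin (the last bracketed line wins).
count_broker_ids() {
  local line ids=""
  while IFS= read -r line; do
    case $line in
      \[*\]) ids=${line#\[}; ids=${ids%\]} ;;   # strip the brackets
    esac
  done
  ids=${ids//,/ }        # "102, 103, 104" -> "102  103  104"
  set -- $ids            # word-split into positional parameters
  echo "$#"
}
```

For example, `zookeeper-shell.sh s102:2181 ls /brokers/ids 2>/dev/null | count_broker_ids` should print 3 once the brokers on s102 to s104 have all registered.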

```
[yinzhengjie@s101 ~]$ xkafka.sh start
========== s102 start ================
s102 service started
========== s103 start ================
s103 service started
========== s104 start ================
s104 service started
[yinzhengjie@s101 ~]$ 
[yinzhengjie@s101 ~]$ xcall.sh jps
============= s101 jps ============
23921 Jps
Command executed successfully
============= s102 jps ============
8609 QuorumPeerMain
8999 Kafka
9068 Jps
Command executed successfully
============= s103 jps ============
7491 Jps
7444 Kafka
7100 QuorumPeerMain
Command executed successfully
============= s104 jps ============
6770 QuorumPeerMain
7109 Kafka
7176 Jps
Command executed successfully
============= s105 jps ============
18983 Jps
Command executed successfully
[yinzhengjie@s101 ~]$ 
```

```
[yinzhengjie@s101 ~]$ kafka-topics.sh --zookeeper s102:2181 --create --topic yinzhengjie-umeng-raw-logs --replication-factor 3 --partitions 4
Created topic "yinzhengjie-umeng-raw-logs".
[yinzhengjie@s101 ~]$ 
[yinzhengjie@s101 ~]$ kafka-topics.sh --zookeeper s102:2181 --list
__consumer_offsets
__transaction_state
t7
t9
test
topic1
yinzhengjie
yinzhengjie-umeng-raw-logs
[yinzhengjie@s101 ~]$ 
```
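The topic is created with `--replication-factor 3 --partitions 4`. Kafka places each replica of a partition on a different broker. The replication factor therefore cannot exceed the number of live brokers, which is three here (s102 to s104). Below is a small hypothetical pre-flight check that expresses this rule. `check_topic_plan` is a name introduced for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check before `kafka-topics.sh --create`.
# Args: <replication-factor> <partitions> <live-broker-count>
check_topic_plan() {
  local rf=$1 partitions=$2 brokers=$3
  if (( rf < 1 || partitions < 1 )); then
    echo "replication factor and partitions must both be >= 1"
    return 1
  fi
  if (( rf > brokers )); then
    echo "replication factor $rf exceeds the $brokers live brokers"
    return 1
  fi
  # Every partition has rf replicas, each on a distinct broker.
  echo "ok: $partitions partitions x $rf replicas = $((partitions * rf)) replicas on $brokers brokers"
}
```

`check_topic_plan 3 4 3` accepts the plan used here. With three brokers and a replication factor of 3, every broker ends up holding one replica of each of the four partitions.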

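Start a console consumer on the new topic to watch incoming messages:

```
[yinzhengjie@s101 conf]$ kafka-console-consumer.sh --zookeeper s102:2181 --topic yinzhengjie-umeng-raw-logs
Using the ConsoleConsumer with old consumer is deprecated and will be removed in a future major release. Consider using the new consumer by passing [bootstrap-server] instead of [zookeeper].
```

As the warning says, the ZooKeeper-based (old) consumer is deprecated. To use the new consumer, pass `--bootstrap-server s102:9092` instead of `--zookeeper s102:2181`.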

4>. Flume configuration

```
[yinzhengjie@s101 ~]$ more /soft/flume/conf/yinzhengjie-exec-umeng-nginx-to-kafka.conf
a1.sources = r1
a1.channels = c1
a1.sinks = k1

a1.sources.r1.type = exec
a1.sources.r1.command = tail -F /usr/local/openresty/nginx/logs/access.log

a1.channels.c1.type = memory
a1.channels.c1.capacity = 10000

a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink
a1.sinks.k1.kafka.topic = yinzhengjie-umeng-raw-logs
a1.sinks.k1.kafka.bootstrap.servers = s102:9092
a1.sinks.k1.kafka.flumeBatchSize = 20
a1.sinks.k1.kafka.producer.acks = 1
a1.sinks.k1.kafka.producer.linger.ms = 0

a1.sources.r1.channels=c1
a1.sinks.k1.channel=c1
[yinzhengjie@s101 ~]$ 
```
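In a Flume properties file, each source listed in `a1.sources` needs a `channels` binding (plural) and each sink listed in `a1.sinks` needs a `channel` binding (singular). An example is the pair of lines at the end of the file above. Mixing these up is an easy mistake to make.

This is a hypothetical shell sketch, not a Flume tool, that checks those bindings with `sed` and `grep`. It assumes one `key = value` pair per line, as in the files shown here.

```shell
#!/usr/bin/env bash
# Hypothetical checker (not a Flume tool): verify that every source listed for
# an agent has a ".channels" binding and every sink has a ".channel" binding.
# Assumes simple "key = value" lines, as in the configuration files above.
check_flume_wiring() {
  local conf=$1 agent=$2 name status=0
  local sources sinks
  sources=$(sed -n "s/^[[:space:]]*${agent}\.sources[[:space:]]*=[[:space:]]*//p" "$conf")
  sinks=$(sed -n "s/^[[:space:]]*${agent}\.sinks[[:space:]]*=[[:space:]]*//p" "$conf")
  for name in $sources; do
    if ! grep -Eq "^[[:space:]]*${agent}\.sources\.${name}\.channels[[:space:]]*=" "$conf"; then
      echo "source $name has no channels"
      status=1
    fi
  done
  for name in $sinks; do
    # Sinks use the singular key "channel"
    if ! grep -Eq "^[[:space:]]*${agent}\.sinks\.${name}\.channel[[:space:]]*=" "$conf"; then
      echo "sink $name has no channel"
      status=1
    fi
  done
  return "$status"
}
```

Usage: `check_flume_wiring /soft/flume/conf/yinzhengjie-exec-umeng-nginx-to-kafka.conf a1` prints nothing and returns 0 for the file above.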

[yinzhengjie@s101 ~]$ flume-ng agent -f /soft/flume/conf/yinzhengjie-exec-umeng-nginx-to-kafka.conf -n a1 Warning: No configuration directory set! Use --conf <dir> to override. Warning: JAVA_HOME is not set! Info: Including Hadoop libraries found via (/soft/hadoop/bin/hadoop) for HDFS access Info: Including HBASE libraries found via (/soft/hbase/bin/hbase) for HBASE access Info: Including Hive libraries found via () for Hive access + exec /soft/jdk/bin/java -Xmx20m -cp '/soft/flume/lib/*:/soft/hadoop-2.7.3/etc/hadoop:/soft/hadoop-2.7.3/share/hadoop/common/lib/*:/soft/hadoop-2.7.3/share/hadoop/common/*:/soft/hadoop-2.7.3/share/hadoop/hdfs:/soft/hadoop-2.7.3/share/hadoop/hdfs/lib/*:/soft/hadoop-2.7.3/share/hadoop/hdfs/*:/soft/hadoop-2.7.3/share/hadoop/yarn/lib/*:/soft/hadoop-2.7.3/share/hadoop/yarn/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/lib/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/*:/contrib/capacity-scheduler/*.jar:/soft/hbase/bin/../conf:/soft/jdk//lib/tools.jar:/soft/hbase/bin/..:/soft/hbase/bin/../lib/activation-1.1.jar:/soft/hbase/bin/../lib/aopalliance-1.0.jar:/soft/hbase/bin/../lib/apacheds-i18n-2.0.0-M15.jar:/soft/hbase/bin/../lib/apacheds-kerberos-codec-2.0.0-M15.jar:/soft/hbase/bin/../lib/api-asn1-api-1.0.0-M20.jar:/soft/hbase/bin/../lib/api-util-1.0.0-M20.jar:/soft/hbase/bin/../lib/asm-3.1.jar:/soft/hbase/bin/../lib/avro-1.7.4.jar:/soft/hbase/bin/../lib/commons-beanutils-1.7.0.jar:/soft/hbase/bin/../lib/commons-beanutils-core-1.8.0.jar:/soft/hbase/bin/../lib/commons-cli-1.2.jar:/soft/hbase/bin/../lib/commons-codec-1.9.jar:/soft/hbase/bin/../lib/commons-collections-3.2.2.jar:/soft/hbase/bin/../lib/commons-compress-1.4.1.jar:/soft/hbase/bin/../lib/commons-configuration-1.6.jar:/soft/hbase/bin/../lib/commons-daemon-1.0.13.jar:/soft/hbase/bin/../lib/commons-digester-1.8.jar:/soft/hbase/bin/../lib/commons-el-1.0.jar:/soft/hbase/bin/../lib/commons-httpclient-3.1.jar:/soft/hbase/bin/../lib/commons-io-2.4.jar:/soft/hbase/bin/../lib/commons-lang-2.6.jar:/sof
t/hbase/bin/../lib/commons-logging-1.2.jar:/soft/hbase/bin/../lib/commons-math-2.2.jar:/soft/hbase/bin/../lib/commons-math3-3.1.1.jar:/soft/hbase/bin/../lib/commons-net-3.1.jar:/soft/hbase/bin/../lib/disruptor-3.3.0.jar:/soft/hbase/bin/../lib/findbugs-annotations-1.3.9-1.jar:/soft/hbase/bin/../lib/guava-12.0.1.jar:/soft/hbase/bin/../lib/guice-3.0.jar:/soft/hbase/bin/../lib/guice-servlet-3.0.jar:/soft/hbase/bin/../lib/hadoop-annotations-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-auth-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-client-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-hdfs-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-app-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-core-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-jobclient-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-shuffle-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-api-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-client-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-server-common-2.5.1.jar:/soft/hbase/bin/../lib/hbase-annotations-1.2.6.jar:/soft/hbase/bin/../lib/hbase-annotations-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-client-1.2.6.jar:/soft/hbase/bin/../lib/hbase-common-1.2.6.jar:/soft/hbase/bin/../lib/hbase-common-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-examples-1.2.6.jar:/soft/hbase/bin/../lib/hbase-external-blockcache-1.2.6.jar:/soft/hbase/bin/../lib/hbase-hadoop2-compat-1.2.6.jar:/soft/hbase/bin/../lib/hbase-hadoop-compat-1.2.6.jar:/soft/hbase/bin/../lib/hbase-it-1.2.6.jar:/soft/hbase/bin/../lib/hbase-it-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-prefix-tree-1.2.6.jar:/soft/hbase/bin/../lib/hbase-procedure-1.2.6.jar:/soft/hbase/bin/../lib/hbase-protocol-1.2.6.jar:/soft/hbase/bin/../lib/hbase-resource-bundle-1.2.6.jar:/soft/hbase/bin/../lib/hbase-rest-1.2.6.jar:/soft/hbase/bin/../lib/hbase-ser
ver-1.2.6.jar:/soft/hbase/bin/../lib/hbase-server-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-shell-1.2.6.jar:/soft/hbase/bin/../lib/hbase-thrift-1.2.6.jar:/soft/hbase/bin/../lib/htrace-core-3.1.0-incubating.jar:/soft/hbase/bin/../lib/httpclient-4.2.5.jar:/soft/hbase/bin/../lib/httpcore-4.4.1.jar:/soft/hbase/bin/../lib/jackson-core-asl-1.9.13.jar:/soft/hbase/bin/../lib/jackson-jaxrs-1.9.13.jar:/soft/hbase/bin/../lib/jackson-mapper-asl-1.9.13.jar:/soft/hbase/bin/../lib/jackson-xc-1.9.13.jar:/soft/hbase/bin/../lib/jamon-runtime-2.4.1.jar:/soft/hbase/bin/../lib/jasper-compiler-5.5.23.jar:/soft/hbase/bin/../lib/jasper-runtime-5.5.23.jar:/soft/hbase/bin/../lib/javax.inject-1.jar:/soft/hbase/bin/../lib/java-xmlbuilder-0.4.jar:/soft/hbase/bin/../lib/jaxb-api-2.2.2.jar:/soft/hbase/bin/../lib/jaxb-impl-2.2.3-1.jar:/soft/hbase/bin/../lib/jcodings-1.0.8.jar:/soft/hbase/bin/../lib/jersey-client-1.9.jar:/soft/hbase/bin/../lib/jersey-core-1.9.jar:/soft/hbase/bin/../lib/jersey-guice-1.9.jar:/soft/hbase/bin/../lib/jersey-json-1.9.jar:/soft/hbase/bin/../lib/jersey-server-1.9.jar:/soft/hbase/bin/../lib/jets3t-0.9.0.jar:/soft/hbase/bin/../lib/jettison-1.3.3.jar:/soft/hbase/bin/../lib/jetty-6.1.26.jar:/soft/hbase/bin/../lib/jetty-sslengine-6.1.26.jar:/soft/hbase/bin/../lib/jetty-util-6.1.26.jar:/soft/hbase/bin/../lib/joni-2.1.2.jar:/soft/hbase/bin/../lib/jruby-complete-1.6.8.jar:/soft/hbase/bin/../lib/jsch-0.1.42.jar:/soft/hbase/bin/../lib/jsp-2.1-6.1.14.jar:/soft/hbase/bin/../lib/jsp-api-2.1-6.1.14.jar:/soft/hbase/bin/../lib/junit-4.12.jar:/soft/hbase/bin/../lib/leveldbjni-all-1.8.jar:/soft/hbase/bin/../lib/libthrift-0.9.3.jar:/soft/hbase/bin/../lib/log4j-1.2.17.jar:/soft/hbase/bin/../lib/metrics-core-2.2.0.jar:/soft/hbase/bin/../lib/MyHbase-1.0-SNAPSHOT.jar:/soft/hbase/bin/../lib/netty-all-4.0.23.Final.jar:/soft/hbase/bin/../lib/paranamer-2.3.jar:/soft/hbase/bin/../lib/phoenix-4.10.0-HBase-1.2-client.jar:/soft/hbase/bin/../lib/protobuf-java-2.5.0.jar:/soft/hbase/bin/../lib/servlet-a
pi-2.5-6.1.14.jar:/soft/hbase/bin/../lib/servlet-api-2.5.jar:/soft/hbase/bin/../lib/slf4j-api-1.7.7.jar:/soft/hbase/bin/../lib/slf4j-log4j12-1.7.5.jar:/soft/hbase/bin/../lib/snappy-java-1.0.4.1.jar:/soft/hbase/bin/../lib/spymemcached-2.11.6.jar:/soft/hbase/bin/../lib/xmlenc-0.52.jar:/soft/hbase/bin/../lib/xz-1.0.jar:/soft/hbase/bin/../lib/zookeeper-3.4.6.jar:/soft/hadoop-2.7.3/etc/hadoop:/soft/hadoop-2.7.3/share/hadoop/common/lib/*:/soft/hadoop-2.7.3/share/hadoop/common/*:/soft/hadoop-2.7.3/share/hadoop/hdfs:/soft/hadoop-2.7.3/share/hadoop/hdfs/lib/*:/soft/hadoop-2.7.3/share/hadoop/hdfs/*:/soft/hadoop-2.7.3/share/hadoop/yarn/lib/*:/soft/hadoop-2.7.3/share/hadoop/yarn/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/lib/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/*::/soft/hive/lib/*:/contrib/capacity-scheduler/*.jar:/conf:/lib/*' -Djava.library.path=:/soft/hadoop-2.7.3/lib/native:/soft/hadoop-2.7.3/lib/native org.apache.flume.node.Application -f /soft/flume/conf/yinzhengjie-exec-umeng-nginx-to-kafka.conf -n a1 SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/soft/apache-flume-1.8.0-bin/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hbase-1.2.6/lib/phoenix-4.10.0-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. 
18/08/06 21:59:22 INFO node.PollingPropertiesFileConfigurationProvider: Configuration provider starting 18/08/06 21:59:22 INFO node.PollingPropertiesFileConfigurationProvider: Reloading configuration file:/soft/flume/conf/yinzhengjie-exec-umeng-nginx-to-kafka.conf 18/08/06 21:59:22 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 21:59:22 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 21:59:22 INFO conf.FlumeConfiguration: Added sinks: k1 Agent: a1 18/08/06 21:59:22 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 21:59:22 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 21:59:22 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 21:59:22 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 21:59:22 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 21:59:22 INFO conf.FlumeConfiguration: Post-validation flume configuration contains configuration for agents: [a1] 18/08/06 21:59:22 INFO node.AbstractConfigurationProvider: Creating channels 18/08/06 21:59:22 INFO channel.DefaultChannelFactory: Creating instance of channel c1 type memory 18/08/06 21:59:22 INFO node.AbstractConfigurationProvider: Created channel c1 18/08/06 21:59:22 INFO source.DefaultSourceFactory: Creating instance of source r1, type exec 18/08/06 21:59:22 INFO sink.DefaultSinkFactory: Creating instance of sink: k1, type: org.apache.flume.sink.kafka.KafkaSink 18/08/06 21:59:22 INFO kafka.KafkaSink: Using the static topic yinzhengjie-umeng-raw-logs. 
This may be overridden by event headers 18/08/06 21:59:22 INFO node.AbstractConfigurationProvider: Channel c1 connected to [r1, k1] 18/08/06 21:59:22 INFO node.Application: Starting new configuration:{ sourceRunners:{r1=EventDrivenSourceRunner: { source:org.apache.flume.source.ExecSource{name:r1,state:IDLE} }} sinkRunners:{k1=SinkRunner: { policy:org.apache.flume.sink.DefaultSinkProcessor@6bcf9394 counterGroup:{ name:null counters:{} } }} channels:{c1=org.apache.flume.channel.MemoryChannel{name: c1}} } 18/08/06 21:59:22 INFO node.Application: Starting Channel c1 18/08/06 21:59:22 INFO node.Application: Waiting for channel: c1 to start. Sleeping for 500 ms 18/08/06 21:59:22 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: CHANNEL, name: c1: Successfully registered new MBean. 18/08/06 21:59:22 INFO instrumentation.MonitoredCounterGroup: Component type: CHANNEL, name: c1 started 18/08/06 21:59:23 INFO node.Application: Starting Sink k1 18/08/06 21:59:23 INFO node.Application: Starting Source r1 18/08/06 21:59:23 INFO source.ExecSource: Exec source starting with command: tail -F /usr/local/openresty/nginx/logs/access.log 18/08/06 21:59:23 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: SOURCE, name: r1: Successfully registered new MBean. 
18/08/06 21:59:23 INFO instrumentation.MonitoredCounterGroup: Component type: SOURCE, name: r1 started 18/08/06 21:59:23 INFO producer.ProducerConfig: ProducerConfig values: compression.type = none metric.reporters = [] metadata.max.age.ms = 300000 metadata.fetch.timeout.ms = 60000 reconnect.backoff.ms = 50 sasl.kerberos.ticket.renew.window.factor = 0.8 bootstrap.servers = [s102:9092] retry.backoff.ms = 100 sasl.kerberos.kinit.cmd = /usr/bin/kinit buffer.memory = 33554432 timeout.ms = 30000 key.serializer = class org.apache.kafka.common.serialization.StringSerializer sasl.kerberos.service.name = null sasl.kerberos.ticket.renew.jitter = 0.05 ssl.keystore.type = JKS ssl.trustmanager.algorithm = PKIX block.on.buffer.full = false ssl.key.password = null max.block.ms = 60000 sasl.kerberos.min.time.before.relogin = 60000 connections.max.idle.ms = 540000 ssl.truststore.password = null max.in.flight.requests.per.connection = 5 metrics.num.samples = 2 client.id = ssl.endpoint.identification.algorithm = null ssl.protocol = TLS request.timeout.ms = 30000 ssl.provider = null ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1] acks = 1 batch.size = 16384 ssl.keystore.location = null receive.buffer.bytes = 32768 ssl.cipher.suites = null ssl.truststore.type = JKS security.protocol = PLAINTEXT retries = 0 max.request.size = 1048576 value.serializer = class org.apache.kafka.common.serialization.ByteArraySerializer ssl.truststore.location = null ssl.keystore.password = null ssl.keymanager.algorithm = SunX509 metrics.sample.window.ms = 30000 partitioner.class = class org.apache.kafka.clients.producer.internals.DefaultPartitioner send.buffer.bytes = 131072 linger.ms = 0 18/08/06 21:59:23 INFO utils.AppInfoParser: Kafka version : 0.9.0.1 18/08/06 21:59:23 INFO utils.AppInfoParser: Kafka commitId : 23c69d62a0cabf06 18/08/06 21:59:23 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: SINK, name: k1: Successfully registered new MBean. 
18/08/06 21:59:23 INFO instrumentation.MonitoredCounterGroup: Component type: SINK, name: k1 started

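```
[yinzhengjie@s101 ~]$ more /soft/flume/conf/yinzhengjie-exec-umeng-kafka-to-hdfs.conf
a1.sources = r1
a1.channels = c1
a1.sinks = k1

a1.sources.r1.type = org.apache.flume.source.kafka.KafkaSource
a1.sources.r1.batchSize = 5000
a1.sources.r1.batchDurationMillis = 2000
a1.sources.r1.kafka.bootstrap.servers = s102:9092
a1.sources.r1.kafka.topics = yinzhengjie-umeng-raw-logs
a1.sources.r1.kafka.consumer.group.id = g10

a1.channels.c1.type=memory

a1.sinks.k1.type = hdfs
#Target directory
a1.sinks.k1.hdfs.path = /home/yinzhengjie/data/logs/umeng/raw-log/%Y%m/%d/%H%M
#File name prefix
a1.sinks.k1.hdfs.filePrefix = events-

#round: controls how the time-based directory name is rounded
#Whether to round down the timestamp used in the directory name
a1.sinks.k1.hdfs.round = true
#Rounding value
a1.sinks.k1.hdfs.roundValue = 1
#Rounding time unit
a1.sinks.k1.hdfs.roundUnit = minute

#File rolling
#Roll interval (in seconds)
a1.sinks.k1.hdfs.rollInterval = 30
#Roll size (10 KB = 10240 bytes)
a1.sinks.k1.hdfs.rollSize = 10240
#Roll after this many events (500)
a1.sinks.k1.hdfs.rollCount = 500

#Use the local time
a1.sinks.k1.hdfs.useLocalTimeStamp = true

#File type: DataStream writes plain text; the default is SequenceFile
a1.sinks.k1.hdfs.fileType = DataStream

a1.sources.r1.channels=c1
a1.sinks.k1.channel=c1
[yinzhengjie@s101 ~]$ 
```

Channel `c1` in this file sets no `capacity` or `transactionCapacity`, so Flume's memory-channel defaults of 100 events each apply. The Kafka source, however, may put up to `batchSize = 5000` events into the channel in a single transaction. Under load this can fail with a channel-full error. To avoid that, raise `capacity` (for example to 10000, as in the first agent) and set `transactionCapacity` to at least the source batch size.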
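With `hdfs.useLocalTimeStamp = true`, the sink fills the `%Y%m/%d/%H%M` escapes from the agent host's clock at write time. With `round = true` and `roundUnit = minute`, the minute is rounded down to a multiple of `roundValue`, which for a value of 1 only drops the seconds.

The sketch below predicts which directory a given local time lands in. That helps when checking the output with `hdfs dfs -ls`. `flume_hdfs_dir` is a hypothetical name, and GNU `date` is assumed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: predict the HDFS directory the sink above would use
# for a given local time, mirroring hdfs.path = .../%Y%m/%d/%H%M with
# hdfs.round = true and roundUnit = minute. Requires GNU date.
# Args: <timestamp understood by `date -d`> [roundValue in minutes, default 1]
flume_hdfs_dir() {
  local ts=$1 round=${2:-1}
  local base=/home/yinzhengjie/data/logs/umeng/raw-log
  local prefix minute
  prefix=$(date -d "$ts" +%Y%m/%d/%H) || return 1
  minute=$(date -d "$ts" +%M)
  # 10# forces base 10 so "08" and "09" are not read as octal
  minute=$(( 10#$minute / round * round ))
  printf '%s/%s%02d\n' "$base" "$prefix" "$minute"
}
```

For example, `hdfs dfs -ls $(flume_hdfs_dir now)` lists the directory for the current minute. That directory only exists once at least one event has been written during that minute.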

[yinzhengjie@s101 ~]$ flume-ng agent -f /soft/flume/conf/yinzhengjie-exec-umeng-kafka-to-hdfs.conf -n a1 Warning: No configuration directory set! Use --conf <dir> to override. Warning: JAVA_HOME is not set! Info: Including Hadoop libraries found via (/soft/hadoop/bin/hadoop) for HDFS access Info: Including HBASE libraries found via (/soft/hbase/bin/hbase) for HBASE access Info: Including Hive libraries found via () for Hive access + exec /soft/jdk/bin/java -Xmx20m -cp '/soft/flume/lib/*:/soft/hadoop-2.7.3/etc/hadoop:/soft/hadoop-2.7.3/share/hadoop/common/lib/*:/soft/hadoop-2.7.3/share/hadoop/common/*:/soft/hadoop-2.7.3/share/hadoop/hdfs:/soft/hadoop-2.7.3/share/hadoop/hdfs/lib/*:/soft/hadoop-2.7.3/share/hadoop/hdfs/*:/soft/hadoop-2.7.3/share/hadoop/yarn/lib/*:/soft/hadoop-2.7.3/share/hadoop/yarn/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/lib/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/*:/contrib/capacity-scheduler/*.jar:/soft/hbase/bin/../conf:/soft/jdk//lib/tools.jar:/soft/hbase/bin/..:/soft/hbase/bin/../lib/activation-1.1.jar:/soft/hbase/bin/../lib/aopalliance-1.0.jar:/soft/hbase/bin/../lib/apacheds-i18n-2.0.0-M15.jar:/soft/hbase/bin/../lib/apacheds-kerberos-codec-2.0.0-M15.jar:/soft/hbase/bin/../lib/api-asn1-api-1.0.0-M20.jar:/soft/hbase/bin/../lib/api-util-1.0.0-M20.jar:/soft/hbase/bin/../lib/asm-3.1.jar:/soft/hbase/bin/../lib/avro-1.7.4.jar:/soft/hbase/bin/../lib/commons-beanutils-1.7.0.jar:/soft/hbase/bin/../lib/commons-beanutils-core-1.8.0.jar:/soft/hbase/bin/../lib/commons-cli-1.2.jar:/soft/hbase/bin/../lib/commons-codec-1.9.jar:/soft/hbase/bin/../lib/commons-collections-3.2.2.jar:/soft/hbase/bin/../lib/commons-compress-1.4.1.jar:/soft/hbase/bin/../lib/commons-configuration-1.6.jar:/soft/hbase/bin/../lib/commons-daemon-1.0.13.jar:/soft/hbase/bin/../lib/commons-digester-1.8.jar:/soft/hbase/bin/../lib/commons-el-1.0.jar:/soft/hbase/bin/../lib/commons-httpclient-3.1.jar:/soft/hbase/bin/../lib/commons-io-2.4.jar:/soft/hbase/bin/../lib/commons-lang-2.6.jar:/soft
/hbase/bin/../lib/commons-logging-1.2.jar:/soft/hbase/bin/../lib/commons-math-2.2.jar:/soft/hbase/bin/../lib/commons-math3-3.1.1.jar:/soft/hbase/bin/../lib/commons-net-3.1.jar:/soft/hbase/bin/../lib/disruptor-3.3.0.jar:/soft/hbase/bin/../lib/findbugs-annotations-1.3.9-1.jar:/soft/hbase/bin/../lib/guava-12.0.1.jar:/soft/hbase/bin/../lib/guice-3.0.jar:/soft/hbase/bin/../lib/guice-servlet-3.0.jar:/soft/hbase/bin/../lib/hadoop-annotations-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-auth-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-client-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-hdfs-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-app-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-core-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-jobclient-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-mapreduce-client-shuffle-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-api-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-client-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-common-2.5.1.jar:/soft/hbase/bin/../lib/hadoop-yarn-server-common-2.5.1.jar:/soft/hbase/bin/../lib/hbase-annotations-1.2.6.jar:/soft/hbase/bin/../lib/hbase-annotations-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-client-1.2.6.jar:/soft/hbase/bin/../lib/hbase-common-1.2.6.jar:/soft/hbase/bin/../lib/hbase-common-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-examples-1.2.6.jar:/soft/hbase/bin/../lib/hbase-external-blockcache-1.2.6.jar:/soft/hbase/bin/../lib/hbase-hadoop2-compat-1.2.6.jar:/soft/hbase/bin/../lib/hbase-hadoop-compat-1.2.6.jar:/soft/hbase/bin/../lib/hbase-it-1.2.6.jar:/soft/hbase/bin/../lib/hbase-it-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-prefix-tree-1.2.6.jar:/soft/hbase/bin/../lib/hbase-procedure-1.2.6.jar:/soft/hbase/bin/../lib/hbase-protocol-1.2.6.jar:/soft/hbase/bin/../lib/hbase-resource-bundle-1.2.6.jar:/soft/hbase/bin/../lib/hbase-rest-1.2.6.jar:/soft/hbase/bin/../lib/hbase-serv
er-1.2.6.jar:/soft/hbase/bin/../lib/hbase-server-1.2.6-tests.jar:/soft/hbase/bin/../lib/hbase-shell-1.2.6.jar:/soft/hbase/bin/../lib/hbase-thrift-1.2.6.jar:/soft/hbase/bin/../lib/htrace-core-3.1.0-incubating.jar:/soft/hbase/bin/../lib/httpclient-4.2.5.jar:/soft/hbase/bin/../lib/httpcore-4.4.1.jar:/soft/hbase/bin/../lib/jackson-core-asl-1.9.13.jar:/soft/hbase/bin/../lib/jackson-jaxrs-1.9.13.jar:/soft/hbase/bin/../lib/jackson-mapper-asl-1.9.13.jar:/soft/hbase/bin/../lib/jackson-xc-1.9.13.jar:/soft/hbase/bin/../lib/jamon-runtime-2.4.1.jar:/soft/hbase/bin/../lib/jasper-compiler-5.5.23.jar:/soft/hbase/bin/../lib/jasper-runtime-5.5.23.jar:/soft/hbase/bin/../lib/javax.inject-1.jar:/soft/hbase/bin/../lib/java-xmlbuilder-0.4.jar:/soft/hbase/bin/../lib/jaxb-api-2.2.2.jar:/soft/hbase/bin/../lib/jaxb-impl-2.2.3-1.jar:/soft/hbase/bin/../lib/jcodings-1.0.8.jar:/soft/hbase/bin/../lib/jersey-client-1.9.jar:/soft/hbase/bin/../lib/jersey-core-1.9.jar:/soft/hbase/bin/../lib/jersey-guice-1.9.jar:/soft/hbase/bin/../lib/jersey-json-1.9.jar:/soft/hbase/bin/../lib/jersey-server-1.9.jar:/soft/hbase/bin/../lib/jets3t-0.9.0.jar:/soft/hbase/bin/../lib/jettison-1.3.3.jar:/soft/hbase/bin/../lib/jetty-6.1.26.jar:/soft/hbase/bin/../lib/jetty-sslengine-6.1.26.jar:/soft/hbase/bin/../lib/jetty-util-6.1.26.jar:/soft/hbase/bin/../lib/joni-2.1.2.jar:/soft/hbase/bin/../lib/jruby-complete-1.6.8.jar:/soft/hbase/bin/../lib/jsch-0.1.42.jar:/soft/hbase/bin/../lib/jsp-2.1-6.1.14.jar:/soft/hbase/bin/../lib/jsp-api-2.1-6.1.14.jar:/soft/hbase/bin/../lib/junit-4.12.jar:/soft/hbase/bin/../lib/leveldbjni-all-1.8.jar:/soft/hbase/bin/../lib/libthrift-0.9.3.jar:/soft/hbase/bin/../lib/log4j-1.2.17.jar:/soft/hbase/bin/../lib/metrics-core-2.2.0.jar:/soft/hbase/bin/../lib/MyHbase-1.0-SNAPSHOT.jar:/soft/hbase/bin/../lib/netty-all-4.0.23.Final.jar:/soft/hbase/bin/../lib/paranamer-2.3.jar:/soft/hbase/bin/../lib/phoenix-4.10.0-HBase-1.2-client.jar:/soft/hbase/bin/../lib/protobuf-java-2.5.0.jar:/soft/hbase/bin/../lib/servlet-ap
i-2.5-6.1.14.jar:/soft/hbase/bin/../lib/servlet-api-2.5.jar:/soft/hbase/bin/../lib/slf4j-api-1.7.7.jar:/soft/hbase/bin/../lib/slf4j-log4j12-1.7.5.jar:/soft/hbase/bin/../lib/snappy-java-1.0.4.1.jar:/soft/hbase/bin/../lib/spymemcached-2.11.6.jar:/soft/hbase/bin/../lib/xmlenc-0.52.jar:/soft/hbase/bin/../lib/xz-1.0.jar:/soft/hbase/bin/../lib/zookeeper-3.4.6.jar:/soft/hadoop-2.7.3/etc/hadoop:/soft/hadoop-2.7.3/share/hadoop/common/lib/*:/soft/hadoop-2.7.3/share/hadoop/common/*:/soft/hadoop-2.7.3/share/hadoop/hdfs:/soft/hadoop-2.7.3/share/hadoop/hdfs/lib/*:/soft/hadoop-2.7.3/share/hadoop/hdfs/*:/soft/hadoop-2.7.3/share/hadoop/yarn/lib/*:/soft/hadoop-2.7.3/share/hadoop/yarn/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/lib/*:/soft/hadoop-2.7.3/share/hadoop/mapreduce/*::/soft/hive/lib/*:/contrib/capacity-scheduler/*.jar:/conf:/lib/*' -Djava.library.path=:/soft/hadoop-2.7.3/lib/native:/soft/hadoop-2.7.3/lib/native org.apache.flume.node.Application -f /soft/flume/conf/yinzhengjie-exec-umeng-kafka-to-hdfs.conf -n a1 SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/soft/apache-flume-1.8.0-bin/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hbase-1.2.6/lib/phoenix-4.10.0-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. 
18/08/06 23:42:46 INFO node.PollingPropertiesFileConfigurationProvider: Configuration provider starting 18/08/06 23:42:46 INFO node.PollingPropertiesFileConfigurationProvider: Reloading configuration file:/soft/flume/conf/yinzhengjie-exec-umeng-kafka-to-hdfs.conf 18/08/06 23:42:46 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 23:42:46 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 23:42:46 INFO conf.FlumeConfiguration: Added sinks: k1 Agent: a1 18/08/06 23:42:46 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 23:42:46 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 23:42:46 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 23:42:46 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 23:42:46 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 23:42:46 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 23:42:46 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 23:42:46 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 23:42:46 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 23:42:46 INFO conf.FlumeConfiguration: Processing:k1 18/08/06 23:42:46 INFO conf.FlumeConfiguration: Post-validation flume configuration contains configuration for agents: [a1] 18/08/06 23:42:46 INFO node.AbstractConfigurationProvider: Creating channels 18/08/06 23:42:46 INFO channel.DefaultChannelFactory: Creating instance of channel c1 type memory 18/08/06 23:42:46 INFO node.AbstractConfigurationProvider: Created channel c1 18/08/06 23:42:46 INFO source.DefaultSourceFactory: Creating instance of source r1, type org.apache.flume.source.kafka.KafkaSource 18/08/06 23:42:46 INFO sink.DefaultSinkFactory: Creating instance of sink: k1, type: hdfs 18/08/06 23:42:47 INFO node.AbstractConfigurationProvider: Channel c1 connected to [r1, k1] 18/08/06 23:42:47 INFO node.Application: Starting new configuration:{ sourceRunners:{r1=PollableSourceRunner: { source:org.apache.flume.source.kafka.KafkaSource{name:r1,state:IDLE} counterGroup:{ name:null counters:{} } }} 
sinkRunners:{k1=SinkRunner: { policy:org.apache.flume.sink.DefaultSinkProcessor@703b8ec0 counterGroup:{ name:null counters:{} } }} channels:{c1=org.apache.flume.channel.MemoryChannel{name: c1}} } 18/08/06 23:42:47 INFO node.Application: Starting Channel c1 18/08/06 23:42:47 INFO node.Application: Waiting for channel: c1 to start. Sleeping for 500 ms 18/08/06 23:42:47 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: CHANNEL, name: c1: Successfully registered new MBean. 18/08/06 23:42:47 INFO instrumentation.MonitoredCounterGroup: Component type: CHANNEL, name: c1 started 18/08/06 23:42:47 INFO node.Application: Starting Sink k1 18/08/06 23:42:47 INFO node.Application: Starting Source r1 18/08/06 23:42:47 INFO kafka.KafkaSource: Starting org.apache.flume.source.kafka.KafkaSource{name:r1,state:IDLE}... 18/08/06 23:42:47 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: SINK, name: k1: Successfully registered new MBean. 18/08/06 23:42:47 INFO instrumentation.MonitoredCounterGroup: Component type: SINK, name: k1 started 18/08/06 23:42:47 INFO consumer.ConsumerConfig: ConsumerConfig values: metric.reporters = [] metadata.max.age.ms = 300000 value.deserializer = class org.apache.kafka.common.serialization.ByteArrayDeserializer group.id = g10 partition.assignment.strategy = [org.apache.kafka.clients.consumer.RangeAssignor] reconnect.backoff.ms = 50 sasl.kerberos.ticket.renew.window.factor = 0.8 max.partition.fetch.bytes = 1048576 bootstrap.servers = [s102:9092] retry.backoff.ms = 100 sasl.kerberos.kinit.cmd = /usr/bin/kinit sasl.kerberos.service.name = null sasl.kerberos.ticket.renew.jitter = 0.05 ssl.keystore.type = JKS ssl.trustmanager.algorithm = PKIX enable.auto.commit = false ssl.key.password = null fetch.max.wait.ms = 500 sasl.kerberos.min.time.before.relogin = 60000 connections.max.idle.ms = 540000 ssl.truststore.password = null session.timeout.ms = 30000 metrics.num.samples = 2 client.id = 
ssl.endpoint.identification.algorithm = null key.deserializer = class org.apache.kafka.common.serialization.StringDeserializer ssl.protocol = TLS check.crcs = true request.timeout.ms = 40000 ssl.provider = null ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1] ssl.keystore.location = null heartbeat.interval.ms = 3000 auto.commit.interval.ms = 5000 receive.buffer.bytes = 32768 ssl.cipher.suites = null ssl.truststore.type = JKS security.protocol = PLAINTEXT ssl.truststore.location = null ssl.keystore.password = null ssl.keymanager.algorithm = SunX509 metrics.sample.window.ms = 30000 fetch.min.bytes = 1 send.buffer.bytes = 131072 auto.offset.reset = latest 18/08/06 23:42:47 INFO utils.AppInfoParser: Kafka version : 0.9.0.1 18/08/06 23:42:47 INFO utils.AppInfoParser: Kafka commitId : 23c69d62a0cabf06 18/08/06 23:42:49 INFO kafka.SourceRebalanceListener: topic yinzhengjie-umeng-raw-logs - partition 3 assigned. 18/08/06 23:42:49 INFO kafka.SourceRebalanceListener: topic yinzhengjie-umeng-raw-logs - partition 2 assigned. 18/08/06 23:42:49 INFO kafka.SourceRebalanceListener: topic yinzhengjie-umeng-raw-logs - partition 1 assigned. 18/08/06 23:42:49 INFO kafka.SourceRebalanceListener: topic yinzhengjie-umeng-raw-logs - partition 0 assigned. 18/08/06 23:42:49 INFO kafka.KafkaSource: Kafka source r1 started. 18/08/06 23:42:49 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: SOURCE, name: r1: Successfully registered new MBean. 18/08/06 23:42:49 INFO instrumentation.MonitoredCounterGroup: Component type: SOURCE, name: r1 started
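The log shows agent a1 reading topic yinzhengjie-umeng-raw-logs (consumer group g10, partitions 0 to 3) through a Kafka source and writing events out through an HDFS sink. The configuration file itself is not shown. Judging from the directory that is later loaded into Hive (.../raw-log/201808/06/2346), the sink path probably ends in an escape pattern such as %Y%m/%d/%H%M, with hdfs.round enabled at minute granularity. The HDFS sink takes that time from the event's `timestamp` header, or from the local clock when hdfs.useLocalTimeStamp=true, and rounding always goes down. The Python sketch below models that path derivation under exactly those assumptions. `bucket_dir` and its parameters are hypothetical names and are not part of Flume.

```python
from datetime import datetime, timedelta


def bucket_dir(event_time: datetime, base: str, round_minutes: int = 1) -> str:
    """Build <base>/%Y%m/%d/%H%M for an event, rounding the time down.

    Hypothetical analogue of an HDFS sink configured with hdfs.round=true,
    hdfs.roundUnit=minute and hdfs.roundValue=round_minutes.
    """
    # Drop seconds, then round down to a multiple of round_minutes within the hour
    floored = event_time.replace(second=0, microsecond=0)
    floored -= timedelta(minutes=floored.minute % round_minutes)
    # Assumed escape pattern: %Y%m/%d/%H%M
    return f"{base.rstrip('/')}/{floored.strftime('%Y%m/%d/%H%M')}"


if __name__ == "__main__":
    t = datetime(2018, 8, 6, 23, 46, 31)
    base = "/home/yinzhengjie/data/logs/umeng/raw-log"
    print(bucket_dir(t, base))                    # .../raw-log/201808/06/2346
    print(bucket_dir(t, base, round_minutes=10))  # .../raw-log/201808/06/2340
```

With one-minute buckets, every event from 23:46:00 to 23:46:59 lands in the same directory, which is what makes a per-minute Hive partition (hm) line up with one HDFS directory.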

5>.Hive configuration

[yinzhengjie@s101 ~]$ hive
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/soft/hbase-1.2.6/lib/phoenix-4.10.0-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
Logging initialized using configuration in file:/soft/apache-hive-2.1.1-bin/conf/hive-log4j2.properties Async: true
Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.
hive (default)> show databases;
OK
database_name
default
yinzhengjie
Time taken: 1.013 seconds, Fetched: 2 row(s)
hive (default)> use yinzhengjie;
OK
Time taken: 0.025 seconds
hive (yinzhengjie)> show tables;
OK
tab_name
student
teacher
teacherbak
teachercopy
Time taken: 0.037 seconds, Fetched: 4 row(s)
hive (yinzhengjie)> 
hive (yinzhengjie)> create table raw_logs(
                  > servertimems float ,
                  > servertimestr string,
                  > clientip string,
                  > clienttimems bigint,
                  > status int ,
                  > log string
                  > )
                  > PARTITIONED BY (ym int, day int , hm int)
                  > ROW FORMAT DELIMITED
                  > FIELDS TERMINATED BY '#'
                  > LINES TERMINATED BY '\n'
                  > STORED AS TEXTFILE;
OK
Time taken: 0.51 seconds
hive (yinzhengjie)> 
hive (yinzhengjie)> show tables;
OK
tab_name
raw_logs
student
teacher
teacherbak
teachercopy
Time taken: 0.018 seconds, Fetched: 5 row(s)
hive (yinzhengjie)>
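raw_logs is a plain-text table whose six columns are separated by '#'. The Python sketch below is a rough model of how one such line is read, assuming Hive's default delimited-text behaviour: missing trailing fields come back as NULL, non-numeric text in a numeric column comes back as NULL, and fields beyond the last declared column are ignored. One consequence worth checking: if the `log` payload itself can contain '#', everything after its first '#' is dropped. `parse_raw_line` and the sample line are hypothetical; the actual Nginx log format is not shown in this post.

```python
# Column order and types as declared in the raw_logs DDL
COLUMNS = [
    ("servertimems", float),   # Hive FLOAT is 32-bit; Python float is 64-bit
    ("servertimestr", str),
    ("clientip", str),
    ("clienttimems", int),
    ("status", int),
    ("log", str),
]


def parse_raw_line(line: str, sep: str = "#") -> dict:
    """Split one raw log line roughly the way a '#'-delimited TEXTFILE table reads it."""
    fields = line.rstrip("\n").split(sep)
    row = {}
    for i, (name, cast) in enumerate(COLUMNS):
        if i >= len(fields):
            row[name] = None      # missing trailing fields read as NULL
            continue
        try:
            row[name] = cast(fields[i])
        except ValueError:
            row[name] = None      # non-numeric text in a numeric column reads as NULL
    # Fields after the sixth are never looked at, so text after a '#' inside `log` is lost
    return row


if __name__ == "__main__":
    # Hypothetical sample line
    sample = '1533624385.123#06/Aug/2018:23:46:25 +0800#127.0.0.1#1533624384000#200#[{"a":1}]'
    print(parse_raw_line(sample))
    print(parse_raw_line("1#t#127.0.0.1#2#200#part1#part2")["log"])  # part1
```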

hive (yinzhengjie)> load data inpath '/home/yinzhengjie/data/logs/umeng/raw-log/201808/06/2346' into table raw_logs partition(ym=201808 , day=06 ,hm=2346);
Loading data to table yinzhengjie.raw_logs partition (ym=201808, day=6, hm=2346)
OK
Time taken: 1.846 seconds
hive (yinzhengjie)>
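Hive echoes the partition as day=6 because the partition columns are declared INT, so the zero-padded directory name 06 is parsed as a number. Note also that LOAD DATA INPATH without LOCAL moves (rather than copies) the HDFS files into the table's partition directory, so the source directory is left empty afterwards. The Python sketch below derives the partition spec and the load statement from such a per-minute directory; `partition_spec` and `load_statement` are hypothetical helpers for illustration.

```python
from pathlib import PurePosixPath


def partition_spec(bucket_dir: str) -> dict:
    """Map .../<yyyyMM>/<dd>/<HHmm> onto the INT partition columns (ym, day, hm)."""
    ym, day, hm = PurePosixPath(bucket_dir).parts[-3:]
    # int() drops leading zeros, matching how Hive echoes day=6 for "06"
    return {"ym": int(ym), "day": int(day), "hm": int(hm)}


def load_statement(bucket_dir: str, table: str = "raw_logs") -> str:
    """Build a LOAD DATA INPATH statement for one bucket directory."""
    spec = ", ".join(f"{k}={v}" for k, v in partition_spec(bucket_dir).items())
    return f"load data inpath '{bucket_dir}' into table {table} partition({spec});"


if __name__ == "__main__":
    print(load_statement("/home/yinzhengjie/data/logs/umeng/raw-log/201808/06/2346"))
```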

hive (yinzhengjie)> select servertimems,clientip from raw_logs limit 3;
OK
servertimems    clientip
1.53362432E9    127.0.0.1
1.53362432E9    127.0.0.1
1.53362432E9    127.0.0.1
Time taken: 0.148 seconds, Fetched: 3 row(s)
hive (yinzhengjie)>
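All three rows show the same servertimems, 1.53362432E9 (Java's float formatting of 1533624320). The magnitude suggests the column holds epoch seconds with a fractional part (for instance an Nginx `$msec`-style value such as 1533624325.123) rather than milliseconds. Hive's FLOAT is a 32-bit IEEE-754 value, and between 2^30 and 2^31 adjacent single-precision values are 2^7 = 128 apart. As a result, any timestamp within roughly ±64 s of 1533624320 collapses to that value. The Python sketch below reproduces the effect with `struct`; the sample timestamps are hypothetical. Declaring the column as double, or storing integer milliseconds in a bigint, would keep millisecond precision.

```python
import struct


def as_hive_float(x: float) -> float:
    """Round-trip x through IEEE-754 single precision (the storage type of Hive FLOAT)."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def as_hive_double(x: float) -> float:
    """Round-trip x through double precision (Hive DOUBLE); Python floats already are doubles."""
    return struct.unpack("<d", struct.pack("<d", x))[0]


if __name__ == "__main__":
    # Hypothetical server times, about a minute apart; each FLOAT value prints as 1533624320.000
    for ts in (1533624266.5, 1533624325.123, 1533624380.999):
        print(f"{ts:.3f} -> FLOAT {as_hive_float(ts):.3f}, DOUBLE {as_hive_double(ts):.3f}")
```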

[yinzhengjie@s101 download]$ cat /home/yinzhengjie/download/umeng_create_logs_ddl.sql
use yinzhengjie ;

--startuplogs
create table if not exists startuplogs
(
  appChannel string ,
  appId string ,
  appPlatform string ,
  appVersion string ,
  brand string ,
  carrier string ,
  country string ,
  createdAtMs bigint ,
  deviceId string ,
  deviceStyle string ,
  ipAddress string ,
  network string ,
  osType string ,
  province string ,
  screenSize string ,
  tenantId string
)
partitioned by (ym int ,day int , hm int)
stored as parquet ;

--eventlogs
create table if not exists eventlogs
(
  appChannel string ,
  appId string ,
  appPlatform string ,
  appVersion string ,
  createdAtMs bigint ,
  deviceId string ,
  deviceStyle string ,
  eventDurationSecs bigint ,
  eventId string ,
  osType string ,
  tenantId string
)
partitioned by (ym int ,day int , hm int)
stored as parquet ;

--errorlogs
create table if not exists errorlogs
(
  appChannel string ,
  appId string ,
  appPlatform string ,
  appVersion string ,
  createdAtMs bigint ,
  deviceId string ,
  deviceStyle string ,
  errorBrief string ,
  errorDetail string ,
  osType string ,
  tenantId string
)
partitioned by (ym int ,day int , hm int)
stored as parquet ;

--usagelogs
create table if not exists usagelogs
(
  appChannel string ,
  appId string ,
  appPlatform string ,
  appVersion string ,
  createdAtMs bigint ,
  deviceId string ,
  deviceStyle string ,
  osType string ,
  singleDownloadTraffic bigint ,
  singleUploadTraffic bigint ,
  singleUseDurationSecs bigint ,
  tenantId string
)
partitioned by (ym int ,day int , hm int)
stored as parquet ;

--pagelogs
create table if not exists pagelogs
(
  appChannel string ,
  appId string ,
  appPlatform string ,
  appVersion string ,
  createdAtMs bigint ,
  deviceId string ,
  deviceStyle string ,
  nextPage string ,
  osType string ,
  pageId string ,
  pageViewCntInSession int ,
  stayDurationSecs bigint ,
  tenantId string ,
  visitIndex int
)
partitioned by (ym int ,day int , hm int)
stored as parquet ;
[yinzhengjie@s101 download]$
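The five log tables follow one pattern: a shared set of app/device columns plus a few type-specific fields, all partitioned by (ym, day, hm) like raw_logs and stored as Parquet. The Python sketch below makes that split explicit by intersecting the column lists copied from umeng_create_logs_ddl.sql (types omitted). `split_columns` is a hypothetical helper, not part of the project.

```python
# Column lists copied from umeng_create_logs_ddl.sql (types omitted)
TABLES = {
    "startuplogs": ["appChannel", "appId", "appPlatform", "appVersion", "brand", "carrier",
                    "country", "createdAtMs", "deviceId", "deviceStyle", "ipAddress",
                    "network", "osType", "province", "screenSize", "tenantId"],
    "eventlogs": ["appChannel", "appId", "appPlatform", "appVersion", "createdAtMs",
                  "deviceId", "deviceStyle", "eventDurationSecs", "eventId", "osType",
                  "tenantId"],
    "errorlogs": ["appChannel", "appId", "appPlatform", "appVersion", "createdAtMs",
                  "deviceId", "deviceStyle", "errorBrief", "errorDetail", "osType",
                  "tenantId"],
    "usagelogs": ["appChannel", "appId", "appPlatform", "appVersion", "createdAtMs",
                  "deviceId", "deviceStyle", "osType", "singleDownloadTraffic",
                  "singleUploadTraffic", "singleUseDurationSecs", "tenantId"],
    "pagelogs": ["appChannel", "appId", "appPlatform", "appVersion", "createdAtMs",
                 "deviceId", "deviceStyle", "nextPage", "osType", "pageId",
                 "pageViewCntInSession", "stayDurationSecs", "tenantId", "visitIndex"],
}


def split_columns(tables):
    """Return (columns shared by every table, per-table specific columns)."""
    common = set.intersection(*(set(cols) for cols in tables.values()))
    # Keep the shared columns in the order they appear in the first table
    base = [c for c in next(iter(tables.values())) if c in common]
    specific = {name: [c for c in cols if c not in common] for name, cols in tables.items()}
    return base, specific


if __name__ == "__main__":
    base, specific = split_columns(TABLES)
    print("shared:", base)
    for name, cols in specific.items():
        print(f"{name}: {cols}")
```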

[yinzhengjie@s101 ~]$ hive
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/soft/hbase-1.2.6/lib/phoenix-4.10.0-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
Logging initialized using configuration in file:/soft/apache-hive-2.1.1-bin/conf/hive-log4j2.properties Async: true
Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.
hive (default)> show databases;
OK
database_name
default
yinzhengjie
Time taken: 1.159 seconds, Fetched: 2 row(s)
hive (default)> use yinzhengjie;
OK
Time taken: 0.055 seconds
hive (yinzhengjie)> 
hive (yinzhengjie)> show tables;
OK
tab_name
myusers
raw_logs
student
teacher
teacherbak
teachercopy
Time taken: 0.044 seconds, Fetched: 6 row(s)
hive (yinzhengjie)> 
hive (yinzhengjie)> source /home/yinzhengjie/download/umeng_create_logs_ddl.sql;        #Runs the HQL statements stored in a file on the Linux filesystem; the file must actually exist and should contain only HQL statements
OK
Time taken: 0.008 seconds
OK
Time taken: 0.257 seconds
OK
Time taken: 0.058 seconds
OK
Time taken: 0.073 seconds
OK
Time taken: 0.065 seconds
OK
Time taken: 0.053 seconds
hive (yinzhengjie)> show tables;
OK
tab_name
errorlogs
eventlogs
myusers
pagelogs
raw_logs
startuplogs
student
teacher
teacherbak
teachercopy
usagelogs
Time taken: 0.014 seconds, Fetched: 11 row(s)
hive (yinzhengjie)>
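The six OK lines printed by `source` correspond to the six statements in the file: one `use` plus five `create table`. The Python sketch below roughly mimics how the Hive CLI reads a sourced file: lines starting with `--` are discarded, then the remainder is split on `;`. It also shows why each `--` comment must sit on its own line. If the comment shares a line with a statement, the whole statement is thrown away. `split_hql` and the abbreviated demo script are hypothetical, and the real CLI also handles details such as escaped semicolons that this sketch ignores.

```python
from typing import List


def split_hql(script: str) -> List[str]:
    """Drop lines starting with '--', split the rest on ';', discard empty pieces."""
    kept = [line for line in script.splitlines() if not line.startswith("--")]
    pieces = "\n".join(kept).split(";")
    return [p.strip() for p in pieces if p.strip()]


if __name__ == "__main__":
    # Hypothetical, abbreviated version of umeng_create_logs_ddl.sql
    demo = "use yinzhengjie ;\n"
    for table in ("startuplogs", "eventlogs", "errorlogs", "usagelogs", "pagelogs"):
        demo += (f"--{table}\ncreate table if not exists {table} (appId string)\n"
                 "partitioned by (ym int ,day int , hm int) stored as parquet ;\n")
    print(len(split_hql(demo)))  # 6: one USE plus five CREATE TABLE statements
    # A comment on the same line swallows the statement that follows it
    print(split_hql("--startuplogs create table x (a string) ;"))  # []
```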

III.