Spark进阶之路-日志服务器的配置

作者:尹正杰

版权声明:原创作品,谢绝转载!否则将追究法律责任。

如果你还在纠结如果配置Spark独立模式(Standalone)集群,可以参考我之前分享的笔记:https://www.cnblogs.com/yinzhengjie/p/9379045.html 。然而本篇博客的重点是如何配置日志服务器,并将日志落地在hdfs上。

一.准备实验环境

1>.集群管理脚本

[yinzhengjie@s101 ~]$ more `which xcall.sh` #!/bin/bash #@author :yinzhengjie #blog:http://www.cnblogs.com/yinzhengjie #EMAIL:y1053419035@qq.com #判断用户是否传参 if [ $# -lt 1 ];then echo "请输入参数" exit fi #获取用户输入的命令 cmd=$@ for (( i=101;i<=105;i++ )) do #使终端变绿色 tput setaf 2 echo ============= s$i $cmd ============ #使终端变回原来的颜色,即白灰色 tput setaf 7 #远程执行命令 ssh s$i $cmd #判断命令是否执行成功 if [ $? == 0 ];then echo "命令执行成功" fi done [yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ more `which xrsync.sh` #!/bin/bash #@author :yinzhengjie #blog:http://www.cnblogs.com/yinzhengjie #EMAIL:y1053419035@qq.com #判断用户是否传参 if [ $# -lt 1 ];then echo "请输入参数"; exit fi #获取文件路径 file=$@ #获取子路径 filename=`basename $file` #获取父路径 dirpath=`dirname $file` #获取完整路径 cd $dirpath fullpath=`pwd -P` #同步文件到DataNode for (( i=102;i<=105;i++ )) do #使终端变绿色 tput setaf 2 echo =========== s$i %file =========== #使终端变回原来的颜色,即白灰色 tput setaf 7 #远程执行命令 rsync -lr $filename `whoami`@s$i:$fullpath #判断命令是否执行成功 if [ $? == 0 ];then echo "命令执行成功" fi done [yinzhengjie@s101 ~]$

2>.开启hdfs分布式文件系统

[yinzhengjie@s101 ~]$ xcall.sh jps ============= s101 jps ============ 18546 DFSZKFailoverController 18234 NameNode 18991 Jps 命令执行成功 ============= s102 jps ============ 12980 QuorumPeerMain 13061 DataNode 13382 Jps 13147 JournalNode 命令执行成功 ============= s103 jps ============ 13072 Jps 12836 JournalNode 12663 QuorumPeerMain 12750 DataNode 命令执行成功 ============= s104 jps ============ 12455 QuorumPeerMain 12537 DataNode 12862 Jps 12623 JournalNode 命令执行成功 ============= s105 jps ============ 12337 Jps 12151 DFSZKFailoverController 12043 NameNode 命令执行成功 [yinzhengjie@s101 ~]$

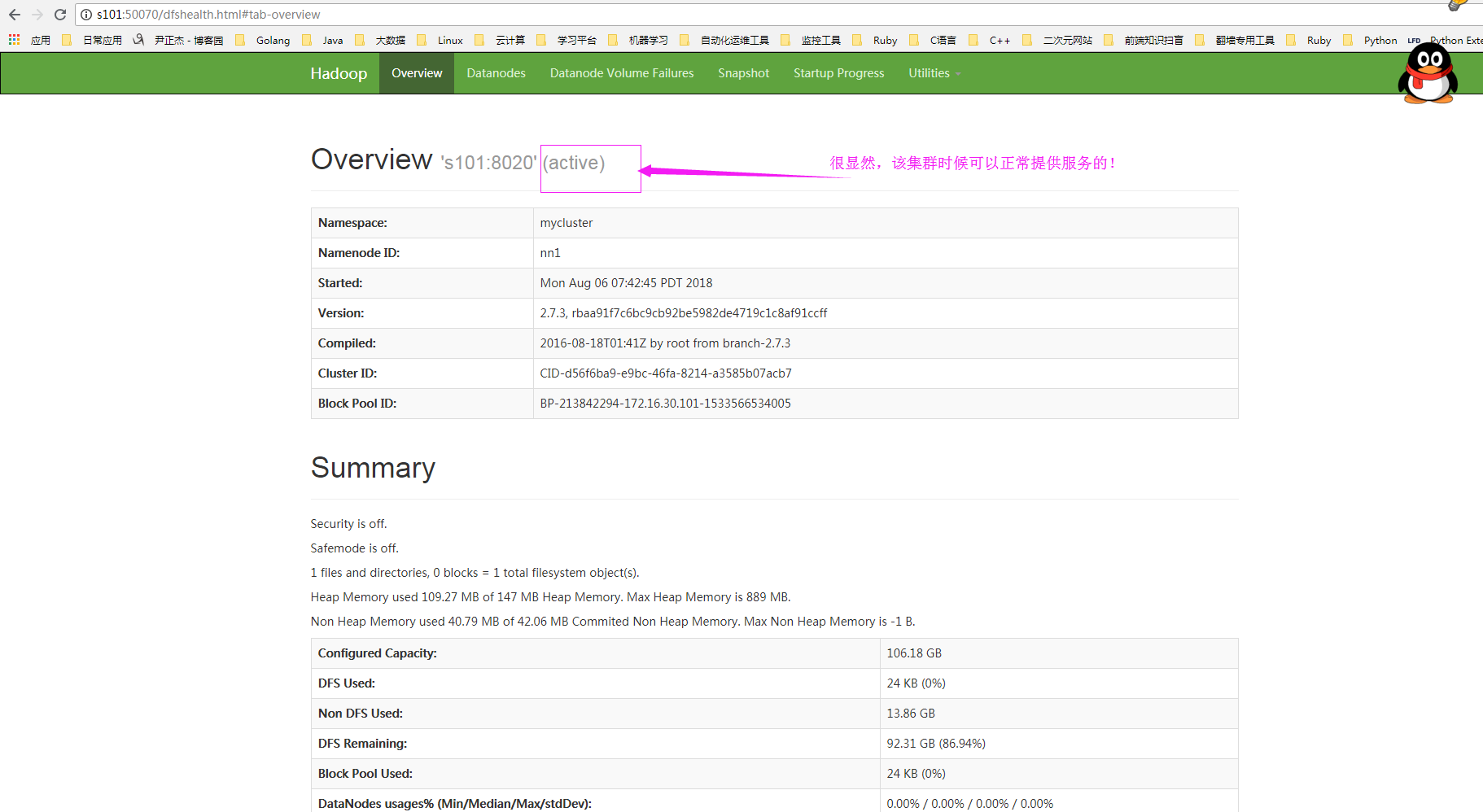

3>.检查服务是否开启成功

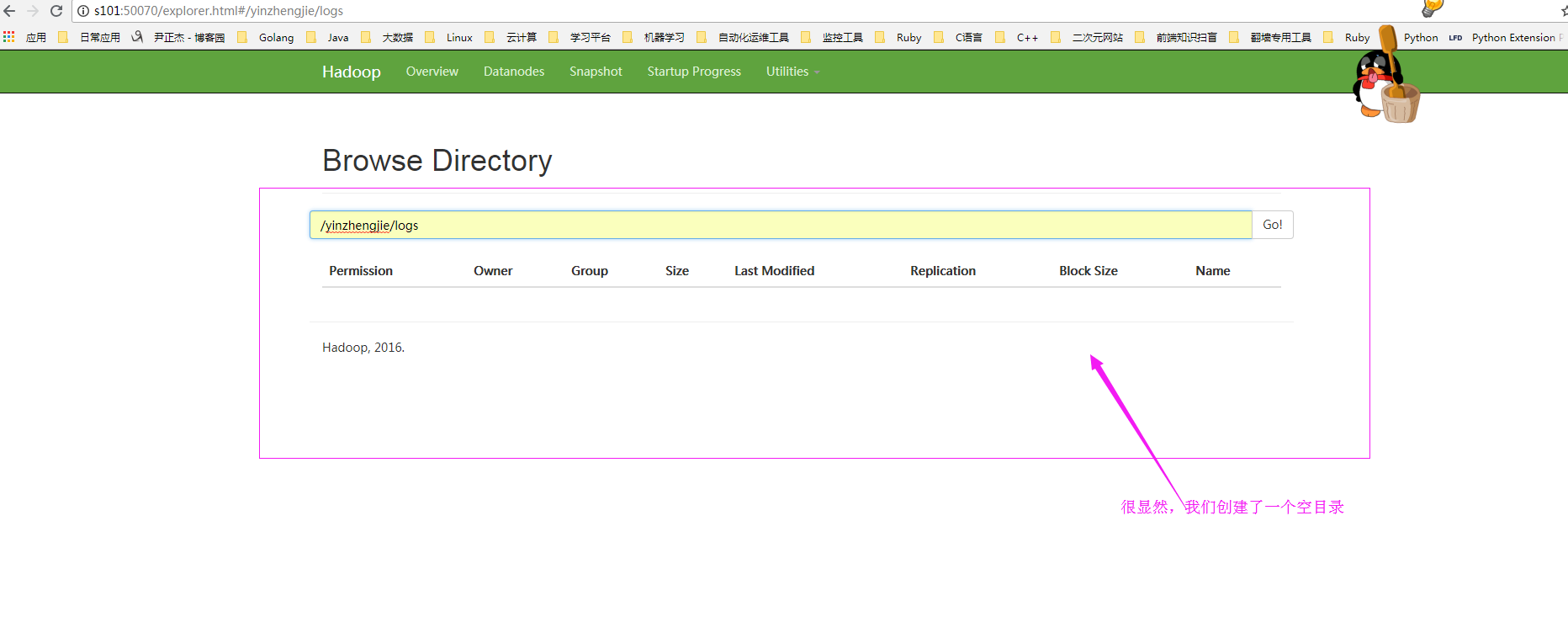

4>.在hdfs中创建指定目录用于存放日志文件

[yinzhengjie@s101 ~]$ hdfs dfs -mkdir -p /yinzhengjie/logs [yinzhengjie@s101 ~]$ [yinzhengjie@s101 ~]$ hdfs dfs -ls -R / drwxr-xr-x - yinzhengjie supergroup 0 2018-08-13 15:19 /yinzhengjie drwxr-xr-x - yinzhengjie supergroup 0 2018-08-13 15:19 /yinzhengjie/logs [yinzhengjie@s101 ~]$

二.修改配置文件

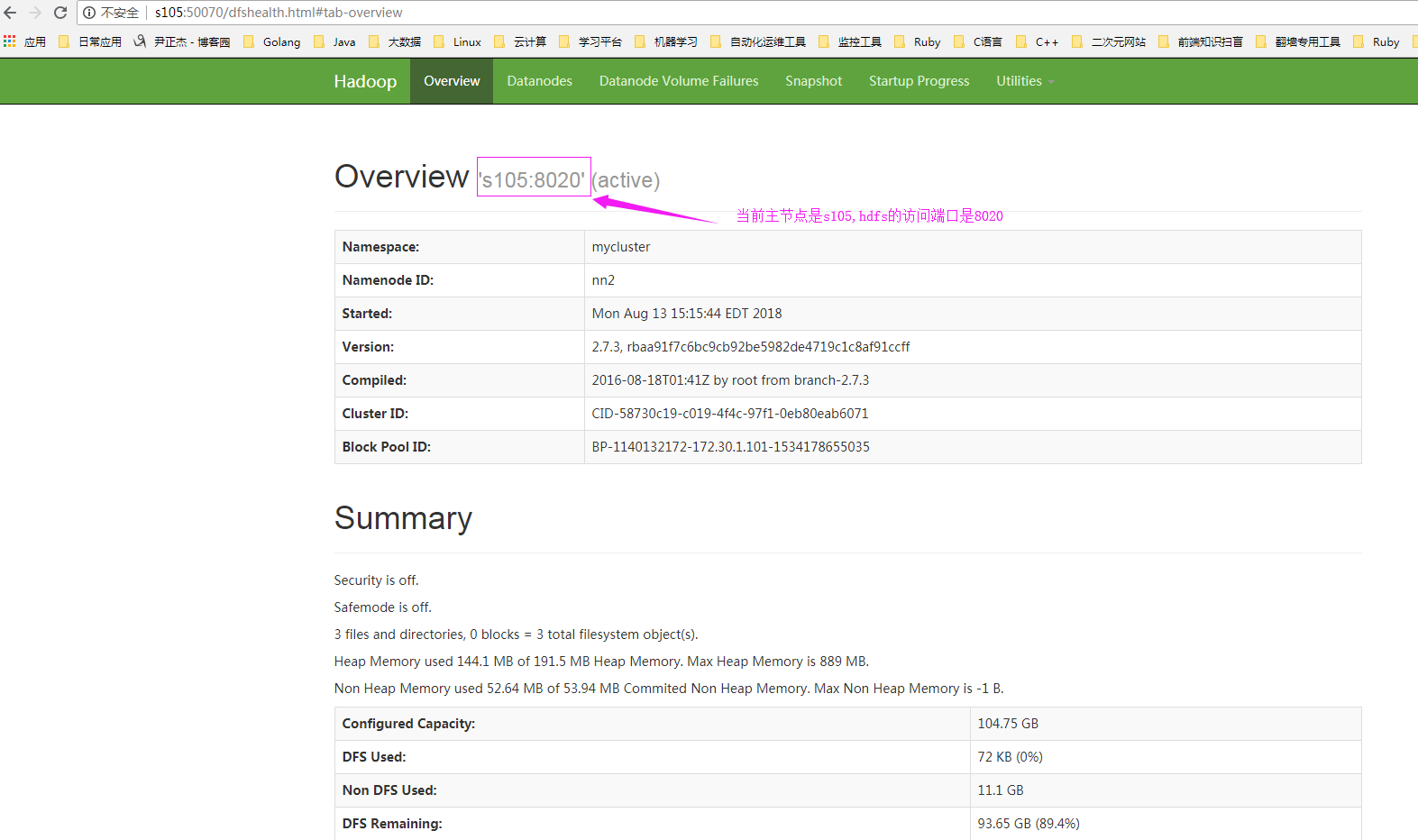

1>.查看可用的hdfs的NameNode节点

2>.开启log日志[温馨提示:HDFS上的目录需要提前存在

[yinzhengjie@s101 ~]$ cp /soft/spark/conf/spark-defaults.conf.template /soft/spark/conf/spark-defaults.conf [yinzhengjie@s101 ~]$ echo "spark.eventLog.enabled true" >> /soft/spark/conf/spark-defaults.conf [yinzhengjie@s101 ~]$ echo "spark.eventLog.dir hdfs://s105:8020/yinzhengjie/logs" >> /soft/spark/conf/spark-defaults.conf [yinzhengjie@s101 ~]$ [yinzhengjie@s101 ~]$ cat /soft/spark/conf/spark-defaults.conf | grep -v ^# | grep -v ^$ spark.eventLog.enabled true #表示开启log功能 spark.eventLog.dir hdfs://s105:8020/yinzhengjie/logs #指定log存放的位置 [yinzhengjie@s101 ~]$

2>.修改spark-env.sh文件

[yinzhengjie@s101 ~]$ cat /soft/spark/conf/spark-env.sh | grep -v ^# | grep -v ^$ export JAVA_HOME=/soft/jdk SPARK_MASTER_HOST=s101 SPARK_MASTER_PORT=7077 export SPARK_HISTORY_OPTS="-Dspark.history.ui.port=4000 -Dspark.history.retainedApplications=3 -Dspark.history.fs.logDirectory=hdfs://s105:8020/yinzhengjie/logs" [yinzhengjie@s101 ~]$ 参数描述: spark.eventLog.dir: #Application在运行过程中所有的信息均记录在该属性指定的路径下; spark.history.ui.port=4000 #调整WEBUI访问的端口号为4000 spark.history.fs.logDirectory= hdfs://s105:8020/yinzhengjie/logs #配置了该属性后,在start-history-server.sh时就无需再显式的指定路径,Spark History Server页面只展示该指定路径下的信息 spark.history.retainedApplications=3 #指定保存Application历史记录的个数,如果超过这个值,旧的应用程序信息将被删除,这个是内存中的应用数,而不是页面上显示的应用数。

3>.分发修改的spark-env.sh配置文件

[yinzhengjie@s101 ~]$ xrsync.sh /soft/spark-2.1.1-bin-hadoop2.7/conf

=========== s102 %file =========== 命令执行成功 =========== s103 %file =========== 命令执行成功 =========== s104 %file =========== 命令执行成功 [yinzhengjie@s101 ~]$

三.启动日志服务器

1>.启动Spark集群

[yinzhengjie@s101 ~]$ /soft/spark/sbin/start-all.sh starting org.apache.spark.deploy.master.Master, logging to /soft/spark/logs/spark-yinzhengjie-org.apache.spark.deploy.master.Master-1-s101.out s104: starting org.apache.spark.deploy.worker.Worker, logging to /soft/spark/logs/spark-yinzhengjie-org.apache.spark.deploy.worker.Worker-1-s104.out s102: starting org.apache.spark.deploy.worker.Worker, logging to /soft/spark/logs/spark-yinzhengjie-org.apache.spark.deploy.worker.Worker-1-s102.out s103: starting org.apache.spark.deploy.worker.Worker, logging to /soft/spark/logs/spark-yinzhengjie-org.apache.spark.deploy.worker.Worker-1-s103.out [yinzhengjie@s101 ~]$ xcall.sh jps ============= s101 jps ============ 7025 Jps 6136 NameNode 6942 Master 6447 DFSZKFailoverController 命令执行成功 ============= s102 jps ============ 2720 QuorumPeerMain 3652 DataNode 4040 Worker 3739 JournalNode 4095 Jps 命令执行成功 ============= s103 jps ============ 2720 QuorumPeerMain 4165 Jps 3734 DataNode 3821 JournalNode 4110 Worker 命令执行成功 ============= s104 jps ============ 4080 Worker 3781 JournalNode 4135 Jps 2682 QuorumPeerMain 3694 DataNode 命令执行成功 ============= s105 jps ============ 3603 NameNode 4228 Jps 3710 DFSZKFailoverController 命令执行成功 [yinzhengjie@s101 ~]$

2>.启动日志服务器

[yinzhengjie@s101 conf]$ start-history-server.sh starting org.apache.spark.deploy.history.HistoryServer, logging to /soft/spark/logs/spark-yinzhengjie-org.apache.spark.deploy.history.HistoryServer-1-s101.out [yinzhengjie@s101 conf]$

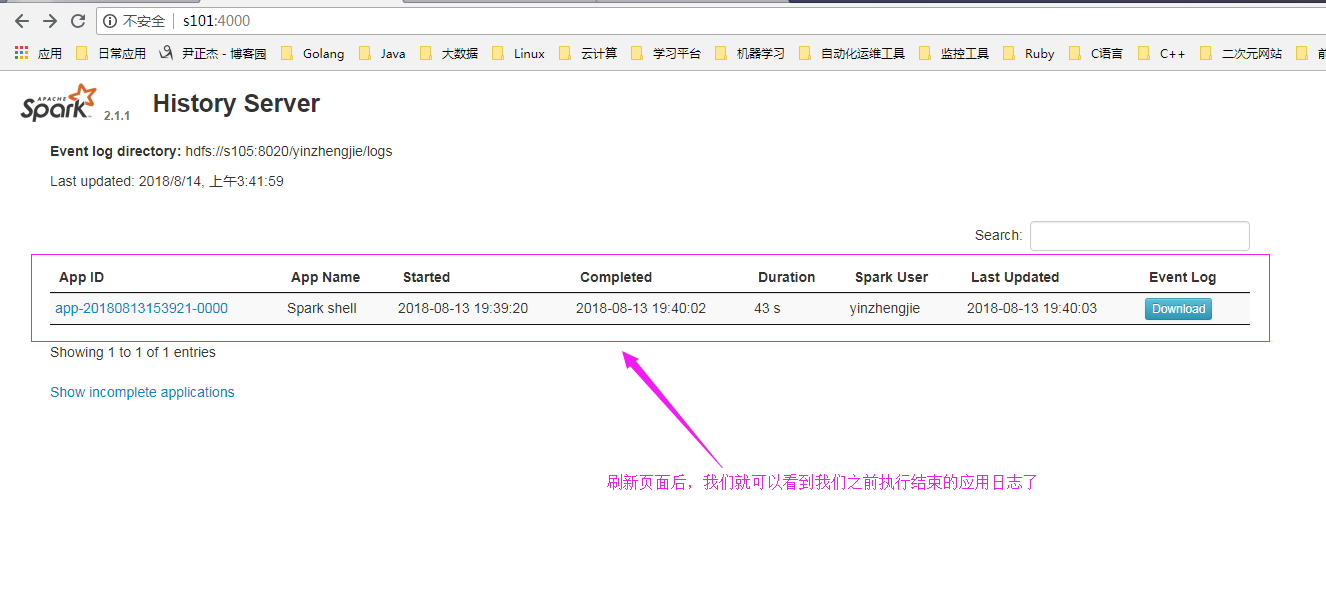

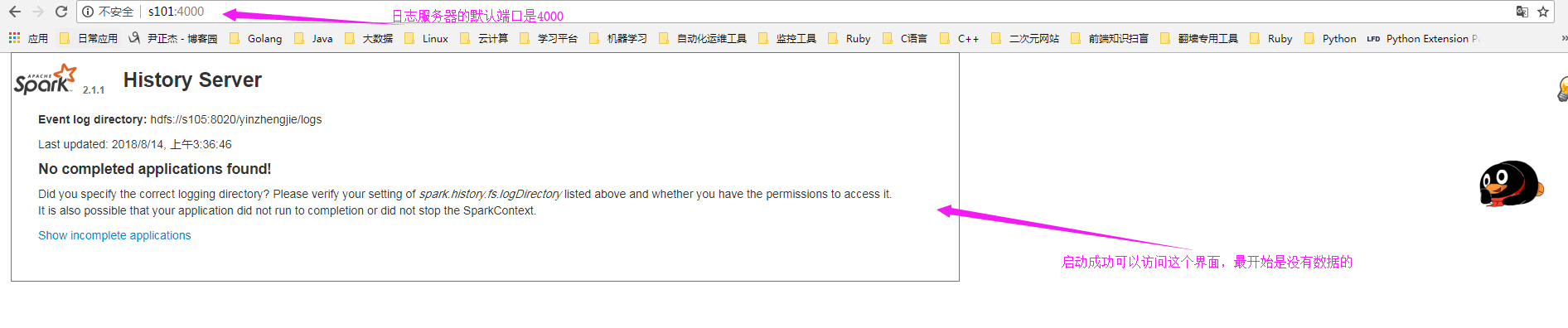

3>.通过webUI访问日志服务器

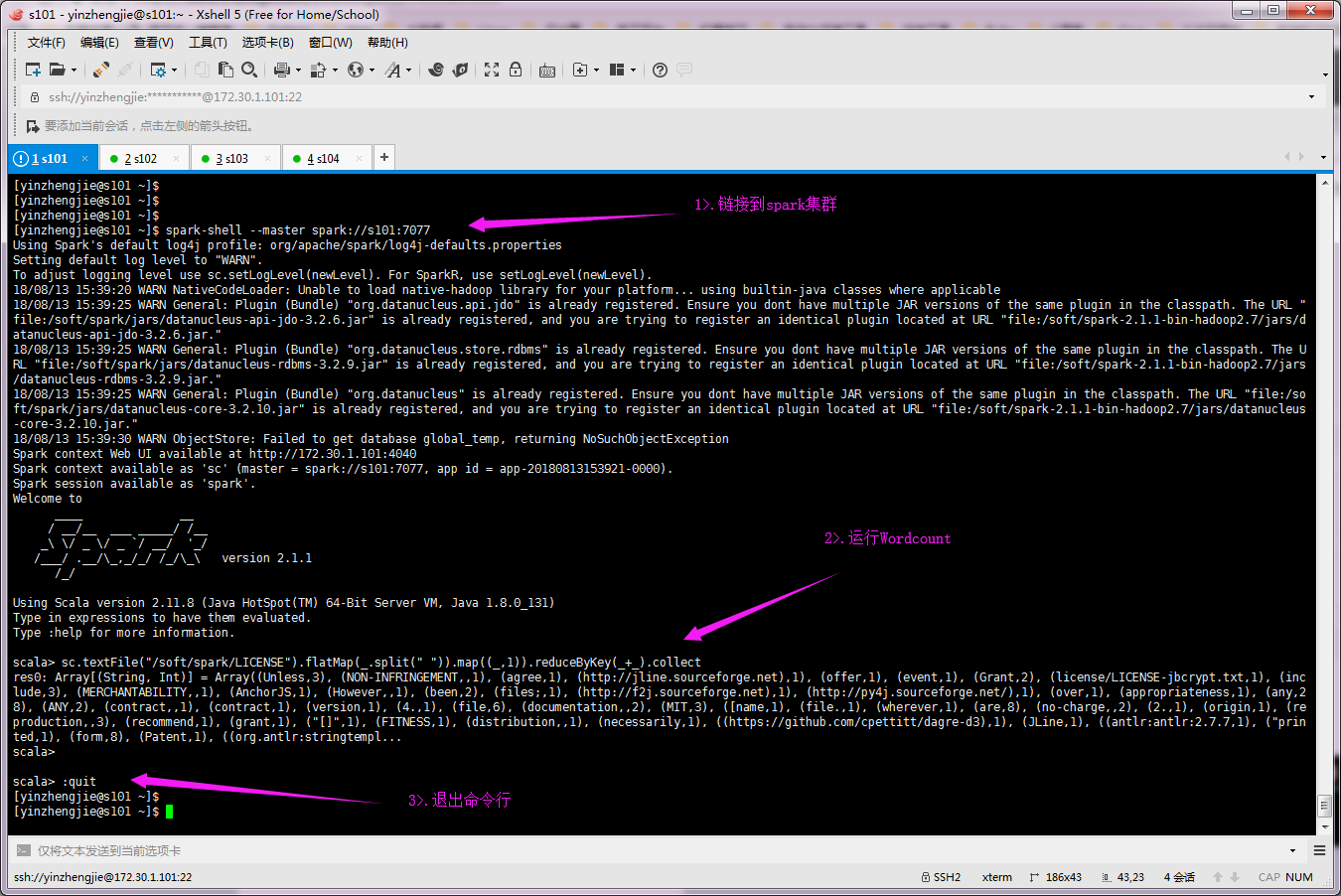

4>.运行Wordcount并退出程序([yinzhengjie@s101 ~]$ spark-shell --master spark://s101:7077)

5>.再次查看日志服务器页面