Spark集群之yarn提交作业优化案例

作者:尹正杰

版权声明:原创作品,谢绝转载!否则将追究法律责任。

一.启动Hadoop集群

1>.自定义批量管理脚本

[yinzhengjie@s101 ~]$ more `which xzk.sh` #!/bin/bash #@author :yinzhengjie #blog:http://www.cnblogs.com/yinzhengjie #EMAIL:y1053419035@qq.com #判断用户是否传参 if [ $# -ne 1 ];then echo "无效参数,用法为: $0 {start|stop|restart|status}" exit fi #获取用户输入的命令 cmd=$1 #定义函数功能 function zookeeperManger(){ case $cmd in start) echo "启动服务" remoteExecution start ;; stop) echo "停止服务" remoteExecution stop ;; restart) echo "重启服务" remoteExecution restart ;; status) echo "查看状态" remoteExecution status ;; *) echo "无效参数,用法为: $0 {start|stop|restart|status}" ;; esac } #定义执行的命令 function remoteExecution(){ for (( i=102 ; i<=104 ; i++ )) ; do tput setaf 2 echo ========== s$i zkServer.sh $1 ================ tput setaf 9 ssh s$i "source /etc/profile ; zkServer.sh $1" done } #调用函数 zookeeperManger [yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ more `which xcall.sh` #!/bin/bash #@author :yinzhengjie #blog:http://www.cnblogs.com/yinzhengjie #EMAIL:y1053419035@qq.com #判断用户是否传参 if [ $# -lt 1 ];then echo "请输入参数" exit fi #获取用户输入的命令 cmd=$@ for (( i=101;i<=105;i++ )) do #使终端变绿色 tput setaf 2 echo ============= s$i $cmd ============ #使终端变回原来的颜色,即白灰色 tput setaf 7 #远程执行命令 ssh s$i $cmd #判断命令是否执行成功 if [ $? == 0 ];then echo "命令执行成功" fi done [yinzhengjie@s101 ~]$

2>.启动zookeeper集群

[yinzhengjie@s101 ~]$ xzk.sh start 启动服务 ========== s102 zkServer.sh start ================ ZooKeeper JMX enabled by default Using config: /soft/zk/bin/../conf/zoo.cfg Starting zookeeper ... STARTED ========== s103 zkServer.sh start ================ ZooKeeper JMX enabled by default Using config: /soft/zk/bin/../conf/zoo.cfg Starting zookeeper ... STARTED ========== s104 zkServer.sh start ================ ZooKeeper JMX enabled by default Using config: /soft/zk/bin/../conf/zoo.cfg Starting zookeeper ... STARTED [yinzhengjie@s101 ~]$

3>.启动hdfs分布式文件系统

[yinzhengjie@s101 ~]$ start-dfs.sh SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] Starting namenodes on [s101 s105] s101: starting namenode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-namenode-s101.out s105: starting namenode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-namenode-s105.out s102: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s102.out s103: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s103.out s104: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s104.out Starting journal nodes [s102 s103 s104] s102: starting journalnode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-journalnode-s102.out s104: starting journalnode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-journalnode-s104.out s103: starting journalnode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-journalnode-s103.out SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] Starting ZK Failover Controllers on NN hosts [s101 s105] s101: starting zkfc, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-zkfc-s101.out s105: starting zkfc, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-zkfc-s105.out [yinzhengjie@s101 ~]$

4>.启动yarn集群

[yinzhengjie@s101 ~]$ start-yarn.sh starting yarn daemons s101: starting resourcemanager, logging to /soft/hadoop-2.7.3/logs/yarn-yinzhengjie-resourcemanager-s101.out s105: starting resourcemanager, logging to /soft/hadoop-2.7.3/logs/yarn-yinzhengjie-resourcemanager-s105.out s102: starting nodemanager, logging to /soft/hadoop-2.7.3/logs/yarn-yinzhengjie-nodemanager-s102.out s104: starting nodemanager, logging to /soft/hadoop-2.7.3/logs/yarn-yinzhengjie-nodemanager-s104.out s103: starting nodemanager, logging to /soft/hadoop-2.7.3/logs/yarn-yinzhengjie-nodemanager-s103.out [yinzhengjie@s101 ~]$

5>.查看集群是否启动成功

[yinzhengjie@s101 ~]$ xcall.sh jps ============= s101 jps ============ 3065 ResourceManager 2602 NameNode 2907 DFSZKFailoverController 3374 Jps 命令执行成功 ============= s102 jps ============ 2356 JournalNode 2277 DataNode 2202 QuorumPeerMain 2476 NodeManager 2589 Jps 命令执行成功 ============= s103 jps ============ 2595 Jps 2197 QuorumPeerMain 2358 JournalNode 2279 DataNode 2478 NodeManager 命令执行成功 ============= s104 jps ============ 2272 DataNode 2197 QuorumPeerMain 2469 NodeManager 2583 Jps 2346 JournalNode 命令执行成功 ============= s105 jps ============ 2640 Jps 2258 NameNode 2358 DFSZKFailoverController 命令执行成功 [yinzhengjie@s101 ~]$

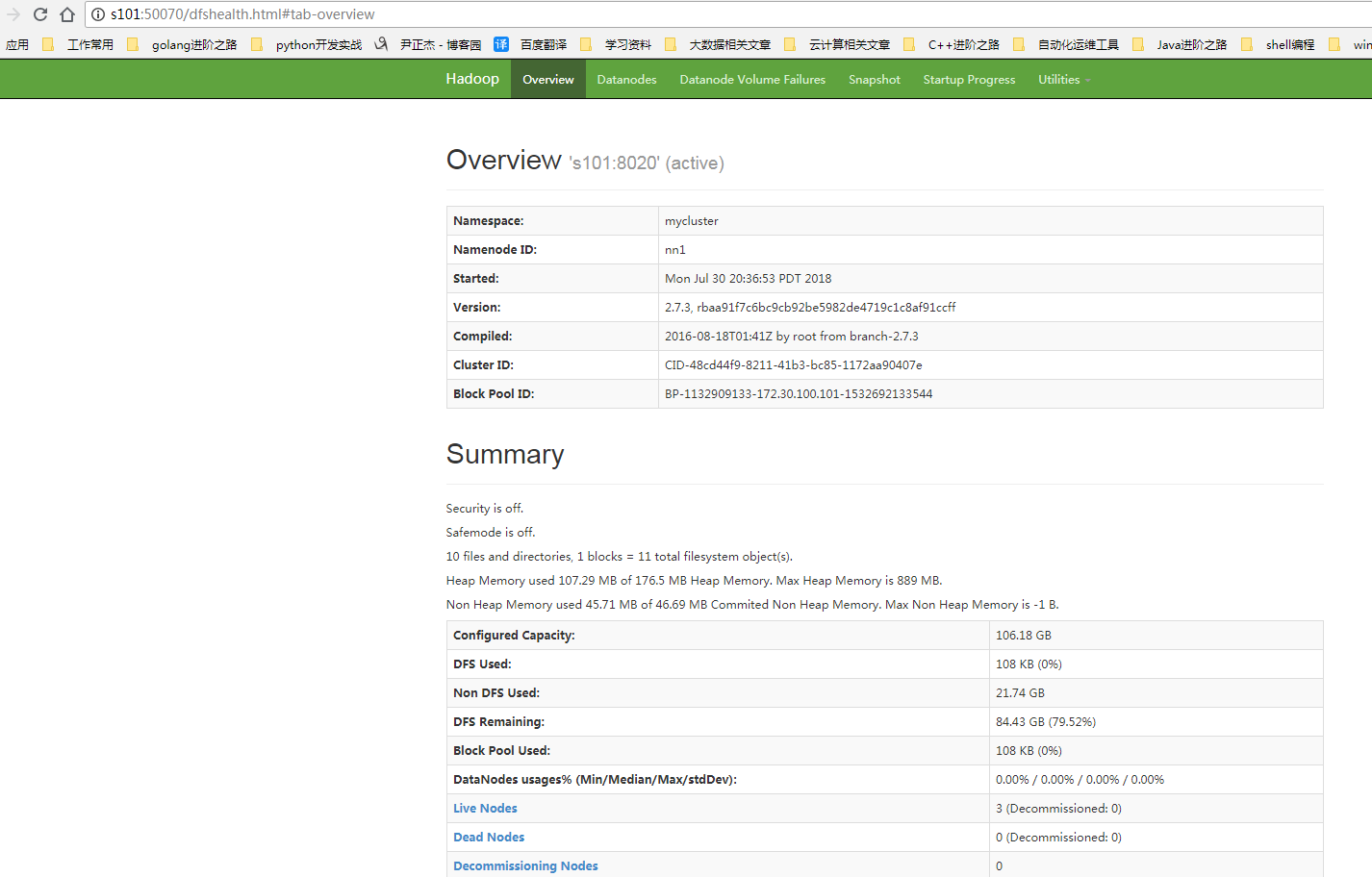

检查WebUI是否正常打开:

二.Spark集群的运行模式

1>.local

本地模式,不需要启动任何进程.使用jvm多个线程模拟worker。

2>.standalone

独立模式,master + worker,启动方式:spark-submit --master spark://s101:7077

3>.yarn

不需要启动任务spark进程,不需要安装spark集群,启动方式如:spark-submit --master yarn | yarn-client | yarn-cluster

1.yarn-client driver运行在client,appmaster只负责请求资源列表。 2.yarn-cluster appmaster除了请求资源列表之外,还要运行driver程序。

三.使用yarn操作步骤

我们需要停止spark集群,只需要安装Spark软件并且启动hadoop集群即可。

四.优化yarn集群配置案例