Hadoop Basics - Image Files (fsimage) and Edit Logs (edits)

Author: 尹正杰 (Yin Zhengjie)

Copyright notice: this is an original work. Reposting is not permitted; violators will be held legally responsible.

I. Viewing the contents of an image file (e.g. fsimage_0000000000000000767)

1>. What the image file is for

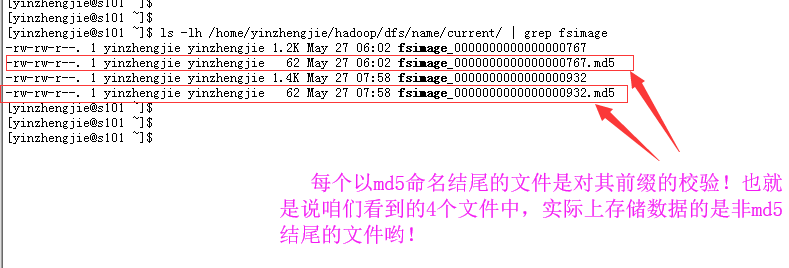

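The XML file above shows that the image file stores the directory structure (you can think of it as a tree) and file attributes such as owner, permissions, replication and block list. Each image file also has an md5 checksum file, which is used to detect whether the image file has been modified or corrupted. The fsimage file is the NameNode's image of the file-system metadata and is generally called a checkpoint. It is a snapshot of the whole namespace as of the transaction id in its file name (767 for fsimage_0000000000000000767), and the NameNode loads it into memory when it starts.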
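The ".md5" file is a single line in the same format that "md5sum" writes: "<32-digit hex digest> *<image file name>". That also explains why it is exactly 62 bytes in the listing below: 32 hex digits, a space and an asterisk, the 27-character file name, and a newline. Below is a minimal Python sketch of checking an image against it. It assumes that one-line format, and "verify_fsimage_md5" is a hypothetical helper, not a Hadoop tool.

```python
import hashlib
import re
from pathlib import Path

# One line: 32 hex digits, a space, an optional '*' (binary-mode marker), the file name.
MD5_LINE = re.compile(r"^([0-9a-fA-F]{32}) \*?(.+)$")

def verify_fsimage_md5(image_path):
    """Return True if the image matches the digest stored in '<image>.md5'."""
    image = Path(image_path)
    md5_file = image.with_name(image.name + ".md5")
    line = md5_file.read_text().strip()
    match = MD5_LINE.match(line)
    if match is None:
        raise ValueError(f"unexpected .md5 format: {line!r}")
    expected, name = match.group(1).lower(), match.group(2)
    if name != image.name:
        raise ValueError(f".md5 refers to {name}, not {image.name}")
    digest = hashlib.md5()
    with image.open("rb") as f:
        # Read in 1 MiB chunks so a large image does not have to fit in memory.
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected
```

On the NameNode host you could call it as verify_fsimage_md5("/home/yinzhengjie/hadoop/dfs/name/current/fsimage_0000000000000000767"). Because the format is the md5sum one, running "md5sum -c fsimage_0000000000000000767.md5" inside that directory should perform the same check.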

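2>. Use the "hdfs oiv" command (Offline Image Viewer) to convert the image file to XML, as follows. The "sz" at the end only downloads the resulting XML to the local machine over ZMODEM so it can be opened there: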

```
[yinzhengjie@s101 ~]$ ll
total 0
drwxrwxr-x. 4 yinzhengjie yinzhengjie 35 May 25 19:08 hadoop
drwxrwxr-x. 2 yinzhengjie yinzhengjie 96 May 25 22:05 shell
[yinzhengjie@s101 ~]$
[yinzhengjie@s101 ~]$ ls -lh /home/yinzhengjie/hadoop/dfs/name/current/ | grep fsimage
-rw-rw-r--. 1 yinzhengjie yinzhengjie 1.2K May 27 06:02 fsimage_0000000000000000767
-rw-rw-r--. 1 yinzhengjie yinzhengjie 62 May 27 06:02 fsimage_0000000000000000767.md5
-rw-rw-r--. 1 yinzhengjie yinzhengjie 1.4K May 27 07:58 fsimage_0000000000000000932
-rw-rw-r--. 1 yinzhengjie yinzhengjie 62 May 27 07:58 fsimage_0000000000000000932.md5
[yinzhengjie@s101 ~]$ hdfs oiv -i ./hadoop/dfs/name/current/fsimage_0000000000000000767 -o yinzhengjie.xml -p XML
[yinzhengjie@s101 ~]$ ll
total 8
drwxrwxr-x. 4 yinzhengjie yinzhengjie 35 May 25 19:08 hadoop
drwxrwxr-x. 2 yinzhengjie yinzhengjie 96 May 25 22:05 shell
-rw-rw-r--. 1 yinzhengjie yinzhengjie 4934 May 27 08:10 yinzhengjie.xml
[yinzhengjie@s101 ~]$
[yinzhengjie@s101 ~]$
[yinzhengjie@s101 ~]$ sz yinzhengjie.xml
100% 4 KB 4 KB/s 00:00:01 0 Errors
[yinzhengjie@s101 ~]$
```


```
[yinzhengjie@s101 ~]$ cat yinzhengjie.xml
<?xml version="1.0"?>
<fsimage><NameSection>
<genstampV1>1000</genstampV1><genstampV2>1019</genstampV2><genstampV1Limit>0</genstampV1Limit><lastAllocatedBlockId>1073741839</lastAllocatedBlockId><txid>767</txid></NameSection>
<INodeSection><lastInodeId>16414</lastInodeId><inode><id>16385</id><type>DIRECTORY</type><name></name><mtime>1527331031268</mtime><permission>yinzhengjie:supergroup:rwxr-xr-x</permission><nsquota>9223372036854775807</nsquota><dsquota>-1</dsquota></inode>
<inode><id>16387</id><type>FILE</type><name>xrsync.sh</name><replication>3</replication><mtime>1527308253459</mtime><atime>1527330550802</atime><perferredBlockSize>134217728</perferredBlockSize><permission>yinzhengjie:supergroup:rw-r--r--</permission><blocks><block><id>1073741826</id><genstamp>1002</genstamp><numBytes>700</numBytes></block>
</blocks>
</inode>
<inode><id>16389</id><type>FILE</type><name>hadoop-2.7.3.tar.gz</name><replication>3</replication><mtime>1527310784699</mtime><atime>1527310775186</atime><perferredBlockSize>134217728</perferredBlockSize><permission>yinzhengjie:supergroup:rw-r--r--</permission><blocks><block><id>1073741827</id><genstamp>1003</genstamp><numBytes>134217728</numBytes></block>
<block><id>1073741828</id><genstamp>1004</genstamp><numBytes>79874467</numBytes></block>
</blocks>
</inode>
<inode><id>16402</id><type>DIRECTORY</type><name>shell</name><mtime>1527331084147</mtime><permission>yinzhengjie:supergroup:rwxr-xr-x</permission><nsquota>-1</nsquota><dsquota>-1</dsquota></inode>
<inode><id>16403</id><type>DIRECTORY</type><name>awk</name><mtime>1527332686407</mtime><permission>yinzhengjie:supergroup:rwxr-xr-x</permission><nsquota>-1</nsquota><dsquota>-1</dsquota></inode>
<inode><id>16404</id><type>DIRECTORY</type><name>sed</name><mtime>1527332624472</mtime><permission>yinzhengjie:supergroup:rwxr-xr-x</permission><nsquota>-1</nsquota><dsquota>-1</dsquota></inode>
<inode><id>16405</id><type>DIRECTORY</type><name>grep</name><mtime>1527332592029</mtime><permission>yinzhengjie:supergroup:rwxr-xr-x</permission><nsquota>-1</nsquota><dsquota>-1</dsquota></inode>
<inode><id>16406</id><type>FILE</type><name>yinzhengjie.sh</name><replication>3</replication><mtime>1527331084161</mtime><atime>1527331084147</atime><perferredBlockSize>134217728</perferredBlockSize><permission>yinzhengjie:supergroup:rw-r--r--</permission></inode>
<inode><id>16409</id><type>FILE</type><name>1.txt</name><replication>3</replication><mtime>1527332587208</mtime><atime>1527332587194</atime><perferredBlockSize>134217728</perferredBlockSize><permission>yinzhengjie:supergroup:rw-r--r--</permission></inode>
<inode><id>16410</id><type>FILE</type><name>2.txt</name><replication>3</replication><mtime>1527332592042</mtime><atime>1527332592029</atime><perferredBlockSize>134217728</perferredBlockSize><permission>yinzhengjie:supergroup:rw-r--r--</permission></inode>
<inode><id>16411</id><type>FILE</type><name>zabbix.sql</name><replication>3</replication><mtime>1527332604168</mtime><atime>1527332604154</atime><perferredBlockSize>134217728</perferredBlockSize><permission>yinzhengjie:supergroup:rw-r--r--</permission></inode>
<inode><id>16412</id><type>FILE</type><name>nagios.sh</name><replication>3</replication><mtime>1527332624486</mtime><atime>1527332624472</atime><perferredBlockSize>134217728</perferredBlockSize><permission>yinzhengjie:supergroup:rw-r--r--</permission></inode>
<inode><id>16413</id><type>FILE</type><name>keepalive.sh</name><replication>3</replication><mtime>1527332677350</mtime><atime>1527332677335</atime><perferredBlockSize>134217728</perferredBlockSize><permission>yinzhengjie:supergroup:rw-r--r--</permission></inode>
<inode><id>16414</id><type>FILE</type><name>nginx.conf</name><replication>3</replication><mtime>1527332686421</mtime><atime>1527332686407</atime><perferredBlockSize>134217728</perferredBlockSize><permission>yinzhengjie:supergroup:rw-r--r--</permission></inode>
</INodeSection>
<INodeReferenceSection></INodeReferenceSection><SnapshotSection><snapshotCounter>0</snapshotCounter></SnapshotSection>
<INodeDirectorySection><directory><parent>16385</parent><inode>16389</inode><inode>16402</inode><inode>16387</inode></directory>
<directory><parent>16402</parent><inode>16403</inode><inode>16405</inode><inode>16404</inode><inode>16406</inode></directory>
<directory><parent>16403</parent><inode>16413</inode><inode>16414</inode></directory>
<directory><parent>16404</parent><inode>16412</inode><inode>16411</inode></directory>
<directory><parent>16405</parent><inode>16409</inode><inode>16410</inode></directory>
</INodeDirectorySection>
<FileUnderConstructionSection></FileUnderConstructionSection>
<SnapshotDiffSection><diff><inodeid>16385</inodeid></diff></SnapshotDiffSection>
<SecretManagerSection><currentId>0</currentId><tokenSequenceNumber>0</tokenSequenceNumber></SecretManagerSection><CacheManagerSection><nextDirectiveId>1</nextDirectiveId></CacheManagerSection>
</fsimage>
[yinzhengjie@s101 ~]$
```
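The XML makes the tree structure explicit. "INodeSection" lists every inode with its id, and "INodeDirectorySection" records which child ids belong to each parent directory. The Python below is a minimal sketch that rebuilds full paths from a file like "yinzhengjie.xml" and sums each file's block sizes; the function name "list_paths" is hypothetical, not part of Hadoop. For "/hadoop-2.7.3.tar.gz" the two blocks add up to 134217728 + 79874467 = 214092195 bytes. That is the same size "hdfs dfs -ls /" reports for the file later in this article.

```python
import xml.etree.ElementTree as ET

def list_paths(xml_text):
    """Map each inode's full path to (type, size in bytes) from `hdfs oiv -p XML` output."""
    root = ET.fromstring(xml_text)

    # inode id -> (name, type, total bytes of its blocks)
    inodes = {}
    for inode in root.find("INodeSection").findall("inode"):
        size = sum(int(b.findtext("numBytes")) for b in inode.findall("blocks/block"))
        inodes[inode.findtext("id")] = (inode.findtext("name") or "", inode.findtext("type"), size)

    # child id -> parent id, taken from INodeDirectorySection
    parent_of = {}
    for directory in root.find("INodeDirectorySection").findall("directory"):
        parent = directory.findtext("parent")
        for child in directory.findall("inode"):
            parent_of[child.text] = parent

    def full_path(inode_id):
        parts = []
        while inode_id in parent_of:  # the root inode has no parent entry
            parts.append(inodes[inode_id][0])
            inode_id = parent_of[inode_id]
        return "/" + "/".join(reversed(parts))

    return {full_path(i): (kind, size) for i, (_, kind, size) in inodes.items()}
```

For example, list_paths(open("yinzhengjie.xml").read()) would return entries such as "/shell/awk/nginx.conf" -> ("FILE", 0).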

II. Viewing the contents of an edit log file

1>. What the edit log is for

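As the name suggests, the edit log records modifications to files and directories. Deleting a directory, modifying a file and similar operations are all recorded in it. Edit log files are named "edits_*" (you will see such files below):

- A finalized segment is called "edits_<first txid>-<last txid>".
- The segment currently being written is called "edits_inprogress_<first txid>".

After the NameNode starts, these files record the sequence of changes made to the file system.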
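The naming convention is enough to work out which segments matter for a given image. Only segments containing transactions newer than the image's txid need to be replayed on top of "fsimage_<txid>". A small Python sketch, assuming only that naming convention; "parse_segment" and "segments_after" are hypothetical helper names:

```python
import re

# Transaction ids in these names are zero-padded (19 digits in the listings here);
# \d+ accepts any width.
FINALIZED = re.compile(r"^edits_(\d+)-(\d+)$")
IN_PROGRESS = re.compile(r"^edits_inprogress_(\d+)$")

def parse_segment(name):
    """Return (first_txid, last_txid), with last_txid None if still in progress.

    Returns None for files that are not edit log segments (fsimage, .md5, ...).
    """
    m = FINALIZED.match(name)
    if m:
        return int(m.group(1)), int(m.group(2))
    m = IN_PROGRESS.match(name)
    if m:
        return int(m.group(1)), None
    return None

def segments_after(names, image_txid):
    """Segments that hold transactions newer than fsimage_<image_txid>, oldest first."""
    result = []
    for name in names:
        seg = parse_segment(name)
        if seg is None:
            continue
        first, last = seg
        # The in-progress segment is always newer; a finalized one only matters
        # if it ends after the image's txid.
        if last is None or last > image_txid:
            result.append((first, name))
    return [name for _, name in sorted(result)]
```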

2>. Use the "hdfs oev" command (Offline Edits Viewer) to inspect a Hadoop edit log file, as follows:

```
[yinzhengjie@s101 ~]$ ls -lh /home/yinzhengjie/hadoop/dfs/name/current/ | grep edits | tail -5
-rw-rw-r--. 1 yinzhengjie yinzhengjie 42 May 27 08:33 edits_0000000000000001001-0000000000000001002
-rw-rw-r--. 1 yinzhengjie yinzhengjie 42 May 27 08:34 edits_0000000000000001003-0000000000000001004
-rw-rw-r--. 1 yinzhengjie yinzhengjie 42 May 27 08:35 edits_0000000000000001005-0000000000000001006
-rw-rw-r--. 1 yinzhengjie yinzhengjie 42 May 27 08:36 edits_0000000000000001007-0000000000000001008
-rw-rw-r--. 1 yinzhengjie yinzhengjie 1.0M May 27 08:36 edits_inprogress_0000000000000001009
[yinzhengjie@s101 ~]$
[yinzhengjie@s101 ~]$ ll
total 8
drwxrwxr-x. 4 yinzhengjie yinzhengjie 35 May 25 19:08 hadoop
drwxrwxr-x. 2 yinzhengjie yinzhengjie 96 May 25 22:05 shell
-rw-rw-r--. 1 yinzhengjie yinzhengjie 4934 May 27 08:10 yinzhengjie.xml
[yinzhengjie@s101 ~]$
[yinzhengjie@s101 ~]$ hdfs oev -i ./hadoop/dfs/name/current/edits_0000000000000001007-0000000000000001008 -o edits.xml -p XML
[yinzhengjie@s101 ~]$ ll
total 12
-rw-rw-r--. 1 yinzhengjie yinzhengjie 315 May 27 08:39 edits.xml
drwxrwxr-x. 4 yinzhengjie yinzhengjie 35 May 25 19:08 hadoop
drwxrwxr-x. 2 yinzhengjie yinzhengjie 96 May 25 22:05 shell
-rw-rw-r--. 1 yinzhengjie yinzhengjie 4934 May 27 08:10 yinzhengjie.xml
[yinzhengjie@s101 ~]$
[yinzhengjie@s101 ~]$ cat edits.xml
<?xml version="1.0" encoding="UTF-8"?>
<EDITS>
  <EDITS_VERSION>-63</EDITS_VERSION>
  <RECORD>
    <OPCODE>OP_START_LOG_SEGMENT</OPCODE>
    <DATA>
      <TXID>1007</TXID>
    </DATA>
  </RECORD>
  <RECORD>
    <OPCODE>OP_END_LOG_SEGMENT</OPCODE>
    <DATA>
      <TXID>1008</TXID>
    </DATA>
  </RECORD>
</EDITS>
[yinzhengjie@s101 ~]$
```
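The XML written by "hdfs oev -p XML" is a flat list of RECORD elements, each with an OPCODE and a DATA/TXID. The sketch below pulls them out with the Python standard library; "list_records" is a hypothetical helper name. Run on the "edits.xml" above, it returns only the segment's start and end markers (txids 1007 and 1008). In other words, that segment contains no namespace changes, only the two bookkeeping records.

```python
import xml.etree.ElementTree as ET

def list_records(xml_data):
    """Return [(txid, opcode), ...] for every RECORD in `hdfs oev -p XML` output."""
    if isinstance(xml_data, str):
        # Parse as bytes so the encoding="UTF-8" declaration is honoured as written.
        xml_data = xml_data.encode("utf-8")
    root = ET.fromstring(xml_data)
    return [(int(rec.findtext("DATA/TXID")), rec.findtext("OPCODE"))
            for rec in root.findall("RECORD")]
```

Reading the file as bytes works too, e.g. list_records(open("edits.xml", "rb").read()).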

3>. Viewing the edit log file currently in use

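Which file is the edit log currently in use? You have probably guessed already: it is the file whose name contains "edits_inprogress_*". Its 1.0M size in the listings is space the NameNode preallocates for the segment, not 1 MB of records. Converting it below yields a single OP_START_LOG_SEGMENT record.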

```
[yinzhengjie@s101 ~]$ ll
total 0
drwxrwxr-x. 4 yinzhengjie yinzhengjie 35 May 25 19:08 hadoop
drwxrwxr-x. 2 yinzhengjie yinzhengjie 96 May 25 22:05 shell
[yinzhengjie@s101 ~]$ ls -lh /home/yinzhengjie/hadoop/dfs/name/current/ | grep edits | tail -5
-rw-rw-r--. 1 yinzhengjie yinzhengjie 42 May 27 08:59 edits_0000000000000001053-0000000000000001054
-rw-rw-r--. 1 yinzhengjie yinzhengjie 42 May 27 09:00 edits_0000000000000001055-0000000000000001056
-rw-rw-r--. 1 yinzhengjie yinzhengjie 42 May 27 09:01 edits_0000000000000001057-0000000000000001058
-rw-rw-r--. 1 yinzhengjie yinzhengjie 912 May 27 09:02 edits_0000000000000001059-0000000000000001071
-rw-rw-r--. 1 yinzhengjie yinzhengjie 1.0M May 27 09:02 edits_inprogress_0000000000000001072
[yinzhengjie@s101 ~]$ hdfs oev -i ./hadoop/dfs/name/current/edits_inprogress_0000000000000001072 -o yinzhengjie.xml -p XML
[yinzhengjie@s101 ~]$ ll
total 4
drwxrwxr-x. 4 yinzhengjie yinzhengjie 35 May 25 19:08 hadoop
drwxrwxr-x. 2 yinzhengjie yinzhengjie 96 May 25 22:05 shell
-rw-rw-r--. 1 yinzhengjie yinzhengjie 205 May 27 09:03 yinzhengjie.xml
[yinzhengjie@s101 ~]$ more yinzhengjie.xml
<?xml version="1.0" encoding="UTF-8"?>
<EDITS>
  <EDITS_VERSION>-63</EDITS_VERSION>
  <RECORD>
    <OPCODE>OP_START_LOG_SEGMENT</OPCODE>
    <DATA>
      <TXID>1072</TXID>
    </DATA>
  </RECORD>
</EDITS>
[yinzhengjie@s101 ~]$
```

III. Rolling the edit log manually

1>. Rolling the edit log with the "hdfs dfsadmin" command

```
[yinzhengjie@s101 ~]$ ls -lh /home/yinzhengjie/hadoop/dfs/name/current/ | grep edits | tail -5
-rw-rw-r--. 1 yinzhengjie yinzhengjie 42 May 27 09:12 edits_0000000000000001090-0000000000000001091
-rw-rw-r--. 1 yinzhengjie yinzhengjie 42 May 27 09:13 edits_0000000000000001092-0000000000000001093
-rw-rw-r--. 1 yinzhengjie yinzhengjie 42 May 27 09:14 edits_0000000000000001094-0000000000000001095
-rw-rw-r--. 1 yinzhengjie yinzhengjie 42 May 27 09:14 edits_0000000000000001096-0000000000000001097
-rw-rw-r--. 1 yinzhengjie yinzhengjie 1.0M May 27 09:14 edits_inprogress_0000000000000001098
[yinzhengjie@s101 ~]$
[yinzhengjie@s101 ~]$ hdfs dfsadmin -rollEdits
Successfully rolled edit logs.
New segment starts at txid 1100
[yinzhengjie@s101 ~]$
[yinzhengjie@s101 ~]$ ls -lh /home/yinzhengjie/hadoop/dfs/name/current/ | grep edits | tail -5
-rw-rw-r--. 1 yinzhengjie yinzhengjie 42 May 27 09:13 edits_0000000000000001092-0000000000000001093
-rw-rw-r--. 1 yinzhengjie yinzhengjie 42 May 27 09:14 edits_0000000000000001094-0000000000000001095
-rw-rw-r--. 1 yinzhengjie yinzhengjie 42 May 27 09:14 edits_0000000000000001096-0000000000000001097
-rw-rw-r--. 1 yinzhengjie yinzhengjie 42 May 27 09:15 edits_0000000000000001098-0000000000000001099
-rw-rw-r--. 1 yinzhengjie yinzhengjie 1.0M May 27 09:15 edits_inprogress_0000000000000001100
[yinzhengjie@s101 ~]$
```
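The listings before and after "-rollEdits" show what a roll does to the file names:

1. The open segment "edits_inprogress_0000000000000001098" is closed with an OP_END_LOG_SEGMENT record, which takes txid 1099.
2. It is renamed to "edits_0000000000000001098-0000000000000001099".
3. A new in-progress segment starts at the next txid, 1100, the number the command printed.

Below is a toy model of that renaming. It only computes names and touches no files. It assumes, as in this session, that the open segment held nothing but its start marker, and "roll_segment" is a hypothetical name.

```python
def txid_str(txid):
    """Transaction ids in these file names are zero-padded to 19 digits."""
    return f"{txid:019d}"

def roll_segment(first_txid, last_written_txid):
    """Model the names produced by an edit log roll (no files are touched).

    Closing the open segment appends one OP_END_LOG_SEGMENT record, which takes
    the next txid; the new in-progress segment starts right after it.
    Returns (finalized name, new in-progress name, first txid of the new segment).
    """
    end_txid = last_written_txid + 1
    new_first = end_txid + 1
    finalized = f"edits_{txid_str(first_txid)}-{txid_str(end_txid)}"
    return finalized, f"edits_inprogress_{txid_str(new_first)}", new_first
```

With roll_segment(1098, 1098) this reproduces the names in the second listing and the "txid 1100" reported by the command.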

2>. The edit log is also rolled when HDFS starts

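When the HDFS service restarts, the image file and the edit logs are merged and the edit log is rolled automatically. You can watch this process in the web UI (the NameNode web UI's "Startup Progress" page).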

IV. Saving the namespace (that is, writing an image file manually)

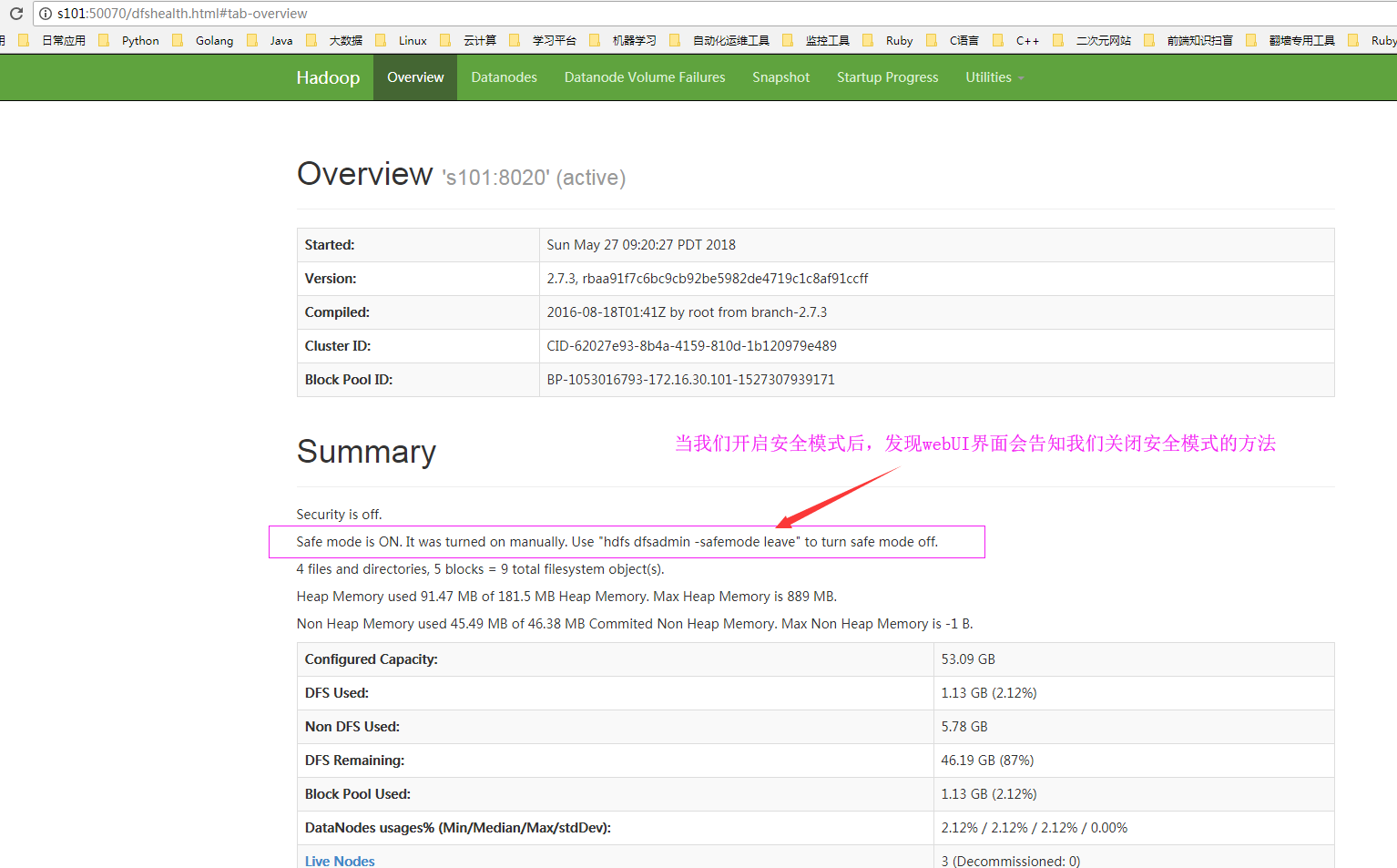

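1>. Check the current HDFS mode: during normal operation safe mode is off (the NameNode only stays in safe mode on its own during startup, until enough blocks have been reported)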

```
[yinzhengjie@s101 ~]$ hdfs dfsadmin -safemode get
Safe mode is OFF
[yinzhengjie@s101 ~]$
```

2>. Entering safe mode

To enter safe mode, just run "hdfs dfsadmin -safemode enter".

```
[yinzhengjie@s101 ~]$ hdfs dfsadmin -safemode get
Safe mode is OFF
[yinzhengjie@s101 ~]$ hdfs dfsadmin -safemode enter
Safe mode is ON
[yinzhengjie@s101 ~]$ hdfs dfsadmin -safemode get
Safe mode is ON
[yinzhengjie@s101 ~]$
```

Once the NameNode is in safe mode, write operations are rejected, but reads still work normally, as shown here:

```
[yinzhengjie@s101 ~]$ ll
total 4
drwxrwxr-x. 4 yinzhengjie yinzhengjie 35 May 25 19:08 hadoop
drwxrwxr-x. 2 yinzhengjie yinzhengjie 96 May 25 22:05 shell
-rw-rw-r--. 1 yinzhengjie yinzhengjie 205 May 27 09:03 yinzhengjie.xml
[yinzhengjie@s101 ~]$ hdfs dfs -ls /
Found 3 items
-rw-r--r-- 3 yinzhengjie supergroup 214092195 2018-05-25 21:59 /hadoop-2.7.3.tar.gz
-rw-r--r-- 3 yinzhengjie supergroup 185540433 2018-05-27 09:02 /jdk-8u131-linux-x64.tar.gz
-rw-r--r-- 3 yinzhengjie supergroup 700 2018-05-25 21:17 /xrsync.sh
[yinzhengjie@s101 ~]$
[yinzhengjie@s101 ~]$ hdfs dfs -put yinzhengjie.xml /
put: Cannot create file/yinzhengjie.xml._COPYING_. Name node is in safe mode.
[yinzhengjie@s101 ~]$
```

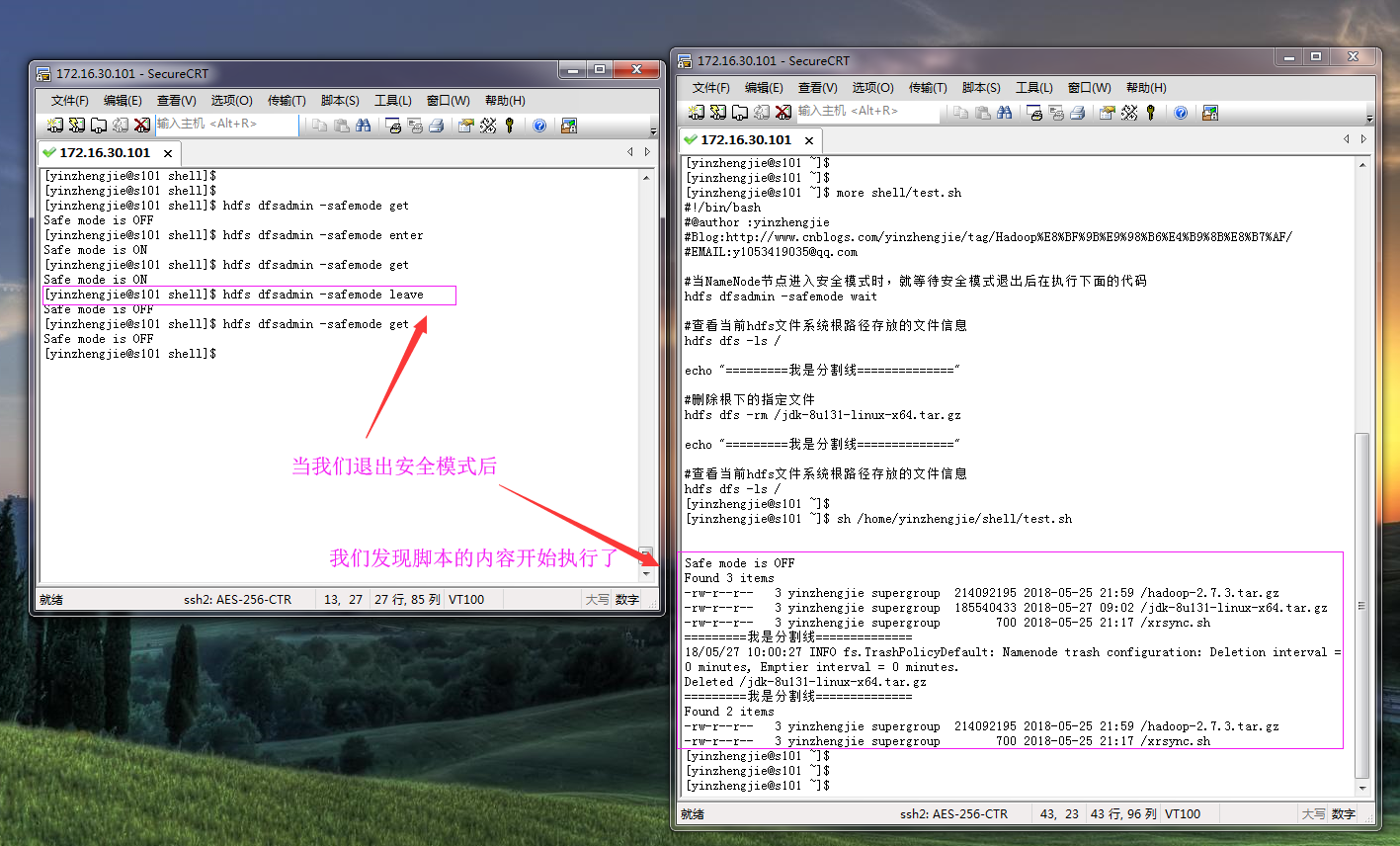

3>. Leaving safe mode

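The test above shows that while the NameNode is in safe mode it rejects modifications such as uploading a file. As soon as we leave safe mode, uploads work normally again.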

```
[yinzhengjie@s101 ~]$ hdfs dfs -ls /
Found 3 items
-rw-r--r-- 3 yinzhengjie supergroup 214092195 2018-05-25 21:59 /hadoop-2.7.3.tar.gz
-rw-r--r-- 3 yinzhengjie supergroup 185540433 2018-05-27 09:02 /jdk-8u131-linux-x64.tar.gz
-rw-r--r-- 3 yinzhengjie supergroup 700 2018-05-25 21:17 /xrsync.sh
[yinzhengjie@s101 ~]$ ll
total 4
drwxrwxr-x. 4 yinzhengjie yinzhengjie 35 May 25 19:08 hadoop
drwxrwxr-x. 2 yinzhengjie yinzhengjie 96 May 25 22:05 shell
-rw-rw-r--. 1 yinzhengjie yinzhengjie 205 May 27 09:03 yinzhengjie.xml
[yinzhengjie@s101 ~]$ hdfs dfs -put yinzhengjie.xml /
put: Cannot create file/yinzhengjie.xml._COPYING_. Name node is in safe mode.
[yinzhengjie@s101 ~]$
[yinzhengjie@s101 ~]$ hdfs dfsadmin -safemode leave
Safe mode is OFF
[yinzhengjie@s101 ~]$ hdfs dfs -put yinzhengjie.xml /
[yinzhengjie@s101 ~]$ hdfs dfs -ls /
Found 4 items
-rw-r--r-- 3 yinzhengjie supergroup 214092195 2018-05-25 21:59 /hadoop-2.7.3.tar.gz
-rw-r--r-- 3 yinzhengjie supergroup 185540433 2018-05-27 09:02 /jdk-8u131-linux-x64.tar.gz
-rw-r--r-- 3 yinzhengjie supergroup 700 2018-05-25 21:17 /xrsync.sh
-rw-r--r-- 3 yinzhengjie supergroup 205 2018-05-27 09:40 /yinzhengjie.xml
[yinzhengjie@s101 ~]$
```

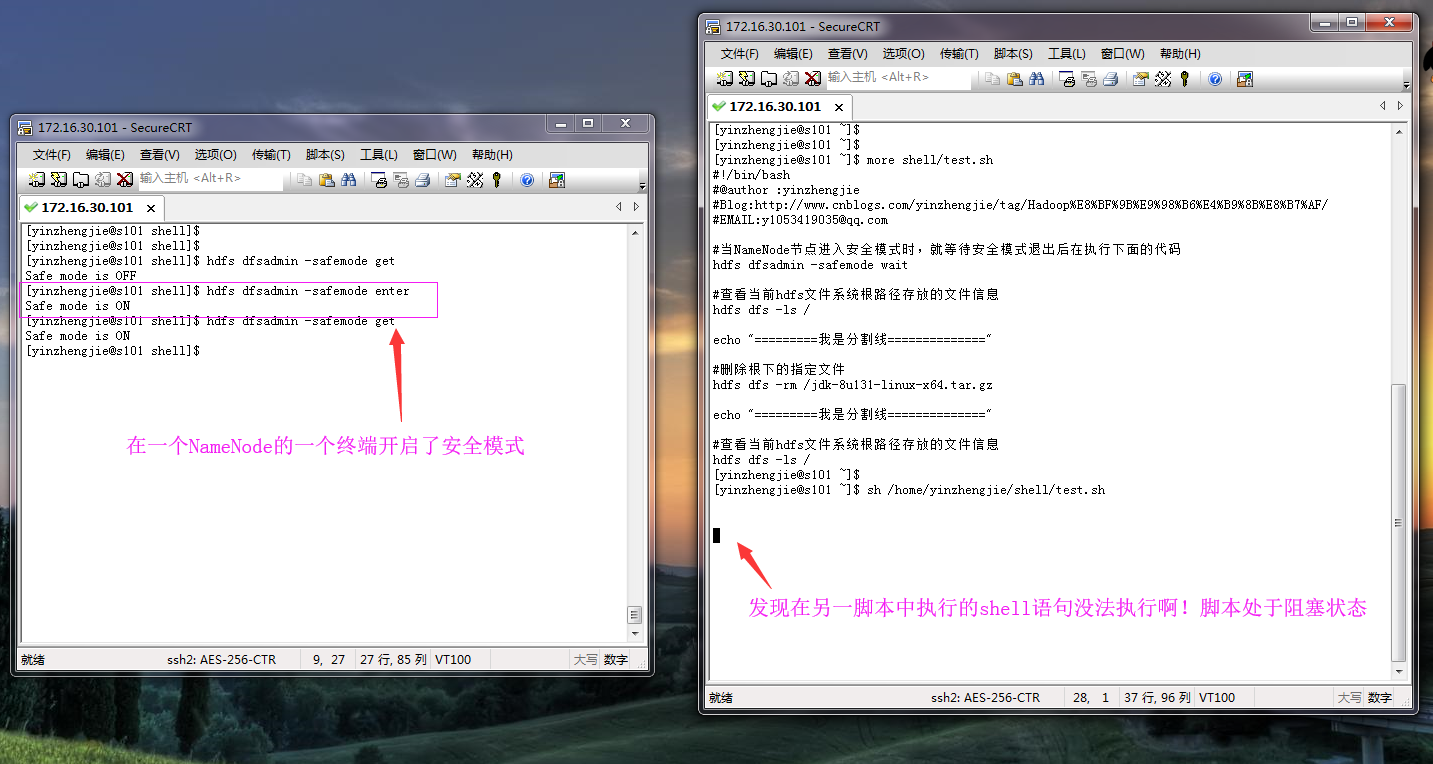

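4>. Using "wait" with safe mode

The "hdfs dfsadmin -safemode wait" command blocks until the NameNode has left safe mode. It is useful for holding back operations that must not run until HDFS is writable again. First, we put the NameNode into safe mode from a client:

Then we leave safe mode again: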
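As a rough illustration of what "-safemode wait" amounts to (block until the NameNode is out of safe mode), here is a Python sketch. It is not how Hadoop implements the command. It polls a caller-supplied status function until that function reports OFF or a timeout expires. In practice the status function could wrap "hdfs dfsadmin -safemode get", whose "Safe mode is ON/OFF" line appears above. The function names, the polling interval and the timeout are assumptions.

```python
import re
import time

# Matches the status line printed by "hdfs dfsadmin -safemode get", e.g. "Safe mode is ON".
STATUS = re.compile(r"Safe mode is (ON|OFF)")

def safe_mode_is_on(status_output):
    match = STATUS.search(status_output)
    if match is None:
        raise ValueError(f"unrecognised safe mode status: {status_output!r}")
    return match.group(1) == "ON"

def wait_for_safe_mode_off(get_status, interval_s=1.0, timeout_s=60.0, sleep=time.sleep):
    """Poll get_status() until safe mode is OFF.

    Returns True once it is off, or False if timeout_s elapses first.
    """
    waited = 0.0
    while safe_mode_is_on(get_status()):
        if waited >= timeout_s:
            return False
        sleep(interval_s)
        waited += interval_s
    return True

# Example wiring (hypothetical; needs the hdfs CLI on PATH):
# import subprocess
# def get_status():
#     return subprocess.run(["hdfs", "dfsadmin", "-safemode", "get"],
#                           capture_output=True, text=True, check=True).stdout
# wait_for_safe_mode_off(get_status)
```

The sleep function is a parameter so the loop can be exercised without actually waiting.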

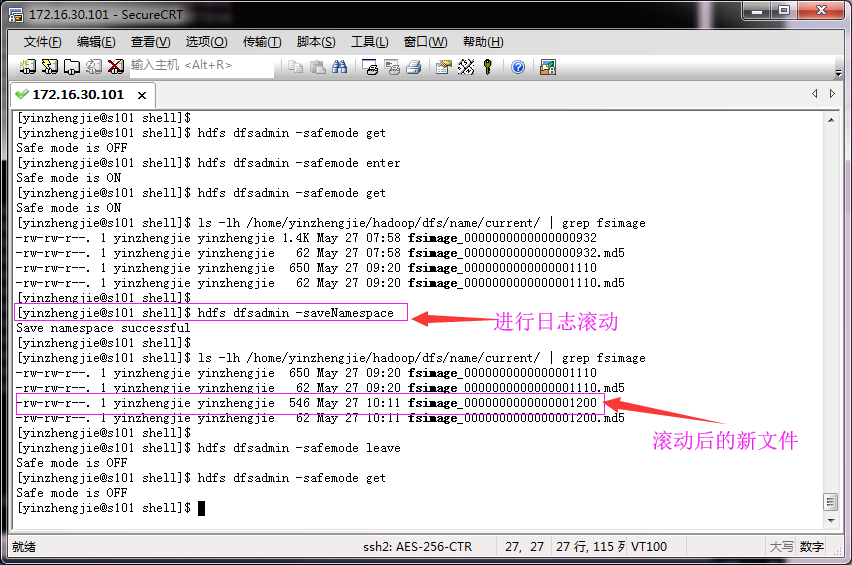

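5>. Saving the namespace

Note that "hdfs dfsadmin -saveNamespace" only works while the NameNode is in safe mode. That is why the session below enters safe mode first and leaves it afterwards.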

```
[yinzhengjie@s101 shell]$ hdfs dfsadmin -safemode get
Safe mode is OFF
[yinzhengjie@s101 shell]$ hdfs dfsadmin -safemode enter
Safe mode is ON
[yinzhengjie@s101 shell]$ hdfs dfsadmin -safemode get
Safe mode is ON
[yinzhengjie@s101 shell]$ ls -lh /home/yinzhengjie/hadoop/dfs/name/current/ | grep fsimage
-rw-rw-r--. 1 yinzhengjie yinzhengjie 1.4K May 27 07:58 fsimage_0000000000000000932
-rw-rw-r--. 1 yinzhengjie yinzhengjie 62 May 27 07:58 fsimage_0000000000000000932.md5
-rw-rw-r--. 1 yinzhengjie yinzhengjie 650 May 27 09:20 fsimage_0000000000000001110
-rw-rw-r--. 1 yinzhengjie yinzhengjie 62 May 27 09:20 fsimage_0000000000000001110.md5
[yinzhengjie@s101 shell]$
[yinzhengjie@s101 shell]$ hdfs dfsadmin -saveNamespace
Save namespace successful
[yinzhengjie@s101 shell]$
[yinzhengjie@s101 shell]$ ls -lh /home/yinzhengjie/hadoop/dfs/name/current/ | grep fsimage
-rw-rw-r--. 1 yinzhengjie yinzhengjie 650 May 27 09:20 fsimage_0000000000000001110
-rw-rw-r--. 1 yinzhengjie yinzhengjie 62 May 27 09:20 fsimage_0000000000000001110.md5
-rw-rw-r--. 1 yinzhengjie yinzhengjie 546 May 27 10:11 fsimage_0000000000000001200
-rw-rw-r--. 1 yinzhengjie yinzhengjie 62 May 27 10:11 fsimage_0000000000000001200.md5
[yinzhengjie@s101 shell]$
[yinzhengjie@s101 shell]$ hdfs dfsadmin -safemode leave
Safe mode is OFF
[yinzhengjie@s101 shell]$ hdfs dfsadmin -safemode get
Safe mode is OFF
[yinzhengjie@s101 shell]$
```
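In these listings, "-saveNamespace" wrote "fsimage_0000000000000001200", and the oldest image, "fsimage_0000000000000000932", disappeared along with its ".md5". That matches the NameNode's default of retaining only the two most recent images ("dfs.namenode.num.checkpoints.retained", default 2). Below is a sketch of that selection rule over a directory listing. The function name is hypothetical; the real purging is done by the NameNode itself.

```python
import re

IMAGE = re.compile(r"^fsimage_(\d+)$")

def images_to_purge(names, retained=2):
    """Return the fsimage files (and their .md5 files) older than the newest `retained` images."""
    txids = sorted(int(m.group(1)) for m in map(IMAGE.match, names) if m)
    # Everything except the newest `retained` image txids is a purge candidate.
    old = set(txids[:-retained]) if retained > 0 else set(txids)
    purge = []
    for name in names:
        base = name[:-len(".md5")] if name.endswith(".md5") else name
        m = IMAGE.match(base)
        if m and int(m.group(1)) in old:
            purge.append(name)
    return sorted(purge)
```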

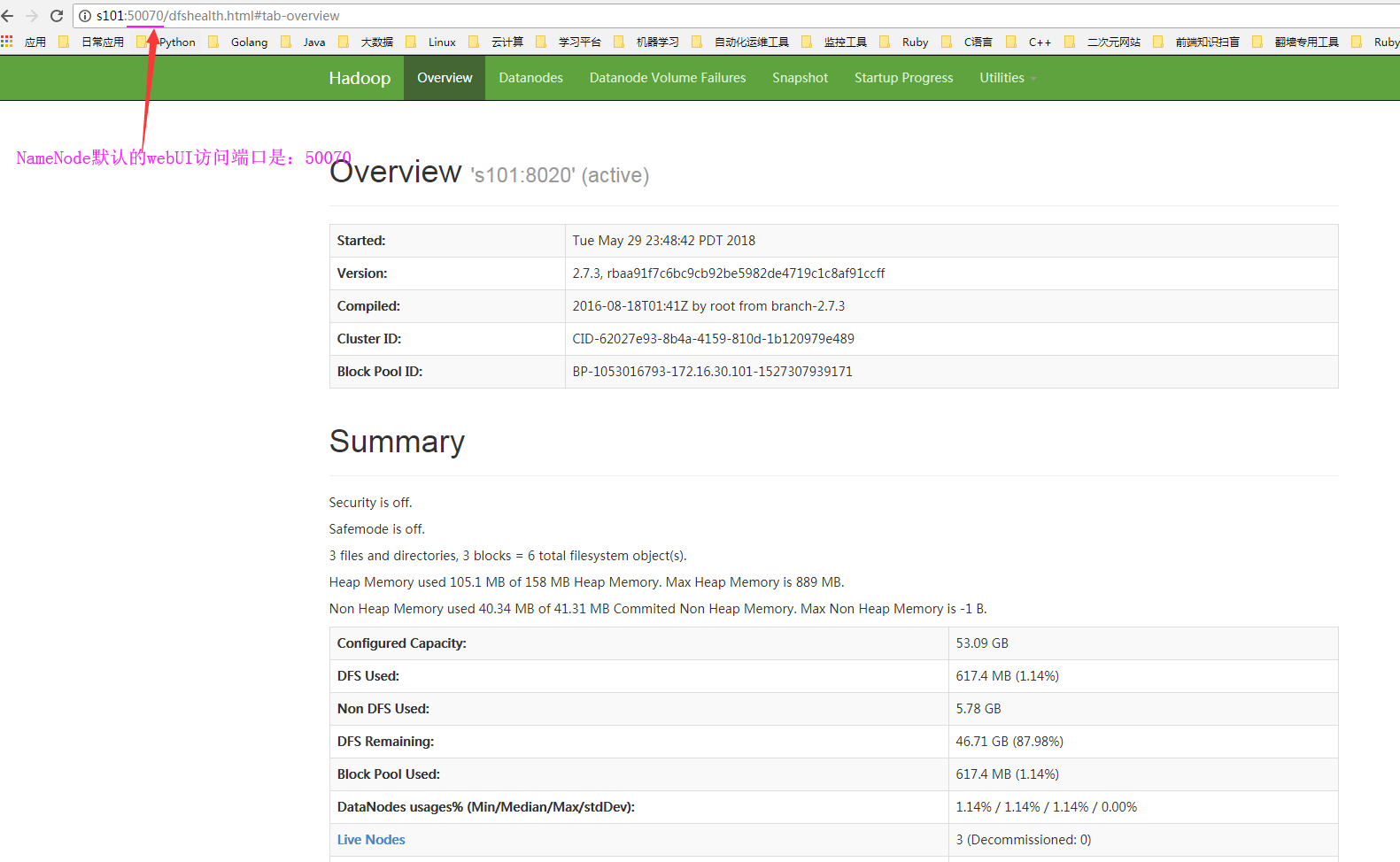

V. The HDFS startup process

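Roughly speaking, HDFS startup consists of the following three steps:

First: the "edits_inprogress_*" file is finalized into a regular, new edit log file;

Second: the image file and the new edit log files are loaded into memory, the operations recorded in the edit log are replayed, and a new image checkpoint file (a .ckpt file) is produced;

Third: the .ckpt suffix is removed from the new checkpoint file, which then becomes the new image file.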
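To make steps two and three concrete, here is a toy model in Python, not Hadoop's data structures:

- The "image" is a set of paths valid as of some txid.
- The edit log is a list of (txid, opcode, path) records.
- Loading starts from the image and applies, in order, only the records whose txid is greater than the image's.
- Saving writes the result under a temporary ".ckpt" name and then renames it, so a half-written file never carries the final name.

The opcode names mimic ones "hdfs oev" prints (OP_MKDIR, OP_ADD, OP_DELETE). The temporary name "fsimage.ckpt_<txid>" is an assumption based on my understanding of Hadoop 2.x. The "replay" and "save_checkpoint" functions are hypothetical simplifications.

```python
import os
from pathlib import Path

def replay(image_paths, image_txid, records):
    """Apply edit records newer than the image; return (paths, last applied txid).

    image_paths: set of paths in the image, valid as of image_txid.
    records: iterable of (txid, opcode, path) tuples, a simplified edit log.
    """
    paths = set(image_paths)
    last = image_txid
    for txid, opcode, path in sorted(records, key=lambda r: r[0]):
        if txid <= image_txid:
            continue  # already reflected in the image
        if txid != last + 1:
            raise ValueError(f"gap in edit log: expected txid {last + 1}, got {txid}")
        if opcode in ("OP_MKDIR", "OP_ADD"):
            paths.add(path)
        elif opcode == "OP_DELETE":
            # Deleting a directory also removes everything beneath it.
            paths = {p for p in paths if p != path and not p.startswith(path + "/")}
        # Other opcodes (e.g. segment start/end markers) leave the tree unchanged here.
        last = txid
    return paths, last

def save_checkpoint(directory, txid, data):
    """Write the new image under a temporary .ckpt name, then rename it into place."""
    ckpt = Path(directory) / f"fsimage.ckpt_{txid:019d}"
    ckpt.write_bytes(data)
    final = Path(directory) / f"fsimage_{txid:019d}"
    os.replace(ckpt, final)  # this rename is what "drops the .ckpt suffix"
    return final
```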

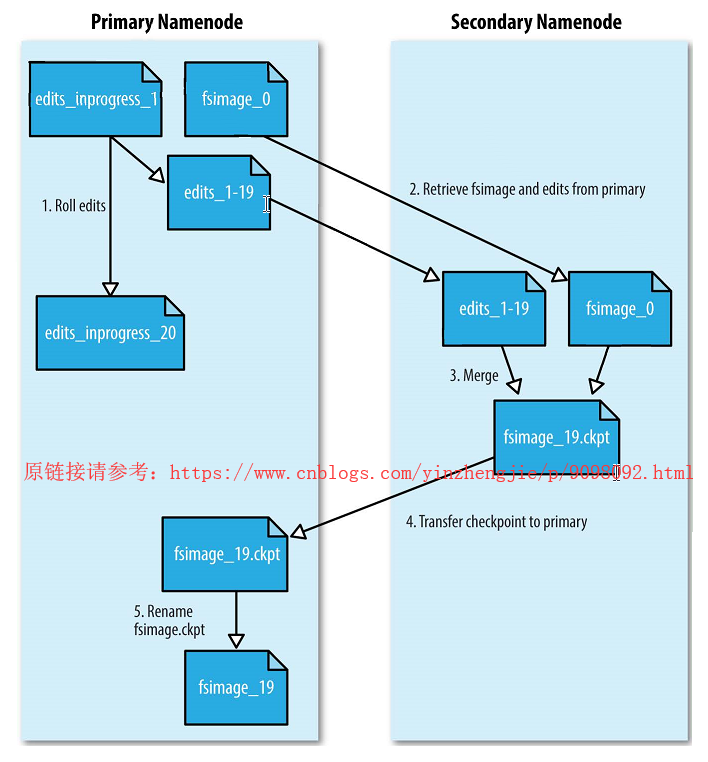

To make this easier to understand, here is a detailed flow diagram from "Hadoop: The Definitive Guide":

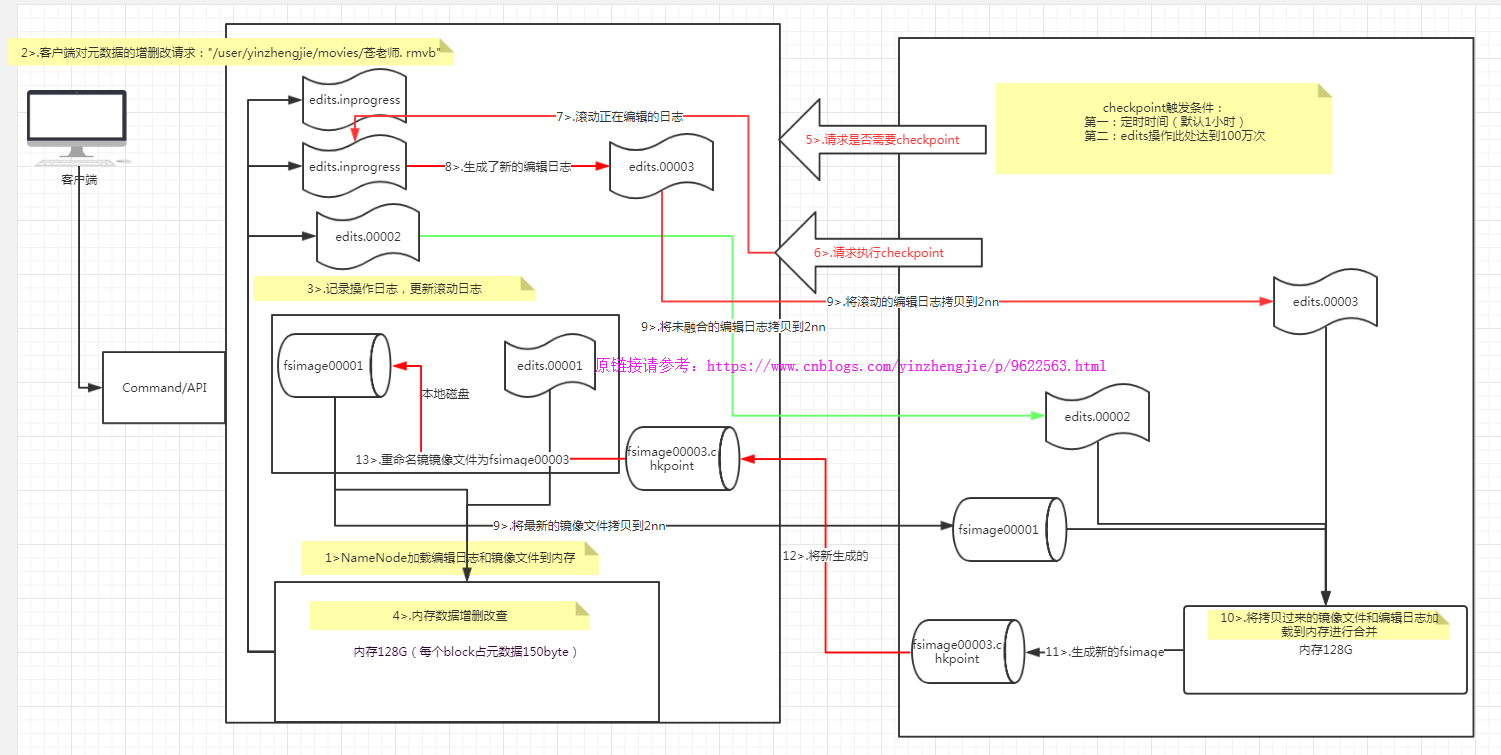

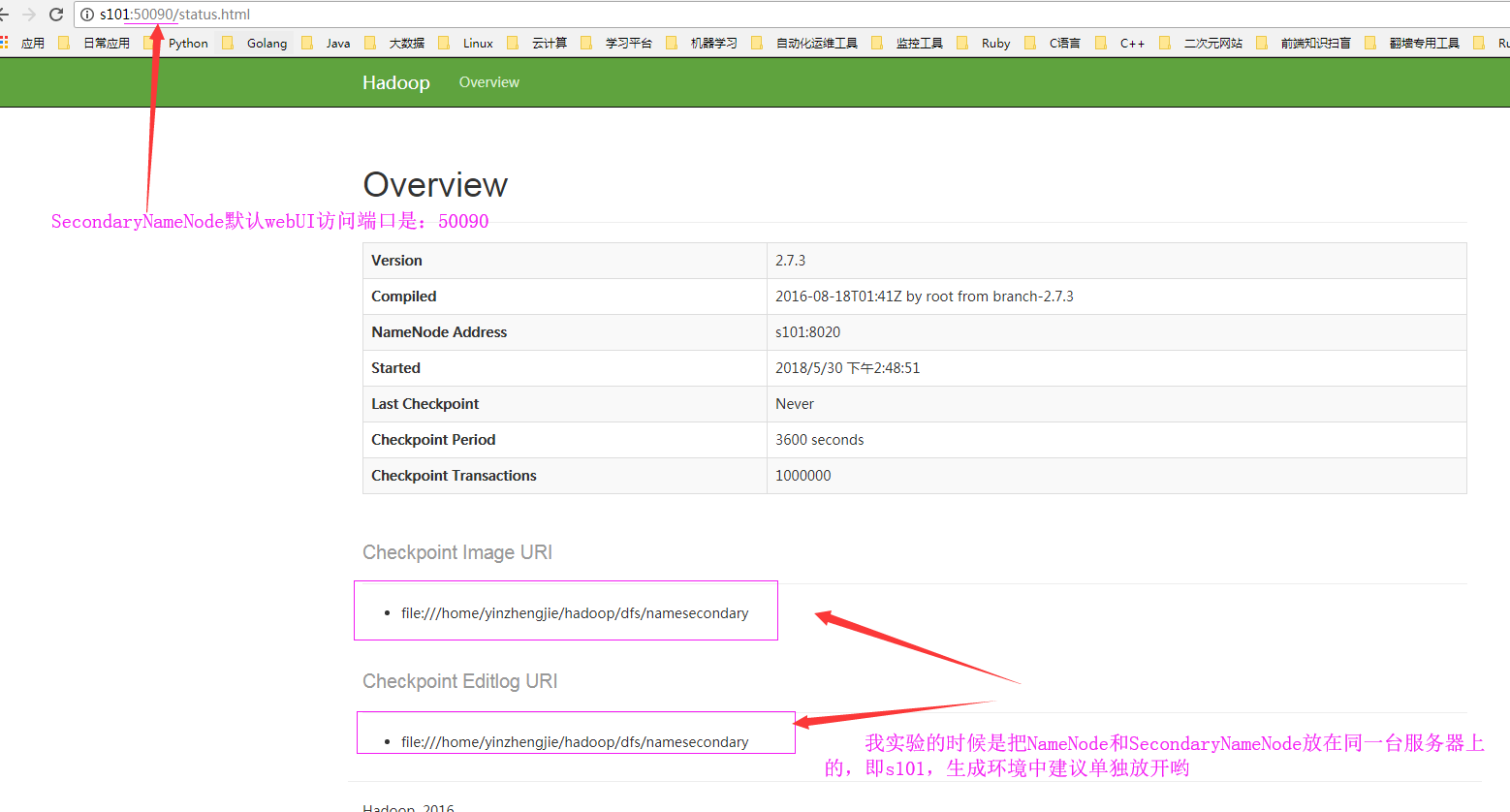

If you feel the figure above is not detailed enough, you can also refer to the rough sketch I drew, which clearly shows how the NameNode and the SecondaryNameNode work:

VI. Log rolling (SecondaryNameNode, 2NN for short)

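Above I showed how to roll the edit log and save the namespace by hand. Saving the namespace, however, requires entering safe mode first, and while the NameNode is in safe mode users can only read, not write. Doing this often is a chore for the operations team, and it certainly hurts the user experience. This is where the SecondaryNameNode comes in. Before describing what it does, let's first talk about the NameNode and the DataNode.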

1>.NameNode

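The NameNode stores metadata such as directories, file types and permissions; you can think of it as just a map of where the real data lives. Remember the Stephen Chow film we watched when we were young? He is one of my favourite Chinese comedians. In "Royal Tramp" (《鹿鼎记》), which he starred in, Wei Xiaobao becomes Chen Jinnan's disciple, and Chen gives him a martial-arts manual. Wei Xiaobao says, "Whoa, this thick? I reckon it'll take me a month or so to learn," and Chen Jinnan replies, "This volume is only the table of contents of the supreme martial art."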

2>.DataNode

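DataNodes are the servers that store the actual data. Every file a user uploads is saved on DataNodes, and in a real environment each piece of data is usually stored in more than one copy (the files in this article use the default replication factor of 3).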

3>.SecondaryNameNode

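The NameNode records metadata changes in real time: each change is applied in memory and then written to the edit log (for example edits_0000000000000001007-0000000000000001008). Only when the NameNode service restarts are the edit logs merged into the image file, producing an up-to-date snapshot of the file system. In a production cluster, however, the NameNode is rarely restarted, so after it has been running for a long time the edit logs grow very large. This causes several problems:

- The edit logs become large and hard to manage.
- A NameNode restart takes a long time, because many changes in the edit logs have to be merged into the fsimage file.
- If the NameNode fails, recovery depends on replaying all of those changes on top of a very old fsimage, so any damage to the edit logs puts a lot of changes at risk.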

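To overcome these problems we need an easy-to-manage mechanism that shrinks the edit logs and produces an up-to-date fsimage file (a bit like taking a snapshot of a virtual machine), which also reduces the pressure on the NameNode. The SecondaryNameNode solves exactly this problem. By default it creates a checkpoint every 3600 s (1 h), and you can change the period with the "dfs.namenode.checkpoint.period" property in hdfs-site.xml. A checkpoint is created as follows: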

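First: the NameNode rolls its edit log;

Second: the SecondaryNameNode fetches the image file and the edit log segments written since the last checkpoint and merges them, producing a new checkpoint file (*.ckpt);

Third: the checkpoint file (.ckpt) is sent to the NameNode;

Fourth: the NameNode renames it to become the new image file.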
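Besides the hourly timer, the SecondaryNameNode also checkpoints early once enough transactions have accumulated. The threshold is "dfs.namenode.checkpoint.txns" (default 1,000,000), and both conditions are checked every "dfs.namenode.checkpoint.check.period" seconds (default 60). Below is a sketch of that decision and a small simulation of the polling. The defaults are hard-coded as assumptions, and the function names are hypothetical; the real logic lives in the SecondaryNameNode.

```python
# Hadoop 2.x defaults, assumed here; adjust to match your hdfs-site.xml.
CHECKPOINT_PERIOD_S = 3600    # dfs.namenode.checkpoint.period
CHECKPOINT_TXNS = 1_000_000   # dfs.namenode.checkpoint.txns

def should_checkpoint(now_s, last_checkpoint_s, uncheckpointed_txns,
                      period_s=CHECKPOINT_PERIOD_S, max_txns=CHECKPOINT_TXNS):
    """A checkpoint is due when enough edits piled up or enough time has passed."""
    if uncheckpointed_txns >= max_txns:
        return True
    return now_s - last_checkpoint_s >= period_s

def checkpoint_times(txns_at, horizon_s, check_period_s=60, **limits):
    """Simulate polling every check_period_s seconds; return the times a checkpoint fires.

    txns_at(t) returns the total number of transactions written by time t.
    """
    fired, last_time, last_txns = [], 0, txns_at(0)
    for t in range(check_period_s, horizon_s + 1, check_period_s):
        if should_checkpoint(t, last_time, txns_at(t) - last_txns, **limits):
            fired.append(t)
            last_time, last_txns = t, txns_at(t)
    return fired
```

With a light load (10 transactions per second) only the hourly timer fires, at 3600 s and 7200 s over two hours. With 1000 transactions per second the transaction threshold fires first, at the first poll after each million edits: 1020 s, 2040 s and 3060 s within the first hour.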

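So here is the question. The SecondaryNameNode rolls the edit log for us automatically and puts the resulting image in the NameNode's image directory. When the NameNode restarts, does it build a new fsimage itself, or simply use the latest one the SecondaryNameNode provided? The answer: it rebuilds. The two do their own work without getting in each other's way. The NameNode's rebuild simply starts where the SecondaryNameNode's most recent merge ended: it loads that newest fsimage and replays only the edits recorded after it.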

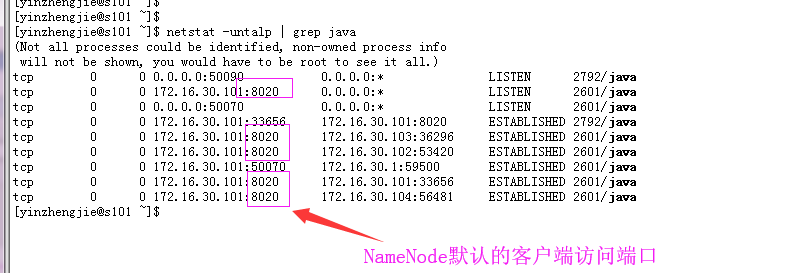

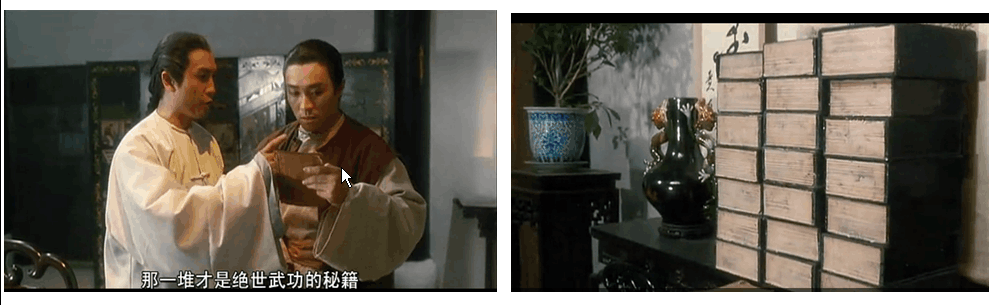

VII. Hadoop's default web UI ports

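1>. The NameNode web UI's default port is 50070 (Hadoop 2.x; Hadoop 3.x moved it to 9870)

2>. The SecondaryNameNode web UI's default port is 50090 (Hadoop 2.x; Hadoop 3.x moved it to 9868)

3>. The NameNode's default client (RPC) port is 8020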