Apache Hadoop 2.9.2 Cluster Management: Commissioning and Decommissioning Nodes

Author: 尹正杰 (Yin Zhengjie)

Copyright notice: original work. Reproduction is not permitted; violations will be pursued legally.

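As the business grows and the customer base expands, the volume of logs we produce keeps growing, and the capacity of the existing DataNodes may no longer be enough to store it. Adding DataNodes to a running cluster is therefore a very realistic task in a production environment.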

I.Adding a new node (commissioning)

1>.Prepare the node that will join the cluster: check its IP address and /etc/hosts entries, and install the JDK

[root@node110.yinzhengjie.org.cn ~]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.30.1.110  netmask 255.255.255.0  broadcast 172.30.1.255
        inet6 fe80::21c:42ff:fe11:4014  prefixlen 64  scopeid 0x20<link>
        ether 00:1c:42:11:40:14  txqueuelen 1000  (Ethernet)
        RX packets 61  bytes 7872 (7.6 KiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 45  bytes 7540 (7.3 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
        inet 127.0.0.1  netmask 255.0.0.0
        inet6 ::1  prefixlen 128  scopeid 0x10<host>
        loop  txqueuelen 0  (Local Loopback)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

[root@node110.yinzhengjie.org.cn ~]#

[root@node110.yinzhengjie.org.cn ~]# cat /etc/hosts | grep yinzhengjie
172.30.1.101 node101.yinzhengjie.org.cn
172.30.1.102 node102.yinzhengjie.org.cn
172.30.1.103 node103.yinzhengjie.org.cn
172.30.1.110 node110.yinzhengjie.org.cn
[root@node110.yinzhengjie.org.cn ~]#
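Every node has to resolve every other node's hostname, so it can help to check mechanically that all cluster hostnames appear in /etc/hosts on the new node (and that node110 appears on the existing nodes). The function below is a minimal sketch of such a check. Its name is hypothetical, it assumes the usual "IP hostname [aliases]" hosts-file format, and it only inspects the file, not DNS.

```shell
#!/usr/bin/env bash
# Sketch: print every given hostname that has no entry in a hosts file.
# Assumes "IP hostname [aliases...]" lines; text after '#' is a comment.
# missing_host_entries is a hypothetical helper, not part of Hadoop.

missing_host_entries() {
  local hosts_file=$1
  shift
  local host
  for host in "$@"; do
    # Drop comments, then look for the hostname as a whole field (column 2 onward).
    if ! sed 's/#.*//' "$hosts_file" \
        | awk -v h="$host" '{ for (i = 2; i <= NF; i++) if ($i == h) found = 1 } END { exit !found }'; then
      echo "$host"
    fi
  done
}

# Usage on each node; no output means every hostname is present:
#   missing_host_entries /etc/hosts node101.yinzhengjie.org.cn node102.yinzhengjie.org.cn \
#       node103.yinzhengjie.org.cn node110.yinzhengjie.org.cn
```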

[root@node110.yinzhengjie.org.cn ~]# yum -y install java-1.8.0-openjdk-devel
Loaded plugins: fastestmirror, langpacks
Loading mirror speeds from cached hostfile
 * base: mirrors.aliyun.com
 * extras: mirrors.aliyun.com
 * updates: mirrors.aliyun.com
Resolving Dependencies
--> Running transaction check
---> Package java-1.8.0-openjdk-devel.x86_64 1:1.8.0.201.b09-2.el7_6 will be installed
--> Processing Dependency: java-1.8.0-openjdk(x86-64) = 1:1.8.0.201.b09-2.el7_6 for package: 1:java-1.8.0-openjdk-devel-1.8.0.201.b09-2.el7_6.x86_64
--> Running transaction check
---> Package java-1.8.0-openjdk.x86_64 1:1.8.0.201.b09-2.el7_6 will be installed
--> Processing Dependency: java-1.8.0-openjdk-headless(x86-64) = 1:1.8.0.201.b09-2.el7_6 for package: 1:java-1.8.0-openjdk-1.8.0.201.b09-2.el7_6.x86_64
--> Running transaction check
---> Package java-1.8.0-openjdk-headless.x86_64 1:1.8.0.201.b09-2.el7_6 will be installed
--> Finished Dependency Resolution

Dependencies Resolved

================================================================================
 Package                      Arch     Version                   Repository  Size
================================================================================
Installing:
 java-1.8.0-openjdk-devel     x86_64   1:1.8.0.201.b09-2.el7_6   updates    9.8 M
Installing for dependencies:
 java-1.8.0-openjdk           x86_64   1:1.8.0.201.b09-2.el7_6   updates    260 k
 java-1.8.0-openjdk-headless  x86_64   1:1.8.0.201.b09-2.el7_6   updates     32 M

Transaction Summary
================================================================================
Install  1 Package (+2 Dependent packages)

Total download size: 42 M
Installed size: 144 M
Downloading packages:
(1/3): java-1.8.0-openjdk-1.8.0.201.b09-2.el7_6.x86_64.rpm          | 260 kB  00:00:00
(2/3): java-1.8.0-openjdk-devel-1.8.0.201.b09-2.el7_6.x86_64.rpm    | 9.8 MB  00:00:11
(3/3): java-1.8.0-openjdk-headless-1.8.0.201.b09-2.el7_6.x86_64.rpm |  32 MB  00:00:18
--------------------------------------------------------------------------------
Total                                                    2.3 MB/s |  42 MB  00:00:18
Running transaction check
Running transaction test
Transaction test succeeded
Running transaction
  Installing : 1:java-1.8.0-openjdk-headless-1.8.0.201.b09-2.el7_6.x86_64   1/3
  Installing : 1:java-1.8.0-openjdk-1.8.0.201.b09-2.el7_6.x86_64            2/3
  Installing : 1:java-1.8.0-openjdk-devel-1.8.0.201.b09-2.el7_6.x86_64      3/3
  Verifying  : 1:java-1.8.0-openjdk-1.8.0.201.b09-2.el7_6.x86_64            1/3
  Verifying  : 1:java-1.8.0-openjdk-headless-1.8.0.201.b09-2.el7_6.x86_64   2/3
  Verifying  : 1:java-1.8.0-openjdk-devel-1.8.0.201.b09-2.el7_6.x86_64      3/3

Installed:
  java-1.8.0-openjdk-devel.x86_64 1:1.8.0.201.b09-2.el7_6

Dependency Installed:
  java-1.8.0-openjdk.x86_64 1:1.8.0.201.b09-2.el7_6
  java-1.8.0-openjdk-headless.x86_64 1:1.8.0.201.b09-2.el7_6

Complete!
[root@node110.yinzhengjie.org.cn ~]#

2>.Copy the configured Hadoop environment from the NameNode host to the server that is joining the cluster

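[root@node101.yinzhengjie.org.cn ~]# cat /yinzhengjie/softwares/hadoop-2.9.2/etc/hadoop/hdfs-site.xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <property>
        <name>dfs.namenode.checkpoint.period</name>
        <value>3600</value>
    </property>

    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:///${hadoop.tmp.dir}/dfs/namenode1,file:///${hadoop.tmp.dir}/dfs/namenode2,file:///${hadoop.tmp.dir}/dfs/namenode3</value>
    </property>

    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:///${hadoop.tmp.dir}/dfs/data1,file:///${hadoop.tmp.dir}/dfs/data2</value>
    </property>

    <property>
        <name>dfs.replication</name>
        <value>2</value>
    </property>

    <property>
        <name>dfs.namenode.heartbeat.recheck-interval</name>
        <value>300000</value>
    </property>

    <property>
        <name>dfs.heartbeat.interval</name>
        <value>3</value>
    </property>

    <!--
    <property>
        <name>dfs.hosts</name>
        <value>file:///${hadoop.tmp.dir}/DataNodesHostname.txt</value>
    </property>
    -->

    <property>
        <name>dfs.hosts.exclude</name>
        <value>file:///${hadoop.tmp.dir}/dfs.hosts.exclude.txt</value>
    </property>
</configuration>

<!--
Purpose of hdfs-site.xml:
    # HDFS settings such as the replica count, the block size and whether permission checking is enforced. Values defined here override the defaults in hdfs-default.xml.

Purpose of dfs.namenode.checkpoint.period:
    # Number of seconds between two periodic checkpoints. The default is 3600, i.e. 1 hour.

Purpose of dfs.namenode.name.dir:
    # The NameNode's working directories; the default is file://${hadoop.tmp.dir}/dfs/name. Several local directories can be listed, and each one holds an identical copy of the metadata, which improves reliability. Mounting each directory on a different disk is recommended, so that a single disk failure cannot lose the metadata.

Purpose of dfs.datanode.data.dir:
    # The DataNode's working directories. Mounting each directory on a different disk is recommended to improve I/O performance. Unlike the NameNode directories, these do NOT hold identical copies: the DataNode spreads its block files across them, which is what makes storing data faster.

Purpose of dfs.replication:
    # For availability and redundancy, HDFS keeps several replicas of each block on different nodes; the default is 3. A pseudo-distributed setup with a single node only needs one replica, which is set with dfs.replication. This is redundancy at the software level.

Purpose of dfs.heartbeat.interval:
    # Interval between DataNode heartbeats, in seconds.

Purpose of dfs.namenode.heartbeat.recheck-interval together with dfs.heartbeat.interval:
    # They set the timeout after which the NameNode considers a DataNode dead: timeout = 2 * dfs.namenode.heartbeat.recheck-interval + 10 * dfs.heartbeat.interval. Note that recheck-interval is in milliseconds and heartbeat.interval is in seconds, so convert before adding.

Purpose of dfs.hosts:
    # Whitelist; it works the opposite way to the blacklist (dfs.hosts.exclude). I have commented it out here.

Purpose of dfs.hosts.exclude:
    # The blacklist we added. Its value is a file; the hosts listed in that file are the nodes to be decommissioned.
-->
[root@node101.yinzhengjie.org.cn ~]#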
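The timeout formula in the comment mixes units (recheck-interval in milliseconds, heartbeat interval in seconds), which is easy to get wrong. Below is a minimal sketch that converts both terms to milliseconds before adding them and then reports whole seconds; the function name is hypothetical. With this cluster's values (300000 ms and 3 s) it gives 630 seconds, so a DataNode that stops sending heartbeats is only marked dead after about 10.5 minutes.

```shell
#!/usr/bin/env bash
# Sketch: dead-node timeout = 2 * recheck-interval + 10 * heartbeat-interval.
# Arguments: dfs.namenode.heartbeat.recheck-interval (ms),
#            dfs.heartbeat.interval (s).
# dead_node_timeout_s is a hypothetical helper name.

dead_node_timeout_s() {
  local recheck_ms=$1 heartbeat_s=$2
  # Work in milliseconds so both terms share a unit.
  local total_ms=$(( 2 * recheck_ms + 10 * heartbeat_s * 1000 ))
  echo $(( total_ms / 1000 ))   # whole seconds, fractional part truncated
}

# Usage with the values from hdfs-site.xml above:
#   dead_node_timeout_s 300000 3   # prints 630
```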

[root@node101.yinzhengjie.org.cn ~]# ssh-copy-id node110.yinzhengjie.org.cn
/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
The authenticity of host 'node110.yinzhengjie.org.cn (172.30.1.110)' can't be established.
ECDSA key fingerprint is SHA256:7fUJXbFRvzvhON0FKnxvIivwkEZsiQ1jXE3V3i7ONEg.
ECDSA key fingerprint is MD5:7b:34:fb:ed:80:13:ed:7b:83:db:24:56:75:68:0c:5b.
Are you sure you want to continue connecting (yes/no)? yes
/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
root@node110.yinzhengjie.org.cn's password:

Number of key(s) added: 1

Now try logging into the machine, with:   "ssh 'node110.yinzhengjie.org.cn'"
and check to make sure that only the key(s) you wanted were added.

[root@node101.yinzhengjie.org.cn ~]#
[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# scp -r /yinzhengjie/softwares/hadoop-2.9.2/ node110.yinzhengjie.org.cn:/yinzhengjie/softwares/
.......
README.txt 100% 1366 2.8MB/s 00:00
mapred-config.cmd 100% 1640 4.8MB/s 00:00
hdfs-config.sh 100% 1427 3.9MB/s 00:00
httpfs-config.sh 100% 7666 17.4MB/s 00:00
hdfs-config.cmd 100% 1640 4.3MB/s 00:00
yarn-config.cmd 100% 2131 6.0MB/s 00:00
mapred-config.sh 100% 2223 5.6MB/s 00:00
hadoop-config.cmd 100% 8484 16.3MB/s 00:00
hadoop-config.sh 100% 11KB 17.3MB/s 00:00
yarn-config.sh 100% 2134 5.4MB/s 00:00
kms-config.sh 100% 7899 18.3MB/s 00:00
libhadooppipes.a 100% 1594KB 85.3MB/s 00:00
libhadooputils.a 100% 465KB 69.0MB/s 00:00
libhdfs.so.0.0.0 100% 278KB 44.4MB/s 00:00
wordcount-part 100% 933KB 93.5MB/s 00:00
wordcount-simple 100% 924KB 49.3MB/s 00:00
pipes-sort 100% 910KB 54.9MB/s 00:00
wordcount-nopipe 100% 972KB 46.8MB/s 00:00
libhdfs.so 100% 278KB 54.1MB/s 00:00
libhdfs.a 100% 444KB 65.7MB/s 00:00
libhadoop.so 100% 822KB 65.9MB/s 00:00
libhadoop.a 100% 1406KB 65.3MB/s 00:00
libhadoop.so.1.0.0 100% 822KB 85.6MB/s 00:00
[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# scp /etc/profile node110.yinzhengjie.org.cn:/etc/
profile 100% 1937 3.8MB/s 00:00
[root@node101.yinzhengjie.org.cn ~]#

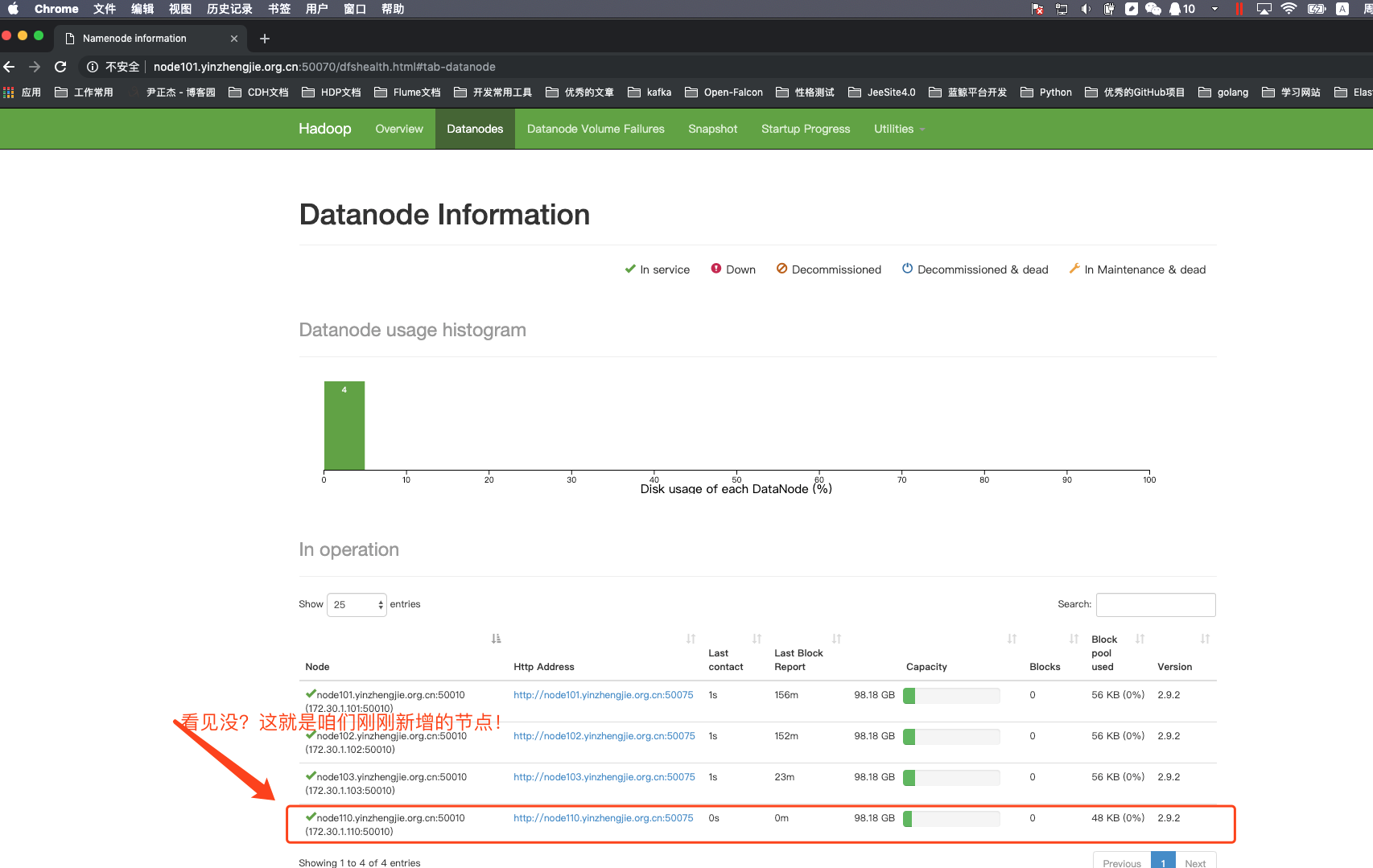

3>.Start the DataNode process on its own on the newly added node

[root@node110.yinzhengjie.org.cn ~]# hadoop-daemon.sh start datanode
starting datanode, logging to /yinzhengjie/softwares/hadoop-2.9.2/logs/hadoop-root-datanode-node110.yinzhengjie.org.cn.out
[root@node110.yinzhengjie.org.cn ~]#
[root@node110.yinzhengjie.org.cn ~]# jps
4080 DataNode
4167 Jps
[root@node110.yinzhengjie.org.cn ~]#
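Instead of reading the jps listing by eye, a script can look for the DataNode entry. A small sketch, assuming the JDK's jps is on PATH on the new node; jps_has is a hypothetical helper name. It reads jps output on stdin, so it can be exercised without a running JVM.

```shell
#!/usr/bin/env bash
# Sketch: succeed (exit 0) if `jps` output on stdin lists a JVM whose
# main-class name equals $1, e.g. DataNode or NameNode.
# jps_has is a hypothetical helper, not part of Hadoop or the JDK.

jps_has() {
  local name=$1
  # jps prints "<pid> <name>"; compare the second field exactly.
  awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'
}

# Usage on node110:
#   jps | jps_has DataNode && echo "DataNode is running"
```

An exact field match is used rather than `grep DataNode` so that a name like SecondaryNameNode cannot accidentally satisfy a check for NameNode.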

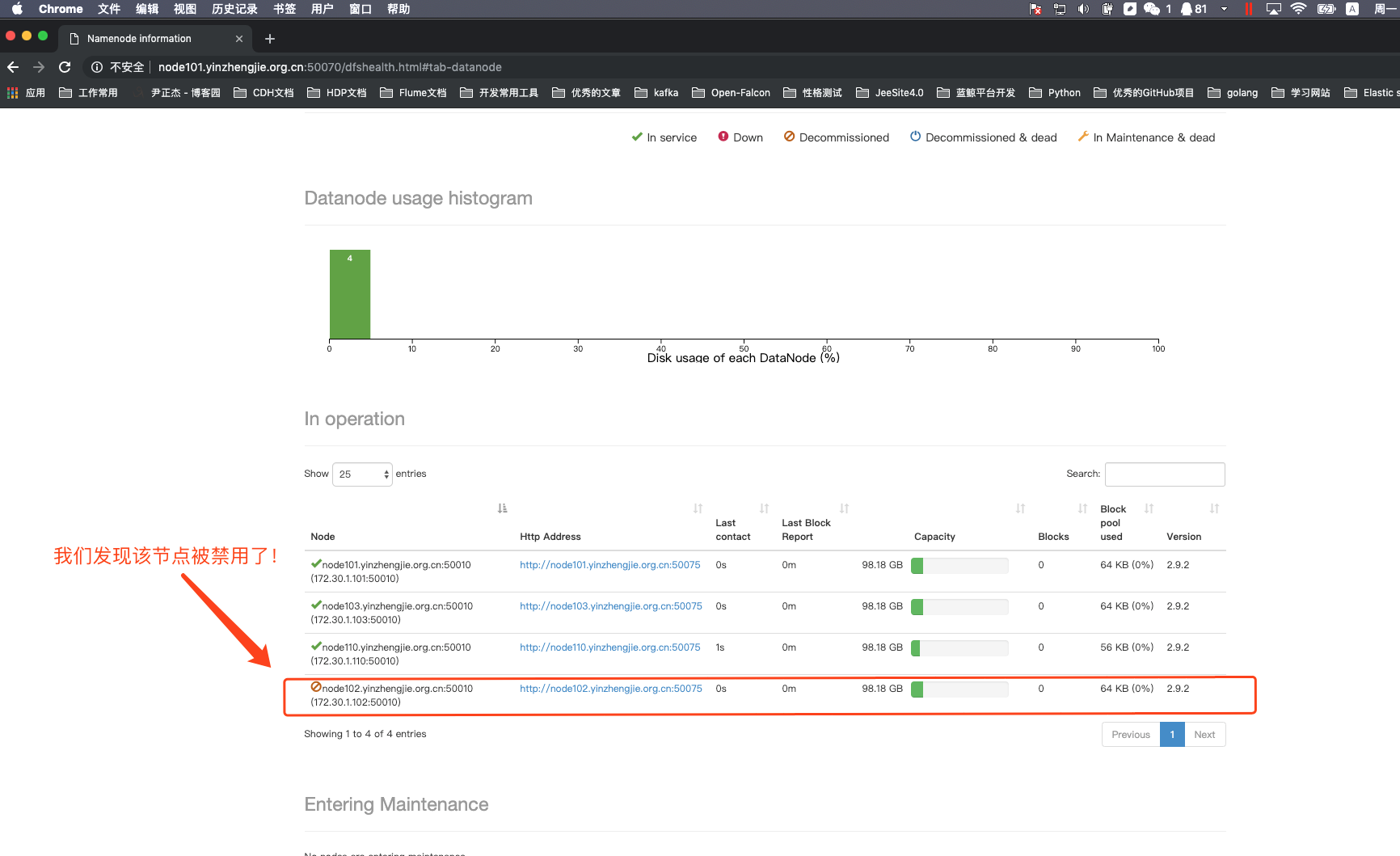

4>.Edit the blacklist: add node102.yinzhengjie.org.cn to the exclude file we defined, then have the NameNode reload it

[root@node101.yinzhengjie.org.cn ~]# cat /data/hadoop/hdfs/dfs.hosts.exclude.txt
node102.yinzhengjie.org.cn
[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# hdfs dfsadmin -refreshNodes
Refresh nodes successful
[root@node101.yinzhengjie.org.cn ~]#
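The two actions in this step (appending node102 to the exclude file and running hdfs dfsadmin -refreshNodes) can be wrapped in one helper. A sketch under stated assumptions: the function names are hypothetical, the exclude-file path in the usage comment is the one shown above, and HDFS_BIN is a made-up override that lets you dry-run the script with `echo`. Appending only when the host is missing keeps repeated runs from duplicating entries.

```shell
#!/usr/bin/env bash
# Sketch, not the author's script: add a host to the HDFS exclude file once,
# then tell the NameNode to re-read its include/exclude files.
# HDFS_BIN is a hypothetical override for dry runs; it defaults to `hdfs`.

append_host_once() {
  local file=$1 host=$2
  touch "$file"
  # If the file lacks a trailing newline, add one so the host gets its own line.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    echo >> "$file"
  fi
  # -q: quiet, -x: whole-line match, -F: fixed string (dots are literal)
  grep -qxF "$host" "$file" || echo "$host" >> "$file"
}

exclude_and_refresh() {
  local exclude_file=$1 host=$2
  append_host_once "$exclude_file" "$host" || return 1
  "${HDFS_BIN:-hdfs}" dfsadmin -refreshNodes
}

# Usage on the NameNode host:
#   exclude_and_refresh /data/hadoop/hdfs/dfs.hosts.exclude.txt node102.yinzhengjie.org.cn
```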

5>.Notes on commissioning

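With the steps above, a new node can be added to the cluster. A node can also be taken out of service by adding its hostname to our custom blacklist file and running hdfs dfsadmin -refreshNodes; the NameNode then decommissions it, re-replicating its blocks to other DataNodes before marking it as decommissioned. Once a new node has joined successfully, it is best to also add it to the slaves file, so that it is managed together with the other DataNodes and does not have to be started separately every time the cluster starts.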

[root@node101.yinzhengjie.org.cn ~]# cat /yinzhengjie/softwares/hadoop-2.9.2/etc/hadoop/slaves
node101.yinzhengjie.org.cn
node102.yinzhengjie.org.cn
node103.yinzhengjie.org.cn
node110.yinzhengjie.org.cn
[root@node101.yinzhengjie.org.cn ~]#
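Putting a host on the blacklist does not remove it instantly: the NameNode first copies its blocks elsewhere ("Decommission in progress") and only then reports it as "Decommissioned". One way to watch this is to filter the output of hdfs dfsadmin -report. The sketch below assumes the report prints a `Hostname:` line followed by a `Decommission Status :` line for each DataNode, as Hadoop 2.x reports do as far as I know; check the exact wording against your own output. decommission_summary is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: turn `hdfs dfsadmin -report` output (on stdin) into one
# "host: status" line per DataNode.
# Assumed input lines (verify against your Hadoop version):
#   Hostname: node102.yinzhengjie.org.cn
#   Decommission Status : Decommission in progress

decommission_summary() {
  awk '
    /^Hostname:/ { host = $2 }
    /^Decommission Status/ {
      status = $0
      sub(/^Decommission Status *: */, "", status)   # keep only the status text
      print host ": " status
    }'
}

# Usage on the NameNode host:
#   hdfs dfsadmin -report | decommission_summary
```

Stopping the DataNode process and removing the host from slaves is generally only safe once it shows Decommissioned.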

II.Removing an old node (decommissioning)

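1>.Enable the whitelist (dfs.hosts) in the NameNode's configuration file, stop HDFS, and sync the configuration to the other nodes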

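[root@node101.yinzhengjie.org.cn ~]# cat /yinzhengjie/softwares/hadoop-2.9.2/etc/hadoop/hdfs-site.xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <property>
        <name>dfs.namenode.checkpoint.period</name>
        <value>3600</value>
    </property>

    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:///${hadoop.tmp.dir}/dfs/namenode1,file:///${hadoop.tmp.dir}/dfs/namenode2,file:///${hadoop.tmp.dir}/dfs/namenode3</value>
    </property>

    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:///${hadoop.tmp.dir}/dfs/data1,file:///${hadoop.tmp.dir}/dfs/data2</value>
    </property>

    <property>
        <name>dfs.replication</name>
        <value>2</value>
    </property>

    <property>
        <name>dfs.namenode.heartbeat.recheck-interval</name>
        <value>300000</value>
    </property>

    <property>
        <name>dfs.heartbeat.interval</name>
        <value>3</value>
    </property>

    <property>
        <name>dfs.hosts</name>
        <value>/data/hadoop/hdfs/DataNodesHostname.txt</value>
    </property>

    <!--
    <property>
        <name>dfs.hosts.exclude</name>
        <value>/data/hadoop/hdfs/dfs.hosts.exclude.txt</value>
    </property>
    -->
</configuration>

<!--
Purpose of hdfs-site.xml:
    # HDFS settings such as the replica count, the block size and whether permission checking is enforced. Values defined here override the defaults in hdfs-default.xml.

Purpose of dfs.namenode.checkpoint.period:
    # Number of seconds between two periodic checkpoints. The default is 3600, i.e. 1 hour.

Purpose of dfs.namenode.name.dir:
    # The NameNode's working directories; the default is file://${hadoop.tmp.dir}/dfs/name. Several local directories can be listed, and each one holds an identical copy of the metadata, which improves reliability. Mounting each directory on a different disk is recommended, so that a single disk failure cannot lose the metadata.

Purpose of dfs.datanode.data.dir:
    # The DataNode's working directories. Mounting each directory on a different disk is recommended to improve I/O performance. Unlike the NameNode directories, these do NOT hold identical copies: the DataNode spreads its block files across them, which is what makes storing data faster.

Purpose of dfs.replication:
    # For availability and redundancy, HDFS keeps several replicas of each block on different nodes; the default is 3. A pseudo-distributed setup with a single node only needs one replica, which is set with dfs.replication. This is redundancy at the software level.

Purpose of dfs.heartbeat.interval:
    # Interval between DataNode heartbeats, in seconds.

Purpose of dfs.namenode.heartbeat.recheck-interval together with dfs.heartbeat.interval:
    # They set the timeout after which the NameNode considers a DataNode dead: timeout = 2 * dfs.namenode.heartbeat.recheck-interval + 10 * dfs.heartbeat.interval. Note that recheck-interval is in milliseconds and heartbeat.interval is in seconds, so convert before adding.

Purpose of dfs.hosts:
    # Whitelist: only the hosts listed in this file may register with the NameNode. It works the opposite way to the blacklist (dfs.hosts.exclude).

Purpose of dfs.hosts.exclude:
    # The blacklist we added. Its value is a file; the hosts listed in that file are the nodes to be decommissioned. I have commented it out here.
-->
[root@node101.yinzhengjie.org.cn ~]#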

[root@node101.yinzhengjie.org.cn ~]# cat /yinzhengjie/softwares/hadoop-2.9.2/etc/hadoop/slaves
node101.yinzhengjie.org.cn
node102.yinzhengjie.org.cn
node103.yinzhengjie.org.cn
node110.yinzhengjie.org.cn
[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# stop-dfs.sh
Stopping namenodes on [node101.yinzhengjie.org.cn]
node101.yinzhengjie.org.cn: stopping namenode
node101.yinzhengjie.org.cn: stopping datanode
node102.yinzhengjie.org.cn: stopping datanode
node103.yinzhengjie.org.cn: stopping datanode
node110.yinzhengjie.org.cn: stopping datanode
Stopping secondary namenodes [account.jetbrains.com]
account.jetbrains.com: stopping secondarynamenode
[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# scp -r /yinzhengjie/softwares/hadoop-2.9.2/ node102.yinzhengjie.org.cn:/yinzhengjie/softwares/
........
hadoop-yarn-server-applicationhistoryservice-2.9.2.jar 100% 528KB 45.6MB/s 00:00
mssql-jdbc-6.2.1.jre7.jar 100% 774KB 79.0MB/s 00:00
hadoop-streaming-2.9.2.jar 100% 132KB 49.4MB/s 00:00
commons-math3-3.1.1.jar 100% 1562KB 66.9MB/s 00:00
json-io-2.5.1.jar 100% 73KB 60.5MB/s 00:00
ojalgo-43.0.jar 100% 1625KB 74.2MB/s 00:00
hadoop-azure-datalake-2.9.2.jar 100% 52KB 51.3MB/s 00:00
README.txt 100% 1366 3.0MB/s 00:00
mapred-config.cmd 100% 1640 4.0MB/s 00:00
hdfs-config.sh 100% 1427 4.0MB/s 00:00
httpfs-config.sh 100% 7666 15.9MB/s 00:00
hdfs-config.cmd 100% 1640 4.0MB/s 00:00
yarn-config.cmd 100% 2131 6.2MB/s 00:00
mapred-config.sh 100% 2223 5.9MB/s 00:00
hadoop-config.cmd 100% 8484 17.1MB/s 00:00
hadoop-config.sh 100% 11KB 17.5MB/s 00:00
yarn-config.sh 100% 2134 5.1MB/s 00:00
kms-config.sh 100% 7899 17.0MB/s 00:00
libhadooppipes.a 100% 1594KB 96.4MB/s 00:00
libhadooputils.a 100% 465KB 73.5MB/s 00:00
libhdfs.so.0.0.0 100% 278KB 32.1MB/s 00:00
wordcount-part 100% 933KB 80.2MB/s 00:00
wordcount-simple 100% 924KB 81.8MB/s 00:00
pipes-sort 100% 910KB 81.9MB/s 00:00
wordcount-nopipe 100% 972KB 67.6MB/s 00:00
libhdfs.so 100% 278KB 63.1MB/s 00:00
libhdfs.a 100% 444KB 42.7MB/s 00:00
libhadoop.so 100% 822KB 64.9MB/s 00:00
libhadoop.a 100% 1406KB 71.3MB/s 00:00
libhadoop.so.1.0.0 100% 822KB 71.9MB/s 00:00
[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# scp -r /yinzhengjie/softwares/hadoop-2.9.2/ node103.yinzhengjie.org.cn:/yinzhengjie/softwares/
......
hadoop-yarn-server-applicationhistoryservice-2.9.2.jar 100% 528KB 92.0MB/s 00:00
mssql-jdbc-6.2.1.jre7.jar 100% 774KB 101.6MB/s 00:00
hadoop-streaming-2.9.2.jar 100% 132KB 65.0MB/s 00:00
commons-math3-3.1.1.jar 100% 1562KB 78.3MB/s 00:00
json-io-2.5.1.jar 100% 73KB 28.8MB/s 00:00
ojalgo-43.0.jar 100% 1625KB 103.0MB/s 00:00
hadoop-azure-datalake-2.9.2.jar 100% 52KB 37.3MB/s 00:00
README.txt 100% 1366 2.0MB/s 00:00
mapred-config.cmd 100% 1640 3.6MB/s 00:00
hdfs-config.sh 100% 1427 2.2MB/s 00:00
httpfs-config.sh 100% 7666 10.0MB/s 00:00
hdfs-config.cmd 100% 1640 2.8MB/s 00:00
yarn-config.cmd 100% 2131 3.4MB/s 00:00
mapred-config.sh 100% 2223 2.4MB/s 00:00
hadoop-config.cmd 100% 8484 13.4MB/s 00:00
hadoop-config.sh 100% 11KB 20.1MB/s 00:00
yarn-config.sh 100% 2134 4.6MB/s 00:00
kms-config.sh 100% 7899 13.5MB/s 00:00
libhadooppipes.a 100% 1594KB 87.6MB/s 00:00
libhadooputils.a 100% 465KB 72.3MB/s 00:00
libhdfs.so.0.0.0 100% 278KB 65.6MB/s 00:00
wordcount-part 100% 933KB 76.3MB/s 00:00
wordcount-simple 100% 924KB 67.2MB/s 00:00
pipes-sort 100% 910KB 91.2MB/s 00:00
wordcount-nopipe 100% 972KB 95.2MB/s 00:00
libhdfs.so 100% 278KB 79.6MB/s 00:00
libhdfs.a 100% 444KB 85.3MB/s 00:00
libhadoop.so 100% 822KB 100.9MB/s 00:00
libhadoop.a 100% 1406KB 86.2MB/s 00:00
libhadoop.so.1.0.0 100% 822KB 73.3MB/s 00:00
[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# scp -r /yinzhengjie/softwares/hadoop-2.9.2/ node110.yinzhengjie.org.cn:/yinzhengjie/softwares/
.......
mssql-jdbc-6.2.1.jre7.jar 100% 774KB 52.9MB/s 00:00
hadoop-streaming-2.9.2.jar 100% 132KB 48.1MB/s 00:00
commons-math3-3.1.1.jar 100% 1562KB 71.7MB/s 00:00
json-io-2.5.1.jar 100% 73KB 37.7MB/s 00:00
ojalgo-43.0.jar 100% 1625KB 57.2MB/s 00:00
hadoop-azure-datalake-2.9.2.jar 100% 52KB 26.5MB/s 00:00
README.txt 100% 1366 1.5MB/s 00:00
mapred-config.cmd 100% 1640 1.8MB/s 00:00
hdfs-config.sh 100% 1427 805.6KB/s 00:00
httpfs-config.sh 100% 7666 8.9MB/s 00:00
hdfs-config.cmd 100% 1640 2.1MB/s 00:00
yarn-config.cmd 100% 2131 2.3MB/s 00:00
mapred-config.sh 100% 2223 3.0MB/s 00:00
hadoop-config.cmd 100% 8484 9.9MB/s 00:00
hadoop-config.sh 100% 11KB 26.7MB/s 00:00
yarn-config.sh 100% 2134 2.7MB/s 00:00
kms-config.sh 100% 7899 9.6MB/s 00:00
libhadooppipes.a 100% 1594KB 79.1MB/s 00:00
libhadooputils.a 100% 465KB 52.7MB/s 00:00
libhdfs.so.0.0.0 100% 278KB 53.3MB/s 00:00
wordcount-part 100% 933KB 74.5MB/s 00:00
wordcount-simple 100% 924KB 74.3MB/s 00:00
pipes-sort 100% 910KB 81.6MB/s 00:00
wordcount-nopipe 100% 972KB 83.4MB/s 00:00
libhdfs.so 100% 278KB 51.4MB/s 00:00
libhdfs.a 100% 444KB 64.2MB/s 00:00
libhadoop.so 100% 822KB 55.9MB/s 00:00
libhadoop.a 100% 1406KB 77.2MB/s 00:00
libhadoop.so.1.0.0 100% 822KB 78.3MB/s 00:00
[root@node101.yinzhengjie.org.cn ~]#
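The three scp commands above differ only in the target host, so a loop is the natural shape for them. A sketch, assuming root already has passwordless SSH to every target (as set up with ssh-copy-id earlier); sync_hadoop_dir is a hypothetical name and DRY_RUN is a made-up switch that prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: push the Hadoop directory from the NameNode host to each given node.
# DRY_RUN=1 (hypothetical switch) prints each scp command instead of running it.

HADOOP_DIR=/yinzhengjie/softwares/hadoop-2.9.2
DEST_PARENT=/yinzhengjie/softwares/

sync_hadoop_dir() {
  local host
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo scp -r "$HADOOP_DIR/" "$host:$DEST_PARENT"
    else
      # Stop at the first failed copy so a broken node is not silently skipped.
      scp -r "$HADOOP_DIR/" "$host:$DEST_PARENT" || return 1
    fi
  done
}

# Usage:
#   sync_hadoop_dir node102.yinzhengjie.org.cn node103.yinzhengjie.org.cn node110.yinzhengjie.org.cn
```

When only the configuration has changed, copying just `$HADOOP_DIR/etc/hadoop` into the same place on each node would usually be enough and much faster than copying the whole installation.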

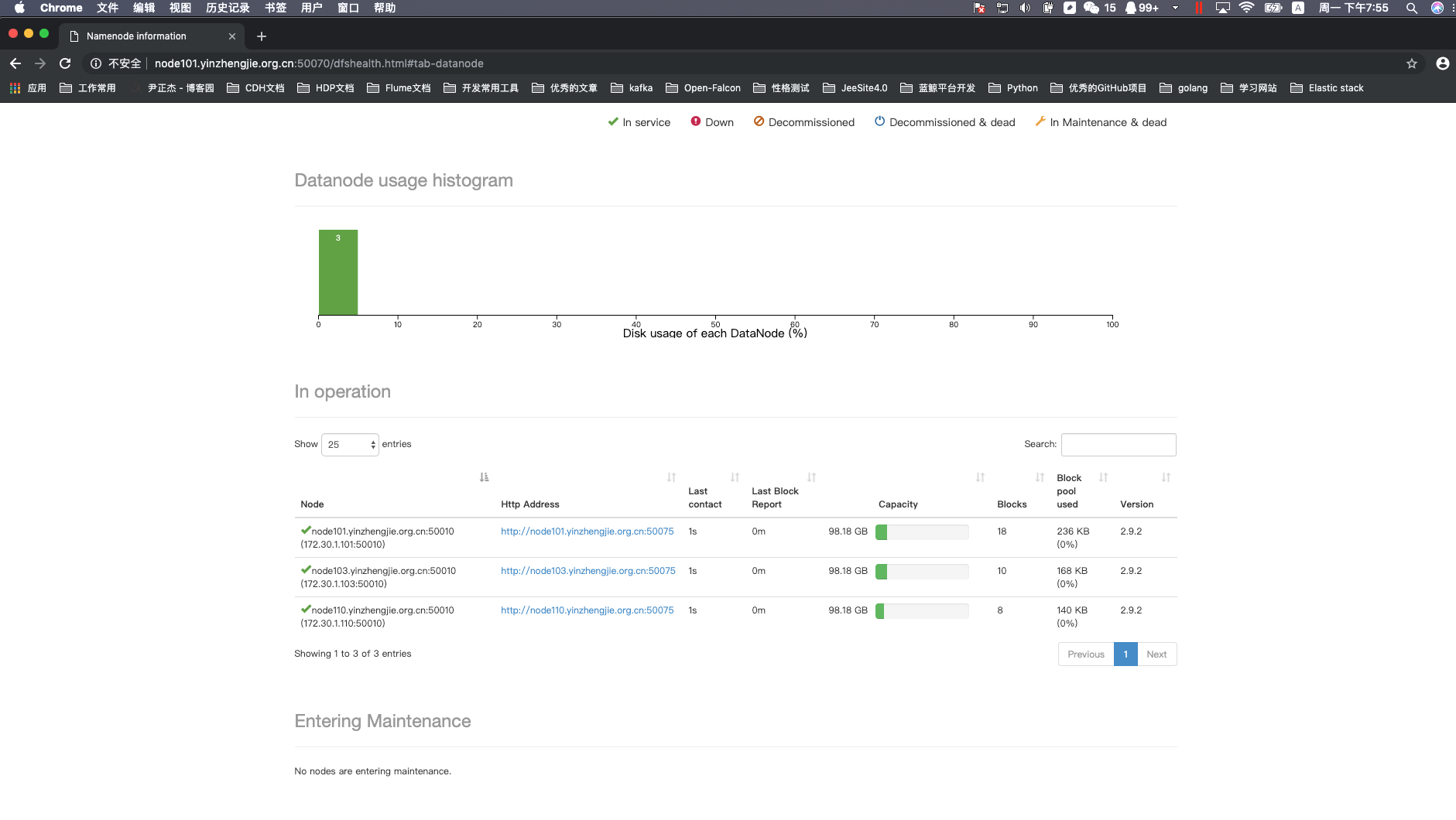

2>.Edit the whitelist, leaving out node102.yinzhengjie.org.cn, then start HDFS again

[root@node101.yinzhengjie.org.cn ~]# cat /data/hadoop/hdfs/DataNodesHostname.txt
node101.yinzhengjie.org.cn
node103.yinzhengjie.org.cn
node110.yinzhengjie.org.cn
[root@node101.yinzhengjie.org.cn ~]#
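The whitelist here is simply the slaves list minus the node being retired, so it can be derived rather than typed by hand. A sketch of that idea; build_whitelist is a hypothetical name, it assumes one hostname per line and no spaces in hostnames, and the paths in the usage comment are the ones used in this cluster.

```shell
#!/usr/bin/env bash
# Sketch: write a dfs.hosts whitelist containing every host from the slaves
# file except the ones passed as extra arguments (the hosts being retired).
# build_whitelist is a hypothetical helper name.

build_whitelist() {
  local slaves_file=$1 out_file=$2
  shift 2
  # "$*" joins the retired hostnames with spaces; awk turns them into a lookup table.
  awk -v retired="$*" '
    BEGIN { n = split(retired, r, " "); for (i = 1; i <= n; i++) skip[r[i]] = 1 }
    NF && !($0 in skip)
  ' "$slaves_file" > "$out_file"
}

# Usage on the NameNode host:
#   build_whitelist /yinzhengjie/softwares/hadoop-2.9.2/etc/hadoop/slaves \
#       /data/hadoop/hdfs/DataNodesHostname.txt node102.yinzhengjie.org.cn
```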

[root@node101.yinzhengjie.org.cn ~]# start-dfs.sh
Starting namenodes on [node101.yinzhengjie.org.cn]
node101.yinzhengjie.org.cn: starting namenode, logging to /yinzhengjie/softwares/hadoop-2.9.2/logs/hadoop-root-namenode-node101.yinzhengjie.org.cn.out
node102.yinzhengjie.org.cn: starting datanode, logging to /yinzhengjie/softwares/hadoop-2.9.2/logs/hadoop-root-datanode-node102.yinzhengjie.org.cn.out
node101.yinzhengjie.org.cn: starting datanode, logging to /yinzhengjie/softwares/hadoop-2.9.2/logs/hadoop-root-datanode-node101.yinzhengjie.org.cn.out
node103.yinzhengjie.org.cn: starting datanode, logging to /yinzhengjie/softwares/hadoop-2.9.2/logs/hadoop-root-datanode-node103.yinzhengjie.org.cn.out
node110.yinzhengjie.org.cn: starting datanode, logging to /yinzhengjie/softwares/hadoop-2.9.2/logs/hadoop-root-datanode-node110.yinzhengjie.org.cn.out
Starting secondary namenodes [account.jetbrains.com]
account.jetbrains.com: starting secondarynamenode, logging to /yinzhengjie/softwares/hadoop-2.9.2/logs/hadoop-root-secondarynamenode-node101.yinzhengjie.org.cn.out
[root@node101.yinzhengjie.org.cn ~]#
[root@node101.yinzhengjie.org.cn ~]# jps
13141 Jps
12389 NameNode
12553 DataNode
12857 SecondaryNameNode
[root@node101.yinzhengjie.org.cn ~]#
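The start-dfs.sh output still launches a DataNode on node102: start-dfs.sh follows the slaves file, which still lists it, while the NameNode only accepts hosts from the whitelist, so node102 should be refused when it tries to register. A sketch that lists such mismatched hosts; slaves_not_whitelisted is a hypothetical name. Removing the reported hosts from slaves as well keeps the two files consistent.

```shell
#!/usr/bin/env bash
# Sketch: print hosts that are in the slaves file (so start-dfs.sh starts them)
# but missing from the dfs.hosts whitelist (so the NameNode refuses them).
# slaves_not_whitelisted is a hypothetical helper name.

slaves_not_whitelisted() {
  local slaves_file=$1 include_file=$2
  awk -v inc="$include_file" '
    FILENAME == inc { allowed[$0] = 1; next }   # first file: the whitelist
    NF && !($0 in allowed)                      # second file: slaves lines not allowed
  ' "$include_file" "$slaves_file"
}

# Usage on the NameNode host:
#   slaves_not_whitelisted /yinzhengjie/softwares/hadoop-2.9.2/etc/hadoop/slaves \
#       /data/hadoop/hdfs/DataNodesHostname.txt
```

Comparing by file name rather than the common `NR == FNR` idiom keeps the check correct even when the whitelist file is empty.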

3>.Commissioning and decommissioning for YARN

The configuration follows almost exactly the same pattern as for the DataNodes above. For details, see: https://www.cnblogs.com/yinzhengjie/p/9101070.html.
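For YARN, the ResourceManager reads its node lists from the files named by the yarn-site.xml properties yarn.resourcemanager.nodes.include-path and yarn.resourcemanager.nodes.exclude-path, and yarn rmadmin -refreshNodes makes it reload them. The sketch below mirrors the HDFS blacklist step under stated assumptions: exclude-path is already configured, the default file path is hypothetical, and YARN_BIN is a made-up override for dry runs.

```shell
#!/usr/bin/env bash
# Sketch: retire a NodeManager by adding it to the YARN exclude file once,
# then asking the ResourceManager to reload its node lists.
# Assumes yarn.resourcemanager.nodes.exclude-path points at YARN_EXCLUDE_FILE;
# the default path below is hypothetical. YARN_BIN is a hypothetical override.

YARN_EXCLUDE_FILE=${YARN_EXCLUDE_FILE:-/data/hadoop/yarn/nodes.exclude.txt}

yarn_exclude_and_refresh() {
  local host=$1
  touch "$YARN_EXCLUDE_FILE" || return 1
  # Append the host only if it is not already listed (whole-line, literal match).
  grep -qxF "$host" "$YARN_EXCLUDE_FILE" || echo "$host" >> "$YARN_EXCLUDE_FILE"
  "${YARN_BIN:-yarn}" rmadmin -refreshNodes
}

# Usage on the ResourceManager host:
#   yarn_exclude_and_refresh node102.yinzhengjie.org.cn
```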