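- 1. Motivation: I wanted to batch-download audiobooks, talk shows, and similar audio from the web, but such resources are scarce online. Feel free to give this a try.

- 2. Tech stack: Wireshark, Scrapy, JSON, MongoDB

- 3. Approach: use Wireshark to analyze the URLs the mobile apps request (categories, lists, download addresses, and so on); the responses are JSON

- 4. Approach: parse the JSON with Scrapy and build the download links

- 5. Approach: store the results in MongoDB

- 6. Difficulty: the hard part is working out the various URLs with Wireshark; the rest is basic Scrapy usage, all of it covered in the official documentation

- 7. The walkthrough below follows the file tree generated by `tree /F`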
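Steps 3 to 5 can be tried out without Scrapy. The snippet below is a minimal sketch, assuming a made-up JSON payload and the placeholder host `example.invalid`; the field names `data`, `id`, `title`, and `file_path` and the helper `build_records` are illustrative assumptions, not any app's real schema.

```python
import json

# Hypothetical response body, shaped like the JSON a mobile app API might return.
SAMPLE_BODY = """
{"data": [
    {"id": 1, "title": "Episode 1", "file_path": "audio/ep1.m4a"},
    null,
    {"id": 2, "title": "Episode 2", "file_path": "audio/ep2.m4a"}
]}
"""

# Hypothetical download host; a real one would come from the captured traffic.
DOWNLOAD_TEMPLATE = "http://example.invalid/%(file_path)s"


def build_records(body):
    """Parse a JSON listing and return one record per non-null entry."""
    records = []
    for entry in json.loads(body).get("data") or []:
        if entry is None:
            continue
        records.append({
            "id": str(entry["id"]),
            "title": entry["title"],
            # A dict on the right of % fills the named %(file_path)s placeholder.
            "file_path": DOWNLOAD_TEMPLATE % entry,
        })
    return records


if __name__ == "__main__":
    for record in build_records(SAMPLE_BODY):
        print(json.dumps(record, ensure_ascii=False))
```

Each record is a plain dict, so it can be written out as a JSON line or handed to a database client unchanged.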

1 items.py: field definitions; adjust as needed

'''

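from scrapy import Item, Field


class QtscrapyItem(Item):
    id = Field()           # episode id, stored as a string
    parent_info = Field()  # "<parent id>_<parent name>" of the album or book
    title = Field()
    update_time = Field()  # date part only; empty when the source has none
    file_path = Field()    # download URL
    source = Field()       # "qingting", "kuwo", or "lr"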

'''

2 pipelines.py: field settings and related processing; adjust as needed

'''

import codecs
import json

import pymongo
from scrapy.conf import settings


class QtscrapyPipeline(object):
    """Write each item to a file, one JSON object per line."""

    def __init__(self):
        self.file = codecs.open('qingting_209.json', 'wb', encoding='utf-8')

    def process_item(self, item, spider):
        line = json.dumps(dict(item), ensure_ascii=False) + "\n"
        # print(line)
        self.file.write(line)
        return item


class QtscrapyMongoPipeline(object):
    """Insert each item into the MongoDB collection named in settings.py."""

    def __init__(self):
        host = settings['MONGODB_HOST']
        port = settings['MONGODB_PORT']
        dbName = settings['MONGODB_DBNAME']
        client = pymongo.MongoClient(host=host, port=port)
        tdb = client[dbName]
        self.post = tdb[settings['MONGODB_DOCNAME']]

    def process_item(self, item, spider):
        qtfm = dict(item)
        self.post.insert(qtfm)
        return item

'''
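On newer Scrapy and PyMongo releases, `scrapy.conf` and `Collection.insert` may no longer be available. One possible variant, sketched under that assumption, reads the settings through the `from_crawler` hook and writes with `insert_one`. The class name `MongoPipelineSketch` and the fallback defaults are hypothetical; the setting names are the ones used in settings.py.

```python
class MongoPipelineSketch(object):
    """Hypothetical variant of QtscrapyMongoPipeline for newer library versions."""

    def __init__(self, host, port, db_name, collection_name):
        self.host = host
        self.port = port
        self.db_name = db_name
        self.collection_name = collection_name
        self.client = None
        self.collection = None

    @classmethod
    def from_crawler(cls, crawler):
        # Scrapy calls this hook and exposes the project settings on the crawler.
        s = crawler.settings
        return cls(
            host=s.get("MONGODB_HOST", "127.0.0.1"),
            port=int(s.get("MONGODB_PORT", 27017)),
            db_name=s.get("MONGODB_DBNAME", "qingtingDB"),
            collection_name=s.get("MONGODB_DOCNAME", "qingting"),
        )

    def open_spider(self, spider):
        # Imported here so the class can be defined without PyMongo installed.
        import pymongo
        self.client = pymongo.MongoClient(host=self.host, port=self.port)
        self.collection = self.client[self.db_name][self.collection_name]

    def close_spider(self, spider):
        if self.client is not None:
            self.client.close()

    def process_item(self, item, spider):
        # insert_one stores a copy of the item as one document.
        self.collection.insert_one(dict(item))
        return item
```

Opening the connection in `open_spider` and closing it in `close_spider` ties the client's lifetime to the crawl instead of to module import.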

3 settings.py: basic configuration, including the database storage settings. QtscrapyPipeline refers to the class defined in pipelines.py

'''

ITEM_PIPELINES = {
    # 'qtscrapy.pipelines.QtscrapyPipeline': 300,
    'qtscrapy.pipelines.QtscrapyMongoPipeline': 300,
}

MONGODB_HOST = '127.0.0.1'
MONGODB_PORT = 12345
MONGODB_DBNAME = 'qingtingDB'
MONGODB_DOCNAME = 'qingting'

'''
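Both pipelines can also be enabled at the same time. In Scrapy the integer is the run order: items pass through lower-valued pipelines first, and the values are conventionally kept in the 0 to 1000 range. A possible configuration, with 400 chosen arbitrarily for the second entry:

```python
# settings.py fragment: write the JSON-lines file first, then insert into MongoDB.
ITEM_PIPELINES = {
    'qtscrapy.pipelines.QtscrapyPipeline': 300,
    'qtscrapy.pipelines.QtscrapyMongoPipeline': 400,
}
```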

└─spiders

4 qingting.py: the spider; this is where everyone can show off their own tricks

'''

import json
import sys

from scrapy.http import Request
from scrapy.spiders import BaseSpider
from scrapy_redis.spiders import RedisSpider

from qtscrapy.items import QtscrapyItem

# Python 2 only: make utf-8 the default string encoding
reload(sys)
sys.setdefaultencoding("utf-8")

# 1 Kuwo Tingshu URL analysis
# http://ts.kuwo.cn/service/gethome.php?act=new_home
# http://ts.kuwo.cn/service/getlist.v31.php?act=catlist&id=97
# http://ts.kuwo.cn/service/getlist.v31.php?act=cat&id=21&type=hot
# http://ts.kuwo.cn/service/getlist.v31.php?act=detail&id=100102396


# 2 To run with Redis, declare the class as: class qtscrapy(RedisSpider):
class qtscrapy(BaseSpider):
    name = "qingting"
    # redis_key = 'qingting:start_urls'
    base_url = "http://api2.qingting.fm/v6/media/recommends/guides/section/"
    start_urls = [
        "http://api2.qingting.fm/v6/media/recommends/guides/section/0",
        "http://ts.kuwo.cn/service/gethome.php?act=new_home",
        "http://api.mting.info/yyting/bookclient/ClientTypeResource.action?type=0&pageNum=0&pageSize=500&token=_4WfzpCah8ujgJZZzboaUGkJQvWGfEEL-zdukwv7lbY*&q=0&imei=ODY1MTY2MDIxNzMzNjI0",
    ]
    allowed_domains = ["api2.qingting.fm", "ts.kuwo.cn", "api.mting.info"]

    def parse(self, response):
        # 3 Dispatch on the response URL; still undecided between running
        # several Scrapy spiders and mixing the sources like this
        if "qingting" in response.url:
            qt_json = json.loads(response.body, encoding="utf-8")
            if qt_json["data"] is not None:
                for data in qt_json["data"]:
                    if data is not None:
                        for de in data["recommends"]:
                            if de["parent_info"] is not None:
                                jm_url = "http://api2.qingting.fm/v6/media/channelondemands/%(parent_id)s/programs/curpage/1/pagesize/1000" % \
                                    de["parent_info"]
                                yield Request(jm_url, callback=self.get_qt_jmlist, meta={"de": de})
            # Walk the other recommendation sections as well
            for i in range(0, 250):
                url = self.base_url + str(i)
                yield Request(url, callback=self.parse)

        if "kuwo" in response.url:
            kw_json = json.loads(response.body, encoding="utf-8")
            if kw_json["cats"] is not None:
                for data in kw_json["cats"]:
                    pp_id = data["Id"]
                    kw_url = "http://ts.kuwo.cn/service/getlist.v31.php?act=catlist&id=%s" % pp_id
                    yield Request(kw_url, callback=self.get_kw_catlist)

        if "mting" in response.url:
            # print(response)
            lr_json = json.loads(response.body, encoding="utf-8")
            if len(lr_json["list"]) > 0:
                for l in lr_json["list"]:
                    try:
                        lr_url = "http://api.mting.info/yyting/bookclient/ClientTypeResource.action?type=%(id)s&pageNum=0&pageSize=1000&sort=2&token=_4WfzpCah8ujgJZZzboaUGkJQvWGfEEL-zdukwv7lbY*&imei=ODY1MTY2MDIxNzMzNjI0" % l
                        yield Request(lr_url, callback=self.get_lr_booklist)
                    except Exception:
                        pass
            # Probe a range of type ids; the original formatted the undefined name t here
            for r in range(-10, 1000):
                lr_url = "http://api.mting.info/yyting/bookclient/ClientTypeResource.action?type=%s&pageNum=0&pageSize=1000&token=_4WfzpCah8ujgJZZzboaUGkJQvWGfEEL-zdukwv7lbY*&q=0&imei=ODY1MTY2MDIxNzMzNjI0" % r
                yield Request(lr_url, callback=self.parse)

    # 4 How many levels of callbacks are needed depends on the app's structure
    def get_qt_jmlist(self, response):
        jm_json = json.loads(response.body, encoding="utf-8")
        de = response.meta["de"]
        for jm_data in jm_json["data"]:
            if jm_data is None:
                continue
            try:
                file_path = "http://upod.qingting.fm/%(file_path)s?deviceid=ffffffff-ebbe-fdec-ffff-ffffb1c8b222" % \
                    jm_data["mediainfo"]["bitrates_url"][0]
                item = QtscrapyItem()
                # print(item)
                # print(jm_data["id"])
                item["id"] = str(jm_data["id"])
                parent_info = "%(parent_id)s_%(parent_name)s" % de["parent_info"]
                item["parent_info"] = parent_info
                item["title"] = jm_data["title"]
                # Keep only the part before the first space (the date)
                update_time = str(jm_data["update_time"])
                item["update_time"] = update_time[:update_time.index(' ')]
                item["file_path"] = file_path
                item["source"] = "qingting"
                yield item
            except Exception:
                pass

    def get_kw_catlist(self, response):
        kw_json = None  # defined up front so the except branch can print it
        try:
            kw_json = json.loads(response.body, encoding="utf-8")
            if kw_json["sign"] is not None:
                if kw_json["list"] is not None:
                    for data in kw_json["list"]:
                        p_id = data["Id"]
                        kw_p_url = "http://ts.kuwo.cn/service/getlist.v31.php?act=cat&id=%s&type=hot" % p_id
                        yield Request(kw_p_url, callback=self.get_kw_cat)
        except Exception:
            print("*" * 300)
            print(self.name, kw_json)

    def get_kw_cat(self, response):
        kw_json = None  # defined up front so the except branch can print it
        try:
            kw_json = json.loads(response.body, encoding="utf-8")
            if kw_json["sign"] is not None:
                if kw_json["list"] is not None:
                    for data in kw_json["list"]:
                        # Build a fresh dict per request; sharing one dict would
                        # leave every callback seeing the last entry's values
                        p_info = {"p_id": data["Id"], "p_name": data["Name"]}
                        kw_pp_url = "http://ts.kuwo.cn/service/getlist.v31.php?act=detail&id=%s" % data["Id"]
                        yield Request(kw_pp_url, callback=self.get_kw_jmlist, meta={"p_info": p_info})
        except Exception:
            print("*" * 300)
            print(self.name, kw_json)

    def get_kw_jmlist(self, response):
        jm_json = json.loads(response.body, encoding="utf-8")
        p_info = response.meta["p_info"]
        for jm_data in jm_json["Chapters"]:
            if jm_data is None:
                continue
            try:
                file_path = "http://cxcnd.kuwo.cn/tingshu/res/WkdEWF5XS1BB/%s" % jm_data["Path"]
                item = QtscrapyItem()
                item["id"] = str(jm_data["Id"])
                parent_info = "%(p_id)s_%(p_name)s" % p_info
                item["parent_info"] = parent_info
                item["title"] = jm_data["Name"]
                item["update_time"] = ""
                item["file_path"] = file_path
                item["source"] = "kuwo"
                yield item
            except Exception:
                pass

    def get_lr_booklist(self, response):
        s_lr_json = json.loads(response.body, encoding="utf-8")
        if len(s_lr_json["list"]) > 0:
            for s_lr in s_lr_json["list"]:
                s_lr_url = "http://api.mting.info/yyting/bookclient/ClientGetBookResource.action?bookId=%(id)s&pageNum=1&pageSize=2000&sortType=0&token=_4WfzpCah8ujgJZZzboaUGkJQvWGfEEL-zdukwv7lbY*&imei=ODY1MTY2MDIxNzMzNjI0" % s_lr
                # Pass the book's id and name along to the chapter callback
                meta = {"id": s_lr["id"], "name": s_lr["name"]}
                yield Request(s_lr_url, callback=self.get_lr_kmlist, meta={"meta": meta})

    def get_lr_kmlist(self, response):
        ss_lr_json = json.loads(response.body, encoding="utf-8")
        parent = response.meta["meta"]
        if len(ss_lr_json["list"]) > 0:
            for ss_lr in ss_lr_json["list"]:
                try:
                    item = QtscrapyItem()
                    item["id"] = str(ss_lr["id"])
                    parent_info = "%(id)s_%(name)s" % parent
                    item["parent_info"] = parent_info
                    item["title"] = ss_lr["name"]
                    item["update_time"] = ""
                    item["file_path"] = ss_lr["path"]
                    item["source"] = "lr"
                    yield item
                except Exception:
                    pass

'''
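The `parse` method picks its branch by testing for a substring of `response.url`. One alternative, offered as a sketch and not as the author's method, is to parse out the hostname and look it up in a table, which avoids accidental matches in the path or query string. The helper `source_for` and the `SOURCES` table are hypothetical names; the hostnames are the ones listed in `allowed_domains`.

```python
try:
    from urllib.parse import urlparse  # Python 3
except ImportError:
    from urlparse import urlparse  # Python 2

# Map each allowed domain to the label stored in the item's "source" field.
SOURCES = {
    "api2.qingting.fm": "qingting",
    "ts.kuwo.cn": "kuwo",
    "api.mting.info": "lr",
}


def source_for(url):
    """Return the source label for a URL, or None when the host is unknown."""
    return SOURCES.get(urlparse(url).hostname)


if __name__ == "__main__":
    print(source_for("http://ts.kuwo.cn/service/gethome.php?act=new_home"))
```

Inside `parse`, the returned label could then select the branch to run, and a `None` result could be skipped or logged.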

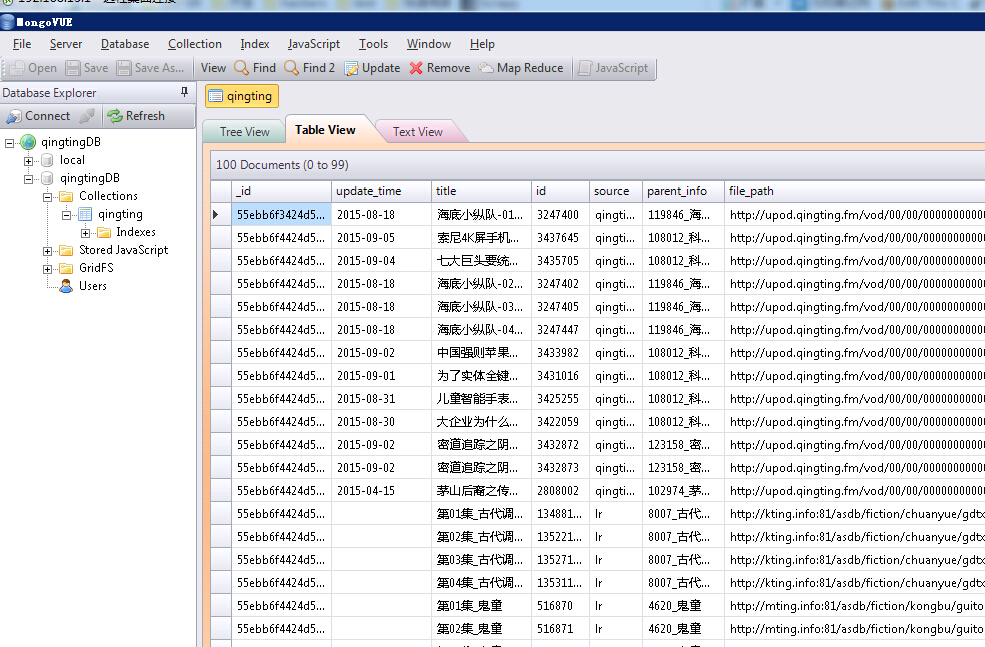

5 Results: roughly 400,000 records were crawled