Translated and adapted from https://open-cas.github.io/cache.html

https://open-cas.github.io/doxygen/ocf/

https://www.sdnlab.com/23341.html

Related concepts

1.cache

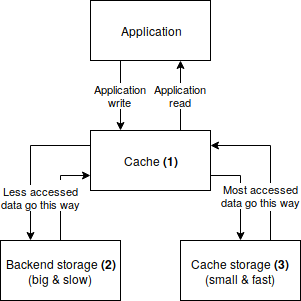

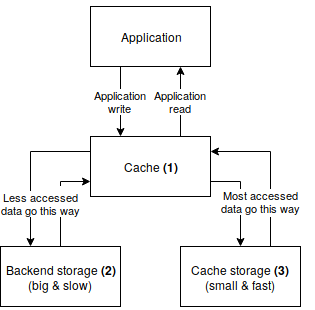

In general a cache (1) is a component that mediates data exchange between an application and a backend storage (2), selectively storing most accessed data on a relatively smaller and faster cache storage (3). Every time the cached data is accessed by the application, an I/O operation is performed on the cache storage, decreasing data access time and improving performance of the whole system.

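A cache line is the basic unit in which the cache handles application data. Every cache line corresponds to one core line, but at any given time not every core line has a corresponding cache line. If it has one, the core line is mapped into the cache; otherwise it is unmapped.

When an application performs an I/O operation, the cache checks whether the accessed core line is already mapped to a cache line. If it is not, this is a cache miss, and the cache engine has to access the data on the backend storage. The cache mode then decides whether the core line gets mapped to a cache line; if it does, a cache insert is performed. On a cache hit, the application gets the data from the cache.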

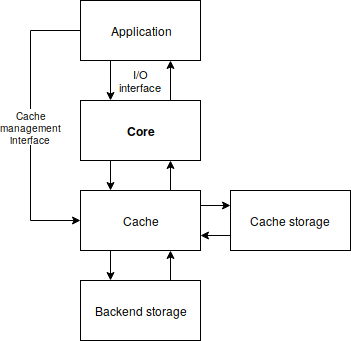

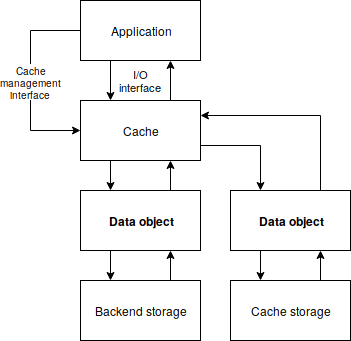
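The hit/miss flow described above can be sketched as a toy model. This is only an illustration and not OCF code: `ToyCache`, `insert_on_miss`, and the dictionary used as backend storage are names made up for this example, and the cache mode decision is reduced to a single boolean.

```python
class ToyCache:
    """Toy read path: (core_id, core_line) -> data held in the cache."""

    def __init__(self, backend, insert_on_miss=True):
        self.backend = backend                # dict standing in for backend storage
        self.lines = {}                       # mapped core lines and their cached data
        self.insert_on_miss = insert_on_miss  # hypothetical stand-in for the cache mode
        self.hits = 0
        self.misses = 0

    def read(self, core_id, core_line):
        key = (core_id, core_line)
        if key in self.lines:                 # cache hit: serve from cache storage
            self.hits += 1
            return self.lines[key]
        self.misses += 1                      # cache miss: go to backend storage
        data = self.backend[key]
        if self.insert_on_miss:               # the mode allows mapping and insertion
            self.lines[key] = data
        return data


backend = {(0, 7): b"payload"}
cache = ToyCache(backend)
first = cache.read(0, 7)    # miss; the core line is then mapped and inserted
second = cache.read(0, 7)   # hit; served from the cache
```

Setting `insert_on_miss=False` models a mode in which a missed read is served from the backend but never promoted to the cache.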

cache object

In OCF, a cache object provides an interface for interacting with the cache state machine. Its API can attach cache storage, add and remove cores, modify various configuration parameters, and get statistics. Each cache operates on a single cache storage (cache volume), which holds both data and metadata.

A single cache can handle data from many cores; this is called a multi-core configuration. The core object can become backend storage of another core or cache storage of some cache, which makes it possible to construct complex multilevel configurations.

2.cache operation

mapping

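Mapping is an operation of linking up a core line with a cache line in the cache metadata. During mapping, a cache-line-sized area of the cache storage is assigned to the core line. In subsequent cache operation this area can be used to store the core line's data until the cache line is evicted.

Mapping updates the core id and core line number in the cache line metadata, as well as the hash table information.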
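As a rough sketch of what mapping touches, the model below keeps per-line metadata (core id, core line number) plus a hash table for lookups. The class and field names (`MappingTable`, `LineMeta`, `free`) are hypothetical, and OCF's real metadata layout is more involved than this.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LineMeta:
    core_id: Optional[int] = None      # which core the mapped core line belongs to
    core_line: Optional[int] = None    # core line number on that core


class MappingTable:
    def __init__(self, num_cache_lines):
        self.meta = [LineMeta() for _ in range(num_cache_lines)]
        self.free = list(range(num_cache_lines))  # unmapped cache lines
        self.hash = {}                             # (core_id, core_line) -> cache line index

    def lookup(self, core_id, core_line):
        return self.hash.get((core_id, core_line))

    def map(self, core_id, core_line):
        idx = self.lookup(core_id, core_line)
        if idx is not None:
            return idx                             # already mapped
        if not self.free:
            raise RuntimeError("no free cache line; eviction is needed first")
        idx = self.free.pop(0)
        self.meta[idx] = LineMeta(core_id, core_line)  # update cache line metadata
        self.hash[(core_id, core_line)] = idx          # update hash table
        return idx


table = MappingTable(num_cache_lines=2)
idx = table.map(core_id=0, core_line=42)
```

Note that mapping alone stores no data: it only reserves the cache line and records where it belongs.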

insertion

Insertion is an operation of writing core line data to a cache line. During insertion the data of the I/O request is written to the area of cache storage that holds the cache line corresponding to the accessed core line. The valid and dirty bits of the cache line are updated in the metadata.

Insertion does not change the cache line mapping metadata; it only changes valid and dirty bits. Because of that, it can be done only after a successful mapping operation. However, unlike mapping, which operates on whole cache lines, insertion is performed with sector granularity. Every change of a valid bit from 0 to 1 is considered an insertion.

Insertion may occur for both read and write requests. Additionally, in Write-Back mode an insertion may introduce dirty data. In that situation, besides setting the valid bit, it also sets the dirty bit for the accessed sectors.
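The sector-granular bookkeeping can be illustrated with bitmasks. This is a minimal sketch, assuming 8 sectors per cache line (for example a 4 KiB line with 512-byte sectors); `LineBits`, `sector_mask`, and `insert` are illustrative names, not OCF functions.

```python
SECTORS_PER_LINE = 8  # assumption: 4 KiB cache line, 512-byte sectors


class LineBits:
    def __init__(self):
        self.valid = 0   # bit i set -> sector i holds valid data
        self.dirty = 0   # bit i set -> sector i is newer than the backend copy


def sector_mask(first, count):
    """Bitmask covering sectors first .. first + count - 1."""
    assert 0 <= first and first + count <= SECTORS_PER_LINE
    return ((1 << count) - 1) << first


def insert(bits, first, count, write_back=False):
    """Mark sectors valid; return how many valid bits went from 0 to 1."""
    mask = sector_mask(first, count)
    newly_valid = mask & ~bits.valid
    bits.valid |= mask
    if write_back:                 # a Write-Back write also makes the sectors dirty
        bits.dirty |= mask
    return bin(newly_valid).count("1")


bits = LineBits()
n_read = insert(bits, first=0, count=4)                     # read fill of sectors 0..3
n_write = insert(bits, first=4, count=2, write_back=True)   # WB write to sectors 4..5
```

After both calls, sectors 0 to 5 are valid and only sectors 4 and 5 are dirty.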

update

Update is an operation of overwriting cache line data in the cache storage. An update happens when the core line targeted by a write request is already mapped to a cache line and the accessed sectors are valid. If some of the written sectors in the cache line are invalid, those sectors are inserted, which means that update and insertion may both be performed within a single I/O request.

Update can be performed only during a write request (a read can never change the data) and, similarly to insertion, it can introduce dirty data, so it may update the dirty bit for accessed sectors in the cache line. However, it will never change a valid bit, as update touches only sectors that are already valid.
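To make the update/insertion split concrete, the helper below classifies the sectors of one write to an already mapped cache line. It is a sketch under the same bitmask assumption as before (one bit per sector); `classify_write` is a hypothetical name.

```python
def classify_write(valid_bits, first, count):
    """Split the written sectors of a mapped cache line into (updated, inserted) bitmasks."""
    mask = ((1 << count) - 1) << first
    updated = mask & valid_bits      # already valid: overwritten in place
    inserted = mask & ~valid_bits    # invalid so far: valid bit goes 0 -> 1
    return updated, inserted


valid = 0b00001111                                            # sectors 0..3 are valid
updated, inserted = classify_write(valid, first=2, count=4)   # write to sectors 2..5
new_valid = valid | inserted
```

Here a single write request updates sectors 2 and 3 and inserts sectors 4 and 5.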

invalidation

Invalidation is an operation of marking one or more cache line sectors as invalid.

It can be done, for example, while handling a discard request, during a purge operation, or as a result of some internal cache operations. The cases in which a cache line returns to the invalid state are listed in the section on valid and dirty bits.

eviction

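Eviction is an operation of removing cache line mappings. It is performed when the cache does not have enough space for mapping data of incoming I/O requests. The cache lines to evict are selected by the eviction policy algorithm. LRU (least recently used) selects the cache lines that have gone the longest without being accessed.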
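A minimal LRU sketch follows. It uses Python's `collections.OrderedDict` to track recency and ignores dirty data entirely (a real cache would have to write dirty sectors back before dropping a mapping). `LruMapping` and its fields are illustrative names only.

```python
from collections import OrderedDict


class LruMapping:
    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()   # core line -> cache line index, least recent first
        self.evicted = []            # core lines whose mappings were removed

    def access(self, core_line):
        if core_line in self.lines:
            self.lines.move_to_end(core_line)        # now the most recently used
            return self.lines[core_line]
        if len(self.lines) >= self.capacity:
            victim, idx = self.lines.popitem(last=False)  # least recently used
            self.evicted.append(victim)              # its cache line is reused
        else:
            idx = len(self.lines)                    # next unused cache line
        self.lines[core_line] = idx
        return idx


lru = LruMapping(capacity=2)
for line in (10, 11, 10, 12):
    lru.access(line)
```

With two cache lines, accessing core line 12 evicts core line 11, because core line 10 was touched more recently.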

flushing

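Flushing is an operation of synchronizing data in the cache storage and the backend storage. It reads the sectors marked as dirty from the cache storage, writes them to the backend storage, and then sets the dirty bits in the cache line metadata to 0.

Flushing is a cache management operation and must be triggered by the user. It is usually performed before detaching a core, to prevent data loss.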
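The flush steps (read dirty sectors, write them to the backend, clear the dirty bits) can be sketched for a single cache line as follows. The 512-byte sector size, the `bytearray` buffers, and the function name `flush_line` are assumptions made for the example.

```python
SECTOR = 512  # assumption: 512-byte sectors


def flush_line(cache_data, dirty_bits, backend, core_offset, sectors_per_line=8):
    """Copy the dirty sectors of one cache line to the backend; return the new dirty bits."""
    for s in range(sectors_per_line):
        if dirty_bits & (1 << s):
            start = s * SECTOR
            dst = core_offset + start
            backend[dst:dst + SECTOR] = cache_data[start:start + SECTOR]
    return 0  # every dirty sector has been written back


line = bytearray(8 * SECTOR)                      # one 4 KiB cache line
line[SECTOR:2 * SECTOR] = b"\xAA" * SECTOR        # sector 1 holds new data
backend = bytearray(16 * SECTOR)                  # backend storage with two core lines
dirty = flush_line(line, dirty_bits=0b10, backend=backend, core_offset=8 * SECTOR)
```

Only sector 1 of the second core line is rewritten on the backend; clean sectors are left alone.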

cleaning

Cleaning is an operation very similar to flushing, but it's performed by the cache automatically as a background task. It is controlled by the cleaning policy. OCF currently supports three cleaning policies:

- Approximately Least Recently Used (ALRU),

- Aggressive Cleaning Policy (ACP),

- No Operation (NOP).

3.cache configuration

The cache mode parameter determines how incoming I/O requests are handled by the cache engine. Depending on the cache mode, some requests may be inserted into the cache or not. The cache mode also determines whether data stored in the cache is always coherent with data stored on the backend storage, or whether dirty data is possible. The cache modes covered here are:

- Write-Through (WT),

- Write-Back (WB),

- Write-Around (WA),

- Write-Invalidate (WI),

- Write-Only (WO),

- Pass-Through (PT).

(1)Write-Through(WT):

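When I/O is written to the cache device, it is also written through directly to the backend core device. WT mode guarantees that the data on the core device and the cache device is always in sync, so there is no risk of losing cached data on a sudden power loss. Because every write still has to reach the slow backend device, this mode can only accelerate reads.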

(2)Write-Back (WB):

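On an I/O write, the data is first written to the cache device, and the application is then told that the write has completed. These writes are periodically written back to the core device. CAS can use different cleaning policies here, such as ALRU (Approximately Least Recently Used), ACP (Aggressive Cleaning Policy), or NOP (No Operation, meaning that nothing is written back automatically and the user has to flush explicitly). WB mode can therefore accelerate both reads and writes. However, a sudden power loss can cause data loss if cached data has not yet been written back to the core device.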

(3)Write-Around (WA):

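Before describing this mode, a word on cache pollution. Recall that the cache device and the core device are related through the mapping between cache lines and core lines. Cache pollution means that a lot of rarely used data has been cached on the cache device. When new write I/O then arrives and there is no free cache line, and so not enough space for mapping, an existing cache line mapping has to be evicted, which causes extra overhead.

WA mode is somewhat similar to WT mode. The difference is that a write updates both the cache device and the core device only if the core line already has a corresponding cache line (that is, the data has already been cached by a read). In all other cases the write goes directly to the core device. WA mode therefore also accelerates only reads and keeps the data consistent, and in addition it avoids cache pollution.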

(4)Write-Invalidate(WI)

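In this mode only read operations are mapped into the cache. A write goes directly to the core device, and if a corresponding cache line exists in the cache, that cache line is invalidated. WI mode gives better acceleration for read-intensive I/O and reduces the number of evictions.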

(5)Write-only (WO)

Writes go to the cache; reads are served from the backend and are not placed in the cache. In Write-Only mode, the cache engine writes the data exactly as in Write-Back mode, so the data is written to cache storage without being written to backend storage immediately. Read operations do not promote data to the cache. Write-Only mode accelerates only write-intensive operations, as reads have to be performed on the backend storage. There is a risk of data loss if the cache storage fails before the data is written to the backend storage.

(6)Pass-Through (PT)

This one is easy to understand: in PT mode all I/O bypasses the cache device and goes straight to the core device.
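The six modes can be condensed into a small decision function. This is a simplified reading of the descriptions above, not OCF's request engine: `Mode`, `write_plan`, and `read_fills_cache` are hypothetical names, and the Pass-Through invalidation follows the remark in these notes that Pass-Through is one of the modes that may invalidate.

```python
from enum import Enum


class Mode(Enum):
    WT = "write-through"
    WB = "write-back"
    WA = "write-around"
    WI = "write-invalidate"
    WO = "write-only"
    PT = "pass-through"


def write_plan(mode, mapped):
    """Return (write_to_cache, write_to_backend, invalidate) for one write request.

    `mapped` says whether the target core line already has a cache line.
    """
    if mode is Mode.WT:
        return (True, True, False)      # both copies always in sync
    if mode in (Mode.WB, Mode.WO):
        return (True, False, False)     # backend is updated later (dirty data)
    if mode is Mode.WA:
        return (mapped, True, False)    # cache is touched only if already mapped
    # WI and PT: write to the backend, drop any stale cached copy
    return (False, True, mapped)


def read_fills_cache(mode):
    """Whether a read miss may promote data into the cache."""
    return mode not in (Mode.WO, Mode.PT)
```

For example, `write_plan(Mode.WA, mapped=False)` sends the write only to the backend, which is how Write-Around avoids polluting the cache with write-only data.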

cache line size

The cache line size parameter determines the size of a data block on which the cache operates (cache line). It’s the minimum portion of data on the backend storage (core line) that can be mapped into the cache.

OCF allows the cache line size to be set to one of the following values:

- 4 KiB,

- 8 KiB,

- 16 KiB,

- 32 KiB,

- 64 KiB.
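Because mappings are aligned to cache-line-sized blocks, the cache line size decides which core lines an I/O request touches. The sketch below computes that from a byte offset and length; the function name `core_lines_for_request` is an assumption for this example.

```python
KiB = 1024
ALLOWED_LINE_SIZES = (4 * KiB, 8 * KiB, 16 * KiB, 32 * KiB, 64 * KiB)


def core_lines_for_request(offset, length, line_size):
    """Return the core line numbers touched by a request of `length` bytes at `offset`."""
    if line_size not in ALLOWED_LINE_SIZES:
        raise ValueError("unsupported cache line size")
    if length <= 0:
        raise ValueError("length must be positive")
    first = offset // line_size
    last = (offset + length - 1) // line_size
    return list(range(first, last + 1))


small = core_lines_for_request(offset=6 * KiB, length=4 * KiB, line_size=4 * KiB)
large = core_lines_for_request(offset=6 * KiB, length=4 * KiB, line_size=64 * KiB)
```

The same 4 KiB request at offset 6 KiB spans core lines 1 and 2 with 4 KiB lines, but fits entirely into core line 0 with 64 KiB lines.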

4.core

The core object is an abstraction that allows an application to access backend storage cached by a cache. It provides an API for submitting I/O requests, which are handled according to the current cache mode and other cache and core configuration parameters. During normal cache operation the backend storage is exclusively owned by the cache object, and the application should never access it directly unless the core is removed from the cache. That is, using the core API is the only proper way of accessing the data on the backend storage.

5.volume

A volume is a generic representation of storage that allows OCF to access different types of storage through a common abstract interface. OCF uses the volume interface for accessing both backend storage and cache storage. Storage represented by a volume may be any kind of storage that allows random block access: an HDD or SSD drive, a ramdisk, network storage, or any other kind of non-volatile or volatile memory.

6.cache line

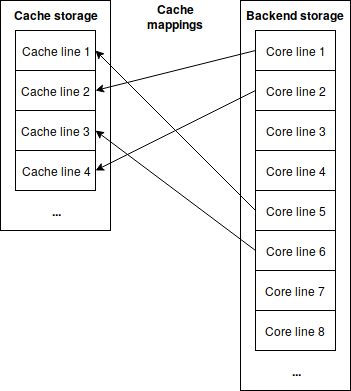

A cache line is the smallest portion of data that can be mapped into a cache. Every mapped cache line is associated with a core line, which is a corresponding region on a backend storage. Both the cache storage and the backend storage are split into blocks of the size of a cache line, and all the cache mappings are aligned to these blocks. The relationship between a cache line and a core line is illustrated in the picture above.

cache line metadata

OCF maintains a small portion of metadata for each cache line, which contains the following information:

- core id,

- core line number,

- valid and dirty bits for every sector.

The core id identifies the core on which the core line corresponding to a given cache line is located, whereas the core line number determines the precise location of the core line on the backend storage. Valid and dirty bits are explained below.
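The per-line metadata listed above can be pictured as a small record. This is only a sketch: `CacheLineMeta` and `backend_offset` are made-up names, the per-sector bits are packed into plain integers, and the offset calculation assumes core lines are numbered from 0 in units of the cache line size.

```python
from dataclasses import dataclass


@dataclass
class CacheLineMeta:
    core_id: int      # which core the cached data belongs to
    core_line: int    # core line number on that core's backend storage
    valid: int = 0    # one bit per sector
    dirty: int = 0    # one bit per sector


def backend_offset(meta, line_size):
    """Byte offset of the mapped core line on its backend storage."""
    return meta.core_line * line_size


meta = CacheLineMeta(core_id=1, core_line=3)
offset = backend_offset(meta, line_size=4096)
```

With 4 KiB cache lines, core line 3 starts at byte 12288 of the backend storage of core 1.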

Valid and dirty bits

The valid bit says whether the cache line is mapped; the dirty bit says whether the data in the cache differs from the data on the backend storage.

Valid and dirty bits define the current cache line state. When a cache line is valid, it is mapped to the core line determined by its core id and core line number. Otherwise all the other cache line metadata is invalid and should be ignored. When a cache line is in the invalid state, it may be used to map a core line accessed by an I/O request, and then it becomes valid. There are a few situations in which a cache line can return to the invalid state:

- When the cache line is being evicted.

- When the core pointed to by the core id is being removed.

- When the core pointed to by the core id is being purged.

- When the entire cache is being purged.

- During a discard operation performed on the corresponding core line.

- During an I/O request, when a cache mode that may perform invalidation is selected. Currently these cache modes are Write-Invalidate and Pass-Through.

The dirty bit determines whether the cache line data stored in the cache is in sync with the corresponding data on the backend storage. If a cache line is dirty, then only the data on the cache storage is up to date, and it will need to be flushed at some point in the future (after that it will be marked as clean by zeroing the dirty bit). A cache line can become dirty only during a write operation in Write-Back mode (or in Write-Only mode, which handles writes the same way).

Valid and dirty bits are maintained per sector, which means that not every sector in a valid cache line has to be valid, and not every sector in a dirty cache line has to be dirty. An entire cache line is considered valid if at least one of its sectors is valid, and similarly it is considered dirty if at least one of its sectors is dirty.
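The rule for deriving the line-level state from per-sector bits, together with sector invalidation, can be sketched as below. The helper names are hypothetical, and the bits are again packed into integers with one bit per sector.

```python
def line_is_valid(valid_bits):
    """A cache line is valid if at least one sector is valid."""
    return valid_bits != 0


def line_is_dirty(dirty_bits):
    """A cache line is dirty if at least one sector is dirty."""
    return dirty_bits != 0


def invalidate(valid_bits, dirty_bits, first, count):
    """Clear valid and dirty bits for sectors first .. first + count - 1."""
    mask = ((1 << count) - 1) << first
    return valid_bits & ~mask, dirty_bits & ~mask


valid, dirty = 0b00000110, 0b00000100      # sectors 1..2 valid, sector 2 dirty
before = (line_is_valid(valid), line_is_dirty(dirty))
valid, dirty = invalidate(valid, dirty, first=1, count=2)
after = (line_is_valid(valid), line_is_dirty(dirty))
```

Once the last valid sector is invalidated, the whole cache line is back in the invalid state and can be reused for another mapping.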