1 Install the system

Virtual box 4.3

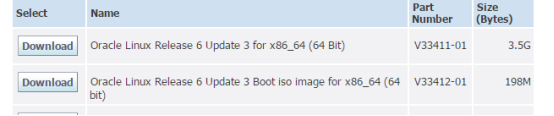

Oracle linux 6.3

Oracle 11g r2

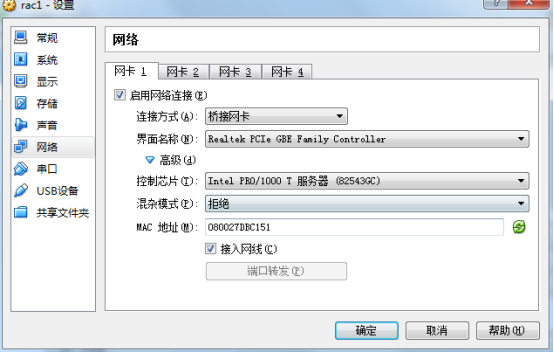

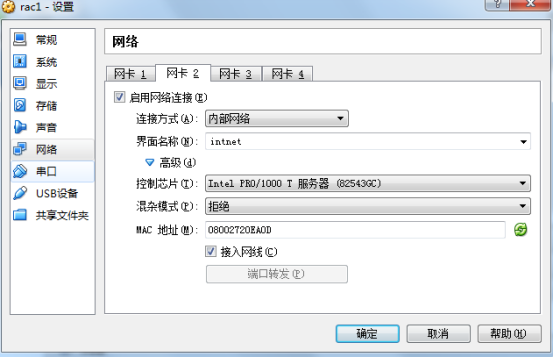

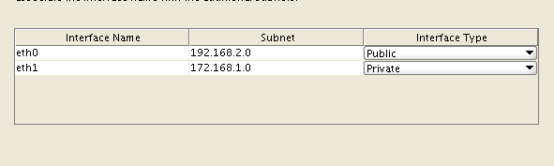

Make sure "Adapter 1" is enabled, set to "Bridged Adapter", then click on the "Adapter 2" tab.

Make sure "Adapter 2" is enabled, set to "Bridged Adapter" or "Internal Network", then click on the "System" section.

Move "Hard Disk" to the top of the boot order and uncheck the "Floppy" option, then click the "OK" button.


The virtual machine is now configured so we can start the guest operating system installation.

2 Install Oracle Linux 6.3

skip

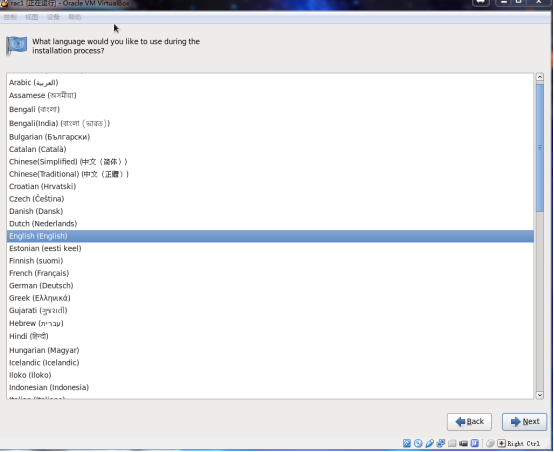

选择语言

选择存储设备

Basic storage devices

Yes

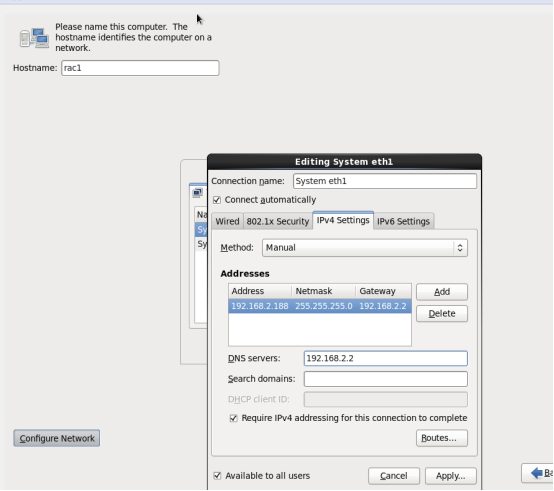

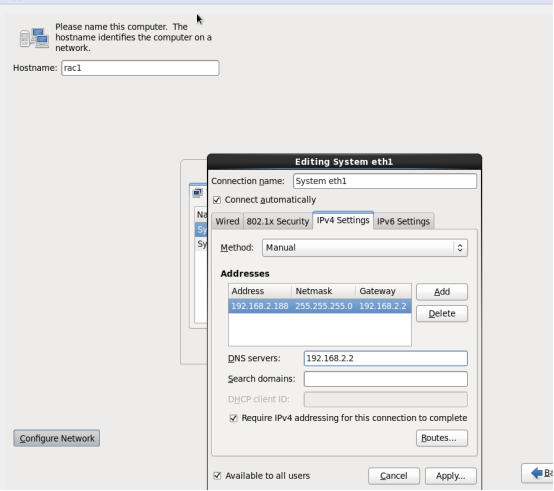

Hostname----rac1

Configure network

Edit--connect automatically--eth0,eth1

0

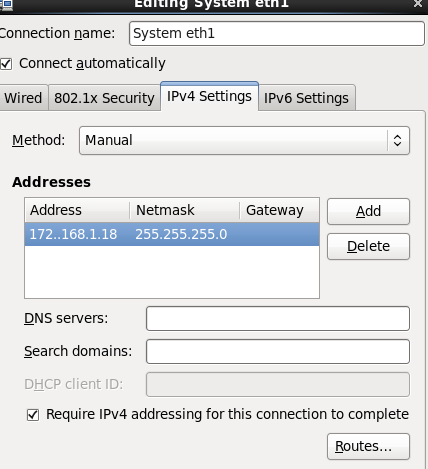

Ipv4

192.168.2.188 255.255.255.0 192.168.2.2

1

172.168.1.18 vmac1-priv ----r1 eth1

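Select the time zone.

Root password: xxxx

Choose "Replace Existing Linux System(s)".

Click "Write changes to disk".

Installation type: Desktop.

Repository: Oracle Linux Server.

Select "Customize later".

Install the packages.

Reboot.

Click "Forward".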

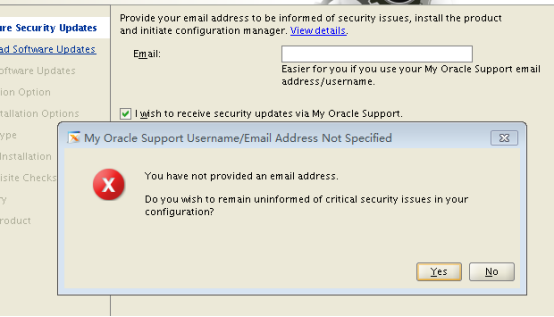

Choose "No" (do not register), then "No thanks".

Create a user: username, full name, password.

Do not synchronize date and time over the network.

Finish

3 rac1 system settings

3.1 Disable firewall

[root@rac1 ~]# service iptables stop

iptables: Flushing firewall rules: [ OK ]

iptables: Setting chains to policy ACCEPT: filter [ OK ]

iptables: Unloading modules: [ OK ]

[root@rac1 ~]# chkconfig iptables off

[root@rac1 ~]# chkconfig iptables --list

iptables 0:off 1:off 2:off 3:off 4:off 5:off 6:off

3.2 Disable SELinux

[root@rac1 ~]# vi /etc/selinux/config

SELINUX=disabled

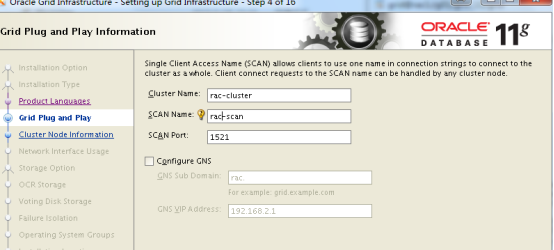
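The same edit can be scripted. This is a minimal sketch that assumes GNU sed (`sed -i`). The function name `disable_selinux_config` is hypothetical. The config file is only read at boot, so `setenforce 0` is used to switch the running system to permissive mode until the next reboot.

```shell
#!/bin/sh
# Hypothetical helper: force SELINUX=disabled in an SELinux config file.
# Defaults to /etc/selinux/config; pass another path to try it on a copy.
disable_selinux_config() {
    conf="${1:-/etc/selinux/config}"
    # Rewrite an existing SELINUX= line; SELINUXTYPE= does not match.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
    # Append the setting if the file had no SELINUX= line at all.
    grep -q '^SELINUX=' "$conf" || echo 'SELINUX=disabled' >> "$conf"
}

# Usage as root on each node:
#   disable_selinux_config
#   setenforce 0    # permissive now; "disabled" applies after reboot
```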

3.3 /etc/hosts

Edit /etc/hosts and add the IP configuration (the same entries on both nodes):

[root@rac1 ~]# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.2.188 rac1

192.168.2.189 rac2

#Private IP

172.168.1.18 rac1-priv

172.168.1.19 rac2-priv

#Virtual IP

192.168.2.137 rac1-vip

192.168.2.138 rac2-vip

#Scan IP

192.168.2.139 rac-scan
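The following is a sketch for adding these entries non-interactively on each node, using the addresses listed above. `add_rac_hosts` is a hypothetical helper. It appends only the names that are not already present, so it is safe to re-run.

Oracle recommends resolving the SCAN name through DNS. A single /etc/hosts entry, as used here, is a common lab shortcut, but the prerequisite checks may warn about it.

```shell
#!/bin/sh
# Hypothetical helper: append the RAC host entries to a hosts file
# (default /etc/hosts), skipping any host name that is already listed.
add_rac_hosts() {
    hosts="${1:-/etc/hosts}"
    while read -r ip name; do
        # awk exits 0 only if the name appears as a whole field after the IP.
        if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                               END { exit !found }' "$hosts"; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts"
        fi
    done <<'EOF'
192.168.2.188 rac1
192.168.2.189 rac2
172.168.1.18 rac1-priv
172.168.1.19 rac2-priv
192.168.2.137 rac1-vip
192.168.2.138 rac2-vip
192.168.2.139 rac-scan
EOF
}
```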

Test with: ping rac1, then ssh rac1

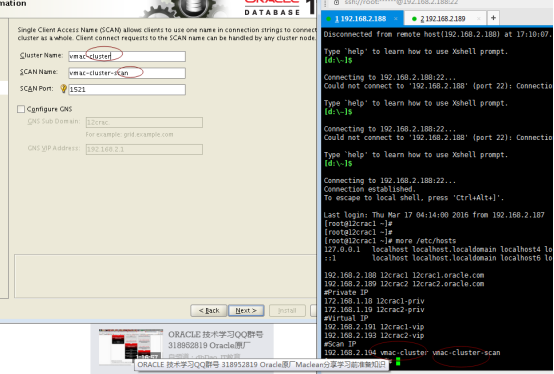

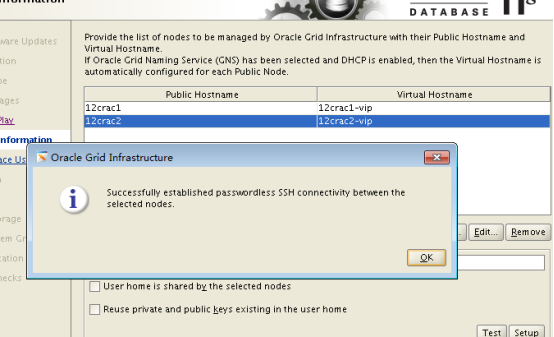

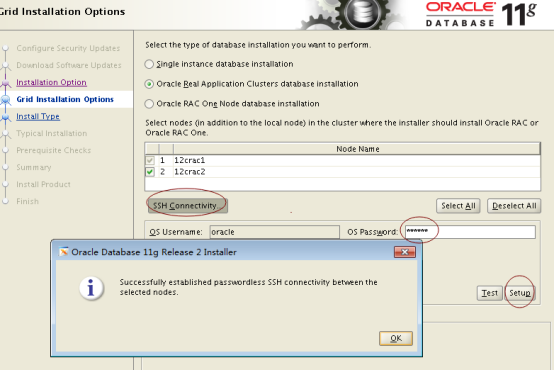

Variant with the 12crac* host names that appear in some of the later transcripts:

192.168.2.188 12crac1

192.168.2.189 12crac2

#Private IP

172.168.1.18 12crac1-priv

172.168.1.19 12crac2-priv

#Virtual IP

192.168.2.137 12crac1-vip

192.168.2.138 12crac2-vip

#Scan IP

192.168.2.139 12crac-scan

[root@12crac1 ~]# hostname

12crac1

3.4 Create users and groups

First remove leftover users and groups from a previous attempt. groupdel refuses to remove a group that is still a user's primary group, so the users must be deleted before oinstall can be removed:

[root@rac1 ~]# groupdel asmadmin

[root@rac1 ~]# groupdel asmdba

[root@rac1 ~]# groupdel asmoper

[root@rac1 ~]# groupdel oinstall

groupdel: cannot remove the primary group of user 'oracle'

[root@rac1 ~]# groupdel dba

[root@rac1 ~]# groupdel oinstall

groupdel: cannot remove the primary group of user 'oracle'

[root@rac1 ~]# userdel oracle

[root@rac1 ~]# userdel grid

[root@rac1 ~]# groupdel oinstall

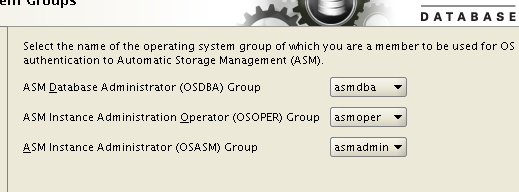

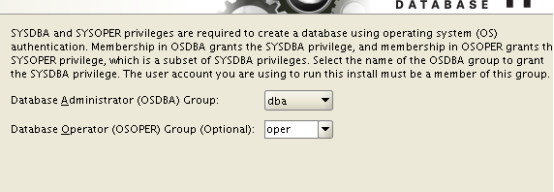

groupadd -g 5000 asmadmin

groupadd -g 5001 asmdba

groupadd -g 5002 asmoper

groupadd -g 6000 oinstall

groupadd -g 6001 dba

groupadd -g 6002 oper

useradd -g oinstall -G asmadmin,asmdba,asmoper grid

useradd -g oinstall -G dba,asmdba oracle

[root@rac1 ~]# userdel grid

userdel: user grid is currently logged in

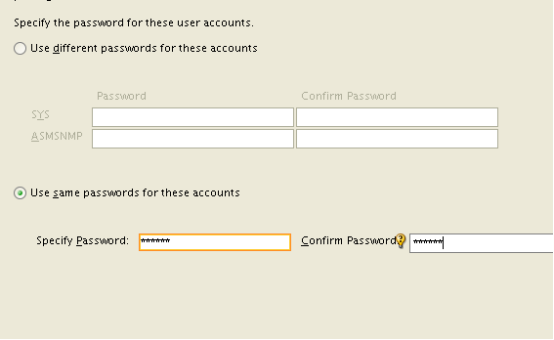

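Set the passwords (here the password is "oracle" for oracle and "grid" for grid):

passwd oracle

passwd grid

If useradd warns that the home directory already exists (left over from the deleted user), remove the old home directory and mail spool, then re-create the user:

useradd: warning: the home directory already exists.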

[root@rac1 home]# userdel oracle

[root@rac1 home]# ll

total 12

drwx------. 4 54322 54321 4096 Jan 27 13:59 grid

drwx------. 31 hongquanrac1 hongquanrac1 4096 Jan 28 03:06 hongquanrac1

drwx------. 4 54321 54321 4096 Jan 27 13:42 oracle

[root@rac1 home]# rm -rf oracle/

[root@rac1 home]# useradd -g oinstall -G dba,asmdba oracle

Creating mailbox file: File exists

[root@rac1 home]# cd /var/spool/mail/

[root@rac1 mail]# ll

total 0

-rw-rw----. 1 54322 mail 0 Jan 27 13:59 grid

-rw-rw----. 1 hongquanrac1 mail 0 Jan 26 22:23 hongquanrac1

-rw-rw----. 1 54321 mail 0 Jan 27 13:42 oracle

-rw-rw----. 1 rpc mail 0 Jan 26 21:59 rpc

[root@rac1 mail]# rm oracle

rm: remove regular empty file `oracle'? y

[root@rac1 mail]# useradd -g oinstall -G dba,asmdba oracle

useradd: user 'oracle' already exists

[root@rac1 mail]# userdel oracle

[root@rac1 mail]# useradd -g oinstall -G dba,asmdba oracle

useradd: warning: the home directory already exists.

Not copying any file from skel directory into it.

[root@rac1 mail]# id oracle

uid=502(oracle) gid=6000(oinstall) groups=6000(oinstall),5001(asmdba),6001(dba)

[root@rac1 mail]# su - oracle

[oracle@rac1 ~]$
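The delete-and-recreate sequence above is needed because plain groupadd/useradd are not idempotent. The following is a sketch of guarded versions that reuse the same GIDs and group memberships. `run`, `ensure_group`, `ensure_user` and `setup_rac_accounts` are hypothetical helpers. With `DRY_RUN=1` the helpers only print the commands they would run.

The users and groups must have the same IDs on every node. Here rac2 is a clone of rac1, so they match.

```shell
#!/bin/sh
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Create a group with a fixed GID only if it does not exist yet.
ensure_group() {
    getent group "$1" >/dev/null || run groupadd -g "$2" "$1"
}

# Create a user only if it does not exist yet.
# $1 = user, $2 = primary group, $3 = comma-separated secondary groups
ensure_user() {
    getent passwd "$1" >/dev/null || run useradd -g "$2" -G "$3" "$1"
}

# The same accounts as in this section.
setup_rac_accounts() {
    ensure_group asmadmin 5000
    ensure_group asmdba   5001
    ensure_group asmoper  5002
    ensure_group oinstall 6000
    ensure_group dba      6001
    ensure_group oper     6002
    ensure_user grid   oinstall asmadmin,asmdba,asmoper
    ensure_user oracle oinstall dba,asmdba
}

# Usage as root: DRY_RUN=1 setup_rac_accounts   (review), then setup_rac_accounts
```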

--------------

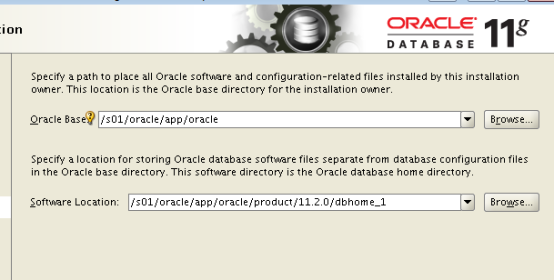

mkdir /s01

mkdir /g01

chown oracle:oinstall /s01

chown grid:oinstall /g01

[root@rac1 ~]# mkdir /s01

[root@rac1 ~]# mkdir /g01

[root@rac1 ~]# chown oracle:oinstall /s01

[root@rac1 ~]# chown grid:oinstall /g01

Install the required packages, using the OL6.3 DVD as a local yum repository:

[root@vmac6 ~]# cd /etc/yum.repos.d

[root@vmac6 yum.repos.d]# mv public-yum-ol6.repo public-yum-ol6.repo.bak

[root@vmac6 yum.repos.d]# touch public-yum-ol6.repo

[root@vmac6 yum.repos.d]# vi public-yum-ol6.repo

[oel6]

name = Enterprise Linux 6.3 DVD

baseurl=file:///media/"OL6.3 x86_64 Disc 1 20120626"/Server

gpgcheck=0

enabled=1

Here the DVD is mounted at /media/OL6.3 x86_64 Disc 1 20120626.
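The same repository setup can be scripted. This is a sketch that assumes the DVD is attached as /dev/sr0. It also assumes the DVD is mounted at a path without spaces (/media/cdrom here, an assumption), which avoids quoting problems in `baseurl`. `write_dvd_repo` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: write a yum repo file that points at a mounted DVD.
# $1 = repo file to write, $2 = DVD mount point (path without spaces).
write_dvd_repo() {
    cat > "$1" <<EOF
[oel6]
name = Enterprise Linux 6.3 DVD
baseurl=file://$2/Server
gpgcheck=0
enabled=1
EOF
}

# Usage as root:
#   mkdir -p /media/cdrom
#   mount -t iso9660 -o ro /dev/sr0 /media/cdrom
#   mv /etc/yum.repos.d/public-yum-ol6.repo /etc/yum.repos.d/public-yum-ol6.repo.bak
#   write_dvd_repo /etc/yum.repos.d/oel6-dvd.repo /media/cdrom
#   yum clean all && yum repolist
```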

[root@vmac1 ~]# yum install oracle-rdbms-server-11gR2-preinstall-1.0-6.el6

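For 12c R1 the corresponding package is oracle-rdbms-server-12cR1-preinstall.

The Oracle documentation states that oracle-rdbms-server-12cR1-preinstall can be used on Oracle Linux 6. In practice it is only supported from Oracle Linux 6 Update 6 (6.6) onward.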

3.5 /etc/security/limits.conf

[root@rac1 ~]# vi /etc/security/limits.conf

# oracle-rdbms-server-11gR2-preinstall setting for nofile soft limit is 1024

oracle soft nofile 1024

# oracle-rdbms-server-11gR2-preinstall setting for nofile hard limit is 65536

oracle hard nofile 65536

# oracle-rdbms-server-11gR2-preinstall setting for nproc soft limit is 2047

oracle soft nproc 2047

# grid-rdbms-server-11gR2-preinstall setting for nofile soft limit is 1024

grid soft nofile 1024

# grid-rdbms-server-11gR2-preinstall setting for nofile hard limit is 65536

grid hard nofile 65536

# grid-rdbms-server-11gR2-preinstall setting for nproc soft limit is 2047

grid soft nproc 2047

# grid-rdbms-server-11gR2-preinstall setting for nproc hard limit is 16384

grid hard nproc 16384

# grid-rdbms-server-11gR2-preinstall setting for stack soft limit is 10240KB

grid soft stack 10240

# grid-rdbms-server-11gR2-preinstall setting for stack hard limit is 32768KB

grid hard stack 32768
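The entries follow one pattern per user. The following is a sketch that prints the full set of lines for a given user, using the 11gR2 preinstall values quoted above (nofile 1024/65536, nproc 2047/16384, stack 10240/32768 KB). `limits_for` is a hypothetical helper.

The oracle lines not listed above (nproc hard, stack) are presumably already written by the preinstall package, so check the file before appending. Oracle's install guide also requires `session required pam_limits.so` in /etc/pam.d/login so that these limits apply to login sessions.

```shell
#!/bin/sh
# Hypothetical helper: print limits.conf lines for one user using the
# oracle-rdbms-server-11gR2-preinstall values quoted above.
limits_for() {
    user="$1"
    # Each heredoc line is: item soft-value hard-value
    while read -r item soft hard; do
        printf '%s soft %s %s\n' "$user" "$item" "$soft"
        printf '%s hard %s %s\n' "$user" "$item" "$hard"
    done <<'EOF'
nofile 1024 65536
nproc 2047 16384
stack 10240 32768
EOF
}

# Usage as root (review the output before appending):
#   limits_for grid >> /etc/security/limits.conf
#   grep -q pam_limits.so /etc/pam.d/login ||
#       echo 'session required pam_limits.so' >> /etc/pam.d/login
```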

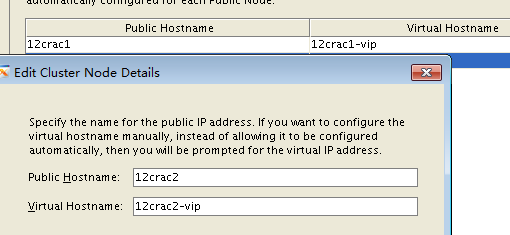

4 克隆并配置虚拟主机

默认配置到安装的目录,6.3的网络配置不一样

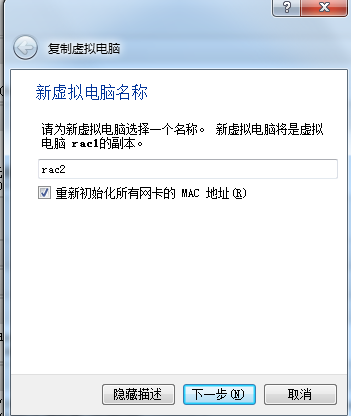

复制主机,当前状态,重新初始化mac地址------完全复制rac2

Init 6 重启,init 0 关机

修改vmac2的IP

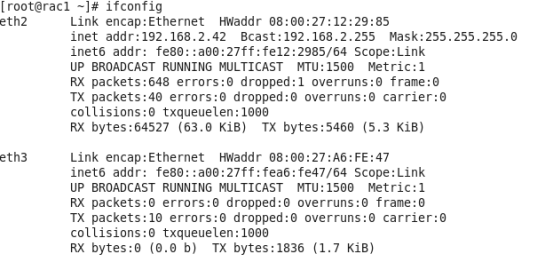

cd /etc/udev/rules.d/

vi 70-persistent-net.rules

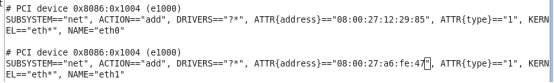

[root@rac1 ~]# vim /etc/udev/rules.d/70-persistent-net.rules

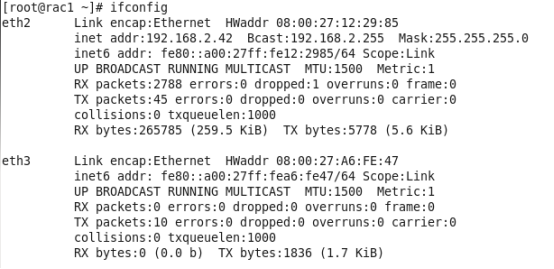

用eth2,eth3的mac地址分别替换eth0,eth1的mac地址,并删除2,3

使用ifconfig

start_udev

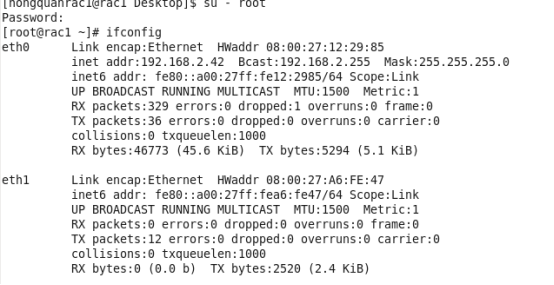

Reboot/init 6重启过后

重启过后可以连接,不能连接请检查配置eth0,eth1的顺序

[root@rac1 ~]# more /etc/udev/rules.d/70-persistent-net.rules

# This file was automatically generated by the /lib/udev/write_net_rules

# program, run by the persistent-net-generator.rules rules file.

#

# You can modify it, as long as you keep each rule on a single

# line, and change only the value of the NAME= key.

# PCI device 0x8086:0x1004 (e1000)

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="08:00:27:12:29:85", ATTR{type}=="1", KERNEL=="eth*", NAME="eth0"

# PCI device 0x8086:0x1004 (e1000)

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="08:00:27:a6:fe:47", ATTR{type}=="1", KERNEL=="eth*", NAME="eth1"

[root@rac1 ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 08:00:27:12:29:85

inet addr:192.168.2.189 Bcast:192.168.2.255 Mask:255.255.255.0

inet6 addr: fe80::a00:27ff:fe12:2985/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:478 errors:0 dropped:2 overruns:0 frame:0

TX packets:83 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:53165 (51.9 KiB) TX bytes:10537 (10.2 KiB)

eth1 Link encap:Ethernet HWaddr 08:00:27:A6:FE:47

inet addr:192.168.2.134 Bcast:192.168.2.255 Mask:255.255.255.0

inet6 addr: fe80::a00:27ff:fea6:fe47/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:34 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 b) TX bytes:4565 (4.4 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:480 (480.0 b) TX bytes:480 (480.0 b)
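The rules file can be checked against the actual NICs. The following is a sketch that lists each NAME with its MAC from the rules file, and each interface's MAC as the kernel sees it under /sys/class/net. `rules_macs` and `live_macs` are hypothetical helpers. The rules path is the one used above.

```shell
#!/bin/sh
# Hypothetical helper: print "NAME MAC" pairs from a 70-persistent-net.rules file.
rules_macs() {
    sed -n 's/.*ATTR{address}=="\([^"]*\)".*NAME="\([^"]*\)".*/\2 \1/p' \
        "${1:-/etc/udev/rules.d/70-persistent-net.rules}"
}

# Print "NAME MAC" for each eth* interface as the kernel currently sees it.
live_macs() {
    for dev in /sys/class/net/eth*; do
        [ -e "$dev/address" ] && echo "${dev##*/} $(cat "$dev/address")"
    done
}

# Usage (bash, for process substitution): no output means the lists match.
# A difference means the rules still carry the MAC addresses of the
# machine the clone was made from.
#   diff <(rules_macs | sort) <(live_macs | sort)
```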

[root@rac1 network-scripts]# pwd

/etc/sysconfig/network-scripts

[root@rac1 network-scripts]# more ifcfg-Auto_eth0

HWADDR=08:00:27:12:29:85

TYPE=Ethernet

BOOTPROTO=none

IPADDR=192.168.2.189

PREFIX=24

GATEWAY=192.168.2.2

DNS1=192.168.2.2

DEFROUTE=yes

IPV4_FAILURE_FATAL=yes

IPV6INIT=no

NAME="Auto eth0"

UUID=6819daf9-606b-428f-a8fe-7a447825579c

ONBOOT=yes

LAST_CONNECT=1422351622

[root@rac1 network-scripts]# more ifcfg-Auto_eth1

HWADDR=08:00:27:A6:FE:47

TYPE=Ethernet

BOOTPROTO=none

IPADDR=192.168.2.134

PREFIX=24

GATEWAY=192.168.2.2

DNS1=192.168.2.2

DEFROUTE=yes

IPV4_FAILURE_FATAL=yes

IPV6INIT=no

NAME="Auto eth1"

UUID=e1c6038b-e6d7-4f3e-8ec7-c482191a5b00

ONBOOT=yes

LAST_CONNECT=1422351621

Change the host name:

[root@rac1 network-scripts]# vi /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=rac2

GATEWAY=192.168.2.2
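On OL6, the HOSTNAME in /etc/sysconfig/network is read at boot, while `hostname rac2` changes the running system immediately. The following is a sketch with a hypothetical `set_hostname_file` helper that edits the file in place.

```shell
#!/bin/sh
# Hypothetical helper: set HOSTNAME= in an OL6-style network file
# (default /etc/sysconfig/network), adding the line if it is missing.
set_hostname_file() {
    name="$1"
    conf="${2:-/etc/sysconfig/network}"
    if grep -q '^HOSTNAME=' "$conf"; then
        sed -i "s/^HOSTNAME=.*/HOSTNAME=$name/" "$conf"
    else
        echo "HOSTNAME=$name" >> "$conf"
    fi
}

# Usage as root on the clone:
#   set_hostname_file rac2
#   hostname rac2    # applies now; the file covers later reboots
```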

[root@rac2 ~]# ping rac2

PING rac2 (192.168.2.189) 56(84) bytes of data.

64 bytes from rac2 (192.168.2.189): icmp_seq=1 ttl=64 time=0.045 ms

64 bytes from rac2 (192.168.2.189): icmp_seq=2 ttl=64 time=0.134 ms

64 bytes from rac2 (192.168.2.189): icmp_seq=3 ttl=64 time=0.045 ms

^C

--- rac2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2664ms

rtt min/avg/max/mdev = 0.045/0.074/0.134/0.043 ms

[root@rac2 ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of these two values:

# targeted - Targeted processes are protected,

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

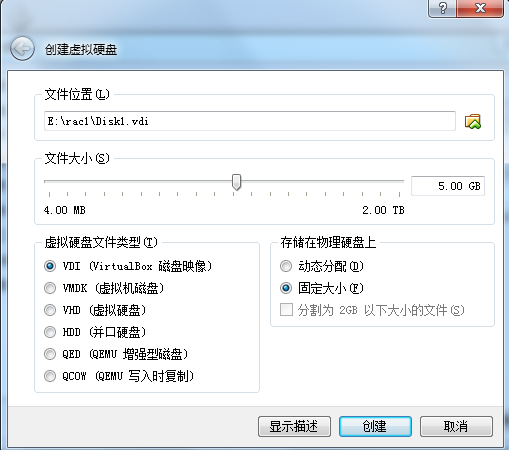

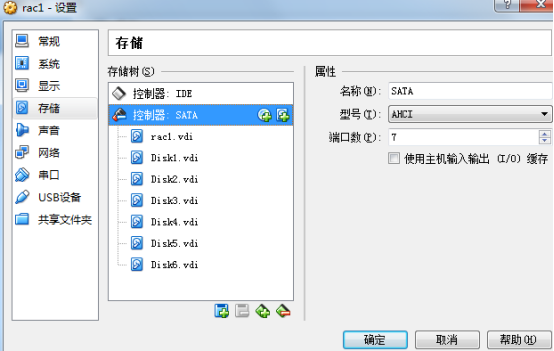

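5 VirtualBox shared storage bound with udev

ASMLib != ASM: ASMLib is an optional support library for ASM disks, and this setup uses udev rules instead.

5.1 Binding with udev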

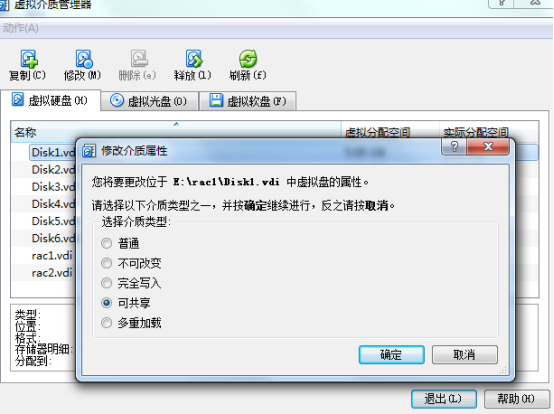

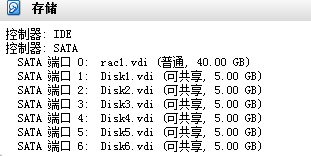

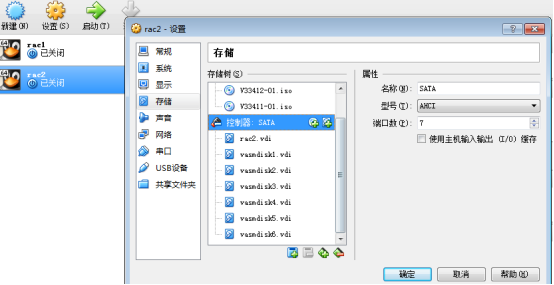

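On rac1, create six fixed-size 5 GB virtual disks in VDI format (Storage > SATA):

E:\rac1\Disk1.vdi

After configuring rac1, configure rac2 and attach the shared disks already created.

In the Virtual Media Manager, change the created disks to Shareable, then restart VirtualBox.

On rac2, add disks using the six existing shared disks, and confirm that they are marked shareable.

Start rac1 and rac2 and set up the binding on each node.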
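The VirtualBox steps can also be done with VBoxManage. The following sketch rests on these assumptions:

- VirtualBox 4.3's `createhd`, `modifyhd` and `storageattach` subcommands.
- VMs named rac1 and rac2.
- A storage controller named "SATA" with ports 1 to 6 free. If needed, raise the port count with `VBoxManage storagectl ... --portcount`.
- Disk files under a hypothetical ./asmdisks directory on the host.

Run it with both VMs powered off. With `DRY_RUN=1` it only prints the commands.

```shell
#!/bin/sh
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Create six fixed-size 5 GB (5120 MB) VDI disks, mark them shareable and
# attach each one to both VMs on the same SATA port.
create_shared_disks() {
    dir="${1:-./asmdisks}"   # hypothetical location on the host
    run mkdir -p "$dir"
    for n in 1 2 3 4 5 6; do
        disk="$dir/Disk$n.vdi"
        run VBoxManage createhd --filename "$disk" --size 5120 --format VDI --variant Fixed
        run VBoxManage modifyhd "$disk" --type shareable
        for vm in rac1 rac2; do
            run VBoxManage storageattach "$vm" --storagectl SATA \
                --port "$n" --device 0 --type hdd --medium "$disk" --mtype shareable
        done
    done
}

# Usage: DRY_RUN=1 create_shared_disks   (review), then create_shared_disks
```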

[root@12crac1 ~]# cd /dev/

[root@12crac1 dev]# ls -l sd*

brw-rw---- 1 root disk 8, 0 Mar 17 04:12 sda

brw-rw---- 1 root disk 8, 1 Mar 17 04:12 sda1

brw-rw---- 1 root disk 8, 2 Mar 17 04:12 sda2

brw-rw---- 1 root disk 8, 16 Mar 17 04:12 sdb

brw-rw---- 1 root disk 8, 32 Mar 17 04:12 sdc

brw-rw---- 1 root disk 8, 48 Mar 17 04:12 sdd

brw-rw---- 1 root disk 8, 64 Mar 17 04:12 sde

brw-rw---- 1 root disk 8, 80 Mar 17 04:12 sdf

brw-rw---- 1 root disk 8, 96 Mar 17 04:12 sdg

[root@12crac2 ~]# cd /dev/

[root@12crac2 dev]# ls -l sd*

brw-rw---- 1 root disk 8, 0 Mar 17 04:12 sda

brw-rw---- 1 root disk 8, 1 Mar 17 04:12 sda1

brw-rw---- 1 root disk 8, 2 Mar 17 04:12 sda2

brw-rw---- 1 root disk 8, 16 Mar 17 04:12 sdb

brw-rw---- 1 root disk 8, 32 Mar 17 04:12 sdc

brw-rw---- 1 root disk 8, 48 Mar 17 04:12 sdd

brw-rw---- 1 root disk 8, 64 Mar 17 04:12 sde

brw-rw---- 1 root disk 8, 80 Mar 17 04:12 sdf

brw-rw---- 1 root disk 8, 96 Mar 17 04:12 sdg

Run on both nodes:

cd /dev

ls -l /dev

Use udev on Linux 6 to give the RAC ASM disks stable device names:

for i in b c d e f g ;

do

echo "KERNEL==\"sd*\", BUS==\"scsi\", PROGRAM==\"/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/\$name\", RESULT==\"`/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i`\", NAME=\"asm-disk$i\", OWNER=\"grid\", GROUP=\"asmadmin\", MODE=\"0660\"" >> /etc/udev/rules.d/99-oracle-asmdevices.rules

done

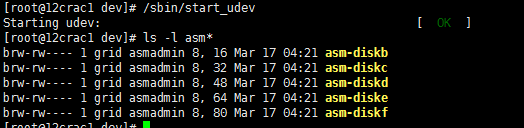

/sbin/start_udev
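The loop above appends with `>>`, so running it twice duplicates every rule. The following sketch builds each line with printf, which avoids the nested escaping of the echo version, and rewrites the file with `>`. `asm_rule` is a hypothetical helper that produces the same rule format as above. Because the disks are shared, scsi_id returns the same ID for each disk on both nodes.

```shell
#!/bin/sh
# Hypothetical helper: print one udev rule (same format as the loop above)
# that names a disk by its SCSI ID. $name stays literal for udev to expand.
# $1 = scsi_id result, $2 = device name to create under /dev
asm_rule() {
    printf 'KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="%s", NAME="%s", OWNER="grid", GROUP="asmadmin", MODE="0660"\n' "$1" "$2"
}

# Usage as root on each node (disk letters as in the loop above):
#   for i in b c d e f g; do
#       id=$(/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i)
#       asm_rule "$id" "asm-disk$i"
#   done > /etc/udev/rules.d/99-oracle-asmdevices.rules
#   /sbin/start_udev
```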

Run on both nodes.

Node 1:

[root@rac1 ~]# cd /dev/

[root@rac1 dev]# ls -l sd*

brw-rw---- 1 root disk 8, 0 Jan 28 02:17 sda

brw-rw---- 1 root disk 8, 1 Jan 28 02:17 sda1

brw-rw---- 1 root disk 8, 2 Jan 28 02:17 sda2

brw-rw---- 1 root disk 8, 16 Jan 28 02:17 sdb

brw-rw---- 1 root disk 8, 32 Jan 28 02:17 sdc

brw-rw---- 1 root disk 8, 48 Jan 28 02:17 sdd

brw-rw---- 1 root disk 8, 64 Jan 28 02:17 sde

brw-rw---- 1 root disk 8, 80 Jan 28 02:17 sdf

brw-rw---- 1 root disk 8, 96 Jan 28 02:17 sdg

[root@rac1 dev]# cat /etc/udev/rules.d/99-oracle-asmdevices.rules

[root@rac1 dev]# echo "options=--whitelisted --replace-whitespace" >> /etc/scsi_id.config

[root@rac1 dev]# for i in b c d e f ;

> do

> echo "KERNEL==\"sd*\", BUS==\"scsi\", PROGRAM==\"/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/\$name\", RESULT==\"`/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i`\", NAME=\"asm-disk$i\", OWNER=\"grid\", GROUP=\"asmadmin\", MODE=\"0660\"" >> /etc/udev/rules.d/99-oracle-asmdevices.rules

> done

[root@rac1 dev]# cat /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VBc4a46c76-e22aed86", NAME="asm-diskb", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VBd0dbffe4-cb516af9", NAME="asm-diskc", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VBe1ddb493-474a8e0e", NAME="asm-diskd", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VBa6b6a83b-f9d00a2a", NAME="asm-diske", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VB1b8dba16-09341986", NAME="asm-diskf", OWNER="grid", GROUP="asmadmin", MODE="0660"

[root@rac1 ~]# /sbin/start_udev

Starting udev: [ OK ]

[root@rac1 ~]# cd /dev/

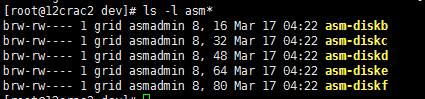

[root@rac1 dev]# ls -l asm*

brw-rw---- 1 grid asmadmin 8, 16 Jan 28 02:37 asm-diskb

brw-rw---- 1 grid asmadmin 8, 32 Jan 28 02:38 asm-diskc

brw-rw---- 1 grid asmadmin 8, 48 Jan 28 02:38 asm-diskd

brw-rw---- 1 grid asmadmin 8, 64 Jan 28 02:38 asm-diske

brw-rw---- 1 grid asmadmin 8, 80 Jan 28 02:38 asm-diskf

Repeat the same steps on node 2.

5.2 Configure SSH user equivalence manually

oracle user

[root@rac1 grid_11]# /bin/ln -s /usr/bin/ssh /usr/local/bin/ssh

[root@rac1 grid_11]# /bin/ln -s /usr/bin/scp /usr/local/bin/scp

[root@rac1 grid_11]#

[oracle@rac1 ~]$ mkdir ~/.ssh

[oracle@rac1 ~]$ cd .ssh

[oracle@rac1 .ssh]$ ssh-keygen -t rsa

[oracle@rac1 .ssh]$ ssh-keygen -t dsa

[oracle@rac1 .ssh]$ touch authorized_keys

[oracle@rac1 .ssh]$ ssh rac1 cat /home/oracle/.ssh/id_rsa.pub >> authorized_keys

[oracle@rac1 .ssh]$ ssh rac1 cat /home/oracle/.ssh/id_dsa.pub >> authorized_keys

[oracle@rac1 .ssh]$ ssh rac2 cat /home/oracle/.ssh/id_dsa.pub >> authorized_keys

oracle@rac2's password:

cat: /home/oracle/.ssh/id_dsa.pub: No such file or directory

Run the same key-generation steps on node 2 first, then retry:

[oracle@rac1 .ssh]$ ssh rac2 cat /home/oracle/.ssh/id_dsa.pub >> authorized_keys

oracle@rac2's password: (succeeds)

[oracle@rac1 .ssh]$ scp authorized_keys rac2:/home/oracle/.ssh/

authorized_keys

[oracle@rac1 .ssh]$ ssh rac1 date

Wed Jan 28 04:40:34 EST 2015

[oracle@rac1 .ssh]$ ssh rac2 date

Wed Jan 28 04:40:46 EST 2015

SSH equivalence for the grid user

[grid@rac1 ~]$ cd .ssh/

[grid@rac1 .ssh]$ ssh-keygen -t rsa

[grid@rac1 .ssh]$ ssh-keygen -t dsa

[grid@rac1 .ssh]$ touch authorized_keys

[grid@rac1 .ssh]$ ssh rac1 cat /home/grid/.ssh/id_rsa.pub >> authorized_keys

[grid@rac1 .ssh]$ ssh rac2 cat /home/grid/.ssh/id_rsa.pub >> authorized_keys

cat: /home/grid/.ssh/id_rsa.pub: No such file or directory

Generate the grid keys on rac2 first, then retry:

[grid@rac1 .ssh]$ ssh rac2 cat /home/grid/.ssh/id_rsa.pub >> authorized_keys

grid@rac2's password:

[grid@rac1 .ssh]$ ssh rac1 cat /home/grid/.ssh/id_dsa.pub >> authorized_keys

[grid@rac1 .ssh]$ ssh rac2 cat /home/grid/.ssh/id_dsa.pub >> authorized_keys

[grid@rac1 .ssh]$ scp authorized_keys rac2:/home/grid/.ssh/

authorized_keys

Run on both nodes:

[grid@rac1 .ssh]$ ssh rac1 date

Wed Jan 28 04:50:39 EST 2015

[grid@rac1 .ssh]$ ssh rac2 date

Wed Jan 28 04:50:45 EST 2015

Run on both nodes:

[grid@rac1 .ssh]$ ssh-agent $SHELL

[grid@rac1 .ssh]$ ssh-add

Identity added: /home/grid/.ssh/id_rsa (/home/grid/.ssh/id_rsa)

Identity added: /home/grid/.ssh/id_dsa (/home/grid/.ssh/id_dsa)

[grid@rac1 .ssh]$
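The manual steps above come down to three things:

1. Collect every node's public keys into one authorized_keys file.
2. Copy that file to all nodes.
3. Keep ~/.ssh at mode 700 and authorized_keys at mode 600. With StrictModes enabled, sshd ignores keys in group- or world-writable locations.

The following is a sketch with hypothetical helpers `merge_keys` and `check_equiv`. `check_equiv` uses `BatchMode=yes`, so a missing key makes it fail instead of prompting for a password. Because BatchMode also suppresses the host-key prompt, the first connection to each name must be accepted interactively, as in the `ssh rac1 date` runs above.

```shell
#!/bin/sh
# Hypothetical helper: merge public key files into one authorized_keys,
# dropping duplicate lines, and set the permissions sshd expects.
# $1 = output file, remaining args = .pub files
merge_keys() {
    out="$1"; shift
    cat "$@" | sort -u > "$out"
    chmod 600 "$out"
    chmod 700 "$(dirname "$out")"
}

# Hypothetical helper: confirm passwordless ssh to each host.
check_equiv() {
    for h in "$@"; do
        if ssh -o BatchMode=yes "$h" date >/dev/null 2>&1; then
            echo "$h ok"
        else
            echo "$h FAILED"
        fi
    done
}

# Usage (as oracle, then as grid), after copying each node's
# id_rsa.pub/id_dsa.pub into ~/.ssh/ under distinct file names:
#   merge_keys ~/.ssh/authorized_keys ~/.ssh/*.pub
#   scp ~/.ssh/authorized_keys rac2:.ssh/
#   check_equiv rac1 rac2 rac1-priv rac2-priv
```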

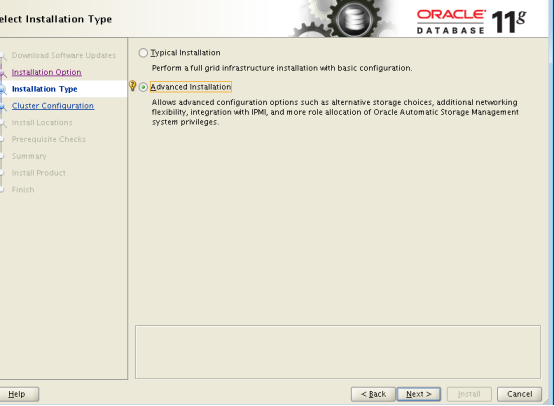

6 Install 11g R2 (11.2.0.1) Grid Infrastructure

Unzip the software:

[root@rac1 software]# unzip linux.x64_11gR2_grid.zip

[root@rac1 software]# unzip linux.x64_11gR2_grid.zip -d grid_11

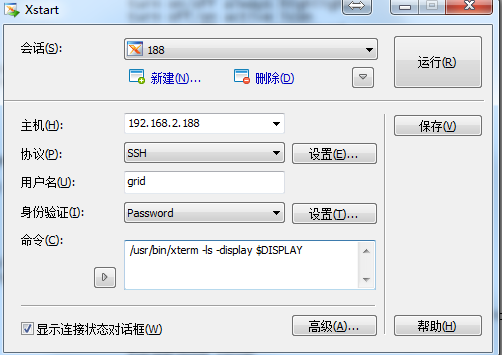

Check that xterm is available for testing the X display:

[root@rac2 software]# xterm

-bash: xterm: command not found

[root@rac2 software]# yum install xterm

[root@rac1 software]# xterm -help

X.Org 6.8.99.903(253) usage:

xterm [-options ...] [-e command args]

/usr/bin/xterm -ls -display $DISPLAY

The following uses 11.2.0.3 instead (patch set p10404530; part 3of7 contains Grid Infrastructure):

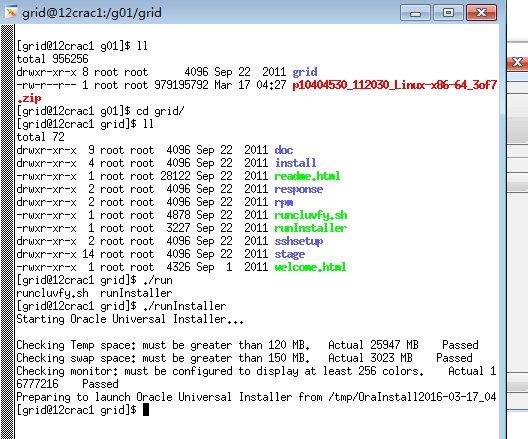

[root@12crac1 g01]# ll

total 956252

-rw-r--r-- 1 root root 979195792 Mar 17 04:27 p10404530_112030_Linux-x86-64_3of7.zip

[root@12crac1 g01]# unzip p10404530_112030_Linux-x86-64_3of7.zip

[root@12crac1 g01]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/vg_12crac1-lv_root

37727768 9239268 26571984 26% /

tmpfs 1544612 88 1544524 1% /dev/shm

/dev/sda1 495844 55656 414588 12% /boot

[root@12crac1 g01]# cd

[root@12crac1 ~]# mkdir /media/"OL6.3 x86_64 Disc 1 20120626"

mkdir: cannot create directory `/media/OL6.3 x86_64 Disc 1 20120626': File exists

[root@12crac1 ~]# mount -t iso9660 /dev/sr0 /media/"OL6.3 x86_64 Disc 1 20120626"

mount: no medium found on /dev/sr0

[root@12crac1 ~]# cd /media/

[root@12crac1 media]# ll

total 12

drwxr-xr-x. 2 root root 4096 Mar 17 02:22 cdrom

drwxr-xr-x 12 yhq1314 yhq1314 6144 Jun 26 2012 OL6.3 x86_64 Disc 1 20120626

dr-xr-xr-x 4 yhq1314 yhq1314 2048 Jun 26 2012 Oracle Linux Server

[root@12crac1 ~]# mount -t iso9660 /dev/sr0 /media/"OL6.3 x86_64 Disc 1 20120626"

mount: block device /dev/sr0 is write-protected, mounting read-only

[root@12crac1 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/vg_12crac1-lv_root

37727768 9239324 26571928 26% /

tmpfs 1544612 112 1544500 1% /dev/shm

/dev/sda1 495844 55656 414588 12% /boot

/dev/sr0 3589186 3589186 0 100% /media/OL6.3 x86_64 Disc 1 20120626

[root@12crac1 ~]# yum install xterm

/usr/bin/xterm -ls -display $DISPLAY

[root@rac1 g01]# chown -R grid:oinstall grid_11

选择normal的话,需要3块磁盘

2个节点分别运行

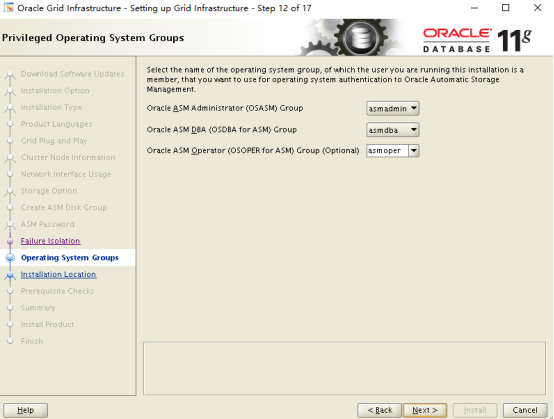

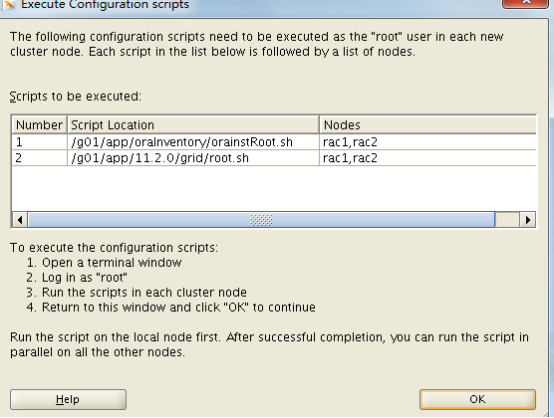

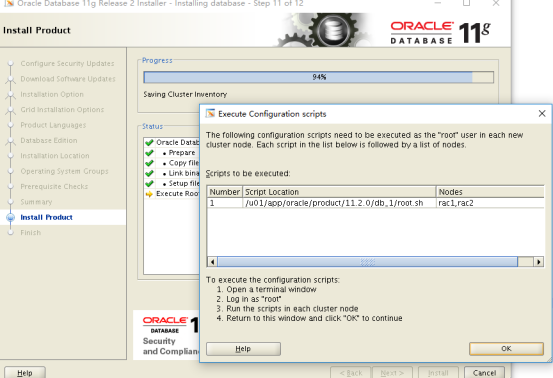

/g01/oraInventory/orainstRoot.sh --节点1运行完在运行2节点

/g01/11.2.0/grid/root.sh --1节点运行完运行2节点

[root@rac1 ~]# su - grid

[grid@rac1 ~]$ crsctl check crs

CRS-4638: Oracle High Availability Services is online

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

[grid@rac1 ~]$ crs_stat -t -v

Name Type R/RA F/FT Target State Host

----------------------------------------------------------------------

ora....ER.lsnr ora....er.type 0/5 0/ ONLINE ONLINE rac1

ora....N1.lsnr ora....er.type 0/5 0/0 ONLINE ONLINE rac1

ora.OCR.dg ora....up.type 0/5 0/ ONLINE ONLINE rac1

ora.asm ora.asm.type 0/5 0/ ONLINE ONLINE rac1

ora.cvu ora.cvu.type 0/5 0/0 ONLINE ONLINE rac1

ora.gsd ora.gsd.type 0/5 0/ OFFLINE OFFLINE

ora....network ora....rk.type 0/5 0/ ONLINE ONLINE rac1

ora.oc4j ora.oc4j.type 0/1 0/2 ONLINE ONLINE rac1

ora.ons ora.ons.type 0/3 0/ ONLINE ONLINE rac1

ora....SM1.asm application 0/5 0/0 ONLINE ONLINE rac1

ora....C1.lsnr application 0/5 0/0 ONLINE ONLINE rac1

ora.rac1.gsd application 0/5 0/0 OFFLINE OFFLINE

ora.rac1.ons application 0/3 0/0 ONLINE ONLINE rac1

ora.rac1.vip ora....t1.type 0/0 0/0 ONLINE ONLINE rac1

ora....SM2.asm application 0/5 0/0 ONLINE ONLINE rac2

ora....C2.lsnr application 0/5 0/0 ONLINE ONLINE rac2

ora.rac2.gsd application 0/5 0/0 OFFLINE OFFLINE

ora.rac2.ons application 0/3 0/0 ONLINE ONLINE rac2

ora.rac2.vip ora....t1.type 0/0 0/0 ONLINE ONLINE rac2

ora.scan1.vip ora....ip.type 0/0 0/0 ONLINE ONLINE rac1

[grid@rac1 ~]$ olsnodes -n # show the node configuration of the cluster

rac1 1

rac2 2

[grid@rac1 ~]$ olsnodes -n -i -s -t

rac1 1 rac1vip Active Unpinned

rac2 2 rac2vip Active Unpinned

[grid@rac1 ~]$ ps -ef|grep lsnr|grep -v 'grep'|grep -v 'ocfs'|awk '{print$9}'

LISTENER_SCAN1

LISTENER

[grid@rac1 ~]$ srvctl status asm -a

ASM is running on rac2,rac1

ASM is enabled.
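Problems in `crs_stat -t` output can be spotted quickly by listing the resources whose State differs from their Target. The following sketch assumes the five-column `crs_stat -t` layout (Name Type Target State Host). With `-v`, as above, the Target and State columns shift to fields 5 and 6. `crs_mismatches` is a hypothetical helper.

The gsd resources are OFFLINE/OFFLINE by default in 11.2, so they are not reported. In 11.2 `crs_stat` is deprecated in favor of `crsctl stat res -t`.

```shell
#!/bin/sh
# Hypothetical helper: read `crs_stat -t` output on stdin and print the
# name of every resource whose State does not match its Target.
crs_mismatches() {
    # Skip the header and the dashed separator line.
    awk 'NR > 2 && NF >= 4 && $3 != $4 { print $1 }'
}

# Usage as grid:
#   crs_stat -t | crs_mismatches
```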

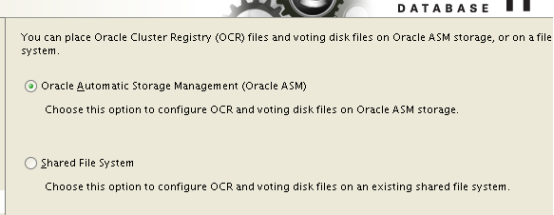

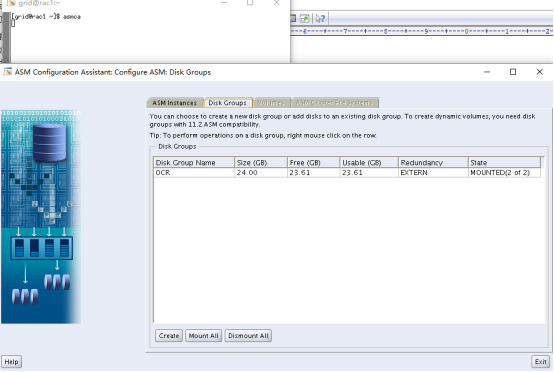

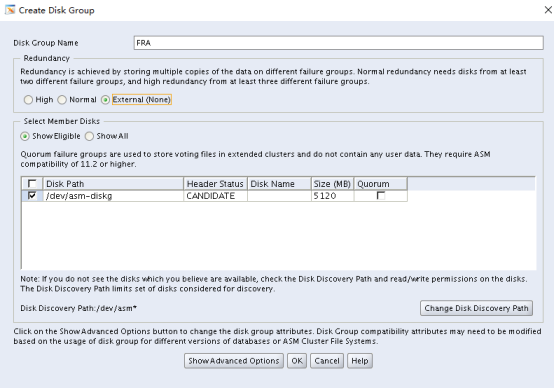

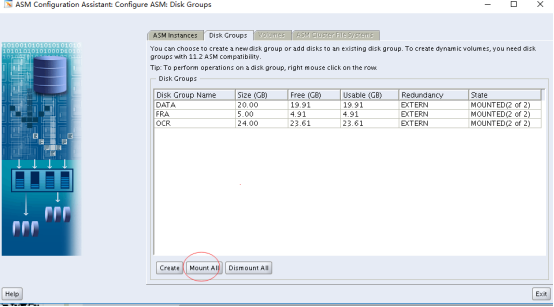

7 asmca

只在节点rac1执行即可

进入grid用户下

[root@rac1 ~]# su - grid

利用asmca

[grid@rac1 ~]$ asmca

这里看到安装grid时配置的OCR盘已存在

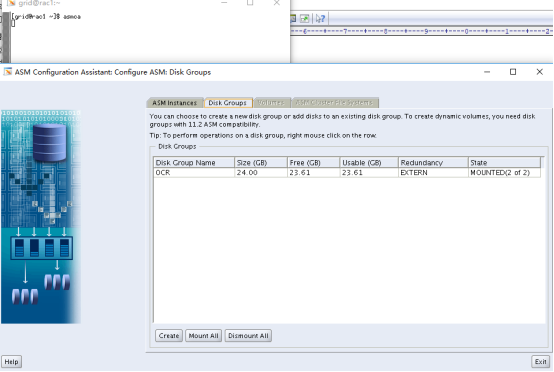

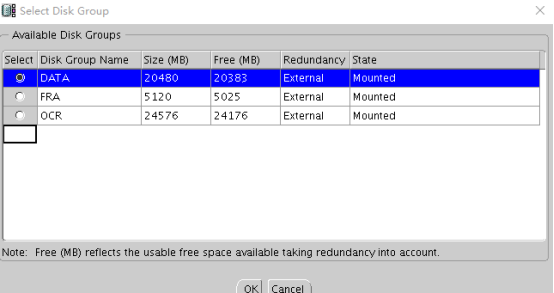

添加DATA盘,点击create,使用裸盘/dev/asm-diske,diskf

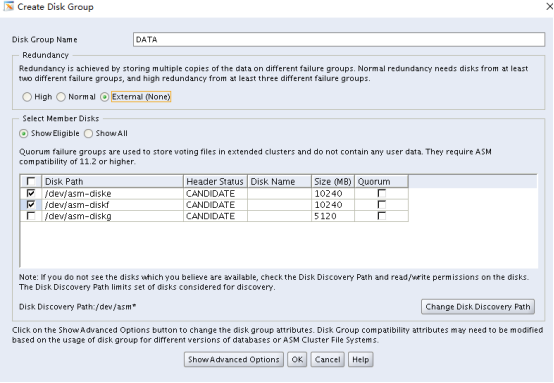

创建FRA
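As an alternative to asmca, a disk group can be created with SQL in the ASM instance. The following sketch assumes external redundancy, which the text above does not specify. `diskgroup_sql` is a hypothetical helper. Unlike asmca, this mounts the new disk group only on the local ASM instance, so it must also be started on the other node, for example with `srvctl start diskgroup -g DATA`.

```shell
#!/bin/sh
# Hypothetical helper: print a CREATE DISKGROUP statement.
# $1 = disk group name, $2 = EXTERNAL | NORMAL | HIGH, rest = disk paths
diskgroup_sql() {
    name="$1"; redundancy="$2"; shift 2
    disks=""
    for d in "$@"; do
        # Quote each path and separate the entries with ", ".
        disks="${disks:+$disks, }'$d'"
    done
    echo "CREATE DISKGROUP $name $redundancy REDUNDANCY DISK $disks;"
}

# Usage as grid on rac1 (ORACLE_SID=+ASM1):
#   diskgroup_sql DATA EXTERNAL /dev/asm-diske /dev/asm-diskf | sqlplus -S / as sysasm
#   srvctl start diskgroup -g DATA    # mount it on the other node too
```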

8 Install the database

Node 1:

[grid@12crac1 ~]$ vi .bash_profile

[grid@12crac1 ~]$ cat .bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

export GRID_HOME=/g01/11.2.0/grid

export ORACLE_HOME=/g01/11.2.0/grid

export PATH=$GRID_HOME/bin:$GRID_HOME/OPatch:/sbin:/bin:/usr/sbin:/usr/bin

export ORACLE_SID=+ASM1

export LD_LIBRARY_PATH=$GRID_HOME/lib:$GRID_HOME/lib32

export ORACLE_BASE=/g01/orabase

export ORA_NLS10=$ORACLE_HOME/nls/data

export NLS_LANG="Simplified Chinese"_China.AL32UTF8

Same on node 2, except ORACLE_SID=+ASM2.
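The two grid profiles differ only in ORACLE_SID. The following sketch lets both nodes share one identical profile by deriving the SID from the host name. It assumes that host names end in the node number (rac1, rac2, 12crac1, 12crac2) and that ASM instance numbers match node numbers. Both hold in this cluster but are not general Oracle rules. `asm_sid_for` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: derive the ASM SID from a host name that ends in the
# node number (rac1 -> +ASM1, 12crac2 -> +ASM2).
asm_sid_for() {
    host="${1%%.*}"              # drop any domain part
    num="${host##*[!0-9]}"       # keep only the trailing digits
    echo "+ASM$num"
}

# In grid's ~/.bash_profile on every node (define the function there too):
#   export ORACLE_SID=$(asm_sid_for "$(hostname)")
```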

Configure the root user's environment variables:

[root@12crac1 ~]# vi .bash_profile

[root@12crac1 ~]# cat .bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

export GRID_HOME=/g01/11.2.0/grid

export ORACLE_HOME=/g01/11.2.0/grid

export PATH=$GRID_HOME/bin:$GRID_HOME/OPatch:/sbin:/bin:/usr/sbin:/usr/bin

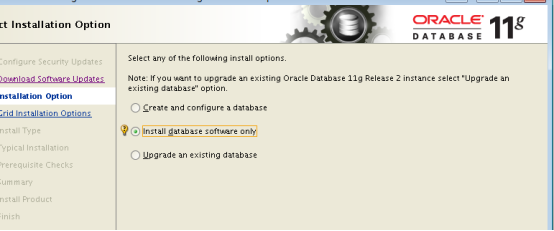

9 Install the Oracle database software

[root@12crac1 s01]# ll

total 2442056

drwxr-xr-x 8 oracle oinstall 4096 Sep 22 2011 database

-rw-r--r--. 1 root root 1358454646 Jan 28 2015 p10404530_112030_Linux-x86-64_1of7.zip

-rw-r--r--. 1 root root 1142195302 Jan 29 2015 p10404530_112030_Linux-x86-64_2of7.zip

[oracle@12crac1 ~]$ logout

[root@12crac1 s01]# su - grid

[grid@12crac1 ~]$ crs_stat |grep vip

NAME=ora.12crac1.vip

TYPE=ora.cluster_vip_net1.type

NAME=ora.12crac2.vip

TYPE=ora.cluster_vip_net1.type

NAME=ora.scan1.vip

TYPE=ora.scan_vip.type

[grid@12crac1 ~]$ crsctl stat res ora.12crac1.vip

NAME=ora.12crac1.vip

TYPE=ora.cluster_vip_net1.type

TARGET=ONLINE

STATE=ONLINE on 12crac1

[grid@12crac1 ~]$ crsctl stat res ora.12crac1.vip -p

NAME=ora.12crac1.vip

TYPE=ora.cluster_vip_net1.type

ACL=owner:root:rwx,pgrp:root:r-x,other::r--,group:oinstall:r-x,user:grid:r-x

ACTION_FAILURE_TEMPLATE=

ACTION_SCRIPT=

ACTIVE_PLACEMENT=1

AGENT_FILENAME=%CRS_HOME%/bin/orarootagent%CRS_EXE_SUFFIX%

AUTO_START=restore

CARDINALITY=1

CHECK_INTERVAL=1

CHECK_TIMEOUT=30

DEFAULT_TEMPLATE=PROPERTY(RESOURCE_CLASS=vip)

DEGREE=1

DESCRIPTION=Oracle VIP resource

ENABLED=1

FAILOVER_DELAY=0

FAILURE_INTERVAL=0

FAILURE_THRESHOLD=0

GEN_USR_ORA_STATIC_VIP=

GEN_USR_ORA_VIP=

HOSTING_MEMBERS=12crac1

LOAD=1

LOGGING_LEVEL=1

NLS_LANG=

NOT_RESTARTING_TEMPLATE=

OFFLINE_CHECK_INTERVAL=0

PLACEMENT=favored

PROFILE_CHANGE_TEMPLATE=

RESTART_ATTEMPTS=0

SCRIPT_TIMEOUT=60

SERVER_POOLS=*

START_DEPENDENCIES=hard(ora.net1.network) pullup(ora.net1.network)

START_TIMEOUT=0

STATE_CHANGE_TEMPLATE=

STOP_DEPENDENCIES=hard(ora.net1.network)

STOP_TIMEOUT=0

TYPE_VERSION=2.1

UPTIME_THRESHOLD=1h

USR_ORA_ENV=

USR_ORA_VIP=12crac1-vip

VERSION=11.2.0.3.0

[grid@12crac1 ~]$ crsctl stat res ora.net1.network

NAME=ora.net1.network

TYPE=ora.network.type

TARGET=ONLINE , ONLINE

STATE=ONLINE on 12crac1, ONLINE on 12crac2

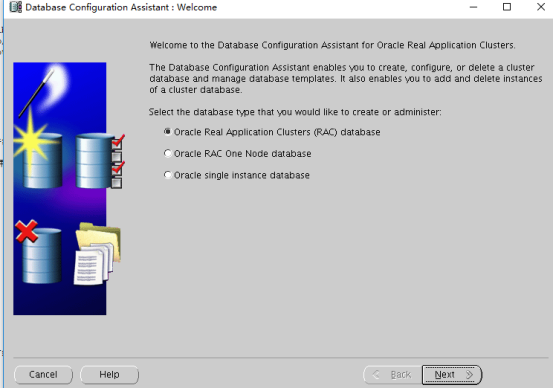

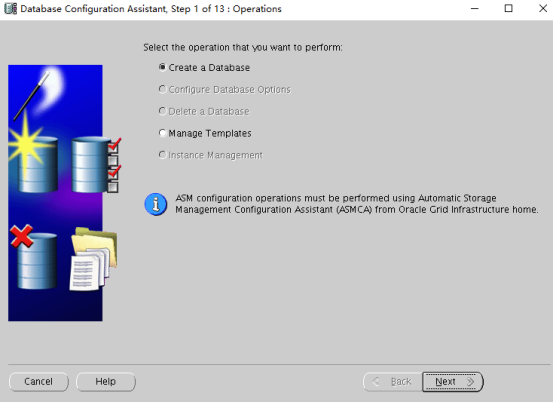

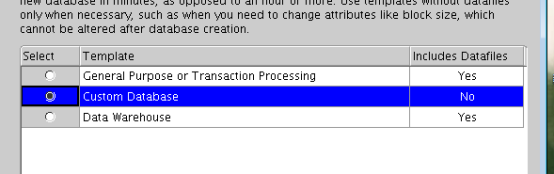

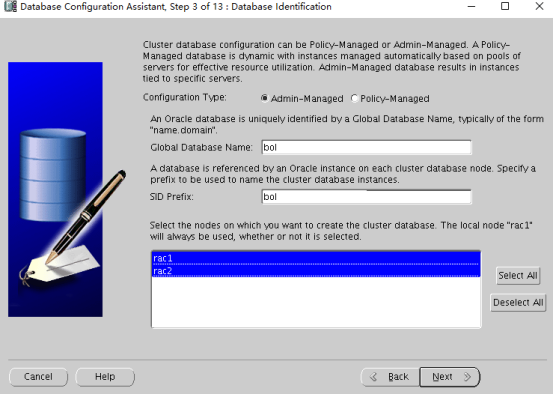

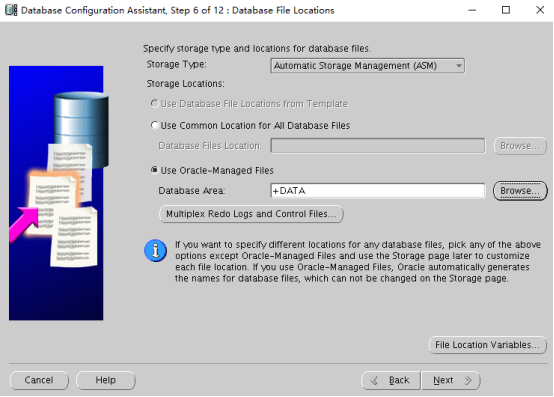

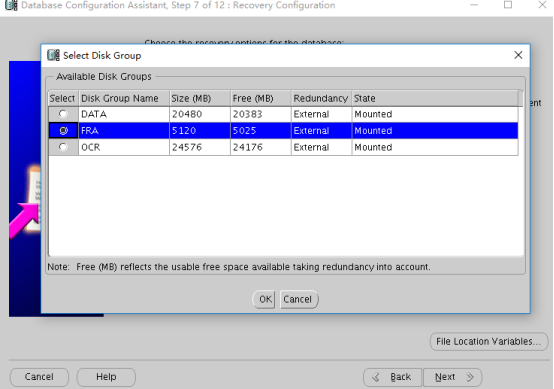

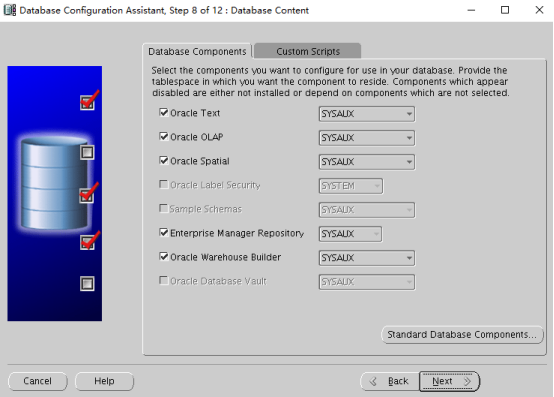

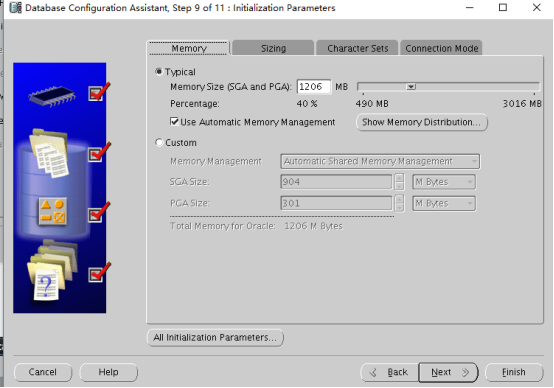

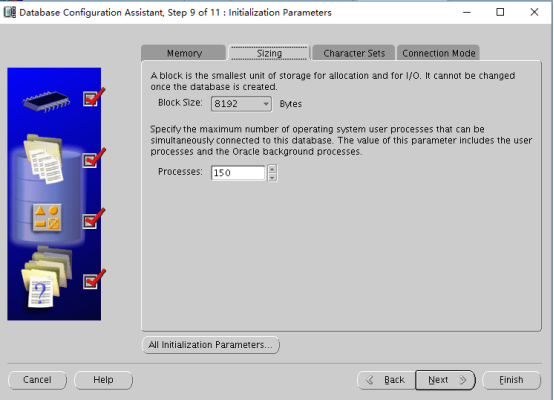

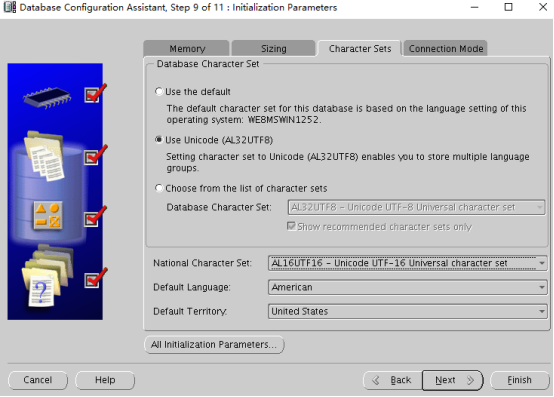

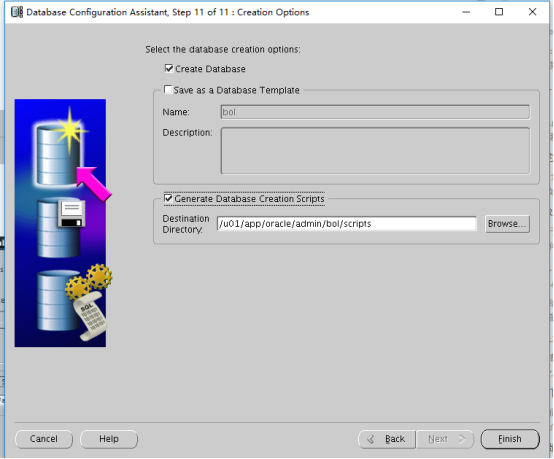

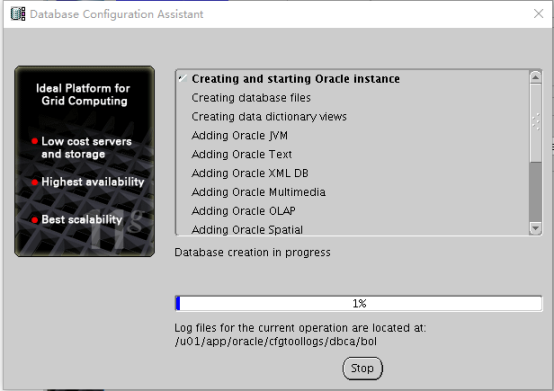

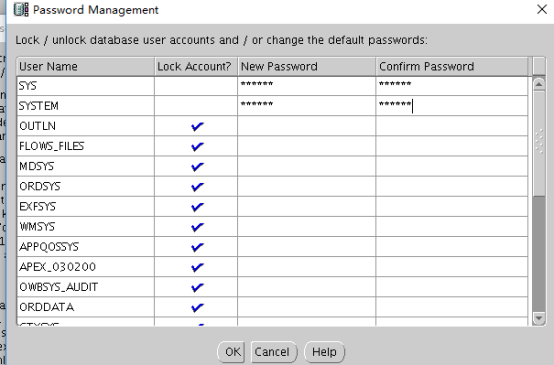

10 DBCA

Run dbca as the oracle user.

[root@rac1 ~]# su - oracle

[oracle@rac1 ~]$ lsnrctl status

LSNRCTL for Linux: Version 11.2.0.4.0 - Production on 30-OCT-2018 08:04:27

Copyright (c) 1991, 2013, Oracle. All rights reserved.

Connecting to (ADDRESS=(PROTOCOL=tcp)(HOST=)(PORT=1521))

STATUS of the LISTENER

------------------------

Alias LISTENER

Version TNSLSNR for Linux: Version 11.2.0.4.0 - Production

Start Date 30-OCT-2018 03:23:38

Uptime 0 days 4 hr. 40 min. 50 sec

Trace Level off

Security ON: Local OS Authentication

SNMP OFF

Listener Parameter File /u01/app/11.2.0/grid/network/admin/listener.ora

Listener Log File /u01/app/grid/diag/tnslsnr/rac1/listener/alert/log.xml

Listening Endpoints Summary...

(DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=LISTENER)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=10.15.7.11)(PORT=1521)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=10.15.7.13)(PORT=1521)))

Services Summary...

Service "+ASM" has 1 instance(s).

Instance "+ASM1", status READY, has 1 handler(s) for this service...

Service "bol" has 1 instance(s).

Instance "bol1", status READY, has 1 handler(s) for this service...

Service "bolXDB" has 1 instance(s).

Instance "bol1", status READY, has 1 handler(s) for this service...

The command completed successfully

[grid@12crac2 ~]$ crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora....SM1.asm application ONLINE ONLINE 12crac1

ora....C1.lsnr application ONLINE ONLINE 12crac1

ora....ac1.gsd application OFFLINE OFFLINE

ora....ac1.ons application ONLINE ONLINE 12crac1

ora....ac1.vip ora....t1.type ONLINE ONLINE 12crac1

ora....SM2.asm application ONLINE ONLINE 12crac2

ora....C2.lsnr application ONLINE ONLINE 12crac2

ora....ac2.gsd application OFFLINE OFFLINE

ora....ac2.ons application ONLINE ONLINE 12crac2

ora....ac2.vip ora....t1.type ONLINE ONLINE 12crac2

ora.DATADG.dg ora....up.type ONLINE ONLINE 12crac1

ora...._P.lsnr ora....er.type ONLINE ONLINE 12crac1

ora....N1.lsnr ora....er.type ONLINE ONLINE 12crac1

ora....EMDG.dg ora....up.type ONLINE ONLINE 12crac1

ora.asm ora.asm.type ONLINE ONLINE 12crac1

ora.cvu ora.cvu.type ONLINE ONLINE 12crac1

ora.gsd ora.gsd.type OFFLINE OFFLINE

ora....network ora....rk.type ONLINE ONLINE 12crac1

ora.oc4j ora.oc4j.type ONLINE ONLINE 12crac1

ora.ons ora.ons.type ONLINE ONLINE 12crac1

ora.scan1.vip ora....ip.type ONLINE ONLINE 12crac1

[grid@12crac2 ~]$ srvctl status listener

Listener LISTENER_P is enabled

Listener LISTENER_P is running on node(s): 12crac2,12crac1

[grid@12crac2 ~]$ olsnodes

12crac1

12crac2


[grid@12crac1 ~]$ srvctl config scan

SCAN name: vmac-cluster-scan, Network: 1/192.168.2.0/255.255.255.0/eth0

SCAN VIP name: scan1, IP: /vmac-cluster-scan/192.168.2.194

[grid@12crac1 ~]$ srvctl config scan_listener

SCAN Listener LISTENER_SCAN1 exists. Port: TCP:1521

[oracle@12crac1 ~]$ echo $ORACLE_SID

PROD1

[oracle@12crac1 ~]$ cd /s01/orabase/cfgtoollogs/dbca/PROD/

[oracle@12crac1 PROD]$ ls

CreateDBCatalog.log CreateDBFiles.log CreateDB.log trace.log

[oracle@12crac1 PROD]$ ls -ltr

total 472

-rw-r----- 1 oracle oinstall 291 Mar 18 02:01 CreateDB.log

-rw-r----- 1 oracle oinstall 0 Mar 18 02:01 CreateDBFiles.log

-rw-r----- 1 oracle oinstall 463733 Mar 18 02:01 trace.log

-rw-r----- 1 oracle oinstall 9415 Mar 18 02:02 CreateDBCatalog.log

[oracle@12crac1 PROD]$ tail -f trace.log

[oracle@12crac1 PROD]$ tail -f CreateDBCatalog.log