Main contents:

I. NiTE2 Hand Tracking Workflow

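I feel like I'm repeating the same code every day, but I don't see anything wrong with that. With anything new, you only build up experience, and cut down on "silly mistakes", by repeating it again and again. So before we start, let's go over the NiTE 2 hand tracking workflow once more. It consists mainly of the following steps: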

1. Initialize the NiTE environment: `nite::NiTE::initialize();`

2. Create the HandTracker: `HandTracker mHandTracker; mHandTracker.create(&mDevice);`

3. Enable gesture detection (GESTURE_WAVE, GESTURE_CLICK and GESTURE_HAND_RAISE): `mHandTracker.startGestureDetection( GESTURE_WAVE );` and so on;

4. Create and read a HandTracker frame: `HandTrackerFrameRef mHandFrame; mHandTracker.readFrame( &mHandFrame );`

5. Analyze the whole frame and collect the gestures that were detected: `const nite::Array<GestureData>& aGestures = mHandFrame.getGestures();`

6. Using the position of a detected gesture, start tracking that particular hand: `const Point3f& rPos = rGesture.getCurrentPosition(); HandId mHandID; mHandTracker.startHandTracking( rPos, &mHandID );`

7. Read the hands that are currently being tracked: `const nite::Array<HandData>& aHands = mHandFrame.getHands();`

8. Check whether the hand is currently being tracked, then do your own processing:

   ```cpp
   if( rHand.isTracking() )
   {
       // Get the palm-center coordinates
       const Point3f& rPos = rHand.getPosition();
       // ...
   }
   ```

9. Destroy the tracker: `mHandTracker.destroy();`

10. Finally, shut down the NiTE environment: `nite::NiTE::shutdown();`
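Steps 1 and 10, and steps 2 and 9, come in pairs, and an early `return` (for example after a failed `create()`) can easily skip the cleanup half. One way to keep them paired, sketched here under the assumption of C++11, is a small scope guard that runs a cleanup action when it leaves scope. `ScopeGuard` is a hypothetical helper written for this illustration, not part of NiTE or OpenNI; the NiTE calls appear only in comments because they need the SDK.

```cpp
#include <functional>
#include <utility>

// Hypothetical helper (not part of NiTE/OpenNI): runs a cleanup action when the
// guard goes out of scope, including on an early return.
class ScopeGuard
{
public:
    explicit ScopeGuard( std::function<void()> onExit ) : onExit_( std::move( onExit ) ) {}
    ~ScopeGuard() { if( onExit_ ) onExit_(); }

    ScopeGuard( const ScopeGuard& ) = delete;
    ScopeGuard& operator=( const ScopeGuard& ) = delete;

    // Cancel the cleanup action
    void dismiss() { onExit_ = nullptr; }

private:
    std::function<void()> onExit_;
};

// Intended use with the workflow above (needs the NiTE SDK, so shown as comments):
//   nite::NiTE::initialize();                                   // step 1
//   ScopeGuard niteGuard( []{ nite::NiTE::shutdown(); } );      // step 10
//   nite::HandTracker mHandTracker;
//   mHandTracker.create( &mDevice );                            // step 2
//   ScopeGuard trackerGuard( [&]{ mHandTracker.destroy(); } );  // step 9
// Guards are destroyed in reverse order of declaration, so destroy() runs
// before shutdown(), matching the order of steps 9 and 10.
```

The reverse-destruction order of C++ locals is what makes this fit the workflow: whatever is set up last is torn down first.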

II. Code Demo

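In "Talking about the first program combining NiTE 2 and OpenCV" and "Talking about extracting fingertip coordinates with NiTE 2 and OpenCV", we processed the hand information only in the depth image. But what does the palm-center position from hand tracking look like in the color image? Does it land on the real palm center as well as it does in the depth image? To answer these questions, I follow the approach from "Talking about displaying human skeleton coordinates in the color image": the palm-center coordinates obtained from NiTE2 hand tracking are mapped onto both the color image and the depth image and displayed side by side for comparison. The explanation and code follow: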
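Before the full listing, a note on what the mapping involves. `convertHandCoordinatesToDepth` turns a real-world palm position (in millimetres) into a pixel position in the depth image, and because the program sets `IMAGE_REGISTRATION_DEPTH_TO_COLOR`, the depth image is aligned to the color camera, so the same pixel coordinates can be reused on the color image. The sketch below illustrates the kind of pinhole projection involved; it is not NiTE's implementation. `worldToDepthPixel` and `PixelPos` are hypothetical names, and the coordinate convention (+Y up, +Z away from the sensor) and field-of-view inputs are assumptions.

```cpp
#include <cmath>

// A 2-D pixel position in the depth image
struct PixelPos { float x; float y; };

// Pinhole-camera sketch: project a real-world point (millimetres, sensor at the
// origin, +Y assumed up, +Z away from the sensor) onto a resX x resY image whose
// horizontal/vertical field of view is hFovRad/vFovRad radians.
PixelPos worldToDepthPixel( float worldX, float worldY, float worldZ,
                            int resX, int resY, float hFovRad, float vFovRad )
{
    // Focal lengths in pixels derived from the field of view
    const float coeffX = resX / ( 2.0f * std::tan( hFovRad / 2.0f ) );
    const float coeffY = resY / ( 2.0f * std::tan( vFovRad / 2.0f ) );

    PixelPos p;
    p.x = resX / 2.0f + coeffX * worldX / worldZ;
    p.y = resY / 2.0f - coeffY * worldY / worldZ;   // image rows grow downward
    return p;
}
```

A point on the optical axis lands in the image center (320, 240 for 640x480), and the same sideways offset moves fewer pixels the farther away it is. In a real OpenNI 2 program the field of view would come from `VideoStream::getHorizontalFieldOfView()` / `getVerticalFieldOfView()`, and `openni::CoordinateConverter::convertWorldToDepth` performs this kind of conversion for a given stream.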

```cpp
// #include "stdafx.h"   // only needed in Visual Studio projects with precompiled headers
#include <iostream>
// OpenCV headers
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>
// OpenNI / NiTE headers
#include <OpenNI.h>
#include <NiTE.h>

using namespace std;
using namespace openni;
using namespace nite;

int main( int argc, char **argv )
{
    // Initialize OpenNI
    OpenNI::initialize();

    // Open the Kinect device
    Device mDevice;
    if( mDevice.open( ANY_DEVICE ) != openni::STATUS_OK )
    {
        cerr << "Can't open device" << endl;
        OpenNI::shutdown();
        return -1;
    }

    // Create the depth stream: 640x480, 30 FPS, 1 mm per unit
    VideoStream mDepthStream;
    mDepthStream.create( mDevice, SENSOR_DEPTH );
    VideoMode mDepthMode;
    mDepthMode.setResolution( 640, 480 );
    mDepthMode.setFps( 30 );
    mDepthMode.setPixelFormat( PIXEL_FORMAT_DEPTH_1_MM );
    mDepthStream.setVideoMode( mDepthMode );

    // Set up the color stream the same way
    VideoStream mColorStream;
    mColorStream.create( mDevice, SENSOR_COLOR );
    VideoMode mColorMode;
    mColorMode.setResolution( 640, 480 );
    mColorMode.setFps( 30 );
    mColorMode.setPixelFormat( PIXEL_FORMAT_RGB888 );
    mColorStream.setVideoMode( mColorMode );

    // Register the depth image to the color image so their pixels line up
    mDevice.setImageRegistrationMode( IMAGE_REGISTRATION_DEPTH_TO_COLOR );

    // Initialize NiTE (needed for hand tracking)
    NiTE::initialize();

    // Create the HandTracker
    HandTracker mHandTracker;
    mHandTracker.create( &mDevice );

    // Enable gesture detection (GESTURE_WAVE, GESTURE_CLICK and GESTURE_HAND_RAISE)
    mHandTracker.startGestureDetection( GESTURE_WAVE );
    mHandTracker.startGestureDetection( GESTURE_CLICK );
    //mHandTracker.startGestureDetection( GESTURE_HAND_RAISE );
    mHandTracker.setSmoothingFactor( 0.1f );

    // Windows for the depth image and the color image
    cv::namedWindow( "Depth Image", cv::WINDOW_AUTOSIZE );
    cv::namedWindow( "Hand Image", cv::WINDOW_AUTOSIZE );

    // Environment ready: start the depth and color streams
    mDepthStream.start();
    mColorStream.start();

    // Maximum depth value, used to scale depth to 8 bits
    int iMaxDepth = mDepthStream.getMaxPixelValue();

    while( true )
    {
        // Read one depth frame and one color frame
        VideoFrameRef mDepthFrame;
        mDepthStream.readFrame( &mDepthFrame );
        VideoFrameRef mColorFrame;
        mColorStream.readFrame( &mColorFrame );

        // Wrap the depth data in a cv::Mat (no copy)
        const cv::Mat mImageDepth( mDepthFrame.getHeight(), mDepthFrame.getWidth(),
                                   CV_16UC1, (void*)mDepthFrame.getData() );
        // CV_16UC1 ==> CV_8U so the depth image is easier to see, then gray ==> BGR
        // so the red/blue marks below actually show up in color
        cv::Mat mScaledDepth, mDepthBGR;
        mImageDepth.convertTo( mScaledDepth, CV_8U, 255.0 / iMaxDepth );
        cv::cvtColor( mScaledDepth, mDepthBGR, cv::COLOR_GRAY2BGR );

        // Wrap the color data (CV_8UC3, R/G/B order) and convert RGB ==> BGR
        const cv::Mat mImageRGB( mColorFrame.getHeight(), mColorFrame.getWidth(),
                                 CV_8UC3, (void*)mColorFrame.getData() );
        cv::Mat cImageBGR;
        cv::cvtColor( mImageRGB, cImageBGR, cv::COLOR_RGB2BGR );

        // Read a hand tracker frame
        HandTrackerFrameRef mHandFrame;
        mHandTracker.readFrame( &mHandFrame );

        // Analyze the whole frame for detected gestures
        const nite::Array<GestureData>& aGestures = mHandFrame.getGestures();
        for( int i = 0; i < aGestures.getSize(); ++i )
        {
            const GestureData& rGesture = aGestures[i];
            // The gesture data also contains the gesture's current position
            const Point3f& rPos = rGesture.getCurrentPosition();
            cout << "Gesture position: (" << rPos.x << ", " << rPos.y << ", " << rPos.z << ")" << endl;

            // Gesture recognized: start tracking the hand at that position
            HandId mHandID;
            mHandTracker.startHandTracking( rPos, &mHandID );
            cout << "Gesture located, starting hand tracking" << endl;
        }

        const nite::Array<HandData>& aHands = mHandFrame.getHands();
        for( int i = 0; i < aHands.getSize(); ++i )
        {
            const HandData& rHand = aHands[i];
            if( rHand.isNew() )
                cout << "Start tracking" << endl;
            else if( rHand.isLost() )
                cout << "Lost" << endl;

            // Only use hands that are currently being tracked
            if( rHand.isTracking() )
            {
                // Palm-center position in real-world coordinates
                const Point3f& rPos = rHand.getPosition();
                cout << "at " << rPos.x << ", " << rPos.y << ", " << rPos.z << endl;

                // Convert to depth-image pixels; with depth-to-color registration
                // the same pixel is used on the color image
                cv::Point2f aPoint;
                mHandTracker.convertHandCoordinatesToDepth( rPos.x, rPos.y, rPos.z, &aPoint.x, &aPoint.y );

                // Mark the palm center in the color image and the depth image
                cv::circle( cImageBGR, aPoint, 3, cv::Scalar( 0, 0, 255 ), 4 );
                cv::circle( mDepthBGR, aPoint, 3, cv::Scalar( 0, 0, 255 ), 4 );

                // Draw a 200x200 box centered on the palm in both images
                cv::Point2f ptHalf( 100, 100 );
                cv::rectangle( cImageBGR, aPoint - ptHalf, aPoint + ptHalf, cv::Scalar( 255, 0, 0 ), 3 );
                cv::rectangle( mDepthBGR, aPoint - ptHalf, aPoint + ptHalf, cv::Scalar( 255, 0, 0 ), 3 );
            }
        }

        // Show the images
        cv::imshow( "Depth Image", mDepthBGR );
        cv::imshow( "Hand Image", cImageBGR );

        // Press "q" to exit the loop
        if( cv::waitKey( 1 ) == 'q' )
            break;
    }

    // Destroy the hand tracker first
    mHandTracker.destroy();
    // Destroy the color and depth streams
    mColorStream.destroy();
    mDepthStream.destroy();
    // Close the Kinect device
    mDevice.close();
    // Shut down NiTE and OpenNI
    NiTE::shutdown();
    OpenNI::shutdown();
    return 0;
}
```
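Both programs squeeze 16-bit depth (millimetres, because of `PIXEL_FORMAT_DEPTH_1_MM`) into 8 bits with `convertTo( ..., CV_8U, 255.0 / maxDepth )`. The arithmetic per pixel is: multiply, round to the nearest integer, saturate to 0..255. The standalone function below is a sketch that approximates this (OpenCV's handling of exact .5 cases may differ); `scaleDepthTo8Bit` is a hypothetical name. With a maximum of 10000 mm (the value hard-coded in the second program), a hand 2 m away maps to only 51 out of 255, which is why the hand looks fairly dark in the depth window.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Sketch (hypothetical helper): map one 16-bit depth value in millimetres to 0..255,
// approximating mImageDepth.convertTo( mScaledDepth, CV_8U, 255.0 / maxDepth ).
std::uint8_t scaleDepthTo8Bit( std::uint16_t depthMm, int maxDepth )
{
    const double scaled  = depthMm * ( 255.0 / maxDepth );   // linear scale
    const double rounded = std::round( scaled );             // nearest integer
    // Saturate: anything beyond maxDepth becomes 255 (white); 0 (no reading) stays black
    return static_cast<std::uint8_t>( std::min( 255.0, std::max( 0.0, rounded ) ) );
}
```

Passing a smaller `maxDepth` (for example the distance range where the hand is expected) stretches the contrast; anything beyond it saturates to white.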

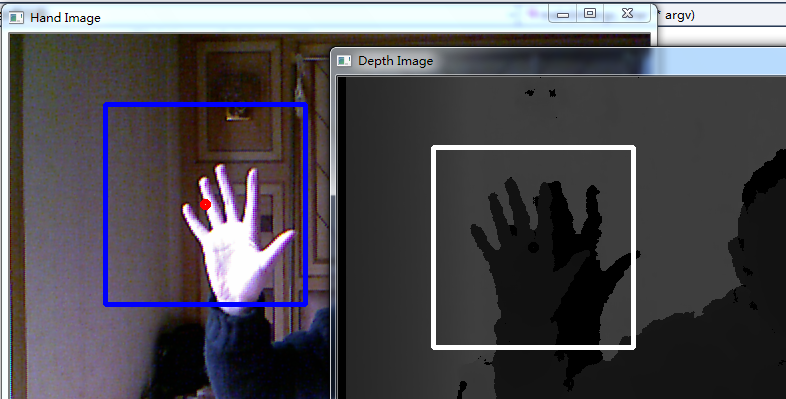

程序运行结果见下图:

Next, let's draw the hand's motion trajectory. Straight to the code:

```cpp
#include <array>
#include <iostream>
#include <map>
#include <vector>
// OpenCV headers
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>
// OpenNI / NiTE headers
#include <OpenNI.h>
#include <NiTE.h>

using namespace std;
using namespace openni;
using namespace nite;

int main( int argc, char **argv )
{
    // Initialize OpenNI
    OpenNI::initialize();

    // Open the Kinect device
    Device mDevice;
    mDevice.open( ANY_DEVICE );

    // Create the depth stream: 640x480, 30 FPS, 1 mm per unit
    VideoStream mDepthStream;
    mDepthStream.create( mDevice, SENSOR_DEPTH );
    VideoMode mDepthMode;
    mDepthMode.setResolution( 640, 480 );
    mDepthMode.setFps( 30 );
    mDepthMode.setPixelFormat( PIXEL_FORMAT_DEPTH_1_MM );
    mDepthStream.setVideoMode( mDepthMode );

    // Set up the color stream the same way
    VideoStream mColorStream;
    mColorStream.create( mDevice, SENSOR_COLOR );
    VideoMode mColorMode;
    mColorMode.setResolution( 640, 480 );
    mColorMode.setFps( 30 );
    mColorMode.setPixelFormat( PIXEL_FORMAT_RGB888 );
    mColorStream.setVideoMode( mColorMode );

    // Register the depth image to the color image
    mDevice.setImageRegistrationMode( IMAGE_REGISTRATION_DEPTH_TO_COLOR );

    // Initialize NiTE
    if( NiTE::initialize() != nite::STATUS_OK )
    {
        cerr << "NiTE initialization error" << endl;
        return -1;
    }

    // Create the HandTracker on the device opened above
    HandTracker mHandTracker;
    if( mHandTracker.create( &mDevice ) != nite::STATUS_OK )
    {
        cerr << "Can't create hand tracker" << endl;
        return -1;
    }

    // Enable gesture detection (GESTURE_WAVE, GESTURE_CLICK and GESTURE_HAND_RAISE)
    mHandTracker.startGestureDetection( GESTURE_WAVE );
    mHandTracker.startGestureDetection( GESTURE_CLICK );
    //mHandTracker.startGestureDetection( GESTURE_HAND_RAISE );
    mHandTracker.setSmoothingFactor( 0.1f );

    // Windows for the depth image and the color image
    cv::namedWindow( "Depth Image", cv::WINDOW_AUTOSIZE );
    cv::namedWindow( "Color Image", cv::WINDOW_AUTOSIZE );

    // Stored points: one trajectory per hand ID, plus the positions of gestures
    map< HandId, vector<cv::Point2f> > mapHandData;
    vector<cv::Point2f> vWaveList;
    vector<cv::Point2f> vClickList;
    cv::Point2f ptSize( 3, 3 );

    // One drawing color (BGR) per hand ID
    array<cv::Scalar, 8> aHandColor = { {
        cv::Scalar( 255, 0, 0 ),   cv::Scalar( 0, 255, 0 ),
        cv::Scalar( 0, 0, 255 ),   cv::Scalar( 255, 255, 0 ),
        cv::Scalar( 255, 0, 255 ), cv::Scalar( 0, 255, 255 ),
        cv::Scalar( 255, 255, 255 ), cv::Scalar( 0, 0, 0 ) } };

    // Environment ready: start the depth and color streams
    mDepthStream.start();
    mColorStream.start();

    // Maximum depth value, used to scale depth to 8 bits
    int iMaxDepth = mDepthStream.getMaxPixelValue();

    while( true )
    {
        // Read a color frame; its format is CV_8UC3 (R/G/B), so convert RGB ==> BGR
        VideoFrameRef mColorFrame;
        mColorStream.readFrame( &mColorFrame );
        const cv::Mat mImageRGB( mColorFrame.getHeight(), mColorFrame.getWidth(),
                                 CV_8UC3, (void*)mColorFrame.getData() );
        cv::Mat cImageBGR;
        cv::cvtColor( mImageRGB, cImageBGR, cv::COLOR_RGB2BGR );

        // Read a hand tracker frame
        HandTrackerFrameRef mHandFrame;
        if( mHandTracker.readFrame( &mHandFrame ) == nite::STATUS_OK )
        {
            // Use the depth frame that NiTE itself processed
            openni::VideoFrameRef mDepthFrame = mHandFrame.getDepthFrame();
            const cv::Mat mImageDepth( mDepthFrame.getHeight(), mDepthFrame.getWidth(),
                                       CV_16UC1, (void*)mDepthFrame.getData() );
            // CV_16UC1 ==> CV_8U for display, then gray ==> BGR so colored points
            // and trajectories can be drawn on it
            cv::Mat mScaledDepth, mImageBGR;
            mImageDepth.convertTo( mScaledDepth, CV_8U, 255.0 / iMaxDepth );
            cv::cvtColor( mScaledDepth, mImageBGR, cv::COLOR_GRAY2BGR );

            // Detected gestures
            const nite::Array<GestureData>& aGestures = mHandFrame.getGestures();
            for( int i = 0; i < aGestures.getSize(); ++i )
            {
                const GestureData& rGesture = aGestures[i];
                const Point3f& rPos = rGesture.getCurrentPosition();
                cv::Point2f rPos2D;
                mHandTracker.convertHandCoordinatesToDepth( rPos.x, rPos.y, rPos.z, &rPos2D.x, &rPos2D.y );

                // Remember where each kind of gesture happened
                switch( rGesture.getType() )
                {
                case GESTURE_WAVE:
                    vWaveList.push_back( rPos2D );
                    break;
                case GESTURE_CLICK:
                    vClickList.push_back( rPos2D );
                    break;
                default:
                    break;
                }

                // Start tracking the hand at the gesture position
                HandId mHandID;
                if( mHandTracker.startHandTracking( rPos, &mHandID ) != nite::STATUS_OK )
                    cerr << "Can't track hand" << endl;
            }

            // Palm-center positions of the tracked hands
            const nite::Array<HandData>& aHands = mHandFrame.getHands();
            for( int i = 0; i < aHands.getSize(); ++i )
            {
                const HandData& rHand = aHands[i];
                HandId uID = rHand.getId();

                if( rHand.isNew() )
                    mapHandData.insert( make_pair( uID, vector<cv::Point2f>() ) );

                if( rHand.isTracking() )
                {
                    // Map the palm center into image coordinates and append it to the trajectory
                    const Point3f& rPos = rHand.getPosition();
                    cv::Point2f rPos2D;
                    mHandTracker.convertHandCoordinatesToDepth( rPos.x, rPos.y, rPos.z, &rPos2D.x, &rPos2D.y );
                    mapHandData[uID].push_back( rPos2D );
                }

                if( rHand.isLost() )
                    mapHandData.erase( uID );
            }

            // Draw each hand's trajectory in both images
            for( auto itHand = mapHandData.begin(); itHand != mapHandData.end(); ++itHand )
            {
                const cv::Scalar& rColor = aHandColor[ itHand->first % aHandColor.size() ];
                const vector<cv::Point2f>& rPoints = itHand->second;
                for( size_t i = 1; i < rPoints.size(); ++i )
                {
                    cv::line( mImageBGR, rPoints[i-1], rPoints[i], rColor, 2 );
                    cv::line( cImageBGR, rPoints[i-1], rPoints[i], rColor, 2 );
                }
            }

            // Click gestures: red circles
            for( auto itPt = vClickList.begin(); itPt != vClickList.end(); ++itPt )
            {
                cv::circle( mImageBGR, *itPt, 5, cv::Scalar( 0, 0, 255 ), 2 );
                cv::circle( cImageBGR, *itPt, 5, cv::Scalar( 0, 0, 255 ), 2 );
            }

            // Wave gestures: green squares
            for( auto itPt = vWaveList.begin(); itPt != vWaveList.end(); ++itPt )
            {
                cv::rectangle( mImageBGR, *itPt - ptSize, *itPt + ptSize, cv::Scalar( 0, 255, 0 ), 2 );
                cv::rectangle( cImageBGR, *itPt - ptSize, *itPt + ptSize, cv::Scalar( 0, 255, 0 ), 2 );
            }

            // Show the images
            cv::imshow( "Depth Image", mImageBGR );
            cv::imshow( "Color Image", cImageBGR );

            mHandFrame.release();
        }
        else
        {
            cerr << "Can't get new frame" << endl;
        }

        // Press "q" to exit the loop
        if( cv::waitKey( 1 ) == 'q' )
            break;
    }

    // Clean up in reverse order of creation
    mHandTracker.destroy();
    mColorStream.destroy();
    mDepthStream.destroy();
    mDevice.close();
    NiTE::shutdown();
    OpenNI::shutdown();
    return 0;
}
```
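The trajectory bookkeeping in this program (a `map` from `HandId` to a point list, updated according to `isNew()`, `isTracking()` and `isLost()`) does not depend on the sensor, so it can be pulled out and checked on its own. The sketch below does that with a hypothetical `HandUpdate` struct standing in for `nite::HandData`; it is not the NiTE type. It also adds one thing the program above does not have: an optional `maxPoints` cap, since otherwise a trajectory keeps growing for as long as the hand stays tracked.

```cpp
#include <cstddef>
#include <map>
#include <vector>

// Hypothetical stand-in for the per-frame hand state; NOT nite::HandData.
struct HandUpdate
{
    short id;          // plays the role of nite::HandId
    bool  isNew;
    bool  isTracking;
    bool  isLost;
    float x, y;        // palm position already converted to depth-image pixels
};

struct Pt { float x, y; };

using Trajectories = std::map< short, std::vector<Pt> >;

// Same logic as the loop over aHands: start a trajectory for a new hand, append
// points while it is tracked, drop it when it is lost. maxPoints (hypothetical,
// 0 = unlimited) keeps only the most recent points.
void updateTrajectories( Trajectories& tracks, const std::vector<HandUpdate>& hands,
                         std::size_t maxPoints )
{
    for( const HandUpdate& h : hands )
    {
        if( h.isNew )
            tracks.emplace( h.id, std::vector<Pt>() );   // start an empty trajectory
        if( h.isTracking )
        {
            std::vector<Pt>& pts = tracks[h.id];         // created if missing
            pts.push_back( Pt{ h.x, h.y } );
            if( maxPoints > 0 && pts.size() > maxPoints )
                pts.erase( pts.begin() );                // drop the oldest point
        }
        if( h.isLost )
            tracks.erase( h.id );                        // forget lost hands
    }
}

// Color slot for a hand, as in aHandColor[ id % aHandColor.size() ]
std::size_t colorSlot( short id, std::size_t paletteSize )
{
    return static_cast<std::size_t>( id ) % paletteSize;
}
```

Erasing from the front of a `std::vector` is linear in its length, which is fine for a few hundred points; a `std::deque` would avoid that cost if the cap were much larger.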

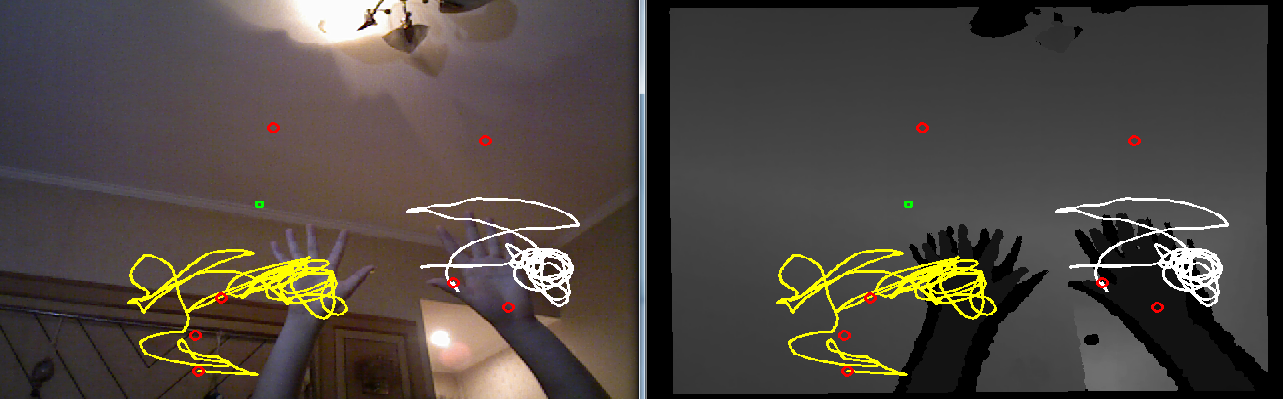

运行结果:

III. Summary

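One last note: I wrote this code as I went, with no encapsulation, optimization or refactoring. It is purely procedural, and there are surely still problems in the details, which I will improve in later posts. It's rough, so corrections and criticism are welcome~~~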