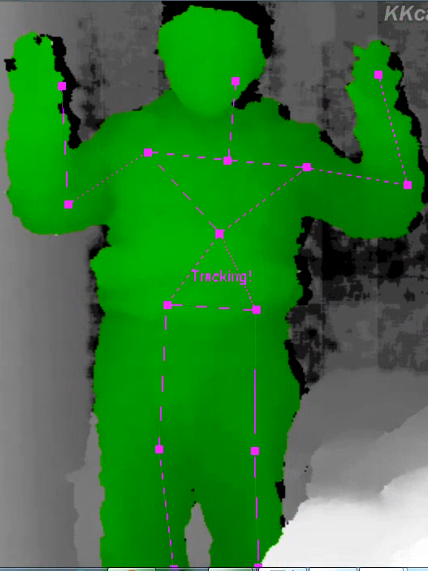

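I think the best way to learn a new API is to study the samples that ship with it. Here I'll share what I have learned so far about the new NITE 2, starting with its UserViewer sample: import the sample's project folder into VS2010, build and run it, and look at the result.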

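When a person steps in front of the Kinect, the sample picks out their body in the depth image and, as the code below shows, starts skeleton tracking right away, giving 3D coordinates for up to 15 major joints of the whole body. The POSE_CROSSED_HANDS pose ("arms crossed over the chest") serves as the exit gesture: holding it for g_poseTimeoutToExit milliseconds ends the program. Let's go through the code, starting with main():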

```cpp
#include "Viewer.h"

int main(int argc, char** argv)
{
    openni::Status rc = openni::STATUS_OK;

    // Construct the SampleViewer; the argument is the window title
    // ("Ye Meishu's skeleton tracking")
    SampleViewer sampleViewer("叶梅树的骨骼跟踪");

    // Init() sets up OpenNI, NiTE, the user tracker and OpenGL
    rc = sampleViewer.Init(argc, argv);
    if (rc != openni::STATUS_OK)
    {
        return 1;
    }

    // Run() enters the tracking loop
    sampleViewer.Run();
}
```

Now let's see how the SampleViewer class initializes itself, obtains the user depth data, and starts tracking the skeleton in real time:

```cpp
/************************************************************************/
/* Constructor: initializes the class and creates m_pUserTracker,       */
/* the user (body) tracker                                              */
/************************************************************************/
SampleViewer::SampleViewer(const char* strSampleName)
{
    ms_self = this;
    strncpy(m_strSampleName, strSampleName, ONI_MAX_STR);
    m_pUserTracker = new nite::UserTracker;
}
// ...
openni::Status SampleViewer::Init(int argc, char **argv)
{
    m_pTexMap = NULL;

    // Initialize OpenNI
    openni::Status rc = openni::OpenNI::initialize();
    if (rc != openni::STATUS_OK)
    {
        printf("Failed to initialize OpenNI\n%s\n", openni::OpenNI::getExtendedError());
        return rc;
    }

    // With a single sensor, openni::ANY_DEVICE is enough; a specific device
    // URI can be passed on the command line with "-device <uri>".
    const char* deviceUri = openni::ANY_DEVICE;
    for (int i = 1; i < argc-1; ++i)
    {
        if (strcmp(argv[i], "-device") == 0)
        {
            deviceUri = argv[i+1];
            break;
        }
    }

    // Open the sensor (reuse rc: declaring it a second time would not compile)
    rc = m_device.open(deviceUri);
    if (rc != openni::STATUS_OK)
    {
        printf("Open Device failed:\n%s\n", openni::OpenNI::getExtendedError());
        return rc;
    }

    /************************************************************************/
    /* NiTE initialization simply calls the low-level NiteCAPI.h function
       niteInitialize():
       static Status initialize()
       {
           return (Status)niteInitialize();
       } */
    /************************************************************************/
    nite::NiTE::initialize();

    /************************************************************************/
    /* m_pUserTracker->create(&m_device) starts tracking the users seen by the
       opened sensor. It is implemented on top of the NiteCAPI.h function
       niteInitializeUserTrackerByDevice():
       Status create(openni::Device* pDevice = NULL)
       {
           if (pDevice == NULL)
           {
               return (Status)niteInitializeUserTracker(&m_UserTrackerHandle);
               // Pick a device
           }
           return (Status)niteInitializeUserTrackerByDevice(pDevice, &m_UserTrackerHandle);
       } */
    /************************************************************************/
    if (m_pUserTracker->create(&m_device) != nite::STATUS_OK)
    {
        return openni::STATUS_ERROR;
    }

    // ...
    return InitOpenGL(argc, argv);
}

openni::Status SampleViewer::Run()  // Does not return
{
    // Start the loop that keeps reading user depth data and skeleton joints;
    // the per-frame callback is Display().
    glutMainLoop();

    return openni::STATUS_OK;
}
```
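The `-device` handling in Init() is easy to get subtly wrong, so here is the same loop pulled out into a standalone function that can be tested without a sensor. This is a sketch: `parseDeviceUri` is a hypothetical helper name, and the `defaultUri` argument stands in for `openni::ANY_DEVICE`.

```cpp
#include <cassert>
#include <cstring>

// Returns the value that follows "-device" on the command line, or defaultUri
// when the flag is absent. Like the loop in SampleViewer::Init(), it stops at
// argc - 1, so argv[i + 1] always exists when the flag is found.
// parseDeviceUri is a hypothetical helper, not part of OpenNI or NiTE.
const char* parseDeviceUri(int argc, char** argv, const char* defaultUri)
{
    for (int i = 1; i < argc - 1; ++i)
    {
        if (std::strcmp(argv[i], "-device") == 0)
        {
            return argv[i + 1];
        }
    }
    return defaultUri;
}
```

In Init() this would read `const char* deviceUri = parseDeviceUri(argc, argv, openni::ANY_DEVICE);`. Because the loop stops at `argc - 1`, a trailing `-device` with no value is ignored instead of reading past the end of `argv`.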

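Next, the first half of the callback Display(): it reads a user-tracker frame, takes its depth image, converts the depth pixels to colors through an accumulative histogram (tinting each user's pixels with that user's color), and draws the result as an OpenGL texture.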


```cpp
void SampleViewer::Display()
{
    nite::UserTrackerFrameRef userTrackerFrame;
    openni::VideoFrameRef depthFrame;
    nite::Status rc = m_pUserTracker->readFrame(&userTrackerFrame);
    if (rc != nite::STATUS_OK)
    {
        printf("GetNextData failed\n");
        return;
    }

    depthFrame = userTrackerFrame.getDepthFrame();

    if (m_pTexMap == NULL)
    {
        // Texture map init
        m_nTexMapX = MIN_CHUNKS_SIZE(depthFrame.getVideoMode().getResolutionX(), TEXTURE_SIZE);
        m_nTexMapY = MIN_CHUNKS_SIZE(depthFrame.getVideoMode().getResolutionY(), TEXTURE_SIZE);
        m_pTexMap = new openni::RGB888Pixel[m_nTexMapX * m_nTexMapY];
    }

    const nite::UserMap& userLabels = userTrackerFrame.getUserMap();

    glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

    glMatrixMode(GL_PROJECTION);
    glPushMatrix();
    glLoadIdentity();
    glOrtho(0, GL_WIN_SIZE_X, GL_WIN_SIZE_Y, 0, -1.0, 1.0);

    if (depthFrame.isValid() && g_drawDepth)
    {
        const openni::DepthPixel* pDepth = (const openni::DepthPixel*)depthFrame.getData();
        int width = depthFrame.getWidth();
        int height = depthFrame.getHeight();
        // Calculate the accumulative histogram (the yellow display...)
        memset(m_pDepthHist, 0, MAX_DEPTH*sizeof(float));
        int restOfRow = depthFrame.getStrideInBytes() / sizeof(openni::DepthPixel) - width;

        unsigned int nNumberOfPoints = 0;
        for (int y = 0; y < height; ++y)
        {
            for (int x = 0; x < width; ++x, ++pDepth)
            {
                if (*pDepth != 0)
                {
                    m_pDepthHist[*pDepth]++;
                    nNumberOfPoints++;
                }
            }
            pDepth += restOfRow;
        }
        for (int nIndex=1; nIndex<MAX_DEPTH; nIndex++)
        {
            m_pDepthHist[nIndex] += m_pDepthHist[nIndex-1];
        }
        if (nNumberOfPoints)
        {
            for (int nIndex=1; nIndex<MAX_DEPTH; nIndex++)
            {
                m_pDepthHist[nIndex] = (unsigned int)(256 * (1.0f - (m_pDepthHist[nIndex] / nNumberOfPoints)));
            }
        }
    }

    memset(m_pTexMap, 0, m_nTexMapX*m_nTexMapY*sizeof(openni::RGB888Pixel));

    float factor[3] = {1, 1, 1};
    // check if we need to draw depth frame to texture
    if (depthFrame.isValid() && g_drawDepth)
    {
        const nite::UserId* pLabels = userLabels.getPixels();

        const openni::DepthPixel* pDepthRow = (const openni::DepthPixel*)depthFrame.getData();
        openni::RGB888Pixel* pTexRow = m_pTexMap + depthFrame.getCropOriginY() * m_nTexMapX;
        int rowSize = depthFrame.getStrideInBytes() / sizeof(openni::DepthPixel);

        for (int y = 0; y < depthFrame.getHeight(); ++y)
        {
            const openni::DepthPixel* pDepth = pDepthRow;
            openni::RGB888Pixel* pTex = pTexRow + depthFrame.getCropOriginX();

            for (int x = 0; x < depthFrame.getWidth(); ++x, ++pDepth, ++pTex, ++pLabels)
            {
                if (*pDepth != 0)
                {
                    if (*pLabels == 0)
                    {
                        // Background pixel (label 0 = no user)
                        if (!g_drawBackground)
                        {
                            factor[0] = factor[1] = factor[2] = 0;
                        }
                        else
                        {
                            factor[0] = Colors[colorCount][0];
                            factor[1] = Colors[colorCount][1];
                            factor[2] = Colors[colorCount][2];
                        }
                    }
                    else
                    {
                        // User pixel: the color is chosen from the user ID
                        factor[0] = Colors[*pLabels % colorCount][0];
                        factor[1] = Colors[*pLabels % colorCount][1];
                        factor[2] = Colors[*pLabels % colorCount][2];
                    }
                    // // Add debug lines - every 10cm
                    // else if ((*pDepth / 10) % 10 == 0)
                    // {
                    //     factor[0] = factor[2] = 0;
                    // }

                    int nHistValue = m_pDepthHist[*pDepth];
                    pTex->r = nHistValue*factor[0];
                    pTex->g = nHistValue*factor[1];
                    pTex->b = nHistValue*factor[2];

                    factor[0] = factor[1] = factor[2] = 1;
                }
            }

            pDepthRow += rowSize;
            pTexRow += m_nTexMapX;
        }
    }

    glTexParameteri(GL_TEXTURE_2D, GL_GENERATE_MIPMAP_SGIS, GL_TRUE);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
    glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB, m_nTexMapX, m_nTexMapY, 0, GL_RGB, GL_UNSIGNED_BYTE, m_pTexMap);

    // Display the OpenGL texture map
    glColor4f(1,1,1,1);

    glEnable(GL_TEXTURE_2D);
    glBegin(GL_QUADS);

    g_nXRes = depthFrame.getVideoMode().getResolutionX();
    g_nYRes = depthFrame.getVideoMode().getResolutionY();

    // upper left
    glTexCoord2f(0, 0);
    glVertex2f(0, 0);
    // upper right
    glTexCoord2f((float)g_nXRes/(float)m_nTexMapX, 0);
    glVertex2f(GL_WIN_SIZE_X, 0);
    // bottom right
    glTexCoord2f((float)g_nXRes/(float)m_nTexMapX, (float)g_nYRes/(float)m_nTexMapY);
    glVertex2f(GL_WIN_SIZE_X, GL_WIN_SIZE_Y);
    // bottom left
    glTexCoord2f(0, (float)g_nYRes/(float)m_nTexMapY);
    glVertex2f(0, GL_WIN_SIZE_Y);

    glEnd();
    glDisable(GL_TEXTURE_2D);
```
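The accumulative histogram is the least obvious part of this half. Each depth value d is mapped to 256 × (1 − N(≤ d) / N), where N(≤ d) is the number of valid (non-zero) pixels at depth d or nearer and N is the total number of valid pixels, so the nearest surfaces come out brightest. The sketch below reproduces that computation on a plain buffer; `depthToBrightness` is a hypothetical name, and the `row[x] < maxDepth` bounds check is an addition that the sample code does not have.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Builds the brightness lookup table used by Display(): an accumulative
// histogram of non-zero depths, mapped so that nearer pixels are brighter.
// 'stridePixels' is the row length in pixels (getStrideInBytes() /
// sizeof(DepthPixel) in the sample); it may exceed 'width' when rows are padded.
std::vector<float> depthToBrightness(const std::vector<std::uint16_t>& depth,
                                     int width, int height, int stridePixels,
                                     int maxDepth)
{
    assert(stridePixels >= width && maxDepth > 0);
    std::vector<float> hist(maxDepth, 0.0f);
    unsigned int numPoints = 0;

    // 1. Count the valid (non-zero) pixels at each depth value, skipping padding.
    for (int y = 0; y < height; ++y)
    {
        const std::uint16_t* row = depth.data() + y * stridePixels;
        for (int x = 0; x < width; ++x)
        {
            if (row[x] != 0 && row[x] < maxDepth)  // bounds check added here
            {
                hist[row[x]]++;
                numPoints++;
            }
        }
    }

    // 2. Turn the histogram into a running total (cumulative distribution).
    for (int i = 1; i < maxDepth; ++i)
    {
        hist[i] += hist[i - 1];
    }

    // 3. Map each depth to 256 * (1 - fraction of pixels at or nearer than it),
    //    truncated to an integer: near surfaces approach 255, the farthest give 0.
    if (numPoints)
    {
        for (int i = 1; i < maxDepth; ++i)
        {
            hist[i] = static_cast<float>(static_cast<unsigned int>(
                256 * (1.0f - hist[i] / numPoints)));
        }
    }
    return hist;
}
```

With a real frame, the buffer would come from `depthFrame.getData()` and `stridePixels` from `getStrideInBytes() / sizeof(openni::DepthPixel)`, exactly as Display() computes `restOfRow` and `rowSize`.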

With the depth image drawn, the second half of Display() goes through the users and tracks their skeleton joints:

```cpp
    // Read all users currently known to the user tracker
    const nite::Array<nite::UserData>& users = userTrackerFrame.getUsers();
    for (int i = 0; i < users.getSize(); ++i)
    {
        const nite::UserData& user = users[i];

        updateUserState(user, userTrackerFrame.getTimestamp());
        if (user.isNew())
        {
            // A user who just appeared in front of the Kinect: start skeleton tracking
            m_pUserTracker->startSkeletonTracking(user.getId());
            // Also start detecting POSE_CROSSED_HANDS for this user (used below as the exit gesture)
            m_pUserTracker->startPoseDetection(user.getId(), nite::POSE_CROSSED_HANDS);
        }
        else if (!user.isLost())
        {
            // The user is still tracked: draw the enabled overlays
            if (g_drawStatusLabel)
            {
                DrawStatusLabel(m_pUserTracker, user);
            }
            if (g_drawCenterOfMass)
            {
                DrawCenterOfMass(m_pUserTracker, user);
            }
            if (g_drawBoundingBox)
            {
                DrawBoundingBox(user);
            }

            // Once the skeleton state is SKELETON_TRACKED, draw the major joints
            // and the lines (limbs) between them
            if (users[i].getSkeleton().getState() == nite::SKELETON_TRACKED && g_drawSkeleton)
            {
                DrawSkeleton(m_pUserTracker, user);
            }
        }

        if (m_poseUser == 0 || m_poseUser == user.getId())
        {
            const nite::PoseData& pose = user.getPose(nite::POSE_CROSSED_HANDS);

            if (pose.isEntered())
            {
                // Start timer
                sprintf(g_generalMessage, "In exit pose. Keep it for %d second%s to exit\n", g_poseTimeoutToExit/1000, g_poseTimeoutToExit/1000 == 1 ? "" : "s");
                printf("Counting down %d second to exit\n", g_poseTimeoutToExit/1000);
                m_poseUser = user.getId();
                m_poseTime = userTrackerFrame.getTimestamp();
            }
            else if (pose.isExited())
            {
                memset(g_generalMessage, 0, sizeof(g_generalMessage));
                printf("Count-down interrupted\n");
                m_poseTime = 0;
                m_poseUser = 0;
            }
            else if (pose.isHeld())
            {
                // tick
                if (userTrackerFrame.getTimestamp() - m_poseTime > g_poseTimeoutToExit * 1000)
                {
                    printf("Count down complete. Exit...\n");
                    Finalize();
                    exit(2);
                }
            }
        }
    }

    if (g_drawFrameId)
    {
        DrawFrameId(userTrackerFrame.getFrameIndex());
    }

    if (g_generalMessage[0] != '\0')
    {
        char *msg = g_generalMessage;
        glColor3f(1.0f, 0.0f, 0.0f);
        glRasterPos2i(100, 20);
        glPrintString(GLUT_BITMAP_HELVETICA_18, msg);
    }

    // Swap the OpenGL display buffers
    glutSwapBuffers();
}
```
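The exit countdown is a small state machine spread across three `if` branches. Pulled out of the rendering code, it could look like the sketch below. `PoseEvent` and `ExitPoseTimer` are hypothetical stand-ins for the `nite::PoseData` queries (`isEntered()`, `isHeld()`, `isExited()`) and the `m_poseUser`/`m_poseTime` members; timestamps are assumed to be in microseconds, which is what the sample's `g_poseTimeoutToExit * 1000` comparison implies. Unlike the sample, a `Held` event without a preceding `Entered` is ignored.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for the three nite::PoseData queries used in Display().
enum class PoseEvent { Entered, Held, Exited, None };

// Mirrors the countdown in Display(): it starts when the pose is entered,
// resets when the pose is exited, and fires once the pose has been held for
// longer than timeoutMs. Timestamps are assumed to be in microseconds.
class ExitPoseTimer
{
public:
    explicit ExitPoseTimer(std::uint64_t timeoutMs) : m_timeoutMs(timeoutMs) {}

    // Feeds one user's pose event for one frame; returns true when the
    // program should exit.
    bool update(int userId, PoseEvent event, std::uint64_t timestampUs)
    {
        // Like the m_poseUser check in the sample: while a countdown runs,
        // only the user who started it can affect it. User ID 0 means "none".
        if (m_poseUser != 0 && m_poseUser != userId)
            return false;

        switch (event)
        {
        case PoseEvent::Entered:
            m_poseUser = userId;
            m_poseTime = timestampUs;
            return false;
        case PoseEvent::Exited:
            m_poseUser = 0;
            m_poseTime = 0;
            return false;
        case PoseEvent::Held:
            // Only counts if this user actually entered the pose first.
            return m_poseUser == userId && timestampUs >= m_poseTime &&
                   timestampUs - m_poseTime > m_timeoutMs * 1000;
        default:
            return false;
        }
    }

private:
    std::uint64_t m_timeoutMs;
    int m_poseUser = 0;
    std::uint64_t m_poseTime = 0;
};
```

Keeping this logic out of Display() means the timing rules can be checked without a sensor, and the render loop only has to act on the returned `bool` (in the sample, by calling `Finalize()` and `exit(2)`).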

The function that draws the skeleton joints and the lines (limbs) between them is:

```cpp
void DrawSkeleton(nite::UserTracker* pUserTracker, const nite::UserData& userData)
{
    DrawLimb(pUserTracker, userData.getSkeleton().getJoint(nite::JOINT_HEAD), userData.getSkeleton().getJoint(nite::JOINT_NECK), userData.getId() % colorCount);

    DrawLimb(pUserTracker, userData.getSkeleton().getJoint(nite::JOINT_LEFT_SHOULDER), userData.getSkeleton().getJoint(nite::JOINT_LEFT_ELBOW), userData.getId() % colorCount);
    DrawLimb(pUserTracker, userData.getSkeleton().getJoint(nite::JOINT_LEFT_ELBOW), userData.getSkeleton().getJoint(nite::JOINT_LEFT_HAND), userData.getId() % colorCount);

    DrawLimb(pUserTracker, userData.getSkeleton().getJoint(nite::JOINT_RIGHT_SHOULDER), userData.getSkeleton().getJoint(nite::JOINT_RIGHT_ELBOW), userData.getId() % colorCount);
    DrawLimb(pUserTracker, userData.getSkeleton().getJoint(nite::JOINT_RIGHT_ELBOW), userData.getSkeleton().getJoint(nite::JOINT_RIGHT_HAND), userData.getId() % colorCount);

    DrawLimb(pUserTracker, userData.getSkeleton().getJoint(nite::JOINT_LEFT_SHOULDER), userData.getSkeleton().getJoint(nite::JOINT_RIGHT_SHOULDER), userData.getId() % colorCount);

    DrawLimb(pUserTracker, userData.getSkeleton().getJoint(nite::JOINT_LEFT_SHOULDER), userData.getSkeleton().getJoint(nite::JOINT_TORSO), userData.getId() % colorCount);
    DrawLimb(pUserTracker, userData.getSkeleton().getJoint(nite::JOINT_RIGHT_SHOULDER), userData.getSkeleton().getJoint(nite::JOINT_TORSO), userData.getId() % colorCount);

    DrawLimb(pUserTracker, userData.getSkeleton().getJoint(nite::JOINT_TORSO), userData.getSkeleton().getJoint(nite::JOINT_LEFT_HIP), userData.getId() % colorCount);
    DrawLimb(pUserTracker, userData.getSkeleton().getJoint(nite::JOINT_TORSO), userData.getSkeleton().getJoint(nite::JOINT_RIGHT_HIP), userData.getId() % colorCount);

    DrawLimb(pUserTracker, userData.getSkeleton().getJoint(nite::JOINT_LEFT_HIP), userData.getSkeleton().getJoint(nite::JOINT_RIGHT_HIP), userData.getId() % colorCount);

    DrawLimb(pUserTracker, userData.getSkeleton().getJoint(nite::JOINT_LEFT_HIP), userData.getSkeleton().getJoint(nite::JOINT_LEFT_KNEE), userData.getId() % colorCount);
    DrawLimb(pUserTracker, userData.getSkeleton().getJoint(nite::JOINT_LEFT_KNEE), userData.getSkeleton().getJoint(nite::JOINT_LEFT_FOOT), userData.getId() % colorCount);

    DrawLimb(pUserTracker, userData.getSkeleton().getJoint(nite::JOINT_RIGHT_HIP), userData.getSkeleton().getJoint(nite::JOINT_RIGHT_KNEE), userData.getId() % colorCount);
    DrawLimb(pUserTracker, userData.getSkeleton().getJoint(nite::JOINT_RIGHT_KNEE), userData.getSkeleton().getJoint(nite::JOINT_RIGHT_FOOT), userData.getId() % colorCount);
}
```
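DrawSkeleton() spells out the same `DrawLimb()` call fifteen times. The skeleton can also be described as data, a table of joint pairs, with one loop doing the drawing. The sketch below uses a local `Joint` enum that mirrors the `nite::JointType` names (its numeric values are illustrative, not NiTE's) and a hypothetical `forEachLimb` helper. In the sample, the callback would map each entry to its `nite::JointType` and then call `DrawLimb()` with the two joints and `userData.getId() % colorCount`.

```cpp
#include <array>
#include <cassert>
#include <utility>

// Local mirror of the 15 nite::JointType names used by DrawSkeleton().
// The enumerator values here are illustrative, not NiTE's.
enum Joint
{
    HEAD, NECK,
    LEFT_SHOULDER, RIGHT_SHOULDER, LEFT_ELBOW, RIGHT_ELBOW, LEFT_HAND, RIGHT_HAND,
    TORSO,
    LEFT_HIP, RIGHT_HIP, LEFT_KNEE, RIGHT_KNEE, LEFT_FOOT, RIGHT_FOOT,
    JOINT_COUNT
};

// The same 15 bones DrawSkeleton() draws, in the same order, as data.
// As in the sample, the neck is only connected to the head.
const std::array<std::pair<Joint, Joint>, 15> kLimbs = {{
    {HEAD, NECK},
    {LEFT_SHOULDER, LEFT_ELBOW}, {LEFT_ELBOW, LEFT_HAND},
    {RIGHT_SHOULDER, RIGHT_ELBOW}, {RIGHT_ELBOW, RIGHT_HAND},
    {LEFT_SHOULDER, RIGHT_SHOULDER},
    {LEFT_SHOULDER, TORSO}, {RIGHT_SHOULDER, TORSO},
    {TORSO, LEFT_HIP}, {TORSO, RIGHT_HIP},
    {LEFT_HIP, RIGHT_HIP},
    {LEFT_HIP, LEFT_KNEE}, {LEFT_KNEE, LEFT_FOOT},
    {RIGHT_HIP, RIGHT_KNEE}, {RIGHT_KNEE, RIGHT_FOOT},
}};

// Calls draw(a, b) once per bone; in the sample, draw would wrap DrawLimb().
template <typename DrawFn>
void forEachLimb(DrawFn draw)
{
    for (const auto& limb : kLimbs)
        draw(limb.first, limb.second);
}
```

With the bones in a table, adding or removing a limb is a one-line data change, and the same table can drive other per-limb work such as measuring bone lengths.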

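To sum up, locating and tracking skeleton joints takes the following steps:

1. Initialize OpenNI and NiTE;

2. Create a nite::UserTracker object with new;

3. Bind it to the opened device: m_pUserTracker->create(&m_device);

4. For each new user, start skeleton tracking: m_pUserTracker->startSkeletonTracking(user.getId());

5. Start detecting whether that user makes POSE_CROSSED_HANDS (the exit gesture in this sample): m_pUserTracker->startPoseDetection(user.getId(), nite::POSE_CROSSED_HANDS);

6. Finally, read the skeleton joint coordinates every frame: call m_pUserTracker->readFrame(&userTrackerFrame), then user.getSkeleton().getJoint(...) for each tracked user. There are 15 joints: JOINT_HEAD, JOINT_NECK, JOINT_LEFT_SHOULDER, JOINT_RIGHT_SHOULDER, JOINT_LEFT_ELBOW, JOINT_RIGHT_ELBOW, JOINT_LEFT_HAND, JOINT_RIGHT_HAND, JOINT_TORSO, JOINT_LEFT_HIP, JOINT_RIGHT_HIP, JOINT_LEFT_KNEE, JOINT_RIGHT_KNEE, JOINT_LEFT_FOOT and JOINT_RIGHT_FOOT.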
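Steps 4 to 6 are all driven by the per-user branch in Display(). As a sketch, that decision can be isolated from NiTE and OpenGL. `FakeUser`, `Action` and `decide` are hypothetical names, and `FakeUser` keeps only the flags the loop reads: `isNew()`, `isLost()` and whether the skeleton state is `SKELETON_TRACKED`.

```cpp
#include <cassert>

// Hypothetical, simplified stand-in for nite::UserData: just the flags the
// Display() loop reads.
struct FakeUser
{
    int id;
    bool isNew;
    bool isLost;
    bool skeletonTracked;  // getSkeleton().getState() == SKELETON_TRACKED
};

// What the loop does with one user in one frame.
enum class Action { StartTracking, DrawSkeleton, DrawInfoOnly, Ignore };

// Mirrors the per-user branch in Display(): new users get
// startSkeletonTracking() (plus pose detection); users still present are
// drawn, with the skeleton only once it is tracked; lost users are skipped.
Action decide(const FakeUser& u)
{
    if (u.isNew)
        return Action::StartTracking;
    if (u.isLost)
        return Action::Ignore;
    return u.skeletonTracked ? Action::DrawSkeleton : Action::DrawInfoOnly;
}
```

Note that a brand-new user is never drawn in the frame where tracking starts; the skeleton appears only in later frames, once its state reaches `SKELETON_TRACKED`.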

Finally, some notes on the types and functions involved in skeleton tracking:

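First: in NITE1 we had to strike the "surrender" (PSI) pose before the skeleton could be tracked; NITE2 adds a second pose, "arms crossed over the chest", which I think is easier to make. The pose types are the values of enum nite::PoseType: POSE_PSI and POSE_CROSSED_HANDS. Note that in the UserViewer sample, skeleton tracking starts without any pose, and POSE_CROSSED_HANDS only serves as the exit gesture.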

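Second: the skeleton state. Check the state returned by getSkeleton().getState() and handle the joints accordingly; the sample draws the skeleton only when the state is nite::SKELETON_TRACKED.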
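A minimal sketch of that state handling, assuming the `nite::SkeletonState` enumerators are named as below (check `NiteEnums.h` in your NiTE installation; the numeric values here are illustrative). `describe` and `canUseJoints` are hypothetical helpers: the first produces text for an on-screen status label, the second is the same check Display() performs before calling DrawSkeleton().

```cpp
#include <cassert>
#include <string>

// Local mirror of nite::SkeletonState; names assumed from NiteEnums.h,
// values illustrative. Only SKELETON_TRACKED means the joints are usable.
enum SkeletonState
{
    SKELETON_NONE,
    SKELETON_CALIBRATING,
    SKELETON_TRACKED,
    SKELETON_CALIBRATION_ERROR_NOT_IN_POSE,
    SKELETON_CALIBRATION_ERROR_HANDS,
    SKELETON_CALIBRATION_ERROR_HEAD,
    SKELETON_CALIBRATION_ERROR_LEGS,
    SKELETON_CALIBRATION_ERROR_TORSO
};

// Status text for an on-screen label; every calibration error maps to one message.
std::string describe(SkeletonState s)
{
    switch (s)
    {
    case SKELETON_NONE:        return "Not tracking";
    case SKELETON_CALIBRATING: return "Calibrating";
    case SKELETON_TRACKED:     return "Tracking";
    default:                   return "Calibration failed";
    }
}

// The gate Display() uses before drawing the skeleton.
bool canUseJoints(SkeletonState s) { return s == SKELETON_TRACKED; }
```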

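Third: the most important piece is of course nite::UserTrackerFrameRef, which I call the "user tracking snapshot". Its documentation describes the class like this: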

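"Snapshot of the User Tracker algorithm. It holds all the users identified at this time, including their position, skeleton and such, as well as the floor plane." In other words, one frame bundles everything the tracker knows at that instant; Display() reads the users (getUsers()), the user label map (getUserMap()), the depth frame (getDepthFrame()), the timestamp and the frame index from it.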
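The floor plane is handy, for example, for measuring how high a joint is above the ground. Assuming the plane is given as a point p₀ on it and a normal vector n (as I understand it, this is the form of the nite::Plane returned by the frame's getFloor()), the signed distance of a point p is d = (p − p₀) · n / ‖n‖. `Vec3` and `distanceToPlane` below are hypothetical, and the millimetre unit is an assumption.

```cpp
#include <cassert>
#include <cmath>

// Minimal 3D vector; coordinates assumed to be in millimetres.
struct Vec3 { float x, y, z; };

// Signed distance from point p to the plane through 'origin' with normal 'n':
// positive on the side the normal points to. Dividing by |n| keeps the result
// correct even when the normal is not unit length.
float distanceToPlane(const Vec3& p, const Vec3& origin, const Vec3& n)
{
    const float len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
    const float dot = (p.x - origin.x) * n.x +
                      (p.y - origin.y) * n.y +
                      (p.z - origin.z) * n.z;
    return dot / len;
}
```

For a floor 1 m below the sensor with an upward normal, a head joint at y = 700 mm comes out about 1700 mm above the floor; a near-zero result for a foot joint would suggest the user is standing on it.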

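Fourth: not many low-level user-tracking functions sit behind the wrapper; like those for hand tracking, they are easy to understand. Here are the UserTracker declarations from NiteCAPI.h: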

```cpp
// UserTracker
NITE_API NiteStatus niteInitializeUserTracker(NiteUserTrackerHandle*);
NITE_API NiteStatus niteInitializeUserTrackerByDevice(void*, NiteUserTrackerHandle*);
NITE_API NiteStatus niteShutdownUserTracker(NiteUserTrackerHandle);

NITE_API NiteStatus niteStartSkeletonTracking(NiteUserTrackerHandle, NiteUserId);
NITE_API void niteStopSkeletonTracking(NiteUserTrackerHandle, NiteUserId);
NITE_API bool niteIsSkeletonTracking(NiteUserTrackerHandle, NiteUserId);

NITE_API NiteStatus niteSetSkeletonSmoothing(NiteUserTrackerHandle, float);
NITE_API NiteStatus niteGetSkeletonSmoothing(NiteUserTrackerHandle, float*);

NITE_API NiteStatus niteStartPoseDetection(NiteUserTrackerHandle, NiteUserId, NitePoseType);
NITE_API void niteStopPoseDetection(NiteUserTrackerHandle, NiteUserId, NitePoseType);
NITE_API void niteStopAllPoseDetection(NiteUserTrackerHandle, NiteUserId);

NITE_API NiteStatus niteRegisterUserTrackerCallbacks(NiteUserTrackerHandle, NiteUserTrackerCallbacks*, void*);
NITE_API void niteUnregisterUserTrackerCallbacks(NiteUserTrackerHandle, NiteUserTrackerCallbacks*);

NITE_API NiteStatus niteReadUserTrackerFrame(NiteUserTrackerHandle, NiteUserTrackerFrame**);
```
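These C functions work on an opaque handle that has to be created and later shut down; the C++ nite::UserTracker keeps such a handle in `m_UserTrackerHandle` and fills it in `create()`. If you call the C API directly, an RAII wrapper makes the pairing of `niteInitializeUserTracker` and `niteShutdownUserTracker` automatic. The sketch below uses hypothetical fake functions with the same shape so that it runs without NiTE; swapping in the real calls (and checking their `NiteStatus`) is left as an assumption.

```cpp
#include <cassert>

// --- Hypothetical C-style API shaped like niteInitializeUserTracker /
// --- niteShutdownUserTracker: an opaque handle filled via an out-parameter.
struct FakeTracker {};
typedef FakeTracker* FakeTrackerHandle;

static int g_liveTrackers = 0;  // handles created but not yet shut down

int fakeInitializeTracker(FakeTrackerHandle* outHandle)
{
    *outHandle = new FakeTracker;
    ++g_liveTrackers;
    return 0;  // 0 means success in this sketch
}

void fakeShutdownTracker(FakeTrackerHandle handle)
{
    delete handle;
    --g_liveTrackers;
}

// --- RAII owner: the handle is created in the constructor and shut down
// --- exactly once in the destructor, whenever the object goes out of scope.
class TrackerGuard
{
public:
    TrackerGuard() { m_ok = (fakeInitializeTracker(&m_handle) == 0); }
    ~TrackerGuard() { if (m_ok) fakeShutdownTracker(m_handle); }

    // Copying is disabled so two objects never shut down the same handle.
    TrackerGuard(const TrackerGuard&) = delete;
    TrackerGuard& operator=(const TrackerGuard&) = delete;

    bool isValid() const { return m_ok; }
    FakeTrackerHandle get() const { return m_handle; }

private:
    FakeTrackerHandle m_handle = nullptr;
    bool m_ok = false;
};
```

Because shutdown lives in the destructor, an early `return` from an Init()-style function cannot leak the tracker.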

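Summary: NITE2 boils down to just two features, hand tracking and user skeleton tracking, but both are now wrapped more simply, so it is easier to get the hand and skeleton data we need. What you do with that data afterwards is up to you and is no longer NITE2's business. So let's start writing our own programs. After the previous three posts and this one, I hope you too can get started easily with the OpenNI2 SDK and NITE2~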