1、redis mongodb mysql的python包都提供了批量插入操作,但需要自己在外部对一个例如1000 001个任务进行分解成每1000个为1个小批次,还要处理整除批次数量后的余数,如果做一次还可以,如果是很多任务多要这样做,有点麻烦。

例如redis的,mongo的也一样,需要在外部自己准备一个批量列表,循环完后不要遗漏了没达到批次数量的任务。

city_items是一个迭代器,长度有点大,一下子不好分均匀,每次为了划割批次和兼容余数都要写一坨,如下

for city_item in city_items: task_dict = OrderedDict() task_dict['city_cn'] = city_item.get('city') task_dict['city_en'] = city_item.get('cityEn') task_dict['is_international'] = is_international task_dict['url'] = url_city self.logger.debug(task_dict) task_dict_list.append(task_dict) if len(task_dict_list) == 2000: self.logger.debug('执行2000个city任务插入') with self.redis_local_db7.pipeline(transaction=False) as p: for task_dict in task_dict_list: p.sadd(self.start_urls_key, json.dumps(task_dict)) p.execute() task_dict_list.clear() task_dict_list_lenth = len(task_dict_list) if task_dict_list_lenth > 0: self.logger.debug('执行{}个city任务插入'.format(task_dict_list_lenth)) with self.redis_local_db7.pipeline(transaction=False) as p: for task_dict in task_dict_list: p.sadd(self.start_urls_key, json.dumps(task_dict)) p.execute() task_dict_list.clear() self.logger.debug(total_city_count)

2、更简单的操作应该是这样,在类外只管提交单个任务就可以了,只需要调用一个提交任务的api,在类里面自动聚合多个任务成一个批次。想要处理速度快,一定要是一次批量插入多个任务。,而不是使用多线程,每个线程每次插入一个任务,这两种效率可是相差很大的,尤其是远程公网ip写入。

发出三大数据库的简单批量操作api,使用方法在unittest里面。里面实现的批量操作都是基于redis mongo mysql自身的批量操作api。

# coding=utf8 """ @author:Administrator @file: bulk_operation.py @time: 2018/08/27 三大数据库的更简单的批次操作 """ import atexit from typing import Union import abc import time from queue import Queue, Empty import unittest from pymongo import UpdateOne, InsertOne, collection, MongoClient import redis from app.utils_ydf import torndb_for_python3 from app.utils_ydf import LoggerMixin, decorators, LogManager, MongoMixin # NOQA class RedisOperation: """redis的操作,此类作用主要是规范下格式而已""" def __init__(self, operation_name: str, key: str, value: str): """ :param operation_name: redis操作名字,例如 sadd lpush等 :param key: redis的键 :param value: reids键的值 """ self.operation_name = operation_name self.key = key self.value = value class BaseBulkHelper(LoggerMixin, metaclass=abc.ABCMeta): """批量操纵抽象基类""" bulk_helper_map = {} def __new__(cls, base_object, *args, **kwargs): if str(base_object) not in cls.bulk_helper_map: # 加str是由于有一些类型的实例不能被hash作为字典的键 self = super().__new__(cls) return self else: return cls.bulk_helper_map[str(base_object)] def __init__(self, base_object: Union[collection.Collection, redis.Redis, torndb_for_python3.Connection], threshold: int = 100, is_print_log: bool = True): if str(base_object) not in self.bulk_helper_map: self._custom_init(base_object, threshold, is_print_log) self.bulk_helper_map[str(base_object)] = self def _custom_init(self, base_object, threshold, is_print_log): self.base_object = base_object self._threshold = threshold self._is_print_log = is_print_log self._to_be_request_queue = Queue(threshold * 2) self._current_time = time.time() atexit.register(self.__do_something_before_exit) # 程序自动结束前执行注册的函数 self._main_thread_has_exit = False self.__excute_bulk_operation_in_other_thread() self.logger.debug(f'{self.__class__}被实例化') def add_task(self, base_operation: Union[UpdateOne, InsertOne, RedisOperation, tuple]): """添加单个需要执行的操作,程序自动聚合陈批次操作""" self._to_be_request_queue.put(base_operation) @decorators.tomorrow_threads(10) def __excute_bulk_operation_in_other_thread(self): while True: if self._to_be_request_queue.qsize() >= self._threshold or time.time() > self._current_time + 10: self._do_bulk_operation() if self._main_thread_has_exit and self._to_be_request_queue.qsize() == 0: break time.sleep(10 ** -4) @abc.abstractmethod def _do_bulk_operation(self): raise NotImplementedError def __do_something_before_exit(self): self._main_thread_has_exit = True self.logger.critical(f'程序自动结束前执行 [{str(self.base_object)}] 剩余的任务') class MongoBulkWriteHelper(BaseBulkHelper): """ 一个更简单的mongo批量插入,可以直接提交一个操作,自动聚合多个操作为一个批次再插入,速度快了n倍。 """ def _do_bulk_operation(self): if self._to_be_request_queue.qsize() > 0: t_start = time.time() count = 0 request_list = [] for _ in range(self._threshold): try: request = self._to_be_request_queue.get_nowait() count += 1 request_list.append(request) except Empty: pass if request_list: self.base_object.bulk_write(request_list, ordered=False) if self._is_print_log: self.logger.info(f'[{str(self.base_object)}] 批量插入的任务数量是 {count} 消耗的时间是 {round(time.time() - t_start,6)}') self._current_time = time.time() class RedisBulkWriteHelper(BaseBulkHelper): """redis批量插入,比自带的更方便操作非整除批次""" def _do_bulk_operation(self): if self._to_be_request_queue.qsize() > 0: t_start = time.time() count = 0 pipeline = self.base_object.pipeline() for _ in range(self._threshold): try: request = self._to_be_request_queue.get_nowait() count += 1 except Empty: pass else: getattr(pipeline, request.operation_name)(request.key, request.value) pipeline.execute() pipeline.reset() if self._is_print_log: self.logger.info(f'[{str(self.base_object)}] 批量插入的任务数量是 {count} 消耗的时间是 {round(time.time() - t_start,6)}') self._current_time = time.time() class MysqlBulkWriteHelper(BaseBulkHelper): """mysql批量操作""" def __new__(cls, base_object: torndb_for_python3.Connection, *, sql_short: str = None, threshold: int = 100, is_print_log: bool = True): # print(cls.bulk_helper_map) if str(base_object) + sql_short not in cls.bulk_helper_map: # 加str是由于有一些类型的实例不能被hash作为字典的键 self = object.__new__(cls) return self else: return cls.bulk_helper_map[str(base_object) + sql_short] def __init__(self, base_object: torndb_for_python3.Connection, *, sql_short: str = None, threshold: int = 100, is_print_log: bool = True): if str(base_object) + sql_short not in self.bulk_helper_map: super()._custom_init(base_object, threshold, is_print_log) self.sql_short = sql_short self.bulk_helper_map[str(self.base_object) + sql_short] = self def _do_bulk_operation(self): if self._to_be_request_queue.qsize() > 0: t_start = time.time() count = 0 values_list = [] for _ in range(self._threshold): try: request = self._to_be_request_queue.get_nowait() count += 1 values_list.append(request) except Empty: pass if values_list: real_count = self.base_object.executemany_rowcount(self.sql_short, values_list) if self._is_print_log: self.logger.info(f'【{str(self.base_object)}】 批量插入的任务数量是 {real_count} 消耗的时间是 {round(time.time() - t_start,6)}') self._current_time = time.time() class _Test(unittest.TestCase, LoggerMixin): @unittest.skip def test_mongo_bulk_write(self): # col = MongoMixin().mongo_16_client.get_database('test').get_collection('ydf_test2') col = MongoClient().get_database('test').get_collection('ydf_test2') with decorators.TimerContextManager(): for i in range(50000 + 13): # time.sleep(0.01) item = {'_id': i, 'field1': i * 2} mongo_helper = MongoBulkWriteHelper(col, 10000, is_print_log=True) mongo_helper.add_task(UpdateOne({'_id': item['_id']}, {'$set': item}, upsert=True)) @unittest.skip def test_redis_bulk_write(self): with decorators.TimerContextManager(): r = redis.Redis(password='123456') # redis_helper = RedisBulkWriteHelper(r, 100) # 放在外面可以 for i in range(100003): # time.sleep(0.2) redis_helper = RedisBulkWriteHelper(r, 2000) # 也可以在这里无限实例化 redis_helper.add_task(RedisOperation('sadd', 'key1', str(i))) # @unittest.skip def test_mysql_bulk_write(self): mysql_conn = torndb_for_python3.Connection(host='localhost', database='test', user='root', password='123456', charset='utf8') with decorators.TimerContextManager(): # mysql_helper = MysqlBulkWriteHelper(mysql_conn, sql_short='INSERT INTO test.table_2 (column_1, column_2) VALUES (%s,%s)', threshold=200) # 最好写在循环外 for i in range(100000 + 9): mysql_helper = MysqlBulkWriteHelper(mysql_conn, sql_short='INSERT INTO test.table_2 (column_1, column_2) VALUES (%s,%s)', threshold=20000, ) # 支持无限实例化,如果不小心写在循环里面了也没关系 mysql_helper.add_task((i, i * 2)) if __name__ == '__main__': unittest.main()

三种数据库批量操作方式相同,调用方式就是,调用add_task方法,提交一个任务就可以了。

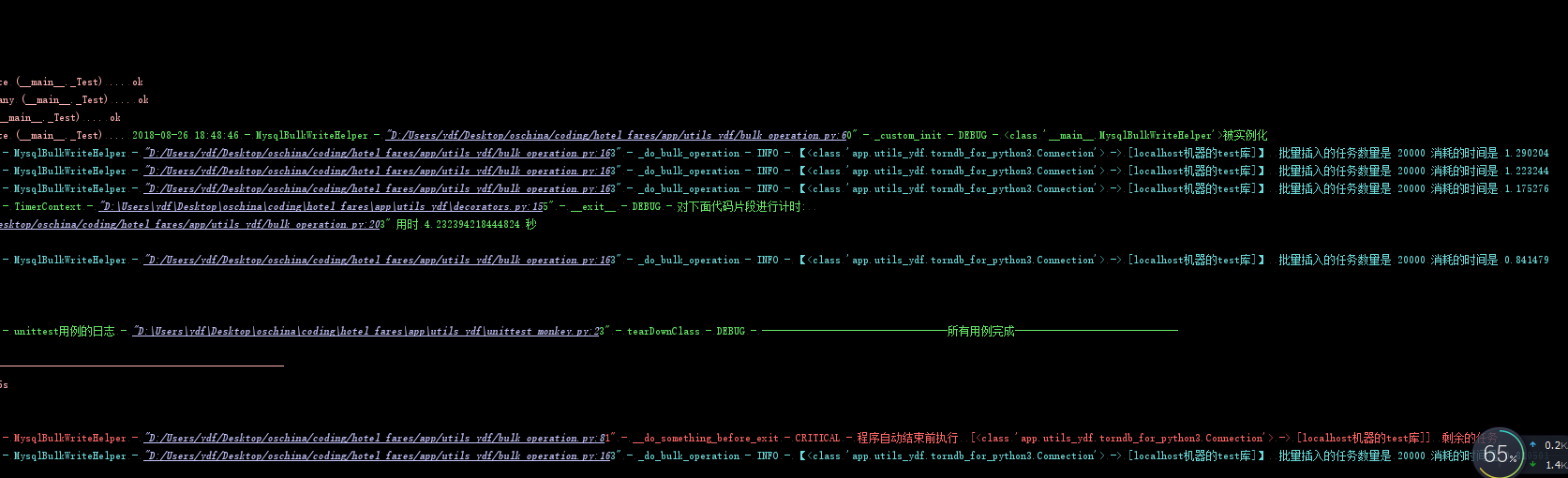

mysql批量操作的截图

3、代码里面主要是使用了模板模式、享元模式、代理模式这三种。

模板模式是节约代码,用于在扩展其他数据库种类批量操作,少写一些方法。可以使用策略模式代替。

享元模式,是不需要使用者很小心在一个合适的代码位置初始化,然后一直使用这个对象。可以支持在任意位置包括for循环里面初始化实例。

代理模式,用户不需要直接使用三大数据库的官方pipeline excutemany bulkwrite方法,对象里面自己来调用这些官方接口。