Problems to solve

1) Fault tolerance

2) Latency

3) Monitoring

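Overview

Official site: http://flume.apache.org

Flume, originally provided by Cloudera, is a distributed, highly reliable, and highly available service for efficiently collecting, aggregating, and moving large volumes of log data in distributed environments.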

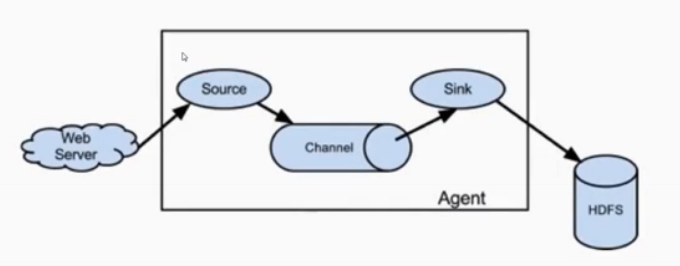

An agent is made up of three components: a source, a channel, and a sink.

Design goals:

Reliability

Scalability

Manageability

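Comparison with similar products:

(Recommended) Flume: Cloudera/Apache, Java

Scribe: Facebook, C/C++, no longer maintained

Chukwa: Yahoo/Apache, Java, no longer maintained

Fluentd: Ruby

(Recommended) Logstash: part of the ELK stack (Elasticsearch, Logstash, Kibana)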

Flume history

Cloudera 0.9.2: Flume-OG

FLUME-728: Flume-NG, moved to Apache

2012.7: 1.0

2015.5: 1.6

~ 1.7

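Flume architecture and core components

1) Source: collects data

2) Channel: aggregates and buffers events (the pipe between source and sink)

3) Sink: writes data out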
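The three components can be illustrated with a toy, single-threaded Python model. This is only a sketch: `MemoryChannel` and `run_agent` are hypothetical names, not Flume's Java API, and a real agent runs its source and sink concurrently and moves events through channel transactions. The default capacity of 100 mirrors the default of Flume's memory channel, and each event is modeled as headers plus a byte body, as in Flume.

```python
from collections import deque


class MemoryChannel:
    """Toy bounded buffer standing in for Flume's memory channel."""

    def __init__(self, capacity=100):
        self.capacity = capacity
        self.events = deque()

    def put(self, event):
        # A full channel refuses the event; a real source would retry later.
        if len(self.events) >= self.capacity:
            return False
        self.events.append(event)
        return True

    def take(self):
        # Oldest event first; None when the channel is empty.
        return self.events.popleft() if self.events else None


def run_agent(lines, capacity=100):
    """Push lines through source -> channel -> sink, one stage after another."""
    channel = MemoryChannel(capacity)
    rejected = []
    for line in lines:
        # Source: wrap each collected line as an event (headers + byte body).
        event = {"headers": {}, "body": line.encode("utf-8")}
        if not channel.put(event):
            rejected.append(line)
    delivered = []
    # Sink: drain the channel and "output" each event body.
    while (event := channel.take()) is not None:
        delivered.append(event["body"].decode("utf-8"))
    return delivered, rejected


if __name__ == "__main__":
    print(run_agent(["a", "b", "c"], capacity=2))  # (['a', 'b'], ['c'])
```

With a capacity of 2 the third line is refused, which is the back-pressure a source sees when the sink cannot keep up.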

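Flume installation prerequisites

1) Java 1.7 or later

2) Sufficient memory for the sources, channels, and sinks you configure

3) Sufficient disk space for the channels and sinks you configure

4) Read/write permission on the directories used by the agent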

Installation

1. Install the JDK

Download the JDK.

Extract it to ~/app.

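Add Java to the system environment variables in ~/.bash_profile (note that the variable is PATH in upper case and that Linux separates PATH entries with ":", not ";"):

```shell
export JAVA_HOME=/home/hadoop/app/java1.8
export PATH=$JAVA_HOME/bin:$PATH
```

Run source ~/.bash_profile so the configuration takes effect.

Verify: java -version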

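2. Install Flume

Download the CDH 5.7 build.

Extract it to ~/app.

Add Flume to the system environment variables in ~/.bash_profile:

```shell
export FLUME_HOME=/home/hadoop/app/apache-flume-1.6.0-cdh5.7.0-b
export PATH=$FLUME_HOME/bin:$PATH
```

Run source ~/.bash_profile so the configuration takes effect.

Set JAVA_HOME in $FLUME_HOME/conf/flume-env.sh, pointing it at the JDK installed in step 1: export JAVA_HOME=/home/hadoop/app/jdk1.8.0_144

Verify: flume-ng version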
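After running source ~/.bash_profile, one way to confirm that both exports took effect is to inspect PATH from the same shell. The helper below is a hypothetical convenience, not part of the JDK or Flume; java -version and flume-ng version remain the real checks. It also shows why the separator matters: PATH is split on os.pathsep, which is ":" on Linux, so an entry joined with ";" is never found.

```python
import os


def bin_on_path(home_var, env=None):
    """Return True if $home_var/bin is one of the entries in PATH.

    PATH is split with os.pathsep, which is ':' on Linux; that is why
    'export PATH=$JAVA_HOME/bin:$PATH' works and a ';' separator does not.
    """
    env = os.environ if env is None else env
    home = env.get(home_var)
    if not home:
        return False
    wanted = os.path.normpath(os.path.join(home, "bin"))
    entries = env.get("PATH", "").split(os.pathsep)
    # Skip empty entries produced by '::' or a trailing separator.
    return any(os.path.normpath(p) == wanted for p in entries if p)


if __name__ == "__main__":
    for var in ("JAVA_HOME", "FLUME_HOME"):
        print(var, "bin on PATH:", bin_on_path(var))
```

Passing an explicit env dict makes the helper easy to try without touching the real environment.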

Flume on Windows: exec-memory-logger (exec source, memory channel, logger sink)

```properties
exec-memory-logger.sources = exec-source
exec-memory-logger.sinks = logger-sink
exec-memory-logger.channels = memory-channel
exec-memory-logger.sources.exec-source.type = exec
exec-memory-logger.sources.exec-source.command =tail.exe -f f:applog.txt
exec-memory-logger.channels.memory-channel.type = memory
exec-memory-logger.sinks.logger-sink.type = logger
exec-memory-logger.sources.exec-source.channels = memory-channel
exec-memory-logger.sinks.logger-sink.channel = memory-channel
```
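Flume wires an agent together purely through property names of the form `<agent>.<sources|channels|sinks>.<component>.<property>`: a source lists its targets under `channels` (plural, space-separated), while a sink names exactly one `channel` (singular). A misspelled component name is easy to miss in a long file, so the sketch below is a hypothetical helper that checks this wiring for the exec-memory-logger configuration. Its parser is deliberately simplified: it handles only `key = value` lines and `#`/`!` comments, not the full Java .properties syntax (continuation lines, escapes, `:` separators).

```python
# Simplified checker for Flume agent wiring (hypothetical helper, not a Flume tool).

EXEC_MEMORY_LOGGER = """
exec-memory-logger.sources = exec-source
exec-memory-logger.sinks = logger-sink
exec-memory-logger.channels = memory-channel
exec-memory-logger.sources.exec-source.type = exec
exec-memory-logger.sources.exec-source.command =tail.exe -f f:applog.txt
exec-memory-logger.channels.memory-channel.type = memory
exec-memory-logger.sinks.logger-sink.type = logger
exec-memory-logger.sources.exec-source.channels = memory-channel
exec-memory-logger.sinks.logger-sink.channel = memory-channel
"""


def parse_properties(text):
    """Parse 'key = value' lines into a dict, skipping blanks and comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def check_agent(props, agent):
    """List wiring problems for one agent; an empty list means none were found."""
    def declared(kind):
        # e.g. '<agent>.sources = s1 s2' -> ['s1', 's2']
        return props.get(f"{agent}.{kind}", "").split()

    problems = []
    sources, channels, sinks = declared("sources"), declared("channels"), declared("sinks")
    # Every declared component needs a type.
    for kind, names in (("sources", sources), ("channels", channels), ("sinks", sinks)):
        for name in names:
            if f"{agent}.{kind}.{name}.type" not in props:
                problems.append(f"{kind}.{name} has no type")
    # A source may feed several channels (plural key, space-separated).
    for src in sources:
        targets = props.get(f"{agent}.sources.{src}.channels", "").split()
        if not targets:
            problems.append(f"source {src} has no channels")
        for ch in targets:
            if ch not in channels:
                problems.append(f"source {src} uses undeclared channel {ch}")
    # A sink drains exactly one channel (singular key).
    for sink in sinks:
        ch = props.get(f"{agent}.sinks.{sink}.channel", "").strip()
        if not ch:
            problems.append(f"sink {sink} has no channel")
        elif ch not in channels:
            problems.append(f"sink {sink} uses undeclared channel {ch}")
    return problems


if __name__ == "__main__":
    print(check_agent(parse_properties(EXEC_MEMORY_LOGGER), "exec-memory-logger"))  # []
```

Changing the sink's channel to a name that is not declared under `exec-memory-logger.channels` makes the checker report it.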

Start the agent:

```bat
flume-ng.cmd agent -conf ../conf -conf-file ../conf/streaming_project.conf -name exec-memory-logger -property flume.root.logger=INFO,console
```

Flume on Windows: exec-memory-kafka (exec source, memory channel, Kafka sink)

```properties
exec-memory-kafka.sources = exec-source
exec-memory-kafka.sinks = kafka-sink
exec-memory-kafka.channels = memory-channel
exec-memory-kafka.sources.exec-source.type = exec
exec-memory-kafka.sources.exec-source.command =tail.exe -f f:applog.txt
exec-memory-kafka.channels.memory-channel.type = memory
exec-memory-kafka.sinks.kafka-sink.type = org.apache.flume.sink.kafka.KafkaSink
exec-memory-kafka.sinks.kafka-sink.brokerList =192.168.9.196:9092,192.168.9.197:9092,192.168.9.198:9092
exec-memory-kafka.sinks.kafka-sink.topic =test1
exec-memory-kafka.sinks.kafka-sink.batchSize=4
exec-memory-kafka.sinks.kafka-sink.requiredAcks=1
exec-memory-kafka.sources.exec-source.channels = memory-channel
exec-memory-kafka.sinks.kafka-sink.channel = memory-channel
```

Start the agent:

```bat
flume-ng.cmd agent -conf ../conf -conf-file ../conf/exec-memory-kafka.conf -name exec-memory-kafka -property flume.root.logger=INFO,console
```
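In the Kafka configuration, batchSize=4 controls how many events the sink handles per batch, and requiredAcks=1 asks Kafka to acknowledge once the partition leader has written the messages. The single-threaded sketch below assumes the usual take/commit/rollback cycle of a Flume sink; `FlakyProducer` and `process_once` are hypothetical names, and a `deque` stands in for the memory channel. It illustrates the fault-tolerance point: a failed send does not lose data, because the events go back to the head of the channel and the next cycle retries them in order.

```python
from collections import deque


class FlakyProducer:
    """Hypothetical stand-in for a Kafka producer that fails its first `failures` sends."""

    def __init__(self, failures=0):
        self.failures = failures
        self.sent_batches = []

    def send(self, batch):
        if self.failures > 0:
            self.failures -= 1
            raise ConnectionError("broker unavailable")
        self.sent_batches.append(list(batch))


def process_once(channel, producer, batch_size=4):
    """One sink cycle: take up to batch_size events, send them, then commit or roll back.

    Returns the number of events committed (0 on rollback or when the channel is empty).
    """
    batch = []
    while len(batch) < batch_size and channel:
        batch.append(channel.popleft())  # take inside the "transaction"
    if not batch:
        return 0
    try:
        producer.send(batch)
    except ConnectionError:
        # Rollback: return the events to the head of the channel in their original order.
        channel.extendleft(reversed(batch))
        return 0
    return len(batch)  # commit: the events have now left the channel


if __name__ == "__main__":
    channel = deque(f"line-{i}" for i in range(10))
    producer = FlakyProducer(failures=1)
    print([process_once(channel, producer) for _ in range(5)])  # [0, 4, 4, 2, 0]
```

The first cycle fails and rolls back, after which the same ten lines are delivered in batches of 4, 4, and 2.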