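A purely static resource configuration defines listeners, clusters and secrets explicitly in the configuration file, through the static_resources parameter. The data type of each parameter is shown in the configuration below.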

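◼ listeners configures the list of purely static listeners. clusters defines the list of available clusters and the endpoints of each cluster. The optional secrets defines settings such as the digital certificates used in TLS communication.

◼ In practice, the admin and static_resources parameters together are enough for a minimal resource configuration, and admin can even be omitted.

```json
{
  "listeners": [],
  "clusters": [],
  "secrets": []
}
```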

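1. To start an instance from Envoy's prebuilt Docker image, you must supply your own configuration file. You can either bake it into a new image or provide it to the container as a volume. The test below provides the file as a volume: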

```shell
docker pull envoyproxy/envoy-alpine:v1.20.0
docker run --name envoy-test -p 80:80 -v /envoy.yaml:/etc/envoy/envoy.yaml envoyproxy/envoy-alpine:v1.20.0
```

1. Create a dedicated working directory for the Envoy container, for example /applications/envoy/.
2. Save the listener example shown earlier as envoy.yaml in the echo-demo/ subdirectory of that directory. The full file path is /applications/envoy/echo-demo/envoy.yaml.
3. Validate the configuration file syntax:

   ```shell
   cd /applications/envoy/
   docker run --name echo-demo --rm -v $(pwd)/echo-demo/envoy.yaml:/etc/envoy/envoy.yaml envoyproxy/envoy-alpine:v1.20.0 --mode validate -c /etc/envoy/envoy.yaml
   ```

4. If validation reports no problems, start the Envoy example:

   ```shell
   docker run --name echo-demo --rm -v $(pwd)/echo-demo/envoy.yaml:/etc/envoy/envoy.yaml envoyproxy/envoy-alpine:v1.20.0 -c /etc/envoy/envoy.yaml
   ```

5. From another terminal, test with nc. The first command below looks up the IP address of the echo-demo container. The envoy.echo filter echoes back anything you type:

   ```shell
   containerIP=$(docker container inspect --format="{{.NetworkSettings.IPAddress}}" echo-demo)
   nc $containerIP 15001
   ```

2. To run Envoy from a directly deployed binary, specify the configuration file to use:

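1. Create a dedicated directory for Envoy configuration files, for example /etc/envoy/.
2. Save the listener example shown earlier in that directory as envoy-echo-demo.yaml.
3. Validate the configuration file syntax:

   ```shell
   envoy --mode validate -c /etc/envoy/envoy-echo-demo.yaml
   ```

4. If validation reports no problems, start the Envoy example:

   ```shell
   envoy -c /etc/envoy/envoy-echo-demo.yaml
   ```

5. Test with nc. The envoy.echo filter echoes back anything you type:

   ```shell
   nc 127.0.0.1 15001
   ```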
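The nc check in step 5 can also be scripted. Below is a minimal Python sketch. It assumes an echo listener is reachable on 127.0.0.1:15001, as in the steps above. `echo_roundtrip` is a hypothetical helper, not part of Envoy. It sends a payload and reads until the same number of bytes has come back.

```python
import socket


def echo_roundtrip(host: str, port: int, payload: bytes, timeout: float = 2.0) -> bytes:
    """Send payload to an echo listener and return what comes back.

    Reads until len(payload) bytes have arrived or the peer closes the connection.
    """
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(payload)
        received = b""
        while len(received) < len(payload):
            chunk = conn.recv(4096)
            if not chunk:  # peer closed the connection before echoing everything
                break
            received += chunk
        return received
```

While Envoy is running, `echo_roundtrip("127.0.0.1", 15001, b"abc\n")` should return `b"abc\n"`. Any other result points at the listener or filter configuration.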

II. Simple Static Listener Configuration

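A listener defines three things: the socket on which Envoy listens for downstream requests, the filter chains invoked to process those requests, and other related configuration properties.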

Listener configuration format

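```yaml
static_resources:
  listeners:
  - name:              # listener name
    address:
      socket_address:
        address:       # IP address to listen on
        port_value:    # port to listen on
    filter_chains:
    - filters:
      - name:          # name of a filter in the filter chain
        typed_config:  # filter configuration (the v3 API uses typed_config instead of the old config field)
```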

◼ The following is a minimal static listener configuration example:

```yaml
static_resources:
  listeners:                 # listener configuration
  - name: listener_0         # listener name
    address:                 # listener address
      socket_address:
        address: 0.0.0.0     # IP address to listen on
        port_value: 8080     # port to listen on
    filter_chains:           # the listener's filter chains
    - filters:
      - name: envoy.filters.network.echo   # filter to use; here, the echo filter
```

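A cluster usually represents a group of upstream servers (endpoints) that provide the same service. The user can configure a cluster statically, or Envoy can obtain it dynamically through CDS.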

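A cluster becomes available only after it has finished "warming". The cluster manager must first initialize its endpoints through DNS resolution or EDS, and health checking must succeed.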

Cluster configuration format

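```yaml
clusters:
- name: ...              # unique cluster name; also used in stats when alt_stat_name is not set
  alt_stat_name: ...     # alternative name for the cluster in stats
  type: ...              # service discovery type used to resolve the cluster (generate its endpoints): STATIC, STRICT_DNS, LOGICAL_DNS, ORIGINAL_DST, EDS, etc.
  lb_policy: ...         # load-balancing algorithm: ROUND_ROBIN, LEAST_REQUEST, RING_HASH, RANDOM, MAGLEV or CLUSTER_PROVIDED
  load_assignment:       # how members are obtained for STATIC, STRICT_DNS or LOGICAL_DNS clusters; EDS clusters use the eds_cluster_config field instead
    cluster_name: ...    # cluster name
    endpoints:           # list of endpoints
    - locality: {}       # where the upstream hosts are located, usually identified by region, zone, etc.
      lb_endpoints:      # endpoints in this locality
      - endpoint_name: ...   # endpoint name
        endpoint:            # endpoint definition
          address:
            socket_address:  # endpoint address
              address: ...     # endpoint IP address
              port_value: ...  # endpoint port
              protocol: ...    # protocol type
```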

You can list the endpoints of a statically configured cluster directly in the configuration, or discover them dynamically through DNS.

```yaml
clusters:
- name: test_cluster
  connect_timeout: 0.25s
  type: STATIC
  lb_policy: ROUND_ROBIN
  load_assignment:
    cluster_name: test_cluster
    endpoints:
    - lb_endpoints:          # upstream servers that requests are forwarded to
      - endpoint:
          address:
            socket_address: { address: 172.17.0.3, port_value: 80 }
      - endpoint:
          address:
            socket_address: { address: 172.17.0.4, port_value: 80 }
```
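With `lb_policy: ROUND_ROBIN`, requests alternate between the two endpoints above. The minimal Python sketch below models that behaviour under two assumptions: equal weights and all hosts healthy. Real Envoy keeps load-balancer state per worker thread, so the host that receives the first request can differ.

```python
from itertools import cycle, islice


def round_robin(endpoints):
    """Return an endless iterator that hands out endpoints in turn.

    Simplified ROUND_ROBIN: equal weights, every endpoint healthy.
    """
    return cycle(endpoints)


endpoints = [("172.17.0.3", 80), ("172.17.0.4", 80)]
picks = list(islice(round_robin(endpoints), 4))
print(picks)
```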

The TCP proxy filter performs 1:1 network connection proxying between downstream clients and upstream clusters.

You can use it on its own as a stunnel replacement, or combine it with other filters such as the MongoDB filter or the rate limit filter.

The TCP proxy filter respects the connection limits set by each upstream cluster's global resource manager.

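Before connecting, the TCP proxy filter asks the upstream cluster's resource manager whether it can open a connection without exceeding the cluster's maximum number of connections. If it cannot, the TCP proxy does not make the connection.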
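The connection-limit check described above can be modelled with a small counter. This is a toy Python sketch, not Envoy's resource manager. `ConnectionBudget` is a hypothetical class that tracks only `max_connections`. The default of 1024 matches the value shown in the /clusters output later. Real clusters also track pending requests, requests and retries for each priority.

```python
class ConnectionBudget:
    """Toy model of a cluster's connection limit (hypothetical class, not Envoy's API)."""

    def __init__(self, max_connections: int = 1024):
        self.max_connections = max_connections
        self.active = 0

    def try_open(self) -> bool:
        """Open a connection only if that stays within max_connections."""
        if self.active >= self.max_connections:
            return False  # over the limit: the TCP proxy does not create the connection
        self.active += 1
        return True

    def close(self) -> None:
        """Release one connection slot."""
        if self.active > 0:
            self.active -= 1


budget = ConnectionBudget(max_connections=2)
print([budget.try_open() for _ in range(3)])  # [True, True, False]
budget.close()
print(budget.try_open())  # True
```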

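The TCP proxy filter can route directly to one specified cluster, or distribute connections across several target clusters according to their weights.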

Configuration syntax:

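```
{
  "stat_prefix": "...",            # prefix used when emitting statistics
  "cluster": "...",                # target cluster to route to
  "weighted_clusters": "{...}",    # several target clusters, each with a weight
  "metadata_match": "{...}",
  "idle_timeout": "{...}",         # idle timeout between downstream and upstream, i.e. how long the connection may go without sending or receiving data
  "access_log": [],                # access logs
  "max_connect_attempts": "{...}"  # maximum number of connection attempts
}
```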

The following example uses the TCP proxy to forward downstream requests (from this host) to two backend web servers:

```yaml
static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.tcp_proxy
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.tcp_proxy.v3.TcpProxy
          stat_prefix: tcp            # stats prefix
          cluster: local_cluster      # route to local_cluster
  clusters:                           # define local_cluster
  - name: local_cluster
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: local_cluster
      endpoints:                      # backend endpoints of local_cluster
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 172.31.1.11, port_value: 8080 }
        - endpoint:
            address:
              socket_address: { address: 172.31.1.12, port_value: 8080 }
```

The http_connection_manager processes the HTTP protocol through a chain of L7 (HTTP) filters. Within that chain, the router filter performs route-based forwarding.

Configuration format:

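```yaml
listeners:
- name: ...
  address:
    socket_address: { address: ..., port_value: ..., protocol: ... }
  filter_chains:
  - filters:
    - name: envoy.filters.network.http_connection_manager
      typed_config:          # enables http_connection_manager
        "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
        stat_prefix: ...     # human-readable prefix used in stats
        route_config:        # static route configuration; use the rds field for dynamic configuration
          name: ...          # name of the route configuration
          virtual_hosts:     # list of virtual hosts that make up the route table
          - name: ...        # logical name of the virtual host, used in stats only, not in routing
            domains: []      # domains served by this virtual host; "*" wildcards are allowed. Search order: exact match, then wildcards with a leading "*" (e.g. *.foo.com), then wildcards with a trailing "*" (e.g. foo.*), then the catch-all "*"
            routes: []       # routes for these domains, searched in order; the first matching route is used
        http_filters:        # HTTP filter chain
        - name: envoy.filters.http.router   # the L7 router filter
```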

Note:

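◼ Once a virtual host has been selected by domain, Envoy checks the match definition of each entry in that virtual host's routes list, in order. The action of the first matching entry takes effect. That action is one of route, redirect or direct_response.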
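The two-step lookup above can be sketched in Python: first pick a virtual host by domain, then take the first matching route. This is a simplified model with the following assumptions:

- Virtual hosts and routes are plain dicts shaped like the YAML.
- Port handling in the Host header is ignored.
- `safe_regex` is given as a bare pattern string. In Envoy it is an object with a `regex` field evaluated by RE2, and Python's `re` only approximates RE2.

The function names are hypothetical. Within each wildcard class, longer patterns win, and the `*` must match at least one character. This is why the examples later list both `*.ik8s.io` and `ik8s.io`.

```python
import re


def select_virtual_host(virtual_hosts, host):
    """Pick the virtual host for a Host header using the domain search order.

    Simplified: case-insensitive, no port handling, duplicate domains keep the first owner.
    """
    host = host.lower()
    exact, suffix, prefix, catch_all = {}, [], [], None
    for vh in virtual_hosts:
        for domain in (d.lower() for d in vh["domains"]):
            if domain == "*":
                catch_all = catch_all or vh
            elif domain.startswith("*"):
                suffix.append((domain[1:], vh))   # "*.ik8s.io" -> must end with ".ik8s.io"
            elif domain.endswith("*"):
                prefix.append((domain[:-1], vh))  # "www.*" -> must start with "www."
            else:
                exact.setdefault(domain, vh)
    if host in exact:
        return exact[host]
    # Longer wildcard patterns win; the "*" has to match at least one character.
    for tail, vh in sorted(suffix, key=lambda item: len(item[0]), reverse=True):
        if len(host) > len(tail) and host.endswith(tail):
            return vh
    for head, vh in sorted(prefix, key=lambda item: len(item[0]), reverse=True):
        if len(host) > len(head) and host.startswith(head):
            return vh
    return catch_all


def first_matching_route(routes, path):
    """Return the first route whose match accepts the path, or None."""
    path = path.split("?", 1)[0]  # match on the path without the query string
    for route in routes:
        match = route["match"]
        if "prefix" in match and path.startswith(match["prefix"]):
            return route
        if "path" in match and path == match["path"]:
            return route
        if "safe_regex" in match and re.fullmatch(match["safe_regex"], path):
            return route
    return None


virtual_hosts = [
    {"name": "web_service_1", "domains": ["*.ik8s.io", "ik8s.io"],
     "routes": [{"match": {"prefix": "/"}, "route": {"cluster": "local_cluster"}}]},
    {"name": "web_service_2", "domains": ["*.magedu.com", "magedu.com"],
     "routes": [{"match": {"prefix": "/"}, "redirect": {"host_redirect": "www.ik8s.io"}}]},
]
vh = select_virtual_host(virtual_hosts, "www.magedu.com")
print(vh["name"], first_matching_route(vh["routes"], "/index.html"))
```

Because the search stops at the first match, a broad entry such as `prefix: "/"` should come last in a routes list. Otherwise it shadows every more specific entry after it.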

VI. Basic HTTP L7 Routing Configuration

The routes configured in route_config.virtual_hosts.routes send downstream client requests to a server in the appropriate upstream cluster.

◼ Routing works by matching the request URL against each route's match field.

◆ The match field expresses its pattern with exactly one of three options: prefix, path (exact path) or safe_regex (regular expression).

◼ A matching request is handled by exactly one of three fields: route (forwarding rule), redirect (redirect rule) or direct_response (direct response).

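◼ A target defined by route must be exactly one of the following:
- cluster: the name of an upstream cluster;
- cluster_header: the target cluster is taken from the value of the named request header;
- weighted_clusters: several target clusters, each with a weight.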
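The three target types can be illustrated with a small resolver. This is a hedged Python sketch, not Envoy's implementation. The route action is a plain dict, and `weighted_clusters` is modelled as a list of `{"name", "weight"}` entries picked with probability proportional to weight. The header name `x-target` and the cluster names `canary`, `v1` and `v2` are hypothetical. For a missing `cluster_header`, Envoy's documentation says it responds with 404. The sketch only returns `None` in that case.

```python
import bisect
import itertools
import random


def resolve_target_cluster(route_action, request_headers, rng=random):
    """Return the upstream cluster for a simplified, dict-shaped route action.

    Exactly one of "cluster", "cluster_header" or "weighted_clusters" is expected.
    Returns None when cluster_header names a header the request does not carry.
    """
    if "cluster" in route_action:
        return route_action["cluster"]
    if "cluster_header" in route_action:
        wanted = route_action["cluster_header"].lower()  # header names are case-insensitive
        for name, value in request_headers.items():
            if name.lower() == wanted:
                return value
        return None
    clusters = route_action["weighted_clusters"]["clusters"]
    cumulative = list(itertools.accumulate(c["weight"] for c in clusters))
    point = rng.randrange(cumulative[-1])  # uniform integer in [0, total weight)
    return clusters[bisect.bisect_right(cumulative, point)]["name"]
```

For example, `{"weighted_clusters": {"clusters": [{"name": "v1", "weight": 80}, {"name": "v2", "weight": 20}]}}` sends roughly 80% of requests to `v1`.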

Configuration format:

```yaml
routes:
- name: ...        # name of this route entry
  match:
    prefix: ...    # prefix of the request URL path
  route:           # forwarding action
    cluster: ...   # target upstream cluster
```

match:

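1) Match the URL with exactly one of prefix, path or regex. Note that regex has been superseded by safe_regex.
2) Optionally, also match the request on headers and query_parameters.
3) A matched request is handled by one of three actions: redirect, direct_response (answer directly), or route (forward upstream).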

route:

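1) Define the target with exactly one of cluster, weighted_clusters or cluster_header.
2) While forwarding, rewrite the path prefix with prefix_rewrite and the Host header with host_rewrite (host_rewrite_literal in the v3 API).
3) Optionally, configure traffic management such as timeout, retry_policy, cors, request_mirror_policy and rate_limits.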
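A minimal Python sketch of the rewrite step in item 2. It assumes the simplified semantics that prefix_rewrite replaces only the part of the path matched by `match.prefix`, and that host_rewrite_literal replaces the Host value outright. `rewrite_request` is a hypothetical helper. The second test case shows why trailing slashes in the match prefix and in prefix_rewrite must be paired carefully.

```python
def rewrite_request(path, host, match_prefix, prefix_rewrite=None, host_rewrite_literal=None):
    """Return (path, host) after simplified prefix_rewrite / host_rewrite_literal handling."""
    if prefix_rewrite is not None and path.startswith(match_prefix):
        # Only the part matched by match.prefix is replaced; the rest of the path is kept.
        path = prefix_rewrite + path[len(match_prefix):]
    if host_rewrite_literal is not None:
        host = host_rewrite_literal
    return path, host
```

For example, `rewrite_request("/api/v1/users", "www.ik8s.io", "/api/", "/")` yields `("/v1/users", "www.ik8s.io")`. Matching `/api` without the trailing slash would instead produce `//v1/users`.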

1. Introduction to the admin interface

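Envoy has a built-in administration server that supports both query and modify operations. It may even expose private data such as statistics, cluster names and certificate information. Its access control must therefore be designed carefully to prevent unauthorized access.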

Configuration format:

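```yaml
admin:
  access_log: []        # access log settings, usually specifying log filters and log configuration
  access_log_path: ...  # access log file path of the admin interface; use /dev/null if no access log is needed
  profile_path: ...     # output path of the CPU profiler, /var/log/envoy/envoy.prof by default
  address:              # listening socket
    socket_address:
      protocol: ...
      address: ...
      port_value: ...
```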

A simple admin configuration example

```yaml
admin:
  access_log_path: /tmp/admin_access.log
  address:
    socket_address: { address: 0.0.0.0, port_value: 9901 }
    # Note: listening on every address is only for convenient testing; for security, use 127.0.0.1.
```

To add the admin interface to the "L7 Front Proxy", add the corresponding settings to the envoy.yaml file it uses.

A simple example configuration:

```yaml
admin:
  access_log_path: /tmp/admin_access.log
  address:
    socket_address: { address: 0.0.0.0, port_value: 9901 }
static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          ……
  clusters:
  - name: local_cluster
    connect_timeout: 0.25s
    type: STATIC
    ……
```

The admin interface provides a number of built-in paths. Each path accepts GET or POST requests as appropriate.

```
admin commands are:
/: Admin home page                        # GET
/ready: Outputs a string and error code reflecting the state of the server.   # GET, returns the current state of the Envoy server
/certs: print certs on machine            # GET, lists all loaded TLS certificates and related information
/clusters: upstream cluster status        # GET; "GET /clusters?format=json" is also supported
/config_dump: dump current Envoy configs  # GET, prints the configuration Envoy has loaded; supports query parameters such as include_eds, mask and resource
/contention: dump current Envoy mutex contention stats (if enabled)   # GET, mutex contention tracking
/cpuprofiler: enable/disable the CPU profiler           # POST, enables or disables the CPU profiler
/healthcheck/fail: cause the server to fail health checks   # POST, forces HTTP health checks to fail
/healthcheck/ok: cause the server to pass health checks     # POST, forces HTTP health checks to pass
/heapprofiler: enable/disable the heap profiler         # POST, enables or disables the heap profiler
/help: print out list of admin commands
/hot_restart_version: print the hot restart compatibility version   # GET, prints hot-restart compatibility information
/listeners: print listener addresses      # GET, lists all listeners; "GET /listeners?format=json" is also supported
/drain_listeners: Drains all listeners.   # POST, drains all listeners; supports query parameters such as inboundonly (inbound listeners only) and graceful (graceful shutdown)
/logging: query/change logging levels     # POST, enables or disables different logging levels on different subcomponents
/memory: print current allocation/heap usage   # GET, prints current memory allocation, in bytes
/quitquitquit: exit the server            # POST, exits the server cleanly
/reset_counters: reset all counters to zero    # POST, resets all counters
/tap: This endpoint is used for configuring an active tap session.   # POST, configures an active tap (traffic capture) session
/reopen_logs: Triggers reopen of all access logs. Behavior is similar to SIGUSR1 handling.   # POST, reopens all access logs, like the SIGUSR1 signal
/runtime: print runtime values            # GET, outputs all runtime values in JSON
/runtime_modify: modify runtime values    # POST /runtime_modify?key1=value1&key2=value2 adds or modifies the runtime values passed as query parameters
/server_info: print server version/status information   # GET, prints information about the current Envoy server
/stats: print server stats                # outputs stats on demand, e.g. GET /stats?filter=regex; JSON and Prometheus output formats are also supported
/stats/prometheus: print server stats in prometheus format   # outputs stats in Prometheus format
```

Sample output

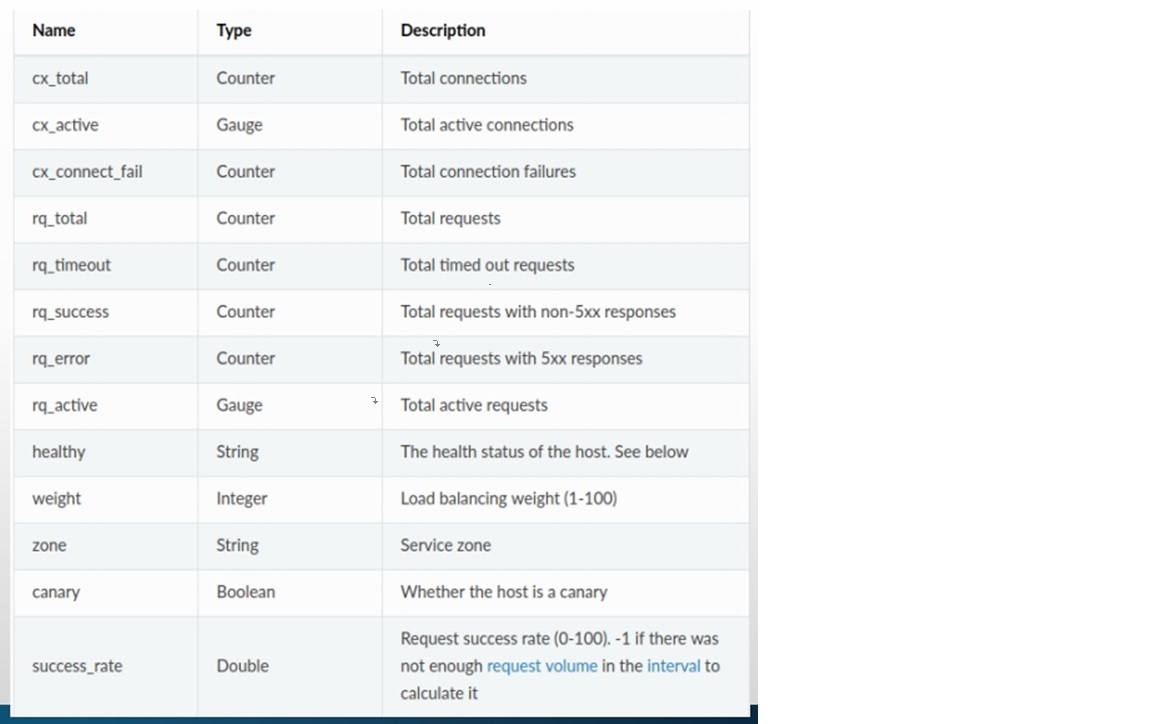

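1) GET /clusters: lists all configured clusters, including every upstream host discovered in each cluster and per-host statistics. JSON output is supported.
   - Cluster manager information: "version_info string". Without CDS it shows "version_info::static".
   - Cluster information: circuit breakers, outlier detection, and the "added_via_api" flag, which indicates whether the cluster was added through CDS.
   - Per-host statistics: total connections, active connections, total requests, host health status, and so on. An unhealthy host is usually flagged for one of three reasons:
     (1) failed_active_hc: failed active health checking;
     (2) failed_eds_health: marked unhealthy by EDS;
     (3) failed_outlier_check: failed the outlier detection check.
2) GET /listeners: lists all configured listeners, including each listener's name and listening address. JSON output is supported.

◼ POST /reset_counters: resets all counters to 0. This only affects the server's local output. Stats already sent to external storage systems are not affected.

3) GET /config_dump: prints, in JSON, the configuration currently loaded from Envoy's various components.
4) GET /ready: returns whether the server is ready: 200 when the state is LIVE, 503 otherwise.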
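These GET endpoints are easy to script. Below is a minimal Python sketch using only the standard library. It assumes the admin interface listens on 127.0.0.1:9901, as in the examples. `admin_get` and `is_ready` are hypothetical helper names. The sketch relies only on the documented behaviour of /ready: 200 when LIVE, 503 otherwise.

```python
import urllib.error
import urllib.request

# Bypass any HTTP proxy configured in the environment; the admin port is usually local.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def admin_get(path, base="http://127.0.0.1:9901", timeout=2.0):
    """GET an admin endpoint and return (status, body) without raising on 4xx/5xx."""
    try:
        with _OPENER.open(base + path, timeout=timeout) as resp:
            return resp.status, resp.read().decode()
    except urllib.error.HTTPError as err:  # e.g. /ready answers 503 while not LIVE
        return err.code, err.read().decode()


def is_ready(base="http://127.0.0.1:9901"):
    """True only when GET /ready returns 200, i.e. the server state is LIVE."""
    status, _body = admin_get("/ready", base)
    return status == 200
```

For example, `admin_get("/clusters?format=json")` or `admin_get("/listeners")` returns the raw body for further processing. POST endpoints such as /reset_counters would need `urllib.request.Request(url, method="POST")`, which this GET-only sketch does not cover.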

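1) Compared with static resource configuration, the dynamic configuration mechanism of the xDS API makes Envoy's configuration system highly flexible.
   (1) Sometimes, however, a change affects only an individual feature, and pushing it through the xDS interface would be heavy-handed. Runtime is the configuration interface for exactly this case.
   (2) Runtime is a virtual file system tree. It can be defined and configured through one or more local file system directories, static resources, RTDS dynamic discovery, and the admin interface. Each of these sources is called a layer, hence the name "Layered Runtime". The layers are overlaid to produce the effective values.
2) In other words, Runtime is an out-of-band, real-time configuration system deployed alongside Envoy. It allows settings to change without restarting Envoy or changing the primary configuration.
   (1) Runtime parameters are also called "feature flags" or "deciders".
   (2) Changes made through runtime parameters take effect immediately.
3) An implementation of runtime configuration is also called a runtime configuration provider.
   (1) The provider Envoy currently supports is a virtual file system made up of multiple layers. Envoy watches for a symbolic link swap in the configured directory and reloads the file tree when the swap happens.
   (2) Envoy also runs correctly with default runtime values and a "null" provider, so the runtime configuration system is not strictly required.
4) Runtime must be enabled and configured in the bootstrap file:
   (1) it is defined under the layered_runtime top-level field of the bootstrap configuration;
   (2) once layered_runtime appears in the bootstrap, at least one layer must be defined.
5) The runtime configuration specifies a virtual file system tree that contains re-loadable configuration elements.
   (1) This virtual file system can be built as an overlay of static bootstrap configuration, the local file system, the admin console, and RTDS-derived layers.
   (2) Runtime can therefore be viewed as a virtual file system made of multiple layers. The layers are specified in the layered runtime bootstrap configuration, and runtime settings in later layers override those in earlier ones.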

Configuration format:

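```yaml
layered_runtime:         # configures the runtime provider; if omitted, the null provider is used and every parameter takes its default value
  layers:                # list of runtime layers; later layers override settings in earlier ones
  - name: ...            # layer name, only used in the output of "GET /runtime"
    static_layer: {...}  # static runtime layer in the runtime protobuf JSON representation; unlike static xDS resources, a static runtime layer can still be overridden by later layers
    # static_layer and the three layer types below are mutually exclusive, so each list item defines exactly one layer
    disk_layer:          # runtime layer backed by the local disk
      symlink_root: ...  # file system tree accessed through a symbolic link
      subdirectory: ...  # subdirectory to load under the root
      append_service_cluster: ...  # whether to append the service cluster to the path under the symlink root
    admin_layer: {...}   # admin console layer: viewed through the /runtime endpoint and modified through /runtime_modify
    rtds_layer:          # runtime discovery service layer: configuration discovered dynamically through the RTDS xDS API
      name: ...          # resource to subscribe to for the RTDS layer over rtds_config
      rtds_config: ...   # ConfigSource for RTDS
```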

A typical configuration example that defines four layers:

```yaml
layers:
- name: static_layer_0
  static_layer:
    health_check:
      min_interval: 5
- name: disk_layer_0
  disk_layer: { symlink_root: /srv/runtime/current, subdirectory: envoy }
- name: disk_layer_1
  disk_layer: { symlink_root: /srv/runtime/current, subdirectory: envoy_override, append_service_cluster: true }
- name: admin_layer_0
  admin_layer: {}
```

The four layers, in order:

◆ static bootstrap layer, which sets runtime parameters and their values directly;
◆ local disk file system;
◆ local disk file system with a subdirectory override (override_subdirectory);
◆ admin console layer.
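The overlay can be sketched in Python. This is a simplified model under these assumptions:

- A static layer's nested keys become dotted runtime keys (`health_check.min_interval`).
- A disk layer maps each file's path below `symlink_root/subdirectory` to a dotted key, with the file contents as the value. The sketch keeps the raw strings.
- Later layers override earlier ones.

`append_service_cluster`, symlink-swap watching and RTDS are not modelled. The function names are hypothetical.

```python
import os


def flatten(tree, prefix=""):
    """Turn nested static_layer mappings into dotted runtime keys (health_check.min_interval)."""
    flat = {}
    for key, value in tree.items():
        full_key = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, full_key))
        else:
            flat[full_key] = value
    return flat


def read_disk_layer(symlink_root, subdirectory):
    """Read a disk layer: each file's path below the root, with '/' replaced by '.', is the key."""
    base = os.path.join(symlink_root, subdirectory)
    values = {}
    for dirpath, _dirnames, filenames in os.walk(base):
        for filename in filenames:
            full_path = os.path.join(dirpath, filename)
            key = os.path.relpath(full_path, base).replace(os.sep, ".")
            with open(full_path, encoding="utf-8") as handle:
                values[key] = handle.read().strip()
    return values


def effective_runtime(layers):
    """Overlay already-flattened layers in order; later layers override earlier ones."""
    merged = {}
    for layer in layers:
        merged.update(layer)
    return merged
```

With the configuration above, the file /srv/runtime/current/envoy/health_check/min_interval would override the static value 5 for `health_check.min_interval`. An admin_layer change made through /runtime_modify would in turn override both.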

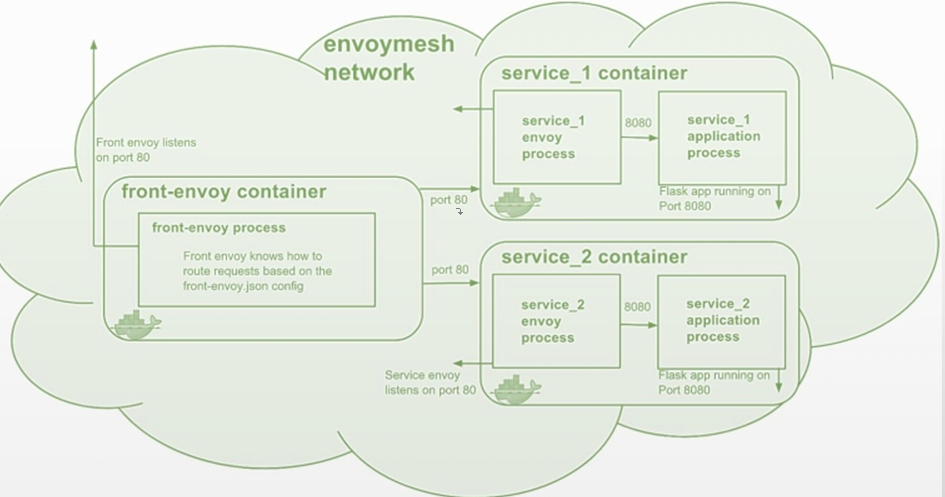

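In an Envoy mesh, the Envoy that acts as the front proxy is usually a standalone process. It proxies client requests to the services in the mesh, and each application instance of those services sits behind an Envoy instance running in sidecar proxy mode.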

TLS in an Envoy mesh is commonly used in the following scenarios:

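1. The front proxy serves HTTPS to downstream clients, but the front proxy and the services inside the mesh still communicate over plain HTTP: https → http.
2. The front proxy serves HTTPS to downstream clients, and the front proxy and the services inside the mesh also communicate over HTTPS: https → https. Communication between internal services falls into two cases:
   (1) only the client verifies the server's certificate;
   (2) client and server verify each other's certificates (mTLS).
   Note: in dynamic containerized environments, certificate provisioning and management become a significant challenge.
3. The front proxy runs in TCP proxy mode and passes TLS through between downstream clients and upstream servers: https-passthrough. East-west traffic inside the cluster also uses HTTPS.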

In scenario 1, only the listener needs to provide TLS to downstream clients. Below is a front proxy Envoy configuration example:

```yaml
static_resources:
  listeners:
  - name: listener_http
    address:
      socket_address: { address: 0.0.0.0, port_value: 8443 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: web_service_01
              domains: ["*"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: web_cluster_01 }
          http_filters:
          - name: envoy.filters.http.router
      transport_socket:
        name: envoy.transport_sockets.tls
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.DownstreamTlsContext
          common_tls_context:
            tls_certificates:
            # The following self-signed certificate pair is generated using:
            # $ openssl req -x509 -newkey rsa:2048 -keyout front-proxy.key -out front-proxy.crt -days 3650 -nodes -subj '/CN=www.magedu.com'
            - certificate_chain:
                filename: "/etc/envoy/certs/front-proxy.crt"
              private_key:
                filename: "/etc/envoy/certs/front-proxy.key"
```

In scenario 2, the front proxy does two things. Its listener offers TLS to downstream clients, and it also establishes TLS connections to the services in the Envoy mesh.

Below is a configuration example for the sidecar proxy Envoy of a service in the Envoy mesh:

```yaml
static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 443 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          ……
          http_filters:
          - name: envoy.filters.http.router
      transport_socket:
        name: envoy.transport_sockets.tls
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.DownstreamTlsContext
          common_tls_context:
            tls_certificates:
            - certificate_chain:
                filename: "/etc/envoy/certs/webserver.crt"
              private_key:
                filename: "/etc/envoy/certs/webserver.key"
```

Below is a configuration example for the front proxy Envoy's cluster, which talks to the upstreams:

```yaml
clusters:
- name: web_cluster_01
  connect_timeout: 0.25s
  type: STATIC
  lb_policy: ROUND_ROBIN
  load_assignment:
    cluster_name: web_cluster_01
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            socket_address: { address: 172.31.8.11, port_value: 443 }
      - endpoint:
          address:
            socket_address: { address: 172.31.8.12, port_value: 443 }
  transport_socket:
    name: envoy.transport_sockets.tls
    typed_config:
      "@type": type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.UpstreamTlsContext
      ......
```

In TLS passthrough mode, the front proxy uses a TCP proxy listener, and the cluster configuration no longer needs a transport_socket.

The services in the Envoy mesh, however, must serve over TLS.

```yaml
static_resources:
  listeners:
  - name: listener_http
    address:
      socket_address: { address: 0.0.0.0, port_value: 8443 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.tcp_proxy
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.tcp_proxy.v3.TcpProxy
          cluster: web_cluster_01
          stat_prefix: https_passthrough
  clusters:
  - name: web_cluster_01
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: web_cluster_01
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 172.31.9.11, port_value: 443 }
        - endpoint:
            address:
              socket_address: { address: 172.31.9.12, port_value: 443 }
```

XI. Envoy Static Configuration Examples

https://github.com/ikubernetes/servicemesh_in_practice.git

https://gitee.com/mageedu/servicemesh_in_practise

1. envoy-echo

Telnet to the listener's IP and port, and whatever you type is echoed back.

envoy.yaml

```yaml
static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address:
        address: 0.0.0.0
        port_value: 8080
    filter_chains:
    - filters:
      - name: envoy.filters.network.echo
```

Dockerfile

```dockerfile
FROM envoyproxy/envoy-alpine:v1.20.0
ADD envoy.yaml /etc/envoy/
```

docker-compose.yaml

```yaml
version: '3.3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.20.0
    volumes:
    - ./envoy.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.4.2
        aliases:
        - envoy-echo

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
        - subnet: 172.31.4.0/24
```

```shell
root@test:/apps/servicemesh_in_practise/Envoy-Basics/envoy-echo# docker-compose up
```

Telnet to Envoy's IP and port:

```
# whatever you type is echoed back
root@test:~# telnet 172.31.4.2 8080
Trying 172.31.4.2...
Connected to 172.31.4.2.
Escape character is '^]'.
abc
abc
ni hao
ni hao
```

Modify the Envoy configuration file and test from inside the Envoy container. The new listener binds to 127.0.0.1 only.

```shell
root@test:/apps/servicemesh_in_practise/Envoy-Basics/envoy-echo# docker-compose down
```

Edit envoy-v2.yaml:

```yaml
admin:
  access_log_path: /dev/null
  address:
    socket_address:
      address: 127.0.0.1
      port_value: 0
static_resources:
  clusters:
  - name: cluster_0
    connect_timeout: 0.25s
    load_assignment:
      cluster_name: cluster_0
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: 127.0.0.1
                port_value: 0
  listeners:
  - name: listener_0
    address:
      socket_address:
        address: 127.0.0.1
        port_value: 8080
    filter_chains:
    - filters:
      - name: envoy.filters.network.echo
```

docker-compose.yaml

```yaml
version: '3.3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.20.0
    volumes:
    - ./envoy-v2.yaml:/etc/envoy/envoy.yaml   # use envoy-v2.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.4.2
        aliases:
        - envoy-echo

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
        - subnet: 172.31.4.0/24
```

Run it again:

```shell
root@test:/apps/servicemesh_in_practise/Envoy-Basics/envoy-echo# docker-compose up
```

Enter the container:

```
# whatever you type is echoed back
root@test:/apps/servicemesh_in_practise/Envoy-Basics/envoy-echo# docker-compose exec envoy sh
/ # nc 127.0.0.1 8080
abv
abv
ni hao world
ni hao world
```

Lab environment

Two services:
- envoy: Sidecar Proxy
- webserver01: the first backend service, address 127.0.0.1

envoy.yaml

```yaml
static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: web_service_1
              domains: ["*"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: local_cluster }
          http_filters:
          - name: envoy.filters.http.router
  clusters:
  - name: local_cluster
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: local_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 127.0.0.1, port_value: 8080 }
```

docker-compose.yaml

```yaml
version: '3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.20.0
    environment:
    # Without this variable, docker-compose up fails with:
    # error initializing configuration '/etc/envoy/envoy.yaml': cannot bind '0.0.0.0:80': Permission denied
    - ENVOY_UID=0
    volumes:
    - ./envoy.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.3.2
        aliases:
        - ingress

  webserver01:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    - HOST=127.0.0.1
    network_mode: "service:envoy"
    depends_on:
    - envoy

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
        - subnet: 172.31.3.0/24
```

Envoy forwards requests sent to 172.31.3.2:80 to the backend webserver01:

```
root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/http-ingress# docker-compose up

# in another terminal, send a few requests
root@test:/apps/servicemesh_in_practise/Envoy-Basics/http-ingress# curl 172.31.3.2
iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: d4eda0b2b84c, ServerIP: 172.31.3.2!
root@test:/apps/servicemesh_in_practise/Envoy-Basics/http-ingress# curl 172.31.3.2
iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: dc4bd7a1316f, ServerIP: 172.31.3.2!
root@test:/apps/servicemesh_in_practise/Envoy-Basics/http-ingress# curl 172.31.3.2
iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: dc4bd7a1316f, ServerIP: 172.31.3.2!
root@test:/apps/servicemesh_in_practise/Envoy-Basics/http-ingress# curl 172.31.3.2
iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: dc4bd7a1316f, ServerIP: 172.31.3.2!

# log output in the terminal where docker-compose runs in the foreground
......
webserver01_1  | * Running on http://127.0.0.1:8080/ (Press CTRL+C to quit)
webserver01_1  | 127.0.0.1 - - [01/Dec/2021 08:36:37] "GET / HTTP/1.1" 200 -
webserver01_1  | 127.0.0.1 - - [01/Dec/2021 08:38:49] "GET / HTTP/1.1" 200 -
webserver01_1  | 127.0.0.1 - - [01/Dec/2021 08:38:50] "GET / HTTP/1.1" 200 -
```

Lab environment

Three services:
- envoy: Front Proxy, address 172.31.4.2
- webserver01: the first external service, address 172.31.4.11
- webserver02: the second external service, address 172.31.4.12

envoy.yaml

```yaml
static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 127.0.0.1, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: web_service_1
              domains: ["*"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: web_cluster }
          http_filters:
          - name: envoy.filters.http.router
  clusters:
  - name: web_cluster
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: web_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 172.31.4.11, port_value: 80 }
        - endpoint:
            address:
              socket_address: { address: 172.31.4.12, port_value: 80 }
```

docker-compose.yaml

```yaml
version: '3.3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.20.0
    environment:
    - ENVOY_UID=0
    volumes:
    - ./envoy.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.4.2
        aliases:
        - front-proxy
    depends_on:
    - webserver01
    - webserver02

  client:
    image: ikubernetes/admin-toolbox:v1.0
    network_mode: "service:envoy"
    depends_on:
    - envoy

  webserver01:
    image: ikubernetes/demoapp:v1.0
    hostname: webserver01
    networks:
      envoymesh:
        ipv4_address: 172.31.4.11
        aliases:
        - webserver01

  webserver02:
    image: ikubernetes/demoapp:v1.0
    hostname: webserver02
    networks:
      envoymesh:
        ipv4_address: 172.31.4.12
        aliases:
        - webserver02

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
        - subnet: 172.31.4.0/24
```

Verification

```shell
root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/http-egress# docker-compose up
```

In another terminal, enter the client container:

```
root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/http-egress# docker-compose exec client sh
[root@b8f9b62f2771 /]# curl 127.0.0.1
iKubernetes demoapp v1.0 !! ClientIP: 172.31.4.2, ServerName: webserver01, ServerIP: 172.31.4.11!
[root@b8f9b62f2771 /]# curl 127.0.0.1
iKubernetes demoapp v1.0 !! ClientIP: 172.31.4.2, ServerName: webserver02, ServerIP: 172.31.4.12!
[root@b8f9b62f2771 /]# curl 127.0.0.1
iKubernetes demoapp v1.0 !! ClientIP: 172.31.4.2, ServerName: webserver01, ServerIP: 172.31.4.11!
[root@b8f9b62f2771 /]# curl 127.0.0.1
iKubernetes demoapp v1.0 !! ClientIP: 172.31.4.2, ServerName: webserver02, ServerIP: 172.31.4.12!
# Requests to 127.0.0.1 inside the container are forwarded by Envoy round-robin to webserver01 and webserver02
```
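To check the round-robin distribution over more than a handful of requests, you can tally the `ServerName` field of the demoapp responses. This Python sketch assumes the response format shown above. `tally_backends` and `collect` are hypothetical helpers. `collect` would be run from inside the client container, against 127.0.0.1.

```python
import re
import urllib.request
from collections import Counter

SERVER_NAME = re.compile(r"ServerName: ([^,\s]+)")


def tally_backends(responses):
    """Count demoapp responses per ServerName, e.g. Counter({'webserver01': 2, 'webserver02': 2})."""
    counts = Counter()
    for text in responses:
        match = SERVER_NAME.search(text)
        if match:
            counts[match.group(1)] += 1
    return counts


def collect(url, n=10, timeout=2.0):
    """Fetch url n times (bypassing any proxy from the environment) and return the response bodies."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    bodies = []
    for _ in range(n):
        with opener.open(url, timeout=timeout) as resp:
            bodies.append(resp.read().decode())
    return bodies
```

For example, `tally_backends(collect("http://127.0.0.1/", n=100))` should split evenly, or nearly so, between webserver01 and webserver02.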

Lab environment

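Three services:
- envoy: Front Proxy, address 172.31.2.2
- webserver01: the first backend service, address 172.31.2.11
- webserver02: the second backend service, address 172.31.2.12

Map the domains www.ik8s.io and www.magedu.com to 172.31.2.2. Requests for www.ik8s.io are distributed round-robin to webserver01 and webserver02. Requests for www.magedu.com are redirected to www.ik8s.io and then distributed the same way.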

envoy.yaml

```yaml
static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: web_service_1
              domains: ["*.ik8s.io", "ik8s.io"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: local_cluster }
            - name: web_service_2
              domains: ["*.magedu.com", "magedu.com"]
              routes:
              - match: { prefix: "/" }
                redirect:
                  host_redirect: "www.ik8s.io"
          http_filters:
          - name: envoy.filters.http.router
  clusters:
  - name: local_cluster
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: local_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 172.31.2.11, port_value: 8080 }
        - endpoint:
            address:
              socket_address: { address: 172.31.2.12, port_value: 8080 }
```

docker-compose.yaml

```yaml
version: '3.3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.20.0
    environment:
    - ENVOY_UID=0
    volumes:
    - ./envoy.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.2.2
        aliases:
        - front-proxy
    depends_on:
    - webserver01
    - webserver02

  webserver01:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    hostname: webserver01
    networks:
      envoymesh:
        ipv4_address: 172.31.2.11
        aliases:
        - webserver01

  webserver02:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    hostname: webserver02
    networks:
      envoymesh:
        ipv4_address: 172.31.2.12
        aliases:
        - webserver02

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
        - subnet: 172.31.2.0/24
```

Verification

```shell
root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/http-front-proxy# docker-compose up
```

In another terminal:

```
# Requests for www.ik8s.io are forwarded round-robin to webserver01 and webserver02
root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/http-front-proxy# curl -H "host: www.ik8s.io" 172.31.2.2
iKubernetes demoapp v1.0 !! ClientIP: 172.31.2.2, ServerName: webserver01, ServerIP: 172.31.2.11!
root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/http-front-proxy# curl -H "host: www.ik8s.io" 172.31.2.2
iKubernetes demoapp v1.0 !! ClientIP: 172.31.2.2, ServerName: webserver02, ServerIP: 172.31.2.12!

# Requests for www.magedu.com are redirected to www.ik8s.io
root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/http-front-proxy# curl -I -H "host: www.magedu.com" 172.31.2.2
HTTP/1.1 301 Moved Permanently
location: http://www.ik8s.io/
date: Wed, 01 Dec 2021 14:07:55 GMT
server: envoy
transfer-encoding: chunked
```
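The redirect check can be reproduced in Python. The sketch assumes simplified `host_redirect` semantics: only the host is swapped, while scheme, path and query are kept. `expected_redirect` computes the Location header those semantics predict. `probe` sends a HEAD request with a chosen Host header and does not follow redirects, because `http.client` never does. Both names are hypothetical.

```python
import http.client
from urllib.parse import urlsplit, urlunsplit


def expected_redirect(request_url, host_redirect):
    """Location produced by a simplified host_redirect: swap the host, keep scheme, path and query."""
    parts = urlsplit(request_url)
    return urlunsplit((parts.scheme, host_redirect, parts.path or "/", parts.query, ""))


def probe(ip, host_header, path="/", port=80, timeout=2.0):
    """Send HEAD with an explicit Host header; return (status, Location header or None)."""
    conn = http.client.HTTPConnection(ip, port, timeout=timeout)
    try:
        conn.request("HEAD", path, headers={"Host": host_header})
        resp = conn.getresponse()
        return resp.status, resp.getheader("location")
    finally:
        conn.close()
```

Based on the capture above, `probe("172.31.2.2", "www.magedu.com")` should return `(301, "http://www.ik8s.io/")`. This matches `expected_redirect("http://www.magedu.com/", "www.ik8s.io")`.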

Lab environment

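Three services:
- envoy: Front Proxy, address 172.31.1.2
- webserver01: the first backend service, address 172.31.1.11
- webserver02: the second backend service, address 172.31.1.12

Requests to Envoy's IP, 172.31.1.2, are forwarded round-robin to webserver01 and webserver02.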

envoy.yaml

```yaml
static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.tcp_proxy
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.tcp_proxy.v3.TcpProxy
          stat_prefix: tcp
          cluster: local_cluster
  clusters:
  - name: local_cluster
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: local_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 172.31.1.11, port_value: 8080 }
        - endpoint:
            address:
              socket_address: { address: 172.31.1.12, port_value: 8080 }
```

docker-compose.yaml

```yaml
version: '3.3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.20.0
    environment:
    - ENVOY_UID=0
    volumes:
    - ./envoy.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.1.2
        aliases:
        - front-proxy
    depends_on:
    - webserver01
    - webserver02

  webserver01:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    hostname: webserver01
    networks:
      envoymesh:
        ipv4_address: 172.31.1.11
        aliases:
        - webserver01

  webserver02:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    hostname: webserver02
    networks:
      envoymesh:
        ipv4_address: 172.31.1.12
        aliases:
        - webserver02

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
        - subnet: 172.31.1.0/24
```

Verification

```shell
root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/tcp-front-proxy# docker-compose up
```

```
root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/tcp-front-proxy# curl 172.31.1.2
iKubernetes demoapp v1.0 !! ClientIP: 172.31.1.2, ServerName: webserver01, ServerIP: 172.31.1.11!
root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/tcp-front-proxy# curl 172.31.1.2
iKubernetes demoapp v1.0 !! ClientIP: 172.31.1.2, ServerName: webserver02, ServerIP: 172.31.1.12!
```

Lab environment

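Three services:
- envoy: Front Proxy, address 172.31.5.2
- webserver01: the first backend service, address 172.31.5.11
- webserver02: the second backend service, address 172.31.5.12

Port 9901 on Envoy serves the admin interface, which exposes the corresponding information.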

envoy.yaml

```yaml
admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0   # use 127.0.0.1 in production; listening on every address is insecure
      port_value: 9901
static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: web_service_1
              domains: ["*.ik8s.io", "ik8s.io"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: local_cluster }
            - name: web_service_2
              domains: ["*.magedu.com", "magedu.com"]
              routes:
              - match: { prefix: "/" }
                redirect:
                  host_redirect: "www.ik8s.io"
          http_filters:
          - name: envoy.filters.http.router
  clusters:
  - name: local_cluster
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: local_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 172.31.5.11, port_value: 8080 }
        - endpoint:
            address:
              socket_address: { address: 172.31.5.12, port_value: 8080 }
```

docker-compose.yaml

```yaml
services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.20.0
    environment:
    - ENVOY_UID=0
    volumes:
    - ./envoy.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.5.2
        aliases:
        - front-proxy
    depends_on:
    - webserver01
    - webserver02

  webserver01:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    hostname: webserver01
    networks:
      envoymesh:
        ipv4_address: 172.31.5.11
        aliases:
        - webserver01

  webserver02:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    hostname: webserver02
    networks:
      envoymesh:
        ipv4_address: 172.31.5.12
        aliases:
        - webserver02

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
        - subnet: 172.31.5.0/24
```

Verification

```shell
root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/admin-interface# docker-compose up
```

In another terminal, access 172.31.5.2:9901:

#显示帮助信息 root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/admin-interface# curl 172.31.5.2:9901/help admin commands are: /: Admin home page /certs: print certs on machine /clusters: upstream cluster status /config_dump: dump current Envoy configs (experimental) /contention: dump current Envoy mutex contention stats (if enabled) /cpuprofiler: enable/disable the CPU profiler /drain_listeners: drain listeners /healthcheck/fail: cause the server to fail health checks /healthcheck/ok: cause the server to pass health checks /heapprofiler: enable/disable the heap profiler /help: print out list of admin commands /hot_restart_version: print the hot restart compatibility version /init_dump: dump current Envoy init manager information (experimental) /listeners: print listener info /logging: query/change logging levels /memory: print current allocation/heap usage /quitquitquit: exit the server /ready: print server state, return 200 if LIVE, otherwise return 503 /reopen_logs: reopen access logs /reset_counters: reset all counters to zero /runtime: print runtime values /runtime_modify: modify runtime values /server_info: print server version/status information /stats: print server stats /stats/prometheus: print server stats in prometheus format /stats/recentlookups: Show recent stat-name lookups /stats/recentlookups/clear: clear list of stat-name lookups and counter /stats/recentlookups/disable: disable recording of reset stat-name lookup names /stats/recentlookups/enable: enable recording of reset stat-name lookup names # 查看完成的配置信息 root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/admin-interface# curl 172.31.5.2:9901/config_dump ...... } ] }, { "name": "web_service_2", "domains": [ "*.magedu.com", "“magedu.com\"" ], "routes": [ { "match": { "prefix": "/" }, "redirect": { "host_redirect": "www.ik8s.io" } } ] } ] }, "last_updated": "2021-12-01T14:26:32.586Z" ...... 
# List the listeners
root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/admin-interface# curl 172.31.5.2:9901/listeners
listener_0::0.0.0.0:80

# List the clusters
root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/admin-interface# curl 172.31.5.2:9901/clusters
local_cluster::observability_name::local_cluster
local_cluster::default_priority::max_connections::1024
local_cluster::default_priority::max_pending_requests::1024
local_cluster::default_priority::max_requests::1024
local_cluster::default_priority::max_retries::3
local_cluster::high_priority::max_connections::1024
local_cluster::high_priority::max_pending_requests::1024
local_cluster::high_priority::max_requests::1024
local_cluster::high_priority::max_retries::3
local_cluster::added_via_api::false
local_cluster::172.31.5.11:8080::cx_active::0
local_cluster::172.31.5.11:8080::cx_connect_fail::0
local_cluster::172.31.5.11:8080::cx_total::0
local_cluster::172.31.5.11:8080::rq_active::0
local_cluster::172.31.5.11:8080::rq_error::0
local_cluster::172.31.5.11:8080::rq_success::0
local_cluster::172.31.5.11:8080::rq_timeout::0
local_cluster::172.31.5.11:8080::rq_total::0
local_cluster::172.31.5.11:8080::hostname::
local_cluster::172.31.5.11:8080::health_flags::healthy
local_cluster::172.31.5.11:8080::weight::1
local_cluster::172.31.5.11:8080::region::
local_cluster::172.31.5.11:8080::zone::
local_cluster::172.31.5.11:8080::sub_zone::
local_cluster::172.31.5.11:8080::canary::false
local_cluster::172.31.5.11:8080::priority::0
local_cluster::172.31.5.11:8080::success_rate::-1.0
local_cluster::172.31.5.11:8080::local_origin_success_rate::-1.0
local_cluster::172.31.5.12:8080::cx_active::0
local_cluster::172.31.5.12:8080::cx_connect_fail::0
local_cluster::172.31.5.12:8080::cx_total::0
local_cluster::172.31.5.12:8080::rq_active::0
local_cluster::172.31.5.12:8080::rq_error::0
local_cluster::172.31.5.12:8080::rq_success::0
local_cluster::172.31.5.12:8080::rq_timeout::0
local_cluster::172.31.5.12:8080::rq_total::0
local_cluster::172.31.5.12:8080::hostname::
local_cluster::172.31.5.12:8080::health_flags::healthy
local_cluster::172.31.5.12:8080::weight::1
local_cluster::172.31.5.12:8080::region::
local_cluster::172.31.5.12:8080::zone::
local_cluster::172.31.5.12:8080::sub_zone::
local_cluster::172.31.5.12:8080::canary::false
local_cluster::172.31.5.12:8080::priority::0
local_cluster::172.31.5.12:8080::success_rate::-1.0
local_cluster::172.31.5.12:8080::local_origin_success_rate::-1.0
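The /clusters output is plain text with "::"-separated fields. It contains two kinds of lines:

- Cluster-wide settings, written as cluster::setting::value. The circuit-breaker limits sit under default_priority and high_priority.
- Per-host stats, written as cluster::host:port::stat::value.

The Python sketch below parses that text into a nested dict. parse_clusters and healthy_hosts are hypothetical helper names. The sketch assumes IPv4 host addresses, because an IPv6 address contains "::" itself and would break the split. Envoy can also return this listing as JSON via /clusters?format=json, which is the sturdier choice for real scripts.

```python
from typing import Dict, List

PRIORITIES = ("default_priority", "high_priority")


def parse_clusters(text: str) -> Dict[str, dict]:
    """Parse /clusters text into {cluster: {"settings": {...}, "hosts": {host: {stat: value}}}}.

    Assumes IPv4 host:port addresses, since an IPv6 address would itself contain "::".
    """
    clusters: Dict[str, dict] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        parts = line.split("::")
        entry = clusters.setdefault(parts[0], {"settings": {}, "hosts": {}})
        if len(parts) == 3:
            # cluster::setting::value, e.g. added_via_api::false
            entry["settings"][parts[1]] = parts[2]
        elif len(parts) == 4 and parts[1] in PRIORITIES:
            # cluster::default_priority::max_connections::1024 (circuit-breaker limits)
            entry["settings"][f"{parts[1]}.{parts[2]}"] = parts[3]
        elif len(parts) == 4:
            # cluster::host:port::stat::value (value may be empty, e.g. hostname::)
            entry["hosts"].setdefault(parts[1], {})[parts[2]] = parts[3]
    return clusters


def healthy_hosts(clusters: Dict[str, dict], name: str) -> List[str]:
    """Hosts of one cluster whose health_flags stat reads "healthy"."""
    hosts = clusters.get(name, {}).get("hosts", {})
    return [h for h, stats in hosts.items() if stats.get("health_flags") == "healthy"]
```

Applied to the output above, healthy_hosts(parse_clusters(text), "local_cluster") would list both 172.31.5.11:8080 and 172.31.5.12:8080.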

7、layered-runtime

Three services:
envoy: the front proxy, address 172.31.14.2
webserver01: the first backend service, address 172.31.14.11
webserver02: the second backend service, address 172.31.14.12

envoy.yaml

admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

layered_runtime:
  layers:
  - name: static_layer_0
    static_layer:
      health_check:
        min_interval: 5
  - name: admin_layer_0
    admin_layer: {}

static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: web_service_1
              domains: ["*.ik8s.io", "ik8s.io"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: local_cluster }
            - name: web_service_2
              domains: ["*.magedu.com", "magedu.com"]
              routes:
              - match: { prefix: "/" }
                redirect:
                  host_redirect: "www.ik8s.io"
          http_filters:
          - name: envoy.filters.http.router

  clusters:
  - name: local_cluster
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: local_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 172.31.14.11, port_value: 8080 }
        - endpoint:
            address:
              socket_address: { address: 172.31.14.12, port_value: 8080 }
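To make the layer semantics concrete, here is a small Python model of how runtime layers resolve a key. It illustrates the behaviour and is not Envoy's code. The nested static_layer YAML is flattened into dotted keys, and for each key the last layer that defines it wins. The helper names flatten and resolve are hypothetical. As a simplification, scalars are stringified with str().

```python
from typing import Any, Dict, List, Optional, Tuple

# A layer is (name, flat key -> string value); order matters: later layers win.
Layer = Tuple[str, Dict[str, str]]


def flatten(tree: Dict[str, Any], prefix: str = "") -> Dict[str, str]:
    """Turn nested static_layer YAML into dotted keys, e.g. health_check.min_interval."""
    flat: Dict[str, str] = {}
    for key, value in tree.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, path + "."))
        else:
            flat[path] = str(value)  # simplification: scalars rendered with str()
    return flat


def resolve(layers: List[Layer], key: str) -> Tuple[Optional[str], List[str]]:
    """Return (final_value, per-layer values); a layer without the key reports ""."""
    per_layer = [values.get(key, "") for _, values in layers]
    final: Optional[str] = None
    for _, values in layers:
        if key in values:
            final = values[key]  # a later layer overrides any earlier one
    return final, per_layer
```

With the configuration above, admin_layer_0 is empty at startup, so health_check.min_interval resolves to the static value "5". An override written into admin_layer_0 would take precedence, because that layer comes last.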

docker-compose.yaml

version: '3.3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.20.0
    environment:
      - ENVOY_UID=0
    volumes:
    - ./envoy.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.14.2
        aliases:
        - front-proxy
    depends_on:
    - webserver01
    - webserver02

  webserver01:
    image: ikubernetes/demoapp:v1.0
    environment:
      - PORT=8080
    hostname: webserver01
    networks:
      envoymesh:
        ipv4_address: 172.31.14.11
        aliases:
        - webserver01

  webserver02:
    image: ikubernetes/demoapp:v1.0
    environment:
      - PORT=8080
    hostname: webserver02
    networks:
      envoymesh:
        ipv4_address: 172.31.14.12
        aliases:
        - webserver02

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
        - subnet: 172.31.14.0/24

Verification

root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/layered-runtime# docker-compose up

In another (cloned) terminal window, query the runtime values:

root@test:/apps/servicemesh_in_practise-develop/Envoy-Basics/layered-runtime# curl 172.31.14.2:9901/runtime
{
  "entries": {
    "health_check.min_interval": {
      "final_value": "5",
      "layer_values": [
        "5",
        ""
      ]
    }
  },
  "layers": [
    "static_layer_0",
    "admin_layer_0"
  ]
}
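In the /runtime response, "layer_values" is listed in the same order as "layers". The Python sketch below pairs them up and shows how an admin-layer override could be written with Envoy's POST /runtime_modify?key=value endpoint. That endpoint works here only because the bootstrap declares an admin_layer. The helper names and the ADMIN constant are hypothetical; the address is taken from docker-compose.yaml.

```python
import json
import urllib.parse
import urllib.request
from typing import Dict, List, Tuple

ADMIN = "http://172.31.14.2:9901"  # hypothetical constant: envoy admin address from docker-compose.yaml


def get_runtime(admin: str = ADMIN) -> dict:
    """GET /runtime and decode the JSON shown above."""
    with urllib.request.urlopen(f"{admin}/runtime", timeout=5) as resp:
        return json.load(resp)


def runtime_modify(overrides: Dict[str, str], admin: str = ADMIN) -> int:
    """POST /runtime_modify?key=value...; an empty value removes an override added this way."""
    query = urllib.parse.urlencode(overrides)
    req = urllib.request.Request(f"{admin}/runtime_modify?{query}", data=b"", method="POST")
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.status


def layer_report(runtime: dict) -> Dict[str, Tuple[str, List[Tuple[str, str]]]]:
    """Map each key to (final_value, [(layer_name, value_in_that_layer), ...])."""
    layers = runtime.get("layers", [])
    report = {}
    for key, entry in runtime.get("entries", {}).items():
        pairs = list(zip(layers, entry.get("layer_values", [])))
        report[key] = (entry.get("final_value", ""), pairs)
    return report
```

For example, calling runtime_modify({"health_check.min_interval": "10"}) and then layer_report(get_runtime()) should show the admin layer's value becoming the final value. Passing an empty string for the key removes that override again.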

Reference: MageEdu (马哥教育)