I. Docker versions supported by each Kubernetes release

Kubernetes 1.9 <-- Docker 1.11.2 to 1.13.1 and 17.03.x
Kubernetes 1.8 <-- Docker 1.11.2 to 1.13.1 and 17.03.x
Kubernetes 1.7 <-- Docker 1.10.3, 1.11.2, 1.12.6
Kubernetes 1.6 <-- Docker 1.10.3, 1.11.2, 1.12.6
Kubernetes 1.5 <-- Docker 1.10.3, 1.11.2, 1.12.3

Sources (the external dependency section of each release's changelog):

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.5.md#external-dependency-version-information
https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.6.md#external-dependency-version-information
https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.7.md#external-dependency-version-information
https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.8.md#external-dependencies
https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.9.md#external-dependencies

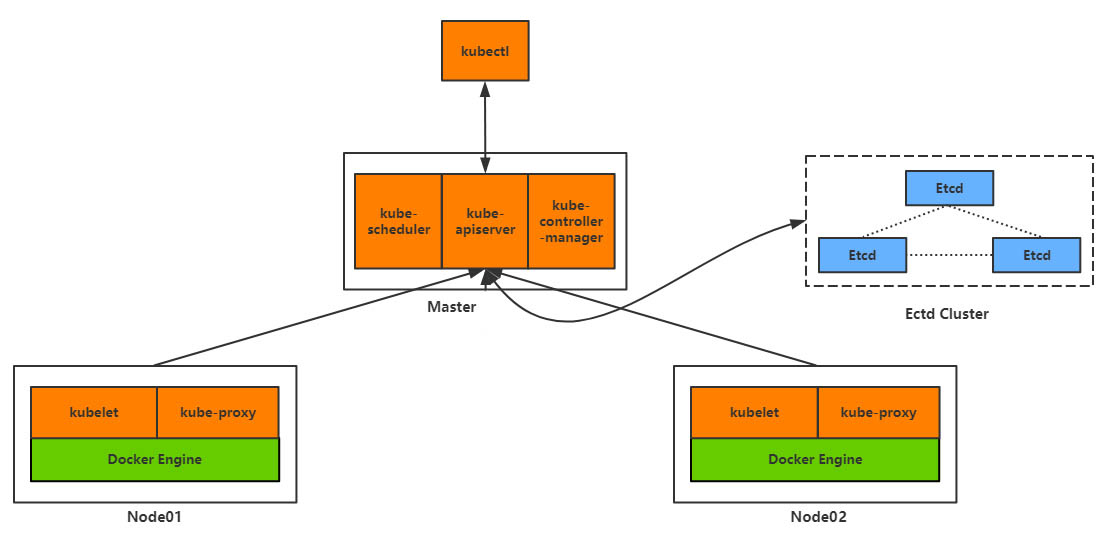
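If you script the installation, the list above can double as a pre-flight check. Below is a minimal shell sketch. The function name `docker_supported_for_k8s` is hypothetical, and the function encodes only the versions listed above. It reads "1.11.2 to 1.13.1" as the 1.11.2, 1.12.x and 1.13.x releases.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight helper: succeed (return 0) if the Docker version
# appears in the supported list above for the given Kubernetes minor release.
docker_supported_for_k8s() {
  local k8s_minor="$1" docker_version="$2"
  case "$k8s_minor" in
    1.8|1.9)
      case "$docker_version" in
        1.11.2|1.12.*|1.13.0|1.13.1|17.03.*) return 0 ;;
      esac
      ;;
    1.6|1.7)
      case "$docker_version" in
        1.10.3|1.11.2|1.12.6) return 0 ;;
      esac
      ;;
    1.5)
      case "$docker_version" in
        1.10.3|1.11.2|1.12.3) return 0 ;;
      esac
      ;;
  esac
  # Unknown Kubernetes release or unlisted Docker version.
  return 1
}
```

Example on a host where Docker is already installed: `docker_supported_for_k8s 1.8 "$(docker version --format '{{.Server.Version}}')" || echo "unsupported Docker version"`.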

II. Kubernetes cluster deployment architecture

III. Kubernetes cluster environment planning

1. System environment

[root@k8s-master ~]# cat /etc/redhat-release
CentOS Linux release 7.2.1511 (Core)
[root@k8s-master ~]# uname -r
3.10.0-327.el7.x86_64
[root@k8s-master ~]# systemctl status firewalld.service
● firewalld.service - firewalld - dynamic firewall daemon
   Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)
   Active: inactive (dead)
[root@k8s-master ~]# getenforce
Disabled

2. Server plan

| Role | Hostname | IP |
|---|---|---|
| Master, etcd, registry | k8s-master | 10.0.0.211 |
| Node1 | k8s-node-1 | 10.0.0.212 |
| Node2 | k8s-node-2 | 10.0.0.213 |

3. Identical /etc/hosts entries on all hosts

echo '
10.0.0.211 k8s-master
10.0.0.211 etcd
10.0.0.211 registry
10.0.0.212 k8s-node-1
10.0.0.213 k8s-node-2' >> /etc/hosts
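Appending with `>>` creates duplicate lines if the step is run twice. The sketch below can be re-run safely. It adds an entry only when the name is not already mapped. The function names are hypothetical, and the helper does not correct a name that already points at the wrong IP.

```bash
# Append "IP NAME" to a hosts file unless NAME already appears there
# (comment lines are ignored), so the step can be repeated safely.
add_host_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# The entries from the server plan above.
add_cluster_hosts() {
  local f="${1:-/etc/hosts}"
  add_host_entry "$f" 10.0.0.211 k8s-master
  add_host_entry "$f" 10.0.0.211 etcd
  add_host_entry "$f" 10.0.0.211 registry
  add_host_entry "$f" 10.0.0.212 k8s-node-1
  add_host_entry "$f" 10.0.0.213 k8s-node-2
}
```

Run `add_cluster_hosts /etc/hosts` on each of the three hosts.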

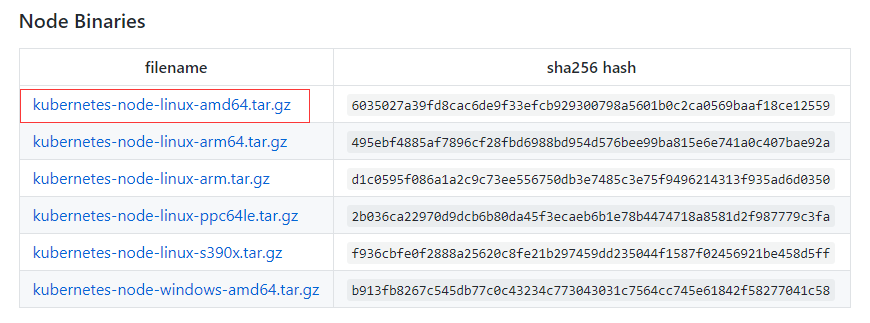

4. Download the Kubernetes (K8s) binaries

https://github.com/kubernetes/kubernetes/releases

The Kubernetes version used here is v1.8.3:

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.8.md#v183

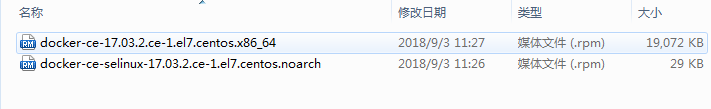

The Docker version is 17.03-ce.

The etcd version is v3.1.18, downloaded from:

https://github.com/coreos/etcd/releases/

IV. Offline deployment of the Kubernetes cluster

1. Deploy Docker

# Remove any older Docker packages first
yum remove docker docker-common docker-selinux docker-engine
# Upload the offline Docker packages, then install them
yum install docker-ce-selinux-17.03.2.ce-1.el7.centos.noarch.rpm docker-ce-17.03.2.ce-1.el7.centos.x86_64.rpm -y

Enable the docker service at boot and start it

systemctl enable docker.service

systemctl start docker.service

Switch Docker's registry mirror to the DaoCloud mirror in China

curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://a58c8480.m.daocloud.io

2. Deploy etcd v3

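Steps for installing a component from binaries on CentOS 7:

① Copy the binaries into /usr/bin

② Create a systemd service unit

③ Create the configuration file whose parameters the unit reads

④ Enable the service at boot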

# Upload etcd-v3.1.18-linux-amd64.tar.gz, then:
tar xf etcd-v3.1.18-linux-amd64.tar.gz
cd etcd-v3.1.18-linux-amd64/
mv etcd etcdctl /usr/bin/

① Create the /etc/etcd/etcd.conf configuration file

mkdir -p /etc/etcd/
mkdir -p /var/lib/etcd
[root@k8s-master ~]# cat /etc/etcd/etcd.conf
ETCD_NAME="ETCD Server"
ETCD_DATA_DIR="/var/lib/etcd/"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.211:2379"

② Create the etcd.service systemd unit

[root@k8s-master ~]# cat /etc/systemd/system/etcd.service
[Unit]
Description=etcd.service
[Service]
Type=notify
TimeoutStartSec=0
Restart=always
WorkingDirectory=/var/lib/etcd
EnvironmentFile=-/etc/etcd/etcd.conf
ExecStart=/usr/bin/etcd
[Install]
WantedBy=multi-user.target

Note: WorkingDirectory is the etcd data directory, which was created in step ① above.

③ Enable etcd at boot and start it

systemctl daemon-reload

systemctl enable etcd.service

systemctl start etcd.service

④ Verify that etcd is installed and healthy

[root@k8s-master ~]# etcdctl cluster-health
member 8e9e05c52164694d is healthy: got healthy result from http://10.0.0.211:2379
cluster is healthy
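kube-apiserver must start after etcd, so a scripted install may want to wait for this check to pass rather than run it once by hand. Below is a sketch that assumes `etcdctl` uses its default v2 API, as in the command above. `wait_for_etcd` and its retry count are hypothetical.

```bash
# Poll "etcdctl cluster-health" until it reports a healthy cluster,
# giving up after N attempts (default 30) spaced one second apart.
wait_for_etcd() {
  local tries="${1:-30}"
  while [ "$tries" -gt 0 ]; do
    if etcdctl cluster-health 2>/dev/null | grep -q '^cluster is healthy'; then
      return 0
    fi
    tries=$((tries - 1))
    sleep 1
  done
  return 1
}
```

For example: `wait_for_etcd 30 && systemctl start kube-apiserver.service`.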

3. Deploy the K8s master components

# Upload kubernetes-server-linux-amd64.tar.gz, then:
tar xf kubernetes-server-linux-amd64.tar.gz
mkdir -p /app/kubernetes/{bin,cfg}
mv kubernetes/server/bin/{kube-apiserver,kube-scheduler,kube-controller-manager,kubectl} /app/kubernetes/bin

① Configure the kube-apiserver service

Configuration file: vim /app/kubernetes/cfg/kube-apiserver

# Log to standard error
KUBE_LOGTOSTDERR="--logtostderr=true"
# Log verbosity
KUBE_LOG_LEVEL="--v=4"
# etcd server address
KUBE_ETCD_SERVERS="--etcd-servers=http://10.0.0.211:2379"
# Address the API server listens on
KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"
# Port the API server listens on
KUBE_API_PORT="--insecure-port=8080"
# API server address advertised to cluster members
KUBE_ADVERTISE_ADDR="--advertise-address=10.0.0.211"
# Whether containers may request privileged mode (default false)
KUBE_ALLOW_PRIV="--allow-privileged=false"
# IP range assigned to cluster Services
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.10.10.0/24"

systemd unit: vim /lib/systemd/system/kube-apiserver.service

[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
[Service]
EnvironmentFile=-/app/kubernetes/cfg/kube-apiserver
#ExecStart=/app/kubernetes/bin/kube-apiserver ${KUBE_APISERVER_OPTS}
ExecStart=/app/kubernetes/bin/kube-apiserver ${KUBE_LOGTOSTDERR} ${KUBE_LOG_LEVEL} ${KUBE_ETCD_SERVERS} ${KUBE_API_ADDRESS} ${KUBE_API_PORT} ${KUBE_ADVERTISE_ADDR} ${KUBE_ALLOW_PRIV} ${KUBE_SERVICE_ADDRESSES}
Restart=on-failure
[Install]
WantedBy=multi-user.target
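When a unit like this misbehaves, it helps to see the command line that its `${...}` references expand to. The sketch below approximates that by sourcing the config file in a subshell. `preview_exec` is a hypothetical helper, and shell sourcing only approximates systemd's own EnvironmentFile parsing. One known difference: systemd turns an unset `${VAR}` into an empty argument, whereas this preview simply drops it.

```bash
# Print an approximation of the command line systemd builds from an
# EnvironmentFile plus a list of ${VAR} references (simple KEY="value" lines).
preview_exec() {
  local env_file="$1" binary="$2"
  shift 2
  (
    # Subshell: the sourced variables do not leak into the caller.
    . "$env_file"
    cmd="$binary"
    for var in "$@"; do
      eval "val=\${$var-}"
      # Unset or empty variables are omitted from the preview.
      if [ -n "$val" ]; then
        cmd="$cmd $val"
      fi
    done
    printf '%s\n' "$cmd"
  )
}
```

For example, `preview_exec /app/kubernetes/cfg/kube-apiserver /app/kubernetes/bin/kube-apiserver KUBE_LOGTOSTDERR KUBE_LOG_LEVEL KUBE_ETCD_SERVERS KUBE_API_ADDRESS KUBE_API_PORT KUBE_ADVERTISE_ADDR KUBE_ALLOW_PRIV KUBE_SERVICE_ADDRESSES` should print the same kube-apiserver command line that appears in the `ps` output later in this section.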

Start the service and enable it at boot

systemctl daemon-reload
systemctl enable kube-apiserver.service
systemctl start kube-apiserver.service

② Configure the kube-scheduler service

Configuration file:

vim /app/kubernetes/cfg/kube-scheduler
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=4"
KUBE_MASTER="--master=10.0.0.211:8080"
KUBE_LEADER_ELECT="--leader-elect"

systemd unit:

vim /lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes
[Service]
EnvironmentFile=-/app/kubernetes/cfg/kube-scheduler
ExecStart=/app/kubernetes/bin/kube-scheduler ${KUBE_LOGTOSTDERR} ${KUBE_LOG_LEVEL} ${KUBE_MASTER} ${KUBE_LEADER_ELECT}
Restart=on-failure
[Install]
WantedBy=multi-user.target

Start the service and enable it at boot

systemctl daemon-reload
systemctl enable kube-scheduler.service
systemctl start kube-scheduler.service

③ Configure kube-controller-manager

Configuration file:

cat /app/kubernetes/cfg/kube-controller-manager
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=4"
KUBE_MASTER="--master=10.0.0.211:8080"

systemd unit (it references only variables defined in the configuration file above):

cat /lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes
[Service]
EnvironmentFile=-/app/kubernetes/cfg/kube-controller-manager
ExecStart=/app/kubernetes/bin/kube-controller-manager ${KUBE_LOGTOSTDERR} ${KUBE_LOG_LEVEL} ${KUBE_MASTER}
Restart=on-failure
[Install]
WantedBy=multi-user.target

Start the service and enable it at boot

systemctl daemon-reload
systemctl enable kube-controller-manager.service
systemctl start kube-controller-manager.service

All master components are now running. Start order matters: start etcd first, then kube-apiserver. The other components can start in any order.

Check the running master component processes

[root@k8s-master ~]# ps -ef|grep kube
root 6217 1 2 15:54 ? 00:00:19 /app/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=http://10.0.0.211:2379 --insecure-bind-address=0.0.0.0 --insecure-port=8080 --advertise-address=10.0.0.211 --allow-privileged=false --service-cluster-ip-range=10.10.10.0/24
root 6304 1 1 16:01 ? 00:00:04 /app/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=10.0.0.211:8080 --leader-elect
root 6369 1 2 16:05 ? 00:00:02 /app/kubernetes/bin/kube-controller-manager --logtostderr=true --v=4 --master=10.0.0.211:8080
root 6376 1926 0 16:07 pts/0 00:00:00 grep --color=auto kube

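Verify that the master is working. Until PATH is configured at the end of this step, call kubectl as /app/kubernetes/bin/kubectl.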

[root@k8s-master ~]# kubectl get componentstatuses
NAME                 STATUS    MESSAGE              ERROR
etcd-0               Healthy   {"health": "true"}
controller-manager   Healthy   ok
scheduler            Healthy   ok
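To turn this check into something a script can act on, test the STATUS column directly. The sketch below uses a hypothetical name, `all_components_healthy`. It assumes the default table output shown above, with the header on the first line.

```bash
# Read "kubectl get componentstatuses" output on stdin. Succeed only if at
# least one component is listed and every STATUS column reads "Healthy".
all_components_healthy() {
  awk 'NR > 1 && $2 != "Healthy" { bad = 1 } END { exit (bad || NR < 2) }'
}
```

For example: `kubectl get componentstatuses | all_components_healthy && echo "master OK"`.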

If a service fails to start, check its logs:

[root@k8s-master ~]# journalctl -u kube-apiserver.service
-- Logs begin at Mon 2018-09-03 14:57:42 CST, end at Mon 2018-09-03 16:09:06 CST. --
Sep 03 15:54:00 k8s-master systemd[1]: Started Kubernetes API Server.
Sep 03 15:54:00 k8s-master systemd[1]: Starting Kubernetes API Server...
Sep 03 15:54:00 k8s-master kube-apiserver[6217]: I0903 15:54:00.924400 6217 flags.go:52] FLAG: --addre
Sep 03 15:54:00 k8s-master kube-apiserver[6217]: I0903 15:54:00.924847 6217 flags.go:52] FLAG: --admis
Sep 03 15:54:00 k8s-master kube-apiserver[6217]: I0903 15:54:00.924866 6217 flags.go:52] FLAG: --admis
Sep 03 15:54:00 k8s-master kube-apiserver[6217]: I0903 15:54:00.924873 6217 flags.go:52] FLAG: --adver
Sep 03 15:54:00 k8s-master kube-apiserver[6217]: I0903 15:54:00.924878 6217 flags.go:52] FLAG: --allow
Sep 03 15:54:00 k8s-master kube-apiserver[6217]: I0903 15:54:00.924884 6217 flags.go:52] FLAG: --alsol
Sep 03 15:54:00 k8s-master kube-apiserver[6217]: I0903 15:54:00.924904 6217 flags.go:52] FLAG: --anony
Sep 03 15:54:00 k8s-master kube-apiserver[6217]: I0903 15:54:00.924908 6217 flags.go:52] FLAG: --apise
Sep 03 15:54:00 k8s-master kube-apiserver[6217]: I0903 15:54:00.924914 6217 flags.go:52] FLAG: --audit
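At `--v=4` the full log is verbose. Each glog line starts with a severity letter (I, W, E or F) followed by the month and day, as in `I0903` above. Error and fatal lines can therefore be pulled out with a filter like the one below. `glog_errors` is a hypothetical name.

```bash
# Keep only glog lines at error (E) or fatal (F) severity from journalctl
# output on stdin; "]: " is the end of the "process[pid]: " journal prefix.
glog_errors() {
  grep -E ']: [EF][0-9]{4} '
}
```

For example: `journalctl -u kube-apiserver.service --no-pager | glog_errors`.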

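Add the Kubernetes binaries to PATH. The single quotes keep $PATH unexpanded until /etc/profile is sourced:

echo 'export PATH=$PATH:/app/kubernetes/bin' >> /etc/profile
source /etc/profile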

4. Deploy the K8s node components

# Upload kubernetes-node-linux-amd64.tar.gz, then:
tar xf kubernetes-node-linux-amd64.tar.gz
mkdir -p /app/kubernetes/{bin,cfg}
mv kubernetes/node/bin/{kubelet,kube-proxy} /app/kubernetes/bin/

① Configure the kubelet service

Create the kubeconfig file, which kubelet uses to connect to the master's kube-apiserver:

cat /app/kubernetes/cfg/kubelet.kubeconfig
apiVersion: v1
kind: Config
clusters:
- cluster:
    server: http://10.0.0.211:8080
  name: local
contexts:
- context:
    cluster: local
  name: local
current-context: local

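Create the kubelet configuration file. The values shown are for k8s-node-1; on k8s-node-2, use 10.0.0.213 for --address and --hostname-override: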

cat /app/kubernetes/cfg/kubelet
# Log to standard error
KUBE_LOGTOSTDERR="--logtostderr=true"
# Log verbosity
KUBE_LOG_LEVEL="--v=4"
# IP address the kubelet serves on
NODE_ADDRESS="--address=10.0.0.212"
# Port the kubelet serves on
NODE_PORT="--port=10250"
# Custom node name
NODE_HOSTNAME="--hostname-override=10.0.0.212"
# kubeconfig path, used to connect to the API server
KUBELET_KUBECONFIG="--kubeconfig=/app/kubernetes/cfg/kubelet.kubeconfig"
# Whether containers may request privileged mode (default false)
KUBE_ALLOW_PRIV="--allow-privileged=false"
# Cluster DNS settings
KUBELET_DNS_IP="--cluster-dns=10.10.10.2"
KUBELET_DNS_DOMAIN="--cluster-domain=cluster.local"
# Do not fail when swap is enabled
KUBELET_SWAP="--fail-swap-on=false"
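Only the node IP differs between k8s-node-1 and k8s-node-2, so the file can be generated rather than edited by hand. The sketch below reproduces the values above without the comments. `render_kubelet_env` is a hypothetical helper.

```bash
# Print the kubelet EnvironmentFile for one node; the node IP is used for
# both --address and --hostname-override, as in the file above.
render_kubelet_env() {
  local node_ip="$1"
  cat <<EOF
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=4"
NODE_ADDRESS="--address=${node_ip}"
NODE_PORT="--port=10250"
NODE_HOSTNAME="--hostname-override=${node_ip}"
KUBELET_KUBECONFIG="--kubeconfig=/app/kubernetes/cfg/kubelet.kubeconfig"
KUBE_ALLOW_PRIV="--allow-privileged=false"
KUBELET_DNS_IP="--cluster-dns=10.10.10.2"
KUBELET_DNS_DOMAIN="--cluster-domain=cluster.local"
KUBELET_SWAP="--fail-swap-on=false"
EOF
}
```

On k8s-node-2, for example: `render_kubelet_env 10.0.0.213 > /app/kubernetes/cfg/kubelet`. The kube-proxy `--hostname-override` needs the same per-node change.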

systemd unit:

cat /lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service
[Service]
EnvironmentFile=-/app/kubernetes/cfg/kubelet
ExecStart=/app/kubernetes/bin/kubelet ${KUBE_LOGTOSTDERR} ${KUBE_LOG_LEVEL} ${NODE_ADDRESS} ${NODE_PORT} ${NODE_HOSTNAME} ${KUBELET_KUBECONFIG} ${KUBE_ALLOW_PRIV} ${KUBELET_DNS_IP} ${KUBELET_DNS_DOMAIN} ${KUBELET_SWAP}
Restart=on-failure
KillMode=process
[Install]
WantedBy=multi-user.target

Start the service and enable it at boot

systemctl daemon-reload

systemctl enable kubelet.service

systemctl start kubelet.service

② Configure the kube-proxy service

Configuration file (on k8s-node-2, use 10.0.0.213 for --hostname-override):

mkdir -p /app/kubernetes/cfg/
cat /app/kubernetes/cfg/kube-proxy
# Log to standard error
KUBE_LOGTOSTDERR="--logtostderr=true"
# Log verbosity
KUBE_LOG_LEVEL="--v=4"
# Custom node name
NODE_HOSTNAME="--hostname-override=10.0.0.212"
# API server address
KUBE_MASTER="--master=http://10.0.0.211:8080"

systemd unit:

cat /lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target
[Service]
EnvironmentFile=-/app/kubernetes/cfg/kube-proxy
ExecStart=/app/kubernetes/bin/kube-proxy ${KUBE_LOGTOSTDERR} ${KUBE_LOG_LEVEL} ${NODE_HOSTNAME} ${KUBE_MASTER}
Restart=on-failure
[Install]
WantedBy=multi-user.target

Start the service and enable it at boot

systemctl daemon-reload
systemctl enable kube-proxy
systemctl restart kube-proxy
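Once kubelet and kube-proxy are running, each node should register with the API server under its `--hostname-override` value, which is its IP here. The sketch below checks this from the master. `node_is_ready` is a hypothetical helper that reads `kubectl get nodes` output on stdin.

```bash
# Succeed if the named node is listed with STATUS exactly "Ready"
# (a node reported as "NotReady" does not match).
node_is_ready() {
  awk -v n="$1" '$1 == n && $2 == "Ready" { ok = 1 } END { exit !ok }'
}
```

For example: `kubectl get nodes | node_is_ready 10.0.0.212 && echo "k8s-node-1 is Ready"`.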

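③ Deploy the Flannel network

Download and install. The log directory is created up front because the --log_dir set in the configuration below must already exist:

wget https://github.com/coreos/flannel/releases/download/v0.9.1/flannel-v0.9.1-linux-amd64.tar.gz
tar xf flannel-v0.9.1-linux-amd64.tar.gz
cp flanneld mk-docker-opts.sh /usr/bin/
mkdir -p /app/flannel/conf/ /var/log/k8s/flannel/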

Configure kernel parameters so bridged traffic passes through iptables/ip6tables, and minimize swapping

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness=0
EOF
sysctl --system

systemd unit. ExecStart and ExecStartPost point at the two files copied to /usr/bin above, since the release tarball ships no flanneld-start wrapper:

cat /usr/lib/systemd/system/flanneld.service
[Unit]
Description=Flanneld overlay address etcd agent
After=network.target
After=network-online.target
Wants=network-online.target
After=etcd.service
Before=docker.service
[Service]
Type=notify
EnvironmentFile=/app/flannel/conf/flanneld
EnvironmentFile=-/etc/sysconfig/docker-network
ExecStart=/usr/bin/flanneld $FLANNEL_OPTIONS
ExecStartPost=/usr/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker
Restart=on-failure
[Install]
WantedBy=multi-user.target
WantedBy=docker.service
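One gap worth checking: `mk-docker-opts.sh` only writes `DOCKER_NETWORK_OPTIONS` (the `--bip`, `--mtu` and related flags) to `/run/flannel/docker`. Docker applies them only if its own unit reads that file, and the stock docker-ce 17.03 unit may not. The drop-in below wires the two together. It assumes `systemctl cat docker` shows a plain `ExecStart=/usr/bin/dockerd`. The function name and the `flannel.conf` file name are my own choices.

```bash
# Write a systemd drop-in so dockerd starts with the options flannel
# generated. The target directory is a parameter so it can be tried on a
# scratch directory first.
write_docker_flannel_dropin() {
  local dir="${1:-/etc/systemd/system/docker.service.d}"
  mkdir -p "$dir"
  # Quoted 'EOF': $DOCKER_NETWORK_OPTIONS is written literally for systemd.
  cat > "$dir/flannel.conf" <<'EOF'
[Service]
EnvironmentFile=-/run/flannel/docker
ExecStart=
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS
EOF
}
```

Run it on every host that runs flanneld, then run `systemctl daemon-reload` before restarting Docker in step ④. The empty `ExecStart=` line clears the packaged command, because systemd accepts only one ExecStart for a non-oneshot service.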

Configuration file (needed on both the master and the nodes). The flanneld binary does not read FLANNEL_ETCD_KEY itself, so the same key is passed through --etcd-prefix:

cat /app/flannel/conf/flanneld
# Flanneld configuration options
# etcd url location. Point this to the server where etcd runs
FLANNEL_ETCD_ENDPOINTS="http://10.0.0.211:2379"
# etcd config key. This is the configuration key that flannel queries
# For address range assignment
FLANNEL_ETCD_KEY="/k8s/network"
# Any additional options that you want to pass
#FLANNEL_OPTIONS=""
FLANNEL_OPTIONS="--logtostderr=false --log_dir=/var/log/k8s/flannel/ --etcd-endpoints=http://10.0.0.211:2379 --etcd-prefix=/k8s/network"

Start the service and enable it at boot

On the master only, first store the network configuration in etcd:

etcdctl set /k8s/network/config '{ "Network": "172.16.0.0/16" }'

Then, on the master and on every node:

systemctl daemon-reload

systemctl enable flanneld.service

systemctl start flanneld.service
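The pod network written to etcd must not collide with the Service range given to kube-apiserver (10.10.10.0/24). In addition, the kubelet `--cluster-dns` address (10.10.10.2) must fall inside that Service range. Below is a sketch of pure-shell IPv4 helpers for checking such a plan. The function names are hypothetical. The host network 10.0.0.0/24 used in the example afterwards is an assumption, because the article does not state the hosts' netmask.

```bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print the first and last address of a CIDR block as integers.
cidr_range() {
  local net="${1%/*}" len="${1#*/}" start size
  start=$(ip_to_int "$net")
  size=$(( 1 << (32 - len) ))
  start=$(( start - start % size ))   # align to the block boundary
  echo "$start $(( start + size - 1 ))"
}

# Succeed if the address lies inside the CIDR block.
ip_in_cidr() {
  local ip
  ip=$(ip_to_int "$1")
  set -- $(cidr_range "$2")
  [ "$ip" -ge "$1" ] && [ "$ip" -le "$2" ]
}

# Succeed if two CIDR blocks share any address.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  # Two ranges overlap iff each one starts before the other ends.
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}
```

With the plan in this article, `ip_in_cidr 10.10.10.2 10.10.10.0/24` should succeed. Both `cidrs_overlap 172.16.0.0/16 10.10.10.0/24` and `cidrs_overlap 172.16.0.0/16 10.0.0.0/24` should fail, meaning no overlap.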

Verify the service:

journalctl -u flanneld |grep 'Lease acquired'
Sep 03 17:58:02 k8s-node-1 flanneld[9658]: I0903 17:58:02.862074 9658 manager.go:250] Lease acquired: 10.0.11.0/24
Sep 03 18:49:47 k8s-node-1 flanneld[11731]: I0903 18:49:47.882891 11731 manager.go:250] Lease acquired: 10.0.11.0/24

④ After Flannel is running, restart Docker first and then the Kubernetes components

On the master:

systemctl restart docker.service
systemctl restart kube-apiserver.service
systemctl restart kube-controller-manager.service
systemctl restart kube-scheduler.service

On the nodes:

systemctl restart docker.service

systemctl restart kubelet.service

systemctl restart kube-proxy.service

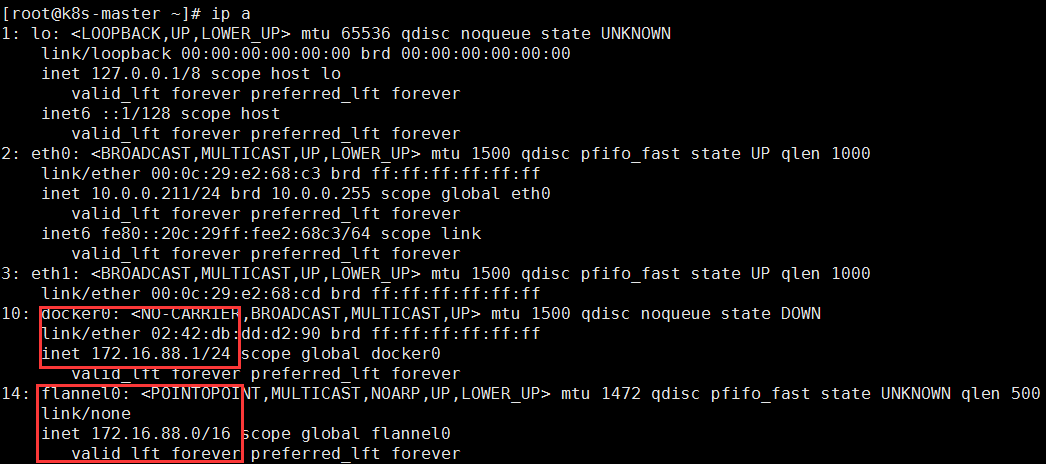

Checking the IP addresses on the master

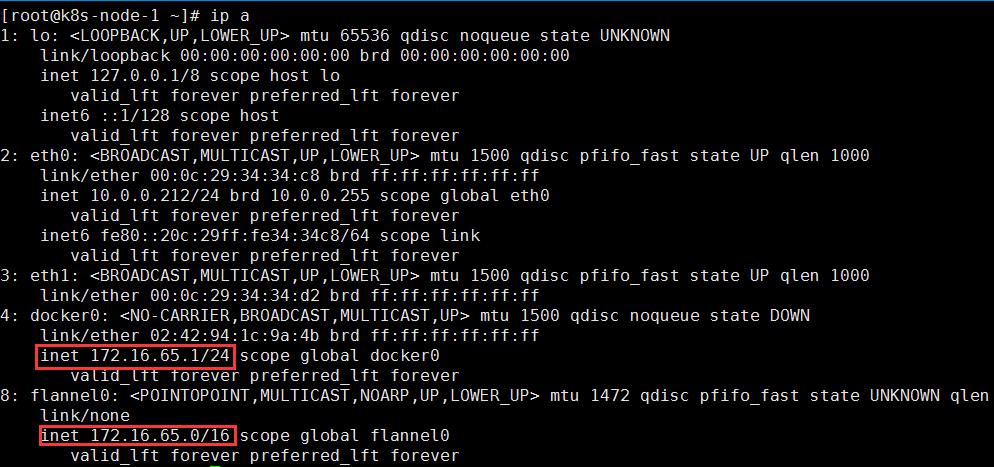

Checking the IP addresses on a node

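Summary: inspect the docker0 and flannel0 interfaces on each host. On every host, docker0 and flannel0 should be in the same subnet, and each host should have its own subnet carved out of 172.16.0.0/16. For example, the master gets 172.16.88.0/24, Node1 gets 172.16.65.0/24, and so on.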
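The check in the summary can be scripted. flanneld records the subnet it leased in `/run/flannel/subnet.env`, its default `--subnet-file`. `mk-docker-opts.sh` passes that same value to Docker as `--bip`, so on a correctly wired host docker0's address should equal `FLANNEL_SUBNET`. Below is a minimal sketch with hypothetical function names.

```bash
# Read FLANNEL_SUBNET from flanneld's subnet file
# (default /run/flannel/subnet.env).
flannel_subnet() {
  sed -n 's/^FLANNEL_SUBNET=//p' "${1:-/run/flannel/subnet.env}"
}

# Succeed if docker0's address/prefix (second argument) equals the leased
# flannel subnet, e.g. 172.16.88.1/24 on both sides.
docker0_matches_flannel() {
  local want
  want=$(flannel_subnet "$1")
  [ -n "$want" ] && [ "$want" = "$2" ]
}
```

On each host: `docker0_matches_flannel /run/flannel/subnet.env "$(ip -o -4 addr show docker0 | awk '{print $4}')" && echo "docker0 matches the flannel lease"`.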