I. Introduction

```bash
# Create a Master node
kubeadm init

# Join a Node to the current cluster
kubeadm join <Master node IP and port>
```

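Deployment methods for production environments

① kubeadm

Kubeadm is a tool that provides `kubeadm init` and `kubeadm join` for quickly deploying a Kubernetes cluster.

Documentation: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

② Binary packages

Recommended: download the release binary packages from the official site and deploy each component manually to build the Kubernetes cluster.

Download: https://github.com/kubernetes/kubernetes/releases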

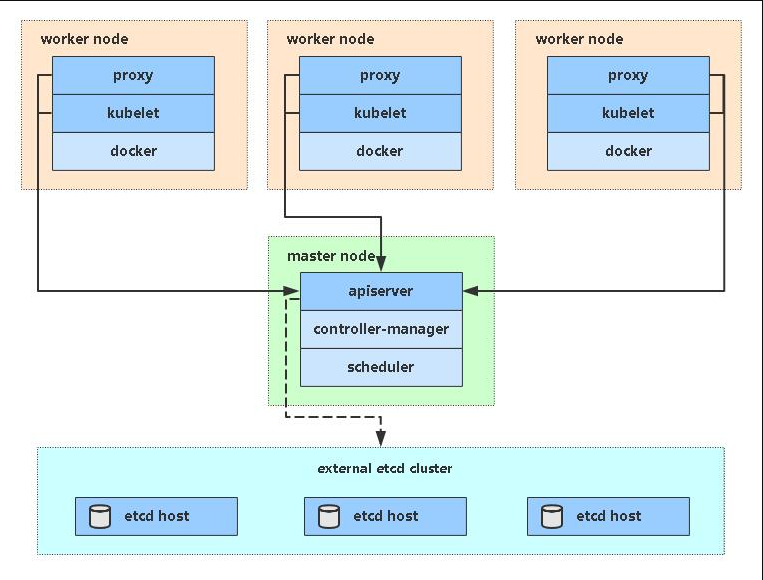

II. Kubernetes architecture diagram

III. Deploying a k8s cluster

1. Base environment

- Operating system: CentOS 7.x x86_64

- Hardware: 2 GB or more RAM, 2 or more CPUs, 30 GB or more disk

- Swap disabled

2. Server plan

| Hostname | IP |
|---|---|
| k8s-master | 192.168.56.61 |
| k8s-node1 | 192.168.56.62 |

3. System initialization

```bash
# Disable the firewall
systemctl stop firewalld
systemctl disable firewalld

# Disable SELinux
sed -i 's/enforcing/disabled/' /etc/selinux/config  # permanent
setenforce 0  # temporary

# Disable swap
swapoff -a  # temporary
# vim /etc/fstab  # permanent: comment out the swap entry

# Set the hostname
hostnamectl set-hostname <hostname>

# Add hosts entries on the master
cat >> /etc/hosts << EOF
192.168.56.61 k8s-master
192.168.56.62 k8s-node1
EOF

# Pass bridged IPv4 traffic to the iptables chains
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system  # apply

# Time synchronization
yum install ntpdate -y
ntpdate time.windows.com
```
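The permanent swap step above (`# vim /etc/fstab`) is done by hand. A minimal sketch that automates it, assuming a standard fstab where the third field is the filesystem type; `disable_swap_in_fstab` is a hypothetical helper name, not part of any tool:

```bash
#!/usr/bin/env bash
# Hypothetical helper: permanently disable swap by commenting out every
# active fstab entry whose filesystem type (3rd field) is "swap".
# The original file is kept as <fstab>.bak.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  cp -p "$fstab" "$fstab.bak" || return 1
  # Leave existing comment lines alone; prefix swap entries with '#'.
  # Writing back through ">" keeps the original file's inode and permissions.
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}
```

Usage: `disable_swap_in_fstab /etc/fstab && swapoff -a`; running it twice is harmless because already-commented lines are skipped.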

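4. Install Docker, kubeadm, kubelet and kubectl on all nodes

① Install Docker

```bash
wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
yum -y install docker-ce-18.06.1.ce-3.el7
systemctl enable docker && systemctl start docker
docker --version

# Configure a registry mirror
cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]
}
EOF
# Restart Docker so that daemon.json takes effect
systemctl restart docker
```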
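Docker refuses to start when `/etc/docker/daemon.json` is not valid JSON, so when several mirrors are listed it can be safer to generate the file than to hand-edit it. A minimal sketch; `write_docker_daemon_json` is a hypothetical name, and it writes only the `registry-mirrors` key used above:

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a daemon.json containing only "registry-mirrors".
# Usage: write_docker_daemon_json <output-file> <mirror-url>...
write_docker_daemon_json() {
  local out="$1"; shift
  local mirrors="" m
  # Build a comma-separated list of quoted URLs for the JSON array.
  for m in "$@"; do
    mirrors+="${mirrors:+, }\"${m}\""
  done
  cat > "$out" << EOF
{
  "registry-mirrors": [${mirrors}]
}
EOF
}
```

Usage: `write_docker_daemon_json /etc/docker/daemon.json https://b9pmyelo.mirror.aliyuncs.com && systemctl restart docker`.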

② Add the Alibaba Cloud YUM repository for Kubernetes

```bash
cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

③ Install kubeadm, kubelet and kubectl (pinned to version 1.18.0)

```bash
yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0
systemctl enable kubelet
```

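④ Deploy the Kubernetes Master

Run on the Master node:

```bash
kubeadm init \
  --apiserver-advertise-address=192.168.56.61 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.18.0 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16 \
  --ignore-preflight-errors=all
```

`--image-repository` points kubeadm at the Alibaba Cloud mirror because the default registry (k8s.gcr.io) is often unreachable from mainland China. `--pod-network-cidr` must match the Pod network configured in the network plugin in step 5.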

Alternatively, bootstrap from a configuration file:

```bash
vi kubeadm.conf
```

```yaml
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.0
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
```

```bash
kubeadm init --config kubeadm.conf --ignore-preflight-errors=all
```
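The Pod CIDR (10.244.0.0/16), the Service CIDR (10.96.0.0/12) and the node network must not overlap, otherwise routing between Pods, Services and hosts breaks. A pure-bash sketch to check this before running `kubeadm init`; the function names are hypothetical, and the node network is assumed to be 192.168.56.0/24 based on the server plan:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: check whether two IPv4 CIDR blocks overlap.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "start end" (inclusive integer range) for a CIDR such as 10.96.0.0/12.
cidr_range() {
  local ip="${1%/*}" bits="${1#*/}"
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  local start=$(( $(ip_to_int "$ip") & mask ))
  echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"
}

# Return 0 if the two CIDRs overlap, 1 otherwise.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}
```

Usage: `cidrs_overlap 10.244.0.0/16 10.96.0.0/12 && echo "pod and service CIDRs overlap"`; repeat for each pair (pod/node, service/node).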

⑤ Configure the kubectl tool

```bash
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
kubectl get nodes
```

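⑥ Join the Kubernetes Node(s)

On each Node, run (as root) the `kubeadm join` command printed at the end of the `kubeadm init` output:

```
Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.56.61:6443 --token 94kw30.b1gswshp2grv5vgd \
    --discovery-token-ca-cert-hash sha256:0497a78ea746f2c1f48d67f3dca9d65cb4010868f22f2a0bbefb101d74c6f057
```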

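The default token is valid for 24 hours; after it expires it can no longer be used, and a new token must be created as follows:

```bash
kubeadm token create
kubeadm token list

# Compute the sha256 hash of the CA certificate's public key
openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | \
  openssl dgst -sha256 -hex | sed 's/^.* //'
# Output: 0497a78ea746f2c1f48d67f3dca9d65cb4010868f22f2a0bbefb101d74c6f057

# Join using the token printed by "kubeadm token create" and the hash above
kubeadm join 192.168.56.61:6443 --token 94kw30.b1gswshp2grv5vgd \
  --discovery-token-ca-cert-hash sha256:0497a78ea746f2c1f48d67f3dca9d65cb4010868f22f2a0bbefb101d74c6f057
```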
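kubeadm can also print a complete join command in one step with `kubeadm token create --print-join-command`. To assemble it yourself, the sketch below wraps the `openssl` pipeline above in functions; the function names and argument order are hypothetical, and it assumes an RSA CA key (kubeadm's default):

```bash
#!/usr/bin/env bash
# Hypothetical helpers around the openssl pipeline above.

# Print "sha256:<hex>" for --discovery-token-ca-cert-hash: the SHA-256 of the
# DER-encoded public key of the cluster CA certificate (RSA key assumed).
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}" hex
  [ -r "$ca" ] || { echo "cannot read $ca" >&2; return 1; }
  hex=$(openssl x509 -pubkey -noout -in "$ca" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex | sed 's/^.* //')
  echo "sha256:${hex}"
}

# Print a join command: build_join_command <host:port> <token> [ca-cert]
build_join_command() {
  local hash
  hash=$(ca_cert_hash "${3:-/etc/kubernetes/pki/ca.crt}") || return 1
  echo "kubeadm join $1 --token $2 --discovery-token-ca-cert-hash $hash"
}
```

Usage on the Master: `build_join_command 192.168.56.61:6443 "$(kubeadm token create)"`, then run the printed command on each Node.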

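5. Deploy a container network plugin (CNI)

Deploy only one of the following plugins.

① Calico

Calico is a pure Layer 3 data-center networking solution that supports a wide range of platforms, including Kubernetes and OpenStack.

On each compute node, Calico uses the Linux kernel to implement an efficient virtual router (vRouter) that forwards traffic, and each vRouter uses BGP to advertise the routes of the workloads running on it to the rest of the Calico network. Calico also implements Kubernetes network policy, providing ACL functionality.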

Download the manifest:

```bash
wget https://docs.projectcalico.org/manifests/calico.yaml
```

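Modify calico.yaml:

- Define the Pod network (`CALICO_IPV4POOL_CIDR`); it must match the pod CIDR configured earlier (`--pod-network-cidr=10.244.0.0/16`)

- Choose the operating mode (`CALICO_IPV4POOL_IPIP`): **BGP (Never)**, **IPIP (Always)**, or **CrossSubnet** (BGP within a subnet, IPIP encapsulation only for traffic that crosses subnets)

For example:

```yaml
- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"
- name: CALICO_IPV4POOL_VXLAN
  value: "Never"
```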
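The CIDR change can also be made non-interactively. In the Calico v3.x manifests, `CALICO_IPV4POOL_CIDR` usually ships commented out with a `192.168.0.0/16` default; assuming that layout (check your copy with `grep -n CALICO_IPV4POOL_CIDR calico.yaml` first), this GNU sed sketch uncomments the entry and sets the pod CIDR. `set_calico_pool_cidr` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: enable CALICO_IPV4POOL_CIDR in calico.yaml and set it
# to the given pod CIDR. Assumes the manifest contains the commented lines
#   # - name: CALICO_IPV4POOL_CIDR
#   #   value: "192.168.0.0/16"
# Usage: set_calico_pool_cidr <calico.yaml> <cidr>
set_calico_pool_cidr() {
  local manifest="$1" cidr="$2"
  # 1st expression: drop "# " in front of the name line, keeping indentation.
  # 2nd expression: on the following line, replace "#   value: ..." with the
  # new value, indented two more spaces than the "- name" line.
  sed -i -E \
    -e 's|^([[:space:]]*)# (- name: CALICO_IPV4POOL_CIDR)|\1\2|' \
    -e '/- name: CALICO_IPV4POOL_CIDR/{n;s|^([[:space:]]*)#   value: .*|\1  value: "'"$cidr"'"|}' \
    "$manifest"
}
```

Usage: `set_calico_pool_cidr calico.yaml 10.244.0.0/16`. If the entry is already uncommented, the value line is left unchanged.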

Deploy Calico:

```bash
kubectl apply -f calico.yaml
```

```
[root@k8s-master ~]# kubectl get pods -n kube-system
NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-59877c7fb4-z2bms   1/1     Running   0          6m59s
calico-node-pnjxq                          1/1     Running   0          6m59s
calico-node-v48jq                          1/1     Running   0          6m59s
coredns-7ff77c879f-dqk8t                   1/1     Running   0          23m
coredns-7ff77c879f-j8zsp                   1/1     Running   0          23m
etcd-k8s-master                            1/1     Running   0          23m
kube-apiserver-k8s-master                  1/1     Running   0          23m
kube-controller-manager-k8s-master         1/1     Running   0          23m
kube-proxy-ck88h                           1/1     Running   0          16m
kube-proxy-hkb9f                           1/1     Running   0          23m
kube-scheduler-k8s-master                  1/1     Running   0          23m
```
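Rather than reading the Pod list by eye, a small filter can flag Pods that are not yet ready. A sketch with a hypothetical function name; it treats any STATUS other than `Running` as not ready, so Pods from completed Jobs would also be reported. `kubectl wait --for=condition=Ready pods --all -n kube-system --timeout=300s` is a built-in alternative.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods` output on stdin and print the
# names of Pods that are not fully ready (READY x/y with x != y) or whose
# STATUS is not Running. Returns 0 only if every Pod is ready.
pods_not_ready() {
  awk 'NR > 1 {
         split($2, r, "/")
         if (r[1] != r[2] || $3 != "Running") { print $1; bad = 1 }
       }
       END { exit (bad ? 1 : 0) }'
}
```

Usage: `kubectl get pods -n kube-system | pods_not_ready && echo "all kube-system Pods are ready"`.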

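② Flannel

Alternatively, deploy Flannel instead of Calico:

```bash
wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
# Replace the quay.io image with a mirrored copy
sed -i -r "s#quay.io/coreos/flannel:.*-amd64#lizhenliang/flannel:v0.11.0-amd64#g" kube-flannel.yml
kubectl apply -f kube-flannel.yml
```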
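Flannel's Pod network is set by the `Network` field of `net-conf.json` inside kube-flannel.yml (10.244.0.0/16 in the upstream manifest), and it should match the `--pod-network-cidr` passed to `kubeadm init`. A sketch that extracts the value for a quick check, assuming the JSON key sits on its own line as in the upstream manifest; `flannel_network` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the first "Network" value found in a flannel
# manifest (the pod network from net-conf.json).
flannel_network() {
  sed -n -E 's/^[[:space:]]*"Network":[[:space:]]*"([^"]+)".*/\1/p' \
    "${1:-kube-flannel.yml}" | head -n 1
}
```

Usage: `[ "$(flannel_network kube-flannel.yml)" = 10.244.0.0/16 ] || echo "flannel Network does not match --pod-network-cidr"`.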

6. Test the Kubernetes cluster

- Create a Pod and verify that it works

- Verify Pod network communication

- Verify DNS resolution

① Check cluster status

```
[root@k8s-master ~]# kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health":"true"}
[root@k8s-master ~]# kubectl get nodes
NAME         STATUS   ROLES    AGE   VERSION
k8s-master   Ready    master   28m   v1.18.0
k8s-node1    Ready    <none>   21m   v1.18.0
```

② Create an application

```
[root@k8s-master ~]# kubectl create deployment nginx --image=nginx
[root@k8s-master ~]# kubectl expose deployment nginx --port=80 --type=NodePort
[root@k8s-master ~]# kubectl get pod,svc
NAME                        READY   STATUS    RESTARTS   AGE
pod/nginx-f89759699-28gpp   1/1     Running   0          114s

NAME                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
service/kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        34m
service/nginx        NodePort    10.96.142.106   <none>        80:31233/TCP   73s
```

The application is then reachable at http://<NodeIP>:31233 on any node.
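To script the access check, the NodePort can be pulled out of the `PORT(S)` column (`80:31233/TCP` gives `31233`). A sketch with a hypothetical function name; parsing the human-readable table is brittle, and `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'` is the more robust option.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get svc` (or `kubectl get pod,svc`)
# output on stdin and print the first NodePort of the named Service.
# The PORT(S) column is field 5, e.g. "80:31233/TCP" -> 31233.
service_node_port() {
  awk -v svc="$1" '
    $1 == svc || $1 == ("service/" svc) {
      n = split($5, ports, ",")
      for (i = 1; i <= n; i++)
        if (split(ports[i], p, "[:/]") == 3) { print p[2]; exit }
    }'
}
```

Usage: `curl -I "http://192.168.56.61:$(kubectl get svc | service_node_port nginx)"`.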

7. Deploy the Dashboard

Download the manifest:

```bash
wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0/aio/deploy/recommended.yaml
```

By default the Dashboard is reachable only from inside the cluster. To expose it externally, change its Service in recommended.yaml to type NodePort:

```yaml
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
```

After the change:

```yaml
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard
```

Apply the manifest, then open https://<NodeIP>:30001 in a browser:

```bash
kubectl apply -f recommended.yaml
```
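If the Dashboard has already been deployed from the unmodified manifest, the Service can instead be switched in place with `kubectl patch`, whose default strategic merge patch merges Service ports by their `port` key. A sketch that only builds the patch JSON; `nodeport_patch` is a hypothetical name, and the nodePort must fall within the cluster's NodePort range (30000-32767 by default):

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a strategic-merge patch that turns a Service
# into type NodePort with a fixed nodePort.
# Usage: nodeport_patch <port> <targetPort> <nodePort>
nodeport_patch() {
  printf '{"spec":{"type":"NodePort","ports":[{"port":%d,"targetPort":%d,"nodePort":%d}]}}\n' \
    "$1" "$2" "$3"
}
```

Usage: `kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard -p "$(nodeport_patch 443 8443 30001)"`.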

Create a service account and bind it to the default cluster-admin cluster role:

```bash
kubectl create serviceaccount dashboard-admin -n kube-system
kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
# Get the login token
kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')
```
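The `describe` output prints several fields; for scripting, a small filter can keep only the `token:` line. A sketch with a hypothetical function name. Alternatively, `kubectl -n kube-system get secret <secret-name> -o jsonpath='{.data.token}' | base64 -d` reads the same value directly.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl describe secret` output on stdin and
# print only the value of the "token:" field.
dashboard_token() {
  awk '$1 == "token:" { print $2; exit }'
}
```

Usage: `kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}') | dashboard_token`.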

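IV. Renewing cluster certificates (kubeadm)

1. Check certificate expiration times

```bash
kubeadm alpha certs check-expiration
```

In kubeadm v1.20 and later the `alpha` prefix is dropped: `kubeadm certs check-expiration`.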

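2. Renew all certificates

```bash
kubeadm alpha certs renew all
# Refresh kubectl's kubeconfig with the renewed admin.conf
cp /etc/kubernetes/admin.conf /root/.kube/config
```

After renewal, restart kube-apiserver, kube-controller-manager, kube-scheduler and etcd so that they load the new certificates.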

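3. Check the validity period of all certificates

```bash
cd /etc/kubernetes/pki
# Print the "Not Before" / "Not After" dates of every .crt in this directory
ls | grep crt | xargs -I {} openssl x509 -text -in {} | grep Not
```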
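The `grep Not` output above does not show which file each date belongs to. A sketch that prints the remaining days per certificate file instead; the function names are hypothetical, and it assumes GNU `date` (as on CentOS 7) for parsing the `notAfter` date:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: report how many days each kubeadm certificate has left.

# Print the days until the certificate's notAfter date (integer, truncated).
cert_days_left() {
  local end
  end=$(openssl x509 -enddate -noout -in "$1" 2>/dev/null | cut -d= -f2)
  [ -n "$end" ] || return 1
  echo $(( ( $(date -d "$end" +%s) - $(date +%s) ) / 86400 ))
}

# Print "<days> days  <file>" for every *.crt in DIR and DIR/etcd.
list_cert_expiry() {
  local dir="${1:-/etc/kubernetes/pki}" f
  for f in "$dir"/*.crt "$dir"/etcd/*.crt; do
    [ -f "$f" ] || continue
    printf '%5s days  %s\n' "$(cert_days_left "$f")" "$f"
  done
}
```

Usage: `list_cert_expiry /etc/kubernetes/pki`. For a single pass/fail check, `openssl x509 -checkend 2592000 -noout -in ca.crt` exits non-zero if the certificate expires within 30 days.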