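Loop termination conditions:

    import time

    while True:
        print("11111")
        for i in range(10):
            print(i)
            break  # break exits only the innermost loop (the for loop), not the enclosing while loop
        print("for loop finished")
        time.sleep(1)
    print("final end")  # never executed, because the while loop never terminates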
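Because break leaves only the innermost loop, stopping the outer loop as well needs an extra signal. The sketch below uses a flag variable, which is one common approach (returning from a function is another). The names `run_until_done`, `running` and `max_rounds` are made up for illustration and do not appear in the notes above.

```python
def run_until_done(max_rounds=3):
    """Stop both loops once the inner loop decides the work is done."""
    visited = []
    running = True   # flag checked by the outer while loop
    rounds = 0
    while running and rounds < max_rounds:
        rounds += 1
        for i in range(10):
            visited.append(i)
            if i == 2:
                running = False  # ask the outer loop to stop as well
                break            # leaves only the inner for loop
    return visited, rounds


visited, rounds = run_until_done()
print(visited, rounds)
```

The `max_rounds` bound is only a safety net so the sketch cannot spin forever if the flag is never cleared.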

Ternary expression:

result = value1 if condition else value2
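As a small illustration of the form above, the condition sits in the middle and the two candidate values on either side. The functions `parity` and `sign` are hypothetical examples, not part of the original notes.

```python
def parity(n):
    # value_if_true if condition else value_if_false
    return "even" if n % 2 == 0 else "odd"


def sign(n):
    # Conditional expressions can be chained; the rightmost else is the fallback
    return "positive" if n > 0 else "negative" if n < 0 else "zero"


print(parity(4), parity(7), sign(-3), sign(0))
```

Chaining more than two conditions quickly hurts readability, so an ordinary if/elif block is usually the better choice at that point.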

Shallow and deep copy:

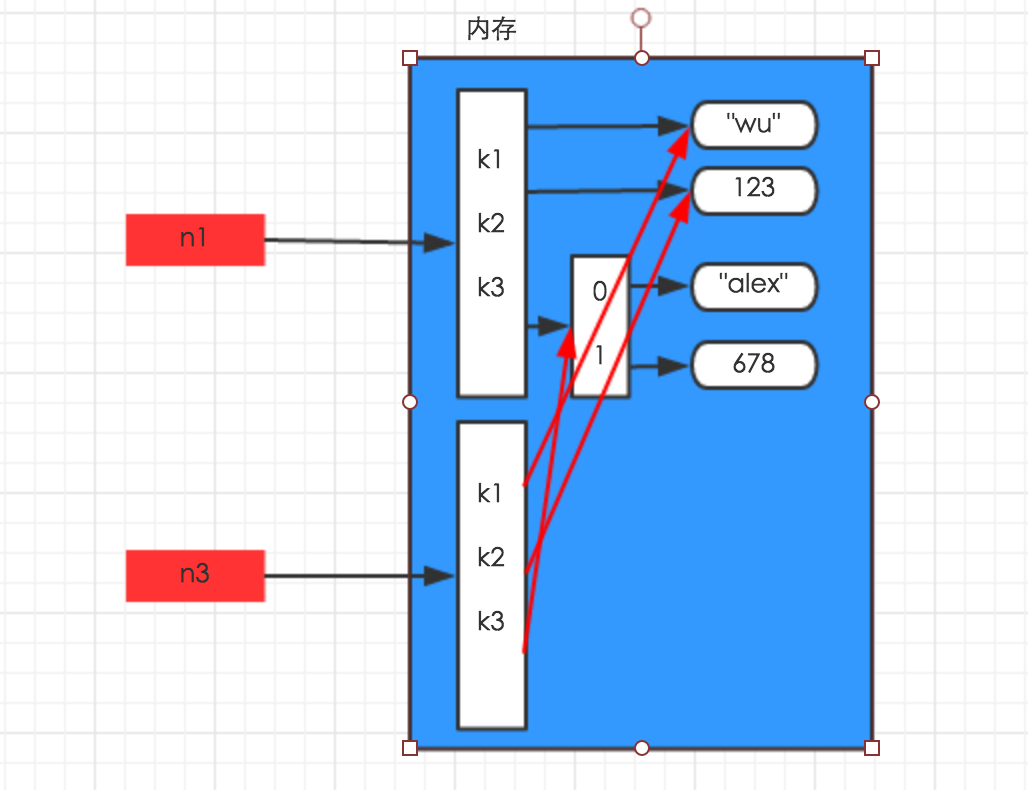

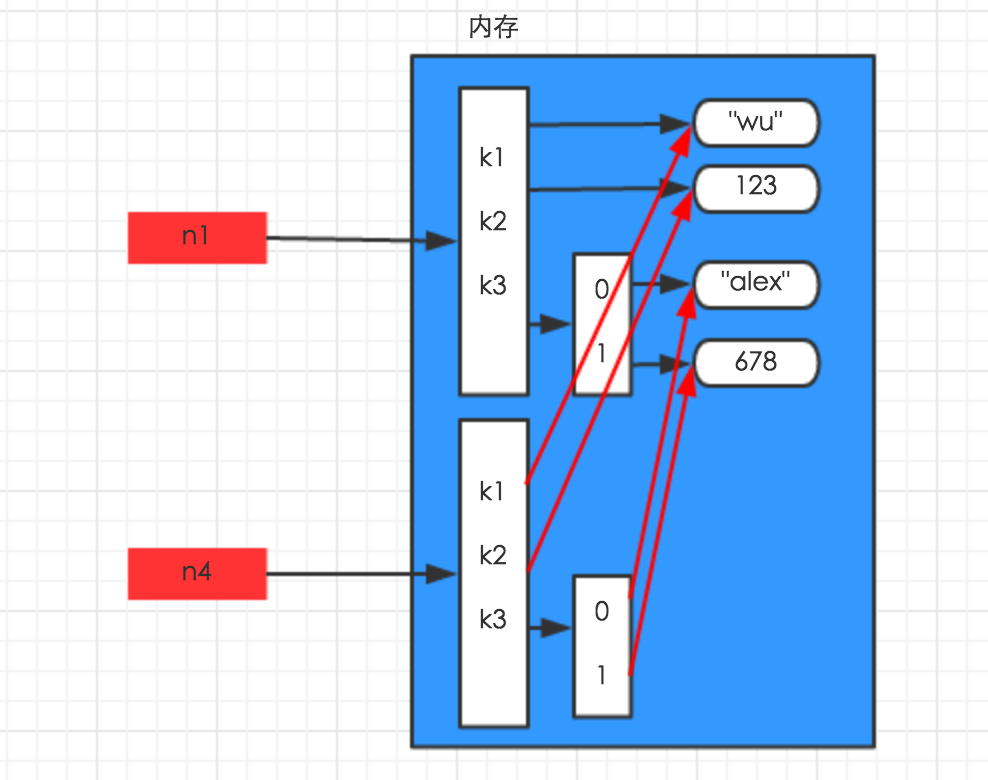

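    import copy

    n1 = (1, 2, 3, [2, 3])
    # Shallow copy: only the first layer is created anew in memory.
    # For tuples, strings and integers this is pointless, so the same object is returned.
    n2 = copy.copy(n1)
    # Deep copy: meaningful here only because the tuple contains a list.
    # Without the list it would behave like a string (an immutable type),
    # where deep copy and shallow copy make no difference.
    n3 = copy.deepcopy(n1)
    print(id(n1))
    print(id(n2))
    print(id(n3))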

id(n1) == id(n2) != id(n3)
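The tuple example shows identity only at the outer level. To see the difference in behavior, the sketch below uses a mutable outer list instead (an assumption made for illustration; the variable names are not from the notes) and mutates the nested list after copying.

```python
import copy

original = [1, 2, [3, 4]]
shallow = copy.copy(original)    # new outer list, nested list is shared
deep = copy.deepcopy(original)   # new outer list and new nested list

original[2].append(5)            # mutate the nested list in place

outer_is_new = shallow is not original
inner_shared = shallow[2] is original[2]
inner_copied = deep[2] is not original[2]

print(shallow[2])  # the shallow copy sees the appended element
print(deep[2])     # the deep copy does not
```

In short, a shallow copy protects against changes to the outer container only, while a deep copy also isolates the mutable objects nested inside it.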

Shallow copy


Deep copy: because the innermost layer is a string, there is no need to copy it again, so both copies point to the same memory address.


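Dynamic parameters:

    def a(x, *args, **kwargs):
        print(x)       # the first argument; a value must be passed
        print(*args)   # each extra positional value, unpacked; prints an empty line if there are none
        print(args)    # a tuple; () if there are none
        print(kwargs)  # a dict; {} if there are none

    a([1], 1, 2, 3, a=1)  # keyword arguments must come after the positional ones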
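The same `*` and `**` syntax also works at the call site, where it unpacks an existing sequence or dict into arguments. A minimal sketch, with the hypothetical function `describe` returning its arguments so the result can be inspected:

```python
def describe(x, *args, **kwargs):
    # Return the three pieces so the caller can see how arguments were bound
    return x, args, kwargs


numbers = [1, 2, 3]
options = {"a": 1, "b": 2}

# *numbers fills the extra positional slots, **options the keyword slots
result = describe("first", *numbers, **options)
only_x = describe("first")

print(result)
print(only_x)
```

Whatever the caller passes, `args` always arrives as a tuple and `kwargs` as a dict, even when both are empty.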

File operations:

Methods of the built-in file class (Python 2.x):

    # Signatures restored from __doc__ (real signatures unknown)
    class file(object):

        def close(self):  # close the file
            """
            close() -> None or (perhaps) an integer.  Close the file.

            Sets data attribute .closed to True.  A closed file cannot be used for
            further I/O operations.  close() may be called more than once without
            error.  Some kinds of file objects (for example, opened by popen())
            may return an exit status upon closing.
            """

        def fileno(self):  # file descriptor
            """
            fileno() -> integer "file descriptor".

            This is needed for lower-level file interfaces, such os.read().
            """
            return 0

        def flush(self):  # flush the file's internal buffer
            """ flush() -> None.  Flush the internal I/O buffer. """
            pass

        def isatty(self):  # check whether the file is a tty device
            """ isatty() -> true or false.  True if the file is connected to a tty device. """
            return False

        def next(self):  # get the next line; raises an error if there is none
            """ x.next() -> the next value, or raise StopIteration """
            pass

        def read(self, size=None):  # read the specified number of bytes
            """
            read([size]) -> read at most size bytes, returned as a string.

            If the size argument is negative or omitted, read until EOF is reached.
            Notice that when in non-blocking mode, less data than what was requested
            may be returned, even if no size parameter was given.
            """
            pass

        def readinto(self):  # read into a buffer; do not use, it will be removed
            """ readinto() -> Undocumented.  Don't use this; it may go away. """
            pass

        def readline(self, size=None):  # read a single line only
            """
            readline([size]) -> next line from the file, as a string.

            Retain newline.  A non-negative size argument limits the maximum
            number of bytes to return (an incomplete line may be returned then).
            Return an empty string at EOF.
            """
            pass

        def readlines(self, size=None):  # read all data and store it as a list of lines
            """
            readlines([size]) -> list of strings, each a line from the file.

            Call readline() repeatedly and return a list of the lines so read.
            The optional size argument, if given, is an approximate bound on the
            total number of bytes in the lines returned.
            """
            return []

        def seek(self, offset, whence=None):  # set the position of the file pointer
            """
            seek(offset[, whence]) -> None.  Move to new file position.

            Argument offset is a byte count.  Optional argument whence defaults to
            0 (offset from start of file, offset should be >= 0); other values are 1
            (move relative to current position, positive or negative), and 2 (move
            relative to end of file, usually negative, although many platforms allow
            seeking beyond the end of a file).  If the file is opened in text mode,
            only offsets returned by tell() are legal.  Use of other offsets causes
            undefined behavior.
            Note that not all file objects are seekable.
            """
            pass

        def tell(self):  # get the current pointer position
            """ tell() -> current file position, an integer (may be a long integer). """
            pass

        def truncate(self, size=None):  # truncate the data, keeping only what precedes the given position
            """
            truncate([size]) -> None.  Truncate the file to at most size bytes.

            Size defaults to the current file position, as returned by tell().
            """
            pass

        def write(self, p_str):  # write content
            """
            write(str) -> None.  Write string str to file.

            Note that due to buffering, flush() or close() may be needed before
            the file on disk reflects the data written.
            """
            pass

        def writelines(self, sequence_of_strings):  # write a list of strings to the file
            """
            writelines(sequence_of_strings) -> None.  Write the strings to the file.

            Note that newlines are not added.  The sequence can be any iterable object
            producing strings.  This is equivalent to calling write() for each string.
            """
            pass

        def xreadlines(self):  # can be used to read the file line by line instead of all at once
            """
            xreadlines() -> returns self.

            For backward compatibility.  File objects now include the performance
            optimizations previously implemented in the xreadlines module.
            """
            pass
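The listing above is the Python 2 `file` class. In Python 3, `open()` returns objects from the `io` module that keep most of these methods (`next()` and `xreadlines()` are gone). The following is a rough Python 3 sketch of `writelines`, `seek`, `readline`, `tell`, `readlines` and `truncate` working together; the temporary file and all variable names are assumptions for illustration.

```python
import os
import tempfile

# Create a scratch file so the example does not touch real data
fd, path = tempfile.mkstemp(suffix=".txt")
os.close(fd)

with open(path, "w+", encoding="utf-8") as f:
    f.writelines(["first\n", "second\n", "third\n"])  # newlines are not added for you
    f.flush()                    # push buffered data to the OS
    f.seek(0)                    # move the pointer back to the start
    first_line = f.readline()    # reads up to and including the newline
    position = f.tell()          # position right after the first line
    remaining = f.readlines()    # the rest of the file as a list of lines
    f.seek(position)             # in text mode, only offsets from tell() are safe
    f.truncate()                 # cut the file at the current position
    f.seek(0)
    after_truncate = f.read()

os.remove(path)
print(repr(first_line), remaining, repr(after_truncate))
```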

    with open('test.html', 'r+') as f:
        t = list(f)  # split the file into lines and store them in a list
        print(t)

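    from collections.abc import Iterable  # collections.Iterable was removed in Python 3.10

    f = open('a.txt')
    print(isinstance(f, Iterable))  # check whether the file object is iterable: True
    f.close()
    # f is iterable, so it can be used directly in a for loop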

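The with statement handles opening and closing the file, including when an exception is raised inside the block. Writing for line in f treats the file object f as an iterator, which automatically uses buffered I/O and memory management, so you do not need to worry about large files.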

Simple version:

    with open(...) as f:
        for line in f:
            process(line)  # <do something with line>
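To make the pattern concrete, here is a runnable sketch that counts lines containing a keyword while holding only one line in memory at a time. The function `count_matching_lines`, the sample contents and the temporary file are all hypothetical and stand in for a real large file.

```python
import os
import tempfile


def count_matching_lines(path, keyword):
    """Count lines containing keyword, reading the file one line at a time."""
    count = 0
    with open(path, "r", encoding="utf-8") as f:
        for line in f:           # the file object yields one line per iteration
            if keyword in line:
                count += 1
    return count


# Build a small sample file for the demonstration
with tempfile.NamedTemporaryFile("w", suffix=".log", delete=False,
                                 encoding="utf-8") as tmp:
    tmp.write("ok\nerror: disk\nok\nerror: net\n")
    sample_path = tmp.name

error_count = count_matching_lines(sample_path, "error")
os.remove(sample_path)
print(error_count)
```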

Using an iterator:

    def run():
        with open("test1.html", "r+") as f:
            for i in f:
                yield i

    start = run()
    print(next(start), end="")
    print(next(start), end="")

An iterator can also be used to process large files:

    def read_in_chunks(filePath, chunk_size=1024*1024):
        # The with block closes the file once the generator is exhausted or closed
        with open(filePath) as file_object:
            while True:
                chunk_data = file_object.read(chunk_size)
                if not chunk_data:
                    break
                yield chunk_data

    if __name__ == "__main__":
        filePath = 'test1.html'
        for chunk in read_in_chunks(filePath):
            print(chunk)
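A compact variant of the same chunked-reading idea uses the two-argument form of the built-in `iter()`, which keeps calling a function until it returns a sentinel value. This is a sketch rather than a replacement for the version above: the function `iter_chunks`, the tiny chunk size and the temporary file are assumptions chosen to keep the demonstration short, and the file is opened in binary mode.

```python
import os
import tempfile
from functools import partial


def iter_chunks(path, chunk_size=4):
    """Yield successive chunks of at most chunk_size bytes."""
    with open(path, "rb") as f:
        # iter(callable, sentinel) calls f.read(chunk_size) until it returns b""
        for chunk in iter(partial(f.read, chunk_size), b""):
            yield chunk


# Build a small sample file for the demonstration
with tempfile.NamedTemporaryFile("wb", delete=False) as tmp:
    tmp.write(b"0123456789")
    demo_path = tmp.name

chunks = list(iter_chunks(demo_path))
os.remove(demo_path)
print(chunks)
```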

Iterators and generators:

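An iterator is a way of accessing the elements of a collection. An iterator object starts at the first element of the collection and continues until every element has been visited.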
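The traversal described here can be driven by hand with the built-ins `iter()` and `next()`, which is roughly what a for loop does behind the scenes. The list and variable names below are illustrative only.

```python
numbers = [11, 22, 33]
it = iter(numbers)     # ask the collection for an iterator

first = next(it)       # starts at the first element
second = next(it)
third = next(it)

try:
    next(it)           # nothing left to visit
    exhausted = False
except StopIteration:
    exhausted = True   # the signal a for loop uses to stop

print(first, second, third, exhausted)
```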

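A function that returns an iterator when called is known as a generator. Any function whose body contains yield becomes a generator function: calling it does not run the body but returns a generator object, which is itself an iterator.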
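A short sketch of a function that becomes a generator because it contains yield. The name `countdown` is hypothetical. Note that the generator object is its own iterator and can be consumed only once.

```python
def countdown(n):
    # The body runs lazily: each next() resumes here until the next yield
    while n > 0:
        yield n
        n -= 1


gen = countdown(3)
is_own_iterator = iter(gen) is gen   # a generator is its own iterator
values = list(gen)                   # consumes every yielded value
leftover = list(gen)                 # already exhausted, so nothing remains

print(values, leftover)
```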

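Producing an iterator from a method (__iter__):

    class Foo(object):
        def __init__(self, sq):
            self.sq = sq

        def __iter__(self):
            return iter(self.sq)

    obj = Foo([11, 22, 33, 44])
    for i in obj:
        print(i)

Producing a generator from a method:

    class Foo(object):
        def test(self):
            yield "sdfsd"

    foo = Foo()
    print(foo.test())  # prints a generator object, not the yielded value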