Kubernetes Orchestration Principles (Part 4)

Declarative API

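For the YAML file of a k8s API object, running `kubectl create` first and then `kubectl replace` is called an imperative configuration-file operation. Using `kubectl apply` for both creation and updates is a declarative API operation.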

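The essential difference is that `kubectl replace` replaces the existing API object with the one in the new YAML file, whereas `kubectl apply` performs a PATCH operation on the existing API object.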

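For kube-apiserver this makes a real difference. A replace request carries the whole object, so imperative writes must effectively be applied one at a time; otherwise one client's replace can conflict with, or overwrite, another client's changes. A declarative (PATCH) request carries only the intended changes, so kube-apiserver can handle several writes to the same object at once and merge them.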
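To make the difference between replace and PATCH concrete, here is a minimal Go sketch on plain maps. It uses JSON Merge Patch semantics (RFC 7386) as a simplified stand-in: fields in the patch overwrite the stored object, a `nil` value deletes a field, nested objects merge recursively, and anything the patch does not mention is kept. This is an assumption for illustration only; `kubectl apply` actually sends a three-way strategic merge patch, which additionally merges lists such as `containers` by key. `replaceObject` and `mergePatch` are hypothetical names.

```go
package main

import "fmt"

// replaceObject models `kubectl replace`: the stored object is discarded and
// the submitted object becomes the new state in its entirety.
func replaceObject(_ map[string]any, submitted map[string]any) map[string]any {
	return submitted
}

// mergePatch models a JSON Merge Patch (RFC 7386): keys in the patch overwrite
// the target, a nil value deletes the key, nested objects are merged
// recursively, and keys absent from the patch are kept. The inputs are not
// modified; a new map is returned.
func mergePatch(target, patch map[string]any) map[string]any {
	out := make(map[string]any, len(target))
	for k, v := range target {
		out[k] = v
	}
	for k, pv := range patch {
		if pv == nil {
			delete(out, k)
			continue
		}
		if pm, ok := pv.(map[string]any); ok {
			tm, _ := out[k].(map[string]any) // nil if missing or not an object
			out[k] = mergePatch(tm, pm)
			continue
		}
		out[k] = pv
	}
	return out
}

func main() {
	live := map[string]any{
		"metadata": map[string]any{"name": "web"},
		"spec":     map[string]any{"replicas": 3, "image": "nginx:1.20"},
	}
	// The user only states the field they want to change.
	patch := map[string]any{"spec": map[string]any{"image": "nginx:1.21"}}

	fmt.Println(mergePatch(live, patch)["spec"])        // map[image:nginx:1.21 replicas:3]
	fmt.Println(replaceObject(live, patch)["metadata"]) // <nil>: replace lost metadata
}
```

The patch only names `spec.image`, so `replicas` and `metadata` survive the merge, while the replace drops everything the submitted object did not restate.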

Example: Istio

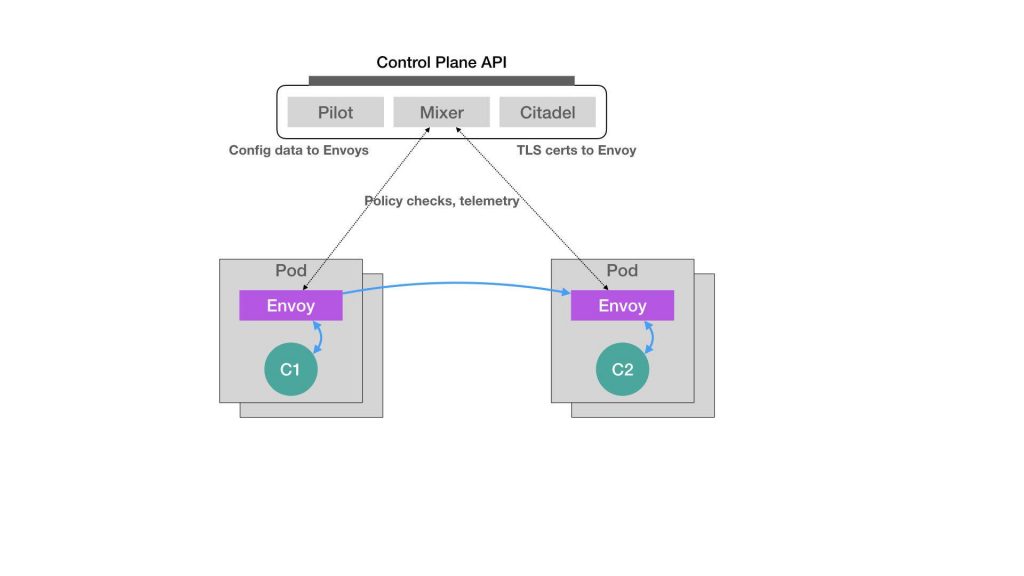

Istio is a k8s-based microservice governance framework. Its architecture is shown in the figure above.

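The most fundamental component of Istio is the Envoy container that runs in every Pod. Envoy is a high-performance network proxy written in C++ and open-sourced by Lyft. Istio runs this proxy as a sidecar container in every application Pod it manages. Because all containers in a Pod share the same Network Namespace, the Envoy container can take over all inbound and outbound traffic of the Pod through iptables rules configured in the Pod. The Pilot component in Istio's control plane configures each Envoy proxy by calling its API, and this is how microservice governance is implemented.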

Istio canary (gray) release

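Suppose the Pod on the left of the architecture diagram is the running application and the Pod on the right is a newly deployed version of it. Pilot adjusts the configuration of the Envoy containers in these two Pods so that 90% of the traffic goes to the old version and 10% to the new version, and this split can be changed at any time afterwards. Istio can move the split from 90%:10% to 80%:20%, then to 50%:50%, and finally to 0%:100%, which completes a typical canary release.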

Dynamic Admission Control

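In k8s, after a Pod or any other API object is submitted to the API Server, some "initialization" work usually has to be done before k8s formally processes it, for example automatically adding certain labels to every Pod. This initialization is implemented by a feature called Admission: a set of code in k8s known as Admission Controllers, which can optionally be compiled into the API Server and are invoked right after an API object is created (that is, after the request is received but before the object is persisted). This also means that adding your own rules to the Admission Controllers is rather difficult, because the API Server has to be recompiled and restarted. Clearly, that would be far too intrusive for Istio. So k8s additionally provides a "hot-pluggable" admission mechanism called Dynamic Admission Control. The variant used in this example is the Initializer (an alpha feature that was removed in Kubernetes 1.14; mutating admission webhooks are the dynamic admission mechanism used today).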

Example:

myapp-pod.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
    - name: myapp-container
      image: busybox
      command: ['sh','-c','echo Hello Kubernetes! && sleep 3600']
```

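What Istio needs to do next is, after this Pod's YAML has been submitted to k8s, automatically add the Envoy container's configuration to the corresponding API object, so that the object looks like this: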

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
    - name: myapp-container
      image: busybox
      command: ['sh','-c','echo Hello Kubernetes! && sleep 3600']
    - name: envoy
      image: lyft/envoy:845747b88f102c0fd262ab234308e9e22f693a1
      command: ["/usr/local/bin/envoy"]
      ...
```

To do this, Istio has to write an Initializer that automatically injects the Envoy container into Pods.

1. Istio stores the definition of the Envoy container itself in k8s as a ConfigMap:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: envoy-initializer
data:
  config: |
    containers:
      - name: envoy
        image: lyft/envoy:845747b88f102c0fd262ab234308e9e22f693a1
        command: ["/usr/local/bin/envoy"]
        args:
          - "--concurrency 4"
          - "--config-path /etc/envoy/envoy.json"
          - "--mode serve"
        ports:
          - containerPort: 80
            protocol: TCP
        resources:
          limits:
            cpu: "1000m"
            memory: "512Mi"
    volumes:
      - name: envoy-conf
        configMap:
          name: envoy
```

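The Initializer's job is to add the Envoy-related fields from this ConfigMap to the API object of the Pod the user submitted. When the Initializer updates the user's Pod object, it must use the PATCH API, which provides a capability similar to `git merge`; this PATCH API is precisely the most important capability of the declarative API.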
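The `git merge`-like behavior comes from the fact that a strategic merge patch treats `spec.containers` as a list keyed by `name`: existing containers are left alone, a container with a new name is appended, and one with the same name is replaced. The stdlib-only sketch below imitates just that rule; `Container` is a trimmed, hypothetical stand-in for `corev1.Container`, and `mergeByName` is not a client-go function.

```go
package main

import "fmt"

// Container is a pared-down, hypothetical stand-in for corev1.Container.
type Container struct {
	Name  string
	Image string
}

// mergeByName mimics how a strategic merge patch treats a list whose merge
// key is "name": entries with a matching name are replaced by the patch
// entry, new names are appended, and all other existing entries are kept.
// The input slices are not modified.
func mergeByName(existing, patch []Container) []Container {
	out := append([]Container(nil), existing...)
	index := make(map[string]int, len(out))
	for i, c := range out {
		index[c.Name] = i
	}
	for _, p := range patch {
		if i, ok := index[p.Name]; ok {
			out[i] = p
			continue
		}
		index[p.Name] = len(out)
		out = append(out, p)
	}
	return out
}

func main() {
	userPod := []Container{{Name: "myapp-container", Image: "busybox"}}
	sidecar := []Container{{Name: "envoy", Image: "lyft/envoy:845747b88f102c0fd262ab234308e9e22f693a1"}}

	merged := mergeByName(userPod, sidecar)
	fmt.Println(len(merged), merged[0].Name, merged[1].Name)

	// Applying the same patch again changes nothing, so re-running the
	// initializer does not inject a second envoy container.
	fmt.Println(len(mergeByName(merged, sidecar)))
}
```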

Istio needs to deploy this Initializer into k8s as a Pod:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: envoy-initializer
  labels:
    app: envoy-initializer
spec:
  containers:
    - name: envoy-initializer
      image: envoy-initializer:0.0.1
      imagePullPolicy: Always
```

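The `envoy-initializer:0.0.1` image used by the envoy-initializer Pod is a custom controller written in advance. The "actual state" of this controller is the Pod newly created by the user, and its "desired state" is that same Pod with the Envoy container definition added. Its control logic, in pseudocode, is roughly: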

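```go
// Pseudocode: client, isInitialized and the ConfigMap accessors are illustrative.
func control() {
	for {
		// Fetch the newly created Pod
		pod := client.GetLatestPod()
		// Diff: has this Pod already been initialized?
		if !isInitialized(pod) {
			// If not, initialize it
			doInitial(pod)
		}
	}
}

func doInitial(pod *corev1.Pod) {
	cm := client.Get(ConfigMap, "envoy-initializer")

	// Start from a copy of the user's Pod and add the Envoy fields, so the
	// patch only contains additions instead of deleting the user's fields.
	newPod := pod.DeepCopy()
	newPod.Spec.Containers = append(newPod.Spec.Containers, cm.Containers...)
	newPod.Spec.Volumes = append(newPod.Spec.Volumes, cm.Volumes...)

	// Generate the patch data (the real function takes JSON bytes plus a
	// struct that describes merge keys, and also returns an error)
	oldJSON, _ := json.Marshal(pod)
	newJSON, _ := json.Marshal(newPod)
	patchBytes, _ := strategicpatch.CreateTwoWayMergePatch(oldJSON, newJSON, corev1.Pod{})

	// Send a PATCH request to modify this Pod object
	client.Patch(pod.Name, patchBytes)
}
```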

k8s also lets you specify, through configuration, which kinds of resources an Initializer should operate on:

```yaml
apiVersion: admissionregistration.k8s.io/v1alpha1
kind: InitializerConfiguration
metadata:
  name: envoy-config
initializers:
  # The name must contain at least two "."
  - name: envoy.initializer.kubernetes.io
    rules:
      - apiGroups:
          # "" means the core API group
          - ""
        apiVersions:
          - v1
        resources:
          - pods
```

k8s then adds this initializer's name to the metadata of every newly created Pod that matches the rules:

```yaml
apiVersion: v1
kind: Pod
metadata:
  initializers:
    pending:
      - name: envoy.initializer.kubernetes.io
  name: myapp-pod
  labels:
    app: myapp-pod
...
```

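This metadata is exactly what the Initializer's controller relies on to decide whether it has already performed its initialization on a Pod. It also means that once your Initializer has finished its work, it must remove its entry from `metadata.initializers.pending`. Keep this in mind when writing Initializer code.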
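A sketch of that bookkeeping, assuming the ordering rule of the alpha Initializer design: pending initializers run in order, so a controller acts only when its own name is the first entry, and it removes that entry when done. `ObjectMeta`, `shouldHandle` and `finish` are hypothetical simplifications, not the real `metav1` types.

```go
package main

import "fmt"

// ObjectMeta is a hypothetical, trimmed-down model of an uninitialized
// object's metadata; Pending mirrors metadata.initializers.pending.
type ObjectMeta struct {
	Name    string
	Pending []string
}

const myInitializer = "envoy.initializer.kubernetes.io"

// shouldHandle reports whether it is this initializer's turn: pending
// initializers run in order, so only the first entry may act.
func shouldHandle(m ObjectMeta, name string) bool {
	return len(m.Pending) > 0 && m.Pending[0] == name
}

// finish removes this initializer from the pending list. Skipping this step
// would leave the object stuck in the uninitialized state.
func finish(m ObjectMeta, name string) ObjectMeta {
	out := m
	out.Pending = nil
	for _, p := range m.Pending {
		if p != name {
			out.Pending = append(out.Pending, p)
		}
	}
	return out
}

func main() {
	pod := ObjectMeta{Name: "myapp-pod", Pending: []string{myInitializer}}
	if shouldHandle(pod, myInitializer) {
		// ... inject the envoy container via PATCH here ...
		pod = finish(pod, myInitializer)
	}
	fmt.Println(len(pod.Pending), shouldHandle(pod, myInitializer)) // 0 false
}
```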

Besides the configuration above, you can also add a field like the following to a specific Pod's annotations:

```yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    "initializer.kubernetes.io/envoy": "true"
...
```

This declares that the Pod wants to use a particular Initializer.

How the Declarative API Works

API Object Creation

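In k8s, the full resource path of an API object (the REST path the API Server exposes for it) is made up of three parts: Group (API group), Version (API version) and Resource (API resource type), as shown in the figure below: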

Example:

```yaml
apiVersion: batch/v2alpha1
kind: CronJob
```

In this resource file, CronJob is the object's resource type, batch is its group, and v2alpha1 is its version.

Core API objects in k8s (Pod, Node, etc.) do not need a Group (their Group is ""). So for these objects, k8s continues the matching process directly at the /api level.

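For non-core API objects such as CronJob, k8s must look up the corresponding Group at the /apis level, so the Group name "batch" leads it to /apis/batch (Job and CronJob both belong to the "batch" Group). k8s then matches the object's version and finally its resource type. At that point the API Server can go on to create the CronJob object.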
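The matching rules above can be sketched as a function that turns an `apiVersion` plus a resource name into the REST path the API Server routes on. `splitAPIVersion` and `resourcePath` are hypothetical helpers; the resource name (e.g. `cronjobs`) is passed in directly, whereas the real API Server maps a Kind to its resource through a RESTMapper.

```go
package main

import (
	"fmt"
	"strings"
)

// splitAPIVersion splits an apiVersion such as "batch/v2alpha1" into group
// and version; a bare "v1" means the core group "".
func splitAPIVersion(apiVersion string) (group, version string) {
	if i := strings.Index(apiVersion, "/"); i >= 0 {
		return apiVersion[:i], apiVersion[i+1:]
	}
	return "", apiVersion
}

// resourcePath builds the REST path for a group/version/resource. Core
// objects (empty group) live under /api; every named group lives under
// /apis/<group>. An empty namespace yields a cluster-wide path.
func resourcePath(group, version, namespace, resource string) string {
	parts := []string{"/api", version}
	if group != "" {
		parts = []string{"/apis", group, version}
	}
	if namespace != "" {
		parts = append(parts, "namespaces", namespace)
	}
	parts = append(parts, resource)
	return strings.Join(parts, "/")
}

func main() {
	for _, obj := range []struct{ apiVersion, resource string }{
		{"v1", "pods"},
		{"batch/v2alpha1", "cronjobs"},
	} {
		group, version := splitAPIVersion(obj.apiVersion)
		fmt.Println(resourcePath(group, version, "default", obj.resource))
	}
	// /api/v1/namespaces/default/pods
	// /apis/batch/v2alpha1/namespaces/default/cronjobs
}
```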

How a CronJob API object is created:

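1. A POST request to create the CronJob is sent, submitting the YAML file to the API Server.

2. The API Server first filters the request and performs some preliminary work, such as authorization, timeout handling and auditing.

3. The request enters the MUX and Routes stage, where the URL is bound to its Handler.

4. The API Server creates the CronJob object from the submitted YAML file:

1) It converts the submitted YAML into an object called the Super Version, which holds the full set of fields across all versions of this API resource type.

2) It runs Admission() and Validation() on it.

3) It converts the validated object back into a versioned form (strictly speaking, the resource's storage version, which is often the version the user submitted), serializes it, and calls the etcd API to save it.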
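A toy illustration of why the intermediate Super Version (called the internal version in the k8s source) must hold the union of all versions' fields: every external version converts into it, validation is written once against it, and it converts out to whichever version is stored. `CronJobV1`, `CronJobV2`, `internalCronJob` and the helpers are invented for this sketch; the real API Server uses generated conversion functions registered in a `runtime.Scheme`.

```go
package main

import "fmt"

// Hypothetical external versions of one resource: v1 only has Schedule,
// v2 additionally has Suspend.
type CronJobV1 struct{ Schedule string }

type CronJobV2 struct {
	Schedule string
	Suspend  bool
}

// internalCronJob plays the role of the Super (internal) Version: it holds
// the union of the fields of every external version.
type internalCronJob struct {
	Schedule string
	Suspend  bool
}

func v1ToInternal(in CronJobV1) internalCronJob { return internalCronJob{Schedule: in.Schedule} }
func v2ToInternal(in CronJobV2) internalCronJob { return internalCronJob(in) }
func internalToV2(in internalCronJob) CronJobV2 { return CronJobV2(in) }

// validate runs on the internal version, so the rule is written once no
// matter which version the client submitted.
func validate(in internalCronJob) error {
	if in.Schedule == "" {
		return fmt.Errorf("spec.schedule: required")
	}
	return nil
}

func main() {
	submitted := CronJobV1{Schedule: "*/1 * * * *"}
	obj := v1ToInternal(submitted)
	if err := validate(obj); err != nil {
		fmt.Println("rejected:", err)
		return
	}
	// Assume v2 is the storage version: this is the form that would be
	// serialized and written to etcd.
	stored := internalToV2(obj)
	fmt.Printf("%+v\n", stored)
}
```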

CRD (Custom Resource Definition)

Since k8s v1.7, a new API extension mechanism is available: CRD (CustomResourceDefinition). It lets users add a new API resource type to k8s, similar to Pod or Node: a custom API resource.

Example:

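Suppose we define an API resource type named Network. Its purpose is this: once a user creates a Network object, k8s should use the network parameters defined in that object to call a real network plugin and create an actual "network" for the user. Pods that the user creates later can then declare that they use this network.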

The custom Network object we want to use:

```yaml
# example-network.yaml
apiVersion: samplecrd.my.crd/v1
kind: Network
metadata:
  name: example-network
spec:
  cidr: "192.168.0.0/16"
  gateway: "192.168.0.1"
```

The custom resource definition file:

```yaml
# crd/network.yaml
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: networks.samplecrd.my.crd
spec:
  group: samplecrd.my.crd
  versions:
    - name: v1
      # Whether this version is served through the REST API at /apis/<group>/<version>/...
      served: true
      # Exactly one version must be marked as the storage version
      storage: true
      # Schema of the custom object
      schema:
        openAPIV3Schema:
          description: Define samplecrd YAML Spec
          type: object
          properties:
            spec:
              type: object
              properties:
                cidr:
                  type: string
                gateway:
                  type: string
  names:
    kind: Network
    # Plural name
    plural: networks
  # This resource is namespaced, like Pod
  scope: Namespaced
```
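Two of the rules the API Server enforces for this file can be checked by hand: `metadata.name` must be `<plural>.<group>`, and exactly one version must have `storage: true`. An object is then accepted only if its `apiVersion` names the CRD's group and a served version. The Go sketch below encodes these checks on hypothetical, trimmed-down structs (`crdSpec`, `crdVersion`), not the real `apiextensions` types.

```go
package main

import (
	"fmt"
	"strings"
)

// crdVersion and crdSpec mirror only the fields of network.yaml used here.
type crdVersion struct {
	Name    string
	Served  bool
	Storage bool
}

type crdSpec struct {
	MetadataName string
	Group        string
	Plural       string
	Versions     []crdVersion
}

// check applies two CRD rules: metadata.name must be "<plural>.<group>",
// and exactly one version must be marked as the storage version.
func check(c crdSpec) error {
	if want := c.Plural + "." + c.Group; c.MetadataName != want {
		return fmt.Errorf("metadata.name %q must be %q", c.MetadataName, want)
	}
	storage := 0
	for _, v := range c.Versions {
		if v.Storage {
			storage++
		}
	}
	if storage != 1 {
		return fmt.Errorf("exactly one storage version required, got %d", storage)
	}
	return nil
}

// accepts reports whether an object's apiVersion (e.g. "samplecrd.my.crd/v1")
// names this CRD's group and one of its served versions.
func accepts(c crdSpec, apiVersion string) bool {
	parts := strings.SplitN(apiVersion, "/", 2)
	if len(parts) != 2 || parts[0] != c.Group {
		return false
	}
	for _, v := range c.Versions {
		if v.Name == parts[1] && v.Served {
			return true
		}
	}
	return false
}

func main() {
	network := crdSpec{
		MetadataName: "networks.samplecrd.my.crd",
		Group:        "samplecrd.my.crd",
		Plural:       "networks",
		Versions:     []crdVersion{{Name: "v1", Served: true, Storage: true}},
	}
	fmt.Println(check(network))                          // <nil>
	fmt.Println(accepts(network, "samplecrd.my.crd/v1")) // true
	fmt.Println(accepts(network, "samplecrd.k8s.io/v1")) // false: group mismatch
}
```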

Create the CRD:

```shell
kubectl apply -f crd/network.yaml
```

Create the custom resource:

```shell
kubectl apply -f example/example-network.yaml
```

View the custom resource:

```
root@k8s-master:~/go/src/k8s-controller-custom-resource# kubectl get network
NAME              AGE
example-network   138m
root@k8s-master:~/go/src/k8s-controller-custom-resource# kubectl describe network
Name:         example-network
Namespace:    default
Labels:       <none>
Annotations:  API Version:  samplecrd.my.crd/v1
Kind:         Network
Metadata:
  Creation Timestamp:  2022-04-17T11:05:30Z
  Generation:          1
  Managed Fields:
    API Version:  samplecrd.my.crd/v1
    Fields Type:  FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .:
          f:kubectl.kubernetes.io/last-applied-configuration:
      f:spec:
        .:
        f:cidr:
        f:gateway:
    Manager:         kubectl
    Operation:       Update
    Time:            2022-04-17T11:05:30Z
  Resource Version:  10502999
  Self Link:         /apis/samplecrd.my.crd/v1/namespaces/default/networks/example-network
  UID:               a64f80b5-8ad3-41e2-91e0-4ba51ea9651a
Spec:
  Cidr:     192.168.0.0/16
  Gateway:  192.168.0.1
Events:     <none>
```

This CRD file defines an API object of type samplecrd.my.crd/v1/Network. Once the CRD is created, k8s can recognize and store objects of this type.

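Next, k8s also needs to understand the network-specific part described in such an object's YAML, that is, what fields like cidr (subnet) and gateway actually mean. This requires some coding.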

Create the k8s-controller-custom-resource project under $GOPATH/src, with the following structure:

```
.
├── controller.go
├── crd
│   └── network.yaml
├── example
│   └── example-network.yaml
├── go.mod
├── go.sum
├── main.go
└── pkg
    └── apis
        └── samplecrd
            ├── register.go
            └── v1
                ├── doc.go
                ├── register.go
                └── types.go
```

The samplecrd directory corresponds to the API group (samplecrd.my.crd) and v1 to its version.

pkg/apis/samplecrd/register.go:

```go
package samplecrd

const (
	GroupName = "samplecrd.my.crd"
	Version   = "v1"
)
```

pkg/apis/samplecrd/v1/doc.go:

```go
// +k8s:deepcopy-gen=package
// +groupName=samplecrd.my.crd
package v1
```

pkg/apis/samplecrd/v1/register.go:

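```go
package v1

import (
	"k8s-controller-custom-resource/pkg/apis/samplecrd"

	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/apimachinery/pkg/runtime"
	"k8s.io/apimachinery/pkg/runtime/schema"
)

// SchemeGroupVersion is the identifier for the API which includes
// the name of the group and the version of the API
var SchemeGroupVersion = schema.GroupVersion{
	Group:   samplecrd.GroupName,
	Version: samplecrd.Version,
}

var (
	// SchemeBuilder collects the functions that register this group's types;
	// the generated clientset's scheme package calls AddToScheme.
	SchemeBuilder = runtime.NewSchemeBuilder(addKnownTypes)
	AddToScheme   = SchemeBuilder.AddToScheme
)

// Resource returns a group-qualified GroupResource; the generated listers
// use it when building NotFound errors.
func Resource(resource string) schema.GroupResource {
	return SchemeGroupVersion.WithResource(resource).GroupResource()
}

// addKnownTypes adds our types to the API scheme by registering
// Network and NetworkList
func addKnownTypes(scheme *runtime.Scheme) error {
	scheme.AddKnownTypes(SchemeGroupVersion, &Network{}, &NetworkList{})
	// register the type in the scheme
	metav1.AddToGroupVersion(scheme, SchemeGroupVersion)
	return nil
}
```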

pkg/apis/samplecrd/v1/types.go:

```go
package v1

import (
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
)

type networkSpec struct {
	Cidr    string `json:"cidr"`
	Gateway string `json:"gateway"`
}

// +genclient
// +genclient:noStatus
// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object
type Network struct {
	// TypeMeta carries apiVersion/kind; embedding it provides the
	// GetObjectKind method that runtime.Object requires.
	metav1.TypeMeta   `json:",inline"`
	metav1.ObjectMeta `json:"metadata,omitempty"`

	Spec networkSpec `json:"spec"`
}

// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object
type NetworkList struct {
	metav1.TypeMeta `json:",inline"`
	metav1.ListMeta `json:"metadata"`

	Items []Network `json:"items"`
}
```

What the comment tags mean:

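`// +k8s:deepcopy-gen=package` ==> generate DeepCopy methods for every type in the package

`// +groupName=samplecrd.my.crd` ==> specify the API group name

`// +genclient` ==> generate client code for this type

`// +genclient:noStatus` ==> this API resource type has no Status field; without this tag, the generated client would include an UpdateStatus method

`// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object` ==> when generating DeepCopy code, also generate DeepCopyObject so the type implements k8s's runtime.Object interface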

Code generation:

Create a hack directory in the project directory and add the following files to it:

boilerplate.go.txt

```
/*
Copyright The Kubernetes Authors.

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
*/
```

tools.go

```go
//go:build tools
// +build tools

/*
Copyright 2019 The Kubernetes Authors.

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
*/

// This package imports things required by build scripts, to force `go mod` to see them as dependencies
package hack

import _ "k8s.io/code-generator"
```

update-codegen.sh

```shell
#!/usr/bin/env bash

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

set -o errexit
set -o nounset
set -o pipefail

# generate the code with:
# --output-base    because this script should also be able to run inside the vendor dir of
#                  k8s.io/kubernetes. The output-base is needed for the generators to output into the vendor dir
#                  instead of the $GOPATH directly. For normal projects this can be dropped.
ROOT_PACKAGE="k8s-controller-custom-resource"
CUSTOM_RESOURCE_NAME="samplecrd"
CUSTOM_RESOURCE_VERSION="v1"

chmod +x ../vendor/k8s.io/code-generator/generate-groups.sh
../vendor/k8s.io/code-generator/generate-groups.sh all "$ROOT_PACKAGE/pkg/client" "$ROOT_PACKAGE/pkg/apis" "$CUSTOM_RESOURCE_NAME:$CUSTOM_RESOURCE_VERSION" \
  --go-header-file $(pwd)/boilerplate.go.txt
```

Run the following commands in the hack directory to generate the code:

```shell
go mod tidy
go mod download
# Copy the k8s.io/code-generator dependency into the project's vendor directory
go mod vendor
# Run the code generator
./update-codegen.sh
```

After code generation, the project structure looks like this:

```
.
├── controller.go
├── crd
│   └── network.yaml
├── example
│   └── example-network.yaml
├── go.mod
├── go.sum
├── hack
│   ├── boilerplate.go.txt
│   ├── tools.go
│   └── update-codegen.sh
├── main.go
└── pkg
    ├── apis
    │   └── samplecrd
    │       ├── register.go
    │       └── v1
    │           ├── doc.go
    │           ├── register.go
    │           ├── types.go
    │           └── zz_generated.deepcopy.go
    └── client
        ├── clientset
        │   └── versioned
        │       ├── clientset.go
        │       ├── doc.go
        │       ├── fake
        │       │   ├── clientset_generated.go
        │       │   ├── doc.go
        │       │   └── register.go
        │       ├── scheme
        │       │   ├── doc.go
        │       │   └── register.go
        │       └── typed
        │           └── samplecrd
        │               └── v1
        │                   ├── doc.go
        │                   ├── fake
        │                   │   ├── doc.go
        │                   │   ├── fake_network.go
        │                   │   └── fake_samplecrd_client.go
        │                   ├── generated_expansion.go
        │                   ├── network.go
        │                   └── samplecrd_client.go
        ├── informers
        │   └── externalversions
        │       ├── factory.go
        │       ├── generic.go
        │       ├── internalinterfaces
        │       │   └── factory_interfaces.go
        │       └── samplecrd
        │           ├── interface.go
        │           └── v1
        │               ├── interface.go
        │               └── network.go
        └── listers
            └── samplecrd
                └── v1
                    ├── expansion_generated.go
                    └── network.go
```

Custom Controller

Fill in main.go and controller.go to write a custom controller for the CRD.

1. Write the main function:

main.go

```go
package main

import (
	"flag"
	"os"
	"os/signal"
	"syscall"
	"time"

	"github.com/golang/glog"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/tools/clientcmd"
	// Uncomment the following line to load the gcp plugin (only required to authenticate against GKE clusters).
	// _ "k8s.io/client-go/plugin/pkg/client/auth/gcp"

	clientset "k8s-controller-custom-resource/pkg/client/clientset/versioned"
	informers "k8s-controller-custom-resource/pkg/client/informers/externalversions"
)

var (
	masterURL  string
	kubeconfig string
)

func main() {
	flag.Parse()

	// set up signals so we handle the first shutdown signal gracefully
	stopCh := setupSignalHandler()

	cfg, err := clientcmd.BuildConfigFromFlags(masterURL, kubeconfig)
	if err != nil {
		glog.Fatalf("Error building kubeconfig: %s", err.Error())
	}

	kubeClient, err := kubernetes.NewForConfig(cfg)
	if err != nil {
		glog.Fatalf("Error building kubernetes clientset: %s", err.Error())
	}

	networkClient, err := clientset.NewForConfig(cfg)
	if err != nil {
		glog.Fatalf("Error building example clientset: %s", err.Error())
	}

	networkInformerFactory := informers.NewSharedInformerFactory(networkClient, time.Second*30)

	controller := NewController(kubeClient, networkClient,
		networkInformerFactory.Samplecrd().V1().Networks())

	go networkInformerFactory.Start(stopCh)

	if err = controller.Run(2, stopCh); err != nil {
		glog.Fatalf("Error running controller: %s", err.Error())
	}
}

// setupSignalHandler stands in for the pkg/signals helper from
// sample-controller, which does not appear in the project tree above.
// The returned channel is closed on the first SIGINT/SIGTERM; a second
// signal exits the process immediately.
func setupSignalHandler() <-chan struct{} {
	stop := make(chan struct{})
	c := make(chan os.Signal, 2)
	signal.Notify(c, os.Interrupt, syscall.SIGTERM)
	go func() {
		<-c
		close(stop)
		<-c
		os.Exit(1)
	}()
	return stop
}

func init() {
	flag.StringVar(&kubeconfig, "kubeconfig", "", "Path to a kubeconfig. Only required if out-of-cluster.")
	flag.StringVar(&masterURL, "master", "", "The address of the Kubernetes API server. Overrides any value in kubeconfig. Only required if out-of-cluster.")
}
```

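1) Using the Master configuration given on the command line (the API Server address and port, and the kubeconfig path), main creates a k8s client (kubeClient) and a client for Network objects (networkClient). If the command line provides no Master configuration, the client is created in a mode called InClusterConfig instead. This mode assumes the custom controller runs inside the cluster as a Pod: by default every Pod automatically mounts the credentials of its ServiceAccount (the namespace's default ServiceAccount unless another one is specified) as a Volume, so the controller can use the authorization information in that Volume to access the API Server.

2) main creates an InformerFactory (networkInformerFactory) for Network objects, uses it to generate an Informer for Network objects, and passes that Informer to the controller.

3) main starts the Informer above and then calls controller.Run to start the custom controller.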
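The fallback in step 1) can be sketched as follows. `chooseConfig` and `configSource` are hypothetical names; the environment variables `KUBERNETES_SERVICE_HOST`/`KUBERNETES_SERVICE_PORT` and the token path are the ones client-go's `rest.InClusterConfig` reads, but the real function also loads the token and CA certificate and returns a `*rest.Config`. `getenv` is a parameter so the logic can run without a cluster; real code would pass `os.Getenv`.

```go
package main

import (
	"errors"
	"fmt"
	"net"
)

// tokenFile is where the Pod's ServiceAccount token is mounted.
const tokenFile = "/var/run/secrets/kubernetes.io/serviceaccount/token"

// configSource records which mode was chosen and where the API Server is.
type configSource struct {
	Mode     string // "flags" or "in-cluster"
	Host     string
	TokenRef string
}

// chooseConfig mirrors the decision described above: explicit -master or
// -kubeconfig flags win; with neither set, it falls back to in-cluster
// configuration, which needs the service variables injected into every Pod.
func chooseConfig(masterURL, kubeconfig string, getenv func(string) string) (configSource, error) {
	if masterURL != "" || kubeconfig != "" {
		return configSource{Mode: "flags", Host: masterURL}, nil
	}
	host, port := getenv("KUBERNETES_SERVICE_HOST"), getenv("KUBERNETES_SERVICE_PORT")
	if host == "" || port == "" {
		return configSource{}, errors.New("not running in a cluster and no -master/-kubeconfig given")
	}
	return configSource{
		Mode:     "in-cluster",
		Host:     "https://" + net.JoinHostPort(host, port),
		TokenRef: tokenFile,
	}, nil
}

func main() {
	fakeEnv := map[string]string{"KUBERNETES_SERVICE_HOST": "10.96.0.1", "KUBERNETES_SERVICE_PORT": "443"}
	cfg, err := chooseConfig("", "", func(k string) string { return fakeEnv[k] })
	fmt.Println(cfg.Mode, cfg.Host, err) // in-cluster https://10.96.0.1:443 <nil>
}
```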

controller.go

```go
package main

import (
	"fmt"
	"time"

	"github.com/golang/glog"
	corev1 "k8s.io/api/core/v1"
	"k8s.io/apimachinery/pkg/api/errors"
	"k8s.io/apimachinery/pkg/util/runtime"
	"k8s.io/apimachinery/pkg/util/wait"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/kubernetes/scheme"
	typedcorev1 "k8s.io/client-go/kubernetes/typed/core/v1"
	"k8s.io/client-go/tools/cache"
	"k8s.io/client-go/tools/record"
	"k8s.io/client-go/util/workqueue"

	samplecrdv1 "k8s-controller-custom-resource/pkg/apis/samplecrd/v1"
	clientset "k8s-controller-custom-resource/pkg/client/clientset/versioned"
	networkscheme "k8s-controller-custom-resource/pkg/client/clientset/versioned/scheme"
	informers "k8s-controller-custom-resource/pkg/client/informers/externalversions/samplecrd/v1"
	listers "k8s-controller-custom-resource/pkg/client/listers/samplecrd/v1"
)

const controllerAgentName = "network-controller"

const (
	// SuccessSynced is used as part of the Event 'reason' when a Network is synced
	SuccessSynced = "Synced"
	// MessageResourceSynced is the message used for an Event fired when a Network
	// is synced successfully
	MessageResourceSynced = "Network synced successfully"
)

// Controller is the controller implementation for Network resources
type Controller struct {
	// kubeclientset is a standard kubernetes clientset
	kubeclientset kubernetes.Interface
	// networkclientset is a clientset for our own API group
	networkclientset clientset.Interface

	networksLister listers.NetworkLister
	networksSynced cache.InformerSynced

	// workqueue is a rate limited work queue. This is used to queue work to be
	// processed instead of performing it as soon as a change happens. This
	// means we can ensure we only process a fixed amount of resources at a
	// time, and makes it easy to ensure we are never processing the same item
	// simultaneously in two different workers.
	workqueue workqueue.RateLimitingInterface
	// recorder is an event recorder for recording Event resources to the
	// Kubernetes API.
	recorder record.EventRecorder
}

// NewController returns a new network controller
func NewController(
	kubeclientset kubernetes.Interface,
	networkclientset clientset.Interface,
	networkInformer informers.NetworkInformer) *Controller {

	// Create event broadcaster
	// Add sample-controller types to the default Kubernetes Scheme so Events can be
	// logged for sample-controller types.
	runtime.Must(networkscheme.AddToScheme(scheme.Scheme))
	glog.V(4).Info("Creating event broadcaster")
	eventBroadcaster := record.NewBroadcaster()
	eventBroadcaster.StartLogging(glog.Infof)
	eventBroadcaster.StartRecordingToSink(&typedcorev1.EventSinkImpl{Interface: kubeclientset.CoreV1().Events("")})
	recorder := eventBroadcaster.NewRecorder(scheme.Scheme, corev1.EventSource{Component: controllerAgentName})

	controller := &Controller{
		kubeclientset:    kubeclientset,
		networkclientset: networkclientset,
		networksLister:   networkInformer.Lister(),
		networksSynced:   networkInformer.Informer().HasSynced,
		workqueue:        workqueue.NewNamedRateLimitingQueue(workqueue.DefaultControllerRateLimiter(), "Networks"),
		recorder:         recorder,
	}

	glog.Info("Setting up event handlers")
	// Set up an event handler for when Network resources change:
	// register the event callbacks
	networkInformer.Informer().AddEventHandler(cache.ResourceEventHandlerFuncs{
		// Add event: enqueue the object's key
		AddFunc: controller.enqueueNetwork,
		// Update event: return if nothing changed, otherwise enqueue the key
		UpdateFunc: func(old, new interface{}) {
			oldNetwork := old.(*samplecrdv1.Network)
			newNetwork := new.(*samplecrdv1.Network)
			if oldNetwork.ResourceVersion == newNetwork.ResourceVersion {
				// Periodic resync will send update events for all known Networks.
				// Two different versions of the same Network will always have different RVs.
				return
			}
			controller.enqueueNetwork(new)
		},
		// Delete event: enqueue the key
		DeleteFunc: controller.enqueueNetworkForDelete,
	})

	return controller
}

// Run will set up the event handlers for types we are interested in, as well
// as syncing informer caches and starting workers. It will block until stopCh
// is closed, at which point it will shutdown the workqueue and wait for
// workers to finish processing their current work items.
func (c *Controller) Run(threadiness int, stopCh <-chan struct{}) error {
	defer runtime.HandleCrash()
	defer c.workqueue.ShutDown()

	// Start the informer factories to begin populating the informer caches
	glog.Info("Starting Network control loop")

	// Wait for the caches to be synced before starting workers
	// (one full sync of the local cache)
	glog.Info("Waiting for informer caches to sync")
	if ok := cache.WaitForCacheSync(stopCh, c.networksSynced); !ok {
		return fmt.Errorf("failed to wait for caches to sync")
	}

	glog.Info("Starting workers")
	// Launch the workers (goroutines) that process Network resources
	for i := 0; i < threadiness; i++ {
		go wait.Until(c.runWorker, time.Second, stopCh)
	}

	glog.Info("Started workers")
	<-stopCh
	glog.Info("Shutting down workers")

	return nil
}

// runWorker is a long-running function that will continually call the
// processNextWorkItem function in order to read and process a message on the
// workqueue.
func (c *Controller) runWorker() {
	for c.processNextWorkItem() {
	}
}

// processNextWorkItem will read a single work item off the workqueue and
// attempt to process it, by calling the syncHandler.
func (c *Controller) processNextWorkItem() bool {
	// Take a key off the workqueue
	obj, shutdown := c.workqueue.Get()
	if shutdown {
		return false
	}

	// We wrap this block in a func so we can defer c.workqueue.Done.
	err := func(obj interface{}) error {
		// We call Done here so the workqueue knows we have finished
		// processing this item. We also must remember to call Forget if we
		// do not want this work item being re-queued. For example, we do
		// not call Forget if a transient error occurs, instead the item is
		// put back on the workqueue and attempted again after a back-off
		// period.
		defer c.workqueue.Done(obj)
		var key string
		var ok bool
		// We expect strings to come off the workqueue. These are of the
		// form namespace/name. We do this as the delayed nature of the
		// workqueue means the items in the informer cache may actually be
		// more up to date that when the item was initially put onto the
		// workqueue.
		if key, ok = obj.(string); !ok {
			// As the item in the workqueue is actually invalid, we call
			// Forget here else we'd go into a loop of attempting to
			// process a work item that is invalid (non-string keys are ignored).
			c.workqueue.Forget(obj)
			runtime.HandleError(fmt.Errorf("expected string in workqueue but got %#v", obj))
			return nil
		}
		// Run the syncHandler, passing it the namespace/name string of the
		// Network resource to be synced: it compares the desired and actual
		// state and reconciles them.
		if err := c.syncHandler(key); err != nil {
			// Put the item back on the workqueue to retry after a back-off.
			c.workqueue.AddRateLimited(key)
			return fmt.Errorf("error syncing '%s': %s, requeuing", key, err.Error())
		}
		// Finally, if no error occurs we Forget this item so it does not
		// get queued again until another change happens.
		c.workqueue.Forget(obj)
		glog.Infof("Successfully synced '%s'", key)
		return nil
	}(obj)

	if err != nil {
		runtime.HandleError(err)
		return true
	}

	return true
}

// syncHandler compares the actual state with the desired, and attempts to
// converge the two. It then updates the Status block of the Network resource
// with the current status of the resource.
func (c *Controller) syncHandler(key string) error {
	// Convert the namespace/name string into a distinct namespace and name
	namespace, name, err := cache.SplitMetaNamespaceKey(key)
	if err != nil {
		runtime.HandleError(fmt.Errorf("invalid resource key: %s", key))
		return nil
	}

	// Get the Network resource with this namespace/name from the local cache
	network, err := c.networksLister.Networks(namespace).Get(name)
	if err != nil {
		// The Network resource may no longer exist, in which case we stop
		// processing.
		if errors.IsNotFound(err) {
			// Not in the local cache: the object was deleted, so the real
			// network behind it must be deleted too.
			glog.Warningf("Network: %s/%s does not exist in local cache, will delete it from Neutron ...",
				namespace, name)

			glog.Infof("[Neutron] Deleting network: %s/%s ...", namespace, name)

			// FIX ME: call Neutron API to delete this network by name.
			//
			// neutron.Delete(namespace, name)

			return nil
		}

		runtime.HandleError(fmt.Errorf("failed to list network by: %s/%s", namespace, name))

		return err
	}

	glog.Infof("[Neutron] Try to process network: %#v ...", network)

	// Get the actual state and make it match the desired state.
	// FIX ME: Do diff().
	//
	// actualNetwork, exists := neutron.Get(namespace, name)
	//
	// if !exists {
	// 	neutron.Create(namespace, name)
	// } else if !reflect.DeepEqual(actualNetwork, network) {
	// 	neutron.Update(namespace, name)
	// }

	c.recorder.Event(network, corev1.EventTypeNormal, SuccessSynced, MessageResourceSynced)
	return nil
}

// enqueueNetwork takes a Network resource and converts it into a namespace/name
// string which is then put onto the work queue. This method should *not* be
// passed resources of any type other than Network.
func (c *Controller) enqueueNetwork(obj interface{}) {
	var key string
	var err error
	// Build the key
	if key, err = cache.MetaNamespaceKeyFunc(obj); err != nil {
		runtime.HandleError(err)
		return
	}
	// Enqueue the key
	c.workqueue.AddRateLimited(key)
}

// enqueueNetworkForDelete takes a deleted Network resource and converts it into a namespace/name
// string which is then put onto the work queue. This method should *not* be
// passed resources of any type other than Network.
func (c *Controller) enqueueNetworkForDelete(obj interface{}) {
	var key string
	var err error
	key, err = cache.DeletionHandlingMetaNamespaceKeyFunc(obj)
	if err != nil {
		runtime.HandleError(err)
		return
	}
	c.workqueue.AddRateLimited(key)
}
```

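1) When main calls NewController to create the controller, the event callbacks are registered.

2) Run waits for the Informer to complete one sync of its local cache.

3) Run starts one or more "infinite loop" tasks in goroutines:

- each keeps taking events off the workqueue and processing them.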
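The workqueue stores only `namespace/name` keys rather than whole objects: by the time a worker picks a key up, the Lister's cache may hold a newer version, and `syncHandler` always re-reads it. A stdlib-only sketch of that key format, reimplementing what `cache.MetaNamespaceKeyFunc` and `cache.SplitMetaNamespaceKey` produce for plain names (`metaKey` and `splitKey` are hypothetical names):

```go
package main

import (
	"fmt"
	"strings"
)

// metaKey builds the key that enqueueNetwork puts on the workqueue:
// "namespace/name", or just "name" for cluster-scoped objects.
func metaKey(namespace, name string) string {
	if namespace == "" {
		return name
	}
	return namespace + "/" + name
}

// splitKey reverses metaKey, as syncHandler does before calling the Lister.
func splitKey(key string) (namespace, name string, err error) {
	parts := strings.Split(key, "/")
	switch len(parts) {
	case 1:
		return "", parts[0], nil
	case 2:
		return parts[0], parts[1], nil
	}
	return "", "", fmt.Errorf("unexpected key format: %q", key)
}

func main() {
	key := metaKey("default", "example-network")
	ns, name, err := splitKey(key)
	fmt.Println(key, ns, name, err) // default/example-network default example-network <nil>
}
```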

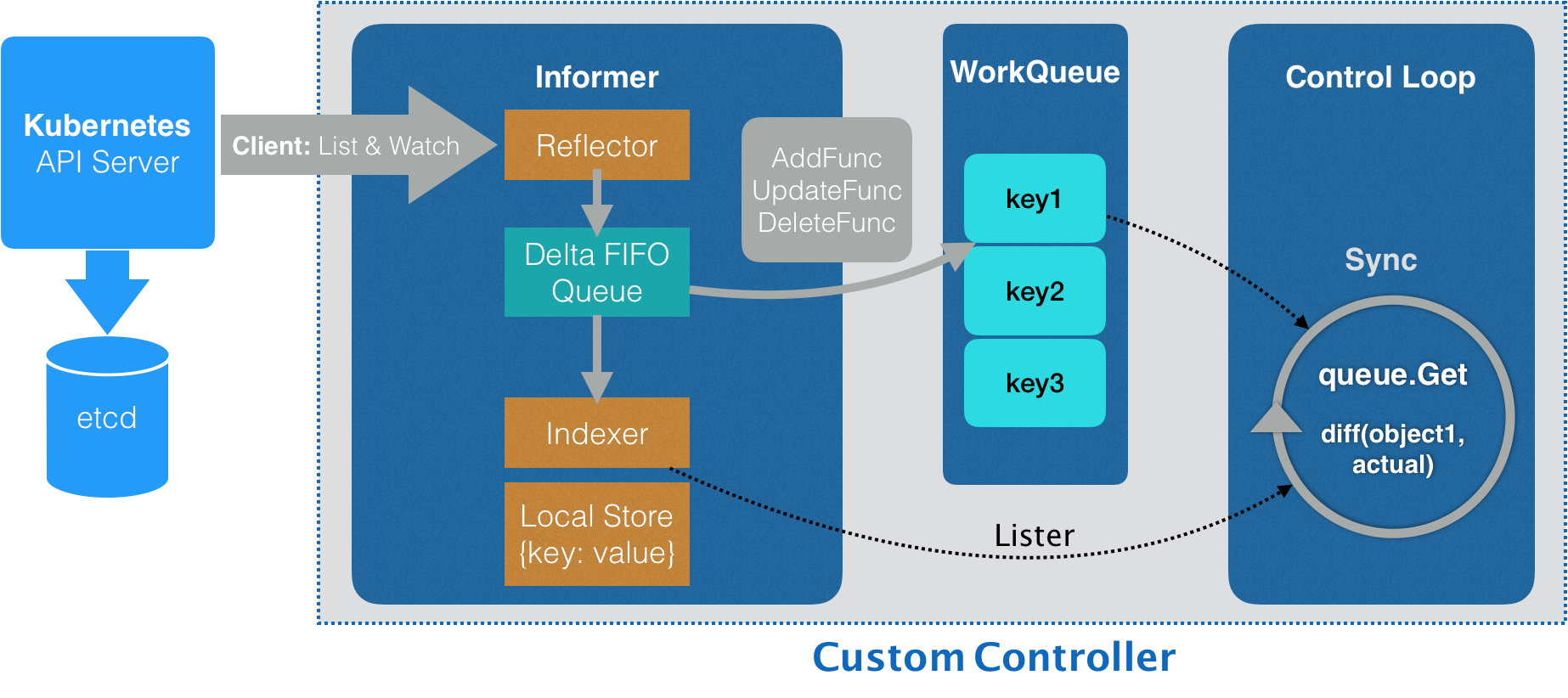

The figure above shows how the custom controller works:

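1. The Network Informer watches events on the API Server through the ListAndWatch mechanism. Whenever a Network instance is created, deleted or updated on the API Server, the Informer's Reflector receives an event notification. The combination of the event and its corresponding API object is called a Delta, and it is put into a Delta FIFO queue.

2. The Informer keeps popping Deltas from the Delta FIFO queue. For each Delta, it checks the event type and then creates, updates or deletes the object in its local cache accordingly.

3. The Informer's second responsibility is to trigger the pre-registered ResourceEventHandlers according to the event type (which either put the key on the workqueue directly, or do some extra handling first and then enqueue it).

4. The control loop keeps taking items off the workqueue and processing them to bring the actual state in line with the desired state. Inside the loop, resources are read from the local cache through the Lister.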
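Step 4 relies on two guarantees of the workqueue: a key that is already waiting is not queued twice, and a key being processed by one worker is not handed to another at the same time. The single-goroutine sketch below models that with a `dirty` set and a `processing` set, in the spirit of client-go's workqueue; locking, blocking `Get`, shutdown and rate limiting are deliberately left out, and all names are hypothetical.

```go
package main

import "fmt"

// queue models the dedup logic of a controller workqueue.
type queue struct {
	order      []string
	dirty      map[string]bool // keys that need processing
	processing map[string]bool // keys currently held by a worker
}

func newQueue() *queue {
	return &queue{dirty: map[string]bool{}, processing: map[string]bool{}}
}

// add marks key as needing work; duplicates collapse into one entry.
func (q *queue) add(key string) {
	if q.dirty[key] {
		return
	}
	q.dirty[key] = true
	if q.processing[key] {
		return // re-queued by done() once the current worker finishes
	}
	q.order = append(q.order, key)
}

// get hands the oldest key to a worker; ok is false when the queue is empty.
func (q *queue) get() (key string, ok bool) {
	if len(q.order) == 0 {
		return "", false
	}
	key, q.order = q.order[0], q.order[1:]
	q.processing[key] = true
	delete(q.dirty, key)
	return key, true
}

// done releases key; if it changed again meanwhile, it goes back in line.
func (q *queue) done(key string) {
	delete(q.processing, key)
	if q.dirty[key] {
		q.order = append(q.order, key)
	}
}

// size returns the number of keys waiting to be handed out.
func (q *queue) size() int { return len(q.order) }

func main() {
	q := newQueue()
	q.add("default/example-network")
	q.add("default/example-network") // collapsed into the existing entry
	fmt.Println(q.size())             // 1

	key, _ := q.get()
	q.add(key)            // an update arrives while a worker holds the key
	fmt.Println(q.size()) // 0: not handed to a second worker
	q.done(key)
	fmt.Println(q.size()) // 1: re-queued for another pass
}
```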

Build the code and run it against the k8s cluster:

```
root@k8s-master:~/go/src/k8s-controller-custom-resource# go build -o samplecrd-controller .
root@k8s-master:~/go/src/k8s-controller-custom-resource# ./samplecrd-controller -kubeconfig=$HOME/.kube/config -alsologtostderr=true
I0424 17:28:06.151390 78677 controller.go:84] Setting up event handlers
I0424 17:28:06.151718 78677 controller.go:113] Starting Network control loop
I0424 17:28:06.151744 78677 controller.go:116] Waiting for informer caches to sync
I0424 17:28:06.553641 78677 controller.go:121] Starting workers
I0424 17:28:06.553679 78677 controller.go:127] Started workers
I0424 17:28:06.553731 78677 controller.go:229] [Neutron] Try to process network: &v1.Network{TypeMeta:v1.TypeMeta{Kind:"Network", APIVersion:"samplecrd.my.crd/v1"}, ObjectMeta:v1.ObjectMeta{Name:"example-network", GenerateName:"", Namespace:"default", SelfLink:"/apis/samplecrd.my.crd/v1/namespaces/default/networks/example-network", UID:"a64f80b5-8ad3-41e2-91e0-4ba51ea9651a", ResourceVersion:"10502999", Generation:1, CreationTimestamp:time.Date(2022, time.April, 17, 19, 5, 30, 0, time.Local), DeletionTimestamp:<nil>, DeletionGracePeriodSeconds:(*int64)(nil), Labels:map[string]string(nil), Annotations:map[string]string{"kubectl.kubernetes.io/last-applied-configuration":"{\"apiVersion\":\"samplecrd.my.crd/v1\",\"kind\":\"Network\",\"metadata\":{\"annotations\":{},\"name\":\"example-network\",\"namespace\":\"default\"},\"spec\":{\"cidr\":\"192.168.0.0/16\",\"gateway\":\"192.168.0.1\"}}\n"}, OwnerReferences:[]v1.OwnerReference(nil), Finalizers:[]string(nil), ClusterName:"", ManagedFields:[]v1.ManagedFieldsEntry{v1.ManagedFieldsEntry{Manager:"kubectl", Operation:"Update", APIVersion:"samplecrd.my.crd/v1", Time:time.Date(2022, time.April, 17, 19, 5, 30, 0, time.Local), FieldsType:"FieldsV1", FieldsV1:(*v1.FieldsV1)(0xc00058c900)}}}, Spec:v1.networkSpec{Cidr:"192.168.0.0/16", Gateway:"192.168.0.1"}} ...
I0424 17:28:06.553937 78677 controller.go:183] Successfully synced 'default/example-network'
I0424 17:28:06.553998 78677 event.go:278] Event(v1.ObjectReference{Kind:"Network", Namespace:"default", Name:"example-network", UID:"a64f80b5-8ad3-41e2-91e0-4ba51ea9651a", APIVersion:"samplecrd.my.crd/v1", ResourceVersion:"10502999", FieldPath:""}): type: 'Normal' reason: 'Synced' Network synced successfully
I0424 17:32:27.500639 78677 controller.go:229] [Neutron] Try to process network: &v1.Network{TypeMeta:v1.TypeMeta{Kind:"", APIVersion:""}, ObjectMeta:v1.ObjectMeta{Name:"example-network", GenerateName:"", Namespace:"default", SelfLink:"/apis/samplecrd.my.crd/v1/namespaces/default/networks/example-network", UID:"a64f80b5-8ad3-41e2-91e0-4ba51ea9651a", ResourceVersion:"13048101", Generation:2, CreationTimestamp:time.Date(2022, time.April, 17, 19, 5, 30, 0, time.Local), DeletionTimestamp:<nil>, DeletionGracePeriodSeconds:(*int64)(nil), Labels:map[string]string(nil), Annotations:map[string]string{"kubectl.kubernetes.io/last-applied-configuration":"{\"apiVersion\":\"samplecrd.my.crd/v1\",\"kind\":\"Network\",\"metadata\":{\"annotations\":{},\"name\":\"example-network\",\"namespace\":\"default\"},\"spec\":{\"cidr\":\"192.168.1.0/16\",\"gateway\":\"192.168.1.1\"}}\n"}, OwnerReferences:[]v1.OwnerReference(nil), Finalizers:[]string(nil), ClusterName:"", ManagedFields:[]v1.ManagedFieldsEntry{v1.ManagedFieldsEntry{Manager:"kubectl", Operation:"Update", APIVersion:"samplecrd.my.crd/v1", Time:time.Date(2022, time.April, 24, 17, 32, 27, 0, time.Local), FieldsType:"FieldsV1", FieldsV1:(*v1.FieldsV1)(0xc00058c2d0)}}}, Spec:v1.networkSpec{Cidr:"192.168.1.0/16", Gateway:"192.168.1.1"}} ...
I0424 17:32:27.500835 78677 controller.go:183] Successfully synced 'default/example-network'
I0424 17:32:27.501220 78677 event.go:278] Event(v1.ObjectReference{Kind:"Network", Namespace:"default", Name:"example-network", UID:"a64f80b5-8ad3-41e2-91e0-4ba51ea9651a", APIVersion:"samplecrd.my.crd/v1", ResourceVersion:"13048101", FieldPath:""}): type: 'Normal' reason: 'Synced' Network synced successfully
W0424 17:33:25.671401 78677 controller.go:212] Network: default/example-network does not exist in local cache, will delete it from Neutron ...
I0424 17:33:25.671686 78677 controller.go:215] [Neutron] Deleting network: default/example-network ...
I0424 17:33:25.672122 78677 controller.go:183] Successfully synced 'default/example-network'
```

When you create, update or delete the Network resource from another terminal, the controller prints the corresponding output, as in the log above.

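This workflow for writing a custom controller applies not only to custom API resources but equally to k8s's built-in API objects: just use a kubeInformerFactory to obtain the Informer for the corresponding API resource and then write the control logic.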