
1. Building Caffe

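Building Caffe needs little explanation, since there are plenty of tutorials online. The main thing is to get the build environment and its dependencies set up correctly.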

Usually you just run make directly, or build with CMake:

mkdir build

cd build

cmake ..

make -j8

2. Data preparation

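I prefer to train directly on image files instead of converting them to LMDB. It is simpler and easier to inspect.

First create train.txt and test.txt.

Each line holds an image path followed by its label, separated by a space.

For example: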

/data2/hello/1.jpg 0

/data2/hello/1_23.jpg 1

/data2/hello/1_24.jpg 1

Class labels are counted from 0, so with N classes the labels run from 0 to N-1.
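Before training, it can help to check both list files. A malformed line, or a label outside 0 to N-1 (N being the num_output of the final InnerProduct layer), may cause errors or silently wrong training. Below is a minimal C++ sketch. ListEntry, ParseListLine and FindBadLines are hypothetical helpers, not Caffe APIs. Splitting each line at its last space is an assumption, meant to mirror how, as far as I know, Caffe's ImageData layer reads each line.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// One "path label" entry from train.txt / test.txt (hypothetical helper type).
struct ListEntry {
  std::string path;
  int label = -1;
};

// Split at the LAST space so that paths containing spaces still parse.
// Returns false if there is no space or the label is not a non-negative integer.
bool ParseListLine(const std::string& line, ListEntry* out) {
  assert(out != nullptr);
  const std::size_t pos = line.find_last_of(' ');
  if (pos == std::string::npos || pos == 0 || pos + 1 >= line.size()) return false;
  const std::string label_str = line.substr(pos + 1);
  // Digits only, and short enough that std::stoi cannot overflow.
  if (label_str.size() > 9 || label_str.find_first_not_of("0123456789") != std::string::npos) {
    return false;
  }
  out->path = line.substr(0, pos);
  out->label = std::stoi(label_str);
  return true;
}

// Check every line of a list file: labels must lie in [0, num_classes).
// Returns the 1-based numbers of bad lines (an empty vector means the file looks fine).
std::vector<int> FindBadLines(std::istream& in, int num_classes) {
  std::vector<int> bad;
  std::string line;
  int line_no = 0;
  while (std::getline(in, line)) {
    ++line_no;
    if (!line.empty() && line.back() == '\r') line.pop_back();  // tolerate Windows line endings
    if (line.empty()) continue;                                  // ignore blank lines
    ListEntry e;
    if (!ParseListLine(line, &e) || e.label >= num_classes) bad.push_back(line_no);
  }
  return bad;
}
```

For example, open train.txt with std::ifstream and call FindBadLines(file, 5) for the 5-class setup used below. An empty result means every line parsed and every label is in range.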

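3. Network files: solver.prototxt, resnet_50.prototxt and ResNet-50-deploy.prototxt. The paths used later name the first two my_resnet_50_solver.prototxt and my_resnet_50.prototxt. Any names work, as long as the solver's net: path and the --solver argument point at the right files.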

solver.prototxt

net: "/data_1/2022/my_net_test_2022/my_resnet_50.prototxt"

test_iter: 3000

test_interval: 3000

#test_initialization: true

display: 20

average_loss: 1000

base_lr: 0.0005 ## when training from scratch, start with a somewhat larger learning rate, e.g. 0.01

lr_policy: "step"

stepsize: 200000

gamma: 0.1

max_iter: 800000

momentum: 0.9

weight_decay: 0.0001

snapshot: 2000

snapshot_prefix: "/data_1/2022/my_net_test_2022/snap/resnet_50"

solver_mode: GPU
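With lr_policy "step", the learning rate is multiplied by gamma every stepsize iterations. With these values it stays at 0.0005 up to iteration 199999, then drops to 5e-5, 5e-6 and finally 5e-7 until max_iter (800000).

One test pass evaluates test_iter × (TEST batch_size) images, here 3000 × 8 = 24000. test_iter should therefore be chosen to roughly cover the test list.

A small C++ sketch of both calculations follows; the function names are illustrative only.

```cpp
#include <cassert>
#include <cmath>

// "step" policy: lr = base_lr * gamma ^ floor(iter / stepsize)
double StepLearningRate(double base_lr, double gamma, int stepsize, int iter) {
  assert(stepsize > 0 && iter >= 0);
  return base_lr * std::pow(gamma, iter / stepsize);  // integer division acts as floor
}

// Number of test images evaluated in one test pass.
long long TestImagesPerPass(int test_iter, int test_batch_size) {
  assert(test_iter >= 0 && test_batch_size > 0);
  return static_cast<long long>(test_iter) * test_batch_size;
}
```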

resnet_50.prototxt

name: "ResNet-50"

layer {

name: "data"

type: "ImageData"

top: "data"

top: "label"

include {

phase: TRAIN

}

transform_param {

mirror: false

crop_size: 224

#mean_value: 104.0

#mean_value: 117.0

#mean_value: 123.0

}

image_data_param {

source: "/data_1/2022/train.txt"

new_height: 224

new_width: 224

batch_size: 8

shuffle: true

}

}

layer {

name: "data"

type: "ImageData"

top: "data"

top: "label"

include {

phase: TEST

}

transform_param {

crop_size: 224

#mean_value: 104.0

#mean_value: 117.0

#mean_value: 123.0

}

image_data_param {

source: "/data_1/2022/test.txt"

batch_size: 8

new_height: 224

new_width: 224

}

}

layer {

bottom: "data"

top: "conv1"

name: "conv1"

type: "Convolution"

convolution_param {

num_output: 64

kernel_size: 7

pad: 3

stride: 2

weight_filler {

type: "msra"

}

}

}

...

...

...

...

...

layer {

bottom: "pool5"

top: "fc1000"

name: "fc1000"

type: "InnerProduct"

inner_product_param {

num_output: 5 ### set this to your own number of classes

weight_filler {

type: "msra"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

bottom: "fc1000"

bottom: "label"

top: "prob"

name: "prob"

type: "SoftmaxWithLoss"

include {

phase: TRAIN

}

}

layer {

bottom: "fc1000"

bottom: "label"

top: "accuracy@1"

name: "accuracy/top1"

type: "Accuracy"

accuracy_param {

top_k: 1

}

}

layer {

bottom: "fc1000"

bottom: "label"

top: "accuracy@5"

name: "accuracy/top5"

type: "Accuracy"

accuracy_param {

top_k: 5

}

}
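The spatial sizes through the first layers can be worked out with the output-size formulas, as I understand Caffe implements them: convolution rounds down, pooling rounds up.

- conv1 (7×7, stride 2, pad 3) maps 224 to 112.
- pool1 (3×3, stride 2) maps 112 to 56.
- The stride-2 1×1 convolutions in res3a (see the full file in section 6) map 56 to 28.

Both res3a branches (res3a_branch1 and res3a_branch2a) use stride 2, which is why the Eltwise layer can add them. ConvOut and PoolOut are hypothetical helper names.

```cpp
#include <cassert>
#include <cmath>

// Convolution output size (dilation 1): floor((in + 2*pad - kernel) / stride) + 1
int ConvOut(int in, int kernel, int pad, int stride) {
  assert(stride > 0 && in + 2 * pad >= kernel);
  return (in + 2 * pad - kernel) / stride + 1;
}

// Pooling output size rounds UP: ceil((in + 2*pad - kernel) / stride) + 1
int PoolOut(int in, int kernel, int pad, int stride) {
  assert(stride > 0 && in + 2 * pad >= kernel);
  int out = static_cast<int>(std::ceil(static_cast<double>(in + 2 * pad - kernel) / stride)) + 1;
  // With padding, drop a last window that would start entirely inside the padding.
  if (pad > 0 && (out - 1) * stride >= in + pad) --out;
  return out;
}
```

With floor rounding, pool1 would give 55 instead of 56. Keep this in mind if you compare against a framework that rounds pooling sizes down.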

ResNet-50-deploy.prototxt

name: "ResNet-50"

input: "data"

input_dim: 1

input_dim: 3

input_dim: 224

input_dim: 224

layer {

bottom: "data"

top: "conv1"

name: "conv1"

type: "Convolution"

convolution_param {

num_output: 64

kernel_size: 7

pad: 3

stride: 2

}

}

...

...

...

layer {

bottom: "pool5"

top: "fc1000"

name: "fc1000"

type: "InnerProduct"

inner_product_param {

num_output: 5 # your own number of classes (must match the training prototxt)

}

}

layer {

bottom: "fc1000"

top: "prob"

name: "prob"

type: "Softmax"

}
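The deploy file declares a 1×3×224×224 input in Caffe's NCHW order: all pixels of channel 0 first, then channel 1, then channel 2. OpenCV stores a BGR image interleaved (HWC) instead. The Classifier in section 5 bridges the two with WrapInputLayer and cv::split.

The plain C++ sketch below shows the same reordering explicitly, with optional per-channel mean subtraction and scaling. HwcToChw is an illustrative name. As in Classifier::Preprocess, scale is only applied when a mean is given.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Convert an interleaved 8-bit image (HWC, e.g. BGR as read by OpenCV) into the
// planar float layout (CHW) of a 1 x C x H x W input blob.
// mean is either empty (no subtraction, no scaling) or holds one value per channel.
std::vector<float> HwcToChw(const std::vector<std::uint8_t>& hwc, int height, int width,
                            int channels, const std::vector<float>& mean, float scale) {
  assert(static_cast<int>(hwc.size()) == height * width * channels);
  assert(mean.empty() || static_cast<int>(mean.size()) == channels);
  std::vector<float> chw(hwc.size());
  for (int c = 0; c < channels; ++c) {
    for (int y = 0; y < height; ++y) {
      for (int x = 0; x < width; ++x) {
        float v = hwc[(y * width + x) * channels + c];  // interleaved source index
        if (!mean.empty()) v = (v - mean[c]) * scale;
        chw[(c * height + y) * width + x] = v;          // planar destination index
      }
    }
  }
  return chw;
}
```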

4. Training commands

./build/tools/caffe train --solver /data_1/2022/my_net_test_2022/my_resnet_50_solver.prototxt

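To continue training from a saved model, use the command below. Note that --weights only copies the learned weights: the iteration counter and the learning-rate schedule restart from 0, as the log below shows ("Finetuning from ...", "Iteration 0"). To resume the exact solver state instead, pass --snapshot with the matching resnet_50_iter_225000.solverstate file.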

./build/tools/caffe train --solver /data_1/2022/my_net_test_2022/my_resnet_50_solver.prototxt --weights /data_1/2022/my_net_test_2022/snap/resnet_50_iter_225000.caffemodel
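If you resume with --snapshot, you need the newest .solverstate in the snapshot directory. Caffe names these files after snapshot_prefix, as in resnet_50_iter_225000. A hypothetical helper to pick the newest one from a list of file names (listing the directory itself is left out):

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper: among names like "resnet_50_iter_225000.solverstate",
// return the one with the highest iteration number, or "" if none matches.
std::string LatestSolverState(const std::vector<std::string>& files) {
  const std::string kTag = "_iter_";
  const std::string kExt = ".solverstate";
  std::string best;
  long long best_iter = -1;
  for (const std::string& f : files) {
    // Keep only files ending in ".solverstate".
    if (f.size() <= kExt.size() ||
        f.compare(f.size() - kExt.size(), kExt.size(), kExt) != 0) continue;
    const std::size_t stem_end = f.size() - kExt.size();
    const std::size_t tag = f.rfind(kTag, stem_end);
    if (tag == std::string::npos) continue;
    const std::size_t num_begin = tag + kTag.size();
    if (num_begin >= stem_end) continue;  // nothing between "_iter_" and the extension
    const std::string num = f.substr(num_begin, stem_end - num_begin);
    if (num.size() > 18 || num.find_first_not_of("0123456789") != std::string::npos) continue;
    const long long iter = std::stoll(num);
    assert(iter >= 0);
    if (iter > best_iter) {
      best_iter = iter;
      best = f;
    }
  }
  return best;
}
```

Assuming the state file exists in snap/, the resume command would then be:

./build/tools/caffe train --solver /data_1/2022/my_net_test_2022/my_resnet_50_solver.prototxt --snapshot /data_1/2022/my_net_test_2022/snap/resnet_50_iter_225000.solverstate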

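Some of the output. The repeated "Waiting for data" lines mean the image-loading thread could not keep up during the test pass.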

I0119 11:46:54.103080 8442 net.cpp:202] label_data_1_split does not need backward computation.

I0119 11:46:54.103085 8442 net.cpp:202] data does not need backward computation.

I0119 11:46:54.103088 8442 net.cpp:244] This network produces output accuracy@1

I0119 11:46:54.103092 8442 net.cpp:244] This network produces output accuracy@5

I0119 11:46:54.103171 8442 net.cpp:257] Network initialization done.

I0119 11:46:54.103446 8442 solver.cpp:72] Finetuning from /data_1/2022/my_net_test_2022/snap/resnet_50_iter_225000.caffemodel

I0119 11:46:54.154101 8442 upgrade_proto.cpp:79] Attempting to upgrade batch norm layers using deprecated params: /data_1/2022/my_net_test_2022/snap/resnet_50_iter_225000.caffemodel

I0119 11:46:54.154167 8442 upgrade_proto.cpp:82] Successfully upgraded batch norm layers using deprecated params.

I0119 11:46:54.172962 8442 net.cpp:746] Ignoring source layer prob

I0119 11:46:54.175931 8442 solver.cpp:57] Solver scaffolding done.

I0119 11:46:54.183208 8442 caffe.cpp:239] Starting Optimization

I0119 11:46:54.183221 8442 solver.cpp:289] Solving ResNet-50

I0119 11:46:54.183226 8442 solver.cpp:290] Learning Rate Policy: step

I0119 11:46:54.187500 8442 solver.cpp:347] Iteration 0, Testing net (#0)

I0119 11:46:54.198930 8442 net.cpp:678] Ignoring source layer prob

I0119 11:46:54.561599 8442 blocking_queue.cpp:49] Waiting for data

I0119 11:48:21.886171 8442 blocking_queue.cpp:49] Waiting for data

I0119 11:49:52.645862 8442 blocking_queue.cpp:49] Waiting for data

I0119 11:50:32.406616 8442 solver.cpp:414] Test net output #0: accuracy@1 = 0.98425

I0119 11:50:32.406733 8442 solver.cpp:414] Test net output #1: accuracy@5 = 0.999833

I0119 11:50:32.574229 8442 solver.cpp:239] Iteration 0 (-2.31354e-41 iter/s, 218.385s/20 iters), loss = 0.014625

I0119 11:50:32.574259 8442 solver.cpp:258] Train net output #0: accuracy@1 = 1

I0119 11:50:32.574265 8442 solver.cpp:258] Train net output #1: accuracy@5 = 1

I0119 11:50:32.574271 8442 solver.cpp:258] Train net output #2: prob = 0.014625 (* 1 = 0.014625 loss)

I0119 11:50:32.574281 8442 sgd_solver.cpp:112] Iteration 0, lr = 0.0005

I0119 11:50:35.615777 8442 solver.cpp:239] Iteration 20 (6.57589 iter/s, 3.04142s/20 iters), loss = 0.0107786

I0119 11:50:35.615824 8442 solver.cpp:258] Train net output #0: accuracy@1 = 1

I0119 11:50:35.615831 8442 solver.cpp:258] Train net output #1: accuracy@5 = 1

I0119 11:50:35.615839 8442 solver.cpp:258] Train net output #2: prob = 0.0342208 (* 1 = 0.0342208 loss)

I0119 11:50:35.615845 8442 sgd_solver.cpp:112] Iteration 20, lr = 0.0005

I0119 11:50:38.667296 8442 solver.cpp:239] Iteration 40 (6.55443 iter/s, 3.05137s/20 iters), loss = 0.0113241

I0119 11:50:38.667331 8442 solver.cpp:258] Train net output #0: accuracy@1 = 1

I0119 11:50:38.667340 8442 solver.cpp:258] Train net output #1: accuracy@5 = 1

I0119 11:50:38.667351 8442 solver.cpp:258] Train net output #2: prob = 0.00137874 (* 1 = 0.00137874 loss)

I0119 11:50:38.667358 8442 sgd_solver.cpp:112] Iteration 40, lr = 0.0005

I0119 11:50:41.711781 8442 solver.cpp:239] Iteration 60 (6.56963 iter/s, 3.04431s/20 iters), loss = 0.0104271

I0119 11:50:41.712178 8442 solver.cpp:258] Train net output #0: accuracy@1 = 1

I0119 11:50:41.712193 8442 solver.cpp:258] Train net output #1: accuracy@5 = 1

I0119 11:50:41.712205 8442 solver.cpp:258] Train net output #2: prob = 0.00118907 (* 1 = 0.00118907 loss)

I0119 11:50:41.712213 8442 sgd_solver.cpp:112] Iteration 60, lr = 0.0005

I0119 11:50:44.749732 8442 solver.cpp:239] Iteration 80 (6.58447 iter/s, 3.03745s/20 iters), loss = 0.0108907
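In this log, "loss = ..." is a running average over up to average_loss (1000) recent iterations. "prob = ..." is the loss of the current iteration only. That is why the two differ: at iteration 20 they read 0.0107786 and 0.0342208.

A rough sketch of such a sliding-window average follows. It illustrates the idea and is not Caffe's own code; the class name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sliding-window mean over the last `window` per-iteration losses,
// in the spirit of the solver's average_loss setting.
class SmoothedLoss {
 public:
  explicit SmoothedLoss(std::size_t window) : window_(window) { assert(window > 0); }

  // Record the loss of one iteration and return the current smoothed value.
  double Add(double loss) {
    if (losses_.size() < window_) {
      losses_.push_back(loss);  // window not full yet: plain running mean
      sum_ += loss;
    } else {
      sum_ += loss - losses_[next_];  // replace the oldest loss in the ring buffer
      losses_[next_] = loss;
      next_ = (next_ + 1) % window_;
    }
    return sum_ / losses_.size();
  }

 private:
  std::size_t window_;
  std::size_t next_ = 0;
  double sum_ = 0.0;
  std::vector<double> losses_;
};
```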

5. Inference (forward pass)

Training produces .caffemodel snapshot files. You can then run inference with the deploy file plus a .caffemodel.

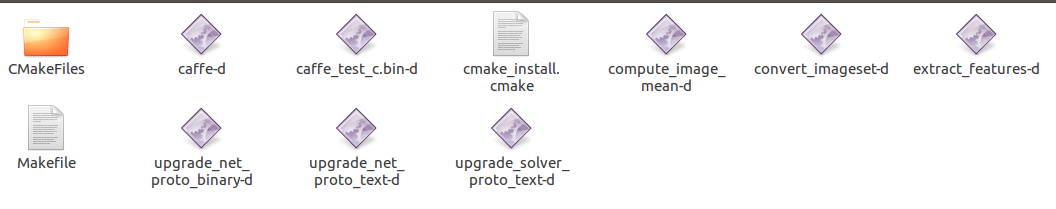

I simply added a .cpp file to the tools directory of the Caffe source tree:

.

├── caffe.cpp

├── caffe_test_c.cpp   #### newly added

├── CMakeLists.txt

├── compute_image_mean.cpp

├── convert_imageset.cpp

├── extra

├── extract_features.cpp

├── upgrade_net_proto_binary.cpp

├── upgrade_net_proto_text.cpp

└── upgrade_solver_proto_text.cpp

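Put the file in caffe/tools. The CMakeLists.txt there builds one executable for each .cpp file, so re-run cmake .. so that the new file is picked up. The resulting binary ends up under build/tools.

The code of caffe_test_c.cpp: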

#include "boost/algorithm/string.hpp"

#include "caffe/caffe.hpp"

#include "caffe/util/signal_handler.h"

#include "opencv2/opencv.hpp"

#include <iostream>

#include <algorithm>

#include <iosfwd>

#include <memory>

#include <string>

#include <utility>

#include <vector>

#include <dirent.h>

#include <fstream>

#include <sys/types.h>

#include <sys/stat.h>

#include <sstream>

#include <iomanip>

#include <ctime>

#include <stdio.h>

using namespace std;

using namespace cv;

class Classifier {

public:

Classifier(const std::string& model_file, const std::string& trained_file,

const std::string& mean_file, const std::string& mean_value, const std::string& scale_value);

std::vector<float> Predict(const cv::Mat& img);

std::vector<vector<float> > PredictMulti(const cv::Mat& img);

vector<float> extractFeatures(const cv::Mat &img, const std::string &blob_name);

private:

void SetMean(const std::string& mean_file, const std::string& mean_value);

void WrapInputLayer(std::vector<cv::Mat>* input_channels);

void Preprocess(const cv::Mat& img, std::vector<cv::Mat>* input_channels);

private:

boost::shared_ptr<caffe::Net<float> > net_;

cv::Size input_geometry_;

int num_channels_;

bool use_mean_;

cv::Mat mean_;

float scale_;

std::vector<std::string> labels_;

};

////////////////////////////////////////////////////////////////////////////////////////////////

using namespace caffe;

using caffe::Blob;

using caffe::Caffe;

using caffe::Net;

using caffe::Layer;

using caffe::Solver;

using caffe::shared_ptr;

using caffe::string;

using caffe::Timer;

using caffe::vector;

using std::ostringstream;

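// Note: main() below passes the mean/scale arguments explicitly and never parses command-line flags, so these two flags are effectively unused.

DEFINE_string(mean_file, "",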

"The mean file used to subtract from the input image.");

DEFINE_string(mean_value, "104,117,123",

"If specified, can be one value or can be same as image channels"

" - would subtract from the corresponding channel). Separated by ','."

"Either mean_file or mean_value should be provided, not both.");

Classifier::Classifier(const string& model_file, const string& trained_file, const string& mean_file, const string& mean_value, const string& scale_value)

{

Caffe::SetDevice(0);

Caffe::set_mode(Caffe::GPU);

net_.reset(new Net<float>(model_file, TEST));

net_->CopyTrainedLayersFrom(trained_file);

Blob<float>* input_layer = net_->input_blobs()[0];

num_channels_ = input_layer->channels();

CHECK(num_channels_ == 3 || num_channels_ == 1) << "Input layer should have 1 or 3 channels.";

input_geometry_ = cv::Size(input_layer->width(), input_layer->height());

if("" != mean_file || "" != mean_value)

{

use_mean_ = true;

SetMean(mean_file, mean_value);

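// An empty scale_value would make atof() return 0 and zero out the whole input, so default to 1

scale_ = scale_value.empty() ? 1.0f : static_cast<float>(std::atof(scale_value.c_str()));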

}

else

{

use_mean_ = false;

scale_ = 1; // no mean subtraction, no scaling

}

}

void Classifier::SetMean(const string& mean_file, const string& mean_value)

{

if (!mean_file.empty()) {

BlobProto blob_proto;

ReadProtoFromBinaryFileOrDie(mean_file.c_str(), &blob_proto);

Blob<float> mean_blob;

mean_blob.FromProto(blob_proto);

CHECK_EQ(mean_blob.channels(), num_channels_)

<< "Number of channels of mean file doesn't match input layer.";

std::vector<cv::Mat> channels;

float* data = mean_blob.mutable_cpu_data();

for (int i = 0; i < num_channels_; ++i) {

cv::Mat channel(mean_blob.height(), mean_blob.width(), CV_32FC1, data);

channels.push_back(channel);

data += mean_blob.height() * mean_blob.width();

}

cv::Mat mean;

cv::merge(channels, mean);

// cv::Scalar channel_mean = cv::mean(mean);

// mean_ = cv::Mat(input_geometry_, mean.type(), channel_mean);

mean_ = mean.clone();

}

if (!mean_value.empty()) {

CHECK(mean_file.empty()) <<

"Cannot specify mean_file and mean_value at the same time";

stringstream ss(mean_value);

vector<float> values;

string item;

while (getline(ss, item, ',')) {

float value = std::atof(item.c_str());

values.push_back(value);

}

CHECK((int)values.size() == 1 || (int)values.size() == num_channels_) <<

"Specify either 1 mean_value or as many as channels: " << num_channels_;

std::vector<cv::Mat> channels;

for (int i = 0; i < num_channels_; ++i) {

/* Extract an individual channel. */

cv::Mat channel(input_geometry_.height, input_geometry_.width, CV_32FC1,

cv::Scalar(values[i]));

channels.push_back(channel);

}

cv::merge(channels, mean_);

}

}

void Classifier::Preprocess(const cv::Mat& img, std::vector<cv::Mat>* input_channels)

{

cv::Mat sample;

if (img.channels() == 3 && num_channels_ == 1)

cv::cvtColor(img, sample, cv::COLOR_BGR2GRAY);

else if (img.channels() == 4 && num_channels_ == 1)

cv::cvtColor(img, sample, cv::COLOR_BGRA2GRAY);

else if (img.channels() == 4 && num_channels_ == 3)

cv::cvtColor(img, sample, cv::COLOR_BGRA2BGR);

else if (img.channels() == 1 && num_channels_ == 3)

cv::cvtColor(img, sample, cv::COLOR_GRAY2BGR);

else

sample = img;

cv::Mat sample_resized;

if (sample.size() != input_geometry_)

cv::resize(sample, sample_resized, input_geometry_);

else

sample_resized = sample;

cv::Mat sample_float;

if (num_channels_ == 3)

sample_resized.convertTo(sample_float, CV_32FC3);

else

sample_resized.convertTo(sample_float, CV_32FC1);

cv::Mat sample_normalized;

if(use_mean_)

{

cv::subtract(sample_float, mean_, sample_normalized);

sample_normalized = sample_normalized*scale_;

}

else

sample_normalized = sample_float.clone();

// cv::Mat resimg=(sample_normalized-cv::Scalar(127.5, 127.5, 127.5))*0.0078125;

cv::Mat resimg=sample_normalized;

cv::split(resimg, *input_channels);

// cv::split(sample_normalized, *input_channels);

CHECK(reinterpret_cast<float*>(input_channels->at(0).data) == net_->input_blobs()[0]->cpu_data())

<< "Input channels are not wrapping the input layer of the network.";

}

vector<float> Classifier::extractFeatures(const cv::Mat &img, const std::string &blob_name) {

vector<float> blob_features;

Blob<float>* input_layer = net_->input_blobs()[0];

input_layer->Reshape(1, num_channels_, input_geometry_.height, input_geometry_.width);

net_->Reshape();

std::vector<cv::Mat> input_channels;

WrapInputLayer(&input_channels);

Preprocess(img, &input_channels);

net_->Forward();

const boost::shared_ptr<Blob<float> > blob_layer = net_->blob_by_name(blob_name);

const float* blob_layer_data = blob_layer->cpu_data(); // multi-dimension's data

// TODO: the index's range

int featureNum = blob_layer->count(1);

for (int i = 0; i < featureNum; i++) {

blob_features.push_back(*blob_layer_data);

blob_layer_data++;

}

return blob_features;

}

vector<float> Classifier::Predict(const cv::Mat& img)

{

Blob<float>* input_layer = net_->input_blobs()[0];

input_layer->Reshape(1, num_channels_, input_geometry_.height, input_geometry_.width);

net_->Reshape();

std::vector<cv::Mat> input_channels;

WrapInputLayer(&input_channels);

Preprocess(img, &input_channels);

net_->Forward();

Blob<float>* output_layer = net_->output_blobs()[0];

const float* begin = output_layer->cpu_data();

const float* end = begin + output_layer->channels();

return std::vector<float>(begin, end);

}

vector<vector<float> > Classifier::PredictMulti(const cv::Mat& img)

{

Blob<float>* input_layer = net_->input_blobs()[0];

input_layer->Reshape(1, num_channels_, input_geometry_.height, input_geometry_.width);

net_->Reshape();

std::vector<cv::Mat> input_channels;

WrapInputLayer(&input_channels);

Preprocess(img, &input_channels);

net_->Forward();

Blob<float>* output_layer1 = net_->output_blobs()[0];

Blob<float>* output_layer2 = net_->output_blobs()[1];

const float* begin1 = output_layer1->cpu_data();

const float* end1 = begin1 + output_layer1->channels();

std::vector<float> vec1 = std::vector<float>(begin1, end1);

const float* begin2 = output_layer2->cpu_data();

const float* end2 = begin2 + output_layer2->channels();

std::vector<float> vec2 = std::vector<float>(begin2, end2);

std::vector<vector<float> > res;

res.push_back(vec1);

res.push_back(vec2);

return res;

}

void Classifier::WrapInputLayer(std::vector<cv::Mat>* input_channels)

{

Blob<float>* input_layer = net_->input_blobs()[0];

int width = input_layer->width();

int height = input_layer->height();

float* input_data = input_layer->mutable_cpu_data();

for (int i = 0; i < input_layer->channels(); ++i) {

cv::Mat channel(height, width, CV_32FC1, input_data);

input_channels->push_back(channel);

input_data += width * height;

}

}

////////////////////////////////////////////////////////////////////////////////////////////////

struct Info

{

int idx;

float score;

};

bool sort_score(Info& i1, Info& i2)

{

return i1.score > i2.score;

}

int main()

{

string cls_model = "/data_1/2022/my_net_test_2022/ResNet-50-deploy.prototxt";

string cls_weights = "/data_1/2022/my_net_test_2022/snap/resnet_50_iter_225000.caffemodel";

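// Empty mean_file / mean_value / scale_value: no mean subtraction, matching the training prototxt where mean_value is commented out

Classifier* cls = new Classifier(cls_model, cls_weights, "", "", "");

// One image path per line; if a "path label" list is used instead, the label tokens fail cv::imread and are skipped below

ifstream infile("/data_1/2022/test_list.txt");

CHECK(infile.is_open()) << "Cannot open the image list file";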

string line;

while(infile >> line)

{

cv::Mat img = cv::imread(line);

if(img.empty())continue;

Mat dst = img.clone();

vector<float> predictions = cls->Predict(dst);

vector<Info> infos;

for(int k = 0; k < static_cast<int>(predictions.size()); k++)

{

Info info;

info.idx = k;

info.score = predictions[k];

infos.push_back(info);

}

sort(infos.begin(), infos.end(), sort_score);

Info info = infos[0];

int idx = info.idx;

std::cout<<"path="<<line<<std::endl;

std::cout<<"label="<<idx<<std::endl;

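// Shows each image and waits for a key press; remove these two lines when running on a headless server

cv::imshow("img",img);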

cv::waitKey(0);

}

delete cls;

return 0;

}
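main() sorts every class score just to read the best one. A minimal alternative is sketched below with a hypothetical TopK helper. It returns the indices of the k highest scores from a vector such as the one returned by Classifier::Predict. For k = 1 it gives the same class as std::max_element, barring ties.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <vector>

// Return the indices of the k highest scores, best first (ties in arbitrary order).
std::vector<int> TopK(const std::vector<float>& scores, int k) {
  assert(k >= 0);
  std::vector<int> idx(scores.size());
  std::iota(idx.begin(), idx.end(), 0);  // 0, 1, 2, ...
  k = std::min<int>(k, static_cast<int>(idx.size()));
  // Only the first k positions need to be ordered.
  std::partial_sort(idx.begin(), idx.begin() + k, idx.end(),
                    [&scores](int a, int b) { return scores[a] > scores[b]; });
  idx.resize(k);
  return idx;
}
```

In main(), int idx = TopK(predictions, 1)[0]; would replace the Info vector and the sort.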

6. The three complete network files

my_resnet_50_solver.prototxt

net: "/data_1/2022/my_net_test_2022/my_resnet_50.prototxt"

test_iter: 3000

test_interval: 3000

#test_initialization: true

display: 20

average_loss: 1000

base_lr: 0.0005

lr_policy: "step"

stepsize: 200000

gamma: 0.1

max_iter: 800000

momentum: 0.9

weight_decay: 0.0001

snapshot: 2000

snapshot_prefix: "/data_1/2022/my_net_test_2022/snap/resnet_50"

solver_mode: GPU

my_resnet_50.prototxt

name: "ResNet-50"

layer {

name: "data"

type: "ImageData"

top: "data"

top: "label"

include {

phase: TRAIN

}

transform_param {

mirror: false

crop_size: 224

#mean_value: 104.0

#mean_value: 117.0

#mean_value: 123.0

}

image_data_param {

source: "/data_1/2022/train-20211025.txt"

new_height: 224

new_width: 224

batch_size: 8

shuffle: true

}

}

layer {

name: "data"

type: "ImageData"

top: "data"

top: "label"

include {

phase: TEST

}

transform_param {

crop_size: 224

#mean_value: 104.0

#mean_value: 117.0

#mean_value: 123.0

}

image_data_param {

source: "/data_1/2022/val-20211025.txt"

batch_size: 8

new_height: 224

new_width: 224

}

}

layer {

bottom: "data"

top: "conv1"

name: "conv1"

type: "Convolution"

convolution_param {

num_output: 64

kernel_size: 7

pad: 3

stride: 2

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "conv1"

top: "conv1"

name: "bn_conv1"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "conv1"

top: "conv1"

name: "scale_conv1"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "conv1"

top: "conv1"

name: "conv1_relu"

type: "ReLU"

}

layer {

bottom: "conv1"

top: "pool1"

name: "pool1"

type: "Pooling"

pooling_param {

kernel_size: 3

stride: 2

pool: MAX

}

}

layer {

bottom: "pool1"

top: "res2a_branch1"

name: "res2a_branch1"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res2a_branch1"

top: "res2a_branch1"

name: "bn2a_branch1"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res2a_branch1"

top: "res2a_branch1"

name: "scale2a_branch1"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "pool1"

top: "res2a_branch2a"

name: "res2a_branch2a"

type: "Convolution"

convolution_param {

num_output: 64

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res2a_branch2a"

top: "res2a_branch2a"

name: "bn2a_branch2a"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res2a_branch2a"

top: "res2a_branch2a"

name: "scale2a_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res2a_branch2a"

top: "res2a_branch2a"

name: "res2a_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res2a_branch2a"

top: "res2a_branch2b"

name: "res2a_branch2b"

type: "Convolution"

convolution_param {

num_output: 64

kernel_size: 3

pad: 1

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res2a_branch2b"

top: "res2a_branch2b"

name: "bn2a_branch2b"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res2a_branch2b"

top: "res2a_branch2b"

name: "scale2a_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res2a_branch2b"

top: "res2a_branch2b"

name: "res2a_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res2a_branch2b"

top: "res2a_branch2c"

name: "res2a_branch2c"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res2a_branch2c"

top: "res2a_branch2c"

name: "bn2a_branch2c"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res2a_branch2c"

top: "res2a_branch2c"

name: "scale2a_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res2a_branch1"

bottom: "res2a_branch2c"

top: "res2a"

name: "res2a"

type: "Eltwise"

}

layer {

bottom: "res2a"

top: "res2a"

name: "res2a_relu"

type: "ReLU"

}

layer {

bottom: "res2a"

top: "res2b_branch2a"

name: "res2b_branch2a"

type: "Convolution"

convolution_param {

num_output: 64

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res2b_branch2a"

top: "res2b_branch2a"

name: "bn2b_branch2a"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res2b_branch2a"

top: "res2b_branch2a"

name: "scale2b_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res2b_branch2a"

top: "res2b_branch2a"

name: "res2b_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res2b_branch2a"

top: "res2b_branch2b"

name: "res2b_branch2b"

type: "Convolution"

convolution_param {

num_output: 64

kernel_size: 3

pad: 1

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res2b_branch2b"

top: "res2b_branch2b"

name: "bn2b_branch2b"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res2b_branch2b"

top: "res2b_branch2b"

name: "scale2b_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res2b_branch2b"

top: "res2b_branch2b"

name: "res2b_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res2b_branch2b"

top: "res2b_branch2c"

name: "res2b_branch2c"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res2b_branch2c"

top: "res2b_branch2c"

name: "bn2b_branch2c"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res2b_branch2c"

top: "res2b_branch2c"

name: "scale2b_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res2a"

bottom: "res2b_branch2c"

top: "res2b"

name: "res2b"

type: "Eltwise"

}

layer {

bottom: "res2b"

top: "res2b"

name: "res2b_relu"

type: "ReLU"

}

layer {

bottom: "res2b"

top: "res2c_branch2a"

name: "res2c_branch2a"

type: "Convolution"

convolution_param {

num_output: 64

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res2c_branch2a"

top: "res2c_branch2a"

name: "bn2c_branch2a"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res2c_branch2a"

top: "res2c_branch2a"

name: "scale2c_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res2c_branch2a"

top: "res2c_branch2a"

name: "res2c_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res2c_branch2a"

top: "res2c_branch2b"

name: "res2c_branch2b"

type: "Convolution"

convolution_param {

num_output: 64

kernel_size: 3

pad: 1

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res2c_branch2b"

top: "res2c_branch2b"

name: "bn2c_branch2b"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res2c_branch2b"

top: "res2c_branch2b"

name: "scale2c_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res2c_branch2b"

top: "res2c_branch2b"

name: "res2c_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res2c_branch2b"

top: "res2c_branch2c"

name: "res2c_branch2c"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res2c_branch2c"

top: "res2c_branch2c"

name: "bn2c_branch2c"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res2c_branch2c"

top: "res2c_branch2c"

name: "scale2c_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res2b"

bottom: "res2c_branch2c"

top: "res2c"

name: "res2c"

type: "Eltwise"

}

layer {

bottom: "res2c"

top: "res2c"

name: "res2c_relu"

type: "ReLU"

}

layer {

bottom: "res2c"

top: "res3a_branch1"

name: "res3a_branch1"

type: "Convolution"

convolution_param {

num_output: 512

kernel_size: 1

pad: 0

stride: 2

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res3a_branch1"

top: "res3a_branch1"

name: "bn3a_branch1"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res3a_branch1"

top: "res3a_branch1"

name: "scale3a_branch1"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res2c"

top: "res3a_branch2a"

name: "res3a_branch2a"

type: "Convolution"

convolution_param {

num_output: 128

kernel_size: 1

pad: 0

stride: 2

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res3a_branch2a"

top: "res3a_branch2a"

name: "bn3a_branch2a"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res3a_branch2a"

top: "res3a_branch2a"

name: "scale3a_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res3a_branch2a"

top: "res3a_branch2a"

name: "res3a_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res3a_branch2a"

top: "res3a_branch2b"

name: "res3a_branch2b"

type: "Convolution"

convolution_param {

num_output: 128

kernel_size: 3

pad: 1

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res3a_branch2b"

top: "res3a_branch2b"

name: "bn3a_branch2b"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res3a_branch2b"

top: "res3a_branch2b"

name: "scale3a_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res3a_branch2b"

top: "res3a_branch2b"

name: "res3a_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res3a_branch2b"

top: "res3a_branch2c"

name: "res3a_branch2c"

type: "Convolution"

convolution_param {

num_output: 512

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res3a_branch2c"

top: "res3a_branch2c"

name: "bn3a_branch2c"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res3a_branch2c"

top: "res3a_branch2c"

name: "scale3a_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res3a_branch1"

bottom: "res3a_branch2c"

top: "res3a"

name: "res3a"

type: "Eltwise"

}

layer {

bottom: "res3a"

top: "res3a"

name: "res3a_relu"

type: "ReLU"

}

layer {

bottom: "res3a"

top: "res3b_branch2a"

name: "res3b_branch2a"

type: "Convolution"

convolution_param {

num_output: 128

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res3b_branch2a"

top: "res3b_branch2a"

name: "bn3b_branch2a"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res3b_branch2a"

top: "res3b_branch2a"

name: "scale3b_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res3b_branch2a"

top: "res3b_branch2a"

name: "res3b_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res3b_branch2a"

top: "res3b_branch2b"

name: "res3b_branch2b"

type: "Convolution"

convolution_param {

num_output: 128

kernel_size: 3

pad: 1

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res3b_branch2b"

top: "res3b_branch2b"

name: "bn3b_branch2b"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res3b_branch2b"

top: "res3b_branch2b"

name: "scale3b_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res3b_branch2b"

top: "res3b_branch2b"

name: "res3b_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res3b_branch2b"

top: "res3b_branch2c"

name: "res3b_branch2c"

type: "Convolution"

convolution_param {

num_output: 512

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res3b_branch2c"

top: "res3b_branch2c"

name: "bn3b_branch2c"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res3b_branch2c"

top: "res3b_branch2c"

name: "scale3b_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res3a"

bottom: "res3b_branch2c"

top: "res3b"

name: "res3b"

type: "Eltwise"

}

layer {

bottom: "res3b"

top: "res3b"

name: "res3b_relu"

type: "ReLU"

}

layer {

bottom: "res3b"

top: "res3c_branch2a"

name: "res3c_branch2a"

type: "Convolution"

convolution_param {

num_output: 128

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res3c_branch2a"

top: "res3c_branch2a"

name: "bn3c_branch2a"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res3c_branch2a"

top: "res3c_branch2a"

name: "scale3c_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res3c_branch2a"

top: "res3c_branch2a"

name: "res3c_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res3c_branch2a"

top: "res3c_branch2b"

name: "res3c_branch2b"

type: "Convolution"

convolution_param {

num_output: 128

kernel_size: 3

pad: 1

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res3c_branch2b"

top: "res3c_branch2b"

name: "bn3c_branch2b"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res3c_branch2b"

top: "res3c_branch2b"

name: "scale3c_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res3c_branch2b"

top: "res3c_branch2b"

name: "res3c_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res3c_branch2b"

top: "res3c_branch2c"

name: "res3c_branch2c"

type: "Convolution"

convolution_param {

num_output: 512

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res3c_branch2c"

top: "res3c_branch2c"

name: "bn3c_branch2c"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res3c_branch2c"

top: "res3c_branch2c"

name: "scale3c_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res3b"

bottom: "res3c_branch2c"

top: "res3c"

name: "res3c"

type: "Eltwise"

}

layer {

bottom: "res3c"

top: "res3c"

name: "res3c_relu"

type: "ReLU"

}
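Every identity block in this file repeats the same 13 layers: res3b to res3d, res4b to res4f, res5b and res5c, and likewise in stage 2. Each block has three Convolution/BatchNorm/Scale groups, a ReLU after the first two, an Eltwise that sums the branch with the block input, and a final ReLU. Instead of editing these blocks by hand, a small script can emit them. The following is a hypothetical generator; the function names are illustrative and not part of Caffe. It reproduces the layer names used here. For example, `identity_block(3, "c", "res3b", 128, 512)` yields the res3c block above, with indentation added for readability.

```python
def conv_layer(name, bottom, num_output, kernel_size, pad, stride):
    return (f'layer {{\n  bottom: "{bottom}"\n  top: "{name}"\n  name: "{name}"\n'
            f'  type: "Convolution"\n  convolution_param {{\n'
            f'    num_output: {num_output}\n    kernel_size: {kernel_size}\n'
            f'    pad: {pad}\n    stride: {stride}\n    bias_term: false\n'
            f'    weight_filler {{\n      type: "msra"\n    }}\n  }}\n}}\n')

def bn_scale_layers(blob, suffix):
    # BatchNorm keeps 3 non-learned blobs, hence three frozen param blocks
    frozen = '  param {\n    lr_mult: 0\n    decay_mult: 0\n  }\n' * 3
    return (f'layer {{\n  bottom: "{blob}"\n  top: "{blob}"\n  name: "bn{suffix}"\n'
            f'  type: "BatchNorm"\n{frozen}}}\n'
            f'layer {{\n  bottom: "{blob}"\n  top: "{blob}"\n  name: "scale{suffix}"\n'
            f'  type: "Scale"\n  scale_param {{\n    bias_term: true\n  }}\n}}\n')

def relu_layer(blob):
    return (f'layer {{\n  bottom: "{blob}"\n  top: "{blob}"\n'
            f'  name: "{blob}_relu"\n  type: "ReLU"\n}}\n')

def identity_block(stage, block, bottom, mid, out):
    """Emit one bottleneck block with an identity shortcut as prototxt text."""
    p = f"res{stage}{block}"   # e.g. res3c
    s = f"{stage}{block}"      # e.g. 3c, used in bn/scale names
    specs = [("2a", bottom, mid, 1, 0),
             ("2b", f"{p}_branch2a", mid, 3, 1),
             ("2c", f"{p}_branch2b", out, 1, 0)]
    text = ""
    for branch, src, n, k, pad in specs:
        blob = f"{p}_branch{branch}"
        text += conv_layer(blob, src, n, k, pad, 1)
        text += bn_scale_layers(blob, f"{s}_branch{branch}")
        if branch != "2c":  # no ReLU before the residual sum
            text += relu_layer(blob)
    # Eltwise defaults to SUM: block input + residual branch
    text += (f'layer {{\n  bottom: "{bottom}"\n  bottom: "{p}_branch2c"\n'
             f'  top: "{p}"\n  name: "{p}"\n  type: "Eltwise"\n}}\n')
    text += relu_layer(p)
    return text

if __name__ == "__main__":
    print(identity_block(3, "c", "res3b", 128, 512))
```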

layer {

bottom: "res3c"

top: "res3d_branch2a"

name: "res3d_branch2a"

type: "Convolution"

convolution_param {

num_output: 128

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res3d_branch2a"

top: "res3d_branch2a"

name: "bn3d_branch2a"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res3d_branch2a"

top: "res3d_branch2a"

name: "scale3d_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res3d_branch2a"

top: "res3d_branch2a"

name: "res3d_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res3d_branch2a"

top: "res3d_branch2b"

name: "res3d_branch2b"

type: "Convolution"

convolution_param {

num_output: 128

kernel_size: 3

pad: 1

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res3d_branch2b"

top: "res3d_branch2b"

name: "bn3d_branch2b"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res3d_branch2b"

top: "res3d_branch2b"

name: "scale3d_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res3d_branch2b"

top: "res3d_branch2b"

name: "res3d_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res3d_branch2b"

top: "res3d_branch2c"

name: "res3d_branch2c"

type: "Convolution"

convolution_param {

num_output: 512

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res3d_branch2c"

top: "res3d_branch2c"

name: "bn3d_branch2c"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res3d_branch2c"

top: "res3d_branch2c"

name: "scale3d_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res3c"

bottom: "res3d_branch2c"

top: "res3d"

name: "res3d"

type: "Eltwise"

}

layer {

bottom: "res3d"

top: "res3d"

name: "res3d_relu"

type: "ReLU"

}

layer {

bottom: "res3d"

top: "res4a_branch1"

name: "res4a_branch1"

type: "Convolution"

convolution_param {

num_output: 1024

kernel_size: 1

pad: 0

stride: 2

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4a_branch1"

top: "res4a_branch1"

name: "bn4a_branch1"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4a_branch1"

top: "res4a_branch1"

name: "scale4a_branch1"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res3d"

top: "res4a_branch2a"

name: "res4a_branch2a"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 1

pad: 0

stride: 2

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4a_branch2a"

top: "res4a_branch2a"

name: "bn4a_branch2a"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4a_branch2a"

top: "res4a_branch2a"

name: "scale4a_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4a_branch2a"

top: "res4a_branch2a"

name: "res4a_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res4a_branch2a"

top: "res4a_branch2b"

name: "res4a_branch2b"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 3

pad: 1

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4a_branch2b"

top: "res4a_branch2b"

name: "bn4a_branch2b"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4a_branch2b"

top: "res4a_branch2b"

name: "scale4a_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4a_branch2b"

top: "res4a_branch2b"

name: "res4a_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res4a_branch2b"

top: "res4a_branch2c"

name: "res4a_branch2c"

type: "Convolution"

convolution_param {

num_output: 1024

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4a_branch2c"

top: "res4a_branch2c"

name: "bn4a_branch2c"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4a_branch2c"

top: "res4a_branch2c"

name: "scale4a_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4a_branch1"

bottom: "res4a_branch2c"

top: "res4a"

name: "res4a"

type: "Eltwise"

}

layer {

bottom: "res4a"

top: "res4a"

name: "res4a_relu"

type: "ReLU"

}
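res4a is a projection block. res3d has 512 channels and cannot be added directly to a 1024-channel output. So res4a_branch1 uses a 1x1 convolution with 1024 outputs and stride 2, and res4a_branch2a applies the same stride 2. This way both inputs of the Eltwise layer end up with identical shapes. You can check this with Caffe's convolution output formula, floor((in + 2*pad - kernel) / stride) + 1. The sketch below assumes a 224x224 network input, which makes res3d 28x28; the helper names are illustrative.

```python
def conv_out(size, kernel, pad, stride):
    # Caffe Convolution output size: floor((in + 2*pad - kernel) / stride) + 1
    return (size + 2 * pad - kernel) // stride + 1

def conv_shape(shape, num_output, kernel, pad, stride):
    """shape is (channels, height, width) of the bottom blob."""
    _, h, w = shape
    return (num_output, conv_out(h, kernel, pad, stride), conv_out(w, kernel, pad, stride))

res3d = (512, 28, 28)  # assumes a 224x224 network input

branch1 = conv_shape(res3d, 1024, kernel=1, pad=0, stride=2)      # res4a_branch1
branch2a = conv_shape(res3d, 256, kernel=1, pad=0, stride=2)      # res4a_branch2a
branch2b = conv_shape(branch2a, 256, kernel=3, pad=1, stride=1)   # res4a_branch2b
branch2c = conv_shape(branch2b, 1024, kernel=1, pad=0, stride=1)  # res4a_branch2c

# Eltwise needs both bottoms to have exactly the same shape
assert branch1 == branch2c, (branch1, branch2c)
print(branch1)  # (1024, 14, 14)
```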

layer {

bottom: "res4a"

top: "res4b_branch2a"

name: "res4b_branch2a"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4b_branch2a"

top: "res4b_branch2a"

name: "bn4b_branch2a"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4b_branch2a"

top: "res4b_branch2a"

name: "scale4b_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4b_branch2a"

top: "res4b_branch2a"

name: "res4b_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res4b_branch2a"

top: "res4b_branch2b"

name: "res4b_branch2b"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 3

pad: 1

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4b_branch2b"

top: "res4b_branch2b"

name: "bn4b_branch2b"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4b_branch2b"

top: "res4b_branch2b"

name: "scale4b_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4b_branch2b"

top: "res4b_branch2b"

name: "res4b_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res4b_branch2b"

top: "res4b_branch2c"

name: "res4b_branch2c"

type: "Convolution"

convolution_param {

num_output: 1024

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4b_branch2c"

top: "res4b_branch2c"

name: "bn4b_branch2c"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4b_branch2c"

top: "res4b_branch2c"

name: "scale4b_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4a"

bottom: "res4b_branch2c"

top: "res4b"

name: "res4b"

type: "Eltwise"

}

layer {

bottom: "res4b"

top: "res4b"

name: "res4b_relu"

type: "ReLU"

}
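Every convolution here sets `bias_term: false`. The learnable parameters of a bottleneck block are therefore just the convolution weights plus the gamma/beta pairs of its three Scale layers. The BatchNorm blobs are running statistics, not trained weights. For the res4 identity blocks (1024, 256, 256, 1024 channels), the arithmetic works out as follows; the helper names are illustrative.

```python
def conv_weights(c_in, c_out, kernel):
    return c_in * c_out * kernel * kernel  # bias_term: false, so no bias

def bottleneck_params(c_in, mid, c_out):
    """Learnable parameters of one bottleneck block with an identity shortcut."""
    convs = (conv_weights(c_in, mid, 1)      # branch2a, 1x1
             + conv_weights(mid, mid, 3)     # branch2b, 3x3
             + conv_weights(mid, c_out, 1))  # branch2c, 1x1
    scales = 2 * (mid + mid + c_out)         # gamma and beta of the three Scale layers
    return convs, scales

convs, scales = bottleneck_params(1024, 256, 1024)
print(convs, scales)          # 1114112 3072
print(5 * (convs + scales))   # res4b to res4f together: 5585920
```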

layer {

bottom: "res4b"

top: "res4c_branch2a"

name: "res4c_branch2a"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4c_branch2a"

top: "res4c_branch2a"

name: "bn4c_branch2a"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4c_branch2a"

top: "res4c_branch2a"

name: "scale4c_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4c_branch2a"

top: "res4c_branch2a"

name: "res4c_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res4c_branch2a"

top: "res4c_branch2b"

name: "res4c_branch2b"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 3

pad: 1

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4c_branch2b"

top: "res4c_branch2b"

name: "bn4c_branch2b"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4c_branch2b"

top: "res4c_branch2b"

name: "scale4c_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4c_branch2b"

top: "res4c_branch2b"

name: "res4c_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res4c_branch2b"

top: "res4c_branch2c"

name: "res4c_branch2c"

type: "Convolution"

convolution_param {

num_output: 1024

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4c_branch2c"

top: "res4c_branch2c"

name: "bn4c_branch2c"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4c_branch2c"

top: "res4c_branch2c"

name: "scale4c_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4b"

bottom: "res4c_branch2c"

top: "res4c"

name: "res4c"

type: "Eltwise"

}

layer {

bottom: "res4c"

top: "res4c"

name: "res4c_relu"

type: "ReLU"

}

layer {

bottom: "res4c"

top: "res4d_branch2a"

name: "res4d_branch2a"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4d_branch2a"

top: "res4d_branch2a"

name: "bn4d_branch2a"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4d_branch2a"

top: "res4d_branch2a"

name: "scale4d_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4d_branch2a"

top: "res4d_branch2a"

name: "res4d_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res4d_branch2a"

top: "res4d_branch2b"

name: "res4d_branch2b"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 3

pad: 1

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4d_branch2b"

top: "res4d_branch2b"

name: "bn4d_branch2b"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4d_branch2b"

top: "res4d_branch2b"

name: "scale4d_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4d_branch2b"

top: "res4d_branch2b"

name: "res4d_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res4d_branch2b"

top: "res4d_branch2c"

name: "res4d_branch2c"

type: "Convolution"

convolution_param {

num_output: 1024

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4d_branch2c"

top: "res4d_branch2c"

name: "bn4d_branch2c"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4d_branch2c"

top: "res4d_branch2c"

name: "scale4d_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4c"

bottom: "res4d_branch2c"

top: "res4d"

name: "res4d"

type: "Eltwise"

}

layer {

bottom: "res4d"

top: "res4d"

name: "res4d_relu"

type: "ReLU"

}

layer {

bottom: "res4d"

top: "res4e_branch2a"

name: "res4e_branch2a"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4e_branch2a"

top: "res4e_branch2a"

name: "bn4e_branch2a"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4e_branch2a"

top: "res4e_branch2a"

name: "scale4e_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4e_branch2a"

top: "res4e_branch2a"

name: "res4e_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res4e_branch2a"

top: "res4e_branch2b"

name: "res4e_branch2b"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 3

pad: 1

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4e_branch2b"

top: "res4e_branch2b"

name: "bn4e_branch2b"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4e_branch2b"

top: "res4e_branch2b"

name: "scale4e_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4e_branch2b"

top: "res4e_branch2b"

name: "res4e_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res4e_branch2b"

top: "res4e_branch2c"

name: "res4e_branch2c"

type: "Convolution"

convolution_param {

num_output: 1024

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4e_branch2c"

top: "res4e_branch2c"

name: "bn4e_branch2c"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4e_branch2c"

top: "res4e_branch2c"

name: "scale4e_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4d"

bottom: "res4e_branch2c"

top: "res4e"

name: "res4e"

type: "Eltwise"

}

layer {

bottom: "res4e"

top: "res4e"

name: "res4e_relu"

type: "ReLU"

}

layer {

bottom: "res4e"

top: "res4f_branch2a"

name: "res4f_branch2a"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4f_branch2a"

top: "res4f_branch2a"

name: "bn4f_branch2a"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4f_branch2a"

top: "res4f_branch2a"

name: "scale4f_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4f_branch2a"

top: "res4f_branch2a"

name: "res4f_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res4f_branch2a"

top: "res4f_branch2b"

name: "res4f_branch2b"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 3

pad: 1

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4f_branch2b"

top: "res4f_branch2b"

name: "bn4f_branch2b"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4f_branch2b"

top: "res4f_branch2b"

name: "scale4f_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4f_branch2b"

top: "res4f_branch2b"

name: "res4f_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res4f_branch2b"

top: "res4f_branch2c"

name: "res4f_branch2c"

type: "Convolution"

convolution_param {

num_output: 1024

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res4f_branch2c"

top: "res4f_branch2c"

name: "bn4f_branch2c"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res4f_branch2c"

top: "res4f_branch2c"

name: "scale4f_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4e"

bottom: "res4f_branch2c"

top: "res4f"

name: "res4f"

type: "Eltwise"

}

layer {

bottom: "res4f"

top: "res4f"

name: "res4f_relu"

type: "ReLU"

}

layer {

bottom: "res4f"

top: "res5a_branch1"

name: "res5a_branch1"

type: "Convolution"

convolution_param {

num_output: 2048

kernel_size: 1

pad: 0

stride: 2

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res5a_branch1"

top: "res5a_branch1"

name: "bn5a_branch1"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res5a_branch1"

top: "res5a_branch1"

name: "scale5a_branch1"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res4f"

top: "res5a_branch2a"

name: "res5a_branch2a"

type: "Convolution"

convolution_param {

num_output: 512

kernel_size: 1

pad: 0

stride: 2

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res5a_branch2a"

top: "res5a_branch2a"

name: "bn5a_branch2a"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res5a_branch2a"

top: "res5a_branch2a"

name: "scale5a_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res5a_branch2a"

top: "res5a_branch2a"

name: "res5a_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res5a_branch2a"

top: "res5a_branch2b"

name: "res5a_branch2b"

type: "Convolution"

convolution_param {

num_output: 512

kernel_size: 3

pad: 1

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res5a_branch2b"

top: "res5a_branch2b"

name: "bn5a_branch2b"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res5a_branch2b"

top: "res5a_branch2b"

name: "scale5a_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res5a_branch2b"

top: "res5a_branch2b"

name: "res5a_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res5a_branch2b"

top: "res5a_branch2c"

name: "res5a_branch2c"

type: "Convolution"

convolution_param {

num_output: 2048

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res5a_branch2c"

top: "res5a_branch2c"

name: "bn5a_branch2c"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res5a_branch2c"

top: "res5a_branch2c"

name: "scale5a_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res5a_branch1"

bottom: "res5a_branch2c"

top: "res5a"

name: "res5a"

type: "Eltwise"

}

layer {

bottom: "res5a"

top: "res5a"

name: "res5a_relu"

type: "ReLU"

}

layer {

bottom: "res5a"

top: "res5b_branch2a"

name: "res5b_branch2a"

type: "Convolution"

convolution_param {

num_output: 512

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res5b_branch2a"

top: "res5b_branch2a"

name: "bn5b_branch2a"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res5b_branch2a"

top: "res5b_branch2a"

name: "scale5b_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res5b_branch2a"

top: "res5b_branch2a"

name: "res5b_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res5b_branch2a"

top: "res5b_branch2b"

name: "res5b_branch2b"

type: "Convolution"

convolution_param {

num_output: 512

kernel_size: 3

pad: 1

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res5b_branch2b"

top: "res5b_branch2b"

name: "bn5b_branch2b"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res5b_branch2b"

top: "res5b_branch2b"

name: "scale5b_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res5b_branch2b"

top: "res5b_branch2b"

name: "res5b_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res5b_branch2b"

top: "res5b_branch2c"

name: "res5b_branch2c"

type: "Convolution"

convolution_param {

num_output: 2048

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res5b_branch2c"

top: "res5b_branch2c"

name: "bn5b_branch2c"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res5b_branch2c"

top: "res5b_branch2c"

name: "scale5b_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res5a"

bottom: "res5b_branch2c"

top: "res5b"

name: "res5b"

type: "Eltwise"

}

layer {

bottom: "res5b"

top: "res5b"

name: "res5b_relu"

type: "ReLU"

}

layer {

bottom: "res5b"

top: "res5c_branch2a"

name: "res5c_branch2a"

type: "Convolution"

convolution_param {

num_output: 512

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res5c_branch2a"

top: "res5c_branch2a"

name: "bn5c_branch2a"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res5c_branch2a"

top: "res5c_branch2a"

name: "scale5c_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res5c_branch2a"

top: "res5c_branch2a"

name: "res5c_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res5c_branch2a"

top: "res5c_branch2b"

name: "res5c_branch2b"

type: "Convolution"

convolution_param {

num_output: 512

kernel_size: 3

pad: 1

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res5c_branch2b"

top: "res5c_branch2b"

name: "bn5c_branch2b"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res5c_branch2b"

top: "res5c_branch2b"

name: "scale5c_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res5c_branch2b"

top: "res5c_branch2b"

name: "res5c_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res5c_branch2b"

top: "res5c_branch2c"

name: "res5c_branch2c"

type: "Convolution"

convolution_param {

num_output: 2048

kernel_size: 1

pad: 0

stride: 1

bias_term: false

weight_filler {

type: "msra"

}

}

}

layer {

bottom: "res5c_branch2c"

top: "res5c_branch2c"

name: "bn5c_branch2c"

type: "BatchNorm"

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

param {

lr_mult: 0

decay_mult: 0

}

}

layer {

bottom: "res5c_branch2c"

top: "res5c_branch2c"

name: "scale5c_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res5b"

bottom: "res5c_branch2c"

top: "res5c"

name: "res5c"

type: "Eltwise"

}

layer {

bottom: "res5c"

top: "res5c"

name: "res5c_relu"

type: "ReLU"

}

layer {

bottom: "res5c"

top: "pool5"

name: "pool5"

type: "Pooling"

pooling_param {

kernel_size: 7

stride: 1

pool: AVE

}

}
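pool5 averages each 7x7 channel of res5c into a single value, so fc1000 receives a 2048-dimensional vector. The kernel_size of 7 is tied to the 224x224 input. Caffe's Pooling layer rounds its output size up, ceil((in + 2*pad - kernel) / stride) + 1. A larger input that makes res5c 8x8 would therefore give a 2x2 pool5 output, and the vector fed into fc1000 would no longer match the trained weights. Caffe's pooling_param also has a `global_pooling: true` option that pools over the whole map whatever its size. Here is a plain-Python sketch for pad 0, as used in this file; the helper names are illustrative.

```python
import math

def pool_out(size, kernel, stride=1, pad=0):
    # Caffe Pooling output size rounds up: ceil((in + 2*pad - kernel) / stride) + 1
    # (Caffe applies an extra correction when pad > 0, not modeled here)
    return int(math.ceil((size + 2 * pad - kernel) / stride)) + 1

def average_pool_channel(channel):
    """Average one HxW channel, which is what pool5 does when H == W == kernel_size."""
    values = [v for row in channel for v in row]
    return sum(values) / len(values)

print(pool_out(7, kernel=7))              # 224x224 input: res5c 7x7 -> pool5 1x1
print(pool_out(8, kernel=7))              # larger input: res5c 8x8 -> pool5 2x2
print(pool_out(112, kernel=3, stride=2))  # pool1 in this net: 112 -> 56
```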

layer {

bottom: "pool5"

top: "fc1000"

name: "fc1000"

type: "InnerProduct"

inner_product_param {

num_output: 5 ### set this to your own number of classes

weight_filler {

type: "msra"

}

bias_filler {

type: "constant"

value: 0

}

}

}
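All convolutions and fc1000 use `weight_filler { type: "msra" }`. With the default variance_norm (FAN_IN), Caffe's MSRA filler draws Gaussian weights with mean 0 and standard deviation sqrt(2 / n). Here n is the fan-in, i.e. the weight count divided by the number of outputs. fc1000's weight blob is num_output x 2048, so n = 2048. For a 3x3 convolution with 256 input channels, n = 256 * 9. The sketch below assumes that default; the function names are illustrative.

```python
import math
import random

def msra_std(weight_shape):
    """Std of the MSRA filler with variance_norm FAN_IN (the default)."""
    fan_in = math.prod(weight_shape) // weight_shape[0]  # weights per output unit
    return math.sqrt(2.0 / fan_in)

def msra_fill(weight_shape, seed=0):
    """Flat list of Gaussian(0, msra_std) samples, one per weight."""
    rng = random.Random(seed)
    std = msra_std(weight_shape)
    return [rng.gauss(0.0, std) for _ in range(math.prod(weight_shape))]

print(msra_std((5, 2048)))         # fc1000: num_output x 2048 inputs
print(msra_std((256, 256, 3, 3)))  # a 3x3 conv in stage 4
```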

layer {

bottom: "fc1000"

bottom: "label"

top: "prob"

name: "prob"

type: "SoftmaxWithLoss"

include {

phase: TRAIN

}

}
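SoftmaxWithLoss combines a softmax over fc1000's outputs with the multinomial logistic loss: the mean over the batch of -log p(label). No ignore_label is set, so Caffe's default normalization divides the summed loss by the batch size. Below is a plain-Python version for checking numbers by hand. It clamps the probability to FLT_MIN before the log, as Caffe does. A useful sanity check: an untrained 5-class net should start near log(5) ≈ 1.609.

```python
import math

FLT_MIN = 1.17549435e-38  # smallest normal float32, used as the clamp value

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_with_loss(batch_logits, labels):
    """Mean over the batch of -log p(label), without ignore_label."""
    loss = 0.0
    for logits, label in zip(batch_logits, labels):
        p = softmax(logits)[label]
        loss -= math.log(max(p, FLT_MIN))
    return loss / len(labels)

# equal scores for all 5 classes give a loss of log(5), about 1.609
print(softmax_with_loss([[0.0] * 5, [0.0] * 5], [0, 3]))
```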

layer {

bottom: "fc1000"

bottom: "label"

top: "accuracy@1"

name: "accuracy/top1"

type: "Accuracy"

accuracy_param {

top_k: 1

}

}

layer {

bottom: "fc1000"

bottom: "label"

top: "accuracy@5"

name: "accuracy/top5"

type: "Accuracy"

accuracy_param {

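top_k: 5 ### with only 5 classes (num_output: 5) top-5 accuracy is always 1.0; use a smaller top_k or remove this layer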

}

}
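The Accuracy layers report the fraction of images whose label is among the k highest-scoring classes. fc1000 has `num_output: 5`, so top_k: 5 covers every class and `accuracy@5` is always 1.0. Only `accuracy@1` is informative. The sketch below counts a hit when fewer than k classes score strictly higher than the true class. Caffe's exact tie handling may differ, and the function name is illustrative.

```python
def topk_accuracy(batch_scores, labels, k):
    """Fraction of samples whose label is among the k highest scores."""
    hits = 0
    for scores, label in zip(batch_scores, labels):
        higher = sum(1 for s in scores if s > scores[label])
        if higher < k:
            hits += 1
    return hits / len(labels)

scores = [[0.1, 0.2, 0.3, 0.15, 0.25],   # true class 0 ranks last
          [0.0, 0.9, 0.05, 0.03, 0.02]]  # true class 1 ranks first
labels = [0, 1]
print(topk_accuracy(scores, labels, 1))  # 0.5
print(topk_accuracy(scores, labels, 5))  # 1.0: with 5 classes top-5 always hits
```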

ResNet-50-deploy.prototxt

name: "ResNet-50"

input: "data"

input_dim: 1

input_dim: 3

input_dim: 224

input_dim: 224
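The deploy net takes one 3x224x224 image in N x C x H x W order. For predictions to match training, prepare the input the way the ImageData layer did:

- resize the image to 224x224 (new_height/new_width);
- keep BGR channel order, as OpenCV's `cv2.imread` returns it;
- keep raw 0 to 255 values;
- skip mean subtraction, since the mean_value lines are commented out.

If your image library returns RGB or values scaled to [0, 1], convert them first. The sketch below only does the layout conversion on a nested list and assumes resizing has already happened. The function names are illustrative.

```python
def rgb_to_bgr(image_hwc):
    """Reverse the channel order of every pixel (only needed for RGB loaders)."""
    return [[list(reversed(px)) for px in row] for row in image_hwc]

def hwc_to_nchw(image_hwc):
    """image_hwc[y][x] = [b, g, r] with 0-255 values, already resized to 224x224.

    Returns a 1 x 3 x H x W nested list of floats with no mean subtraction,
    matching the commented-out mean_value lines in resnet_50.prototxt.
    """
    h, w = len(image_hwc), len(image_hwc[0])
    chw = [[[float(image_hwc[y][x][c]) for x in range(w)] for y in range(h)]
           for c in range(3)]
    return [chw]  # batch dimension of 1, as in input_dim: 1

tiny = [[[10, 20, 30], [40, 50, 60]]]  # a 1x2 image of BGR pixels
blob = hwc_to_nchw(tiny)
print(len(blob), len(blob[0]), len(blob[0][0]), len(blob[0][0][0]))  # 1 3 1 2
```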

layer {

bottom: "data"

top: "conv1"

name: "conv1"

type: "Convolution"

convolution_param {

num_output: 64

kernel_size: 7

pad: 3

stride: 2

}

}

layer {

bottom: "conv1"

top: "conv1"

name: "bn_conv1"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "conv1"

top: "conv1"

name: "scale_conv1"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "conv1"

top: "conv1"

name: "conv1_relu"

type: "ReLU"

}

layer {

bottom: "conv1"

top: "pool1"

name: "pool1"

type: "Pooling"

pooling_param {

kernel_size: 3

stride: 2

pool: MAX

}

}

layer {

bottom: "pool1"

top: "res2a_branch1"

name: "res2a_branch1"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 1

pad: 0

stride: 1

bias_term: false

}

}

layer {

bottom: "res2a_branch1"

top: "res2a_branch1"

name: "bn2a_branch1"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "res2a_branch1"

top: "res2a_branch1"

name: "scale2a_branch1"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "pool1"

top: "res2a_branch2a"

name: "res2a_branch2a"

type: "Convolution"

convolution_param {

num_output: 64

kernel_size: 1

pad: 0

stride: 1

bias_term: false

}

}

layer {

bottom: "res2a_branch2a"

top: "res2a_branch2a"

name: "bn2a_branch2a"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "res2a_branch2a"

top: "res2a_branch2a"

name: "scale2a_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res2a_branch2a"

top: "res2a_branch2a"

name: "res2a_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res2a_branch2a"

top: "res2a_branch2b"

name: "res2a_branch2b"

type: "Convolution"

convolution_param {

num_output: 64

kernel_size: 3

pad: 1

stride: 1

bias_term: false

}

}

layer {

bottom: "res2a_branch2b"

top: "res2a_branch2b"

name: "bn2a_branch2b"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "res2a_branch2b"

top: "res2a_branch2b"

name: "scale2a_branch2b"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res2a_branch2b"

top: "res2a_branch2b"

name: "res2a_branch2b_relu"

type: "ReLU"

}

layer {

bottom: "res2a_branch2b"

top: "res2a_branch2c"

name: "res2a_branch2c"

type: "Convolution"

convolution_param {

num_output: 256

kernel_size: 1

pad: 0

stride: 1

bias_term: false

}

}

layer {

bottom: "res2a_branch2c"

top: "res2a_branch2c"

name: "bn2a_branch2c"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "res2a_branch2c"

top: "res2a_branch2c"

name: "scale2a_branch2c"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res2a_branch1"

bottom: "res2a_branch2c"

top: "res2a"

name: "res2a"

type: "Eltwise"

}

layer {

bottom: "res2a"

top: "res2a"

name: "res2a_relu"

type: "ReLU"

}
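When the trained .caffemodel is loaded into the deploy net, Caffe copies weights by matching layer `name`. A deploy layer whose name has no match is silently left with its initial values instead of the trained weights. The hypothetical helper below parses both prototxt files and lists the weighted layers (Convolution, InnerProduct, BatchNorm, Scale) in resnet_50.prototxt that ResNet-50-deploy.prototxt does not define. It assumes one field per line, as in these files, and no braces or '#' inside quoted strings.

```python
import re

WEIGHTED_TYPES = {"Convolution", "InnerProduct", "BatchNorm", "Scale"}

def layer_types(prototxt_text):
    """Map name -> type for each top-level `layer { ... }` block."""
    result, depth, name, ltype = {}, 0, None, None
    for raw in prototxt_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if depth == 1:  # direct fields of a layer, not nested *_param blocks
            m = re.match(r'(name|type)\s*:\s*"([^"]*)"', line)
            if m and m.group(1) == "name":
                name = m.group(2)
            elif m:
                ltype = m.group(2)
        depth += line.count("{") - line.count("}")
        if depth == 0 and name is not None:  # a layer block just closed
            result[name] = ltype
            name, ltype = None, None
    return result

def missing_in_deploy(train_text, deploy_text):
    """Weighted training layers that the deploy net does not define by name."""
    deploy = layer_types(deploy_text)
    return sorted(n for n, t in layer_types(train_text).items()
                  if t in WEIGHTED_TYPES and n not in deploy)

def check_files(train_path, deploy_path):
    with open(train_path) as f_train, open(deploy_path) as f_deploy:
        return missing_in_deploy(f_train.read(), f_deploy.read())
```

An empty list from `check_files(...)` on the two files means every trained layer has a same-named counterpart in the deploy net. Layers such as SoftmaxWithLoss and Accuracy carry no weights and are ignored.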

layer {

bottom: "res2a"

top: "res2b_branch2a"

name: "res2b_branch2a"

type: "Convolution"

convolution_param {

num_output: 64

kernel_size: 1

pad: 0

stride: 1

bias_term: false

}

}

layer {

bottom: "res2b_branch2a"

top: "res2b_branch2a"

name: "bn2b_branch2a"

type: "BatchNorm"

batch_norm_param {

use_global_stats: true

}

}

layer {

bottom: "res2b_branch2a"

top: "res2b_branch2a"

name: "scale2b_branch2a"

type: "Scale"

scale_param {

bias_term: true

}

}

layer {

bottom: "res2b_branch2a"

top: "res2b_branch2a"

name: "res2b_branch2a_relu"

type: "ReLU"

}

layer {

bottom: "res2b_branch2a"

top: "res2b_branch2b"

name: "res2b_branch2b"