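I've recently been implementing RefineDet with the TensorRT API, and I'm writing down what happened along the way. The main reference is this repository:

https://github.com/wang-xinyu/tensorrtx

TensorRT API documentation:

https://docs.nvidia.com/deeplearning/tensorrt/api/c_api/index.html

The VGG backbone was done and its output matched PyTorch. Then I hit a custom L2Norm layer and was stumped: I searched the whole repository and found nothing like it, and I'm not familiar with TensorRT. Tough.

The PyTorch code for the L2Norm layer: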

import torch
import torch.nn as nn
from torch.autograd import Function
#from torch.autograd import Variable
import torch.nn.init as init

class L2Norm(nn.Module):
    def __init__(self, n_channels, scale):
        super(L2Norm, self).__init__()
        self.n_channels = n_channels
        self.gamma = scale or None
        self.eps = 1e-10
        # one learnable scale per channel
        self.weight = nn.Parameter(torch.Tensor(self.n_channels))
        self.reset_parameters()

    def reset_parameters(self):
        init.constant_(self.weight, self.gamma)

    def forward(self, x):
        # aa, bb, bb1 and ccc are unused; they only expose the intermediate shapes
        aa = x.pow(2)  # [1,512,40,40]
        bb = x.pow(2).sum(dim=1, keepdim=True)  # [1,1,40,40]
        bb1 = x.pow(2).sum(dim=1)  # [1,40,40]
        norm = x.pow(2).sum(dim=1, keepdim=True).sqrt() + self.eps  # [1,1,40,40]
        #x /= norm
        x = torch.div(x, norm)  # [1,512,40,40]
        ccc = self.weight.unsqueeze(0).unsqueeze(2).unsqueeze(3).expand_as(x)  # [1,512,40,40]
        out = self.weight.unsqueeze(0).unsqueeze(2).unsqueeze(3).expand_as(x) * x
        return out

How it is used:

self.conv4_3_L2Norm = L2Norm(512, 10)

self.conv5_3_L2Norm = L2Norm(512, 8)

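Looking closely at this code, this line:

self.weight = nn.Parameter(torch.Tensor(self.n_channels))

shows that weight is a learnable parameter. I didn't realize this at first, because it is initialized to the constants 10 and 8. When I loaded the trained weights, the values had changed to a bit over 9 and a bit over 7, and that's when I realized these parameters are learned.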

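But how do you do this in TensorRT? I asked in a chat group, and someone with the nickname "昝" said that add, subtract, multiply and divide can be built from addScale, addElementWise and the like, and then told me that there is also an official API for it: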

https://docs.nvidia.com/deeplearning/tensorrt/api/c_api/_nv_infer_plugin_8h.html#a23fc3c32fb290af2b0f6f3922b1e7527

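I went and looked, but had no idea how to use it. There isn't a single example and I was completely lost. When I asked a bit more, the answer from the group was to read the docs myself. Fair enough, in the end you have to rely on yourself!

Then I saw that the project implements unsupported layers as custom plugins, so I went to look at plugins. The official TensorRT GitHub repository has a plugin that implements normalization: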

https://github.com/NVIDIA/TensorRT/tree/master/plugin/normalizePlugin

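The plugin folder there contains many plugins, including nmsPlugin, priorBoxPlugin and batchedNMSPlugin, which all look like object-detection post-processing. I stared at them for ages and still didn't know how to use them!

It seems this requires building the TensorRT library from source with CMake. I tried, and the build failed with errors.

This is so hard.

Then I looked at how to implement it with CUDA programming. Is everything really done on flat 1-D arrays? Just summing along one dimension takes a lot of code... So I spent another day reading about CUDA programming and got through chapter 5, up to the part on shared memory.

On the third day, first thing in the morning, I stared at the L2Norm implementation: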

aa = x.pow(2) ## [1,512,40,40]

bb = x.pow(2).sum(dim=1, keepdim=True) ## [1,1,40,40]

# bb1 = x.pow(2).sum(dim=1)#[1,40,40]

norm = x.pow(2).sum(dim=1, keepdim=True).sqrt()+self.eps # [1,1,40,40]

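To make the process easier to follow I had already broken the task into steps, so the plan was to implement the squaring first. I already knew that

virtual IScaleLayer* addScale(ITensor& input, ScaleMode mode, Weights shift, Weights scale, Weights power) TRTNOEXCEPT = 0;

computes: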

f(x)= (shift + scale * x) ^ power
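To make the formula concrete, here is a minimal pure-Python sketch of what a uniform scale layer does to each element. `scale_layer` is a hypothetical helper written only for illustration; it is not a TensorRT call.

```python
def scale_layer(values, shift, scale, power):
    """Emulate f(x) = (shift + scale * x) ** power element-wise (uniform mode)."""
    return [(shift + scale * v) ** power for v in values]

# Squaring: shift=0, scale=1, power=2
squared = scale_layer([1.0, -2.0, 3.0], shift=0.0, scale=1.0, power=2.0)

# Square root: shift=0, scale=1, power=0.5
roots = scale_layer([4.0, 9.0], shift=0.0, scale=1.0, power=0.5)
```

The same three numbers (shift, scale, power) are what get packed into the `Weights` arguments of addScale.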

So squaring just needs power=2, scale=1, shift=0. I tried it and it works: the result matches the PyTorch code. On to the next step:

x.pow(2).sum(dim=1, keepdim=True)

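I had no idea how to do this one. I asked in the group how to sum along a given dimension, and someone with the nickname sky said addReduce. Many thanks to him, that was exactly it.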

virtual IReduceLayer* addReduce(ITensor& input, ReduceOperation operation, uint32_t reduceAxes, bool keepDimensions) TRTNOEXCEPT = 0;

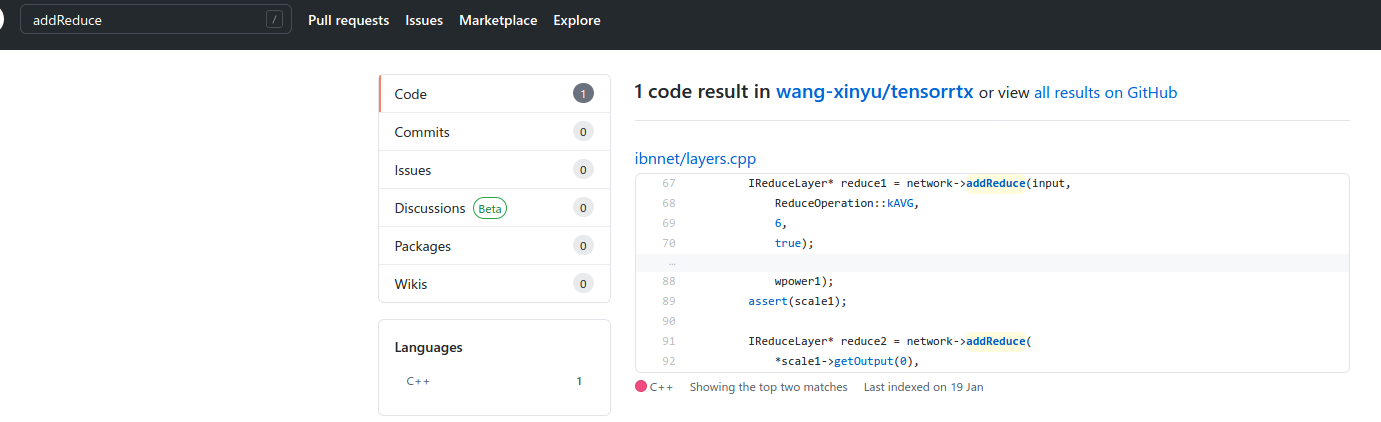
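One detail that is easy to miss: `reduceAxes` is a bitmask, not an axis index. Bit i selects dimension i of the input. The sketch below is plain Python with two hypothetical helpers (`axes_to_mask`, `mask_to_axes`), and it assumes the network uses an implicit batch dimension, so the tensor TensorRT sees is CHW.

```python
def axes_to_mask(axes):
    """Build a reduceAxes-style bitmask: bit i is set when dimension i is reduced."""
    mask = 0
    for axis in axes:
        mask |= 1 << axis
    return mask

def mask_to_axes(mask):
    """Decode a bitmask back into the sorted list of dimension indices."""
    return [i for i in range(mask.bit_length()) if mask & (1 << i)]

# Assumption: implicit batch, so the tensor is CHW with C=0, H=1, W=2.
channel_only = axes_to_mask([0])   # reduce over C
spatial = axes_to_mask([1, 2])     # reduce over H and W
```

Under that assumption, a mask of 1 reduces over channels (the counterpart of `sum(dim=1)` on an NCHW tensor in PyTorch) and a mask of 6 reduces over H and W. With an explicit batch dimension the indices would shift by one.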

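Then I searched the project for addReduce, and one of the sub-projects does use this API.

Looking closely, that code does something very similar to what I need: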

IScaleLayer* addInstanceNorm2d(INetworkDefinition *network, std::map<std::string, Weights>& weightMap, ITensor& input, const std::string lname, const float eps) {
    int len = weightMap[lname + ".weight"].count;

    // mean over H and W: reduceAxes is a bitmask, 6 = 0b110 selects dimensions 1 and 2
    IReduceLayer* reduce1 = network->addReduce(input, ReduceOperation::kAVG, 6, true);
    assert(reduce1);

    // x - mean
    IElementWiseLayer* ew1 = network->addElementWise(input, *reduce1->getOutput(0), ElementWiseOperation::kSUB);
    assert(ew1);

    // (x - mean)^2: shift=0, scale=1, power=2
    const static float pval1[3]{0.0, 1.0, 2.0};
    Weights wshift1{DataType::kFLOAT, pval1, 1};
    Weights wscale1{DataType::kFLOAT, pval1+1, 1};
    Weights wpower1{DataType::kFLOAT, pval1+2, 1};
    IScaleLayer* scale1 = network->addScale(*ew1->getOutput(0), ScaleMode::kUNIFORM, wshift1, wscale1, wpower1);
    assert(scale1);

    // variance: mean of the squared differences over H and W
    IReduceLayer* reduce2 = network->addReduce(*scale1->getOutput(0), ReduceOperation::kAVG, 6, true);
    assert(reduce2);

    // sqrt(var + eps): shift=eps, scale=1, power=0.5
    // static keeps the buffer alive after return; note eps is only read on the first call
    const static float pval2[3]{eps, 1.0, 0.5};
    Weights wshift2{DataType::kFLOAT, pval2, 1};
    Weights wscale2{DataType::kFLOAT, pval2+1, 1};
    Weights wpower2{DataType::kFLOAT, pval2+2, 1};
    IScaleLayer* scale2 = network->addScale(*reduce2->getOutput(0), ScaleMode::kUNIFORM, wshift2, wscale2, wpower2);
    assert(scale2);

    // (x - mean) / sqrt(var + eps)
    IElementWiseLayer* ew2 = network->addElementWise(*ew1->getOutput(0), *scale2->getOutput(0), ElementWiseOperation::kDIV);
    assert(ew2);

    // per-channel affine: weight * x + bias, with power fixed to 1
    float* pval3 = reinterpret_cast<float*>(malloc(sizeof(float) * len));
    std::fill_n(pval3, len, 1.0);
    Weights wpower3{DataType::kFLOAT, pval3, len};
    weightMap[lname + ".power3"] = wpower3;  // stored so the buffer outlives this function
    IScaleLayer* scale3 = network->addScale(*ew2->getOutput(0), ScaleMode::kCHANNEL, weightMap[lname + ".bias"], weightMap[lname + ".weight"], wpower3);
    assert(scale3);
    return scale3;
}

Good! Copying from an example is what I do best. Before long I had L2Norm implemented too, and its output matches PyTorch.

IScaleLayer* L2norm(INetworkDefinition *network, std::map<std::string, Weights>& weightMap, ITensor& input, const std::string pre_name = "conv4_3_L2Norm.weight")
{
    // aa = x.pow(2)  ## [1,512,40,40]
    // shift=0, scale=1, power=2
    const static float pval1[3]{0.0, 1.0, 2.0};
    Weights wshift1{DataType::kFLOAT, pval1, 1};
    Weights wscale1{DataType::kFLOAT, pval1+1, 1};
    Weights wpower1{DataType::kFLOAT, pval1+2, 1};
    IScaleLayer* scale1 = network->addScale(input, ScaleMode::kUNIFORM, wshift1, wscale1, wpower1);
    assert(scale1);

    // bb = x.pow(2).sum(dim=1, keepdim=True)  ## [1,1,40,40]
    // reduceAxes is a bitmask: 1 = 0b001 selects dimension 0, here the channel axis of the CHW input
    IReduceLayer* reduce1 = network->addReduce(*scale1->getOutput(0), ReduceOperation::kSUM, 1, true);
    assert(reduce1);

    // norm = x.pow(2).sum(dim=1, keepdim=True).sqrt()+self.eps  # [1,1,40,40]
    // shift=0, scale=1, power=0.5: a plain sqrt; the eps (1e-10) from PyTorch is not added here
    const static float pval2[3]{0.0, 1.0, 0.5};
    Weights wshift2{DataType::kFLOAT, pval2, 1};
    Weights wscale2{DataType::kFLOAT, pval2+1, 1};
    Weights wpower2{DataType::kFLOAT, pval2+2, 1};
    IScaleLayer* scale2 = network->addScale(*reduce1->getOutput(0), ScaleMode::kUNIFORM, wshift2, wscale2, wpower2);
    assert(scale2);

    // x = torch.div(x, norm)
    IElementWiseLayer* ew2 = network->addElementWise(input, *scale2->getOutput(0), ElementWiseOperation::kDIV);
    assert(ew2);

    // out = self.weight.unsqueeze(0).unsqueeze(2).unsqueeze(3).expand_as(x) * x
    // per-channel scale: shift=0 (wpower4), scale=learned weight, power=1 (wpower3)
    int len = weightMap[pre_name].count;
    float* pval3 = reinterpret_cast<float*>(malloc(sizeof(float) * len));
    std::fill_n(pval3, len, 1.0);
    Weights wpower3{DataType::kFLOAT, pval3, len};
    weightMap[pre_name + ".power3"] = wpower3;  // stored so the buffer outlives this function

    float* pval4 = reinterpret_cast<float*>(malloc(sizeof(float) * len));
    std::fill_n(pval4, len, 0.0);
    Weights wpower4{DataType::kFLOAT, pval4, len};  // despite the name, this is the shift
    weightMap[pre_name + ".power4"] = wpower4;

    IScaleLayer* scale3 = network->addScale(*ew2->getOutput(0), ScaleMode::kCHANNEL, wpower4, weightMap[pre_name], wpower3);
    assert(scale3);
    return scale3;
}
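To check the TensorRT output against something independent of both frameworks, a tiny CPU reference can help. The sketch below is pure Python over a nested `[C][H][W]` list; `l2norm_chw` and its `eps` parameter are hypothetical names chosen for illustration. With `eps=0.0` it mirrors the layer chain above, whose square-root step uses a shift of 0.0; passing `eps=1e-10` follows the PyTorch forward, which adds eps after the square root.

```python
import math

def l2norm_chw(x, weight, eps=0.0):
    """x: nested list [C][H][W]; weight: list of C per-channel scales."""
    channels, height, width = len(x), len(x[0]), len(x[0][0])
    # Steps 1-2: square every element, then sum over the channel axis -> [H][W]
    sq_sum = [[sum(x[c][h][w] ** 2 for c in range(channels))
               for w in range(width)] for h in range(height)]
    # Step 3: square root, with eps added after the sqrt as in the PyTorch forward
    norm = [[math.sqrt(s) + eps for s in row] for row in sq_sum]
    # Steps 4-5: divide by the norm (broadcast over C), then scale each channel
    return [[[weight[c] * x[c][h][w] / norm[h][w]
              for w in range(width)] for h in range(height)]
            for c in range(channels)]

x = [[[3.0]], [[4.0]]]   # C=2, H=1, W=1, so the channel norm is 5
out = l2norm_chw(x, weight=[10.0, 8.0])
```

Because eps is only 1e-10, the two variants agree to many digits on ordinary activations; they differ only where every channel at a pixel is exactly zero, in which case the eps-free version divides by zero.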

Then I carried on building the rest of the network.

P.S. My funds have tanked.