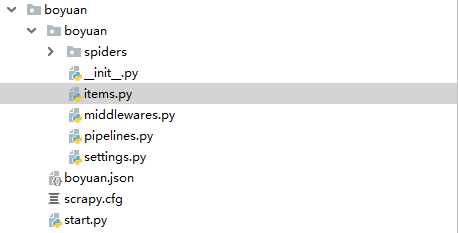

I. Create the project

Step 1: scrapy startproject boyuan

Step 2: cd boyuan

scrapy genspider product -t crawl boyuan.com

As shown in the figure:

II. Write the code

1. items.py

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/items.html

    import scrapy


    class BoyuanItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        name = scrapy.Field()
        address = scrapy.Field()
        company = scrapy.Field()
        img = scrapy.Field()
        time = scrapy.Field()

2. product.py (spider file)

    # -*- coding: utf-8 -*-
    import scrapy
    from scrapy.spiders import Rule, CrawlSpider
    from scrapy.linkextractors import LinkExtractor

    from ..items import BoyuanItem


    class ProductSpider(CrawlSpider):
        name = 'product'
        allowed_domains = ['boyuan.com']
        offset = 1
        url = "http://www.boyuan.com/sell/?page={0}"
        start_urls = [url.format(str(offset))]

        # allow takes regular expressions, so "?" is escaped and digits are \d
        page_link = LinkExtractor(allow=(r"\?page=\d+",))
        rules = [
            Rule(page_link, callback="parse_content", follow=True)
        ]

        def parse_content(self, response):
            # Each table row in the listing holds one product
            for each in response.xpath("//div[@class='list']//tr"):
                item = BoyuanItem()
                item['name'] = each.xpath("./td[4]//strong/text()").extract()[0]
                item['company'] = each.xpath("./td[4]//li[4]/a/text()").extract()[0]
                address = each.xpath("./td[4]//li[3]/text()").extract()[0]
                # Strip the surrounding square brackets from the address text
                item['address'] = str(address).strip("[").strip("]")
                time = each.xpath("./td[4]//li[3]/span/text()").extract()[0]
                item['time'] = str(time).strip()
                # The image URL is read from the "original" attribute of <img>
                item['img'] = each.xpath("./td[2]//img/@original").extract()[0]
                yield item
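LinkExtractor compiles each `allow` entry as a regular expression and searches for it in every candidate URL, so a pagination pattern needs the `?` escaped and the digit class written as `\d`; an unescaped `?page=d+` is not a valid pattern. The following is a minimal check using only the standard `re` module, not Scrapy itself. The third URL (`about.html`) is a made-up example added purely for contrast.

```python
import re

broken = "?page=d+"      # leading "?" has nothing to repeat, and "d+" matches the letter d
fixed = r"\?page=\d+"    # literal "?", then "page=", then one or more digits

# The unescaped pattern cannot even be compiled.
try:
    re.compile(broken)
    broken_compiles = True
except re.error:
    broken_compiles = False

pattern = re.compile(fixed)
urls = [
    "http://www.boyuan.com/sell/?page=2",
    "http://www.boyuan.com/sell/?page=15",
    "http://www.boyuan.com/sell/about.html",  # hypothetical non-pagination URL
]

# Keep only the URLs the pagination pattern would follow.
matching = [u for u in urls if pattern.search(u)]
print(broken_compiles, matching)
```

Only the two `?page=` URLs survive the filter, which is the behavior the crawl rule relies on to walk the listing pages.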

3. pipelines.py (pipeline file)

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html
    import pymongo


    class BoyuanPipeline(object):

        def __init__(self, settings):
            host = settings.get("MONGO_HOST")
            port = settings.get("MONGO_PORT")
            db_name = settings.get("MONGO_DB")
            collection = settings.get("MONGO_COLLECTION")
            self.client = pymongo.MongoClient(host=host, port=int(port))
            db = self.client.get_database(db_name)
            # Create the collection on first run if it does not exist yet
            if collection not in db.list_collection_names():
                db.create_collection(collection)
            self.col = db[collection]

        @classmethod
        def from_crawler(cls, crawler):
            # scrapy.conf is not available in recent Scrapy releases,
            # so the settings are taken from the crawler instead
            return cls(crawler.settings)

        def process_item(self, item, spider):
            # Save the item to MongoDB (insert() was removed in PyMongo 4)
            self.col.insert_one(dict(item))
            return item

        def close_spider(self, spider):
            self.client.close()
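To try out the `process_item` step without a running MongoDB server, the storage call can be exercised against an in-memory stand-in. This is only a sketch: `FakeCollection` and `SketchPipeline` are hypothetical names introduced here, the sample item is invented, and a plain dict stands in for a `BoyuanItem`.

```python
class FakeCollection:
    """Hypothetical in-memory stand-in for a pymongo collection."""

    def __init__(self):
        self.docs = []

    def insert_one(self, doc):
        # Record the document instead of sending it to a database.
        self.docs.append(doc)


class SketchPipeline:
    """Mirrors the pipeline's process_item step with an injected collection."""

    def __init__(self, collection):
        self.col = collection

    def process_item(self, item, spider):
        # Convert the item to a plain dict before storing it,
        # then hand the item on to any later pipeline.
        self.col.insert_one(dict(item))
        return item


col = FakeCollection()
pipeline = SketchPipeline(col)

# Invented sample data; a real run would receive BoyuanItem objects.
item = {"name": "sample product", "company": "sample company"}
returned = pipeline.process_item(item, spider=None)
print(col.docs)
```

Returning the item from `process_item` matters: Scrapy passes that return value to the next enabled pipeline, so a pipeline that returns nothing would break any pipeline that runs after it.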

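4. settings.py (configuration file)

    # Enable the pipeline so that scraped items are passed to it
    ITEM_PIPELINES = {
        'boyuan.pipelines.BoyuanPipeline': 300,
    }

    # MongoDB connection parameters
    MONGO_HOST = "localhost"
    MONGO_PORT = "27017"
    MONGO_DB = "boyuan"
    MONGO_COLLECTION = "product"

5. start.py (launcher script)

    from scrapy import cmdline

    if __name__ == '__main__':
        # Equivalent to running "scrapy crawl product" from the project directory
        cmdline.execute("scrapy crawl product".split())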

The scraped results are shown in the figure: