Environment: Python 3.5, JDK 1.7, Eclipse 4.5.2 (a little old; it should be Neon 4.6 to match, otherwise a prompt dialog keeps popping up).

Should you learn the latest Python 3 or the older Python 2.7?

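The official connector on the MySQL site supports Python only up to 3.4, so on Python 3.5 this post uses the third-party library PyMySQL to connect to a MySQL database.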

http://dev.mysql.com/downloads/connector/python/2.0.html

PyMySQL download page:

https://pypi.python.org/pypi/PyMySQL#downloads

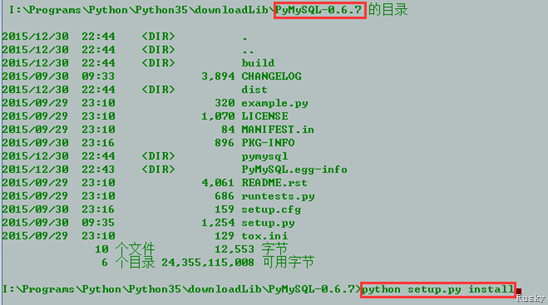

Installing on Windows:

After downloading and extracting the archive, change into the PyMySQL-0.6.7 directory and run `python setup.py install`.
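To confirm that the install worked, a quick check along these lines can help. It is a minimal sketch: `is_installed` is a hypothetical helper name, not part of PyMySQL, and it relies only on the standard library's `importlib.util.find_spec`, so it runs even when the package is missing.

```python
import importlib.util


def is_installed(module_name):
    """Return True if `module_name` can be imported by this interpreter."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # PyMySQL is imported under the lowercase name "pymysql".
    if is_installed("pymysql"):
        print("pymysql is available")
    else:
        print("pymysql not found; re-run python setup.py install")
```

If the check fails, make sure the `python` used for `setup.py install` is the same interpreter that runs the scripts.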

test1.py

    import urllib.request as request


    def baidu_tieba(url, begin_page, end_page):
        """Download pages begin_page..end_page and save each one as an HTML file."""
        for i in range(begin_page, end_page + 1):
            sName = 'D:/360Downloads/test/' + str(i).zfill(5) + '.html'
            print('Downloading page ' + str(i) + ' and saving it as ' + sName)
            m = request.urlopen(url + str(i)).read()
            # The with block closes the file, so no explicit close() is needed.
            with open(sName, 'wb') as file:
                file.write(m)


    if __name__ == "__main__":
        url = "http://tieba.baidu.com/p/"
        begin_page = 1
        end_page = 3
        baidu_tieba(url, begin_page, end_page)

test2.py

    import os
    import re
    import urllib.error as error
    import urllib.request as request

    # Compile the patterns once instead of on every loop iteration.
    page_image = re.compile('<img src="(.+?)"')
    pattern = re.compile(r'^http://.*\.png$')  # the dot before "png" must be escaped


    def baidu_tieba(url, begin_page, end_page):
        count = 1
        for i in range(begin_page, end_page + 1):
            sName = 'D:/360Downloads/test/' + str(i).zfill(5) + '.html'
            print('Downloading page ' + str(i) + ' and saving it as ' + sName)
            m = request.urlopen(url + str(i)).read()
            # Create one directory per page to hold that page's images
            dirpath = 'D:/360Downloads/test/'
            dirname = str(i)
            new_path = os.path.join(dirpath, dirname)
            if not os.path.isdir(new_path):
                os.makedirs(new_path)
            page_data = m.decode('gbk', 'ignore')
            for image in page_image.findall(page_data):
                if pattern.match(image):
                    try:
                        image_data = request.urlopen(image).read()
                        image_path = dirpath + dirname + '/' + str(count) + '.png'
                        count += 1
                        print(image_path)
                        with open(image_path, 'wb') as image_file:
                            image_file.write(image_data)
                    except error.URLError:
                        print('Download failed')
            # Save the page itself next to the image directories
            with open(sName, 'wb') as file:
                file.write(m)


    if __name__ == "__main__":
        url = "http://tieba.baidu.com/p/"
        begin_page = 1
        end_page = 3
        baidu_tieba(url, begin_page, end_page)
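The image-filtering step in test2.py can be tried offline. The sketch below is only an illustration: `extract_png_urls` and the sample HTML are made up for this example, and it keeps the script's own approach (a regular expression over `<img src="...">`) rather than a full HTML parser.

```python
import re

# Same idea as test2.py: grab every <img src="..."> value, then keep PNG links.
IMG_SRC = re.compile(r'<img src="(.+?)"')
PNG_URL = re.compile(r'^http://.*\.png$')


def extract_png_urls(html):
    """Return the http:// PNG URLs found in <img src="..."> attributes."""
    return [src for src in IMG_SRC.findall(html) if PNG_URL.match(src)]


if __name__ == "__main__":
    sample = (
        '<img src="http://example.com/a.png">'
        '<img src="http://example.com/b.jpg">'
        '<img src="http://example.com/cpng">'
    )
    print(extract_png_urls(sample))
```

With an unescaped dot (`.png` instead of `\.png`) the third URL would also match, because `.` accepts any character. Note that the pattern only handles `src` as the first attribute and only `http://` links.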

test3.py

    # Python 3.4 crawler tutorial
    # Download the images found on a web page
    # 林炳文Evankaka (blog: http://blog.csdn.net/evankaka/)
    import os
    import re
    import urllib.request

    targetDir = r"D:\PythonWorkPlace\load"  # directory where files are saved


    def destFile(path):
        """Map an image URL to a local file path inside targetDir."""
        if not os.path.isdir(targetDir):
            os.makedirs(targetDir)
        pos = path.rindex('/')
        t = os.path.join(targetDir, path[pos + 1:])
        print(t)
        return t


    if __name__ == "__main__":  # program entry point
        weburl = "http://www.douban.com/"
        webheaders = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:23.0) Gecko/20100101 Firefox/23.0'}
        req = urllib.request.Request(url=weburl, headers=webheaders)  # build the request with headers
        webpage = urllib.request.urlopen(req)  # send the request
        # Decode the bytes; str(bytes) would search the escaped b'...' repr instead
        content = webpage.read().decode('utf-8', 'ignore')
        # Find every image link with a regular expression
        for link, t in set(re.findall(r'(https:[^\s]*?(jpg|png|gif))', content)):
            print(link)
            try:
                urllib.request.urlretrieve(link, destFile(link))  # download the image
            except Exception:
                print('Failed')  # the download raised an error

test4.py

    '''
    First example: a simple web crawler.

    Fetches a single page and prints its HTML (the original note says the Douban
    home page, but the URL below is a 58.com listing).
    '''

    import urllib.request

    # Target URL
    url = "http://bj.58.com/caishui/28707491160259x.shtml?adtype=1&entinfo=28707491160259_0&adact=3&psid=156713756196890928513274724"

    # Build the request
    request = urllib.request.Request(url)

    # Fetch the response
    response = urllib.request.urlopen(request)

    data = response.read()

    # Decode the bytes as UTF-8
    data = data.decode('utf-8')

    # Print the result
    print(data)

    # Print metadata about the fetched page

    # print(type(response))
    # print(response.geturl())
    # print(response.info())
    # print(response.getcode())

test5.py

    #!/usr/bin/env python
    # -*- coding: utf-8 -*-
    import csv
    import os
    import urllib.request as request

    from bs4 import BeautifulSoup as bs


    def GetAllLink():
        num = int(input("Number of pages to crawl:> "))
        os.makedirs('./data/', exist_ok=True)

        for i in range(num):
            # The first page has no "pn" suffix; later pages are pn2/, pn3/, ...
            if i + 1 == 1:
                url = 'http://nj.58.com/piao/'
            else:
                url = 'http://nj.58.com/piao/pn%s/' % (i + 1)
            GetPage(url, i)


    def GetPage(url, num):
        user_agent = 'Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:32.0) Gecko/20100101 Firefox/32.0'
        headers = {'User-Agent': user_agent}
        req = request.Request(url, headers=headers)
        page = request.urlopen(req).read().decode('utf-8')
        soup = bs(page, "html.parser")
        table = soup.table
        tag = table.find_all('tr')
        # Re-parse only the table rows we need
        soup2 = bs(str(tag), "html.parser")
        title = soup2.find_all('a', 't')        # title and url
        price = soup2.find_all('b', 'pri')      # price
        fixedprice = soup2.find_all('del')      # original price
        date = soup2.find_all('span', 'pr25')   # show time

        atitle = [i.get_text() for i in title]
        ahref = [i.get('href') for i in title]
        aprice = [i.get_text() for i in price]
        afixedprice = [i.get_text() for i in fixedprice]
        adate = [i.get_text() for i in date]

        # Every entry has a title but not necessarily a date or a price;
        # pad the shorter lists with '---' so all columns have the same length.
        for column in (aprice, afixedprice, adate):
            column.extend(['---'] * (len(atitle) - len(column)))

        # newline='' stops the csv module from writing blank lines on Windows
        with open('./data/ticket_%s.csv' % num, 'w', newline='', encoding='utf-8') as csvfile:
            writer = csv.writer(csvfile)
            writer.writerow(['title', 'url', 'price', 'original price', 'show time'])
            # Write the fields directly; joining on '|' and splitting again
            # breaks as soon as a title contains '|'.
            for row in zip(atitle, ahref, aprice, afixedprice, adate):
                writer.writerow(row)
        print("[Result]:> Page %s saved!" % (num + 1))


    if __name__ == '__main__':
        GetAllLink()
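The padding-and-write step of test5.py can be exercised without touching the network. The following is a minimal sketch under stated assumptions: `pad` and `rows_to_csv` are hypothetical helper names, the column set is shortened, and the output goes to an in-memory `io.StringIO` buffer instead of a file.

```python
import csv
import io


def pad(values, length, filler='---'):
    """Return a copy of `values` extended with `filler` up to `length` items."""
    return list(values) + [filler] * max(0, length - len(values))


def rows_to_csv(titles, links, prices, dates):
    """Write one CSV row per title; shorter columns are padded with '---'."""
    n = len(titles)
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator='\n')
    writer.writerow(['title', 'url', 'price', 'date'])
    writer.writerows(zip(titles, pad(links, n), pad(prices, n), pad(dates, n)))
    return buffer.getvalue()


if __name__ == "__main__":
    print(rows_to_csv(['A|B', 'C'], ['u1', 'u2'], ['10'], []))
```

Padding at the end assumes the missing values belong to the last rows. When an entry in the middle of the page lacks a date, the columns shift out of alignment, which is a limitation this sketch shares with the script.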

test6.py

    #!/usr/bin/env python
    # -*- coding: utf-8 -*-
    import urllib.request as request

    from bs4 import BeautifulSoup as bs

    USER_AGENT = 'Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:32.0) Gecko/20100101 Firefox/32.0'


    def GetAllLink():
        num = int(input("Number of pages to crawl:> "))

        for i in range(num):
            if i + 1 == 1:
                url = 'http://bj.58.com/caishui/?key=%E4%BB%A3%E7%90%86%E8%AE%B0%E8%B4%A6%E5%85%AC%E5%8F%B8&cmcskey=%E4%BB%A3%E7%90%86%E8%AE%B0%E8%B4%A6%E5%85%AC%E5%8F%B8&final=1&jump=1&specialtype=gls'
            else:
                url = 'http://bj.58.com/caishui/pn%s/' % (i + 1) + '?key=%E4%BB%A3%E7%90%86%E8%AE%B0%E8%B4%A6%E5%85%AC%E5%8F%B8&cmcskey=%E4%BB%A3%E7%90%86%E8%AE%B0%E8%B4%A6%E5%85%AC%E5%8F%B8&final=1&specialtype=gls&PGTID=0d30215f-0000-1941-5161-367b7a641048&ClickID=4'
            GetPage(url, i)


    def GetPage(url, num):
        headers = {'User-Agent': USER_AGENT}
        req = request.Request(url, headers=headers)
        page = request.urlopen(req).read().decode('utf-8')
        soup = bs(page, "html.parser")
        table = soup.table
        tag = table.find_all('tr')

        # Re-parse only the table rows we need
        soup2 = bs(str(tag), "html.parser")

        title = soup2.find_all('a', 't')                   # title and url
        companyName = soup2.find_all('a', 'sellername')    # company name

        atitle = [i.get_text() for i in title]
        ahref = [i.get('href') for i in title]
        acompanyName = [i.get_text() for i in companyName]

        # Visit the detail page of every listing
        for href in ahref:
            getSonPage(str(href))


    def getSonPage(url):
        headers = {'User-Agent': USER_AGENT}
        req = request.Request(url, headers=headers)
        page = request.urlopen(req).read().decode('utf-8')
        soup = bs(page, "html.parser")
        print("=========================")
        # Category
        print(soup.find('div', 'su_con').get_text())
        # Service area
        print(soup.find('div', 'su_con quyuline').get_text())
        # Contact person
        print(soup.find_all('ul', 'suUl')[0].find_all('li')[2].find_all('a')[0].get_text())
        # Business address, with all whitespace removed
        address = soup.find_all('ul', 'suUl')[0].find_all('li')[3].find('div', 'su_con').get_text()
        print(''.join(address.split()))
        # Service description; drop separator lines and the site's caption
        # announcing the map of the company's Beijing locations
        description = soup.find('article', 'description_con').get_text()
        print(description.replace("_____________________________________", " ")
              .replace("___________________________________", " ")
              .replace("(以下为公司北京区域分布图)", ""))
        print("=========================")


    if __name__ == '__main__':
        GetAllLink()
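The long percent-encoded strings in test6.py are the UTF-8 encoding of the search keyword 代理记账公司. A sketch of building the same kind of URL with `urllib.parse.urlencode` follows. `page_url` is a hypothetical helper, and it leaves out the `jump`, `PGTID` and `ClickID` parameters; whether the site returns the same results without them is an assumption.

```python
from urllib.parse import urlencode

BASE = 'http://bj.58.com/caishui/'


def page_url(page, keyword):
    """Build the listing URL for a 1-based page number and a search keyword."""
    # Page 1 lives at the base path; later pages add pn2/, pn3/, ...
    path = BASE if page == 1 else '%spn%d/' % (BASE, page)
    # urlencode percent-encodes the keyword as UTF-8.
    query = urlencode({
        'key': keyword,
        'cmcskey': keyword,
        'final': 1,
        'specialtype': 'gls',
    })
    return path + '?' + query


if __name__ == "__main__":
    print(page_url(1, '代理记账公司'))
    print(page_url(2, '代理记账公司'))
```

Keeping the keyword readable in the source makes it easier to change the search term than editing the encoded string by hand.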

test7.py

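    import pymysql

    # Connect to the test database (host and credentials are the author's own)
    conn = pymysql.connect(host='192.168.1.102', port=3306, user='root',
                           passwd='123456', db='test', charset='utf8')
    try:
        cur = conn.cursor()
        # Ask the server for its version and print each returned row
        cur.execute("select version()")
        for i in cur:
            print(i)
        cur.close()
    finally:
        # Close the connection even if the query fails
        conn.close()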