1. HDFS shell client commands:

1.1. List the available commands: hadoop fs

The help output is as follows:

    Usage: hadoop fs [generic options]
        [-appendToFile <localsrc> ... <dst>]    // append local files to the end of an existing file
        [-cat [-ignoreCrc] <src> ...]
        [-checksum <src> ...]
        [-chgrp [-R] GROUP PATH...]
        [-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...]
        [-chown [-R] [OWNER][:[GROUP]] PATH...]
        [-copyFromLocal [-f] [-p] [-l] <localsrc> ... <dst>]
        [-copyToLocal [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]
        [-count [-q] [-h] <path> ...]
        [-cp [-f] [-p | -p[topax]] <src> ... <dst>]
        [-createSnapshot <snapshotDir> [<snapshotName>]]
        [-deleteSnapshot <snapshotDir> <snapshotName>]
        [-df [-h] [<path> ...]]
        [-du [-s] [-h] <path> ...]
        [-expunge]
        [-find <path> ... <expression> ...]
        [-get [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]
        [-getfacl [-R] <path>]
        [-getfattr [-R] {-n name | -d} [-e en] <path>]
        [-getmerge [-nl] <src> <localdst>]
        [-help [cmd ...]]
        [-ls [-d] [-h] [-R] [<path> ...]]
        [-mkdir [-p] <path> ...]
        [-moveFromLocal <localsrc> ... <dst>]
        [-moveToLocal <src> <localdst>]    // move (cut and paste) from HDFS to the local file system
        [-mv <src> ... <dst>]
        [-put [-f] [-p] [-l] <localsrc> ... <dst>]
        [-renameSnapshot <snapshotDir> <oldName> <newName>]
        [-rm [-f] [-r|-R] [-skipTrash] <src> ...]
        [-rmdir [--ignore-fail-on-non-empty] <dir> ...]
        [-setfacl [-R] [{-b|-k} {-m|-x <acl_spec>} <path>]|[--set <acl_spec> <path>]]
        [-setfattr {-n name [-v value] | -x name} <path>]
        [-setrep [-R] [-w] <rep> <path> ...]
        [-stat [format] <path> ...]
        [-tail [-f] <file>]
        [-test -[defsz] <path>]
        [-text [-ignoreCrc] <src> ...]
        [-touchz <path> ...]
        [-truncate [-w] <length> <path> ...]
        [-usage [cmd ...]]

    Generic options supported are
    -conf <configuration file>     specify an application configuration file
    -D <property=value>            use value for given property
    -fs <local|namenode:port>      specify a namenode
    -jt <local|resourcemanager:port>    specify a ResourceManager
    -files <comma separated list of files>    specify comma separated files to be copied to the map reduce cluster
    -libjars <comma separated list of jars>    specify comma separated jar files to include in the classpath.
    -archives <comma separated list of archives>    specify comma separated archives to be unarchived on the compute machines.

    The general command line syntax is
    bin/hadoop command [genericOptions] [commandOptions]

1.1.1. List files: hadoop fs -ls / (here / is the root directory of the HDFS file system)

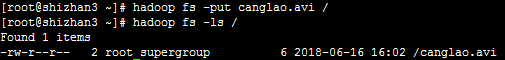

1.1.2. Upload a file: hadoop fs -put canglao.avi /

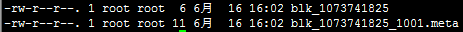

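Note: the actual data is stored as block files on the DataNode, under its data directory at /usr/local/src/hadoop-2.6.4/hdpdata3/dfs/data/current/BP-1438427861-192.168.232.201-1529131287527/current/finalized/subdir0/subdir0/.

A replica of blk_1073741825 also appears in the DataNode data directory on shizhan5.

You can view the contents of the blk_1073741825 file with: cat blk_1073741825

The output is the content of canglao.avi. The file is smaller than one block, so it was not split; HDFS only splits a file when it exceeds the block size, which is 128 MB by default. Next, upload a file larger than 128 MB: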

hadoop fs -put /root/temp/hadoop-2.7.3.tar.gz /

Check the DataNode data directory again (replicas exist on the other nodes as before):

    -rw-r--r-- 1 root root     23562 Feb 18 12:53 blk_1073741825
    -rw-r--r-- 1 root root       195 Feb 18 12:53 blk_1073741825_1001.meta
    -rw-r--r-- 1 root root 134217728 Feb 18 13:09 blk_1073741826
    -rw-r--r-- 1 root root   1048583 Feb 18 13:09 blk_1073741826_1002.meta
    -rw-r--r-- 1 root root  79874467 Feb 18 13:09 blk_1073741827
    -rw-r--r-- 1 root root    624027 Feb 18 13:09 blk_1073741827_1003.meta
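The sizes in this listing can be reproduced with a little arithmetic. The sketch below assumes the usual defaults, which this article does not state explicitly: a 128 MiB block size (dfs.blocksize), one 4-byte CRC per 512 bytes of data (dfs.bytes-per-checksum), and a 7-byte header in each .meta file. The helper names `meta_size` and `block_layout` are made up for illustration.

```shell
#!/bin/sh
# Assumed defaults: 128 MiB blocks, 512 data bytes per checksum,
# 4-byte CRC per chunk, 7-byte .meta header. Adjust for your cluster.
BLOCK_SIZE=$((128 * 1024 * 1024))
BYTES_PER_CHECKSUM=512

# meta_size BYTES: expected size of the .meta file for a block of BYTES bytes.
meta_size() {
    chunks=$(( ($1 + BYTES_PER_CHECKSUM - 1) / BYTES_PER_CHECKSUM ))
    echo $(( 7 + 4 * chunks ))
}

# block_layout BYTES: print "block_size meta_size" for each block of a file.
block_layout() {
    remaining=$1
    while [ "$remaining" -gt 0 ]; do
        if [ "$remaining" -gt "$BLOCK_SIZE" ]; then
            size=$BLOCK_SIZE
        else
            size=$remaining
        fi
        echo "$size $(meta_size "$size")"
        remaining=$(( remaining - size ))
    done
}

# 214092195 is the sum of the two archive blocks in the listing.
block_layout 214092195
# 23562 is the size of the single canglao.avi block.
block_layout 23562
```

Under those assumptions the three printed pairs match the block and .meta sizes shown in the listing, which suggests the cluster is running with the default block and checksum settings.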

Next, let's concatenate blk_1073741826 and blk_1073741827 and check whether the result can be extracted:

    cat blk_1073741826 >> tmp.file
    cat blk_1073741827 >> tmp.file
    tar -zxvf tmp.file

The file list after the operation:

    -rw-r--r-- 1 root root     23562 Feb 18 12:53 blk_1073741825
    -rw-r--r-- 1 root root       195 Feb 18 12:53 blk_1073741825_1001.meta
    -rw-r--r-- 1 root root 134217728 Feb 18 13:09 blk_1073741826
    -rw-r--r-- 1 root root   1048583 Feb 18 13:09 blk_1073741826_1002.meta
    -rw-r--r-- 1 root root  79874467 Feb 18 13:09 blk_1073741827
    -rw-r--r-- 1 root root    624027 Feb 18 13:09 blk_1073741827_1003.meta
    drwxr-xr-x 9 root root      4096 Aug 18  2016 hadoop-2.7.3/    <-- directory extracted from tmp.file
    -rw-r--r-- 1 root root 214092195 Feb 18 13:16 tmp.file
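The experiment succeeds because each block file holds a plain, consecutive byte range of the uploaded file. The same idea can be tried without a cluster. The following is only a local simulation, not HDFS itself: it cuts a random sample file into fixed-size pieces with `split` (128 KiB here as a scaled-down stand-in for 128 MB) and checks that concatenating the pieces in order reproduces the original. All file names are made up for the example.

```shell
#!/bin/sh
workdir=$(mktemp -d)

# Create a 300 KiB sample file standing in for the uploaded archive.
head -c 307200 /dev/urandom > "$workdir/original.bin"

# Cut it into 128 KiB pieces: blk_aa, blk_ab and a shorter blk_ac.
split -b 131072 "$workdir/original.bin" "$workdir/blk_"

# Concatenate the pieces in order, as was done with tmp.file.
cat "$workdir/blk_aa" "$workdir/blk_ab" "$workdir/blk_ac" > "$workdir/rebuilt.bin"

# cmp exits with 0 only when both files are byte-for-byte identical.
if cmp -s "$workdir/original.bin" "$workdir/rebuilt.bin"; then
    result="identical"
else
    result="different"
fi
echo "$result"

rm -rf "$workdir"
```

Order matters: concatenating the pieces in a different order would produce a file of the same size but different content, which is why the block IDs were appended in ascending order in the experiment.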

1.1.3. Download a file: hadoop fs -get /hadoop-2.7.3.tar.gz (returns the complete file, reassembled from its blocks)
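One way to convince yourself that -get returns the complete file is a round trip: upload, download under a new name, and compare. The sketch below is a hypothetical helper, not part of Hadoop. `run` and `hdfs_roundtrip` are made-up names, and only the -mkdir -p, -put -f and -get options from the usage listing are used. With DRY_RUN=1 it only prints the commands, so it can be read and tried without a cluster.

```shell
#!/bin/sh
# run CMD...: execute CMD, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# hdfs_roundtrip LOCAL_FILE HDFS_DIR: upload, download again, compare.
# HDFS_DIR is assumed to be a non-root directory such as /aaa/bbb.
hdfs_roundtrip() {
    local_file=$1
    hdfs_dir=$2
    name=$(basename "$local_file")
    copy="$local_file.fromhdfs"

    run hadoop fs -mkdir -p "$hdfs_dir"
    run hadoop fs -put -f "$local_file" "$hdfs_dir/"
    run hadoop fs -get "$hdfs_dir/$name" "$copy"
    run cmp "$local_file" "$copy"
}

# Print the commands only; unset DRY_RUN on a machine with a working client.
DRY_RUN=1
hdfs_roundtrip /root/temp/hadoop-2.7.3.tar.gz /aaa/bbb
```

On a real cluster, `cmp` exiting with status 0 means the downloaded copy is byte-for-byte identical to the local original.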

1.1.4. Delete a file: hadoop fs -rm /canglao.avi

1.1.5. Move (cut and paste) a file from the local file system to HDFS: hadoop fs -moveFromLocal /home/hadoop/a.txt /aaa/bbb

1.1.6. Check the cluster status: hdfs dfsadmin -report

    [root@shizhan3 subdir0]# hdfs dfsadmin -report
    Configured Capacity: 55306051584 (51.51 GB)
    Present Capacity: 23346880478 (21.74 GB)
    DFS Remaining: 23346765824 (21.74 GB)
    DFS Used: 114654 (111.97 KB)
    DFS Used%: 0.00%
    Under replicated blocks: 0
    Blocks with corrupt replicas: 0
    Missing blocks: 0

    -------------------------------------------------
    Live datanodes (3):

    Name: 192.168.232.208:50010 (192.168.232.208)
    Hostname: shizhan6
    Decommission Status : Normal
    Configured Capacity: 18435350528 (17.17 GB)
    DFS Used: 24576 (24 KB)
    Non DFS Used: 10881404928 (10.13 GB)
    DFS Remaining: 7553921024 (7.04 GB)
    DFS Used%: 0.00%
    DFS Remaining%: 40.98%
    Configured Cache Capacity: 0 (0 B)
    Cache Used: 0 (0 B)
    Cache Remaining: 0 (0 B)
    Cache Used%: 100.00%
    Cache Remaining%: 0.00%
    Xceivers: 1
    Last contact: Sat Jun 16 16:44:28 CST 2018

    Name: 192.168.232.205:50010 (shizhan3)
    Hostname: shizhan3
    Decommission Status : Normal
    Configured Capacity: 18435350528 (17.17 GB)
    DFS Used: 45039 (43.98 KB)
    Non DFS Used: 10236620817 (9.53 GB)
    DFS Remaining: 8198684672 (7.64 GB)
    DFS Used%: 0.00%
    DFS Remaining%: 44.47%
    Configured Cache Capacity: 0 (0 B)
    Cache Used: 0 (0 B)
    Cache Remaining: 0 (0 B)
    Cache Used%: 100.00%
    Cache Remaining%: 0.00%
    Xceivers: 1
    Last contact: Sat Jun 16 16:44:27 CST 2018

    Name: 192.168.232.207:50010 (shizhan5)
    Hostname: shizhan5
    Decommission Status : Normal
    Configured Capacity: 18435350528 (17.17 GB)
    DFS Used: 45039 (43.98 KB)
    Non DFS Used: 10841145361 (10.10 GB)
    DFS Remaining: 7594160128 (7.07 GB)
    DFS Used%: 0.00%
    DFS Remaining%: 41.19%
    Configured Cache Capacity: 0 (0 B)
    Cache Used: 0 (0 B)
    Cache Remaining: 0 (0 B)
    Cache Used%: 100.00%
    Cache Remaining%: 0.00%
    Xceivers: 1
    Last contact: Sat Jun 16 16:44:26 CST 2018

As the report shows, the cluster has 3 live DataNodes available.
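The report is plain text, so it can be summarized with standard tools. The sketch below is one possible approach, with a made-up function name `summarize_report`: it counts the DataNode entries in the "Live datanodes" section and adds up their "DFS Remaining" values. It assumes the section headers start with "Live datanodes" and "Dead datanodes", as in the output above; the embedded sample is abbreviated from that output.

```shell
#!/bin/sh
# summarize_report: read `hdfs dfsadmin -report` text on stdin and print the
# number of live DataNodes and the sum of their "DFS Remaining" bytes.
summarize_report() {
    awk '
        /^Live datanodes/ { live = 1 }
        /^Dead datanodes/ { live = 0 }
        live && /^Name:/ { nodes++ }
        live && /^DFS Remaining:/ { remaining += $3 }
        END { printf "datanodes=%d remaining_bytes=%.0f\n", nodes, remaining }
    '
}

# Abbreviated sample; on a cluster use: hdfs dfsadmin -report | summarize_report
summarize_report <<'EOF'
Configured Capacity: 55306051584 (51.51 GB)
DFS Remaining: 23346765824 (21.74 GB)
Live datanodes (3):
Name: 192.168.232.208:50010 (192.168.232.208)
DFS Remaining: 7553921024 (7.04 GB)
Name: 192.168.232.205:50010 (shizhan3)
DFS Remaining: 8198684672 (7.64 GB)
Name: 192.168.232.207:50010 (shizhan5)
DFS Remaining: 7594160128 (7.07 GB)
EOF
```

For the sample, the per-node values add up to 23346765824 bytes, the same figure the report gives as the cluster-wide DFS Remaining.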

You can also open the web console to view the HDFS cluster information: http://shizhan2:50070