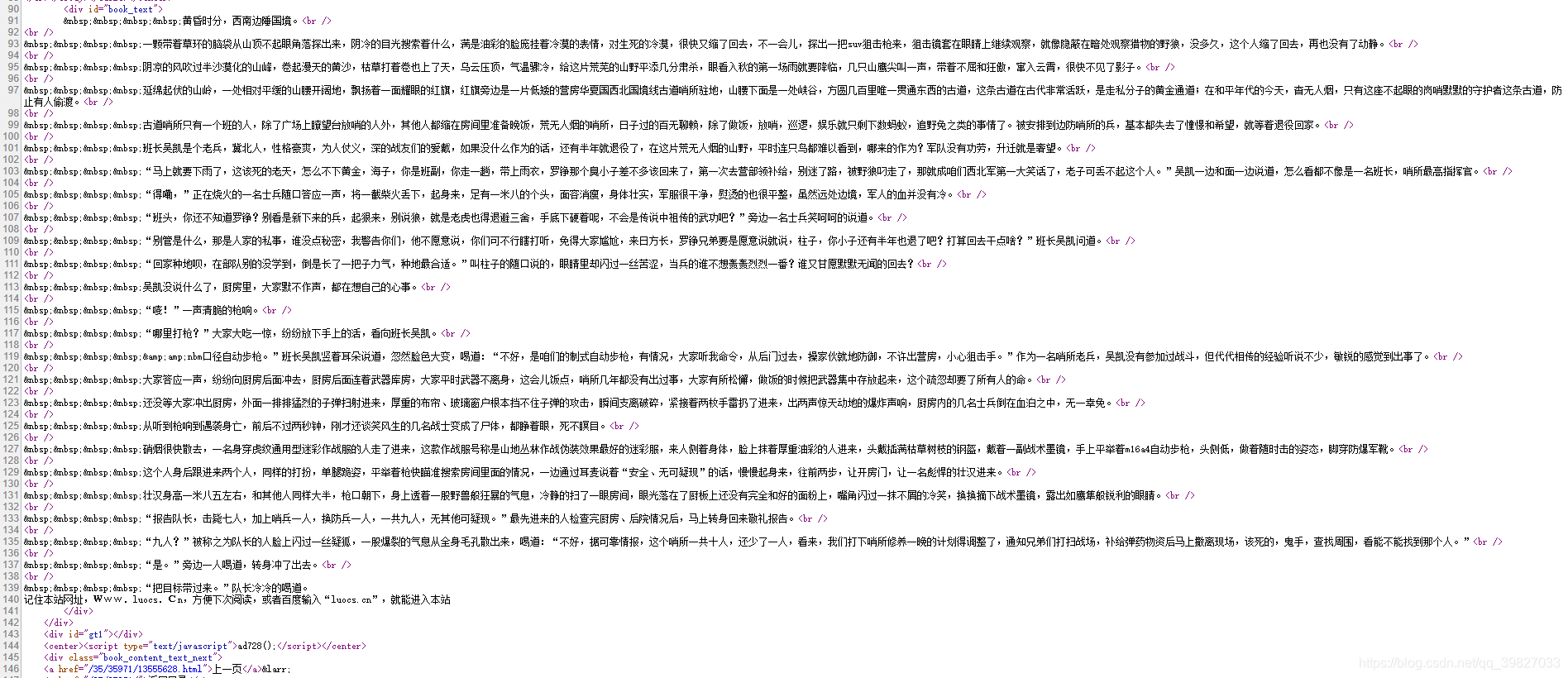

I found a novel site to use for this:

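The basic approach:

Step 1: get the URL of every chapter of the novel.

Step 2: fetch each chapter URL and extract the needed content with a regular expression.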

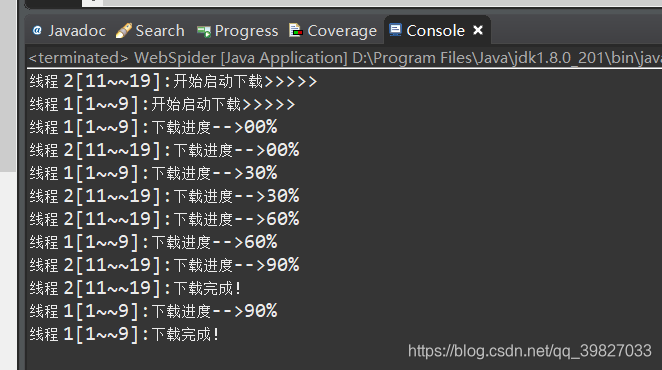

Step 3: wrap the download in multiple threads, producing the result shown below.

Finally, test it.
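As a rough illustration of steps one and two, the sketch below pulls a URL and a title out of an anchor tag with a regular expression. The class name `ChapterLinkDemo`, the `extract` method, the pattern, and the English placeholder title are all assumptions made for this example; only the href is taken from the sample link quoted in the `WebSpider` comments. It is not the regex this post actually uses against the site.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class ChapterLinkDemo {

    // Hypothetical pattern for this sketch: group 1 is the href, group 2 the link text.
    private static final Pattern LINK =
            Pattern.compile("<a\\s+href=[\"']([^\"']+\\.html)[\"']\\s*>\\s*(.*?)\\s*</a>");

    /** Returns {url, title} pairs for every chapter link found in the HTML. */
    static List<String[]> extract(String html) {
        List<String[]> chapters = new ArrayList<>();
        Matcher m = LINK.matcher(html);
        while (m.find()) {
            chapters.add(new String[] { m.group(1), m.group(2) });
        }
        return chapters;
    }

    public static void main(String[] args) {
        // Placeholder title; the real page carries the Chinese chapter title here.
        String html = "<a href=\"/35/35971/13555631.html\"> Chapter 1: Border Outpost Tragedy </a>";
        for (String[] c : extract(html)) {
            System.out.println(c[0] + " -> " + c[1]);
        }
    }
}
```

The lazy group `(.*?)` combined with the surrounding `\s*` trims the spaces around the title, which the greedy `.+` groups used later in this post would keep.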

Code:

Content fetching wrapper:

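import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.HttpURLConnection;
import java.net.URL;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class WebSpider {

// Example: <a href="/35/35971/13555631.html"> 第1章:边哨惨案 </a> --> {"/35/35971/13555631.html", "第1章:边哨惨案"}

// Holds the URL and title of every chapter

private List<String[]> urlList;

// Root directory for downloaded files

private String rootDir;

// Character encoding used to read the chapter list page

private String encoding;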

public WebSpider() {

urlList = new ArrayList<String[]>();

}

public WebSpider(String titleUrl, Map<String, String> regexMap, String rootDir, String encoding) {

this();

this.rootDir = rootDir;

this.encoding = encoding;

initUrlList(titleUrl, regexMap);

}

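/**
 * Builds the list of all chapters of the novel; called from the constructor.
 *
 * @param url      URL of the chapter list page
 * @param regexMap holds "regex" plus the capture-group numbers "urlIndex" and "titleIndex"
 */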

private void initUrlList(String url, Map<String, String> regexMap) {

StringBuffer sb = getContent(url, this.encoding);

int urlIndex = Integer.parseInt(regexMap.get("urlIndex"));

int titleIndex = Integer.parseInt(regexMap.get("titleIndex"));

Pattern p = Pattern.compile(regexMap.get("regex"));

Matcher m = p.matcher(sb);

while (m.find()) {

String[] strs = { m.group(urlIndex), m.group(titleIndex) };

this.urlList.add(strs);

}

}

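/**
 * Fetches the text content of a page, retrying until the request succeeds.
 *
 * @param urlPath URL to fetch
 * @param enc     character encoding of the response
 * @return the page content
 */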

public StringBuffer getContent(String urlPath, String enc) {

StringBuffer strBuf;

class Result{

StringBuffer sb;

public Result() {

BufferedReader reader = null;

HttpURLConnection conn = null;

try {

URL url = new URL(urlPath);

conn = (HttpURLConnection) url.openConnection();

conn.setRequestProperty("user-agent",

"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.132 Safari/537.36");

reader = new BufferedReader(new InputStreamReader(conn.getInputStream(), enc));

sb = new StringBuffer();

String line;

while ((line = reader.readLine()) != null) {

sb.append(line).append("\n");

}

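} catch (Exception e) {

// Discard any partial read so the retry loop in getContent tries again

sb = null;

System.err.println("Fetch failed: " + urlPath + " (" + e + ")");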

} finally {

try {

if (reader != null) {

reader.close();

}

if (conn != null) {

conn.disconnect();

}

} catch (IOException e) {

e.printStackTrace();

}

}

}

}

for(int i = 1; ; i++) {

strBuf = new Result().sb;

if(strBuf == null) {

try {

Thread.sleep(5000 * i);

System.out.println("=====================waited --> " + (5000 * i) + " ms");

} catch (InterruptedException e) {

e.printStackTrace();

}

continue;

}

break;

}

return strBuf;

}

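/**
 * Fetches a page and returns the text captured by the regular expression.
 *
 * @param url        URL to fetch
 * @param enc        character encoding of the response
 * @param regex      regular expression matching the body text
 * @param indexGroup number of the capture group to extract
 * @return the concatenated matches, with each {@code <br />} replaced by a newline
 */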

public String getDestTxt(String url, String enc, String regex, int indexGroup) {

StringBuffer sb = getContent(url, enc);

StringBuffer result = new StringBuffer();

Pattern p = Pattern.compile(regex);

Matcher m = p.matcher(sb);

while (m.find()) {

result.append(m.group(indexGroup).replace("<br />", "\n"));

}

return result.toString();

}

public String getRootDir() {

return rootDir;

}

public List<String[]> getUrlList() {

return urlList;

}

}
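The reader cleanup in `getContent` can also be expressed with try-with-resources. The sketch below is a standalone illustration rather than a drop-in part of `WebSpider`: the `StreamText` class and the `readAll` method are hypothetical names, and it reads from an in-memory stream so that it runs without network access.

```java
import java.io.BufferedReader;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

class StreamText {

    /** Reads the whole stream as text in the given charset, ending every line with a newline. */
    static String readAll(InputStream in, Charset charset) throws IOException {
        StringBuilder sb = new StringBuilder();
        // try-with-resources closes the reader (and the wrapped stream) even if reading fails.
        try (BufferedReader reader = new BufferedReader(new InputStreamReader(in, charset))) {
            String line;
            while ((line = reader.readLine()) != null) {
                sb.append(line).append('\n');
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) throws IOException {
        byte[] page = "<p>line one</p>\r\n<p>line two</p>".getBytes(StandardCharsets.UTF_8);
        System.out.print(readAll(new ByteArrayInputStream(page), StandardCharsets.UTF_8));
    }
}
```

With a real connection, the stream passed in would be `conn.getInputStream()` and the charset something like `Charset.forName("gbk")`; the `IOException` is left to the caller, which is where a retry decision would naturally live.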

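Multithreaded wrapper:

// Imports needed if this class lives in its own file:
// java.io.BufferedWriter, java.io.File, java.io.FileWriter, java.io.IOException, java.util.List, java.util.Map

class Down implements Runnable { // Worker that downloads one range of chapters

private WebSpider spider;

private String enc; // Encoding of the chapter body; on this site it differs from the chapter list encoding

private String bookName; // Book title

// Download in volumes: start (inclusive) and end (exclusive) chapter indexes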

private int start;

private int end;

private Map<String, String> regexMap;

public Down(WebSpider spider, Map<String, String> regexMap, String enc, String bookName, int start, int end) {

this.spider = spider;

this.regexMap = regexMap;

this.enc = enc;

this.bookName = bookName;

this.start = start;

this.end = end;

}

@Override

public void run() {

System.out.println(Thread.currentThread().getName() + " starting download >>>>>");

List<String[]> list = this.spider.getUrlList();

String[] arr = null;

for (int i = start; i < end; i++) {

arr = list.get(i);

String url = "https://www.luocs.cn" + arr[0];

String title = arr[1];

this.writeTOFile(url, this.regexMap.get("regex"), Integer.parseInt(this.regexMap.get("groupIndex")), title);

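// Report progress as the share of this thread's range that is done

int done = i - start + 1;

if (done % 3 == 0 || i == end - 1) {

System.out.println(Thread.currentThread().getName() + " progress --> " + (done * 100 / (end - start)) + "%");

}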

// Pause a random 1~21 seconds before crawling the next chapter

try {

Thread.sleep((long) (1000L + Math.random() * 20000));

} catch (InterruptedException e) {

e.printStackTrace();

}

}

System.out.println(Thread.currentThread().getName() + " download finished!");

}

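/**
 * Appends one chapter to the output file.
 *
 * @param url        URL of the chapter body
 * @param regex      regular expression matching the body text
 * @param indexGroup capture group of the regular expression that holds the body text
 * @param title      chapter title
 */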

private void writeTOFile(String url, String regex, int indexGroup, String title) {

String src = this.spider.getDestTxt(url, this.enc, regex, indexGroup);

BufferedWriter writer = null;

try {

writer = new BufferedWriter(new FileWriter(

new File(this.spider.getRootDir() + this.bookName + this.start + "--" + this.end + ".txt"), true));

// Light formatting: a title banner above each chapter and a separator line below it

writer.append("*****").append(title).append("*****");

writer.newLine();

writer.append(src, 0, src.length());

writer.newLine();

writer.append("====================");

writer.newLine();

writer.flush();

} catch (IOException e) {

e.printStackTrace();

} finally {

try {

if (writer != null) {

writer.close();

}

} catch (IOException e) {

e.printStackTrace();

}

}

}

}

Test:

I test it directly from a main method:

public static void main(String[] args) {

String url = "https://www.luocs.cn/35/35971/0.html";

Map<String, String> map = new HashMap<String, String>();

map.put("regex", "href='(.+html)'>(.+:{1}.+)</a>");

map.put("urlIndex", "1");

map.put("titleIndex", "2");

WebSpider spider = new WebSpider(url, map, "f:/Game/", "gbk");

Map<String, String> regexMap = new HashMap<String, String>();

regexMap.put("regex", "( ){4}(.+)");

regexMap.put("groupIndex", "2");

for (int i = 0; i < 2; i++) {

Down d = new Down(spider, regexMap, "gb2312", "最强兵王", i * 10, (i + 1) * 10);

new Thread(d, "Thread " + (i + 1) + "[" + (i * 10 + 1) + "~~" + ((i + 1) * 10) + "]:").start();

}

}
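The main method above starts one `Thread` per chapter range by hand, with the ranges hard-coded as multiples of 10. One possible alternative, sketched here under assumptions, is to compute the ranges from the chapter count and hand them to a fixed-size thread pool. `RangeSplitter` and `split` are hypothetical names, and the task only prints its range; a real run would submit a `Down` instance instead.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class RangeSplitter {

    /** Splits [0, total) into consecutive {start, end} ranges of at most chunk items. */
    static List<int[]> split(int total, int chunk) {
        if (chunk <= 0) {
            throw new IllegalArgumentException("chunk must be positive");
        }
        List<int[]> ranges = new ArrayList<>();
        for (int start = 0; start < total; start += chunk) {
            ranges.add(new int[] { start, Math.min(start + chunk, total) });
        }
        return ranges;
    }

    public static void main(String[] args) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        for (int[] r : split(25, 10)) {
            // A real run would submit something like
            // new Down(spider, regexMap, enc, bookName, r[0], r[1]) here.
            pool.submit(() -> System.out.println(
                    Thread.currentThread().getName() + " handles [" + r[0] + ", " + r[1] + ")"));
        }
        pool.shutdown();                            // accept no new tasks
        pool.awaitTermination(1, TimeUnit.MINUTES); // wait for the submitted tasks to finish
    }
}
```

Each range keeps the same convention as `Down`: the start index is inclusive and the end index is exclusive, so the last range is simply shorter when the chapter count is not a multiple of the chunk size.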

Result:

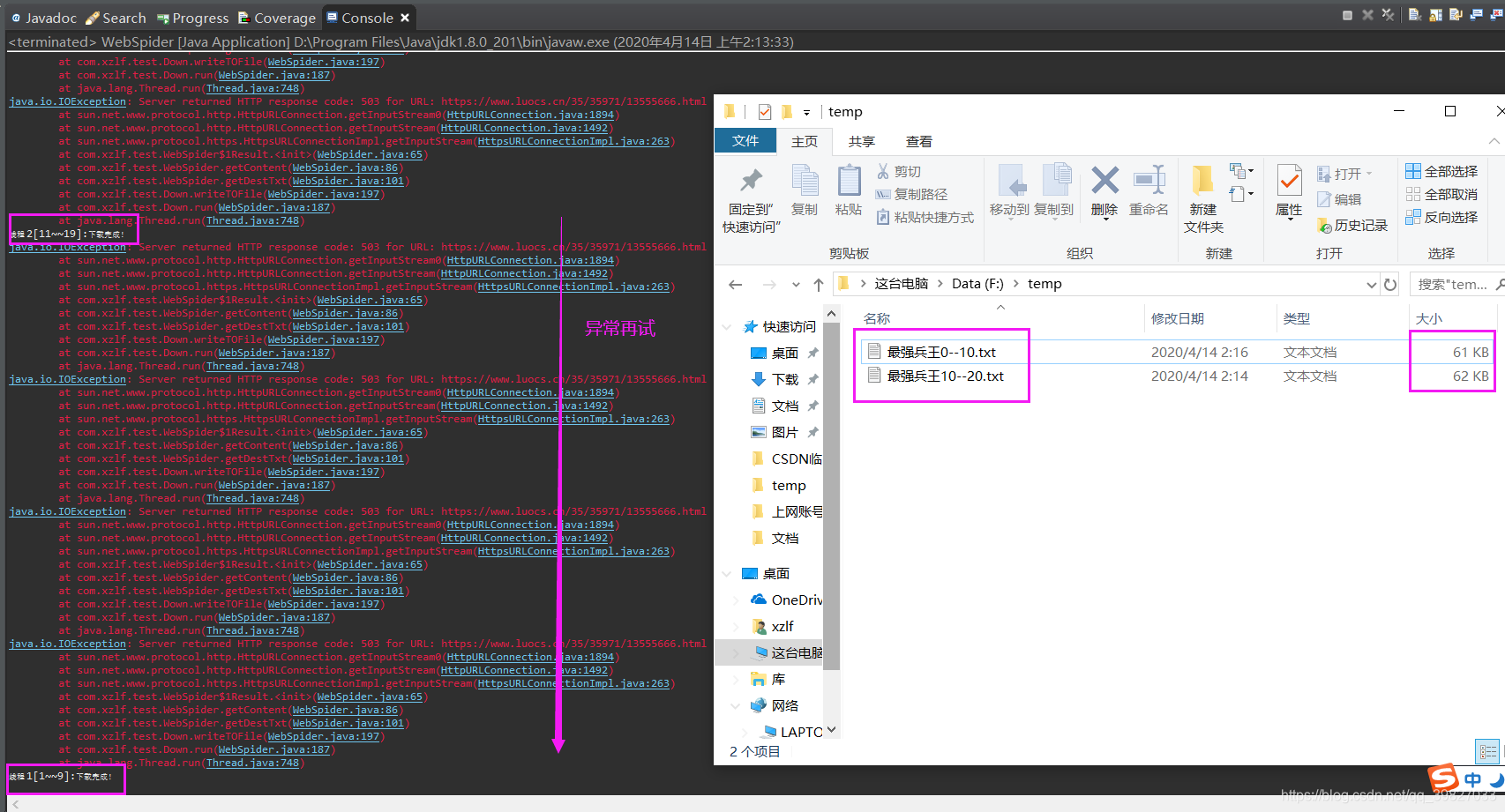

When a request fails with a 503, the crawler waits for a while and then retries.

The final result written to local disk:

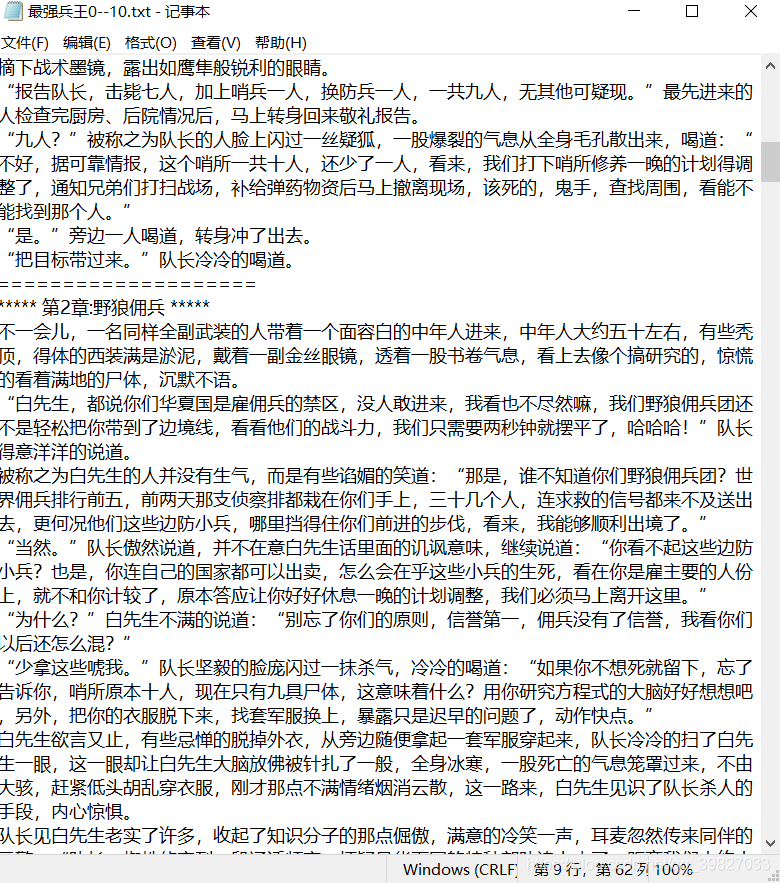

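If you import the text file onto a phone and open it with the reader plugin of UC Browser or QQ Browser, the chapters are detected automatically; add a background color and you get a genuinely clean, popup-free read.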

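This site is not easy to crawl, so you may want to pick an easier one. The 503 handling here is also not great; if any expert passing by has a better approach, please share it.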
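One possible direction for the 503 handling, offered as a sketch rather than a tested fix for this site: `getContent` retries forever with a wait that grows by 5 seconds each time, whereas the version below caps the number of attempts and doubles the wait up to a ceiling. `Backoff`, `delayMs`, and `retry` are hypothetical names, and the failing task is simulated. With `HttpURLConnection`, a 503 response surfaces as an `IOException` from `getInputStream()`, which is the exception type retried here.

```java
import java.io.IOException;
import java.util.concurrent.Callable;

class Backoff {

    /** Delay before the next try after attempt number attempt (1-based): base * 2^(attempt-1), capped at maxMs. */
    static long delayMs(int attempt, long baseMs, long maxMs) {
        int shift = Math.max(0, Math.min(attempt - 1, 20)); // bound the shift to avoid overflow
        return Math.min(baseMs << shift, maxMs);
    }

    /** Runs task until it succeeds or maxAttempts is reached; rethrows the last IOException. */
    static <T> T retry(Callable<T> task, int maxAttempts, long baseMs, long maxMs) throws Exception {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        IOException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return task.call();
            } catch (IOException e) {
                last = e;
                if (attempt < maxAttempts) {
                    Thread.sleep(delayMs(attempt, baseMs, maxMs));
                }
            }
        }
        throw last;
    }

    public static void main(String[] args) throws Exception {
        int[] calls = { 0 };
        // Simulated fetch: fails twice, then succeeds.
        String body = retry(() -> {
            if (++calls[0] < 3) {
                throw new IOException("simulated HTTP 503");
            }
            return "page body";
        }, 5, 10, 1000);
        System.out.println(body + " after " + calls[0] + " attempts");
    }
}
```

Giving up after a fixed number of attempts lets the caller log the failed chapter and move on instead of blocking the thread indefinitely; whether that trade-off suits this site is a judgment call.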