A few errors I ran into:

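1. Every directory referenced in the two XML files must already exist; create any that are missing. Their ownership and permissions must match those of the hadoop directory. Do not end up with one owned by a normal user and the other by root.

2. To use a hostname other than localhost (I wanted to use hadoop), edit /etc/hosts and add the mapping from the IP address to that hostname. If you then get an error about a hostname mismatch, edit /etc/hostname as well (the hostname command prints the current hostname).

3. Be sure to edit hadoop-env.sh. Unlike on CentOS, this change is mandatory here; otherwise Hadoop cannot find Java.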
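For item 2, a quick way to see whether a hosts file already maps the name is a small check like the one below. This is only a sketch: host_in_file is a helper name I made up, and the IP address you add depends on your own machine.

```shell
# Succeeds if the given hosts-style file maps some address to the given name.
# host_in_file is a hypothetical helper, not part of Hadoop or Ubuntu.
host_in_file() {
  file="$1"
  name="$2"
  awk -v h="$name" '
    { sub(/#.*/, "") }                                   # drop comments
    { for (i = 2; i <= NF; i++) if ($i == h) found = 1 } # field 1 is the address
    END { exit (found ? 0 : 1) }
  ' "$file"
}

# Example use:
#   host_in_file /etc/hosts hadoop || echo "no entry for hadoop in /etc/hosts"
```

If the check fails, add a line of the form "ip-address hadoop" to /etc/hosts.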

For future reference, here are the additions I made to several files:

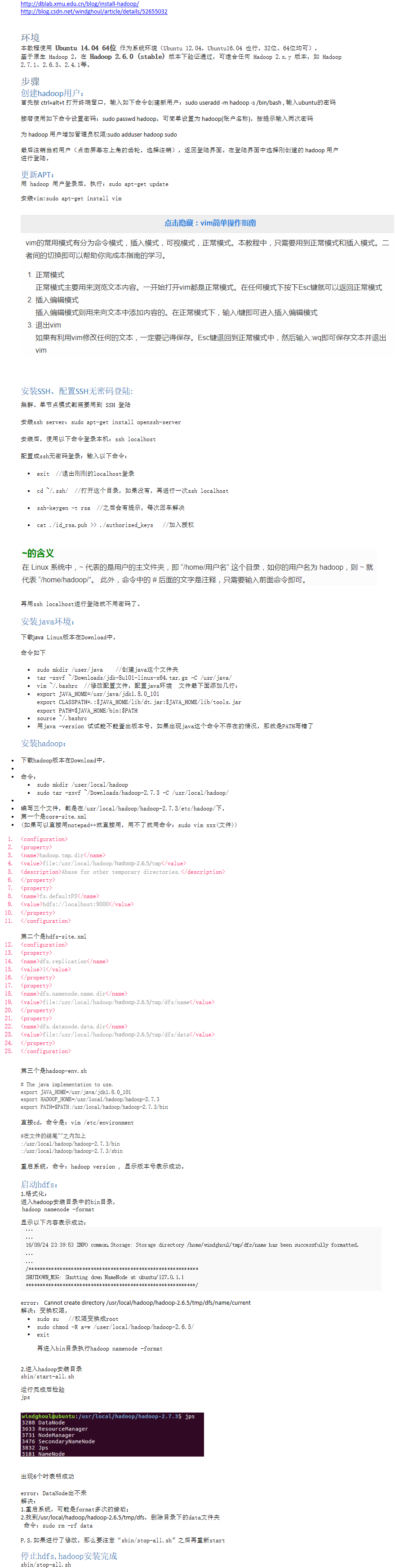

~/.bashrc :

    export JAVA_HOME=/home/hadoop/softwares/jdk1.8.0_151
    export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

    # HADOOP_HOME comes first because the lines below expand it
    export HADOOP_HOME=/home/hadoop/softwares/hadoop-2.7.3
    # CLASSPATH already starts with ".", so no extra "." prefix is needed
    export HADOOP_CLASSPATH=$CLASSPATH:$HADOOP_CLASSPATH:$HADOOP_HOME/bin
    export HADOOP_MAPRED_HOME=$HADOOP_HOME
    export HADOOP_COMMON_HOME=$HADOOP_HOME
    export HADOOP_HDFS_HOME=$HADOOP_HOME
    export YARN_HOME=$HADOOP_HOME
    export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
    export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib:$HADOOP_COMMON_LIB_NATIVE_DIR"
    export PATH=$JAVA_HOME/bin:$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

hadoop-env.sh:

    export JAVA_HOME=/home/hadoop/softwares/jdk1.8.0_151

    # same installation directory as in ~/.bashrc; defined before it is used below
    export HADOOP_HOME=/home/hadoop/softwares/hadoop-2.7.3
    export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

    export HADOOP_CLASSPATH=$CLASSPATH:$HADOOP_CLASSPATH:$HADOOP_HOME/bin

    export HADOOP_OPTS="$HADOOP_OPTS -Djava.net.preferIPv4Stack=true -Djava.library.path=$HADOOP_HOME/lib:$HADOOP_COMMON_LIB_NATIVE_DIR"
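Before starting anything, it may be worth confirming that the JAVA_HOME written into hadoop-env.sh really contains a java binary. A minimal sketch; check_java_home is a hypothetical helper name, not something Hadoop provides:

```shell
# Succeeds if <dir>/bin/java exists and is executable.
# check_java_home is a hypothetical helper for illustration.
check_java_home() {
  dir="$1"
  if [ -x "$dir/bin/java" ]; then
    echo "ok: $dir/bin/java"
  else
    echo "no executable java under $dir/bin" >&2
    return 1
  fi
}

# Example use with the path from this post:
#   check_java_home /home/hadoop/softwares/jdk1.8.0_151
```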

core-site.xml :

    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://hadoop:9000</value>
    </property>
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/home/hadoop/softwares/hadoop-2.7.3/tmp</value>
    </property>
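One way to double-check that Hadoop actually picks up these values is the hdfs getconf -confKey command. A small sketch, assuming hdfs is on PATH (it should be with the ~/.bashrc above); show_conf is just a helper name I made up:

```shell
# Print the effective value of each given configuration key.
# show_conf is a hypothetical wrapper around "hdfs getconf -confKey".
show_conf() {
  if ! command -v hdfs >/dev/null 2>&1; then
    echo "hdfs not found on PATH" >&2
    return 1
  fi
  for key in "$@"; do
    printf '%s = %s\n' "$key" "$(hdfs getconf -confKey "$key")"
  done
}

# Example use:
#   show_conf fs.defaultFS hadoop.tmp.dir
```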

hdfs-site.xml :

    <!-- dfs.name.dir and dfs.data.dir are the older names of
         dfs.namenode.name.dir and dfs.datanode.data.dir; Hadoop 2.x still accepts them -->
    <property>
        <name>dfs.name.dir</name>
        <value>/home/hadoop/softwares/hadoop-2.7.3/data/namenode</value>
    </property>
    <property>
        <name>dfs.data.dir</name>
        <value>/home/hadoop/softwares/hadoop-2.7.3/data/datanode</value>
    </property>
    <property>
        <name>dfs.tmp.dir</name>
        <value>/home/hadoop/softwares/hadoop-2.7.3/data/tmp</value>
    </property>
    <property>
        <name>dfs.replication</name>
        <value>2</value>
    </property>
    <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>hadoop:9001</value>
    </property>
    <property>
        <name>dfs.webhdfs.enabled</name>
        <value>true</value>
    </property>
    <property>
        <name>dfs.permissions.enabled</name>
        <value>false</value>
    </property>
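The directories named in core-site.xml and hdfs-site.xml have to exist with the same owner as the hadoop directory (error 1 above). A rough sketch of how that could be scripted; make_hadoop_dirs and mismatched_owner are hypothetical helper names, and stat -c assumes GNU coreutils as shipped with Ubuntu:

```shell
# Create the tmp and data directories used in the config files under <base>.
make_hadoop_dirs() {
  base="$1"
  for sub in tmp data/namenode data/datanode data/tmp; do
    mkdir -p "$base/$sub" || return 1
  done
}

# Print every path under <base>/tmp and <base>/data whose owner differs
# from the owner of <base> itself; prints nothing when all owners match.
mismatched_owner() {
  base="$1"
  uid="$(stat -c '%u' "$base")" || return 1
  find "$base/tmp" "$base/data" ! -uid "$uid" -print
}

# Example use with the path from this post:
#   make_hadoop_dirs /home/hadoop/softwares/hadoop-2.7.3
#   mismatched_owner /home/hadoop/softwares/hadoop-2.7.3
```

Running these as the hadoop user rather than with sudo keeps the new directories from ending up owned by root.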

mapred-site.xml :

    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
    <property>
        <name>mapreduce.jobhistory.address</name>
        <value>hadoop:10020</value>
    </property>
    <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>hadoop:19888</value>
    </property>

yarn-site.xml :

    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>hadoop</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.resourcemanager.address</name>
        <value>hadoop:8032</value>
    </property>
    <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>hadoop:8030</value>
    </property>
    <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>hadoop:8031</value>
    </property>
    <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>hadoop:8033</value>
    </property>
    <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>hadoop:8088</value>
    </property>

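4. For convenience, a few more tools need to be installed: findbugs, protobuf, and maven.

findbugs and maven are easy to install: download, extract, and set the environment variables, the usual routine. If you are unsure how, follow the same steps as for the JDK installation.

maven download page: http://www-eu.apache.org/dist/maven/maven-3/

Download findbugs:

    wget http://prdownloads.sourceforge.net/findbugs/findbugs-3.0.1.tar.gz

Check that both are installed:

    mvn -v
    findbugs -version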
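To see at a glance which of the tools are still missing from PATH, something like the following could be used. It is only a sketch; missing_tools is a helper name I invented:

```shell
# Print the name of every given command that cannot be found on PATH.
# missing_tools is a hypothetical helper for illustration.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Example use:
#   missing_tools mvn findbugs protoc
```

Empty output means every listed tool was found.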

protobuf takes a little more work:

    # download
    wget http://protobuf.googlecode.com/files/protobuf-2.5.0.tar.gz
    # extract
    tar -zxvf protobuf-2.5.0.tar.gz -C /home/hadoop/softwares/

    # enter the extracted protobuf directory
    cd /home/hadoop/softwares/protobuf-2.5.0
    ./configure
    sudo make
    sudo make check
    sudo make install

    # set the environment variable
    export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/lib

    # verify
    protoc --version    # libprotoc 2.5.0 means the install succeeded

P.S. Note the export LD_LIBRARY_PATH line: protobuf installs its libraries to /usr/local/lib by default, and /usr/local/lib is not in Ubuntu's default LD_LIBRARY_PATH, so without that line protoc --version fails with:

protoc: error while loading shared libraries: libprotoc.so.8: cannot open shared
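If the export goes into ~/.bashrc, it may be worth guarding against two small problems: the directory being appended again on every new shell, and a leading ":" when LD_LIBRARY_PATH starts out empty. A minimal sketch; append_once is a hypothetical helper name:

```shell
# Print <list> with <dir> appended, unless <dir> is already in the
# colon-separated list. An empty list yields just <dir>, with no leading colon.
append_once() {
  list="$1"
  dir="$2"
  case ":$list:" in
    *":$dir:"*) printf '%s\n' "$list" ;;      # already present
    "::")       printf '%s\n' "$dir" ;;       # list was empty
    *)          printf '%s\n' "$list:$dir" ;; # append
  esac
}

export LD_LIBRARY_PATH="$(append_once "${LD_LIBRARY_PATH:-}" /usr/local/lib)"
```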