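So all machines must use the same username.

Install Ubuntu with a network connection available, so that the necessary add-ons can be installed during setup.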

I. JDK installation and configuration:

After extracting the JDK, run sudo gedit /etc/profile and append the following at the end of the file:

#SET JAVA ENVIRONMENT

export JAVA_HOME=/home/ubuntu/jdk

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH
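
Once /etc/profile has been reloaded (for example with source /etc/profile), the JDK path can be sanity-checked. The following is a minimal sketch, assuming the JDK was extracted to the directory that JAVA_HOME points to; check_jdk is a hypothetical helper name introduced here, not part of the JDK.

```shell
# check_jdk DIR: succeed if DIR looks like a JDK root (has an executable bin/java).
# check_jdk is a hypothetical helper introduced for illustration.
check_jdk() {
    if [ -x "$1/bin/java" ]; then
        echo "java found: $1/bin/java"
    else
        echo "no executable java under: $1" >&2
        return 1
    fi
}

# Example call, assuming JAVA_HOME was exported by /etc/profile:
#   check_jdk "$JAVA_HOME" && "$JAVA_HOME/bin/java" -version
```

If the check fails, the most likely cause is that JAVA_HOME does not match the directory the JDK was actually extracted to.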

1. Install rpm: sudo apt-get install rpm

II. Hadoop installation and configuration:

Run sudo gedit /etc/profile and append the following at the end of the file:

#hadoop variable settings

export HADOOP_PREFIX=/home/ubuntu/hadoop

export PATH=$PATH:$HADOOP_PREFIX/bin:$HADOOP_PREFIX/sbin

export HADOOP_HOME=${HADOOP_PREFIX}

export HADOOP_COMMON_HOME=${HADOOP_PREFIX}

export HADOOP_COMMON_LIB_NATIVE_DIR=${HADOOP_PREFIX}/lib/native-x64

export HADOOP_CONF_DIR=${HADOOP_PREFIX}/etc/hadoop

export HADOOP_HDFS_HOME=${HADOOP_PREFIX}

export HADOOP_MAPRED_HOME=${HADOOP_PREFIX}

export HADOOP_YARN_HOME=${HADOOP_PREFIX}

export HADOOP_OPTS="-Djava.library.path=$HADOOP_PREFIX/lib/native-64 -Djava.net.preferIPv4Stack=true"

After extracting Hadoop, configure the following files in the hadoop/etc/hadoop directory:

1.hadoop-env.sh

export JAVA_HOME=/home/ubuntu/jdk

2.yarn-env.sh

export JAVA_HOME=/home/ubuntu/jdk

3.core-site.xml

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/home/bigdata/tmp</value>

</property>

<property>

<name>hadoop.native.lib</name>

<value>true</value>

<description>Should native hadoop libraries, if present, be used.</description>

</property>

<property>

<name>fs.checkpoint.period</name>

<value>3600</value>

<description>The number of seconds between two periodic checkpoints.

</description>

</property>

<property>

<name>fs.checkpoint.size</name>

<value>67108864</value>

<description>

the size of the current edit log (in bytes) that triggers

a periodic checkpoint even if the fs.checkpoint.period

hasn't expired.

</description>

</property>

<property>

<name>fs.checkpoint.dir</name>

<value>${hadoop.tmp.dir}/dfs/namesecondary</value>

<description>

Determines where on the local filesystem the DFS secondary

name node should store the temporary images to merge.

If this is a comma-delimited list of directories then the image is

replicated in all of the directories for redundancy.

</description>

</property>

<!-- <property>

<name>topology.script.file.name</name>

<value>/hadoop-script/rack.py</value>

</property> -->

The tmp folder must be created locally.
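
The property blocks listed for core-site.xml are assumed to sit inside the file's single configuration root element, which is how Hadoop configuration files are laid out. The sketch below writes a minimal skeleton to show that shape; write_core_site_skeleton is a hypothetical helper, and it overwrites its target, so it is best tried on a scratch path first.

```shell
# write_core_site_skeleton FILE: write a minimal core-site.xml to FILE.
# Hypothetical helper; it overwrites FILE without asking.
write_core_site_skeleton() {
    cat > "$1" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>
  </property>
  <!-- the remaining <property> blocks from this section go here -->
</configuration>
EOF
}

# Example call on a scratch file:
#   write_core_site_skeleton /tmp/core-site.xml
```

The tmp folder itself, given the hadoop.tmp.dir value used in this section, can be created with mkdir -p /home/bigdata/tmp.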

4. hdfs-site.xml

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/bigdata/hdfs/name</value>

<final>true</final>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/bigdata/hdfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

<property>

<name>dfs.http.address</name>

<value>master:50070</value>

<description>

The address and the base port where the dfs namenode web ui will listen on.

If the port is 0 then the server will start on a free port.

</description>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master:50090</value>

</property>

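The hdfs folder must be created locally. Do not create the name and data folders by hand: they are generated automatically, and creating them manually causes errors.

If the namenode has to be reformatted after a configuration change, first delete the files under hdfs:

on the master delete name; on the slaves delete data.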
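
The cleanup before a reformat can be sketched as a small helper. This is only an illustration under the assumption that the hdfs folder is /home/bigdata/hdfs, as in the hdfs-site.xml values of this section; clean_for_reformat is a hypothetical name, and the deletion cannot be undone.

```shell
# clean_for_reformat ROLE HDFS_DIR: remove stale HDFS state before a namenode reformat.
# ROLE is "master" (removes HDFS_DIR/name) or "slave" (removes HDFS_DIR/data).
# Hypothetical helper; the deletion is irreversible.
clean_for_reformat() {
    role=$1
    hdfs_dir=$2
    # Refuse to run with an empty directory argument, which would target /name or /data.
    [ -n "$hdfs_dir" ] || { echo "usage: clean_for_reformat master|slave HDFS_DIR" >&2; return 2; }
    case "$role" in
        master) rm -rf "$hdfs_dir/name" ;;
        slave)  rm -rf "$hdfs_dir/data" ;;
        *) echo "unknown role: $role" >&2; return 2 ;;
    esac
}

# Example calls, using the paths from hdfs-site.xml:
#   on master: clean_for_reformat master /home/bigdata/hdfs
#   on slaves: clean_for_reformat slave  /home/bigdata/hdfs
```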

5.mapred-site.xml (copy mapred-site.xml.template and rename the copy)

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

<final>true</final>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>master:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master:19888</value>

</property>

The var folder must be created locally.

6.yarn-site.xml

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

7.slaves

Add the slave hostnames, one per line, for example:

slave1

slave2

III. Edit the hosts file

sudo gedit /etc/hosts

Add:

10.9.201.129 master

10.9.51.29 slave1
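
Because the same entries have to be added on every machine, it can help to append them in a way that is safe to repeat. The following is a minimal sketch; add_host_entry is a hypothetical helper, and on a real machine the target file is /etc/hosts, which requires root to modify.

```shell
# add_host_entry FILE IP NAME: append "IP NAME" to FILE unless NAME already has an entry.
# Hypothetical helper; comment lines in FILE are ignored when looking for NAME.
add_host_entry() {
    file=$1
    ip=$2
    name=$3
    if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
        echo "$name already present in $file"
    else
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Example calls (run as root), using the addresses from this section:
#   add_host_entry /etc/hosts 10.9.201.129 master
#   add_host_entry /etc/hosts 10.9.51.29 slave1
```

Running the helper a second time with the same hostname leaves the file unchanged, so it does not produce duplicate lines.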

IV. Edit the hostname file

sudo gedit /etc/hostname

Change it as needed for each machine, for example master or slave1.

V. Install ssh and set up passwordless login

1. Install ssh

sudo apt-get install ssh

2. Generate the public and private keys

ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

3. Append the public key to authorized_keys

cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

4. On every machine:

chmod 755 ~/.ssh/

chmod 644 ~/.ssh/authorized_keys

VI. Distribute authorized_keys to the slave hosts (run from inside the .ssh folder)

scp authorized_keys slave1:~/.ssh/
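
After the key has been distributed, it is worth confirming that each slave accepts a login without a password prompt. The sketch below assumes the slave hostnames are listed one per line in the slaves file under etc/hadoop; for_each_slave and check_login are hypothetical helper names. BatchMode=yes makes ssh fail instead of prompting, so a failure indicates that passwordless login is not yet working for that host.

```shell
# for_each_slave FILE CMD [ARG...]: run CMD once per hostname listed in FILE,
# passing the hostname as the last argument. Blank lines are skipped.
# Both helpers are hypothetical names introduced for this sketch.
for_each_slave() {
    slaves_file=$1
    shift
    while IFS= read -r host || [ -n "$host" ]; do
        [ -n "$host" ] || continue
        # </dev/null stops commands such as ssh from consuming the host list
        "$@" "$host" </dev/null
    done < "$slaves_file"
}

# check_login HOST: succeed only if ssh can log in without prompting.
check_login() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}

# Example call from the Hadoop directory on master:
#   for_each_slave etc/hadoop/slaves check_login
```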

VII. Format the Hadoop namenode

bin/hdfs namenode -format

If permission errors occur, change the folder permissions:

chmod 777 -R hadoop

Apply the same command to the hdfs, var, and tmp folders.

VIII. Start Hadoop

sbin/start-dfs.sh

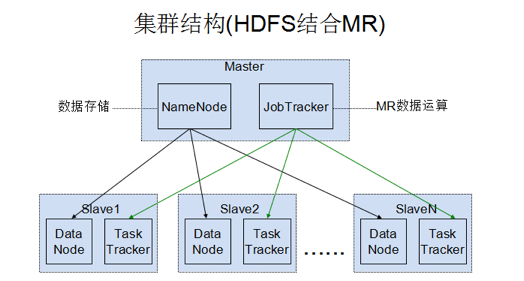

The following processes should be visible on the master node:

haduser@master:~/hadoop/hadoop-2.2.0$ jps

6638 Jps

6015 NameNode

6525 SecondaryNameNode

On a slave node, the following processes should be visible:

haduser@slave1:~/hadoop/hadoop-2.2.0/etc/hadoop$ jps

4264 Jps

4208 DataNode

Start the YARN cluster:

sbin/start-yarn.sh
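
Checking the jps listings by eye on every node gets tedious, so the comparison can be scripted. The following is a minimal sketch that reads jps output on standard input and prints any expected daemon that is absent; missing_daemons is a hypothetical helper name, not a Hadoop command.

```shell
# missing_daemons NAME...: read `jps` output on stdin and print every NAME
# that is not listed. Hypothetical helper for illustration.
missing_daemons() {
    jps_out=$(cat)
    for name in "$@"; do
        # Match the second column exactly, so NameNode does not match SecondaryNameNode.
        printf '%s\n' "$jps_out" |
            awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' ||
            echo "$name"
    done
}

# Example calls after start-dfs.sh:
#   on master: jps | missing_daemons NameNode SecondaryNameNode
#   on slaves: jps | missing_daemons DataNode
```

Empty output means every expected daemon is running. After start-yarn.sh, the same check would normally be extended with ResourceManager on the master and NodeManager on the slaves.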

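IX. Eclipse configuration:

1. Put the plugin in the plugins folder under the Eclipse directory.

2. In Window > Preferences, open the mapred (Map/Reduce) page and set the path.

3. In the console area, create a new mapred tab and fill in the relevant settings.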