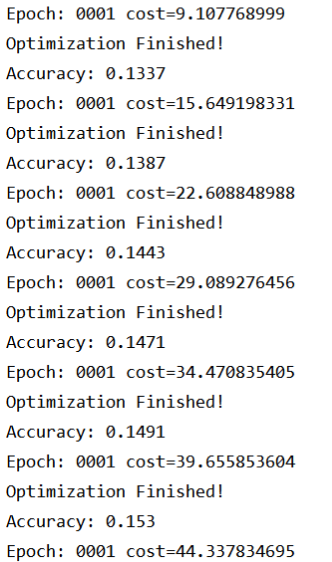

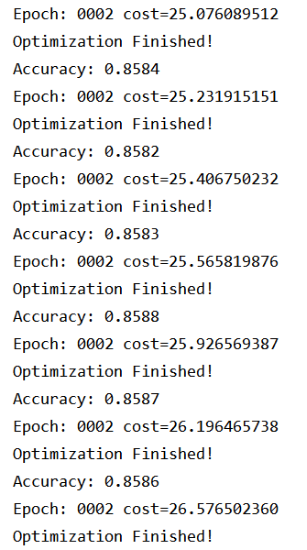

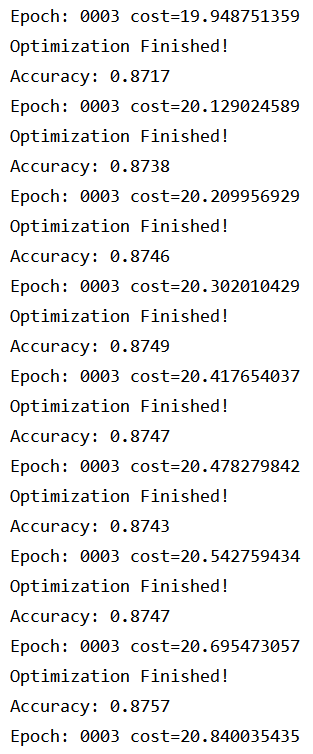

    import os

    import numpy as np
    import tensorflow.compat.v1 as tf

    import input_data  # local helper module that loads the MNIST files

    tf.disable_v2_behavior()  # run in TF1 graph mode
    os.environ["CUDA_VISIBLE_DEVICES"] = "0"  # expose only the first GPU

    # Load MNIST from a local directory; labels are one-hot encoded.
    mnist = input_data.read_data_sets("D:大二Java大三寒假作业大三寒假作业深度学习应用篇", one_hot=True)

    # Training hyperparameters
    learning_rate = 0.001
    training_epochs = 15
    batch_size = 100
    display_step = 1

    # Network dimensions
    n_hidden_1 = 256  # units in the first hidden layer
    n_hidden_2 = 256  # units in the second hidden layer
    n_input = 784     # 28 x 28 pixels per image
    n_classes = 10    # digits 0-9

    # Graph inputs
    X = tf.placeholder("float", [None, n_input])
    Y = tf.placeholder("float", [None, n_classes])

    # Weights and biases, initialized from a standard normal distribution
    weights = {
        "h1": tf.Variable(tf.random_normal([n_input, n_hidden_1])),
        "h2": tf.Variable(tf.random_normal([n_hidden_1, n_hidden_2])),
        "out": tf.Variable(tf.random_normal([n_hidden_2, n_classes])),
    }
    biases = {
        "b1": tf.Variable(tf.random_normal([n_hidden_1])),
        "b2": tf.Variable(tf.random_normal([n_hidden_2])),
        "out": tf.Variable(tf.random_normal([n_classes])),
    }


    def multilayer_perceptron(x):
        # Two fully connected hidden layers (no activation is applied)
        # followed by a linear output layer that returns raw logits.
        layer_1 = tf.add(tf.matmul(x, weights["h1"]), biases["b1"])
        layer_2 = tf.add(tf.matmul(layer_1, weights["h2"]), biases["b2"])
        out_layer = tf.matmul(layer_2, weights["out"]) + biases["out"]
        return out_layer


    logits = multilayer_perceptron(X)

    # Mean softmax cross-entropy loss, minimized with Adam
    loss_op = tf.reduce_mean(
        tf.nn.softmax_cross_entropy_with_logits(logits=logits, labels=Y))
    train_op = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(loss_op)
    init = tf.global_variables_initializer()

    with tf.Session() as sess:
        sess.run(init)

        for epoch in range(training_epochs):
            avg_cost = 0
            total_batch = int(mnist.train.num_examples / batch_size)
            for i in range(total_batch):
                batch_x, batch_y = mnist.train.next_batch(batch_size)
                # One optimization step; also fetch the batch loss
                _, c = sess.run([train_op, loss_op],
                                feed_dict={X: batch_x, Y: batch_y})
                avg_cost += c / total_batch
            if epoch % display_step == 0:
                print("Epoch:", "%04d" % (epoch + 1), "cost={:.9f}".format(avg_cost))
        print("Optimization Finished!")

        # Evaluate on the test set (eval needs the session to still be open)
        pred = tf.nn.softmax(logits)
        correct_prediction = tf.equal(tf.argmax(pred, 1), tf.argmax(Y, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))
        print("Accuracy:", accuracy.eval({X: mnist.test.images, Y: mnist.test.labels}))
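
To make the loss and accuracy steps in the script more concrete, here is a minimal NumPy sketch of what they compute on a single batch. It is an illustration under stated assumptions, not TensorFlow's implementation: the helper names `softmax`, `mean_cross_entropy`, and `accuracy_score`, as well as the toy logits and labels, are made up for this example.

```python
import numpy as np


def softmax(logits):
    # Subtract the row-wise maximum before exponentiating for numerical stability.
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def mean_cross_entropy(logits, one_hot_labels):
    # Batch mean of -sum(labels * log(softmax(logits))), computed via log-sum-exp.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-(one_hot_labels * log_probs).sum(axis=1).mean())


def accuracy_score(logits, one_hot_labels):
    # Fraction of rows whose highest-scoring class matches the labeled class.
    predicted = logits.argmax(axis=1)
    expected = one_hot_labels.argmax(axis=1)
    return float((predicted == expected).mean())


# Hypothetical toy batch: 2 samples, 3 classes (values are made up).
toy_logits = np.array([[2.0, 1.0, 0.1],
                       [0.5, 2.5, 0.3]])
toy_labels = np.array([[1.0, 0.0, 0.0],
                       [0.0, 0.0, 1.0]])

print("probabilities:\n", softmax(toy_logits))
print("loss:", mean_cross_entropy(toy_logits, toy_labels))
print("accuracy:", accuracy_score(toy_logits, toy_labels))
```

In this toy batch the first sample is classified correctly and the second is not, so the accuracy is 0.5. Because softmax preserves the ordering of the logits, taking the argmax of the raw logits yields the same predictions as taking it over the softmax output.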