Dataset sources

- 分类:http://archive.ics.uci.edu/ml/datasets/Iris

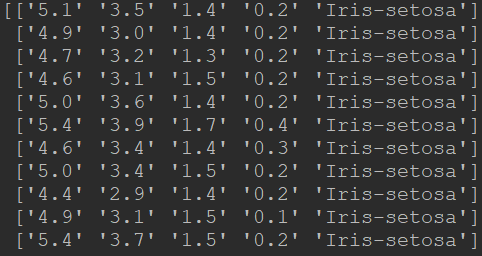

部分数据:

- 回归:利用sklearn函数直接生成
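If downloading `iris.data` is inconvenient, scikit-learn bundles the Iris data. The sketch below is an alternative, not what the original code does: `load_iris` returns the four features as floats and the labels already encoded as 0, 1, 2, so no one-hot step is needed. scikit-learn's own description notes that its copy differs from the UCI file in two data points, so results may not match exactly.

```python
import numpy as np
from sklearn.datasets import load_iris

# load_iris ships with scikit-learn, so nothing is downloaded
iris = load_iris()
data = iris.data        # shape (150, 4), float features
target = iris.target    # integer labels 0, 1, 2

print(data.shape, np.bincount(target).tolist())
print(iris.target_names.tolist())
```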

Classification with native LightGBM

First, install the library: `pip install lightgbm`

```python
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import OneHotEncoder
import lightgbm as lgb
import numpy as np

# Read the comma-separated file into a 2-D array of strings
iris = np.loadtxt('iris.data', dtype=str, delimiter=',', unpack=False, encoding='utf-8')
# The first 4 columns are the features (np.float was removed from NumPy; use float)
data = iris[:, :4].astype(float)
# The last column is the label; reshape it into a column vector (2-D array)
target = iris[:, -1][:, np.newaxis]
# One-hot encode the labels, then convert each row back to an integer class index
enc = OneHotEncoder()
target = enc.fit_transform(target).toarray().astype(int)
target = [list(oh).index(1) for oh in target]

# Split into training and test data
X_train, X_test, y_train, y_test = train_test_split(data, target, test_size=0.2, random_state=1)

# Convert to LightGBM's Dataset format
train_data = lgb.Dataset(X_train, label=y_train)
validation_data = lgb.Dataset(X_test, label=y_test, reference=train_data)

# Parameters
params = {
    'learning_rate': 0.1,
    'lambda_l1': 0.1,
    'lambda_l2': 0.2,
    'max_depth': 4,
    'objective': 'multiclass',  # objective function
    'num_class': 3,
}

# Train the model (100 boosting rounds by default), logging the validation loss every round
gbm = lgb.train(params, train_data, valid_sets=[validation_data],
                callbacks=[lgb.log_evaluation(1)])

# Predict: each row holds one probability per class; keep the most probable class
y_pred = gbm.predict(X_test)
y_pred = [list(x).index(max(x)) for x in y_pred]
print(y_pred)

# Evaluate
print(accuracy_score(y_test, y_pred))
```

Output (last lines of the training log):

```
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[99] valid_0's multi_logloss: 0.264218
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[100] valid_0's multi_logloss: 0.264481
[0, 1, 1, 0, 2, 1, 2, 0, 0, 2, 1, 0, 2, 1, 1, 0, 1, 1, 0, 0, 1, 1, 2, 0, 2, 1, 0, 0, 1, 2]
0.9666666666666667
```
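The one-hot round trip in the code above only maps the three species strings to the integers 0, 1, 2 in sorted order. As a possible simplification (not the author's code), scikit-learn's `LabelEncoder` produces the same integers directly, and `np.argmax(..., axis=1)` replaces the `list(x).index(max(x))` loop over the predicted class probabilities. The label array and probability matrix below are small made-up inputs, not the tutorial's data.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder, OneHotEncoder

# Hypothetical sample of the Iris species column
labels = np.array(['Iris-virginica', 'Iris-setosa', 'Iris-versicolor', 'Iris-setosa'])

# Original approach: one-hot encode, then take the position of the 1 in each row
onehot = OneHotEncoder().fit_transform(labels[:, np.newaxis]).toarray()
via_onehot = [list(row).index(1) for row in onehot]

# Simpler equivalent: LabelEncoder assigns integers in sorted category order
via_label_encoder = LabelEncoder().fit_transform(labels)
print(via_onehot, via_label_encoder.tolist())

# A multiclass predict() returns one probability per class per row;
# argmax along axis 1 picks the most probable class for each row
proba = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.3, 0.6]])
print(np.argmax(proba, axis=1).tolist())
```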

Classification with the sklearn interface

Classification with basic parameters

```python
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import OneHotEncoder
from lightgbm import LGBMClassifier
import lightgbm as lgb
import joblib  # sklearn.externals.joblib was removed in scikit-learn 0.23
import numpy as np

# Read the comma-separated file into a 2-D array of strings
iris = np.loadtxt('iris.data', dtype=str, delimiter=',', unpack=False, encoding='utf-8')
# The first 4 columns are the features
data = iris[:, :4].astype(float)
# The last column is the label; reshape it into a column vector (2-D array)
target = iris[:, -1][:, np.newaxis]
# One-hot encode the labels, then convert each row back to an integer class index
enc = OneHotEncoder()
target = enc.fit_transform(target).toarray().astype(int)
target = [list(oh).index(1) for oh in target]

# Split into training and test data
X_train, X_test, y_train, y_test = train_test_split(data, target, test_size=0.2, random_state=1)

# Train, stopping early if the validation loss does not improve for 5 rounds
gbm = LGBMClassifier(num_leaves=31, learning_rate=0.05, n_estimators=20)
gbm.fit(X_train, y_train, eval_set=[(X_test, y_test)],
        callbacks=[lgb.early_stopping(5), lgb.log_evaluation(1)])

# Save the model
joblib.dump(gbm, 'iris_model.pkl')
# Load the model
gbm = joblib.load('iris_model.pkl')

# Predict using the best iteration found by early stopping
y_pred = gbm.predict(X_test, num_iteration=gbm.best_iteration_)
# Evaluate
print('The accuracy of prediction is:', accuracy_score(y_test, y_pred))
# Feature importances
print('Feature importances:', list(gbm.feature_importances_))
```

Output:

```
[1] valid_0's multi_logloss: 1.04105
Training until validation scores don't improve for 5 rounds
[2] valid_0's multi_logloss: 0.969489
[3] valid_0's multi_logloss: 0.903964
[4] valid_0's multi_logloss: 0.845211
[5] valid_0's multi_logloss: 0.793714
[6] valid_0's multi_logloss: 0.742919
[7] valid_0's multi_logloss: 0.698058
[8] valid_0's multi_logloss: 0.659407
[9] valid_0's multi_logloss: 0.621686
[10] valid_0's multi_logloss: 0.588324
[11] valid_0's multi_logloss: 0.556705
[12] valid_0's multi_logloss: 0.52607
[13] valid_0's multi_logloss: 0.501139
[14] valid_0's multi_logloss: 0.476254
[15] valid_0's multi_logloss: 0.454358
[16] valid_0's multi_logloss: 0.433247
[17] valid_0's multi_logloss: 0.41494
[18] valid_0's multi_logloss: 0.395876
[19] valid_0's multi_logloss: 0.378817
[20] valid_0's multi_logloss: 0.364502
Did not meet early stopping. Best iteration is:
[20] valid_0's multi_logloss: 0.364502
The accuracy of prediction is: 0.9333333333333333
Feature importances: [12, 15, 129, 56]
```

Classification with a parameter (grid) search

```python
from sklearn.model_selection import train_test_split, GridSearchCV
from sklearn.preprocessing import OneHotEncoder
from lightgbm import LGBMClassifier
import numpy as np

# Read the comma-separated file into a 2-D array of strings
iris = np.loadtxt('iris.data', dtype=str, delimiter=',', unpack=False, encoding='utf-8')
# The first 4 columns are the features
data = iris[:, :4].astype(float)
# The last column is the label; reshape it into a column vector (2-D array)
target = iris[:, -1][:, np.newaxis]
# One-hot encode the labels, then convert each row back to an integer class index
enc = OneHotEncoder()
target = enc.fit_transform(target).toarray().astype(int)
target = [list(oh).index(1) for oh in target]

# Split into training and test data
X_train, X_test, y_train, y_test = train_test_split(data, target, test_size=0.2, random_state=1)

# Grid search over the parameter combinations
estimator = LGBMClassifier(num_leaves=31)
param_grid = {
    'learning_rate': [0.01, 0.1, 1],
    'n_estimators': [20, 40],
}
gbm = GridSearchCV(estimator, param_grid)
gbm.fit(X_train, y_train)
print('Best parameters found by grid search are:', gbm.best_params_)
```

Output:

```
Best parameters found by grid search are: {'learning_rate': 0.1, 'n_estimators': 20}
```

Regression with native LightGBM

```python
from sklearn.datasets import make_regression
from sklearn.model_selection import train_test_split
from sklearn.metrics import mean_absolute_error
import lightgbm as lgb

X, y = make_regression(n_samples=100, n_features=1, noise=20)
print(X, y)

# Split into training and test sets
train_X, test_X, train_y, test_y = train_test_split(X, y, test_size=0.25, random_state=1)

# Convert to LightGBM's Dataset format
lgb_train = lgb.Dataset(train_X, train_y)
lgb_eval = lgb.Dataset(test_X, test_y, reference=lgb_train)

# Parameters
params = {
    'task': 'train',
    'boosting_type': 'gbdt',    # boosting type
    'objective': 'regression',  # objective function
    'metric': 'l2',             # evaluation metric (AUC is a binary-classification metric, not for regression)
    'num_leaves': 31,           # number of leaves per tree
    'learning_rate': 0.05,      # learning rate
    'feature_fraction': 0.9,    # fraction of features sampled for each tree
    'bagging_fraction': 0.8,    # fraction of rows sampled for bagging
    'bagging_freq': 5,          # k means bagging is performed every k iterations
    'verbose': 1                # <0: fatal only, =0: errors (and warnings), =1: info, >1: debug
}

# Train on the training set; stop early if validation l2 does not improve for 5 rounds
my_model = lgb.train(params, lgb_train, num_boost_round=20, valid_sets=[lgb_eval],
                     callbacks=[lgb.early_stopping(5), lgb.log_evaluation(1)])

# Predict on the test set using the best iteration
predictions = my_model.predict(test_X, num_iteration=my_model.best_iteration)

# Evaluate the predictions with the mean absolute error
print("Mean Absolute Error : " + str(mean_absolute_error(test_y, predictions)))
```

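Output (tail of the log). This log was produced with `'metric': {'l2', 'auc'}`. AUC does not improve after iteration 6, so early stopping halts at iteration 11 and keeps iteration 6, even though l2 is still falling (1796.72 at iteration 6 versus 1394.27 at iteration 11):

```
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[9] valid_0's auc: 0.873377 valid_0's l2: 1521.21
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[10] valid_0's auc: 0.873377 valid_0's l2: 1448
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[11] valid_0's auc: 0.873377 valid_0's l2: 1394.27
Early stopping, best iteration is:
[6] valid_0's auc: 0.873377 valid_0's l2: 1796.72
Mean Absolute Error : 32.371899328245405
```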
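The mean absolute error printed above is the average of |y_i - ŷ_i| over the test points. A small check with made-up numbers (not the tutorial's data) shows that computing it by hand matches `mean_absolute_error`. Its documented argument order is `(y_true, y_pred)`; because MAE is symmetric, swapping the two does not change the value.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error

# Hypothetical true targets and predictions
y_true = np.array([10.0, -5.0, 3.0, 8.0])
y_pred = np.array([12.0, -1.0, 3.0, 5.0])

# MAE = (1/n) * sum(|y_true - y_pred|) = (2 + 4 + 0 + 3) / 4
manual = np.mean(np.abs(y_true - y_pred))
print(manual, mean_absolute_error(y_true, y_pred))
```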

Regression with the sklearn interface

```python
from sklearn.datasets import make_regression
from sklearn.model_selection import train_test_split
from sklearn.metrics import mean_absolute_error
import lightgbm as lgb

X, y = make_regression(n_samples=100, n_features=1, noise=20)

# Split into training and test sets
train_X, test_X, train_y, test_y = train_test_split(X, y, test_size=0.25, random_state=1)

# Train the LightGBM model on the training set; verbosity=2 prints debug messages while boosting
my_model = lgb.LGBMRegressor(objective='regression', num_leaves=31, learning_rate=0.05,
                             n_estimators=20, verbosity=2)
my_model.fit(train_X, train_y)

# Predict on the test set
predictions = my_model.predict(test_X)

# Evaluate the predictions with the mean absolute error
print("Mean Absolute Error : " + str(mean_absolute_error(test_y, predictions)))
```

Output:

```
[LightGBM] [Debug] Dataset::GetMultiBinFromAllFeatures: sparse rate 0.000000
[LightGBM] [Debug] init for col-wise cost 0.000011 seconds, init for row-wise cost 0.000109 seconds
[LightGBM] [Warning] Auto-choosing col-wise multi-threading, the overhead of testing was 0.000126 seconds.
You can set force_col_wise=true to remove the overhead.
[LightGBM] [Info] Total Bins 27
[LightGBM] [Info] Number of data points in the train set: 75, number of used features: 1
[LightGBM] [Info] Start training from score 10.744539
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 3 and max_depth = 2
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 3 and max_depth = 2
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 3 and max_depth = 2
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 3 and max_depth = 2
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 2 and max_depth = 1
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 3 and max_depth = 2
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 3 and max_depth = 2
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 2 and max_depth = 1
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 3 and max_depth = 2
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 3 and max_depth = 2
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 3 and max_depth = 2
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 2 and max_depth = 1
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 3 and max_depth = 2
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 3 and max_depth = 2
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 2 and max_depth = 1
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 3 and max_depth = 2
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 3 and max_depth = 2
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 2 and max_depth = 1
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 3 and max_depth = 2
[LightGBM] [Warning] No further splits with positive gain, best gain: -inf
[LightGBM] [Debug] Trained a tree with leaves = 3 and max_depth = 2
Mean Absolute Error : 18.71203698086779
```