Ways to run Pig:

1. Script

2. Grunt (the interactive shell)

3. Embedded (from a host program)

Grunt

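1. Auto-completion (completes commands; file names are not completed)

2. The autocomplete file (for customizing completion tokens)

3. PigPen, an Eclipse plugin

Entering the Grunt shell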

[hadoop@master pig]$ ./bin/pig

2013-04-13 23:00:19,909 [main] INFO org.apache.pig.Main - Apache Pig version 0.10.0 (r1328203) compiled Apr 19 2012, 22:54:12

2013-04-13 23:00:19,909 [main] INFO org.apache.pig.Main - Logging error messages to: /opt/pig/pig_1365865219902.log

2013-04-13 23:00:20,237 [main] INFO org.apache.pig.backend.hadoop.executionengine.HExecutionEngine - Connecting to hadoop file system at: hdfs://192.168.154.100:9000

2013-04-13 23:00:20,536 [main] INFO org.apache.pig.backend.hadoop.executionengine.HExecutionEngine - Connecting to map-reduce job tracker at: 192.168.154.100:9001

Help (help)

grunt> help

Commands:

<pig latin statement>; - See the PigLatin manual for details: http://hadoop.apache.org/pig

File system commands:

fs <fs arguments> - Equivalent to Hadoop dfs command: http://hadoop.apache.org/common/docs/current/hdfs_shell.html

Diagnostic commands:

describe <alias>[::<alias] - Show the schema for the alias. Inner aliases can be described as A::B.

explain [-script <pigscript>] [-out <path>] [-brief] [-dot] [-param <param_name>=<param_value>]

[-param_file <file_name>] [<alias>] - Show the execution plan to compute the alias or for entire script.

-script - Explain the entire script.

-out - Store the output into directory rather than print to stdout.

-brief - Don't expand nested plans (presenting a smaller graph for overview).

-dot - Generate the output in .dot format. Default is text format.

-param <param_name - See parameter substitution for details.

-param_file <file_name> - See parameter substitution for details.

alias - Alias to explain.

dump <alias> - Compute the alias and writes the results to stdout.

Utility Commands:

exec [-param <param_name>=param_value] [-param_file <file_name>] <script> -

Execute the script with access to grunt environment including aliases.

-param <param_name - See parameter substitution for details.

-param_file <file_name> - See parameter substitution for details.

script - Script to be executed.

run [-param <param_name>=param_value] [-param_file <file_name>] <script> -

Execute the script with access to grunt environment.

-param <param_name - See parameter substitution for details.

-param_file <file_name> - See parameter substitution for details.

script - Script to be executed.

sh <shell command> - Invoke a shell command.

kill <job_id> - Kill the hadoop job specified by the hadoop job id.

set <key> <value> - Provide execution parameters to Pig. Keys and values are case sensitive.

The following keys are supported:

default_parallel - Script-level reduce parallelism. Basic input size heuristics used by default.

debug - Set debug on or off. Default is off.

job.name - Single-quoted name for jobs. Default is PigLatin:<script name>

job.priority - Priority for jobs. Values: very_low, low, normal, high, very_high. Default is normal

stream.skippath - String that contains the path. This is used by streaming.

any hadoop property.

help - Display this message.

quit - Quit the grunt shell.
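A few of the utility commands listed in the help output can be combined in a Grunt session roughly as follows. This is only a sketch: the script name `myscript.pig`, the parameter `input`, and the chosen values are hypothetical and are not taken from the session above.

```pig
-- Typed at the grunt> prompt. Script name and parameter are hypothetical.
set default_parallel 10                       -- script-level reduce parallelism
set job.name 'max temperature'                -- single-quoted job name, as the help text requires
exec -param input=in/test1.txt myscript.pig   -- run a script, passing one parameter
```

Inside `myscript.pig` the parameter would be referenced as `$input`.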

Browsing files (ls, cd, cat)

grunt> ls

hdfs://192.168.154.100:9000/user/hadoop/in <dir>

hdfs://192.168.154.100:9000/user/hadoop/out <dir>

grunt> cd in

grunt> ls

hdfs://192.168.154.100:9000/user/hadoop/in/test1.txt<r 1> 12

hdfs://192.168.154.100:9000/user/hadoop/in/test2.txt<r 1> 13

hdfs://192.168.154.100:9000/user/hadoop/in/test_1.txt<r 1> 328

hdfs://192.168.154.100:9000/user/hadoop/in/test_2.txt<r 1> 139

grunt> cat test1.txt

hello world

Copying to the local file system (copyToLocal)

grunt> ls

hdfs://192.168.154.100:9000/user/hadoop/in/test1.txt<r 1> 12

hdfs://192.168.154.100:9000/user/hadoop/in/test2.txt<r 1> 13

hdfs://192.168.154.100:9000/user/hadoop/in/test_1.txt<r 1> 328

hdfs://192.168.154.100:9000/user/hadoop/in/test_2.txt<r 1> 139

grunt> copyToLocal test1.txt ttt

[root@master pig]# ls -l ttt

-rwxrwxrwx. 1 hadoop hadoop 12 4月 13 23:06 ttt

[root@master pig]#

Running operating system commands: sh

grunt> sh jps

2098 DataNode

1986 NameNode

2700 Jps

2539 RunJar

2297 JobTracker

2211 SecondaryNameNode

2411 TaskTracker

grunt>

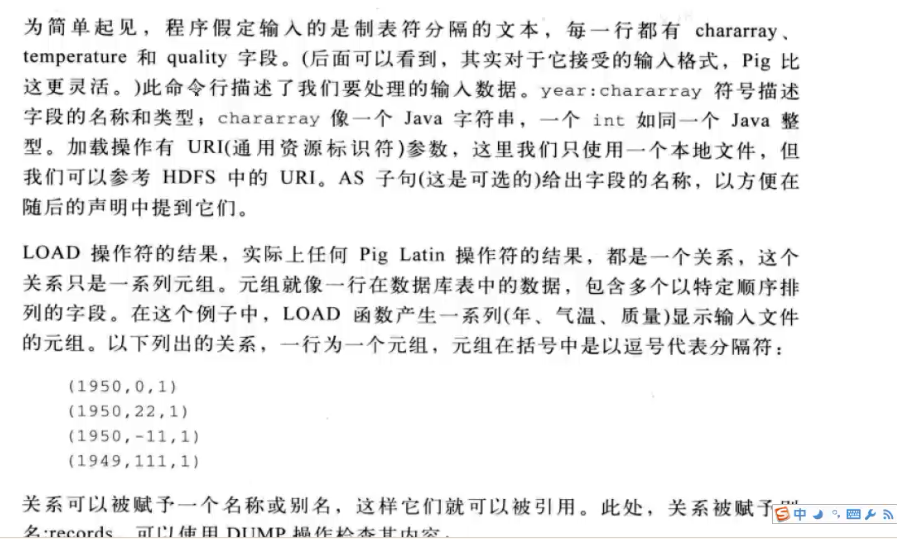

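Pig data model

Bag: a table

Tuple: a row, or record

Field: an attribute

Pig does not require the tuples in a bag to have the same number of fields, or fields of the same type.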

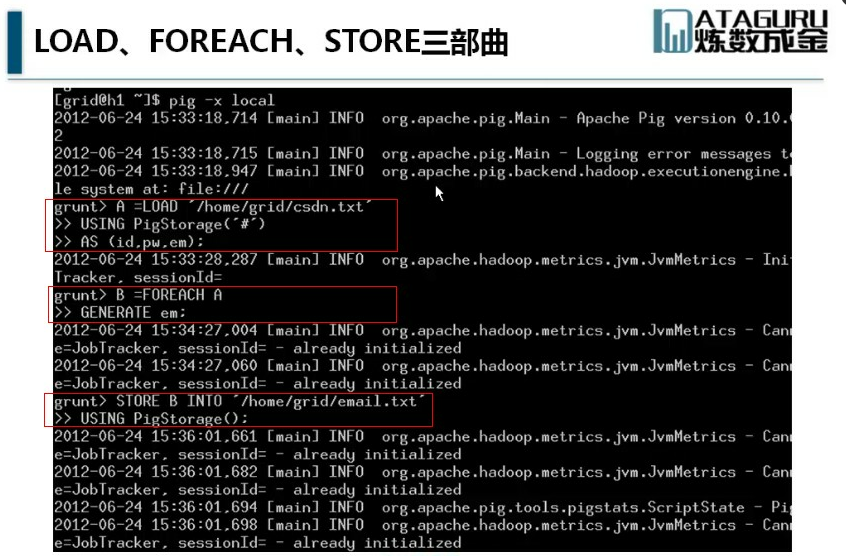
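As a rough illustration of the model, the sketch below loads the same file twice, once with a declared schema and once without. The path `input/students.txt` and the field names are assumptions made for this example only.

```pig
-- Hypothetical tab-separated input; path and field names are assumptions.
-- 'students' is a bag: each input line becomes one tuple with two fields.
students = LOAD 'input/students.txt' AS (name:chararray, age:int);
DESCRIBE students;   -- prints the schema of the relation

-- The AS clause is optional. Without it no field names or types are declared,
-- and fields are referenced by position ($0, $1, ...).
raw = LOAD 'input/students.txt';
names = FOREACH raw GENERATE $0;
```

Because the second load declares no schema, Pig places no constraint on how many fields each tuple carries.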

Common Pig Latin statements

LOAD: specifies how the data is loaded

FOREACH: scans the data row by row and applies some processing to each row

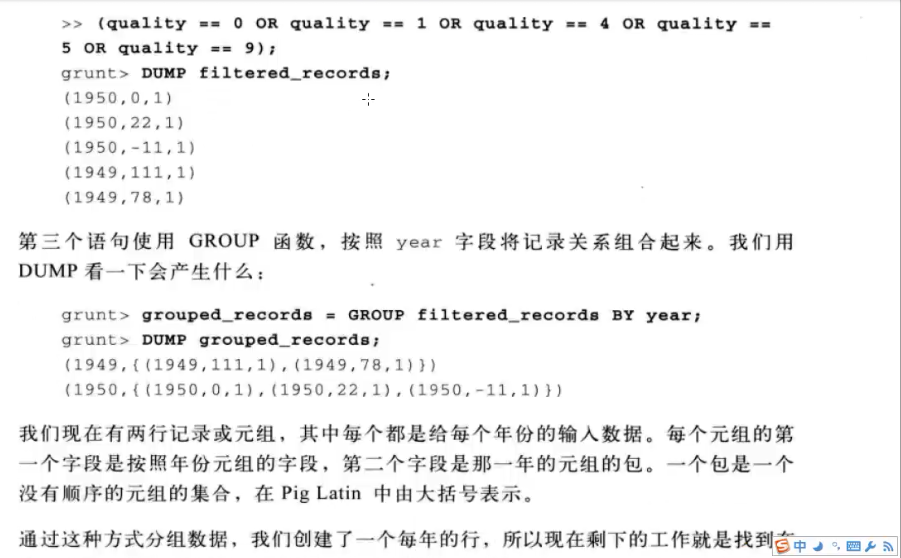

FILTER: filters rows

DUMP: displays the result on screen

STORE: saves the result to a file

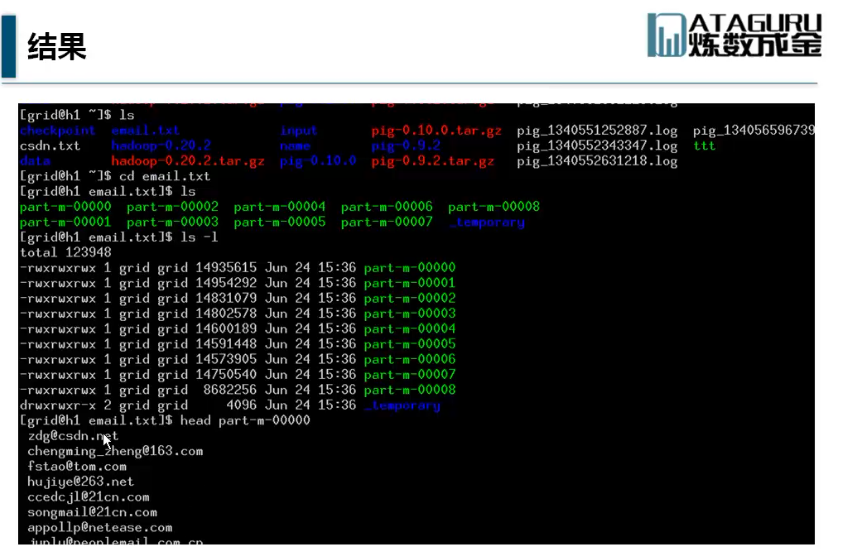
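The five statements above can be chained into one small pipeline. The following is a minimal sketch; the input path, output path, and field names are hypothetical.

```pig
-- Paths and field names are hypothetical.
people = LOAD 'input/people.txt' AS (name:chararray, age:int);  -- LOAD: read the data with a schema
adults = FILTER people BY age >= 18;                            -- FILTER: keep only matching rows
names  = FOREACH adults GENERATE name;                          -- FOREACH: project one field per row
DUMP names;                                                     -- DUMP: print the result to the screen
STORE names INTO 'output/adult_names';                          -- STORE: write the result to a directory
```

Pig builds the plan lazily, so nothing is executed until a `DUMP` or `STORE` statement is reached.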

Pig script example:

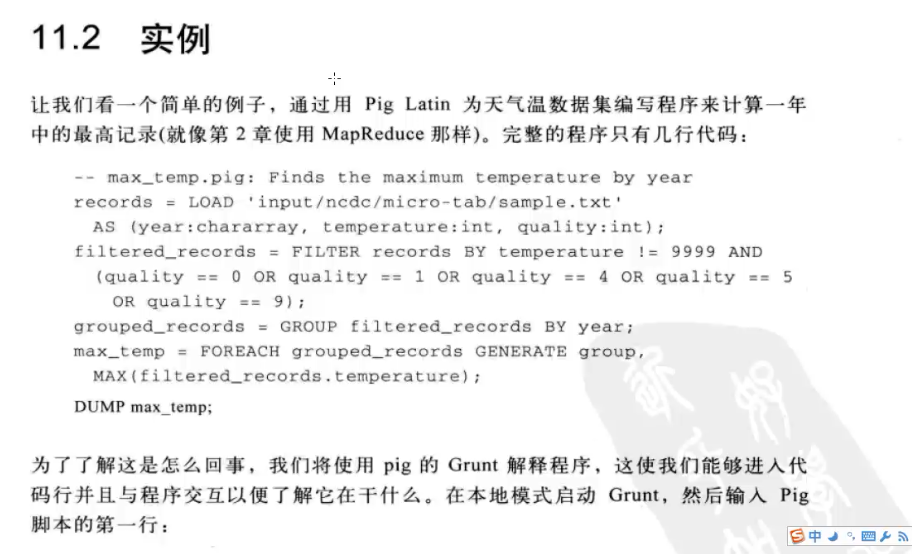

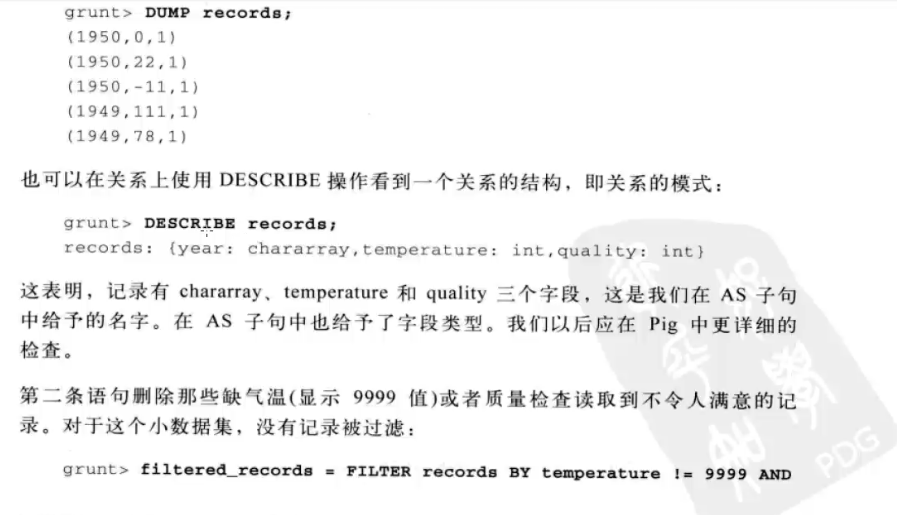

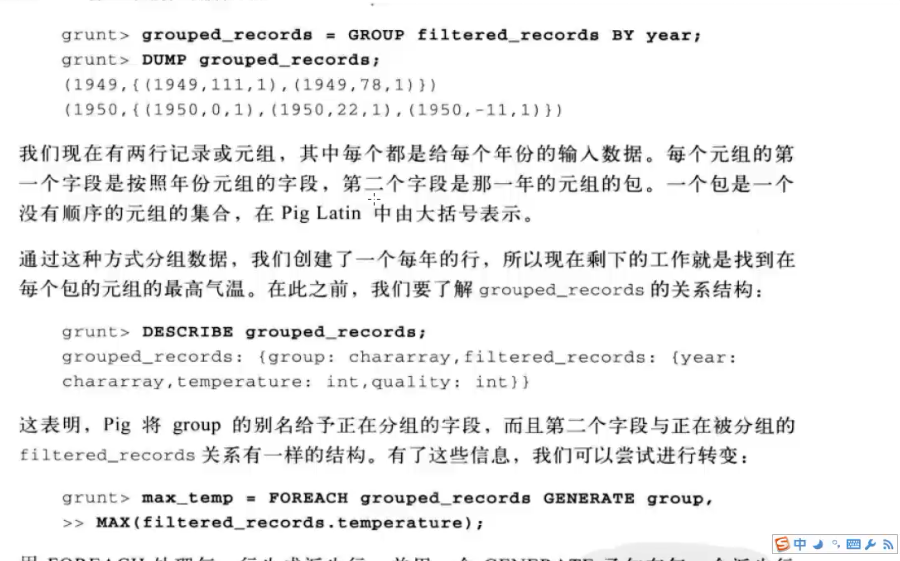

grunt> records = LOAD 'input/ncdc/micro-tab/sample.txt'

>> AS (year:chararray, temperature:int, quality:int);

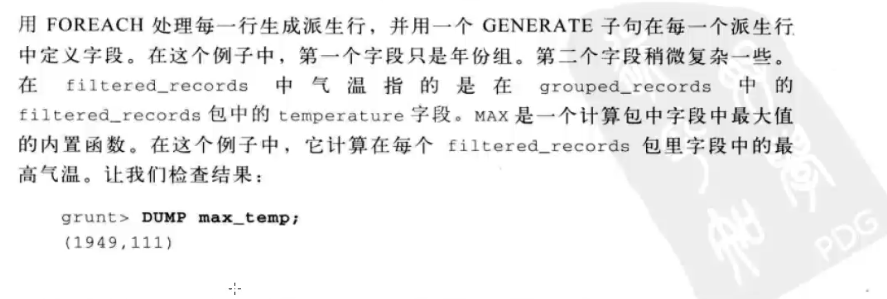

(1949,111)

(1950,22)

This computes the maximum temperature for each year.

Example 2:
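The intermediate steps appear only as screenshots, so the following is a sketch of what the full script might look like, not a transcription. The LOAD statement comes from the text above; the filter conditions (treating 9999 as a missing reading and keeping certain quality codes), the alias names, and the grouping step are assumptions based on the usual form of this NCDC example.

```pig
-- LOAD is taken from the text; everything after it is an assumed reconstruction.
records = LOAD 'input/ncdc/micro-tab/sample.txt'
    AS (year:chararray, temperature:int, quality:int);

-- Assumed cleaning step: drop missing readings and low-quality records.
filtered_records = FILTER records BY temperature != 9999 AND
    (quality == 0 OR quality == 1 OR quality == 4 OR quality == 5 OR quality == 9);

-- Group by year, then take the maximum temperature within each group.
grouped_records = GROUP filtered_records BY year;
max_temp = FOREACH grouped_records GENERATE group, MAX(filtered_records.temperature);

DUMP max_temp;
```

Each output tuple pairs a year with its maximum temperature, which matches the shape of the `(1949,111)` and `(1950,22)` results shown above.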