Lesson 5 covers:

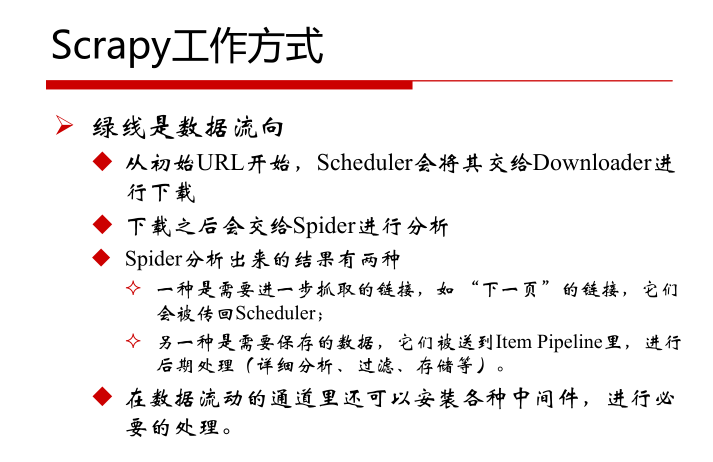

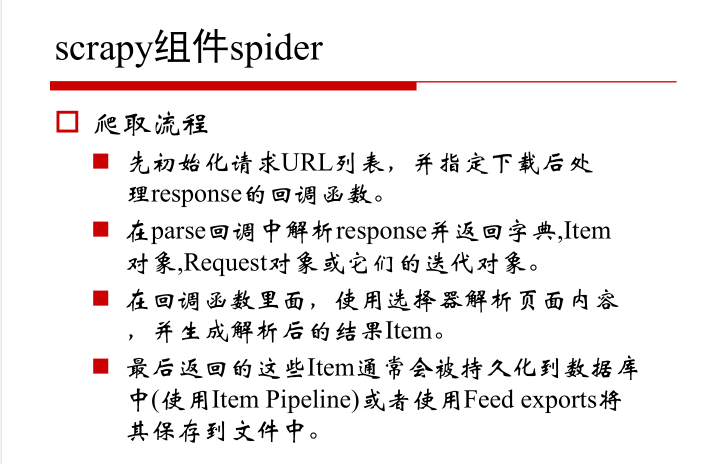

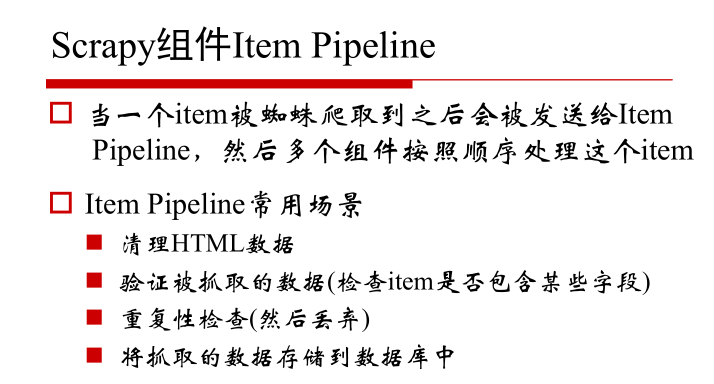

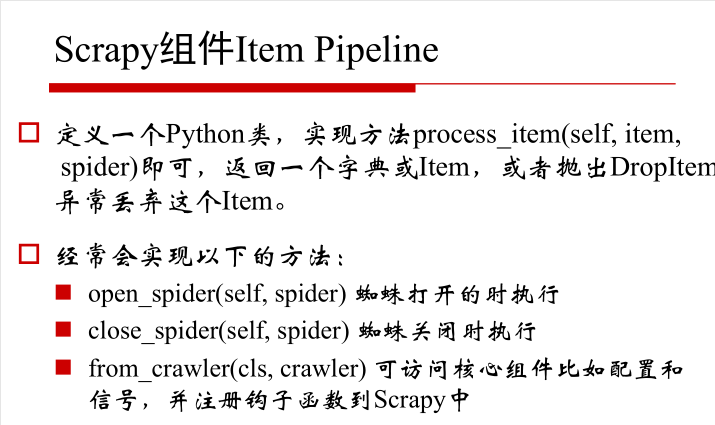

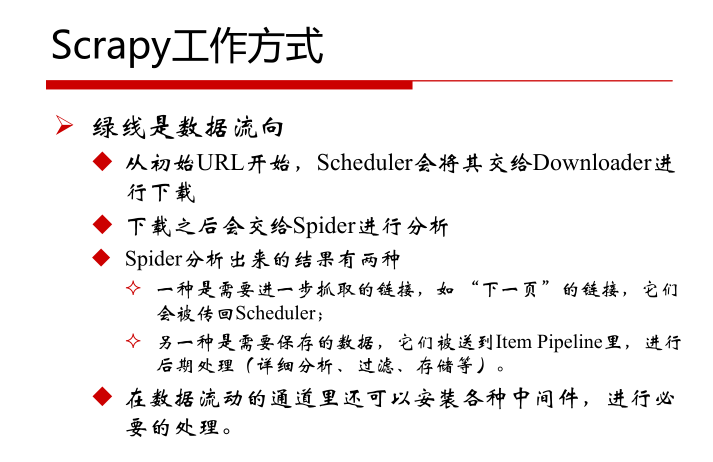

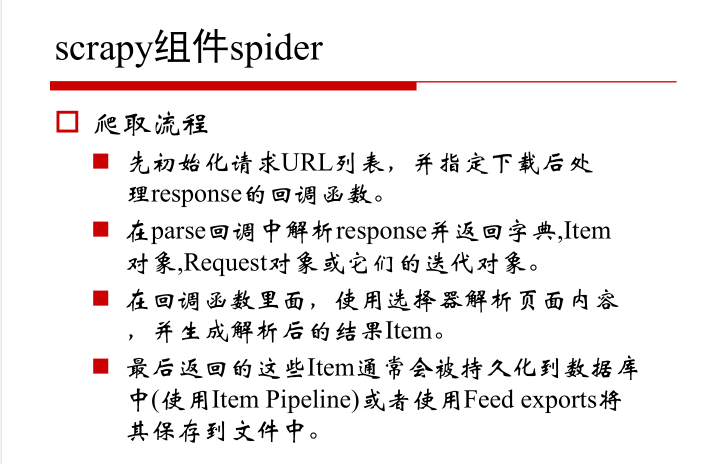

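- Scrapy framework architecture, components, and how they work together

- Single-page crawling: julyedu.com

- Crawling by constructing URLs: cnblogs (博客园)

- Crawling by following the next-page link: toscrape.com

- Scrapy project commands: QQ News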

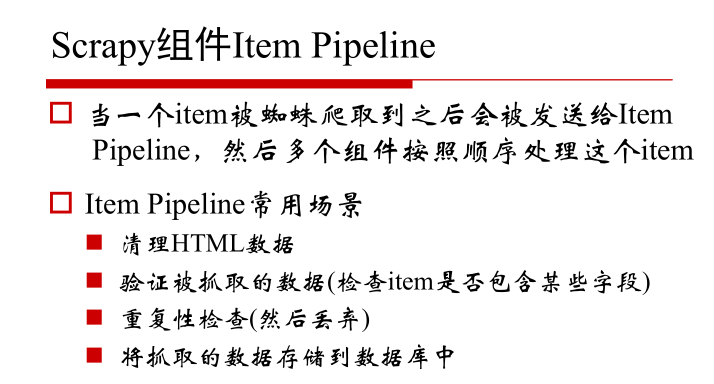

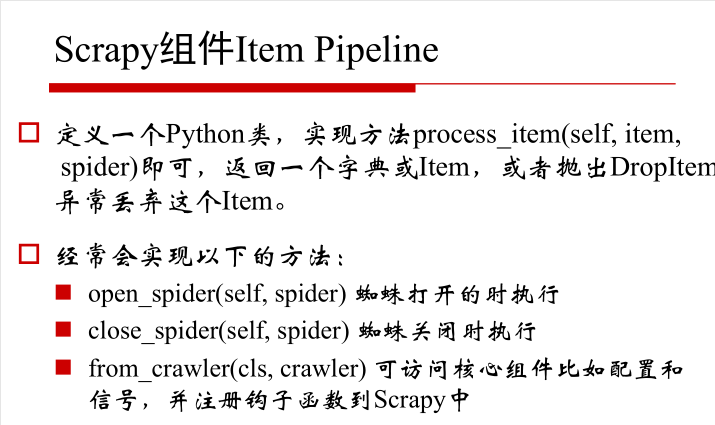

1. Scrapy framework architecture, components, and how they work together
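
As a rough mental model of how the components cooperate, the toy loop below plays the roles of engine, scheduler, downloader, spider, and item pipeline in plain Python. It is only a sketch and not Scrapy's actual implementation, which is asynchronous and built on Twisted. The `FAKE_WEB` dictionary, the `Request` and `ToySpider` classes, and the `run` function are all hypothetical names invented for this illustration.

```python
from collections import deque

# Hypothetical in-memory "web": a list page maps to links, a detail page to its text.
FAKE_WEB = {
    "http://example.com/list": ["http://example.com/a", "http://example.com/b"],
    "http://example.com/a": "Article A",
    "http://example.com/b": "Article B",
}


class Request:
    """A URL plus the spider callback that should handle its response."""

    def __init__(self, url, callback):
        self.url = url
        self.callback = callback


class ToySpider:
    start_urls = ["http://example.com/list"]

    def parse(self, url, body):
        # A list page produces follow-up requests.
        for link in body:
            yield Request(link, self.parse_article)

    def parse_article(self, url, body):
        # A detail page produces an item (a plain dict).
        yield {"url": url, "title": body}


def run(spider):
    scheduler = deque(Request(u, spider.parse) for u in spider.start_urls)
    seen = set()   # duplicate filter
    items = []     # stands in for the item pipeline
    while scheduler:                       # engine loop
        request = scheduler.popleft()      # scheduler hands out the next request
        if request.url in seen:
            continue
        seen.add(request.url)
        body = FAKE_WEB[request.url]       # downloader fetches the page
        for result in request.callback(request.url, body):  # spider parses it
            if isinstance(result, Request):
                scheduler.append(result)   # new requests go back to the scheduler
            else:
                items.append(result)       # items go to the pipeline
    return items


items = run(ToySpider())
print(items)
```

The point of the sketch is the division of labor: the spider only turns responses into items or further requests, while queuing, de-duplication, and downloading happen elsewhere.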

2. Single-page crawling: julyedu.com

# by 寒小阳 (hanxiaoyang.ml@gmail.com), instructor at 七月在线
# Python 2 (the single-argument print(...) calls also work on Python 3)
import scrapy


class JulyeduSpider(scrapy.Spider):
    name = "julyedu"
    start_urls = [
        'https://www.julyedu.com/category/index',
    ]

    def parse(self, response):
        # Each course card sits in a <div class="course_info_box">.
        for julyedu_class in response.xpath('//div[@class="course_info_box"]'):
            # Print the fields for a quick look while the spider runs.
            print(julyedu_class.xpath('a/h4/text()').extract_first())
            print(julyedu_class.xpath('a/p[@class="course-info-tip"][1]/text()').extract_first())
            print(julyedu_class.xpath('a/p[@class="course-info-tip"][2]/text()').extract_first())
            print(response.urljoin(julyedu_class.xpath('a/img[1]/@src').extract_first()))
            print("\n")
            # Yield the same fields as an item.
            yield {
                'title': julyedu_class.xpath('a/h4/text()').extract_first(),
                'desc': julyedu_class.xpath('a/p[@class="course-info-tip"][1]/text()').extract_first(),
                'time': julyedu_class.xpath('a/p[@class="course-info-tip"][2]/text()').extract_first(),
                'img_url': response.urljoin(julyedu_class.xpath('a/img[1]/@src').extract_first())
            }
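
The spider passes each image `src` through `response.urljoin`, which resolves a possibly relative link against the URL of the page (or against the page's `<base>` tag, if it has one). Scrapy builds this on the standard library's `urljoin`, so the resolution rule can be tried without Scrapy. The sketch below is written for Python 3, and the `src` values in it are made-up examples, not paths taken from julyedu.com.

```python
from urllib.parse import urljoin

page_url = "https://www.julyedu.com/category/index"

# Hypothetical src values, as they might appear in an <img> tag.
examples = [
    "/images/course.png",             # root-relative
    "images/course.png",              # relative to the page's directory
    "https://cdn.example.com/c.png",  # already absolute
]

# Resolve every src against the page URL, as response.urljoin would.
resolved = [urljoin(page_url, src) for src in examples]
for src, full in zip(examples, resolved):
    print(src, "->", full)
```

Absolute URLs pass through unchanged, so calling `urljoin` on every extracted link is safe whether or not the site uses relative paths.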

3. Crawling by constructing URLs: cnblogs (博客园)

# by 寒小阳 (hanxiaoyang.ml@gmail.com)
import scrapy


class CnBlogSpider(scrapy.Spider):
    name = "cnblogs"
    allowed_domains = ["cnblogs.com"]
    # One start URL per page number, 1 through 10. The part after '#' is a
    # URL fragment, which is not sent to the server.
    start_urls = [
        'http://www.cnblogs.com/pick/#p%s' % p for p in range(1, 11)
    ]

    def parse(self, response):
        # Each article on the list page sits in a <div class="post_item">.
        for article in response.xpath('//div[@class="post_item"]'):
            print(article.xpath('div[@class="post_item_body"]/h3/a/text()').extract_first().strip())
            print(response.urljoin(article.xpath('div[@class="post_item_body"]/h3/a/@href').extract_first()).strip())
            print(article.xpath('div[@class="post_item_body"]/p/text()').extract_first().strip())
            print(article.xpath('div[@class="post_item_body"]/div[@class="post_item_foot"]/a/text()').extract_first().strip())
            print(response.urljoin(article.xpath('div[@class="post_item_body"]/div/a/@href').extract_first()).strip())
            print(article.xpath('div[@class="post_item_body"]/div[@class="post_item_foot"]/span[@class="article_comment"]/a/text()').extract_first().strip())
            print(article.xpath('div[@class="post_item_body"]/div[@class="post_item_foot"]/span[@class="article_view"]/a/text()').extract_first().strip())
            print("")
            yield {
                'title': article.xpath('div[@class="post_item_body"]/h3/a/text()').extract_first().strip(),
                'link': response.urljoin(article.xpath('div[@class="post_item_body"]/h3/a/@href').extract_first()).strip(),
                'summary': article.xpath('div[@class="post_item_body"]/p/text()').extract_first().strip(),
                'author': article.xpath('div[@class="post_item_body"]/div[@class="post_item_foot"]/a/text()').extract_first().strip(),
                'author_link': response.urljoin(article.xpath('div[@class="post_item_body"]/div/a/@href').extract_first()).strip(),
                'comment': article.xpath('div[@class="post_item_body"]/div[@class="post_item_foot"]/span[@class="article_comment"]/a/text()').extract_first().strip(),
                'view': article.xpath('div[@class="post_item_body"]/div[@class="post_item_foot"]/span[@class="article_view"]/a/text()').extract_first().strip(),
            }
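
When page URLs are built from a pattern, it is worth checking which part of the URL actually reaches the server. The Python 3 sketch below rebuilds the ten start URLs and strips their fragments with the standard library's `urldefrag`; what remains is the target of the HTTP request. The `example.com` query-string pattern is a hypothetical alternative for comparison, not a known cnblogs endpoint.

```python
from urllib.parse import urldefrag

# Same pattern as start_urls in the spider: one URL per page number.
urls = ['http://www.cnblogs.com/pick/#p%s' % p for p in range(1, 11)]

# urldefrag splits off the fragment; the rest is what the request targets.
requested = {urldefrag(u).url for u in urls}
print(len(urls), "URLs,", len(requested), "distinct request target(s)")

# Hypothetical alternative: a site that puts the page number in the query string.
query_urls = ['http://example.com/list?page=%d' % p for p in range(1, 11)]
distinct = {urldefrag(u).url for u in query_urls}
print(len(query_urls), "URLs,", len(distinct), "distinct request target(s)")
```

If the fragment is the only part that varies, every request asks the server for the same document, and any per-page content would have to be loaded by JavaScript in a browser. A page number carried in the path or query string does not have this problem.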

4. Crawling by finding the "next page" link

import scrapy


class QuotesSpider(scrapy.Spider):
    name = "quotes"
    start_urls = [
        'http://quotes.toscrape.com/tag/humor/',
    ]

    def parse(self, response):
        for quote in response.xpath('//div[@class="quote"]'):
            yield {
                'text': quote.xpath('span[@class="text"]/text()').extract_first(),
                'author': quote.xpath('span/small[@class="author"]/text()').extract_first(),
            }

        # The next-page URL is the href of the <a> inside <li class="next">.
        next_page = response.xpath('//li[@class="next"]/a/@href').extract_first()
        if next_page is not None:
            # Resolve the relative link and parse the next page with this same method.
            next_page = response.urljoin(next_page)
            yield scrapy.Request(next_page, callback=self.parse)
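
The follow-the-next-link loop can be tried offline with the standard library alone. The Python 3 sketch below runs it over a hypothetical two-page site held in a dictionary. It uses `xml.etree.ElementTree`, which supports only a small subset of XPath and needs well-formed markup, so the pages are simplified XHTML and the `SITE` data and `crawl` function are assumptions made for the illustration.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urljoin

# Hypothetical two-page site, keyed by absolute URL.
SITE = {
    "http://example.com/tag/humor/": """
<html><body>
  <div class="quote"><span class="text">Quote A</span></div>
  <div class="quote"><span class="text">Quote B</span></div>
  <ul><li class="next"><a href="/tag/humor/page/2/">Next</a></li></ul>
</body></html>""",
    "http://example.com/tag/humor/page/2/": """
<html><body>
  <div class="quote"><span class="text">Quote C</span></div>
</body></html>""",
}


def crawl(start_url):
    """Yield quote texts page by page until no 'next' link is left."""
    url = start_url
    while url is not None:
        root = ET.fromstring(SITE[url])
        for quote in root.findall(".//div[@class='quote']"):
            yield quote.find("span[@class='text']").text
        # The href lives on the <a> inside <li class="next">, not on the <li>.
        next_link = root.find(".//li[@class='next']/a")
        url = urljoin(url, next_link.get("href")) if next_link is not None else None


quotes = list(crawl("http://example.com/tag/humor/"))
print(quotes)
```

The loop ends on the last page because that page has no `li` element with class `next`, which is the same stopping condition as the `if next_page is not None` check in a spider.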

5. Following links and crawling the linked pages

# by 寒小阳 (hanxiaoyang.ml@gmail.com)
import scrapy


class QQNewsSpider(scrapy.Spider):
    name = 'qqnews'
    start_urls = ['http://news.qq.com/society_index.shtml']

    def parse(self, response):
        # Collect the article links on the index page and request each one.
        for href in response.xpath('//*[@id="news"]/div/div/div/div/em/a/@href'):
            full_url = response.urljoin(href.extract())
            yield scrapy.Request(full_url, callback=self.parse_question)

    def parse_question(self, response):
        # Called once per article page.
        print(response.xpath('//div[@class="qq_article"]/div/h1/text()').extract_first())
        print(response.xpath('//span[@class="a_time"]/text()').extract_first())
        print(response.xpath('//span[@class="a_catalog"]/a/text()').extract_first())
        print("\n".join(response.xpath('//div[@id="Cnt-Main-Article-QQ"]/p[@class="text"]/text()').extract()))
        print("")
        yield {
            'title': response.xpath('//div[@class="qq_article"]/div/h1/text()').extract_first(),
            # Join the text of all body paragraphs, one per line.
            'content': "\n".join(response.xpath('//div[@id="Cnt-Main-Article-QQ"]/p[@class="text"]/text()').extract()),
            'time': response.xpath('//span[@class="a_time"]/text()').extract_first(),
            'cate': response.xpath('//span[@class="a_catalog"]/a/text()').extract_first(),
        }
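
The detail-page callback mixes two kinds of extraction: single values, where the first match is taken and may be missing, and the article body, where every matching paragraph is collected and joined with newlines. The Python 3 sketch below shows both on a hypothetical, simplified article page using the standard library's `xml.etree.ElementTree`, which supports only a limited XPath subset. The `PAGE` markup and the `first_text` helper are assumptions for illustration, not the real QQ News layout.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified article page (well-formed XHTML for the stdlib parser).
PAGE = """
<html><body>
  <div class="qq_article"><div><h1>Sample headline</h1></div></div>
  <span class="a_time">2016-01-01 08:00</span>
  <div id="Cnt-Main-Article-QQ">
    <p class="text">First paragraph.</p>
    <p class="pic">(image caption, skipped)</p>
    <p class="text">Second paragraph.</p>
  </div>
</body></html>"""

root = ET.fromstring(PAGE)


def first_text(path):
    """Return the text of the first match, or None if nothing matches."""
    node = root.find(path)
    return node.text if node is not None else None


# Only paragraphs with class "text" belong to the article body.
paragraphs = root.findall(".//div[@id='Cnt-Main-Article-QQ']/p[@class='text']")

item = {
    'title': first_text(".//div[@class='qq_article']/div/h1"),
    'time': first_text(".//span[@class='a_time']"),
    'cate': first_text(".//span[@class='a_catalog']/a"),  # absent on this page
    'content': "\n".join(p.text for p in paragraphs),
}
print(item)
```

A missing element yields `None` rather than an error, so any further string operation on a single-value field, such as `.strip()`, needs a check for `None` first.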