K8s installation: preparatory work

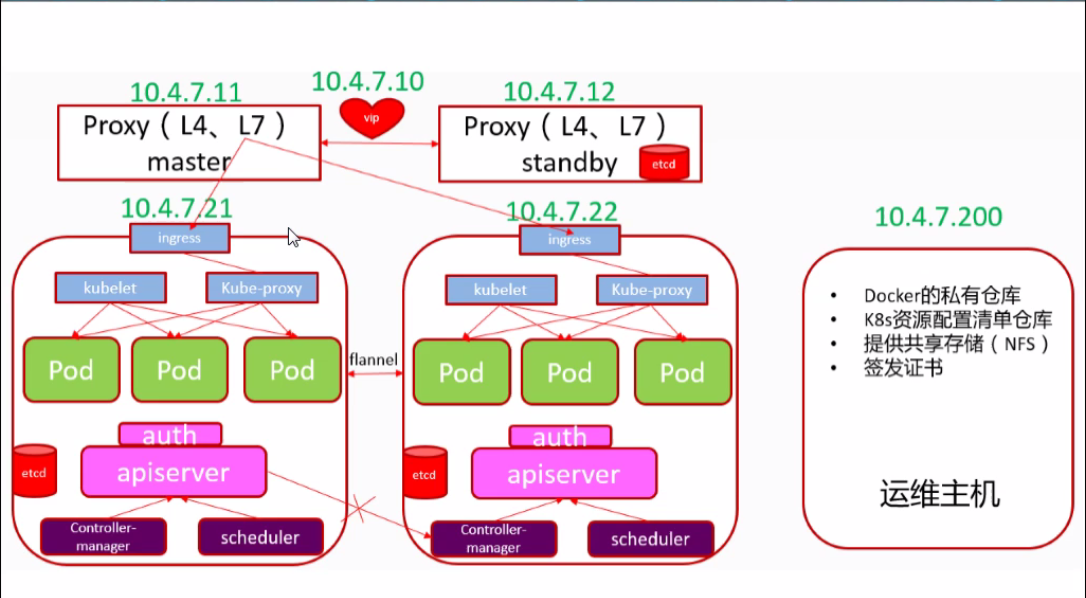

1. Required resources

| Hostname | Min. CPU | Min. memory | IP address | Role |
|---|---|---|---|---|
| hdss7-11.host.com | 2 cores | 2 GB | 10.4.7.11 | K8s proxy node 1 |
| hdss7-12.host.com | 2 cores | 2 GB | 10.4.7.12 | K8s proxy node 2 |
| hdss7-21.host.com | 2 cores | 2 GB | 10.4.7.21 | K8s compute node 1 |
| hdss7-22.host.com | 2 cores | 2 GB | 10.4.7.22 | K8s compute node 2 |
| hdss7-200.host.com | 2 cores | 2 GB | 10.4.7.200 | K8s ops node (Docker registry) |

Network plan

- Node network: 10.4.7.0/16
- Pod network: 172.7.0.0/16
- Service network: 192.168.0.0/16
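To check an address against this plan, the bash sketch below reports which of the three planned networks it falls into. The helper names (ip_to_int, ip_in_cidr, which_network) are hypothetical and not part of any tool used in this guide. Note that a /16 prefix keeps only the first two octets, so 10.4.7.0/16 as written covers 10.4.0.0 to 10.4.255.255.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Succeed (exit status 0) if IP $1 lies inside CIDR $2.
ip_in_cidr() {
  local ip net bits mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}

# Print which planned network (node, pod or service) an address belongs to.
which_network() {
  if ip_in_cidr "$1" 10.4.7.0/16; then echo node
  elif ip_in_cidr "$1" 172.7.0.0/16; then echo pod
  elif ip_in_cidr "$1" 192.168.0.0/16; then echo service
  else echo unknown
  fi
}
```

For example, `which_network 172.7.21.1` prints `pod`, and `which_network 10.4.7.200` prints `node`.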

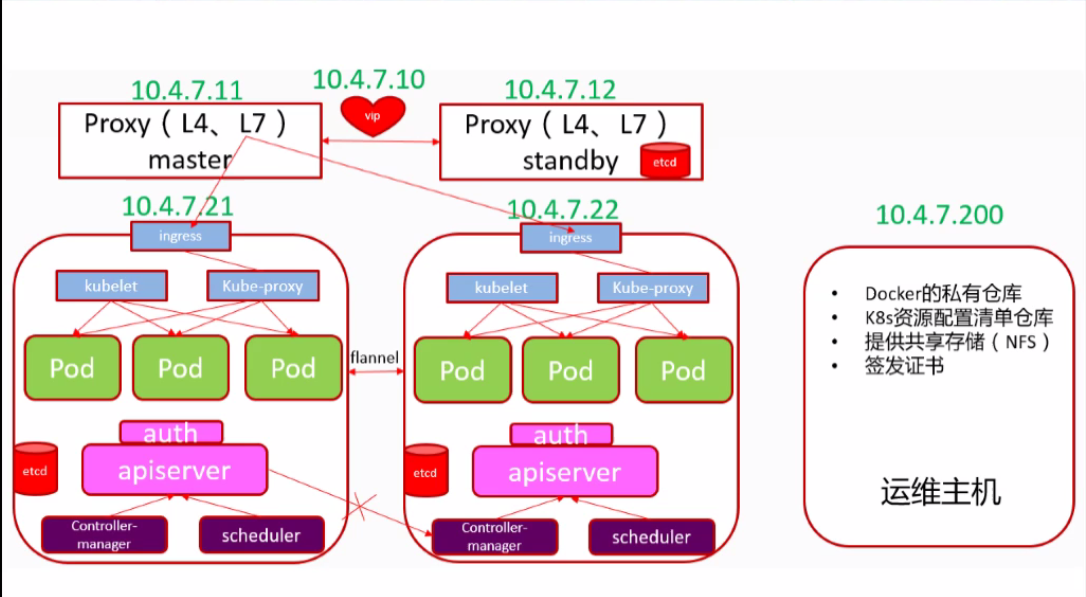

Architecture diagram of the cluster built in this guide

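2. Environment setup

(1) The operating system is CentOS 7, with basic system tuning already done.

(2) SELinux and the firewall are disabled.

(3) The Linux kernel is version 3.8 or later.

(4) A domestic (China) yum mirror and the EPEL repository are installed.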

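2.1 Basic configuration on all machines

# Add the EPEL repository (curl is used because wget may not be installed yet on a minimal system)
curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

# Stop and disable the firewall
systemctl stop firewalld
systemctl disable firewalld

# Disable SELinux: setenforce 0 only lasts until the next reboot, so also change the config file
setenforce 0
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# Install the required tools
yum install -y wget net-tools telnet tree nmap sysstat lrzsz dos2unix bind-utils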

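3. Install BIND 9 and deploy a self-hosted DNS service

Run on hdss7-11.host.com.

3.1 Why install BIND 9

Ingress will be used for layer-7 traffic routing, which is driven by domain names, so the cluster needs its own DNS; a private DNS also makes name resolution between containers convenient.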

3.2 Install BIND 9

[root@hdss7-11.host.com ~]# yum install -y bind

[root@hdss7-11.host.com ~]# rpm -qa bind

bind-9.11.4-16.P2.el7_8.6.x86_64

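3.3 Configure the BIND 9 main configuration file

[root@hdss7-11.host.com ~]# vi /etc/named.conf

# The leading numbers are line numbers in the default /etc/named.conf
13 listen-on port 53 { 10.4.7.11; }; # listen on this host's IP
14 listen-on-v6 port 53 { ::1; }; # delete this line so that named does not listen on IPv6
20 allow-query { any; }; # allow queries from any host
21 forwarders { 10.4.7.254; }; # add this line; the address is the DNS server of the office network
32 recursion yes; # resolve queries recursively
34 dnssec-enable no; # disable to save resources (in production you may want to keep it enabled)
35 dnssec-validation no; # disable to save resources; no DNSSEC validation against the Internet

Check that the configuration is correct:

[root@hdss7-11.host.com ~]# named-checkconf
# No output means the configuration is valid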

3.4 Configure the BIND 9 zone configuration file

[root@hdss7-11.host.com ~]# vim /etc/named.rfc1912.zones

# Append at the end of the file
zone "host.com" IN {        # host domain
        type master;
        file "host.com.zone";
        allow-update { 10.4.7.11; };
};

zone "od.com" IN {          # business domain
        type master;
        file "od.com.zone";
        allow-update { 10.4.7.11; };
};

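3.5 Configure the BIND 9 zone data files

3.5.1 Configure the host domain data file

In zone files a comment starts with ";" (a "#" is not a comment), and the NS line must begin with whitespace so that it belongs to the zone apex @.

[root@hdss7-11.host.com ~]# vim /var/named/host.com.zone

$ORIGIN host.com.
$TTL 600        ; 10 minutes, default TTL of the records
@       IN SOA  dns.host.com. dnsadmin.host.com. (  ; start of the SOA (start of authority) record; dnsadmin.host.com. stands for the mailbox dnsadmin@host.com
                2020102801 ; serial (installation date + 01, 10 digits in total)
                10800      ; refresh (3 hours)
                900        ; retry (15 minutes)
                604800     ; expire (1 week)
                86400      ; minimum (1 day)
                )
                NS   dns.host.com.  ; NS record
$TTL 60 ; 1 minute
dns             A    10.4.7.11      ; A records
hdss7-11        A    10.4.7.11
hdss7-12        A    10.4.7.12
hdss7-21        A    10.4.7.21
hdss7-22        A    10.4.7.22
hdss7-200       A    10.4.7.200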
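The host-domain A records follow one pattern: hdss7-<last octet> points to 10.4.7.<last octet>. A small bash sketch (gen_a_records is a hypothetical helper, not part of BIND) can print such lines for pasting into the zone file, which helps avoid typos when more hosts are added:

```shell
# Print zone-file A records of the form "<prefix>-<n>  A  <net>.<n>".
# $1: hostname prefix (e.g. hdss7), $2: first three octets (e.g. 10.4.7),
# remaining arguments: the last octets of the hosts.
gen_a_records() {
  local prefix=$1 net=$2 n
  shift 2
  for n in "$@"; do
    printf '%-12s A    %s.%s\n' "${prefix}-${n}" "$net" "$n"
  done
}
```

Running `gen_a_records hdss7 10.4.7 11 12 21 22 200` prints the five host records in aligned columns.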

3.5.2 Configure the business domain data file

[root@hdss7-11.host.com ~]# vim /var/named/od.com.zone

$ORIGIN od.com.
$TTL 600        ; 10 minutes
@       IN SOA  dns.od.com. dnsadmin.od.com. (
                2020102801 ; serial
                10800      ; refresh (3 hours)
                900        ; retry (15 minutes)
                604800     ; expire (1 week)
                86400      ; minimum (1 day)
                )
                NS   dns.od.com.
$TTL 60 ; 1 minute
dns             A    10.4.7.11

3.5.3 Check the configuration and start the service

[root@hdss7-11.host.com ~]# named-checkconf
[root@hdss7-11.host.com ~]# # No output means the configuration is valid; named-checkconf -z would also test-load the zone files
[root@hdss7-11.host.com ~]# systemctl start named
[root@hdss7-11.host.com ~]# systemctl enable named
Created symlink from /etc/systemd/system/multi-user.target.wants/named.service to /usr/lib/systemd/system/named.service.
[root@hdss7-11.host.com ~]# netstat -lntup |grep 53 # port 53 is being listened on, so the service has started successfully
tcp 0 0 10.4.7.11:53 0.0.0.0:* LISTEN 22171/named
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 6536/sshd
tcp 0 0 127.0.0.1:953 0.0.0.0:* LISTEN 22171/named
tcp6 0 0 :::22 :::* LISTEN 6536/sshd
tcp6 0 0 ::1:953 :::* LISTEN 22171/named
udp 0 0 10.4.7.11:53 0.0.0.0:* 22171/named

3.5.3 检查域名解析配置是否成功

[root@hdss7-11.host.com ~]# dig -t A hdss7-21.host.com @10.4.7.11 +short

10.4.7.21

[root@hdss7-11.host.com ~]# dig -t A hdss7-22.host.com @10.4.7.11 +short

10.4.7.22

[root@hdss7-11.host.com ~]# dig -t A hdss7-200.host.com @10.4.7.11 +short

10.4.7.200

[root@hdss7-11.host.com ~]# dig -t A hdss7-12.host.com @10.4.7.11 +short

10.4.7.12

[root@hdss7-11.host.com ~]#

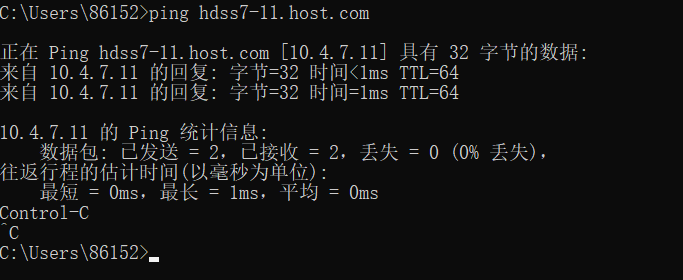

3.5.4 更换所有主机的DNS为10.4.7.11

vim /etc/sysconfig/network-scripts/ifcfg-eth0

DNS1=10.4.7.11

systemctl restart network

[root@hdss7-11.host.com ~]# ping www.baidu.com

PING www.a.shifen.com (14.215.177.39) 56(84) bytes of data.

64 bytes from 14.215.177.39 (14.215.177.39): icmp_seq=1 ttl=128 time=17.2 ms

^C

[root@hdss7-11.host.com ~]# ping `hostname`

PING hdss7-11.host.com (10.4.7.11) 56(84) bytes of data.

64 bytes from hdss7-11.host.com (10.4.7.11): icmp_seq=1 ttl=64 time=0.009 ms

^C

[root@hdss7-11.host.com ~]# ping hdss7-11 #因为resolv.conf中有search host.com,支持短域名,所以这不要.host.com也能ping通,一般情况下,只有主机域使用短域名。

PING hdss7-11.host.com (10.4.7.11) 56(84) bytes of data.

64 bytes from hdss7-11.host.com (10.4.7.11): icmp_seq=1 ttl=64 time=0.007 ms

^C

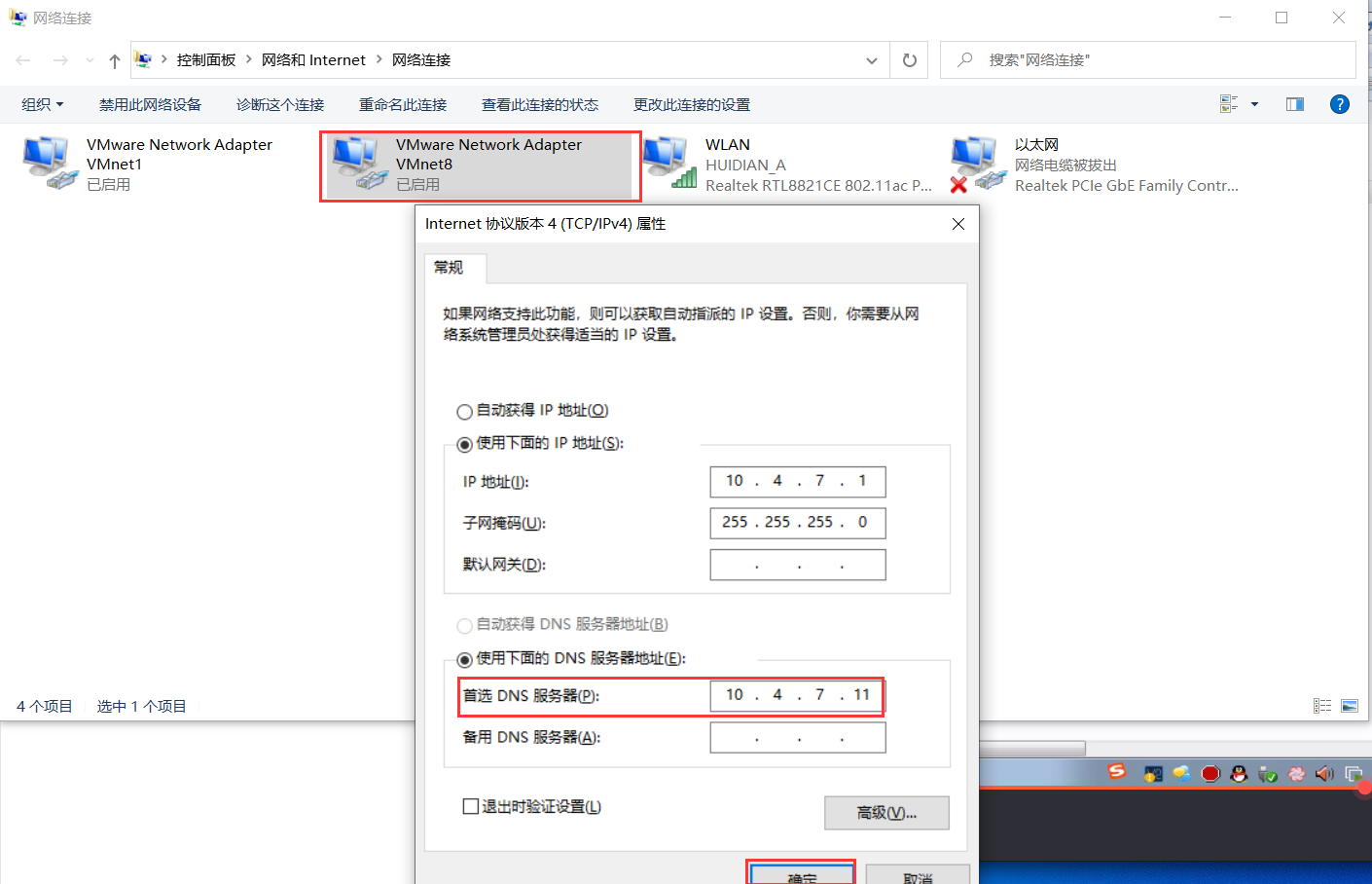

3.5.5 配置windows宿主机的VMnet8网卡的dns,后期要浏览网页

如果这里在cmd无法ping通虚拟机的主机名,则检查宿主机的防火墙是否关闭,如果关闭了宿主机防火墙还是不行,就把宿主机本身的网卡DNS改成10.4.7.11,但是实验过后记得还原宿主机网卡的设置,以免出现无法上网的情况
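The per-host dig checks can be run in one loop. The bash sketch below assumes dig from bind-utils (installed in section 2.1) and the hdss7-<n> / 10.4.7.<n> naming used in this guide; verify_host_records is a hypothetical name.

```shell
# Query DNS server $1 for hdss7-<n>.host.com and compare the answer with
# 10.4.7.<n>. Remaining arguments: the last octets of the hosts to check.
# Returns non-zero if any record is missing or wrong.
verify_host_records() {
  local server=$1 n got rc=0
  shift
  for n in "$@"; do
    got=$(dig -t A "hdss7-${n}.host.com" "@${server}" +short)
    if [ "$got" = "10.4.7.${n}" ]; then
      echo "OK   hdss7-${n}.host.com -> ${got}"
    else
      echo "FAIL hdss7-${n}.host.com -> '${got}'" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `verify_host_records 10.4.7.11 11 12 21 22 200`.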

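4. Prepare the certificate signing environment

Run on hdss7-200.host.com.

4.1 Install CFSSL

cfssl tools:

- cfssl: the main tool for issuing certificates
- cfssl-json: turns the JSON output of cfssl into certificate files
- cfssl-certinfo: inspects certificate information
  - Usage: cfssl-certinfo -cert $name.pem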

[root@hdss7-200.host.com ~]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/bin/cfssl

[root@hdss7-200.host.com ~]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/bin/cfssl-json

[root@hdss7-200.host.com ~]# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/bin/cfssl-certinfo

[root@hdss7-200.host.com ~]# chmod +x /usr/bin/cfssl*

[root@hdss7-200.host.com ~]# which cfssl

/usr/bin/cfssl

[root@hdss7-200.host.com ~]# which cfssl-json

/usr/bin/cfssl-json

[root@hdss7-200.host.com ~]# which cfssl-certinfo

/usr/bin/cfssl-certinfo

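4.2 Create the JSON config for the CA root certificate signing request (CSR)

[root@hdss7-200.host.com ~]# cd /opt/
[root@hdss7-200.host.com /opt]# mkdir certs
[root@hdss7-200.host.com /opt]# cd certs
[root@hdss7-200.host.com /opt/certs]# pwd
/opt/certs
[root@hdss7-200.host.com /opt/certs]# vim ca-csr.json

{
    "CN": "OldboyEdu",
    "hosts": [
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "od",
            "OU": "ops"
        }
    ],
    "ca": {
        "expiry": "175200h"
    }
}

JSON does not allow comments, so the file must contain only the content above. Notes on the fields:
- CN: the name of the CA
- key.algo: the key algorithm; key.size: the key length in bits
- ca.expiry: the lifetime of the CA certificate. Certificates issued by a kubeadm installation are valid for one year by default; this binary installation uses 175200h, i.e. 20 years.

# Explanation of the fields above, from the official documentation
## CN: Common Name. Browsers use this field to verify whether a website is legitimate; it usually holds a domain name. Very important.
## C: Country
## ST: State or province
## L: Locality or city
## O: Organization Name, i.e. the company
## OU: Organization Unit Name, i.e. the department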

4.3 Issue the CA root certificate

[root@hdss7-200.host.com /opt/certs]# ll
total 4
-rw-r--r-- 1 root root 329 Oct 28 16:24 ca-csr.json
[root@hdss7-200.host.com /opt/certs]# cfssl gencert -initca ca-csr.json | cfssl-json -bare ca
2020/10/28 16:25:17 [INFO] generating a new CA key and certificate from CSR
2020/10/28 16:25:17 [INFO] generate received request
2020/10/28 16:25:17 [INFO] received CSR
2020/10/28 16:25:17 [INFO] generating key: rsa-2048
2020/10/28 16:25:18 [INFO] encoded CSR
2020/10/28 16:25:18 [INFO] signed certificate with serial number 210900104910205411292096453403515818629104651035
[root@hdss7-200.host.com /opt/certs]# ll
total 16
-rw-r--r-- 1 root root 993 Oct 28 16:25 ca.csr # certificate signing request
-rw-r--r-- 1 root root 329 Oct 28 16:24 ca-csr.json
-rw------- 1 root root 1675 Oct 28 16:25 ca-key.pem # private key of the root certificate
-rw-r--r-- 1 root root 1346 Oct 28 16:25 ca.pem # root certificate
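To confirm that the CA really received the long lifetime set by "expiry": "175200h" (175200 / 24 = 7300 days, about 20 years), the certificate can be inspected. Besides cfssl-certinfo, openssl can do this; the sketch assumes openssl is installed (it is on a standard CentOS 7 system), and the helper names are hypothetical.

```shell
# Succeed if the PEM certificate $1 will still be valid $2 days from now.
# Relies on `openssl x509 -checkend <seconds>`, which exits 0 when the
# certificate does not expire within that many seconds.
cert_valid_for_days() {
  openssl x509 -in "$1" -noout -checkend $(( $2 * 86400 )) >/dev/null
}

# Print the subject and the expiry date (notAfter) of a certificate.
cert_summary() {
  openssl x509 -in "$1" -noout -subject -enddate
}
```

Usage: `cert_summary /opt/certs/ca.pem`, and `cert_valid_for_days /opt/certs/ca.pem 7000 && echo "CA valid for at least 7000 more days"`.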

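5. Prepare the Docker environment

K8s depends on a container engine; Docker is used here.

Run on: hdss7-200.host.com, hdss7-21.host.com, hdss7-22.host.com

# curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun # a WARNING is printed after installation; it can be ignored
# mkdir -p /etc/docker
# vi /etc/docker/daemon.json

{
  "graph": "/data/docker",
  "storage-driver": "overlay2",
  "insecure-registries": ["registry.access.redhat.com","quay.io","harbor.od.com"],
  "registry-mirrors": ["https://q2gr04ke.mirror.aliyuncs.com"],
  "bip": "172.7.200.1/24",
  "exec-opts": ["native.cgroupdriver=systemd"],
  "live-restore": true
}

daemon.json is JSON and must not contain comments. Notes:
- graph: the Docker data directory, which must be created manually (see below). Newer Docker releases use "data-root" instead of the deprecated "graph".
- bip: the address range for pods on this K8s host. The value above is for hdss7-200; on hdss7-21 and hdss7-22 change 172.7.200.1 to 172.7.21.1 and 172.7.22.1 respectively.

# mkdir -p /data/docker
# systemctl start docker
# systemctl enable docker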
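Because the three hosts differ only in bip, which follows the pattern 172.7.<last octet of the host IP>.1/24, the file can be generated instead of edited by hand. A minimal bash sketch (render_daemon_json is a hypothetical helper) that reproduces the settings above, including the "graph" key used with the Docker 19.03 in this guide:

```shell
# Print /etc/docker/daemon.json for the host whose node IP is $1.
# bip is derived from the last octet: 10.4.7.21 -> 172.7.21.1/24.
render_daemon_json() {
  local last=${1##*.}
  cat <<EOF
{
  "graph": "/data/docker",
  "storage-driver": "overlay2",
  "insecure-registries": ["registry.access.redhat.com","quay.io","harbor.od.com"],
  "registry-mirrors": ["https://q2gr04ke.mirror.aliyuncs.com"],
  "bip": "172.7.${last}.1/24",
  "exec-opts": ["native.cgroupdriver=systemd"],
  "live-restore": true
}
EOF
}
```

Usage on hdss7-21: `render_daemon_json 10.4.7.21 > /etc/docker/daemon.json`.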

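6. Deploy the private Docker registry Harbor

6.1 Download Harbor

Run on: hdss7-200.host.com

Harbor on GitHub: https://github.com/goharbor/harbor (a proxy may be needed to reach GitHub from mainland China)

Version 1.7.6 or later is strongly recommended, because 1.7.5 and earlier have known vulnerabilities; choose the harbor-offline-installer package.

[root@hdss7-200.host.com ~]# mkdir /opt/src
[root@hdss7-200.host.com ~]# cd /opt/src
[root@hdss7-200.host.com /opt/src]# ll -h # the offline installer has been uploaded to /opt/src
total 554M
-rw-r--r-- 1 root root 554M Oct 28 17:06 harbor-offline-installer-v1.8.3.tgz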

[root@hdss7-200.host.com /opt/src]# tar zxf harbor-offline-installer-v1.8.3.tgz -C /opt/
[root@hdss7-200.host.com /opt/src]# cd /opt/
[root@hdss7-200.host.com /opt]# ll
total 0
drwxr-xr-x 2 root root 71 Oct 28 16:25 certs
drwx--x--x 4 root root 28 Oct 28 16:53 containerd
drwxr-xr-x 2 root root 100 Oct 28 17:08 harbor
drwxr-xr-x 2 root root 49 Oct 28 17:06 src
[root@hdss7-200.host.com /opt]# mv harbor harbor-v1.8.3 # include the version in the directory name
[root@hdss7-200.host.com /opt]# ln -s harbor-v1.8.3 harbor
[root@hdss7-200.host.com /opt]# ll
total 0
drwxr-xr-x 2 root root 71 Oct 28 16:25 certs
drwx--x--x 4 root root 28 Oct 28 16:53 containerd
lrwxrwxrwx 1 root root 13 Oct 28 17:09 harbor -> harbor-v1.8.3 # the symlink makes future upgrades easier
drwxr-xr-x 2 root root 100 Oct 28 17:08 harbor-v1.8.3
drwxr-xr-x 2 root root 49 Oct 28 17:06 src
[root@hdss7-200.host.com /opt]# cd harbor
[root@hdss7-200.host.com /opt/harbor]# ll
total 569632
-rw-r--r-- 1 root root 583269670 Sep 16 2019 harbor.v1.8.3.tar.gz # Harbor image archive
-rw-r--r-- 1 root root 4519 Sep 16 2019 harbor.yml # Harbor configuration file
-rwxr-xr-x 1 root root 5088 Sep 16 2019 install.sh
-rw-r--r-- 1 root root 11347 Sep 16 2019 LICENSE
-rwxr-xr-x 1 root root 1654 Sep 16 2019 prepare

6.2 Edit the Harbor main configuration file harbor.yml

[root@hdss7-200.host.com /opt/harbor]# vim harbor.yml
# Line 5: change hostname: reg.mydomain.com to hostname: harbor.od.com
# Line 10: change port: 80 to port: 180, because nginx will be installed later and would otherwise conflict on port 80
# Line 27: harbor_admin_password: Harbor12345 is the password for logging in to Harbor; in production, set it to a sufficiently complex string
# Line 35: change data_volume: /data to data_volume: /data/harbor
# Line 82: change location: /var/log/harbor to location: /data/harbor/logs, a custom log location
[root@hdss7-200.host.com /opt/harbor]# mkdir -p /data/harbor/logs
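The edits above can also be applied with sed. This sketch assumes harbor.yml still contains the default v1.8.3 lines exactly as quoted (edit_harbor_yml is a hypothetical name) and relies on GNU sed's -i and -E options; the admin password is intentionally left alone, and the result should be reviewed before running install.sh.

```shell
# Apply the hostname, port, data_volume and log location changes to the
# harbor.yml given as $1. Only uncommented lines with the default values match.
edit_harbor_yml() {
  sed -i -E \
    -e 's/^hostname: reg\.mydomain\.com$/hostname: harbor.od.com/' \
    -e 's/^([[:space:]]+)port: 80$/\1port: 180/' \
    -e 's#^data_volume: /data$#data_volume: /data/harbor#' \
    -e 's#^([[:space:]]+)location: /var/log/harbor$#\1location: /data/harbor/logs#' \
    "$1"
}
```

Usage: `edit_harbor_yml /opt/harbor/harbor.yml`, then check the changed lines with `grep -nE 'hostname|port: 180|data_volume|location' /opt/harbor/harbor.yml`.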

6.3 Install docker-compose

# Harbor itself runs as several containers and relies on docker-compose for single-host orchestration.
[root@hdss7-200.host.com /opt/harbor]# yum install -y docker-compose
[root@hdss7-200.host.com /opt/harbor]# rpm -qa docker-compose
docker-compose-1.18.0-4.el7.noarch

6.4 Install Harbor

[root@hdss7-200.host.com /opt/harbor]# ./install.sh
[Step 0]: checking installation environment ...
Note: docker version: 19.03.13 # the Docker version in use
Note: docker-compose version: 1.18.0 # the docker-compose version in use
[Step 1]: loading Harbor images ...
... (output omitted) ...
✔ ----Harbor has been installed and started successfully.---- # installation and startup are complete

6.5 Check that Harbor is running

[root@hdss7-200.host.com /opt/harbor]# docker-compose ps

Name Command State Ports

--------------------------------------------------------------------------------------

harbor-core /harbor/start.sh Up

harbor-db /entrypoint.sh postgres Up 5432/tcp

harbor-jobservice /harbor/start.sh Up

harbor-log /bin/sh -c /usr/local/bin/ ... Up 127.0.0.1:1514->10514/tcp

harbor-portal nginx -g daemon off; Up 80/tcp

nginx nginx -g daemon off; Up 0.0.0.0:180->80/tcp

redis docker-entrypoint.sh redis ... Up 6379/tcp

registry /entrypoint.sh /etc/regist ... Up 5000/tcp

registryctl /harbor/start.sh Up

[root@hdss7-200.host.com /opt/harbor]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

ea041807100f goharbor/nginx-photon:v1.8.3 "nginx -g 'daemon of…" 3 minutes ago Up 3 minutes (healthy) 0.0.0.0:180->80/tcp nginx

c383803a057d goharbor/harbor-jobservice:v1.8.3 "/harbor/start.sh" 3 minutes ago Up 3 minutes harbor-jobservice

2585d6dbd86b goharbor/harbor-portal:v1.8.3 "nginx -g 'daemon of…" 3 minutes ago Up 3 minutes (healthy) 80/tcp harbor-portal

6a595b66ea58 goharbor/harbor-core:v1.8.3 "/harbor/start.sh" 3 minutes ago Up 3 minutes (healthy) harbor-core

7f621c7241b0 goharbor/harbor-registryctl:v1.8.3 "/harbor/start.sh" 3 minutes ago Up 3 minutes (healthy) registryctl

1c6aed28ed83 goharbor/redis-photon:v1.8.3 "docker-entrypoint.s…" 3 minutes ago Up 3 minutes 6379/tcp redis

880f4554a304 goharbor/harbor-db:v1.8.3 "/entrypoint.sh post…" 3 minutes ago Up 3 minutes (healthy) 5432/tcp harbor-db

728895602e02 goharbor/registry-photon:v2.7.1-patch-2819-v1.8.3 "/entrypoint.sh /etc…" 3 minutes ago Up 3 minutes (healthy) 5000/tcp registry

03f05904cd6d goharbor/harbor-log:v1.8.3 "/bin/sh -c /usr/loc…" 3 minutes ago Up 3 minutes (healthy) 127.0.0.1:1514->10514/tcp harbor-log

6.6 Install nginx as a reverse proxy for Harbor

[root@hdss7-200.host.com /opt/harbor]# yum install -y nginx
[root@hdss7-200.host.com /opt/harbor]# vim /etc/nginx/conf.d/harbor.od.com.conf

server {
    listen       80;
    server_name  harbor.od.com;

    client_max_body_size 1000m;

    location / {
        proxy_pass http://127.0.0.1:180;
    }
}

[root@hdss7-200.host.com /opt/harbor]# nginx -t
nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
nginx: configuration file /etc/nginx/nginx.conf test is successful
[root@hdss7-200.host.com /opt/harbor]# systemctl start nginx
[root@hdss7-200.host.com /opt/harbor]# systemctl enable nginx

# At this point the Harbor domain name cannot be accessed yet, because it does not resolve; the DNS configuration on hdss7-11 has to be updated first.

6.7 Update the DNS server so that Harbor can be reached

[root@hdss7-11.host.com ~]# vim /var/named/od.com.zone
# On line 4, change 2020102801 to 2020102802, i.e. add 1 to roll the serial number forward (the serial must be incremented every time a record is added)
# Then append this line at the end of the file: harbor A 10.4.7.200

$ORIGIN od.com.
$TTL 600        ; 10 minutes
@       IN SOA  dns.od.com. dnsadmin.od.com. (
                2020102802 ; serial, incremented by 1
                10800      ; refresh (3 hours)
                900        ; retry (15 minutes)
                604800     ; expire (1 week)
                86400      ; minimum (1 day)
                )
                NS   dns.od.com.
$TTL 60 ; 1 minute
dns             A    10.4.7.11
harbor          A    10.4.7.200     ; newly added record

[root@hdss7-11.host.com ~]# systemctl restart named # restart the service
[root@hdss7-11.host.com ~]# dig -t A harbor.od.com +short # verify that the name resolves
10.4.7.200
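Forgetting to roll the serial forward is a common reason a new record is not picked up. Incrementing it can be scripted; the bash sketch below (bump_serial is a hypothetical helper) finds the number on the line marked "; serial", adds 1 and prints the new value. It relies on GNU sed's -i and -E options, as found on CentOS 7, and only increments; it does not reset the counter when the date changes.

```shell
# Increment the SOA serial (the number on the line marked "; serial") in
# zone file $1 and print the new value.
bump_serial() {
  local zone=$1 old new
  old=$(grep -Eo '^[[:space:]]*[0-9]+[[:space:]]*;[[:space:]]*serial' "$zone" |
        grep -Eo '[0-9]+' | head -n 1)
  if [ -z "$old" ]; then
    echo "no serial found in $zone" >&2
    return 1
  fi
  new=$((old + 1))
  # Replace only the serial number, keeping the indentation and the comment.
  sed -i -E "s/^([[:space:]]*)${old}([[:space:]]*;[[:space:]]*serial)/\1${new}\2/" "$zone"
  echo "$new"
}
```

Usage: `bump_serial /var/named/od.com.zone` after adding a record, then `systemctl restart named` as above.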

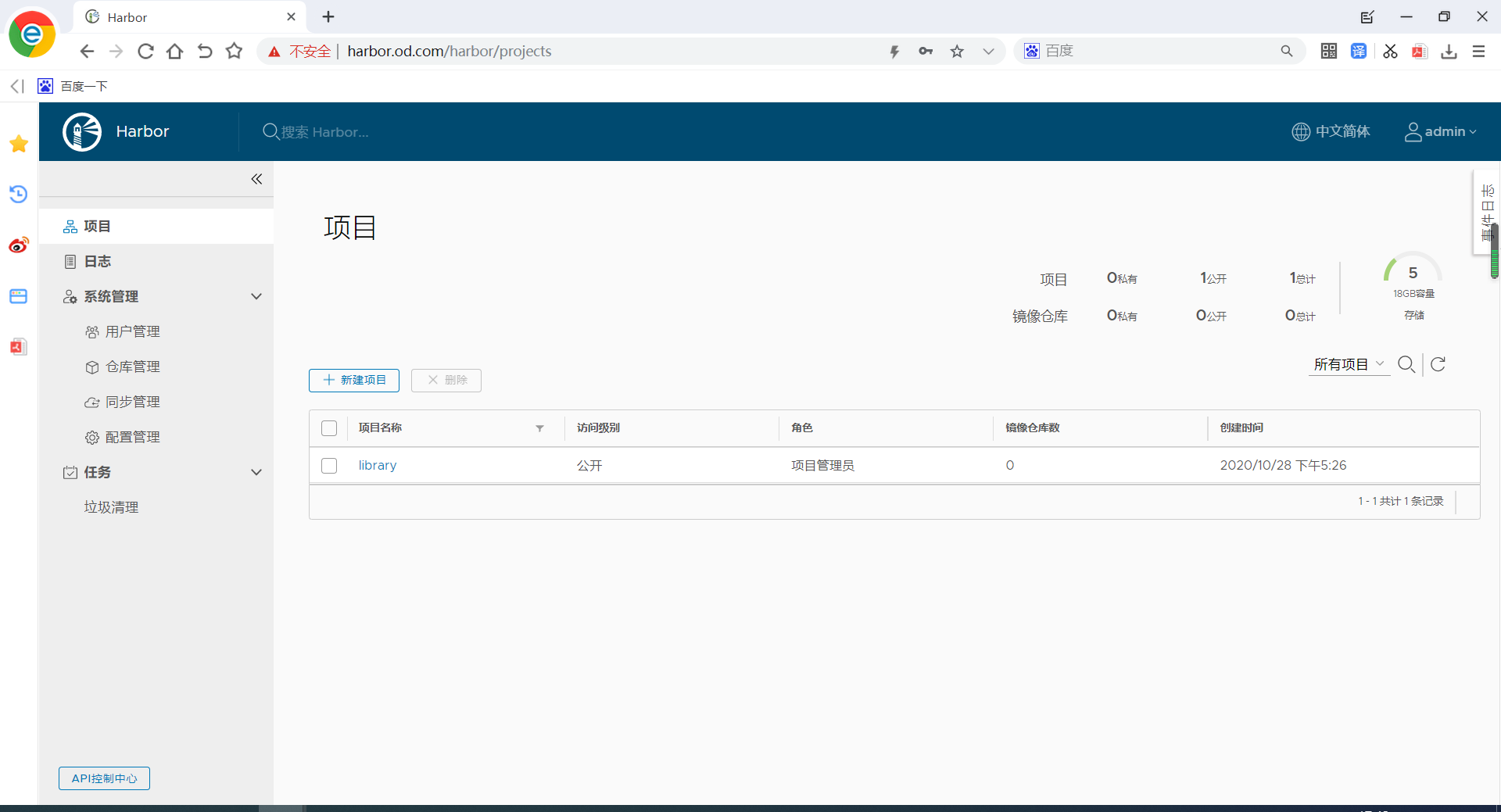

Open Harbor in a browser, then create a project named public and make it public.

Username: admin. Password: Harbor12345

6.8 Pull an image and test pushing it to Harbor

[root@hdss7-200.host.com /opt/harbor]# docker pull nginx:1.7.9
[root@hdss7-200.host.com /opt/harbor]# docker images |grep 1.7.9
nginx 1.7.9 84581e99d807 5 years ago 91.7MB
[root@hdss7-200.host.com /opt/harbor]# docker tag 84581e99d807 harbor.od.com/public/nginx:v1.7.9
[root@hdss7-200.host.com /opt/harbor]# docker login harbor.od.com # log in to the registry
Username: admin
Password:
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store
Login Succeeded
[root@hdss7-200.host.com /opt/harbor]# docker push harbor.od.com/public/nginx:v1.7.9

6.9 To restart Harbor, run the following command in /opt/harbor (the directory containing the generated docker-compose.yml)

docker-compose up -d

Check the pushed image in the browser.

Installing K8s

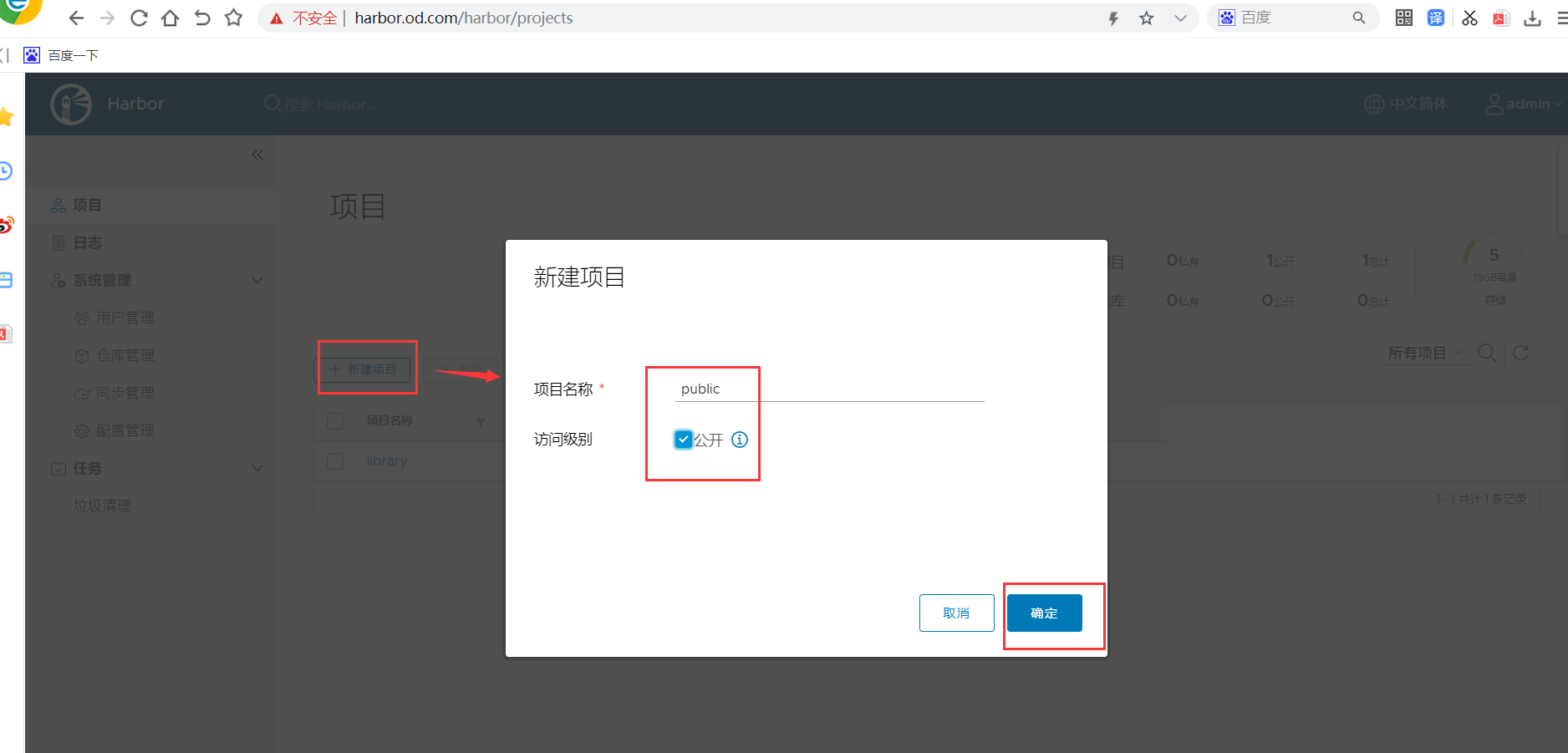

1. Deploy the etcd cluster for the master nodes

Cluster plan

1.1 Create the signing config file based on the root certificate

Run on: hdss7-200

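[root@hdss7-200.host.com ~]# vim /opt/certs/ca-config.json

{
    "signing": {
        "default": {
            "expiry": "175200h"
        },
        "profiles": {
            "server": {
                "expiry": "175200h",
                "usages": [
                    "signing",
                    "key encipherment",
                    "server auth"
                ]
            },
            "client": {
                "expiry": "175200h",
                "usages": [
                    "signing",
                    "key encipherment",
                    "client auth"
                ]
            },
            "peer": {
                "expiry": "175200h",
                "usages": [
                    "signing",
                    "key encipherment",
                    "server auth",
                    "client auth"
                ]
            }
        }
    }
}

JSON does not allow comments, so the file must contain only the content above. The profiles:
- server: for a server that presents a certificate to its clients ("server auth")
- client: for a client that presents a certificate to a server ("client auth")
- peer: for two parties that authenticate each other, so the certificate carries both "server auth" and "client auth"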

1.2 Create the etcd certificate signing request file

[root@hdss7-200.host.com ~]# vim /opt/certs/etcd-peer-csr.json

{
    "CN": "k8s-etcd",
    "hosts": [
        "10.4.7.11",
        "10.4.7.12",
        "10.4.7.21",
        "10.4.7.22"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "od",
            "OU": "ops"
        }
    ]
}

The hosts section lists every node on which etcd might be installed (JSON does not allow comments, so keep this note out of the file).
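Every etcd member should appear in the hosts list of this certificate, otherwise TLS connections to that member will typically fail certificate verification. A quick bash check (csr_covers is a hypothetical helper; it simply looks for each quoted IP in the file rather than parsing the JSON):

```shell
# Succeed if every IP given after the file name appears as a quoted string
# in the CSR JSON file $1; report the missing ones on stderr otherwise.
csr_covers() {
  local file=$1 ip missing=0
  shift
  for ip in "$@"; do
    if ! grep -qF "\"${ip}\"" "$file"; then
      echo "missing: ${ip}" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `csr_covers /opt/certs/etcd-peer-csr.json 10.4.7.12 10.4.7.21 10.4.7.22`. The quotes in the search string keep 10.4.7.1 from matching 10.4.7.11.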

1.3 Issue the certificate used by etcd

[root@hdss7-200.host.com ~]# cd /opt/certs/
[root@hdss7-200.host.com /opt/certs]# ll
total 24
-rw-r--r-- 1 root root 840 Oct 29 11:49 ca-config.json
-rw-r--r-- 1 root root 993 Oct 28 16:25 ca.csr
-rw-r--r-- 1 root root 329 Oct 28 16:24 ca-csr.json
-rw------- 1 root root 1675 Oct 28 16:25 ca-key.pem
-rw-r--r-- 1 root root 1346 Oct 28 16:25 ca.pem
-rw-r--r-- 1 root root 363 Oct 29 11:53 etcd-peer-csr.json
[root@hdss7-200.host.com /opt/certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json |cfssl-json -bare etcd-peer # issue the certificate
2020/10/29 11:54:53 [INFO] generate received request
2020/10/29 11:54:53 [INFO] received CSR
2020/10/29 11:54:53 [INFO] generating key: rsa-2048
2020/10/29 11:54:53 [INFO] encoded CSR
2020/10/29 11:54:53 [INFO] signed certificate with serial number 518313688059201272353183692889297697137578166576
2020/10/29 11:54:53 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
websites. For more information see the Baseline Requirements for the Issuance and Management
of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
specifically, section 10.2.3 ("Information Requirements").
[root@hdss7-200.host.com /opt/certs]# ll
total 36
-rw-r--r-- 1 root root 840 Oct 29 11:49 ca-config.json
-rw-r--r-- 1 root root 993 Oct 28 16:25 ca.csr
-rw-r--r-- 1 root root 329 Oct 28 16:24 ca-csr.json
-rw------- 1 root root 1675 Oct 28 16:25 ca-key.pem
-rw-r--r-- 1 root root 1346 Oct 28 16:25 ca.pem
-rw-r--r-- 1 root root 1062 Oct 29 11:54 etcd-peer.csr # certificate signing request
-rw-r--r-- 1 root root 363 Oct 29 11:53 etcd-peer-csr.json
-rw------- 1 root root 1679 Oct 29 11:54 etcd-peer-key.pem # private key
-rw-r--r-- 1 root root 1428 Oct 29 11:54 etcd-peer.pem # certificate
[root@hdss7-200.host.com /opt/certs]#

1.4 Deploy etcd

1.4.1 Download etcd

https://github.com/etcd-io/etcd/tags # a proxy may be needed to reach GitHub from mainland China

1.4.2 Create a dedicated etcd user (hdss7-12.host.com)

Run on: hdss7-12.host.com

[root@hdss7-12.host.com /opt/src]# useradd -s /sbin/nologin -M etcd

[root@hdss7-12.host.com /opt/src]# id etcd

uid=1000(etcd) gid=1000(etcd) groups=1000(etcd)

1.4.3 Upload the etcd package and configure it

[root@hdss7-12.host.com /opt/src]# tar zxf etcd-v3.1.20-linux-amd64.tar.gz -C /opt/

[root@hdss7-12.host.com /opt/src]# cd ..

[root@hdss7-12.host.com /opt]# ll

total 0

drwxr-xr-x 3 478493 89939 123 Oct 11 2018 etcd-v3.1.20-linux-amd64

drwxr-xr-x 2 root root 45 Oct 29 14:11 src

[root@hdss7-12.host.com /opt]# mv etcd-v3.1.20-linux-amd64 etcd-v3.1.20

[root@hdss7-12.host.com /opt]# ln -s etcd-v3.1.20 etcd

[root@hdss7-12.host.com /opt]# ll

total 0

lrwxrwxrwx 1 root root 12 Oct 29 14:12 etcd -> etcd-v3.1.20

drwxr-xr-x 3 478493 89939 123 Oct 11 2018 etcd-v3.1.20

drwxr-xr-x 2 root root 45 Oct 29 14:11 src

[root@hdss7-12.host.com /opt]# cd etcd

[root@hdss7-12.host.com /opt/etcd]# ll

total 30068

drwxr-xr-x 11 478493 89939 4096 Oct 11 2018 Documentation

-rwxr-xr-x 1 478493 89939 16406432 Oct 11 2018 etcd # etcd server binary

-rwxr-xr-x 1 478493 89939 14327712 Oct 11 2018 etcdctl # etcd command-line client

-rw-r--r-- 1 478493 89939 32632 Oct 11 2018 README-etcdctl.md

-rw-r--r-- 1 478493 89939 5878 Oct 11 2018 README.md

-rw-r--r-- 1 478493 89939 7892 Oct 11 2018 READMEv2-etcdctl.md

1.4.4 Create directories and copy the certificates and private key

[root@hdss7-12.host.com /opt/etcd]# mkdir -p /opt/etcd/certs /data/etcd /data/logs/etcd-server

# Starting etcd requires 3 files: ca.pem (CA certificate), etcd-peer.pem (etcd certificate) and etcd-peer-key.pem (its private key)

[root@hdss7-12.host.com /opt/etcd]# cd certs/

[root@hdss7-12.host.com /opt/etcd/certs]# ll

total 0

[root@hdss7-12.host.com /opt/etcd/certs]# scp hdss7-200:/opt/certs/ca.pem ./

[root@hdss7-12.host.com /opt/etcd/certs]# scp hdss7-200:/opt/certs/etcd-peer-key.pem ./

[root@hdss7-12.host.com /opt/etcd/certs]# scp hdss7-200:/opt/certs/etcd-peer.pem ./

[root@hdss7-12.host.com /opt/etcd/certs]# ll

total 12

-rw-r--r-- 1 root root 1346 Oct 29 14:18 ca.pem # CA certificate

-rw------- 1 root root 1679 Oct 29 14:19 etcd-peer-key.pem # private key; note that its permissions are 600

-rw-r--r-- 1 root root 1428 Oct 29 14:19 etcd-peer.pem # etcd certificate

1.4.5 Create the etcd startup script

[root@hdss7-12.host.com /opt/etcd/certs]# cd ..

[root@hdss7-12.host.com /opt/etcd]# vim etcd-server-startup.sh

#!/bin/sh
./etcd --name etcd-server-7-12 \
       --data-dir /data/etcd/etcd-server \
       --listen-peer-urls https://10.4.7.12:2380 \
       --listen-client-urls https://10.4.7.12:2379,http://127.0.0.1:2379 \
       --quota-backend-bytes 8000000000 \
       --initial-advertise-peer-urls https://10.4.7.12:2380 \
       --advertise-client-urls https://10.4.7.12:2379,http://127.0.0.1:2379 \
       --initial-cluster etcd-server-7-12=https://10.4.7.12:2380,etcd-server-7-21=https://10.4.7.21:2380,etcd-server-7-22=https://10.4.7.22:2380 \
       --ca-file ./certs/ca.pem \
       --cert-file ./certs/etcd-peer.pem \
       --key-file ./certs/etcd-peer-key.pem \
       --client-cert-auth \
       --trusted-ca-file ./certs/ca.pem \
       --peer-ca-file ./certs/ca.pem \
       --peer-cert-file ./certs/etcd-peer.pem \
       --peer-key-file ./certs/etcd-peer-key.pem \
       --peer-client-cert-auth \
       --peer-trusted-ca-file ./certs/ca.pem \
       --log-output stdout

Every line except the last ends with a backslash, so all options belong to the single ./etcd command.

[root@hdss7-12.host.com /opt/etcd]# chmod +x etcd-server-startup.sh

[root@hdss7-12.host.com /opt/etcd]# chown -R etcd. /opt/etcd-v3.1.20/

[root@hdss7-12.host.com /opt/etcd]# ll /opt/etcd-v3.1.20/

total 30072

drwxr-xr-x 2 etcd etcd 66 Oct 29 14:19 certs

drwxr-xr-x 11 etcd etcd 4096 Oct 11 2018 Documentation

-rwxr-xr-x 1 etcd etcd 16406432 Oct 11 2018 etcd

-rwxr-xr-x 1 etcd etcd 14327712 Oct 11 2018 etcdctl

-rwxr-xr-x 1 etcd etcd 981 Oct 29 14:45 etcd-server-startup.sh

-rw-r--r-- 1 etcd etcd 32632 Oct 11 2018 README-etcdctl.md

-rw-r--r-- 1 etcd etcd 5878 Oct 11 2018 README.md

-rw-r--r-- 1 etcd etcd 7892 Oct 11 2018 READMEv2-etcdctl.md

[root@hdss7-12.host.com /opt/etcd]# chown -R etcd. /data/etcd/

[root@hdss7-12.host.com /opt/etcd]# chown -R etcd. /data/logs/etcd-server/
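The startup scripts on hdss7-12, hdss7-21 and hdss7-22 differ only in the member name and the IP address. A bash sketch that prints the script for a given node IP (render_etcd_startup is a hypothetical helper; the member name is derived as etcd-server-7-<last octet>, matching the names used in this guide):

```shell
# Print etcd-server-startup.sh for the etcd member whose node IP is $1.
render_etcd_startup() {
  local ip=$1
  local name="etcd-server-7-${ip##*.}"
  local cluster="etcd-server-7-12=https://10.4.7.12:2380,etcd-server-7-21=https://10.4.7.21:2380,etcd-server-7-22=https://10.4.7.22:2380"
  # In this unquoted here-document "\\" becomes a single backslash,
  # so every option line ends with a shell line continuation.
  cat <<EOF
#!/bin/sh
./etcd --name ${name} \\
       --data-dir /data/etcd/etcd-server \\
       --listen-peer-urls https://${ip}:2380 \\
       --listen-client-urls https://${ip}:2379,http://127.0.0.1:2379 \\
       --quota-backend-bytes 8000000000 \\
       --initial-advertise-peer-urls https://${ip}:2380 \\
       --advertise-client-urls https://${ip}:2379,http://127.0.0.1:2379 \\
       --initial-cluster ${cluster} \\
       --ca-file ./certs/ca.pem \\
       --cert-file ./certs/etcd-peer.pem \\
       --key-file ./certs/etcd-peer-key.pem \\
       --client-cert-auth \\
       --trusted-ca-file ./certs/ca.pem \\
       --peer-ca-file ./certs/ca.pem \\
       --peer-cert-file ./certs/etcd-peer.pem \\
       --peer-key-file ./certs/etcd-peer-key.pem \\
       --peer-client-cert-auth \\
       --peer-trusted-ca-file ./certs/ca.pem \\
       --log-output stdout
EOF
}
```

Usage on hdss7-21: `render_etcd_startup 10.4.7.21 > /opt/etcd/etcd-server-startup.sh && chmod +x /opt/etcd/etcd-server-startup.sh`.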

1.4.6 Install supervisor to run etcd in the background

[root@hdss7-12.host.com /opt/etcd]# yum install supervisor -y

[root@hdss7-12.host.com /opt/etcd]# systemctl start supervisord

[root@hdss7-12.host.com /opt/etcd]# systemctl enable supervisord

1.4.7 Create the supervisor program file

[root@hdss7-12.host.com /opt/etcd]# vim /etc/supervisord.d/etcd-server.ini

[program:etcd-server-7-12]
command=/opt/etcd/etcd-server-startup.sh              ; etcd startup script (relative uses PATH, can take args)
numprocs=1                                            ; number of processes copies to start (def 1)
directory=/opt/etcd                                   ; directory to cwd to before exec (def no cwd)
autostart=true                                        ; start at supervisord start (default: true)
autorestart=true                                      ; restart at unexpected quit (default: true)
startsecs=30                                          ; number of secs prog must stay running to count as started (def. 1)
startretries=3                                        ; max # of serial start failures (default 3)
exitcodes=0,2                                         ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT                                       ; signal used to kill process (default TERM)
stopwaitsecs=10                                       ; max num secs to wait b4 SIGKILL (default 10)
user=etcd                                             ; setuid to this UNIX account to run the program
redirect_stderr=true                                  ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/etcd-server/etcd.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB                          ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4                              ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB                           ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false                           ; emit events on stdout writes (default false)

Note: the program name [program:etcd-server-7-12] is different on each host. Inline comments in supervisor files start with ";"; a "#" annotation after a value may be read as part of the value.
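Like the startup script, this file differs between hosts only in the program name. A bash sketch that prints it for a given node IP (render_etcd_ini is a hypothetical helper); the generated file carries no inline annotations, so no comment text can end up inside a value:

```shell
# Print /etc/supervisord.d/etcd-server.ini for the etcd member whose node IP is $1.
render_etcd_ini() {
  local name="etcd-server-7-${1##*.}"
  cat <<EOF
[program:${name}]
command=/opt/etcd/etcd-server-startup.sh
numprocs=1
directory=/opt/etcd
autostart=true
autorestart=true
startsecs=30
startretries=3
exitcodes=0,2
stopsignal=QUIT
stopwaitsecs=10
user=etcd
redirect_stderr=true
stdout_logfile=/data/logs/etcd-server/etcd.stdout.log
stdout_logfile_maxbytes=64MB
stdout_logfile_backups=4
stdout_capture_maxbytes=1MB
stdout_events_enabled=false
EOF
}
```

Usage on hdss7-22: `render_etcd_ini 10.4.7.22 > /etc/supervisord.d/etcd-server.ini`, then `supervisorctl update`.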

1.4.8 Start etcd

[root@hdss7-12.host.com /opt/etcd]# supervisorctl update

etcd-server-7-12: added process group

# etcd takes a moment to start; if it does not start properly, check /data/logs/etcd-server/etcd.stdout.log

[root@hdss7-12.host.com /opt]# supervisorctl status

etcd-server-7-12 STARTING

[root@hdss7-12.host.com /opt]# supervisorctl status

etcd-server-7-12 RUNNING pid 9263, uptime 0:00:52

[root@hdss7-12.host.com /opt]# netstat -lntup |grep etcd # etcd has started successfully only when it listens on both port 2379 and port 2380

tcp 0 0 10.4.7.12:2379 0.0.0.0:* LISTEN 9264/./etcd

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 9264/./etcd

tcp 0 0 10.4.7.12:2380 0.0.0.0:* LISTEN 9264/./etcd
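The port check can be scripted, for example for use after each node is started. The bash sketch below (etcd_ports_ok is a hypothetical helper) reads netstat output on stdin and assumes the address columns are separated by spaces, as netstat prints them:

```shell
# Read `netstat -lntup` output on stdin and succeed only if etcd is
# listening on both <ip>:2379 (clients) and <ip>:2380 (peers).
etcd_ports_ok() {
  local ip=$1 listing
  listing=$(grep 'etcd')
  grep -qF " ${ip}:2379 " <<< "$listing" &&
    grep -qF " ${ip}:2380 " <<< "$listing"
}
```

Usage: `netstat -lntup | etcd_ports_ok 10.4.7.12 && echo "etcd is listening"`.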

1.4.9 Create a dedicated etcd user (hdss7-21.host.com)

Run on: hdss7-21.host.com

[root@hdss7-21.host.com /opt/src]# useradd -s /sbin/nologin -M etcd

[root@hdss7-21.host.com /opt/src]# id etcd

uid=1000(etcd) gid=1000(etcd) groups=1000(etcd)

1.4.10 Upload the etcd package and configure it

[root@hdss7-21.host.com /opt/src]# tar zxf etcd-v3.1.20-linux-amd64.tar.gz -C /opt/

[root@hdss7-21.host.com /opt/src]# cd ..

[root@hdss7-21.host.com /opt]# ll

total 0

drwxr-xr-x 3 478493 89939 123 Oct 11 2018 etcd-v3.1.20-linux-amd64

drwxr-xr-x 2 root root 45 Oct 29 14:11 src

[root@hdss7-21.host.com /opt]# mv etcd-v3.1.20-linux-amd64 etcd-v3.1.20

[root@hdss7-21.host.com /opt]# ln -s etcd-v3.1.20 etcd

[root@hdss7-21.host.com /opt]# ll

total 0

lrwxrwxrwx 1 root root 12 Oct 29 14:12 etcd -> etcd-v3.1.20

drwxr-xr-x 3 478493 89939 123 Oct 11 2018 etcd-v3.1.20

drwxr-xr-x 2 root root 45 Oct 29 14:11 src

[root@hdss7-21.host.com /opt]# cd etcd

[root@hdss7-21.host.com /opt/etcd]# ll

total 30068

drwxr-xr-x 11 478493 89939 4096 Oct 11 2018 Documentation

-rwxr-xr-x 1 478493 89939 16406432 Oct 11 2018 etcd # etcd server binary

-rwxr-xr-x 1 478493 89939 14327712 Oct 11 2018 etcdctl # etcd command-line client

-rw-r--r-- 1 478493 89939 32632 Oct 11 2018 README-etcdctl.md

-rw-r--r-- 1 478493 89939 5878 Oct 11 2018 README.md

-rw-r--r-- 1 478493 89939 7892 Oct 11 2018 READMEv2-etcdctl.md

1.4.11 Create directories and copy the certificates and private key

[root@hdss7-21.host.com /opt/etcd]# mkdir -p /opt/etcd/certs /data/etcd /data/logs/etcd-server

# Starting etcd requires 3 files: ca.pem (CA certificate), etcd-peer.pem (etcd certificate) and etcd-peer-key.pem (its private key)

[root@hdss7-21.host.com /opt/etcd]# cd certs/

[root@hdss7-21.host.com /opt/etcd/certs]# ll

total 0

[root@hdss7-21.host.com /opt/etcd/certs]# scp hdss7-200:/opt/certs/ca.pem ./

[root@hdss7-21.host.com /opt/etcd/certs]# scp hdss7-200:/opt/certs/etcd-peer-key.pem ./

[root@hdss7-21.host.com /opt/etcd/certs]# scp hdss7-200:/opt/certs/etcd-peer.pem ./

[root@hdss7-21.host.com /opt/etcd/certs]# ll

total 12

-rw-r--r-- 1 root root 1346 Oct 29 14:18 ca.pem # CA certificate

-rw------- 1 root root 1679 Oct 29 14:19 etcd-peer-key.pem # private key; note that its permissions are 600

-rw-r--r-- 1 root root 1428 Oct 29 14:19 etcd-peer.pem # etcd certificate

1.4.12 Create the etcd startup script

[root@hdss7-21.host.com /opt/etcd/certs]# cd ..

[root@hdss7-21.host.com /opt/etcd]# vim etcd-server-startup.sh

#!/bin/sh
./etcd --name etcd-server-7-21 \
       --data-dir /data/etcd/etcd-server \
       --listen-peer-urls https://10.4.7.21:2380 \
       --listen-client-urls https://10.4.7.21:2379,http://127.0.0.1:2379 \
       --quota-backend-bytes 8000000000 \
       --initial-advertise-peer-urls https://10.4.7.21:2380 \
       --advertise-client-urls https://10.4.7.21:2379,http://127.0.0.1:2379 \
       --initial-cluster etcd-server-7-12=https://10.4.7.12:2380,etcd-server-7-21=https://10.4.7.21:2380,etcd-server-7-22=https://10.4.7.22:2380 \
       --ca-file ./certs/ca.pem \
       --cert-file ./certs/etcd-peer.pem \
       --key-file ./certs/etcd-peer-key.pem \
       --client-cert-auth \
       --trusted-ca-file ./certs/ca.pem \
       --peer-ca-file ./certs/ca.pem \
       --peer-cert-file ./certs/etcd-peer.pem \
       --peer-key-file ./certs/etcd-peer-key.pem \
       --peer-client-cert-auth \
       --peer-trusted-ca-file ./certs/ca.pem \
       --log-output stdout

[root@hdss7-21.host.com /opt/etcd]# chmod +x etcd-server-startup.sh

[root@hdss7-21.host.com /opt/etcd]# chown -R etcd. /opt/etcd-v3.1.20/

[root@hdss7-21.host.com /opt/etcd]# ll /opt/etcd-v3.1.20/

total 30072

drwxr-xr-x 2 etcd etcd 66 Oct 29 14:19 certs

drwxr-xr-x 11 etcd etcd 4096 Oct 11 2018 Documentation

-rwxr-xr-x 1 etcd etcd 16406432 Oct 11 2018 etcd

-rwxr-xr-x 1 etcd etcd 14327712 Oct 11 2018 etcdctl

-rwxr-xr-x 1 etcd etcd 981 Oct 29 14:45 etcd-server-startup.sh

-rw-r--r-- 1 etcd etcd 32632 Oct 11 2018 README-etcdctl.md

-rw-r--r-- 1 etcd etcd 5878 Oct 11 2018 README.md

-rw-r--r-- 1 etcd etcd 7892 Oct 11 2018 READMEv2-etcdctl.md

[root@hdss7-21.host.com /opt/etcd]# chown -R etcd. /data/etcd/

[root@hdss7-21.host.com /opt/etcd]# chown -R etcd. /data/logs/etcd-server/

1.4.13 Install supervisor to run etcd in the background

[root@hdss7-21.host.com /opt/etcd]# yum install supervisor -y

[root@hdss7-21.host.com /opt/etcd]# systemctl start supervisord

[root@hdss7-21.host.com /opt/etcd]# systemctl enable supervisord

1.4.14 Create the supervisor program file

[root@hdss7-21.host.com /opt/etcd]# vim /etc/supervisord.d/etcd-server.ini

[program:etcd-server-7-21]
command=/opt/etcd/etcd-server-startup.sh              ; etcd startup script (relative uses PATH, can take args)
numprocs=1                                            ; number of processes copies to start (def 1)
directory=/opt/etcd                                   ; directory to cwd to before exec (def no cwd)
autostart=true                                        ; start at supervisord start (default: true)
autorestart=true                                      ; restart at unexpected quit (default: true)
startsecs=30                                          ; number of secs prog must stay running to count as started (def. 1)
startretries=3                                        ; max # of serial start failures (default 3)
exitcodes=0,2                                         ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT                                       ; signal used to kill process (default TERM)
stopwaitsecs=10                                       ; max num secs to wait b4 SIGKILL (default 10)
user=etcd                                             ; setuid to this UNIX account to run the program
redirect_stderr=true                                  ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/etcd-server/etcd.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB                          ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4                              ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB                           ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false                           ; emit events on stdout writes (default false)

Note: the program name here is [program:etcd-server-7-21].

1.4.15 Start etcd

[root@hdss7-21.host.com /opt/etcd]# supervisorctl update

etcd-server-7-21: added process group

# etcd takes a moment to start; if it does not start properly, check /data/logs/etcd-server/etcd.stdout.log

[root@hdss7-21.host.com /opt]# supervisorctl status

etcd-server-7-21 STARTING

[root@hdss7-21.host.com /opt]# supervisorctl status

etcd-server-7-21 RUNNING pid 9263, uptime 0:00:52

[root@hdss7-21.host.com /opt]# netstat -lntup |grep etcd # etcd has started successfully only when it listens on both port 2379 and port 2380

tcp 0 0 10.4.7.21:2379 0.0.0.0:* LISTEN 9264/./etcd

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 9264/./etcd

tcp 0 0 10.4.7.21:2380 0.0.0.0:* LISTEN 9264/./etcd

1.4.16 Create a dedicated etcd user (hdss7-22.host.com)

Run on: hdss7-22.host.com

[root@hdss7-22.host.com /opt/src]# useradd -s /sbin/nologin -M etcd

[root@hdss7-22.host.com /opt/src]# id etcd

uid=1000(etcd) gid=1000(etcd) groups=1000(etcd)

1.4.17 Upload the etcd package and configure it

[root@hdss7-22.host.com /opt/src]# tar zxf etcd-v3.1.20-linux-amd64.tar.gz -C /opt/

[root@hdss7-22.host.com /opt/src]# cd ..

[root@hdss7-22.host.com /opt]# ll

total 0

drwxr-xr-x 3 478493 89939 123 Oct 11 2018 etcd-v3.1.20-linux-amd64

drwxr-xr-x 2 root root 45 Oct 29 14:11 src

[root@hdss7-22.host.com /opt]# mv etcd-v3.1.20-linux-amd64 etcd-v3.1.20

[root@hdss7-22.host.com /opt]# ln -s etcd-v3.1.20 etcd

[root@hdss7-22.host.com /opt]# ll

total 0

lrwxrwxrwx 1 root root 12 Oct 29 14:12 etcd -> etcd-v3.1.20

drwxr-xr-x 3 478493 89939 123 Oct 11 2018 etcd-v3.1.20

drwxr-xr-x 2 root root 45 Oct 29 14:11 src

[root@hdss7-22.host.com /opt]# cd etcd

[root@hdss7-22.host.com /opt/etcd]# ll

total 30068

drwxr-xr-x 11 478493 89939 4096 Oct 11 2018 Documentation

-rwxr-xr-x 1 478493 89939 16406432 Oct 11 2018 etcd # etcd server binary

-rwxr-xr-x 1 478493 89939 14327712 Oct 11 2018 etcdctl # etcd command-line client

-rw-r--r-- 1 478493 89939 32632 Oct 11 2018 README-etcdctl.md

-rw-r--r-- 1 478493 89939 5878 Oct 11 2018 README.md

-rw-r--r-- 1 478493 89939 7892 Oct 11 2018 READMEv2-etcdctl.md

1.4.18 Create directories and copy the certificates and private key

[root@hdss7-22.host.com /opt/etcd]# mkdir -p /opt/etcd/certs /data/etcd /data/logs/etcd-server

# Starting etcd requires 3 files: ca.pem (CA certificate), etcd-peer.pem (etcd certificate) and etcd-peer-key.pem (its private key)

[root@hdss7-22.host.com /opt/etcd]# cd certs/

[root@hdss7-22.host.com /opt/etcd/certs]# ll

total 0

[root@hdss7-22.host.com /opt/etcd/certs]# scp hdss7-200:/opt/certs/ca.pem ./

[root@hdss7-22.host.com /opt/etcd/certs]# scp hdss7-200:/opt/certs/etcd-peer-key.pem ./

[root@hdss7-22.host.com /opt/etcd/certs]# scp hdss7-200:/opt/certs/etcd-peer.pem ./

[root@hdss7-22.host.com /opt/etcd/certs]# ll

total 12

-rw-r--r-- 1 root root 1346 Oct 29 14:18 ca.pem # CA certificate

-rw------- 1 root root 1679 Oct 29 14:19 etcd-peer-key.pem # private key; note that its permissions are 600

-rw-r--r-- 1 root root 1428 Oct 29 14:19 etcd-peer.pem # etcd certificate

1.4.19 创建etcd启动文件

[root@hdss7-22.host.com /opt/etcd/certs]# cd ..

[root@hdss7-22.host.com /opt/etcd]# vim etcd-server-startup.sh

#!/bin/sh
# Every line except the last must end with "\" so all flags go to the same ./etcd command.
./etcd --name etcd-server-7-22 \
--data-dir /data/etcd/etcd-server \
--listen-peer-urls https://10.4.7.22:2380 \
--listen-client-urls https://10.4.7.22:2379,http://127.0.0.1:2379 \
--quota-backend-bytes 8000000000 \
--initial-advertise-peer-urls https://10.4.7.22:2380 \
--advertise-client-urls https://10.4.7.22:2379,http://127.0.0.1:2379 \
--initial-cluster etcd-server-7-12=https://10.4.7.12:2380,etcd-server-7-21=https://10.4.7.21:2380,etcd-server-7-22=https://10.4.7.22:2380 \
--ca-file ./certs/ca.pem \
--cert-file ./certs/etcd-peer.pem \
--key-file ./certs/etcd-peer-key.pem \
--client-cert-auth \
--trusted-ca-file ./certs/ca.pem \
--peer-ca-file ./certs/ca.pem \
--peer-cert-file ./certs/etcd-peer.pem \
--peer-key-file ./certs/etcd-peer-key.pem \
--peer-client-cert-auth \
--peer-trusted-ca-file ./certs/ca.pem \
--log-output stdout
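--initial-cluster must list every member as name=peer-URL, each name has to equal that member's own --name, and the value must be identical on all three nodes. A sketch that derives the string from the member IPs, assuming this guide's etcd-server-<3rd octet>-<4th octet> naming; `build_initial_cluster` is hypothetical:

```shell
# Hypothetical helper: build the --initial-cluster value from member IPs.
# Usage: build_initial_cluster <ip> [<ip> ...]
build_initial_cluster() {
  local out="" ip rest o3 o4
  for ip in "$@"; do
    rest=${ip#*.*.}      # 10.4.7.22 -> 7.22
    o3=${rest%%.*}       # 7
    o4=${rest##*.}       # 22
    # ${out:+${out},} adds a comma separator only after the first entry
    out="${out:+${out},}etcd-server-${o3}-${o4}=https://${ip}:2380"
  done
  printf '%s\n' "$out"
}
```

`build_initial_cluster 10.4.7.12 10.4.7.21 10.4.7.22` prints exactly the --initial-cluster value used in the script above, which makes it harder for the three copies to drift apart.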

[root@hdss7-22.host.com /opt/etcd]# chmod +x etcd-server-startup.sh

[root@hdss7-22.host.com /opt/etcd]# chown -R etcd. /opt/etcd-v3.1.20/

[root@hdss7-22.host.com /opt/etcd]# ll /opt/etcd-v3.1.20/

total 30072

drwxr-xr-x 2 etcd etcd 66 Oct 29 14:19 certs

drwxr-xr-x 11 etcd etcd 4096 Oct 11 2018 Documentation

-rwxr-xr-x 1 etcd etcd 16406432 Oct 11 2018 etcd

-rwxr-xr-x 1 etcd etcd 14327712 Oct 11 2018 etcdctl

-rwxr-xr-x 1 etcd etcd 981 Oct 29 14:45 etcd-server-startup.sh

-rw-r--r-- 1 etcd etcd 32632 Oct 11 2018 README-etcdctl.md

-rw-r--r-- 1 etcd etcd 5878 Oct 11 2018 README.md

-rw-r--r-- 1 etcd etcd 7892 Oct 11 2018 READMEv2-etcdctl.md

[root@hdss7-22.host.com /opt/etcd]# chown -R etcd. /data/etcd/

[root@hdss7-22.host.com /opt/etcd]# chown -R etcd. /data/logs/etcd-server/

1.4.13 Install supervisor to run etcd in the background

[root@hdss7-22.host.com /opt/etcd]# yum install supervisor -y

[root@hdss7-22.host.com /opt/etcd]# systemctl start supervisord

[root@hdss7-22.host.com /opt/etcd]# systemctl enable supervisord

1.4.20 Create the supervisor program config

[root@hdss7-22.host.com /opt/etcd]# vim /etc/supervisord.d/etcd-server.ini

; the program name must match this host (etcd-server-7-22 here)
[program:etcd-server-7-22]
command=/opt/etcd/etcd-server-startup.sh ; path of the etcd startup script (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/etcd ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running to count as started (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=etcd ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/etcd-server/etcd.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)

1.4.21 Start etcd

[root@hdss7-22.host.com /opt/etcd]# supervisorctl update

etcd-server-7-22: added process group

# etcd takes a little while to start; if it fails, check /data/logs/etcd-server/etcd.stdout.log

[root@hdss7-22.host.com /opt]# supervisorctl status

etcd-server-7-22 STARTING

[root@hdss7-22.host.com /opt]# supervisorctl status

etcd-server-7-22 RUNNING pid 9263, uptime 0:00:52

[root@hdss7-22.host.com /opt]# netstat -lntup |grep etcd # etcd is only up once it listens on both 2379 and 2380

tcp 0 0 10.4.7.22:2379 0.0.0.0:* LISTEN 9264/./etcd

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 9264/./etcd

tcp 0 0 10.4.7.22:2380 0.0.0.0:* LISTEN 9264/./etcd
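The listening check above can be scripted, for example as a post-start check. A minimal sketch that reads `netstat -lntup` (or `ss -lnt`) output on stdin and succeeds only if every given port appears as a listening local port; `require_ports` is a hypothetical name:

```shell
# Hypothetical helper: succeed only if every port given as an argument
# appears in the listening-socket output read from stdin.
# Usage: netstat -lntup | require_ports 2379 2380
require_ports() {
  local listing port
  listing=$(cat)
  for port in "$@"; do
    # ":<port>" followed by whitespace, so 2379 does not also match 23790
    if ! printf '%s\n' "$listing" | grep -Eq ":${port}[[:space:]]"; then
      echo "port ${port} not listening" >&2
      return 1
    fi
  done
}
```

A typical call is `netstat -lntup | require_ports 2379 2380 && echo "etcd is listening"`.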

1.4.22 Check cluster health from any etcd node (once all three etcd members are running)

Method 1:

[root@hdss7-21.host.com /opt/etcd]# ./etcdctl cluster-health

member 988139385f78284 is healthy: got healthy result from http://127.0.0.1:2379

member 5a0ef2a004fc4349 is healthy: got healthy result from http://127.0.0.1:2379

member f4a0cb0a765574a8 is healthy: got healthy result from http://127.0.0.1:2379

cluster is healthy

Method 2:

[root@hdss7-21.host.com /opt/etcd]# ./etcdctl member list # this command also shows which member is the leader

988139385f78284: name=etcd-server-7-22 peerURLs=https://10.4.7.22:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.22:2379 isLeader=false

5a0ef2a004fc4349: name=etcd-server-7-21 peerURLs=https://10.4.7.21:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.21:2379 isLeader=false

f4a0cb0a765574a8: name=etcd-server-7-12 peerURLs=https://10.4.7.12:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.12:2379 isLeader=true
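In etcd 3.1, etcdctl uses the v2 API unless ETCDCTL_API=3 is set, which is why `member list` prints the `isLeader=...` format shown above. A sketch that pulls the leader's name out of that output with awk, for example for a monitoring script; `etcd_leader` is hypothetical, and the v3 API prints a different format, so this assumes the v2 output:

```shell
# Hypothetical helper: print the name of the member marked isLeader=true.
# Input: v2-style "etcdctl member list" lines on stdin.
etcd_leader() {
  awk '/isLeader=true/ {
         for (i = 1; i <= NF; i++)
           if ($i ~ /^name=/) { sub(/^name=/, "", $i); print $i }
       }'
}
```

Usage: `./etcdctl member list | etcd_leader`; with the output above it prints etcd-server-7-12.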

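2. Deploy the kube-apiserver cluster

Cluster plan

| Hostname | Role | IP |
|---|---|---|
| hdss7-21.host.com | kube-apiserver | 10.4.7.21 |
| hdss7-22.host.com | kube-apiserver | 10.4.7.22 |
| hdss7-11.host.com | Layer-4 load balancer | 10.4.7.11 |
| hdss7-12.host.com | Layer-4 load balancer | 10.4.7.12 |

Note: 10.4.7.11 and 10.4.7.12 run nginx as a layer-4 (TCP) load balancer, and keepalived floats a VIP, 10.4.7.10, between them. Clients reach both kube-apiservers through the VIP, which makes the apiserver highly available.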

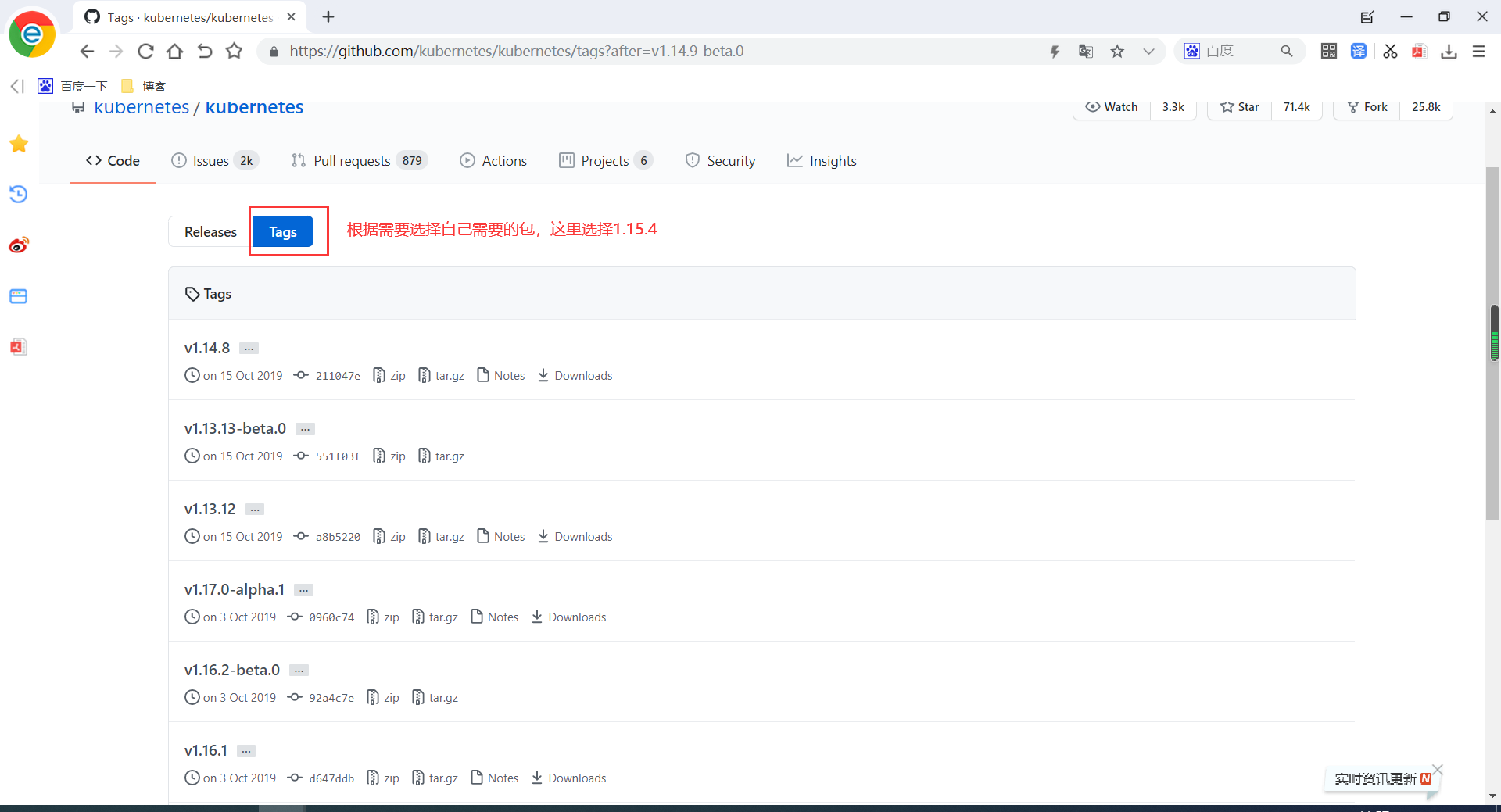

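2.1 Download kubernetes

Download: https://github.com/kubernetes/kubernetes (this guide uses the server binary tarball kubernetes-server-linux-amd64-v1.15.2.tar.gz)

2.2 Install kubernetes (on hdss7-21.host.com)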

[root@hdss7-21.host.com ~]# cd /opt/src/

[root@hdss7-21.host.com /opt/src]# ll

total 442992

-rw-r--r-- 1 root root 9850227 Nov 4 11:06 etcd-v3.1.20-linux-amd64.tar.gz

-rw-r--r-- 1 root root 443770238 Nov 4 14:05 kubernetes-server-linux-amd64-v1.15.2.tar.gz

[root@hdss7-21.host.com /opt/src]# tar zxf kubernetes-server-linux-amd64-v1.15.2.tar.gz -C /opt/

[root@hdss7-21.host.com /opt/src]# cd /opt/

[root@hdss7-21.host.com /opt]# ll

total 0

drwx--x--x 4 root root 28 Nov 4 10:20 containerd

lrwxrwxrwx 1 root root 12 Nov 4 11:07 etcd -> etcd-v3.1.20

drwxr-xr-x 4 etcd etcd 166 Nov 4 11:17 etcd-v3.1.20

drwxr-xr-x 4 root root 79 Aug 5 2019 kubernetes

drwxr-xr-x 2 root root 97 Nov 4 14:05 src

[root@hdss7-21.host.com /opt]# mv kubernetes kubernetes-v1.15.2

[root@hdss7-21.host.com /opt]# ln -s kubernetes-v1.15.2 kubernetes

[root@hdss7-21.host.com /opt]# cd kubernetes

[root@hdss7-21.host.com /opt/kubernetes]# ll

total 27184

drwxr-xr-x 2 root root 6 Aug 5 2019 addons

-rw-r--r-- 1 root root 26625140 Aug 5 2019 kubernetes-src.tar.gz # Kubernetes source tarball

-rw-r--r-- 1 root root 1205293 Aug 5 2019 LICENSES

drwxr-xr-x 3 root root 17 Aug 5 2019 server

[root@hdss7-21.host.com /opt/kubernetes]# rm -f kubernetes-src.tar.gz

[root@hdss7-21.host.com /opt/kubernetes]# cd server/bin/

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# ll

total 1548800

-rwxr-xr-x 1 root root 43534816 Aug 5 2019 apiextensions-apiserver

-rwxr-xr-x 1 root root 100548640 Aug 5 2019 cloud-controller-manager

-rw-r--r-- 1 root root 8 Aug 5 2019 cloud-controller-manager.docker_tag

-rw-r--r-- 1 root root 144437760 Aug 5 2019 cloud-controller-manager.tar # files ending in .tar are docker images

-rwxr-xr-x 1 root root 200648416 Aug 5 2019 hyperkube

-rwxr-xr-x 1 root root 40182208 Aug 5 2019 kubeadm

-rwxr-xr-x 1 root root 164501920 Aug 5 2019 kube-apiserver

-rw-r--r-- 1 root root 8 Aug 5 2019 kube-apiserver.docker_tag

-rw-r--r-- 1 root root 208390656 Aug 5 2019 kube-apiserver.tar # files ending in .tar are docker images

-rwxr-xr-x 1 root root 116397088 Aug 5 2019 kube-controller-manager

-rw-r--r-- 1 root root 8 Aug 5 2019 kube-controller-manager.docker_tag

-rw-r--r-- 1 root root 160286208 Aug 5 2019 kube-controller-manager.tar # files ending in .tar are docker images

-rwxr-xr-x 1 root root 42985504 Aug 5 2019 kubectl

-rwxr-xr-x 1 root root 119616640 Aug 5 2019 kubelet

-rwxr-xr-x 1 root root 36987488 Aug 5 2019 kube-proxy

-rw-r--r-- 1 root root 8 Aug 5 2019 kube-proxy.docker_tag

-rw-r--r-- 1 root root 84282368 Aug 5 2019 kube-proxy.tar # files ending in .tar are docker images

-rwxr-xr-x 1 root root 38786144 Aug 5 2019 kube-scheduler

-rw-r--r-- 1 root root 8 Aug 5 2019 kube-scheduler.docker_tag

-rw-r--r-- 1 root root 82675200 Aug 5 2019 kube-scheduler.tar # files ending in .tar are docker images

-rwxr-xr-x 1 root root 1648224 Aug 5 2019 mounter

# This is a binary installation, so the image tarballs above are not needed and can be deleted

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# rm -f *.tar

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# rm -f *_tag

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# ll

total 884636

-rwxr-xr-x 1 root root 43534816 Aug 5 2019 apiextensions-apiserver

-rwxr-xr-x 1 root root 100548640 Aug 5 2019 cloud-controller-manager

-rwxr-xr-x 1 root root 200648416 Aug 5 2019 hyperkube

-rwxr-xr-x 1 root root 40182208 Aug 5 2019 kubeadm

-rwxr-xr-x 1 root root 164501920 Aug 5 2019 kube-apiserver

-rwxr-xr-x 1 root root 116397088 Aug 5 2019 kube-controller-manager

-rwxr-xr-x 1 root root 42985504 Aug 5 2019 kubectl

-rwxr-xr-x 1 root root 119616640 Aug 5 2019 kubelet

-rwxr-xr-x 1 root root 36987488 Aug 5 2019 kube-proxy

-rwxr-xr-x 1 root root 38786144 Aug 5 2019 kube-scheduler

-rwxr-xr-x 1 root root 1648224 Aug 5 2019 mounter

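2.3 Issue the apiserver client certificate (used for apiserver-to-etcd communication)

When the apiserver talks to the etcd cluster, etcd is the server and the apiserver is the client, so the apiserver needs a client certificate.

Host: hdss7-200.host.com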

[root@hdss7-200.host.com ~]# cd /opt/certs/

[root@hdss7-200.host.com /opt/certs]# vim client-csr.json

{
  "CN": "k8s-node",
  "hosts": [
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "beijing",
      "L": "beijing",
      "O": "od",
      "OU": "ops"
    }
  ]
}

[root@hdss7-200.host.com /opt/certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client-csr.json |cfssl-json -bare client # generate the client certificate and private key

[root@hdss7-200.host.com /opt/certs]# ll

total 52

-rw-r--r-- 1 root root 836 Nov 4 10:49 ca-config.json

-rw-r--r-- 1 root root 993 Nov 4 09:59 ca.csr

-rw-r--r-- 1 root root 389 Nov 4 09:59 ca-csr.json

-rw------- 1 root root 1679 Nov 4 09:59 ca-key.pem

-rw-r--r-- 1 root root 1346 Nov 4 09:59 ca.pem

-rw-r--r-- 1 root root 993 Nov 4 14:23 client.csr # generated certificate signing request

-rw-r--r-- 1 root root 280 Nov 4 14:19 client-csr.json

-rw------- 1 root root 1675 Nov 4 14:23 client-key.pem # generated private key

-rw-r--r-- 1 root root 1363 Nov 4 14:23 client.pem # generated certificate

-rw-r--r-- 1 root root 1062 Nov 4 10:50 etcd-peer.csr

-rw-r--r-- 1 root root 363 Nov 4 10:49 etcd-peer-csr.json

-rw------- 1 root root 1679 Nov 4 10:50 etcd-peer-key.pem

-rw-r--r-- 1 root root 1428 Nov 4 10:50 etcd-peer.pem

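2.4 Issue the apiserver server certificate (used by the apiserver when serving clients)

With this certificate in place, services that connect to the apiserver also have to go through TLS. In "hosts", 10.4.7.10 is the VIP, and the remaining 10.4.7.x addresses are every host an apiserver might be deployed on. Keep such notes out of the file itself: JSON does not allow comments, and cfssl will fail to parse it.

[root@hdss7-200.host.com /opt/certs]# vim apiserver-csr.json

{
  "CN": "apiserver",
  "hosts": [
    "127.0.0.1",
    "192.168.0.1",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local",
    "10.4.7.10",
    "10.4.7.21",
    "10.4.7.22",
    "10.4.7.23"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "beijing",
      "L": "beijing",
      "O": "od",
      "OU": "ops"
    }
  ]
}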

[root@hdss7-200.host.com /opt/certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server apiserver-csr.json | cfssl-json -bare apiserver # generate the certificate and private key

[root@hdss7-200.host.com /opt/certs]# ll

total 68

-rw-r--r-- 1 root root 1245 Nov 4 14:33 apiserver.csr # generated certificate signing request

-rw-r--r-- 1 root root 562 Nov 4 14:32 apiserver-csr.json

-rw------- 1 root root 1679 Nov 4 14:33 apiserver-key.pem # generated private key

-rw-r--r-- 1 root root 1594 Nov 4 14:33 apiserver.pem # generated certificate

-rw-r--r-- 1 root root 836 Nov 4 10:49 ca-config.json

-rw-r--r-- 1 root root 993 Nov 4 09:59 ca.csr

-rw-r--r-- 1 root root 389 Nov 4 09:59 ca-csr.json

-rw------- 1 root root 1679 Nov 4 09:59 ca-key.pem

-rw-r--r-- 1 root root 1346 Nov 4 09:59 ca.pem

-rw-r--r-- 1 root root 993 Nov 4 14:23 client.csr

-rw-r--r-- 1 root root 280 Nov 4 14:19 client-csr.json

-rw------- 1 root root 1675 Nov 4 14:23 client-key.pem

-rw-r--r-- 1 root root 1363 Nov 4 14:23 client.pem

-rw-r--r-- 1 root root 1062 Nov 4 10:50 etcd-peer.csr

-rw-r--r-- 1 root root 363 Nov 4 10:49 etcd-peer-csr.json

-rw------- 1 root root 1679 Nov 4 10:50 etcd-peer-key.pem

-rw-r--r-- 1 root root 1428 Nov 4 10:50 etcd-peer.pem
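The 192.168.0.1 entry in the apiserver certificate's "hosts" is not arbitrary: Kubernetes gives the built-in "kubernetes" Service (the in-cluster address of the apiserver) the first IP of --service-cluster-ip-range, which this guide sets to 192.168.0.0/16. With a different service range, the certificate needs that range's first IP instead. A sketch that computes it in plain POSIX shell arithmetic; `ip2int` and `first_service_ip` are hypothetical helpers:

```shell
# Convert a dotted IPv4 address to a 32-bit integer (POSIX sh, no bash-isms).
ip2int() {
  local rest="$1" a b c
  a=${rest%%.*}; rest=${rest#*.}
  b=${rest%%.*}; rest=${rest#*.}
  c=${rest%%.*}; rest=${rest#*.}
  echo $(( (a << 24) | (b << 16) | (c << 8) | rest ))
}

# Hypothetical helper: first usable address of a CIDR (network address + 1),
# i.e. the ClusterIP of the built-in "kubernetes" Service for that service range.
first_service_ip() {
  local n prefix mask
  n=$(ip2int "${1%/*}")
  prefix=${1#*/}
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  n=$(( (n & mask) + 1 ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}
```

`first_service_ip 192.168.0.0/16` prints 192.168.0.1, the address listed in apiserver-csr.json.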

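2.5 Copy the certificates to each compute node and create the config (copy the certificates and private keys; private key files must be mode 600)

Host: hdss7-21.host.com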

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# mkdir cert

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# cd cert

[root@hdss7-21.host.com /opt/kubernetes/server/bin/cert]# scp hdss7-200:/opt/certs/ca.pem .

[root@hdss7-21.host.com /opt/kubernetes/server/bin/cert]# scp hdss7-200:/opt/certs/ca-key.pem .

[root@hdss7-21.host.com /opt/kubernetes/server/bin/cert]# scp hdss7-200:/opt/certs/client.pem .

[root@hdss7-21.host.com /opt/kubernetes/server/bin/cert]# scp hdss7-200:/opt/certs/client-key.pem .

[root@hdss7-21.host.com /opt/kubernetes/server/bin/cert]# scp hdss7-200:/opt/certs/apiserver.pem .

[root@hdss7-21.host.com /opt/kubernetes/server/bin/cert]# scp hdss7-200:/opt/certs/apiserver-key.pem .

[root@hdss7-21.host.com /opt/kubernetes/server/bin/cert]# ll

total 24

-rw------- 1 root root 1679 Nov 4 14:43 apiserver-key.pem

-rw-r--r-- 1 root root 1594 Nov 4 14:43 apiserver.pem

-rw------- 1 root root 1679 Nov 4 14:42 ca-key.pem

-rw-r--r-- 1 root root 1346 Nov 4 14:41 ca.pem

-rw------- 1 root root 1675 Nov 4 14:43 client-key.pem

-rw-r--r-- 1 root root 1363 Nov 4 14:42 client.pem

2.6 Create the apiserver startup config file (audit policy)

[root@hdss7-21.host.com /opt/kubernetes/server/bin/cert]# cd ..

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# mkdir conf

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# cd conf

[root@hdss7-21.host.com /opt/kubernetes/server/bin/conf]# vi audit.yaml # apiserver audit policy; kube-apiserver.sh loads it via --audit-policy-file, so it must exist before the apiserver starts

apiVersion: audit.k8s.io/v1beta1 # This is required.
kind: Policy
# Don't generate audit events for all requests in RequestReceived stage.
omitStages:
  - "RequestReceived"
rules:
  # Log pod changes at RequestResponse level
  - level: RequestResponse
    resources:
    - group: ""
      # Resource "pods" doesn't match requests to any subresource of pods,
      # which is consistent with the RBAC policy.
      resources: ["pods"]
  # Log "pods/log", "pods/status" at Metadata level
  - level: Metadata
    resources:
    - group: ""
      resources: ["pods/log", "pods/status"]

  # Don't log requests to a configmap called "controller-leader"
  - level: None
    resources:
    - group: ""
      resources: ["configmaps"]
      resourceNames: ["controller-leader"]

  # Don't log watch requests by the "system:kube-proxy" on endpoints or services
  - level: None
    users: ["system:kube-proxy"]
    verbs: ["watch"]
    resources:
    - group: "" # core API group
      resources: ["endpoints", "services"]

  # Don't log authenticated requests to certain non-resource URL paths.
  - level: None
    userGroups: ["system:authenticated"]
    nonResourceURLs:
    - "/api*" # Wildcard matching.
    - "/version"

  # Log the request body of configmap changes in kube-system.
  - level: Request
    resources:
    - group: "" # core API group
      resources: ["configmaps"]
    # This rule only applies to resources in the "kube-system" namespace.
    # The empty string "" can be used to select non-namespaced resources.
    namespaces: ["kube-system"]

  # Log configmap and secret changes in all other namespaces at the Metadata level.
  - level: Metadata
    resources:
    - group: "" # core API group
      resources: ["secrets", "configmaps"]

  # Log all other resources in core and extensions at the Request level.
  - level: Request
    resources:
    - group: "" # core API group
    - group: "extensions" # Version of group should NOT be included.

  # A catch-all rule to log all other requests at the Metadata level.
  - level: Metadata
    # Long-running requests like watches that fall under this rule will not
    # generate an audit event in RequestReceived.
    omitStages:
      - "RequestReceived"

2.7 Create the apiserver startup script

[root@hdss7-21.host.com /opt/kubernetes/server/bin/conf]# vi kube-apiserver.sh

#!/bin/bash
# Relative paths (./cert, ./conf) resolve against /opt/kubernetes/server/bin, the working
# directory set in the supervisor config. Comments cannot follow a trailing "\", so flag notes are here:
#   --apiserver-count      number of apiserver instances in the cluster
#   --audit-log-path       audit log path
#   --audit-policy-file    audit policy (the audit.yaml created in 2.6)
#   --authorization-mode   RBAC (role-based access control)
#   --target-ram-mb        memory limit in MB, used to size apiserver caches
./kube-apiserver \
--apiserver-count 2 \
--audit-log-path /data/logs/kubernetes/kube-apiserver/audit-log \
--audit-policy-file ./conf/audit.yaml \
--authorization-mode RBAC \
--client-ca-file ./cert/ca.pem \
--requestheader-client-ca-file ./cert/ca.pem \
--enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota \
--etcd-cafile ./cert/ca.pem \
--etcd-certfile ./cert/client.pem \
--etcd-keyfile ./cert/client-key.pem \
--etcd-servers https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \
--service-account-key-file ./cert/ca-key.pem \
--service-cluster-ip-range 192.168.0.0/16 \
--service-node-port-range 3000-29999 \
--target-ram-mb=1024 \
--kubelet-client-certificate ./cert/client.pem \
--kubelet-client-key ./cert/client-key.pem \
--log-dir /data/logs/kubernetes/kube-apiserver \
--tls-cert-file ./cert/apiserver.pem \
--tls-private-key-file ./cert/apiserver-key.pem \
--v 2

# For a fuller description of these flags, see the official documentation or ./kube-apiserver --help

[root@hdss7-21.host.com /opt/kubernetes/server/bin/conf]# chmod +x kube-apiserver.sh

[root@hdss7-21.host.com /opt/kubernetes/server/bin/conf]# mkdir -p /data/logs/kubernetes/kube-apiserver # this directory must exist, otherwise the apiserver fails to start later

2.8 Create the supervisor config

[root@hdss7-21.host.com /opt/kubernetes/server/bin/conf]# cd /etc/supervisord.d/

[root@hdss7-21.host.com /etc/supervisord.d]# vi kube-apiserver.ini

; change "7-21" to match each host's IP (e.g. kube-apiserver-7-22 on hdss7-22)
[program:kube-apiserver-7-21]

command=/opt/kubernetes/server/bin/conf/kube-apiserver.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=22 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=false ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

stderr_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stderr.log ; stderr log path, NONE for none; default AUTO

stderr_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stderr_logfile_backups=4 ; # of stderr logfile backups (default 10)

stderr_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stderr_events_enabled=false ; emit events on stderr writes (default false)
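The -7-21 / -7-22 suffix in the supervisor program names is simply the host IP's last two octets, which is why it has to be edited on every machine. A sketch that derives the name from an IP, assuming this guide's <service>-<3rd octet>-<4th octet> convention (etcd-server-7-22, kube-apiserver-7-21); `program_name` is a hypothetical helper:

```shell
# Hypothetical helper: build a supervisor program name from a service name and host IP.
# Usage: program_name <service> <ip>   e.g. program_name kube-apiserver 10.4.7.21
program_name() {
  local rest
  rest=${2#*.*.}                                      # 10.4.7.21 -> 7.21
  printf '%s-%s-%s\n' "$1" "${rest%%.*}" "${rest##*.}"  # -> <service>-7-21
}
```

When copying the .ini file to hdss7-22, something like `sed "s/kube-apiserver-7-21/$(program_name kube-apiserver 10.4.7.22)/" kube-apiserver.ini` would then rewrite the name consistently.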

2.9 Start the service and verify

[root@hdss7-21.host.com /etc/supervisord.d]# cd -

/opt/kubernetes/server/bin/conf

[root@hdss7-21.host.com /opt/kubernetes/server/bin/conf]# cd ..

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# supervisorctl update

kube-apiserver: added process group

[root@hdss7-21.host.com /opt/kubernetes/server/bin]#

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# supervisorctl status

etcd-server-7-21 RUNNING pid 8762, uptime 4:03:01

kube-apiserver RUNNING pid 9250, uptime 0:00:34

2.10 Install the apiserver on hdss7-22.host.com

[root@hdss7-22.host.com ~]# cd /opt/src/

[root@hdss7-22.host.com /opt/src]# ll

total 442992

-rw-r--r-- 1 root root 9850227 Nov 4 11:20 etcd-v3.1.20-linux-amd64.tar.gz

-rw-r--r-- 1 root root 443770238 Nov 4 15:25 kubernetes-server-linux-amd64-v1.15.2.tar.gz

[root@hdss7-22.host.com /opt/src]# tar zxf kubernetes-server-linux-amd64-v1.15.2.tar.gz -C /opt/

[root@hdss7-22.host.com /opt/src]# cd /opt/

[root@hdss7-22.host.com /opt]# mv kubernetes kubernetes-v1.15.2

[root@hdss7-22.host.com /opt]# ln -s kubernetes-v1.15.2/ kubernetes

[root@hdss7-22.host.com /opt]# ll

total 0

drwx--x--x 4 root root 28 Nov 4 10:20 containerd

lrwxrwxrwx 1 root root 12 Nov 4 11:20 etcd -> etcd-v3.1.20

drwxr-xr-x 4 etcd etcd 166 Nov 4 11:22 etcd-v3.1.20

lrwxrwxrwx 1 root root 19 Nov 4 15:26 kubernetes -> kubernetes-v1.15.2/

drwxr-xr-x 4 root root 79 Aug 5 2019 kubernetes-v1.15.2

drwxr-xr-x 2 root root 97 Nov 4 15:25 src

[root@hdss7-22.host.com /opt]# cd kubernetes

[root@hdss7-22.host.com /opt/kubernetes]# ls

addons kubernetes-src.tar.gz LICENSES server

[root@hdss7-22.host.com /opt/kubernetes]# rm -f kubernetes-src.tar.gz

[root@hdss7-22.host.com /opt/kubernetes]# cd server/bin/

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# ls

apiextensions-apiserver cloud-controller-manager.tar kube-apiserver kube-controller-manager kubectl kube-proxy.docker_tag kube-scheduler.docker_tag

cloud-controller-manager hyperkube kube-apiserver.docker_tag kube-controller-manager.docker_tag kubelet kube-proxy.tar kube-scheduler.tar

cloud-controller-manager.docker_tag kubeadm kube-apiserver.tar kube-controller-manager.tar kube-proxy kube-scheduler mounter

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# rm -f *.tar

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# rm -f *_tag

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# ll

total 884636

-rwxr-xr-x 1 root root 43534816 Aug 5 2019 apiextensions-apiserver

-rwxr-xr-x 1 root root 100548640 Aug 5 2019 cloud-controller-manager

-rwxr-xr-x 1 root root 200648416 Aug 5 2019 hyperkube

-rwxr-xr-x 1 root root 40182208 Aug 5 2019 kubeadm

-rwxr-xr-x 1 root root 164501920 Aug 5 2019 kube-apiserver

-rwxr-xr-x 1 root root 116397088 Aug 5 2019 kube-controller-manager

-rwxr-xr-x 1 root root 42985504 Aug 5 2019 kubectl

-rwxr-xr-x 1 root root 119616640 Aug 5 2019 kubelet

-rwxr-xr-x 1 root root 36987488 Aug 5 2019 kube-proxy

-rwxr-xr-x 1 root root 38786144 Aug 5 2019 kube-scheduler

-rwxr-xr-x 1 root root 1648224 Aug 5 2019 mounter

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# mkdir cert

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# cd cert

[root@hdss7-22.host.com /opt/kubernetes/server/bin/cert]# scp hdss7-200:/opt/certs/ca.pem .

[root@hdss7-22.host.com /opt/kubernetes/server/bin/cert]# scp hdss7-200:/opt/certs/ca-key.pem .

[root@hdss7-22.host.com /opt/kubernetes/server/bin/cert]# scp hdss7-200:/opt/certs/client.pem .

[root@hdss7-22.host.com /opt/kubernetes/server/bin/cert]# scp hdss7-200:/opt/certs/client-key.pem .

[root@hdss7-22.host.com /opt/kubernetes/server/bin/cert]# scp hdss7-200:/opt/certs/apiserver.pem .

[root@hdss7-22.host.com /opt/kubernetes/server/bin/cert]# scp hdss7-200:/opt/certs/apiserver-key.pem .

[root@hdss7-22.host.com /opt/kubernetes/server/bin/cert]#

[root@hdss7-22.host.com /opt/kubernetes/server/bin/cert]# cd ..

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# mkdir conf

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# cd conf

[root@hdss7-22.host.com /opt/kubernetes/server/bin/conf]# vi audit.yaml # same content as on hdss7-21

[root@hdss7-22.host.com /opt/kubernetes/server/bin/conf]# vi kube-apiserver.sh # same content as on hdss7-21

[root@hdss7-22.host.com /opt/kubernetes/server/bin/conf]# chmod +x kube-apiserver.sh

[root@hdss7-22.host.com /opt/kubernetes/server/bin/conf]# mkdir -p /data/logs/kubernetes/kube-apiserver

[root@hdss7-22.host.com /opt/kubernetes/server/bin/conf]# cd /etc/supervisord.d/

[root@hdss7-22.host.com /etc/supervisord.d]# vi kube-apiserver.ini

; changed to 7-22 on this host
[program:kube-apiserver-7-22]

command=/opt/kubernetes/server/bin/conf/kube-apiserver.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=22 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=false ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

stderr_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stderr.log ; stderr log path, NONE for none; default AUTO

stderr_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stderr_logfile_backups=4 ; # of stderr logfile backups (default 10)

stderr_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stderr_events_enabled=false ; emit events on stderr writes (default false)

[root@hdss7-22.host.com /etc/supervisord.d]# cd -

/opt/kubernetes/server/bin/conf

[root@hdss7-22.host.com /opt/kubernetes/server/bin/conf]# cd ..

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# supervisorctl update

kube-apiserver-7-22: added process group

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# supervisorctl status

etcd-server-7-22 RUNNING pid 8925, uptime 4:09:11

kube-apiserver-7-22 RUNNING pid 9302, uptime 0:00:24

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# netstat -lntup | grep api

tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 9303/./kube-apiserv

tcp6 0 0 :::6443 :::* LISTEN 9303/./kube-apiserv

3. Deploy the layer-4 reverse proxy for the control-plane nodes

Hosts: hdss7-11.host.com, hdss7-12.host.com

3.1 Install and configure nginx

# yum -y install nginx

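# vi /etc/nginx/nginx.conf # append the following at the end of the file (top level, outside the http {} block)

... (existing content omitted)

stream { # layer-4 (TCP) reverse proxy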

upstream kube-apiserver {

server 10.4.7.21:6443 max_fails=3 fail_timeout=30s;

server 10.4.7.22:6443 max_fails=3 fail_timeout=30s;

}

server {

listen 7443;

proxy_connect_timeout 2s;

proxy_timeout 900s;

proxy_pass kube-apiserver;

}

}

# nginx -t

# systemctl start nginx

# systemctl enable nginx

3.2 Install and configure keepalived

Hosts: hdss7-11.host.com, hdss7-12.host.com

# yum -y install keepalived

# Port-check script: if port 7443 stops listening on the master, keepalived fails over automatically

~]# vi /etc/keepalived/check_port.sh

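#!/bin/bash
# keepalived port-check script
# Usage, in the keepalived config file:
# vrrp_script check_port {                          # define a vrrp_script check
#     script "/etc/keepalived/check_port.sh 6379"   # port to monitor
#     interval 2                                    # check interval, in seconds
# }
CHK_PORT=$1
if [ -n "$CHK_PORT" ]; then
    # Match ":<port>" followed by whitespace, so e.g. 17443 or 74430 is not counted for 7443
    PORT_PROCESS=$(ss -lnt | grep -cE ":${CHK_PORT}[[:space:]]")
    if [ "$PORT_PROCESS" -eq 0 ]; then
        echo "Port $CHK_PORT Is Not Used,End."
        exit 1
    fi
else
    echo "Check Port Cant Be Empty!"
    # A missing argument is a failed check, not a pass
    exit 1
fi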

~]# chmod +x /etc/keepalived/check_port.sh

3.3 Configure the keepalived master

Host: hdss7-11.host.com

[root@hdss7-11.host.com ~]# vi /etc/keepalived/keepalived.conf # remove the default content and add the following

! Configuration File for keepalived

global_defs {

router_id 10.4.7.11

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 251

priority 100

advert_int 1

mcast_src_ip 10.4.7.11

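# Non-preemptive: if the master fails, the backup takes over the VIP, and the VIP does not move back when the master recovers.
# In production the VIP must not move around at will; switch it over only during low-traffic periods.
# Note: the keepalived.conf man page states that nopreempt requires the initial state to be BACKUP; if the VIP does fail back on its own in your environment, set "state BACKUP" on both nodes.
nopreempt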

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

10.4.7.10

}

}

3.4 Configure the keepalived backup

Host: hdss7-12.host.com

[root@hdss7-12.host.com ~]# vi /etc/keepalived/keepalived.conf # remove the default content and add the following

! Configuration File for keepalived

global_defs {

router_id 10.4.7.12

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 251

mcast_src_ip 10.4.7.12

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

10.4.7.10

}

}
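Failover relies on "weight -20" in vrrp_script: when check_port.sh exits non-zero, keepalived lowers that node's priority by 20. With the values above, the master drops from 100 to 80, below the backup's 90, so the backup claims the VIP; any negative weight larger in magnitude than the 10-point gap would do. A small sketch of that arithmetic; `effective_priority` is hypothetical and only models script weights, not every keepalived rule:

```shell
# Hypothetical model of a vrrp_script weight's effect on VRRP priority.
# Usage: effective_priority <base_priority> <weight> <script_ok: yes|no>
#   negative weight: priority drops by |weight| while the script fails
#   positive weight: priority rises by weight while the script succeeds
effective_priority() {
  local base="$1" weight="$2" ok="$3"
  if [ "$weight" -lt 0 ] && [ "$ok" = "no" ]; then
    echo $(( base + weight ))
  elif [ "$weight" -gt 0 ] && [ "$ok" = "yes" ]; then
    echo $(( base + weight ))
  else
    echo "$base"
  fi
}

# Example: nginx stopped on hdss7-11, still running on hdss7-12
master=$(effective_priority 100 -20 no)
backup=$(effective_priority 90 -20 yes)
if [ "$master" -lt "$backup" ]; then
  echo "VIP moves to the backup ($master < $backup)"
fi
```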

3.5 Start keepalived

Hosts: hdss7-11.host.com, hdss7-12.host.com

[root@hdss7-11.host.com ~]# systemctl start keepalived.service

[root@hdss7-11.host.com ~]# systemctl enable keepalived.service

[root@hdss7-12.host.com ~]# systemctl start keepalived.service

[root@hdss7-12.host.com ~]# systemctl enable keepalived.service

[root@hdss7-11.host.com ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:8a:60:c1 brd ff:ff:ff:ff:ff:ff

inet 10.4.7.11/24 brd 10.4.7.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet 10.4.7.10/32 scope global eth0 # the VIP is now present

3.6 Test keepalived failover

[root@hdss7-11.host.com ~]# systemctl stop nginx # stop nginx on 11

[root@hdss7-12.host.com ~]# ip a|grep 10.4.7.10 # the VIP has moved to 12

inet 10.4.7.10/32 scope global eth0

[root@hdss7-11.host.com ~]# systemctl start nginx # start nginx on the master (11) again

[root@hdss7-11.host.com ~]# ip a # the VIP does not move back automatically, because the master's keepalived config sets nopreempt

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:8a:60:c1 brd ff:ff:ff:ff:ff:ff

inet 10.4.7.11/24 brd 10.4.7.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet6 fe80::9b0c:62d2:22eb:3e41/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::59f0:e5a9:c574:795e/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::1995:f2a1:11a8:cb1e/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

# To move the VIP back:

[root@hdss7-11.host.com ~]# systemctl restart keepalived.service

[root@hdss7-12.host.com ~]# systemctl restart keepalived.service

[root@hdss7-11.host.com ~]# ip a

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:8a:60:c1 brd ff:ff:ff:ff:ff:ff

inet 10.4.7.11/24 brd 10.4.7.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet 10.4.7.10/32 scope global eth0 # the VIP is back on 11

4. Deploy controller-manager

Cluster plan

| Hostname | Role | IP |
|---|---|---|
| hdss7-21.host.com | controller-manager | 10.4.7.21 |
| hdss7-22.host.com | controller-manager | 10.4.7.22 |

4.1 Create the startup script

Host: hdss7-21.host.com

[root@hdss7-21.host.com ~]# cd /opt/kubernetes/server/bin/

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# vi kube-controller-manager.sh

#!/bin/sh
# Create the --log-dir directory before the first start (mkdir -p /data/logs/kubernetes/kube-controller-manager)
./kube-controller-manager \
--cluster-cidr 172.7.0.0/16 \
--leader-elect true \
--log-dir /data/logs/kubernetes/kube-controller-manager \
--master http://127.0.0.1:8080 \
--service-account-private-key-file ./cert/ca-key.pem \
--service-cluster-ip-range 192.168.0.0/16 \
--root-ca-file ./cert/ca.pem \
--v 2
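--cluster-cidr (the Pod network, 172.7.0.0/16) and --service-cluster-ip-range (the Service network, 192.168.0.0/16) must not overlap each other or the node network (10.4.7.x). A sketch of an overlap check using POSIX shell arithmetic; `ip2int` and `cidrs_overlap` are hypothetical helpers:

```shell
# Convert a dotted IPv4 address to a 32-bit integer.
ip2int() {
  local rest="$1" a b c
  a=${rest%%.*}; rest=${rest#*.}
  b=${rest%%.*}; rest=${rest#*.}
  c=${rest%%.*}; rest=${rest#*.}
  echo $(( (a << 24) | (b << 16) | (c << 8) | rest ))
}

# Hypothetical helper: succeed (exit 0) if the two CIDR blocks share any address.
# CIDR blocks are either nested or disjoint, so they overlap exactly when
# their network bits agree under the shorter of the two prefixes.
cidrs_overlap() {
  local n1 p1 n2 p2 p mask
  n1=$(ip2int "${1%/*}"); p1=${1#*/}
  n2=$(ip2int "${2%/*}"); p2=${2#*/}
  if [ "$p1" -lt "$p2" ]; then p=$p1; else p=$p2; fi
  mask=$(( (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  [ $(( n1 & mask )) -eq $(( n2 & mask )) ]
}
```

For this cluster, `cidrs_overlap 172.7.0.0/16 192.168.0.0/16` fails (no overlap), which is the desired result.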

4.2 Set file permissions and create the log directory

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# chmod +x kube-controller-manager.sh

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# mkdir -p /data/logs/kubernetes/kube-controller-manager

4.3 Create the supervisor config

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# cd /etc/supervisord.d/

[root@hdss7-21.host.com /etc/supervisord.d]# vi kube-controller-manager.ini

; on other hosts, change "7-21" to match that host's IP
[program:kube-controller-manager-7-21]

command=/opt/kubernetes/server/bin/kube-controller-manager.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-controller-manager/controller.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)
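The program name is the only line of this file that differs between hosts. This is a minimal sketch that derives it from the node IP, assuming the `<third octet>-<fourth octet>` naming used here (7-21 for 10.4.7.21), and rewrites the header of a copied file. `program_name` and `set_program_header` are hypothetical helpers. The in-place edit relies on GNU `sed -i`, as shipped with CentOS 7.

```shell
#!/bin/sh
# program_name: hypothetical helper that builds a supervisor program name
# such as kube-controller-manager-7-21 from a component name and a node IP.
program_name() {
    component="$1"
    ip="$2"
    last="${ip##*.}"      # 10.4.7.21 -> 21
    rest="${ip%.*}"       # 10.4.7.21 -> 10.4.7
    third="${rest##*.}"   # 10.4.7    -> 7
    printf '%s-%s-%s\n' "$component" "$third" "$last"
}

# set_program_header: hypothetical helper that replaces the [program:...] line
# of an existing .ini file in place (GNU sed), e.g. after copying it to another host.
set_program_header() {
    file="$1"
    name="$2"
    sed -i "s/^\[program:.*\]/[program:${name}]/" "$file"
}
```

For example, on hdss7-22: `set_program_header /etc/supervisord.d/kube-controller-manager.ini "$(program_name kube-controller-manager 10.4.7.22)"`.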

4.4 Start the service and verify

[root@hdss7-21.host.com /etc/supervisord.d]# cd -

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# supervisorctl update

kube-controller-manager-7-21: added process group

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# supervisorctl status

etcd-server-7-21 RUNNING pid 8762, uptime 6:24:01

kube-apiserver RUNNING pid 9250, uptime 2:21:34

kube-controller-manager-7-21 RUNNING pid 9763, uptime 0:00:35

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# netstat -lntup |grep contro

tcp6 0 0 :::10252 :::* LISTEN 9764/./kube-control

tcp6 0 0 :::10257 :::* LISTEN 9764/./kube-control
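In the Kubernetes release used here, 10252 is the controller-manager's plain-HTTP port and 10257 its HTTPS secure port. Both should be listening. This is a minimal sketch that turns the `netstat` check into a pass/fail test. `expect_listening` is a hypothetical helper, and it assumes the `netstat -lntup` column layout shown above, with the local address in field 4 and the state in field 6.

```shell
#!/bin/sh
# expect_listening: hypothetical helper. Reads `netstat -lntup` output on stdin
# and succeeds only if every port given as an argument has a LISTEN socket.
# Assumed usage: netstat -lntup | expect_listening 10252 10257
expect_listening() {
    out=$(cat)
    for port in "$@"; do
        # Field 4 is the local address (":::10252" or "0.0.0.0:10252");
        # the port is whatever follows the last colon.
        if ! printf '%s\n' "$out" | awk -v p="$port" '
            $6 == "LISTEN" { n = split($4, a, ":"); if (a[n] == p) found = 1 }
            END { exit !found }'; then
            echo "port $port is not listening" >&2
            return 1
        fi
    done
}
```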

4.5 Repeat the same steps on hdss7-22.host.com

[root@hdss7-22.host.com ~]# cd /opt/kubernetes/server/bin/

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# vi kube-controller-manager.sh

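#!/bin/sh
# Every flag line must end with "\" so the shell passes it to kube-controller-manager.
# Boolean flags take the "=true" form; a separate "true" is not consumed as the value.
# The --log-dir directory is created with mkdir below.
./kube-controller-manager \
  --cluster-cidr 172.7.0.0/16 \
  --leader-elect=true \
  --log-dir /data/logs/kubernetes/kube-controller-manager \
  --master http://127.0.0.1:8080 \
  --service-account-private-key-file ./cert/ca-key.pem \
  --service-cluster-ip-range 192.168.0.0/16 \
  --root-ca-file ./cert/ca.pem \
  --v 2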

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# chmod +x kube-controller-manager.sh

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# mkdir -p /data/logs/kubernetes/kube-controller-manager

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# cd /etc/supervisord.d/

[root@hdss7-22.host.com /etc/supervisord.d]# vi kube-controller-manager.ini

[program:kube-controller-manager-7-22]

command=/opt/kubernetes/server/bin/kube-controller-manager.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-controller-manager/controller.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

[root@hdss7-22.host.com /etc/supervisord.d]# cd -

/opt/kubernetes/server/bin

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# supervisorctl update

kube-controller-manager-7-22: added process group

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# supervisorctl status

etcd-server-7-22 RUNNING pid 8925, uptime 6:28:56

kube-apiserver-7-22 RUNNING pid 9302, uptime 2:20:09

kube-controller-manager-7-22 RUNNING pid 9597, uptime 0:00:35

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# netstat -lntup | grep con

Active Internet connections (only servers)

tcp6 0 0 :::10252 :::* LISTEN 9598/./kube-control

tcp6 0 0 :::10257 :::* LISTEN 9598/./kube-control
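With `--leader-elect` enabled on both nodes, only one controller-manager is active at a time. Assuming the default `endpoints` resource lock of the Kubernetes release used here, the current holder is recorded as a JSON annotation on the `kube-system/kube-controller-manager` Endpoints object. This is a minimal sketch that extracts the holder's node name from that record. `leader_of` is a hypothetical helper, and it assumes `holderIdentity` has the form `<hostname>_<id>`.

```shell
#!/bin/sh
# leader_of: hypothetical helper. Reads a leader-election record (JSON) on
# stdin and prints the hostname part of its holderIdentity.
# Assumed usage, with kubectl talking to the local apiserver:
#   kubectl -n kube-system get endpoints kube-controller-manager \
#     -o jsonpath='{.metadata.annotations.control-plane\.alpha\.kubernetes\.io/leader}' | leader_of
leader_of() {
    # Pull out the value of "holderIdentity", then drop the trailing _<id> part.
    sed -n 's/.*"holderIdentity":"\([^"]*\)".*/\1/p' | sed 's/_[^_]*$//'
}
```

Stopping the leader with `supervisorctl stop` and re-running the check is one way to watch leadership move to the other node.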

5. Deploy kube-scheduler

Cluster plan

| Hostname | Role | IP |
|---|---|---|
| hdss7-21.host.com | kube-scheduler | 10.4.7.21 |
| hdss7-22.host.com | kube-scheduler | 10.4.7.22 |

5.1 Create the startup script

Host: hdss7-21.host.com

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# vi kube-scheduler.sh

#!/bin/sh
# Every flag line must end with "\" so the shell passes it to kube-scheduler.
./kube-scheduler \
  --leader-elect \
  --log-dir /data/logs/kubernetes/kube-scheduler \
  --master http://127.0.0.1:8080 \
  --v 2
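The scheduler script has the same pitfall as the controller-manager one: without a trailing backslash, each flag line would run as a separate command. This is a minimal sketch of a check you could run on both startup scripts before handing them to supervisor. `check_continuations` is a hypothetical helper. It flags any line that starts with `--` when the previous line does not end with `\`, which includes flags separated from the command by a blank line.

```shell
#!/bin/sh
# check_continuations: hypothetical helper. Prints every flag line ("--...")
# whose previous line lacks a trailing "\" and exits 1 if any are found.
check_continuations() {
    awk '
        /^[[:space:]]*--/ && prev !~ /\\$/ { print FILENAME ":" NR ": " $0; bad = 1 }
        { prev = $0 }
        END { exit bad }
    ' "$1"
}
```

For example: `check_continuations kube-scheduler.sh && check_continuations kube-controller-manager.sh`.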

5.2 Make the script executable and create the log directory

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# chmod +x kube-scheduler.sh

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# mkdir -p /data/logs/kubernetes/kube-scheduler

5.3 Create the supervisor configuration

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# vi /etc/supervisord.d/kube-scheduler.ini

[program:kube-scheduler-7-21]

command=/opt/kubernetes/server/bin/kube-scheduler.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

5.4 Start the service and verify

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# supervisorctl update

kube-scheduler-7-21: added process group

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# supervisorctl status

etcd-server-7-21 RUNNING pid 8762, uptime 6:57:55

kube-apiserver RUNNING pid 9250, uptime 2:55:28

kube-controller-manager-7-21 RUNNING pid 9763, uptime 0:34:29

kube-scheduler-7-21 RUNNING pid 9824, uptime 0:01:31

[root@hdss7-21.host.com /opt/kubernetes/server/bin]# netstat -lntup | grep sch

tcp6 0 0 :::10251 :::* LISTEN 9825/./kube-schedul

tcp6 0 0 :::10259 :::* LISTEN 9825/./kube-schedul
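Once all four programs run on a node, the `supervisorctl status` check can be reduced to a single pass/fail command. This is a minimal sketch that assumes the output layout shown above, with the program name in column 1 and the state in column 2. `all_running` is a hypothetical helper.

```shell
#!/bin/sh
# all_running: hypothetical helper. Reads `supervisorctl status` output on
# stdin and succeeds only if every program named as an argument is RUNNING.
# Assumed usage on hdss7-21:
#   supervisorctl status | all_running etcd-server-7-21 kube-apiserver \
#       kube-controller-manager-7-21 kube-scheduler-7-21
all_running() {
    out=$(cat)
    rc=0
    for prog in "$@"; do
        if ! printf '%s\n' "$out" | awk -v p="$prog" '$1 == p && $2 == "RUNNING" { ok = 1 } END { exit !ok }'; then
            echo "$prog is not RUNNING" >&2
            rc=1
        fi
    done
    return $rc
}
```

Unlike `expect_listening`-style port checks, this reports every missing program before failing, so one run lists all the problems on the node.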

5.5 Repeat the same steps on hdss7-22.host.com

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# vi kube-scheduler.sh

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# chmod +x kube-scheduler.sh

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# mkdir -p /data/logs/kubernetes/kube-scheduler

[root@hdss7-22.host.com /opt/kubernetes/server/bin]# vi /etc/supervisord.d/kube-scheduler.ini

[program:kube-scheduler-7-22]

command=/opt/kubernetes/server/bin/kube-scheduler.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)