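ImagesPipeline

Besides text, a Scrapy crawl often needs to download images. Scrapy provides ImagesPipeline for this.

ImagesPipeline also supports the following extra features:

1 Thumbnail generation: configure IMAGES_THUMBS = {'size_name': (width_size, height_size),}

2 Filtering small images: configure IMAGES_MIN_HEIGHT and IMAGES_MIN_WIDTH to drop images that are too small.

For the other features, see the official documentation: https://docs.scrapy.org/en/latest/topics/media-pipeline.html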
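
As a rough sketch, the two options above might look like this in settings.py. The size names small and big and all pixel values are arbitrary choices for illustration, not values taken from this project.

```python
# settings.py (fragment)
IMAGES_STORE = 'images'

# Generate two thumbnails per image; each key names one thumbnail size
IMAGES_THUMBS = {
    'small': (50, 50),
    'big': (270, 270),
}

# Drop images whose height or width is below 110 pixels
IMAGES_MIN_HEIGHT = 110
IMAGES_MIN_WIDTH = 110
```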

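How ImagesPipeline works

1 The spider scrapes the URLs of the images to download and puts them in the item's image_urls field.

2 The spider yields the item, which is passed on to the item pipeline.

3 When ImagesPipeline processes the item, it checks for the image_urls field; if the field is present, the URLs are handed to the Scrapy scheduler and downloader.

4 When the downloads finish, the results are written to another field of the item, images, which holds each image's local path, checksum, and original URL.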
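
To make step 4 concrete, here is a hand-written illustration of what an item might look like once the pipeline has finished. The URL, path, and checksum values are invented, and the exact set of keys can vary between Scrapy versions.

```python
# A plain dict standing in for a processed item (all values are invented)
item = {
    'image_urls': ['http://example.com/pic/1.jpg'],
    'images': [
        {
            'url': 'http://example.com/pic/1.jpg',            # where the image came from
            'path': 'full/0a1b2c3d.jpg',                      # location relative to IMAGES_STORE
            'checksum': 'd41d8cd98f00b204e9800998ecf8427e',   # hash of the image contents
        },
    ],
}

# Collect the local paths of everything that was downloaded
local_paths = [image['path'] for image in item['images']]
print(local_paths)
```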

Example: scraping hero artwork from TGBus LoL

Only the first page is crawled.

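Step 1: items.py

import scrapy


class Happy4Item(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    # URLs of the images to download; read by ImagesPipeline
    image_urls = scrapy.Field()
    # Download results; filled in by ImagesPipeline
    images = scrapy.Field()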

The spider file, lol.py

# -*- coding: utf-8 -*-
import scrapy

from happy4.items import Happy4Item


class LolSpider(scrapy.Spider):
    name = 'lol'
    allowed_domains = ['lol.tgbus.com']
    start_urls = ['http://lol.tgbus.com/tu/yxmt/']

    def parse(self, response):
        # One <li> per image on the list page
        li_list = response.xpath('//div[@class="list cf mb30"]/ul//li')
        for one_li in li_list:
            item = Happy4Item()
            # image_urls must be a list, hence extract() rather than extract_first()
            item['image_urls'] = one_li.xpath('./a/img/@src').extract()
            yield item

Finally, settings.py

BOT_NAME = 'happy4'

SPIDER_MODULES = ['happy4.spiders']
NEWSPIDER_MODULE = 'happy4.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
USER_AGENT = 'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

ITEM_PIPELINES = {
    'scrapy.pipelines.images.ImagesPipeline': 1,
}

# Directory where the downloaded images are stored
IMAGES_STORE = 'images'

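With these settings the images are downloaded to local disk without writing any code in the pipelines file, which greatly reduces the amount of code.

Note: the images pipeline tries to convert every image to JPG; if you read the source you will see that the JPG file type is hard-coded in the file name. To keep images in their original format, use the files pipeline (FilesPipeline) instead.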
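
A minimal sketch of switching to the files pipeline, assuming the item is changed to carry file_urls and files fields (the names FilesPipeline looks for by default). The files directory name is a placeholder.

```python
# settings.py (fragment)
ITEM_PIPELINES = {
    'scrapy.pipelines.files.FilesPipeline': 1,
}

# Directory where downloaded files are stored, in their original format
FILES_STORE = 'files'

# In items.py the item would then declare file_urls and files
# instead of image_urls and images.
```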

Example: scraping pictures from mm131

The target is the following site:

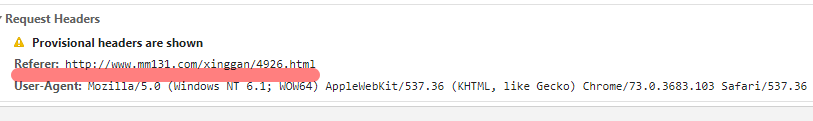

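This site has anti-scraping protection. If you request an image directly, you will find that the URL is redirected elsewhere, or the request simply comes back with a 302 status. The site checks the Referer request header: a request for an image URL must carry a Referer, otherwise it is treated as a crawler and blocked. How do we get around this?

Add the Referer header by hand to every image request.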
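
Outside Scrapy, the idea can be sketched with the standard library alone. The snippet only builds the request and does not send it; both URLs are invented placeholders.

```python
from urllib.request import Request

# Hypothetical gallery page and an image that appears on it
page_url = 'http://www.example.com/gallery/1234.html'
image_url = 'http://img.example.com/pic/1234/1.jpg'

# Tell the server which page the image request is supposed to come from
request = Request(image_url, headers={'Referer': page_url})

print(request.get_header('Referer'))
```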

xingan.py

# -*- coding: utf-8 -*-
import re

import scrapy

from happy5.items import Happy5Item


class XinganSpider(scrapy.Spider):
    name = 'xingan'
    allowed_domains = ['www.mm131.com']
    start_urls = ['http://www.mm131.com/xinggan/']

    def parse(self, response):
        every_html = response.xpath('//div[@class="main"]/dl//dd')
        # Every <dd> except the last one
        for one_html in every_html[0:-1]:
            item = Happy5Item()
            # Link to each gallery
            link = one_html.xpath('./a/@href').extract_first()
            # Title of each gallery
            title = one_html.xpath('./a/img/@alt').extract_first()
            item['title'] = title
            # Follow the link into the gallery
            request = scrapy.Request(url=link, callback=self.parse_one, meta={'item': item})
            yield request

    # The gallery pages under each title
    def parse_one(self, response):
        # Copy the item so that every page yields its own object
        item = response.meta['item'].copy()
        # Total number of pages
        total_page = response.xpath('//div[@class="content-page"]/span[@class="page-ch"]/text()').extract_first()
        num = int(re.findall(r'(\d+)', total_page)[0])
        # Current page number
        now_num = response.xpath('//div[@class="content-page"]/span[@class="page_now"]/text()').extract_first()
        now_num = int(now_num)
        # Image URLs on the current page
        every_pic = response.xpath('//div[@class="content-pic"]/a/img/@src').extract()
        item['image_urls'] = every_pic
        # Referer to send when downloading the images on this page
        item['referer'] = response.url
        yield item
        # If this is not the last page, request the next one
        if now_num < num:
            if now_num == 1:
                # The first page ends in '.html'; insert '_2' before it
                url1 = response.url[0:-5] + '_%d' % (now_num + 1) + '.html'
            else:
                # Later pages already contain '_<n>'; replace the number
                url1 = re.sub(r'_(\d+)', '_' + str(now_num + 1), response.url)
            headers = {
                'referer': self.start_urls[0]
            }
            # Send the request for the next page
            yield scrapy.Request(url=url1, headers=headers, callback=self.parse_one, meta={'item': item})
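
The pagination logic in parse_one can be tried out in isolation. The sketch below assumes the pager text looks like 共12页 and that gallery pages are named 1234.html, 1234_2.html, and so on; the sample text and URLs are invented, and both helper names are hypothetical. Unlike the spider, the regular expression here is anchored to the end of the URL.

```python
import re


def parse_total_pages(text):
    """Pull the first run of digits out of the pager text."""
    return int(re.findall(r'(\d+)', text)[0])


def next_page_url(current_url, current_page):
    """Build the URL of the page that follows current_page."""
    next_page = current_page + 1
    if current_page == 1:
        # Page 1 has no suffix: strip '.html' and append '_2.html'
        return current_url[:-len('.html')] + '_%d.html' % next_page
    # Later pages already end in '_<n>.html': swap the number
    return re.sub(r'_\d+\.html$', '_%d.html' % next_page, current_url)


print(parse_total_pages('共12页'))
print(next_page_url('http://www.example.com/xinggan/1234.html', 1))
print(next_page_url('http://www.example.com/xinggan/1234_2.html', 2))
```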

items.py

import scrapy


class Happy5Item(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    image_urls = scrapy.Field()
    images = scrapy.Field()
    title = scrapy.Field()
    referer = scrapy.Field()

pipelines.py

from scrapy.exceptions import DropItem
from scrapy.http import Request
from scrapy.pipelines.images import ImagesPipeline


class Happy5Pipeline(object):
    def process_item(self, item, spider):
        return item


class QiushiImagePipeline(ImagesPipeline):
    # Add the Referer header when downloading each image
    def get_media_requests(self, item, info):
        for image_url in item['image_urls']:
            headers = {'referer': item['referer']}
            # Pass the item along in meta: file_path() needs its title for the directory name
            yield Request(image_url, meta={'item': item}, headers=headers)

    # Inspect the download results; drop the item if no image was downloaded
    def item_completed(self, results, item, info):
        image_paths = [x['path'] for ok, x in results if ok]
        if not image_paths:
            raise DropItem("Item contains no images")
        return item

    # Customize the file name and path
    # (the keyword-only item argument is accepted for newer Scrapy versions and is unused here)
    def file_path(self, request, response=None, info=None, *, item=None):
        item = request.meta['item']
        image_guid = request.url.split('/')[-1]
        filename = './{}/{}'.format(item['title'], image_guid)
        return filename
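
The naming rule used by file_path can be checked without running a crawl. This is a standalone sketch: image_file_path is a hypothetical helper, the URLs and titles are invented, and the separator replacement is an extra precaution that the pipeline above does not perform.

```python
def image_file_path(title, image_url):
    """Return '<title>/<file name>' for an image URL."""
    # The last URL segment is the file name, e.g. '1.jpg'
    file_name = image_url.split('/')[-1]
    # Replace path separators in the title so it stays a single directory
    safe_title = title.replace('/', '_').replace('\\', '_')
    return '{}/{}'.format(safe_title, file_name)


print(image_file_path('gallery one', 'http://img.example.com/pic/1234/1.jpg'))
print(image_file_path('a/b', 'http://img.example.com/pic/1234/2.jpg'))
```

Grouping files by title keeps each gallery in its own directory under IMAGES_STORE.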

settings.py

BOT_NAME = 'happy5'

SPIDER_MODULES = ['happy5.spiders']
NEWSPIDER_MODULE = 'happy5.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
USER_AGENT = 'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

ITEM_PIPELINES = {
    # 'scrapy.pipelines.images.ImagesPipeline': 1,
    'happy5.pipelines.QiushiImagePipeline': 2,
}

# Directory where the downloaded images are stored
IMAGES_STORE = 'images'

The resulting image files:

Best to go easy on pictures like these.