PoolThreadLocalCache

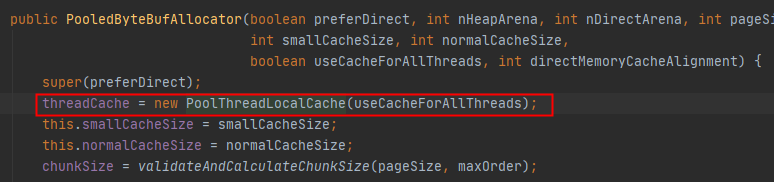

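PooledByteBufAllocator holds a PoolThreadLocalCache object. PoolThreadLocalCache extends FastThreadLocal<PoolThreadCache>, so it is a thread-local variable and every thread gets its own private PoolThreadCache. When memory is requested, the allocator first tries the PoolThreadCache by looking up the array slot that matches the size class index. If the cache holds a suitable memory block, that block is returned directly; only on a miss is the request delegated to PoolArena. In effect a cache layer sits between the thread and the PoolArena, which further speeds up allocation.

When a thread is done with a memory block, the block is not returned straight to its PoolChunk; PoolThreadCache caches it instead. PoolThreadCache does not hold on to such blocks forever, though. Once the number of allocation attempts (allocations) reaches the threshold (freeSweepAllocationThreshold, default: 8192), a trim is triggered and surplus idle blocks are handed back to PoolArena.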
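The cache-first lookup described above can be sketched with plain JDK classes. This is a toy model, not Netty code: the class `CacheFirstSketch`, the single fixed `BLOCK_SIZE`, and the `arenaAllocations` counter are all hypothetical names introduced only for illustration.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.atomic.AtomicInteger;

/** Toy model of a thread-local cache in front of a shared allocator. Not Netty code. */
final class CacheFirstSketch {
    static final int BLOCK_SIZE = 1024;

    /** Counts how often the shared "arena" had to serve a request. */
    final AtomicInteger arenaAllocations = new AtomicInteger();

    /** One private deque of idle blocks per thread. */
    private final ThreadLocal<Deque<byte[]>> cache = ThreadLocal.withInitial(ArrayDeque::new);

    byte[] allocate() {
        byte[] block = cache.get().poll();   // 1. try the calling thread's cache
        if (block != null) {
            return block;
        }
        arenaAllocations.incrementAndGet();  // 2. miss: fall back to the shared arena
        return new byte[BLOCK_SIZE];
    }

    /** A released block is kept in the releasing thread's cache instead of being freed. */
    void release(byte[] block) {
        cache.get().offer(block);
    }

    public static void main(String[] args) {
        CacheFirstSketch pool = new CacheFirstSketch();
        byte[] first = pool.allocate();
        pool.release(first);
        byte[] second = pool.allocate();
        System.out.println("reused=" + (first == second)
                + ", arenaAllocations=" + pool.arenaAllocations.get());
    }
}
```

The second allocation on the same thread is served from the cache, so the shared allocator is touched only once.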

    final class PoolThreadLocalCache extends FastThreadLocal<PoolThreadCache> {
        private final boolean useCacheForAllThreads;

        PoolThreadLocalCache(boolean useCacheForAllThreads) {
            this.useCacheForAllThreads = useCacheForAllThreads;
        }

        // Called whenever the FastThreadLocal value is initialized for a thread.
        // Here it picks the heapArena and directArena for that thread.
        @Override
        protected synchronized PoolThreadCache initialValue() {
            final PoolArena<byte[]> heapArena = leastUsedArena(heapArenas);
            final PoolArena<ByteBuffer> directArena = leastUsedArena(directArenas);

            final Thread current = Thread.currentThread();
            if (useCacheForAllThreads || current instanceof FastThreadLocalThread) {
                final PoolThreadCache cache = new PoolThreadCache(
                        heapArena, directArena, smallCacheSize, normalCacheSize,
                        DEFAULT_MAX_CACHED_BUFFER_CAPACITY, DEFAULT_CACHE_TRIM_INTERVAL);

                if (DEFAULT_CACHE_TRIM_INTERVAL_MILLIS > 0) {
                    final EventExecutor executor = ThreadExecutorMap.currentExecutor();
                    if (executor != null) {
                        executor.scheduleAtFixedRate(trimTask, DEFAULT_CACHE_TRIM_INTERVAL_MILLIS,
                                DEFAULT_CACHE_TRIM_INTERVAL_MILLIS, TimeUnit.MILLISECONDS);
                    }
                }
                return cache;
            }
            // No caching so just use 0 as sizes.
            return new PoolThreadCache(heapArena, directArena, 0, 0, 0, 0);
        }

        @Override
        protected void onRemoval(PoolThreadCache threadCache) {
            threadCache.free(false);
        }

        // Compare numThreadCaches of every PoolArena and bind the thread to the smallest one.
        private <T> PoolArena<T> leastUsedArena(PoolArena<T>[] arenas) {
            if (arenas == null || arenas.length == 0) {
                return null;
            }

            PoolArena<T> minArena = arenas[0];
            for (int i = 1; i < arenas.length; i++) {
                PoolArena<T> arena = arenas[i];
                if (arena.numThreadCaches.get() < minArena.numThreadCaches.get()) {
                    minArena = arena;
                }
            }
            return minArena;
        }
    }

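Each thread binds to exactly one PoolArena of each kind (one heapArena and one directArena) and works only with it for the whole lifetime of the thread. This reflects the divide-and-conquer idea behind jemalloc and improves allocation performance under multithreading. The arena is chosen by comparing the number of thread caches bound to each PoolArena and taking the one with the smallest count. This balances threads across the arenas; otherwise one arena would be shared by many threads while the others sit idle, which wastes memory and causes heavy lock contention.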
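To see how least-used selection spreads threads, here is a minimal sketch. The `Arena` class is a hypothetical stand-in that carries only the counter, and `distribute` is a helper invented for this illustration; the selection loop mirrors the `leastUsedArena` logic quoted above.

```java
import java.util.Arrays;
import java.util.concurrent.atomic.AtomicInteger;

/** Toy model of least-used arena selection. Not Netty code. */
final class LeastUsedArenaSketch {
    /** Stand-in for PoolArena: only the number of bound thread caches. */
    static final class Arena {
        final AtomicInteger numThreadCaches = new AtomicInteger();
    }

    static Arena leastUsed(Arena[] arenas) {
        if (arenas == null || arenas.length == 0) {
            return null;
        }
        Arena min = arenas[0];
        for (int i = 1; i < arenas.length; i++) {
            // Strict "<" means ties go to the arena with the lower index.
            if (arenas[i].numThreadCaches.get() < min.numThreadCaches.get()) {
                min = arenas[i];
            }
        }
        return min;
    }

    /** Binds {@code threads} caches one after another and returns the per-arena counts. */
    static int[] distribute(int arenaCount, int threads) {
        Arena[] arenas = new Arena[arenaCount];
        for (int i = 0; i < arenaCount; i++) {
            arenas[i] = new Arena();
        }
        for (int t = 0; t < threads; t++) {
            leastUsed(arenas).numThreadCaches.incrementAndGet();
        }
        int[] counts = new int[arenaCount];
        for (int i = 0; i < arenaCount; i++) {
            counts[i] = arenas[i].numThreadCaches.get();
        }
        return counts;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(distribute(3, 7)));
    }
}
```

With 3 arenas and 7 threads the counts never differ by more than one, which is the load balancing the paragraph describes.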

The type parameter of PoolThreadLocalCache is PoolThreadCache, so PoolThreadLocalCache#get() returns the PoolThreadCache object. That object is where the cached memory information is stored.

PoolThreadCache

Fields:

    final PoolArena<byte[]> heapArena;
    final PoolArena<ByteBuffer> directArena;

    // Hold the caches for the different size classes, which are tiny, small and normal.
    private final MemoryRegionCache<byte[]>[] smallSubPageHeapCaches;       // length 39, the numSmallSubpagePools mentioned earlier
    private final MemoryRegionCache<ByteBuffer>[] smallSubPageDirectCaches; // length 39, the numSmallSubpagePools mentioned earlier
    private final MemoryRegionCache<byte[]>[] normalHeapCaches;             // length 1
    private final MemoryRegionCache<ByteBuffer>[] normalDirectCaches;       // length 1

    // Threshold that triggers releasing part of the cached blocks.
    private final int freeSweepAllocationThreshold;
    // Set once this PoolThreadCache has been freed, so that free() runs only once.
    private final AtomicBoolean freed = new AtomicBoolean();
    // Number of allocation attempts through this cache; reset to 0 when it reaches freeSweepAllocationThreshold.
    private int allocations;

heapArena and directArena are set up in PoolThreadLocalCache#initialValue(). PoolThreadCache defines how blocks are cached, much like PoolArena does: arrays hold the cached memory information and each array index corresponds to one block size, so the size class index leads straight to the slot that tells whether a usable block exists.

MemoryRegionCache

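    private abstract static class MemoryRegionCache<T> {
        // Capacity: maximum number of cached entries.
        private final int size;
        // Entry queue. It is an MPSC (multi-producer, single-consumer) array queue:
        // many threads may add entries, only one thread polls them.
        private final Queue<Entry<T>> queue;
        // Size class, Small or Normal as described earlier.
        private final SizeClass sizeClass;
        // Successful allocations from this MemoryRegionCache; not the same counter as PoolThreadCache.allocations.
        private int allocations;
        // ...
    }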

Entry

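    static final class Entry<T> {
        final Handle<Entry<?>> recyclerHandle; // object-pool handle used to recycle this Entry
        PoolChunk<T> chunk;                    // the chunk the block belongs to
        ByteBuffer nioBuffer;                  // NIO buffer
        long handle = -1;                      // handle locating the block inside the chunk
        int normCapacity;
        // ...
    }

The handle encodes a lot of information: from it you can derive the offset within the chunk, the subpage offset, whether the block is a subpage, and so on. What the cache stores is exactly this memory information for a block inside a chunk.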

Constructor

    PoolThreadCache(PoolArena<byte[]> heapArena, PoolArena<ByteBuffer> directArena,
                    int smallCacheSize, int normalCacheSize, int maxCachedBufferCapacity,
                    int freeSweepAllocationThreshold) {
        checkPositiveOrZero(maxCachedBufferCapacity, "maxCachedBufferCapacity");
        this.freeSweepAllocationThreshold = freeSweepAllocationThreshold;
        this.heapArena = heapArena;
        this.directArena = directArena;
        if (directArena != null) {
            smallSubPageDirectCaches = createSubPageCaches(
                    smallCacheSize, directArena.numSmallSubpagePools);

            normalDirectCaches = createNormalCaches(
                    normalCacheSize, maxCachedBufferCapacity, directArena);

            directArena.numThreadCaches.getAndIncrement();
        } else {
            // No directArea is configured so just null out all caches
            smallSubPageDirectCaches = null;
            normalDirectCaches = null;
        }
        if (heapArena != null) {
            // Create the caches for the heap allocations
            smallSubPageHeapCaches = createSubPageCaches(
                    smallCacheSize, heapArena.numSmallSubpagePools);

            normalHeapCaches = createNormalCaches(
                    normalCacheSize, maxCachedBufferCapacity, heapArena);

            heapArena.numThreadCaches.getAndIncrement();
        } else {
            // No heapArea is configured so just null out all caches
            smallSubPageHeapCaches = null;
            normalHeapCaches = null;
        }

        // Only check if there are caches in use.
        if ((smallSubPageDirectCaches != null || normalDirectCaches != null
                || smallSubPageHeapCaches != null || normalHeapCaches != null)
                && freeSweepAllocationThreshold < 1) {
            throw new IllegalArgumentException("freeSweepAllocationThreshold: "
                    + freeSweepAllocationThreshold + " (expected: > 0)");
        }
    }

createSubPageCaches

By default there are 39 SubPageMemoryRegionCache instances for the small size class, and each of them caches up to 256 Entry objects.

    private static <T> MemoryRegionCache<T>[] createSubPageCaches(
            int cacheSize, int numCaches) {
        if (cacheSize > 0 && numCaches > 0) {
            @SuppressWarnings("unchecked")
            MemoryRegionCache<T>[] cache = new MemoryRegionCache[numCaches]; // length 39
            for (int i = 0; i < cache.length; i++) {
                // TODO: maybe use cacheSize / cache.length
                cache[i] = new SubPageMemoryRegionCache<T>(cacheSize);
            }
            return cache;
        } else {
            return null;
        }
    }

createNormalCaches

Only sizes up to maxCachedBufferCapacity (32 KiB by default) are cached. idx starts at 39, and with max = 32 KiB only sizeIdx 39 qualifies, so add is called exactly once and the resulting array has a single element.

    private static <T> MemoryRegionCache<T>[] createNormalCaches(
            int cacheSize, int maxCachedBufferCapacity, PoolArena<T> area) {
        if (cacheSize > 0 && maxCachedBufferCapacity > 0) {
            // By default at most maxCachedBufferCapacity = 32 KiB is cached.
            int max = Math.min(area.chunkSize, maxCachedBufferCapacity);
            // Create as many normal caches as we support based on how many sizeIdx we have and what the upper
            // bound is that we want to cache in general.
            List<MemoryRegionCache<T>> cache = new ArrayList<MemoryRegionCache<T>>();
            for (int idx = area.numSmallSubpagePools;
                 idx < area.nSizes && area.sizeIdx2size(idx) <= max; idx++) {
                cache.add(new NormalMemoryRegionCache<T>(cacheSize)); // runs once with the defaults
            }
            return cache.toArray(new MemoryRegionCache[0]);
        } else {
            return null;
        }
    }
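Why the loop above runs only once can be checked with a small size table. The sketch below rebuilds the table under stated assumptions that are not taken from the text: a 16-byte quantum, sizes 16, 32, 48, 64 first, then four evenly spaced sizes per doubling. `SizeTableSketch`, `sizes`, and `countNormalCaches` are hypothetical names, and the real table is computed by Netty itself.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy reconstruction of the size-class table. Not Netty code. */
final class SizeTableSketch {
    /** Builds sizes 16, 32, 48, 64, then four steps per doubling, until {@code limit} is reached. */
    static List<Integer> sizes(int limit) {
        List<Integer> sizes = new ArrayList<>();
        for (int s = 16; s <= 64; s += 16) {
            sizes.add(s);
        }
        while (sizes.get(sizes.size() - 1) < limit) {
            int base = sizes.get(sizes.size() - 1);
            int delta = base / 4; // step inside this group
            for (int k = 1; k <= 4; k++) {
                sizes.add(base + k * delta);
            }
        }
        return sizes;
    }

    /** Same loop shape as createNormalCaches: count indexes from numSmall whose size is <= maxCached. */
    static int countNormalCaches(List<Integer> sizes, int numSmall, int maxCached) {
        int count = 0;
        for (int idx = numSmall; idx < sizes.size() && sizes.get(idx) <= maxCached; idx++) {
            count++;
        }
        return count;
    }

    public static void main(String[] args) {
        List<Integer> table = sizes(64 * 1024);
        System.out.println("sizeIdx 38 -> " + table.get(38) + ", sizeIdx 39 -> " + table.get(39));
        System.out.println("normal caches: " + countNormalCaches(table, 39, 32 * 1024));
    }
}
```

Under these assumptions sizeIdx 38 is 28 KiB (the largest small size), sizeIdx 39 is 32 KiB, and the next size is already 40 KiB, which is why a single NormalMemoryRegionCache is created.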

Trying to allocate from the cache

The buffer-creation method obtains the PoolThreadCache from the PoolThreadLocalCache:

    protected ByteBuf newHeapBuffer(int initialCapacity, int maxCapacity) {
        PoolThreadCache cache = threadCache.get();
        PoolArena<byte[]> heapArena = cache.heapArena;

        final ByteBuf buf;
        if (heapArena != null) {
            buf = heapArena.allocate(cache, initialCapacity, maxCapacity);
        } else {
            buf = PlatformDependent.hasUnsafe() ?
                    new UnpooledUnsafeHeapByteBuf(this, initialCapacity, maxCapacity) :
                    new UnpooledHeapByteBuf(this, initialCapacity, maxCapacity);
        }

        return toLeakAwareBuffer(buf);
    }

FastThreadLocal#get() calls initialize to create the value if the thread does not have one yet.

    public final V get() {
        InternalThreadLocalMap threadLocalMap = InternalThreadLocalMap.get();
        Object v = threadLocalMap.indexedVariable(index);
        if (v != InternalThreadLocalMap.UNSET) {
            return (V) v;
        }

        return initialize(threadLocalMap);
    }

This ends up calling PoolThreadLocalCache#initialValue, which instantiates the PoolThreadCache.

PoolArena#allocate

    PooledByteBuf<T> allocate(PoolThreadCache cache, int reqCapacity, int maxCapacity) {
        PooledByteBuf<T> buf = newByteBuf(maxCapacity);
        allocate(cache, buf, reqCapacity);
        return buf;
    }

    private void allocate(PoolThreadCache cache, PooledByteBuf<T> buf, final int reqCapacity) {
        final int sizeIdx = size2SizeIdx(reqCapacity);

        if (sizeIdx <= smallMaxSizeIdx) {
            tcacheAllocateSmall(cache, buf, reqCapacity, sizeIdx);
        } else if (sizeIdx < nSizes) {
            tcacheAllocateNormal(cache, buf, reqCapacity, sizeIdx);
        } else {
            int normCapacity = directMemoryCacheAlignment > 0
                    ? normalizeSize(reqCapacity) : reqCapacity;
            // Huge allocations are never served via the cache so just call allocateHuge
            allocateHuge(buf, normCapacity);
        }
    }

PoolThreadCache#allocate

The cache is asked to allocate first. As long as a matching cache slot exists, allocations is incremented whether the attempt succeeds or not. Once the count reaches the threshold it is reset to 0 and the caches are trimmed, presumably so that blocks which sit in the cache for a long time without being used do not amount to a memory leak.

    /**
     * Try to allocate a small buffer out of the cache. Returns {@code true} if successful {@code false} otherwise
     */
    boolean allocateSmall(PoolArena<?> area, PooledByteBuf<?> buf, int reqCapacity, int sizeIdx) {
        // Try to allocate a small block and initialize the PooledByteBuf with it.
        return allocate(cacheForSmall(area, sizeIdx), buf, reqCapacity);
    }

    /**
     * Try to allocate a normal buffer out of the cache. Returns {@code true} if successful {@code false} otherwise
     */
    boolean allocateNormal(PoolArena<?> area, PooledByteBuf<?> buf, int reqCapacity, int sizeIdx) {
        return allocate(cacheForNormal(area, sizeIdx), buf, reqCapacity);
    }

    @SuppressWarnings({ "unchecked", "rawtypes" })
    private boolean allocate(MemoryRegionCache<?> cache, PooledByteBuf buf, int reqCapacity) {
        if (cache == null) {
            // no cache found so just return false here
            return false;
        }
        // Delegate the allocation to the MemoryRegionCache.
        boolean allocated = cache.allocate(buf, reqCapacity, this);
        // Has the number of attempts reached the sweep threshold (8192 by default)?
        if (++ allocations >= freeSweepAllocationThreshold) {
            allocations = 0;
            trim();
        }
        return allocated;
    }
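The counting rule in the private allocate method is easy to misread, so here is a minimal sketch of just that rule. `SweepCounterSketch`, `attempt`, and the `trims` field are hypothetical names; the real trim work is replaced by a counter.

```java
/** Toy model of the allocation counter that triggers a trim. Not Netty code. */
final class SweepCounterSketch {
    private final int threshold;
    private int allocations;
    /** How many times a trim would have been triggered. */
    int trims;

    SweepCounterSketch(int threshold) {
        if (threshold < 1) {
            throw new IllegalArgumentException("threshold: " + threshold + " (expected: > 0)");
        }
        this.threshold = threshold;
    }

    /** Records one attempt against an existing cache slot; hits and misses both count. */
    boolean attempt(boolean hit) {
        if (++allocations >= threshold) {
            allocations = 0;
            trims++; // stands in for trim()
        }
        return hit;
    }

    public static void main(String[] args) {
        SweepCounterSketch counter = new SweepCounterSketch(3);
        for (int i = 0; i < 7; i++) {
            counter.attempt(i % 2 == 0);
        }
        System.out.println("trims=" + counter.trims);
    }
}
```

Note that a request whose size has no cache slot at all returns before the counter is touched, so only attempts against an existing slot advance the sweep.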

cacheForSmall and cacheForNormal

These fetch the MemoryRegionCache that matches the arena type (heap or direct) and the size class index.

    private MemoryRegionCache<?> cacheForSmall(PoolArena<?> area, int sizeIdx) {
        if (area.isDirect()) {
            return cache(smallSubPageDirectCaches, sizeIdx);
        }
        return cache(smallSubPageHeapCaches, sizeIdx);
    }

    private MemoryRegionCache<?> cacheForNormal(PoolArena<?> area, int sizeIdx) {
        // We need to substract area.numSmallSubpagePools as sizeIdx is the overall index for all sizes.
        int idx = sizeIdx - area.numSmallSubpagePools;
        if (area.isDirect()) {
            return cache(normalDirectCaches, idx);
        }
        return cache(normalHeapCaches, idx);
    }

cache

Looks up the slot for the given index. If the array has a cache at that position it is returned, otherwise the result is null.

    private static <T> MemoryRegionCache<T> cache(MemoryRegionCache<T>[] cache, int sizeIdx) {
        if (cache == null || sizeIdx > cache.length - 1) {
            return null;
        }
        return cache[sizeIdx];
    }

MemoryRegionCache#allocate

This is where allocation from the cache actually happens: an Entry is polled from the queue, the buffer is initialized through initBuf, and the Entry object is recycled.

    public final boolean allocate(PooledByteBuf<T> buf, int reqCapacity, PoolThreadCache threadCache) {
        Entry<T> entry = queue.poll(); // take an entry
        if (entry == null) {
            return false;
        }
        // Write the memory information into the ByteBuf object.
        initBuf(entry.chunk, entry.nioBuffer, entry.handle, buf, reqCapacity, threadCache);
        entry.recycle(); // return the Entry to the object pool

        // allocations is not thread-safe which is fine as this is only called from the same thread all time.
        ++ allocations; // one more successful allocation
        return true;
    }

At the very beginning the queue holds no entries, so false is returned.

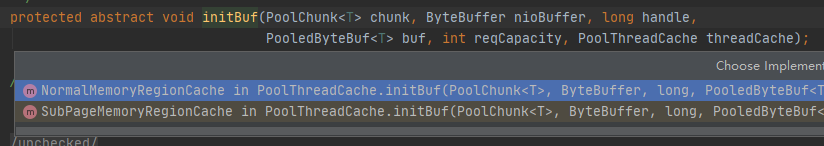

initBuf

initBuf is implemented by two subclasses.

NormalMemoryRegionCache#initBuf

    private static final class NormalMemoryRegionCache<T> extends MemoryRegionCache<T> {
        NormalMemoryRegionCache(int size) {
            super(size, SizeClass.Normal);
        }

        @Override
        protected void initBuf(
                PoolChunk<T> chunk, ByteBuffer nioBuffer, long handle, PooledByteBuf<T> buf, int reqCapacity,
                PoolThreadCache threadCache) {
            chunk.initBuf(buf, nioBuffer, handle, reqCapacity, threadCache);
        }
    }

SubPageMemoryRegionCache#initBuf

    private static final class SubPageMemoryRegionCache<T> extends MemoryRegionCache<T> {
        SubPageMemoryRegionCache(int size) {
            super(size, SizeClass.Small);
        }

        @Override
        protected void initBuf(
                PoolChunk<T> chunk, ByteBuffer nioBuffer, long handle, PooledByteBuf<T> buf, int reqCapacity,
                PoolThreadCache threadCache) {
            chunk.initBufWithSubpage(buf, nioBuffer, handle, reqCapacity, threadCache);
        }
    }

This matches the last step of the regular allocation path: subpages go through initBufWithSubpage and normal blocks go through initBuf. Both methods were covered earlier.

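Recycling memory blocks into the thread-local cache

Calling ByteBuf#release() decrements the reference count. When the count reaches 0 the ByteBuf has to be reclaimed: the ByteBuf object goes to the object pool and the memory block it manages goes back to the memory pool. Before the block reaches the memory pool, however, PoolThreadCache intercepts it and puts it in the thread-local cache, ready for later requests from the same thread.

PoolThreadCache tries to cache the block

Modify the example slightly:

    ByteBuf buffer = ByteBufAllocator.DEFAULT.heapBuffer(28*1024);
    buffer.release();

    @Override
    public boolean release() {
        return handleRelease(updater.release(this));
    }

    private boolean handleRelease(boolean result) {
        if (result) {
            deallocate();
        }
        return result;
    }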
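The release path above hinges on reference counting: deallocate runs only when the count drops to 0. A minimal sketch of that contract follows, assuming a plain AtomicInteger instead of Netty's updater; `RefCountSketch` and its `deallocated` flag are hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Toy model of release-triggers-deallocate. Not Netty code. */
final class RefCountSketch {
    private final AtomicInteger refCnt = new AtomicInteger(1); // a new buffer starts at 1
    boolean deallocated;

    void retain() {
        refCnt.incrementAndGet();
    }

    /** Returns true only for the call that drops the count to 0. */
    boolean release() {
        int remaining = refCnt.decrementAndGet();
        if (remaining < 0) {
            refCnt.incrementAndGet(); // undo the decrement before reporting misuse
            throw new IllegalStateException("already released");
        }
        if (remaining == 0) {
            deallocated = true; // stands in for deallocate()
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        RefCountSketch buf = new RefCountSketch();
        buf.retain();
        System.out.println(buf.release() + " " + buf.release());
    }
}
```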

PooledByteBuf#deallocate

This releases the memory, resets the fields to their defaults, and recycles the buffer object itself into the object pool.

    @Override
    protected final void deallocate() {
        if (handle >= 0) {
            final long handle = this.handle;
            this.handle = -1;
            memory = null;
            chunk.arena.free(chunk, tmpNioBuf, handle, maxLength, cache);
            tmpNioBuf = null;
            chunk = null;
            recycle(); // put this buffer object back into the object pool
        }
    }

PoolArena#free

First the size class is determined. If a cache exists, the block is offered to it; on success the method returns, otherwise the block has to be freed back to the chunk.

    void free(PoolChunk<T> chunk, ByteBuffer nioBuffer, long handle, int normCapacity, PoolThreadCache cache) {
        if (chunk.unpooled) {
            int size = chunk.chunkSize();
            destroyChunk(chunk);
            activeBytesHuge.add(-size);
            deallocationsHuge.increment();
        } else {
            SizeClass sizeClass = sizeClass(handle);
            // Let the thread-local cache try to take the block first.
            if (cache != null && cache.add(this, chunk, nioBuffer, handle, normCapacity, sizeClass)) {
                // cached so not free it.
                return;
            }

            freeChunk(chunk, handle, normCapacity, sizeClass, nioBuffer, false);
        }
    }

PoolThreadCache#add

    boolean add(PoolArena<?> area, PoolChunk chunk, ByteBuffer nioBuffer,
                long handle, int normCapacity, SizeClass sizeClass) {
        int sizeIdx = area.size2SizeIdx(normCapacity);
        MemoryRegionCache<?> cache = cache(area, sizeIdx, sizeClass);
        if (cache == null) { // no matching MemoryRegionCache
            return false;
        }
        return cache.add(chunk, nioBuffer, handle, normCapacity); // cache the block
    }

    private MemoryRegionCache<?> cache(PoolArena<?> area, int sizeIdx, SizeClass sizeClass) {
        switch (sizeClass) {
        case Normal:
            return cacheForNormal(area, sizeIdx);
        case Small:
            return cacheForSmall(area, sizeIdx);
        default:
            throw new Error();
        }
    }

MemoryRegionCache#add

The data to be cached is first wrapped in an Entry, which is then offered to the queue. If the queue is full and the offer fails, the Entry is recycled immediately to avoid a leak and false is returned. If the offer succeeds, true is returned. Entry objects are pooled.

    public final boolean add(PoolChunk<T> chunk, ByteBuffer nioBuffer, long handle, int normCapacity) {
        Entry<T> entry = newEntry(chunk, nioBuffer, handle, normCapacity);
        boolean queued = queue.offer(entry);
        if (!queued) {
            // If it was not possible to cache the chunk, immediately recycle the entry
            entry.recycle();
        }

        return queued;
    }
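The "offer, and recycle on failure" behaviour relies on a bounded queue whose offer returns false when full. The sketch below uses java.util.concurrent.ArrayBlockingQueue as a stand-in for the MPSC queue; `BoundedCacheSketch` and its `recycled` counter are hypothetical.

```java
import java.util.Queue;
import java.util.concurrent.ArrayBlockingQueue;

/** Toy model of a fixed-capacity region cache. Not Netty code. */
final class BoundedCacheSketch {
    private final Queue<long[]> queue;
    /** Entries that could not be cached and were handed back to the (imaginary) object pool. */
    int recycled;

    BoundedCacheSketch(int size) {
        this.queue = new ArrayBlockingQueue<>(size); // offer() returns false once full
    }

    /** Returns true if the handle was cached, false if the caller must free the block itself. */
    boolean add(long handle) {
        long[] entry = {handle};            // stands in for newEntry(...)
        boolean queued = queue.offer(entry);
        if (!queued) {
            recycled++;                     // stands in for entry.recycle()
        }
        return queued;
    }

    public static void main(String[] args) {
        BoundedCacheSketch cache = new BoundedCacheSketch(2);
        System.out.println(cache.add(1L) + " " + cache.add(2L) + " " + cache.add(3L));
    }
}
```

When add returns false, the caller in PoolArena#free falls through to freeChunk, so a full cache never blocks a release.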

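MemoryRegionCache#newEntry

As with ByteBuf earlier, Entry instances are obtained from an object pool.

    @SuppressWarnings("rawtypes")
    private static Entry newEntry(PoolChunk<?> chunk, ByteBuffer nioBuffer, long handle, int normCapacity) {
        Entry entry = RECYCLER.get();
        entry.chunk = chunk;
        entry.nioBuffer = nioBuffer;
        entry.handle = handle;
        entry.normCapacity = normCapacity;
        return entry;
    }

    @SuppressWarnings("rawtypes")
    private static final ObjectPool<Entry> RECYCLER = ObjectPool.newPool(new ObjectCreator<Entry>() {
        @SuppressWarnings("unchecked")
        @Override
        public Entry newObject(Handle<Entry> handle) {
            return new Entry(handle);
        }
    });

That completes adding to the cache. When a buffer is no longer referenced, its block is added to the thread-local cache so that the next request can take it and initialize a buffer directly, without running the allocation algorithm again, which improves efficiency. Concretely, the block information is wrapped in an Entry and stored in the matching slot of the MemoryRegionCache[] array. Each MemoryRegionCache maintains a queue, and that queue is where the Entry objects live.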

Releasing cached memory blocks

PoolThreadCache#trim

    void trim() {
        trim(smallSubPageDirectCaches);
        trim(normalDirectCaches);
        trim(smallSubPageHeapCaches);
        trim(normalHeapCaches);
    }

    private static void trim(MemoryRegionCache<?>[] caches) {
        if (caches == null) {
            return;
        }
        for (MemoryRegionCache<?> c: caches) {
            trim(c);
        }
    }

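MemoryRegionCache#trim

trim() is called at these times:

① From the scheduled task trimTask. It is disabled by default and can be enabled by setting io.netty.allocation.cacheTrimIntervalMillis (time unit: TimeUnit.MILLISECONDS).

② When the number of allocation attempts reaches freeSweepAllocationThreshold (default: 8192).

    public final void trim() {
        // Upper bound on the entries to release: queue capacity minus the
        // allocations served since the last trim.
        int free = size - allocations;
        allocations = 0;

        // We not even allocated all the number that are
        if (free > 0) {
            // Does not necessarily drain the queue: at most `free` entries are released.
            free(free, false);
        }
    }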
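The effect of `size - allocations` is that a busy cache keeps more of its entries than an idle one. A minimal sketch, assuming an ArrayDeque with a manual capacity check in place of the fixed-size queue; `TrimSketch` and `cached()` are hypothetical names.

```java
import java.util.ArrayDeque;
import java.util.Queue;

/** Toy model of MemoryRegionCache-style trimming. Not Netty code. */
final class TrimSketch {
    private final int size;                        // queue capacity
    private final Queue<Integer> queue = new ArrayDeque<>();
    private int allocations;                       // hits since the last trim

    TrimSketch(int size) {
        this.size = size;
    }

    boolean add(int block) {
        return queue.size() < size && queue.offer(block);
    }

    boolean allocate() {
        if (queue.poll() == null) {
            return false;
        }
        allocations++;
        return true;
    }

    /** Frees at most (size - allocations) entries and returns how many were freed. */
    int trim() {
        int free = size - allocations;
        allocations = 0;
        int numFreed = 0;
        while (numFreed < free && queue.poll() != null) {
            numFreed++;
        }
        return numFreed;
    }

    int cached() {
        return queue.size();
    }

    public static void main(String[] args) {
        TrimSketch cache = new TrimSketch(4);
        for (int i = 0; i < 4; i++) {
            cache.add(i);
        }
        cache.allocate();
        System.out.println("freed=" + cache.trim() + ", cached=" + cache.cached());
    }
}
```

A cache that served 3 hits out of a capacity of 4 gives up only 1 entry at the next trim, while a cache with no hits since the last trim is drained completely.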

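Here finalizer is false. So where is true used? PoolThreadCache overrides finalize(), and in that path finalizer is true.

    protected void finalize() throws Throwable {
        try {
            super.finalize();
        } finally {
            free(true);
        }
    }

Whether the call comes from Object#finalize() decides whether the Entry objects need to be recycled. If finalizer is true, the thread is being torn down: PoolThreadCache#finalize() reclaims everything that belongs only to this thread, and objects such as Entry and ObjectPool go away together with the thread, so recycling them is unnecessary. An ordinary release is different and must call entry.recycle(). Suppose the Entry holds the only reference to a PoolChunk: if recycle() were not called, the pooled Entry would keep a strong reference to the PoolChunk, the chunk could never be garbage collected, and memory would leak.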

MemoryRegionCache#free(int, boolean)

Polls entries from the queue and frees them until the queue is empty or max entries have been freed, then returns the number freed.

    private int free(int max, boolean finalizer) {
        int numFreed = 0;
        for (; numFreed < max; numFreed++) {
            Entry<T> entry = queue.poll();
            if (entry != null) {
                freeEntry(entry, finalizer);
            } else {
                // all cleared
                return numFreed;
            }
        }
        return numFreed;
    }

freeEntry

finalizer is true only when the whole PoolThreadCache is being reclaimed.

    @SuppressWarnings({ "unchecked", "rawtypes" })
    private void freeEntry(Entry entry, boolean finalizer) {
        PoolChunk chunk = entry.chunk;
        long handle = entry.handle;
        ByteBuffer nioBuffer = entry.nioBuffer;

        if (!finalizer) {
            // recycle now so PoolChunk can be GC'ed. This will only be done if this is not freed because of
            // a finalizer.
            // Recycling the Entry lets the PoolChunk be garbage collected later.
            // This step is skipped when called from Object#finalize().
            entry.recycle();
        }

        // Hand the memory back.
        chunk.arena.freeChunk(chunk, handle, entry.normCapacity, sizeClass, nioBuffer, finalizer);
    }

PoolArena#freeChunk

    void freeChunk(PoolChunk<T> chunk, long handle, int normCapacity, SizeClass sizeClass, ByteBuffer nioBuffer,
                   boolean finalizer) {
        final boolean destroyChunk;
        synchronized (this) {
            // We only call this if freeChunk is not called because of the PoolThreadCache finalizer as otherwise this
            // may fail due lazy class-loading in for example tomcat.
            if (!finalizer) {
                switch (sizeClass) {
                    case Normal:
                        ++deallocationsNormal;
                        break;
                    case Small:
                        ++deallocationsSmall;
                        break;
                    default:
                        throw new Error();
                }
            }
            destroyChunk = !chunk.parent.free(chunk, handle, normCapacity, nioBuffer);
        }
        if (destroyChunk) {
            // destroyChunk not need to be called while holding the synchronized lock.
            destroyChunk(chunk);
        }
    }

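PoolChunkList#free

In the end the release happens inside the chunk. After the block is freed, the chunk's usage is checked: if too much of it is now free for the current list, the chunk is removed from that list and moved toward the previous lists. If it is already in q000 and cannot move any further, the chunk is not used at all and has to be destroyed, so false is returned. Otherwise true is returned.

    boolean free(PoolChunk<T> chunk, long handle, int normCapacity, ByteBuffer nioBuffer) {
        chunk.free(handle, normCapacity, nioBuffer); // free the block inside the chunk
        if (chunk.freeBytes > freeMaxThreshold) {    // more free bytes than this list allows
            remove(chunk);                           // remove from the current chunk list
            // Move the PoolChunk down the PoolChunkList linked-list.
            return move0(chunk);                     // hand over to the previous chunk list
        }
        return true;
    }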

PoolChunk#free

    void free(long handle, int normCapacity, ByteBuffer nioBuffer) {
        if (isSubpage(handle)) { // subpage allocation
            int sizeIdx = arena.size2SizeIdx(normCapacity);
            PoolSubpage<T> head = arena.findSubpagePoolHead(sizeIdx);

            int sIdx = runOffset(handle);
            PoolSubpage<T> subpage = subpages[sIdx];
            assert subpage != null && subpage.doNotDestroy;

            // Obtain the head of the PoolSubPage pool that is owned by the PoolArena and synchronize on it.
            // This is need as we may add it back and so alter the linked-list structure.
            synchronized (head) {
                if (subpage.free(head, bitmapIdx(handle))) {
                    //the subpage is still used, do not free it
                    return;
                }
                assert !subpage.doNotDestroy;
                // Null out slot in the array as it was freed and we should not use it anymore.
                subpages[sIdx] = null;
            }
        }

        //start free run
        int pages = runPages(handle);

        synchronized (runsAvail) {
            // collapse continuous runs, successfully collapsed runs
            // will be removed from runsAvail and runsAvailMap
            long finalRun = collapseRuns(handle);

            //set run as not used
            finalRun &= ~(1L << IS_USED_SHIFT);
            //if it is a subpage, set it to run
            finalRun &= ~(1L << IS_SUBPAGE_SHIFT);

            insertAvailRun(runOffset(finalRun), runPages(finalRun), finalRun);
            freeBytes += pages << pageShifts;
        }

        if (nioBuffer != null && cachedNioBuffers != null &&
            cachedNioBuffers.size() < PooledByteBufAllocator.DEFAULT_MAX_CACHED_BYTEBUFFERS_PER_CHUNK) {
            cachedNioBuffers.offer(nioBuffer);
        }
    }

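PoolSubpage#free

The slot's bit in the bitmap is cleared so that the next request can reuse it. If no slot was available before this free, meaning the subpage was completely used, the subpage is added back after head; when maxNumElems > 1 the method then returns true, because the subpage is in use again and must not be removed. Otherwise, if numAvail after the free still differs from maxNumElems, other slots are in use and true is returned. If numAvail equals maxNumElems, nothing in this subpage is allocated any more. When it is the only subpage left in the pool besides head, it is kept and true is returned, since removing it would only force the next request to create a new one. When other subpages exist, this one is unlinked from the circular doubly linked list, doNotDestroy is set to false to mark it for destruction, and false is returned.

    boolean free(PoolSubpage<T> head, int bitmapIdx) {
        if (elemSize == 0) {
            return true;
        }
        int q = bitmapIdx >>> 6; // index of the long word inside the bitmap array
        int r = bitmapIdx & 63;  // bit position inside that word
        assert (bitmap[q] >>> r & 1) != 0;
        bitmap[q] ^= 1L << r;

        setNextAvail(bitmapIdx); // remember the freed slot so the next allocation can take it directly

        if (numAvail ++ == 0) {  // the subpage was full; it has room again, so link it after head
            addToPool(head);
            /* When maxNumElems == 1, the maximum numAvail is also 1.
             * Each of these PoolSubpages will go in here when they do free operation.
             * If they return true directly from here, then the rest of the code will be unreachable
             * and they will not actually be recycled. So return true only on maxNumElems > 1. */
            if (maxNumElems > 1) {
                return true;
            }
        }

        if (numAvail != maxNumElems) { // other slots are still in use
            return true;
        } else {
            // Subpage not in use (numAvail == maxNumElems)
            if (prev == next) { // only head and this subpage are left; keep it
                // Do not remove if this subpage is the only one left in the pool.
                return true;
            }

            // Other subpages exist, so remove this one.
            // Remove this subpage from the pool if there are other subpages left in the pool.
            doNotDestroy = false;
            removeFromPool();
            return false;
        }
    }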
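The bit arithmetic at the top of the method can be tried in isolation. The sketch below keeps only the bitmap: `idx >>> 6` selects the 64-bit word and `idx & 63` the bit inside it. `BitmapSketch`, `markUsed`, and `isUsed` are hypothetical helpers, and the double-free check is an addition of this sketch rather than Netty behaviour.

```java
/** Toy model of a slot bitmap backed by long words. Not Netty code. */
final class BitmapSketch {
    final long[] bitmap;

    BitmapSketch(int slots) {
        this.bitmap = new long[(slots + 63) >>> 6]; // 64 slots per long
    }

    void markUsed(int idx) {
        bitmap[idx >>> 6] |= 1L << (idx & 63);
    }

    boolean isUsed(int idx) {
        return ((bitmap[idx >>> 6] >>> (idx & 63)) & 1L) != 0;
    }

    /** Clears the bit of a used slot by XOR, as PoolSubpage#free does. */
    void free(int idx) {
        int q = idx >>> 6; // which long
        int r = idx & 63;  // which bit in that long
        if (!isUsed(idx)) {
            throw new IllegalStateException("slot not in use: " + idx);
        }
        bitmap[q] ^= 1L << r;
    }

    public static void main(String[] args) {
        BitmapSketch subpage = new BitmapSketch(128);
        subpage.markUsed(70);
        System.out.println(Long.toBinaryString(subpage.bitmap[1]));
        subpage.free(70);
        System.out.println(subpage.isUsed(70));
    }
}
```

Slot 70 lands in word 1 (70 >>> 6) at bit 6 (70 & 63), which is why XOR with `1L << 6` clears exactly that slot.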

PoolChunkList#remove

When a chunk's usage has become too low for its chunk list, it is unlinked from that list so it can be moved toward the previous one.

    private void remove(PoolChunk<T> cur) {
        if (cur == head) {
            head = cur.next;
            if (head != null) {
                head.prev = null;
            }
        } else {
            PoolChunk<T> next = cur.next;
            cur.prev.next = next;
            if (next != null) {
                next.prev = cur.prev;
            }
        }
    }

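PoolChunkList#move0

The chunk is moved to the previous chunk list. If it fits there it is added, otherwise it keeps moving toward the front. Once it reaches q000 and still does not fit, false is returned, which means the chunk is to be destroyed.

    private boolean move0(PoolChunk<T> chunk) {
        if (prevList == null) { // no previous chunk list, i.e. this is q000: the chunk can be destroyed
            // There is no previous PoolChunkList so return false which result in having the PoolChunk destroyed and
            // all memory associated with the PoolChunk will be released.
            assert chunk.usage() == 0;
            return false;
        }
        return prevList.move(chunk); // move to the previous chunk list
    }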
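The walk toward q000 can be pictured as stepping down an array of minimum-usage thresholds. The values in `MIN_USAGE` below (1, 25, 50, 75, 100 for q000 through q100) are an assumption made for this sketch and do not come from the text above; `ChunkListWalkSketch`, `settle`, and `DESTROY` are hypothetical names, and qInit is left out.

```java
/** Toy model of a chunk moving down the chunk lists after a free. Not Netty code. */
final class ChunkListWalkSketch {
    /** Assumed minimum usage (percent) of q000, q025, q050, q075, q100. */
    static final int[] MIN_USAGE = {1, 25, 50, 75, 100};
    static final int DESTROY = -1;

    /**
     * Returns the index of the list the chunk ends up in, or DESTROY when it
     * falls below the first list's minimum and there is no previous list.
     */
    static int settle(int listIdx, int usagePercent) {
        while (usagePercent < MIN_USAGE[listIdx]) {
            if (listIdx == 0) {
                return DESTROY; // corresponds to move0 returning false
            }
            listIdx--;          // corresponds to prevList.move(chunk)
        }
        return listIdx;
    }

    public static void main(String[] args) {
        System.out.println(settle(2, 30)); // chunk in q050 whose usage dropped to 30%
        System.out.println(settle(0, 0));  // empty chunk in q000
    }
}
```

The sketch only models the direction of travel after a free; movement in the other direction on allocation is not covered here.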

PoolArena#destroyChunk

For heap memory the chunk is simply left to the JVM garbage collector:

    protected void destroyChunk(PoolChunk<byte[]> chunk) {
        // Rely on GC.
    }

For off-heap memory, how the chunk is released depends on whether a cleaner is used:

    protected void destroyChunk(PoolChunk<ByteBuffer> chunk) {
        if (PlatformDependent.useDirectBufferNoCleaner()) {
            PlatformDependent.freeDirectNoCleaner(chunk.memory);
        } else {
            PlatformDependent.freeDirectBuffer(chunk.memory);
        }
    }

Without a cleaner

    public static void freeDirectNoCleaner(ByteBuffer buffer) {
        assert USE_DIRECT_BUFFER_NO_CLEANER;

        int capacity = buffer.capacity();
        PlatformDependent0.freeMemory(PlatformDependent0.directBufferAddress(buffer));
        decrementMemoryCounter(capacity);
    }

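PlatformDependent0#freeMemory

The address obtained through PlatformDependent0#directBufferAddress is passed to freeMemory, which in the end calls the Unsafe method, that is, the native method freeMemory0.

    static void freeMemory(long address) {
        UNSAFE.freeMemory(address);
    }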

With a cleaner

    public static void freeDirectBuffer(ByteBuffer buffer) {
        CLEANER.freeDirectBuffer(buffer);
    }

CLEANER

Which implementation is used depends on the platform.

    if (!isAndroid()) {
        // only direct to method if we are not running on android.
        // See https://github.com/netty/netty/issues/2604
        if (javaVersion() >= 9) {
            CLEANER = CleanerJava9.isSupported() ? new CleanerJava9() : NOOP;
        } else {
            CLEANER = CleanerJava6.isSupported() ? new CleanerJava6() : NOOP;
        }
    } else {
        CLEANER = NOOP;
    }

PoolThreadLocalCache#onRemoval

How can a method be invoked when a thread finishes? That is what wrapping the task in a FastThreadLocalRunnable achieves.

    final class FastThreadLocalRunnable implements Runnable {
        private final Runnable runnable;

        private FastThreadLocalRunnable(Runnable runnable) {
            this.runnable = ObjectUtil.checkNotNull(runnable, "runnable");
        }

        @Override
        public void run() {
            try {
                runnable.run();
            } finally {
                FastThreadLocal.removeAll();
            }
        }

        static Runnable wrap(Runnable runnable) {
            return runnable instanceof FastThreadLocalRunnable ? runnable : new FastThreadLocalRunnable(runnable);
        }
    }

The wrapping happens at the very beginning, in DefaultThreadFactory#newThread, which decorates the plain Runnable as a FastThreadLocalRunnable. That way the caches are released once the task has finished running. It is a textbook use of the decorator pattern.

    @Override
    public Thread newThread(Runnable r) {
        Thread t = newThread(FastThreadLocalRunnable.wrap(r), prefix + nextId.incrementAndGet());
        try {
            if (t.isDaemon() != daemon) {
                t.setDaemon(daemon);
            }

            if (t.getPriority() != priority) {
                t.setPriority(priority);
            }
        } catch (Exception ignored) {
            // Doesn't matter even if failed to set.
        }
        return t;
    }

    protected Thread newThread(Runnable r, String name) {
        return new FastThreadLocalThread(threadGroup, r, name);
    }

    public static void removeAll() {
        InternalThreadLocalMap threadLocalMap = InternalThreadLocalMap.getIfSet();
        if (threadLocalMap == null) {
            return;
        }

        try {
            Object v = threadLocalMap.indexedVariable(variablesToRemoveIndex);
            if (v != null && v != InternalThreadLocalMap.UNSET) {
                @SuppressWarnings("unchecked")
                Set<FastThreadLocal<?>> variablesToRemove = (Set<FastThreadLocal<?>>) v;
                FastThreadLocal<?>[] variablesToRemoveArray =
                        variablesToRemove.toArray(new FastThreadLocal[0]);
                for (FastThreadLocal<?> tlv: variablesToRemoveArray) {
                    tlv.remove(threadLocalMap);
                }
            }
        } finally {
            InternalThreadLocalMap.remove();
        }
    }

    public final void remove(InternalThreadLocalMap threadLocalMap) {
        if (threadLocalMap == null) {
            return;
        }

        Object v = threadLocalMap.removeIndexedVariable(index);
        removeFromVariablesToRemove(threadLocalMap, this);

        if (v != InternalThreadLocalMap.UNSET) {
            try {
                onRemoval((V) v);
            } catch (Exception e) {
                PlatformDependent.throwException(e);
            }
        }
    }
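The decorator idea behind FastThreadLocalRunnable can be reduced to a few lines: run the task, then run a cleanup hook in finally. In this sketch the cleanup is an arbitrary Runnable passed in by the caller rather than FastThreadLocal.removeAll(); `CleanupRunnableSketch` is a hypothetical name.

```java
/** Toy decorator that always runs a cleanup hook after the wrapped task. Not Netty code. */
final class CleanupRunnableSketch implements Runnable {
    private final Runnable task;
    private final Runnable cleanup;

    private CleanupRunnableSketch(Runnable task, Runnable cleanup) {
        this.task = task;
        this.cleanup = cleanup;
    }

    @Override
    public void run() {
        try {
            task.run();
        } finally {
            cleanup.run(); // runs even if the task throws
        }
    }

    /** Wraps the task unless it is already wrapped, so cleanup is not applied twice. */
    static Runnable wrap(Runnable task, Runnable cleanup) {
        return task instanceof CleanupRunnableSketch ? task : new CleanupRunnableSketch(task, cleanup);
    }

    public static void main(String[] args) throws InterruptedException {
        Thread t = new Thread(wrap(
                () -> System.out.println("task"),
                () -> System.out.println("thread-local state released")));
        t.start();
        t.join();
    }
}
```

Because the hook sits in finally, the per-thread state is released whether the task returns normally or fails with an exception.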

As mentioned earlier, PoolThreadLocalCache releases the cache automatically when the thread has finished its work. The hook that does it is onRemoval.

    protected void onRemoval(PoolThreadCache threadCache) {
        threadCache.free(false);
    }

PoolThreadCache#free(boolean finalizer)

Every cache array is released here as well. The total number of freed entries is collected, and the thread-cache counters of the arenas are decremented.

    void free(boolean finalizer) {
        // As free() may be called either by the finalizer or by FastThreadLocal.onRemoval(...) we need to ensure
        // we only call this one time.
        if (freed.compareAndSet(false, true)) {
            int numFreed = free(smallSubPageDirectCaches, finalizer) +
                    free(normalDirectCaches, finalizer) +
                    free(smallSubPageHeapCaches, finalizer) +
                    free(normalHeapCaches, finalizer);

            if (numFreed > 0 && logger.isDebugEnabled()) {
                logger.debug("Freed {} thread-local buffer(s) from thread: {}", numFreed,
                        Thread.currentThread().getName());
            }

            if (directArena != null) {
                directArena.numThreadCaches.getAndDecrement();
            }

            if (heapArena != null) {
                heapArena.numThreadCaches.getAndDecrement();
            }
        }
    }

    private static int free(MemoryRegionCache<?>[] caches, boolean finalizer) {
        if (caches == null) {
            return 0;
        }

        int numFreed = 0;
        for (MemoryRegionCache<?> c: caches) {
            numFreed += free(c, finalizer);
        }
        return numFreed;
    }

    private static int free(MemoryRegionCache<?> cache, boolean finalizer) {
        if (cache == null) {
            return 0;
        }
        return cache.free(finalizer);
    }
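The `freed.compareAndSet(false, true)` guard is what makes it safe for both the finalizer and onRemoval to call free. A minimal sketch of that guard, with the actual release work replaced by a counter; `FreeOnceSketch` and `releases` are hypothetical names.

```java
import java.util.concurrent.atomic.AtomicBoolean;

/** Toy model of a free() that runs its body at most once. Not Netty code. */
final class FreeOnceSketch {
    private final AtomicBoolean freed = new AtomicBoolean();
    /** How many times the release work actually ran. */
    int releases;

    /** Returns true only for the first caller; later calls are no-ops. */
    boolean free() {
        if (freed.compareAndSet(false, true)) {
            releases++; // stands in for freeing the cache arrays and decrementing the counters
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        FreeOnceSketch cache = new FreeOnceSketch();
        System.out.println(cache.free() + " " + cache.free() + " releases=" + cache.releases);
    }
}
```

Without the guard, numThreadCaches would be decremented twice for one thread, which would skew the least-used arena selection.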

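Back where we were: the total number of freed entries is returned.

What is ultimately released is a whole chunk. Memory is allocated from and released into the cache, and the cache lives in a thread-local variable. When the thread goes away, its thread-local variables are cleaned up and the corresponding cache is released. The chunk itself is not necessarily freed, though, because several threads may share one chunk and other threads may still be using it.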