1 Spark读HBase

Spark读HBase黑名单数据,过滤出当日新增userid,并与mysql黑名单表内userid去重后,写入mysql。

def main(args: Array[String]): Unit = {

@volatile var broadcastMysqlUserids: Broadcast[Array[String]] = null

val today = args(0)

val sourceHBaseTable = PropertiesUtil.getProperty("anticheat.blacklist.hbase.tbale")

val sinkMysqlTable = PropertiesUtil.getProperty("anticheat.blacklist.mysql.dbtable")

val zookeeper = PropertiesUtil.getProperty("anticheat.blacklist.zookeeper.quorum")

val zkport = PropertiesUtil.getProperty("anticheat.blacklist.zookeeper.port")

val znode = PropertiesUtil.getProperty("anticheat.blacklist.zookeeper.znode")

//创建SparkSession

val sparkconf = new SparkConf().setAppName("").set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")

val sc = new SparkContext(sparkconf)

val spark = AnticheatUtil.SparkSessionSingleton.getInstance(sc.getConf)

//配置hbase参数

val conf = HBaseConfiguration.create

conf.set("hbase.zookeeper.quorum", zookeeper)

conf.set("hbase.zookeeper.property.clientPort", zkport)

conf.set("zookeeper.znode.parent", znode)

conf.set(TableInputFormat.INPUT_TABLE, sourceHBaseTable)

// 从数据源获取数据

val hbaseRDD = sc.newAPIHadoopRDD(conf,classOf[TableInputFormat],classOf[ImmutableBytesWritable],classOf[Result])

//读取mysql表,并将mysql表中的userid广播出去,用于去重

broadcastMysqlUserids = get_mysql_user_blacklist(spark,sinkMysqlTable)

//获取当日新增userid数据组装成与mysql表结构一致的对象rdd

val records_userid_rdd = get_new_blacklist_rdd(hbaseRDD,today,broadcastMysqlUserids)

//将当日新增userid数据存入mysql

save_blacklist_to_mysql(records_userid_rdd,today,spark,sinkMysqlTable)

}

2 Spark读MySQL表广播出去

/**

* Spark读Mysql用户黑名单表,将黑名单中所有userid赋予广播变量

* @param spark

* @return

*/

def get_mysql_user_blacklist(spark: SparkSession,table :String) :Broadcast[Array[String]] = {

@volatile var broadcastMysqlUserids: Broadcast[Array[String]] = null

val url = PropertiesUtil.getProperty("anticheat.blacklist.mysql.url")

val user = PropertiesUtil.getProperty("anticheat.blacklist.mysql.user")

val password = PropertiesUtil.getProperty("anticheat.blacklist.mysql.password")

import spark.implicits._

val mysql_userids_rdd = spark.sqlContext.read

.format("jdbc")

.option("url",url)

.option("dbtable",table)

.option("user",user)

.option("password",password)

.load()

.map(record => {

val userid = record.getString(0)

userid

})

if(broadcastMysqlUserids !=null){

broadcastMysqlUserids.unpersist()

}

broadcastMysqlUserids = spark.sparkContext.broadcast(mysql_userids_rdd.collect())

println(s"broadcastMysqlUserids.size= ${broadcastMysqlUserids.value.size}")

broadcastMysqlUserids

}

3 构建黑名单数据对象rdd

/**

* 构建新增userid数据写入mysql

* @param hbaseRDD

* @param today

* @return

*/

def get_new_blacklist_rdd(hbaseRDD: RDD[(ImmutableBytesWritable, Result)],today: String,broadcastMysqlUserids: Broadcast[Array[String]]): RDD[BlackList] = {

val records_userid_rdd : RDD[BlackList] = hbaseRDD.filter(line =>{

//过滤出当日新增userid

var flag = false //默认非当日新增

val userid = Bytes.toString(line._2.getRow)

val dt = Bytes.toString(line._2.getValue(Bytes.toBytes("user"), Bytes.toBytes("dt")))

val did_dt = Bytes.toString(line._2.getValue(Bytes.toBytes("user"), Bytes.toBytes("did_dt")))

/* 判断为当日新增userid同时需满足三个条件:

1. 用户维度加入时间dt=today

2. 或者用户维度加入时间dt=null 且设备维度加入时间did_dt=today

3. 并且不在mysql黑名单表中

*/

if(today.equals(dt) || (dt==null && today.equals(did_dt))){

//broadcastMysqlUserids.value.search(userid).isInstanceOf[InsertionPoint]调用scala 二分查找函数,注意此函数找到返回false

if(broadcastMysqlUserids.value.search(userid).isInstanceOf[InsertionPoint]){

//以上三个条件全满足,表示为当日新增,flag 赋值为 true

flag = true

}

}

flag

}).map(record =>{

//获取新增用户userid,加入黑名单时间设为today,其余字段设为默认值

val userid = Bytes.toString(record._2.getRow)

val day = Integer.parseInt(today)

BlackList(userid,day,null,0,"system")

})

records_userid_rdd

}

case class BlackList(userid: String, dt: Int, update_time: Timestamp,delete_flag: Int,operator : String)

4 Spark写MySQL

/**

* 将userid黑名单数据写入mysql

* @param blacklist_rdd

* @param today

* @param spark

*/

def save_blacklist_to_mysql(blacklist_rdd: RDD[BlackList],today: String,spark: SparkSession,table :String): Unit ={

val url = PropertiesUtil.getProperty("anticheat.blacklist.mysql.url")

val user = PropertiesUtil.getProperty("anticheat.blacklist.mysql.user")

val password = PropertiesUtil.getProperty("anticheat.blacklist.mysql.password")

import spark.implicits._

val records_userid_dataset = blacklist_rdd.toDS()

records_userid_dataset.write

.format("jdbc")

.option("url",url)

.option("dbtable",table)

.option("user",user)

.option("password",password)

.mode(SaveMode.Append)

.save()

}

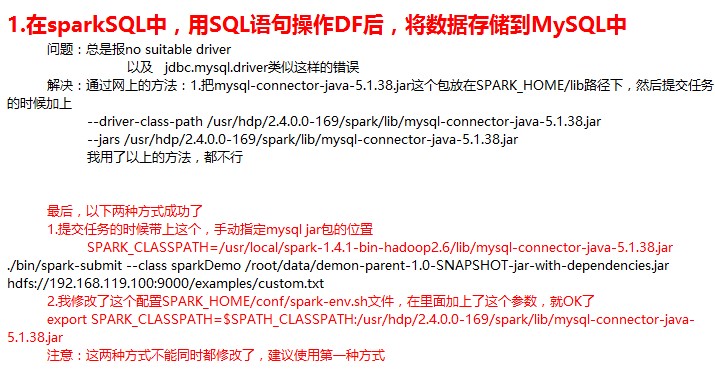

5 注意问题

数据存入Mysql注意事项

尽量先设置好存储模式

默认为SaveMode.ErrorIfExists模式,该模式下,如果数据库中已经存在该表,则会直接报异常,导致数据不能存入数据库.另外三种模式如下:

SaveMode.Append 如果表已经存在,则追加在该表中;若该表不存在,则会先创建表,再插入数据;

SaveMode.Overwrite 重写模式,其实质是先将已有的表及其数据全都删除,再重新创建该表,最后插入新的数据;

SaveMode.Ignore 若表不存在,则创建表,并存入数据;在表存在的情况下,直接跳过数据的存储,不会报错。