I. Installing TensorFlow

On Windows, open a Command Prompt (cmd) and run the following command. It downloads and installs the TensorFlow package from the Douban PyPI mirror:

```shell
python -m pip install tensorflow -i https://pypi.douban.com/simple
```

Then start a Python interpreter and enter `import tensorflow as tf`. If no error is reported, the installation succeeded.
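You can also run the same check from a script. The sketch below uses only the standard library to see whether the `tensorflow` package can be located, and prints the installed version if it is found. The version number tells you whether the 1.x or the 2.x API applies. The function name `tensorflow_available` is illustrative and is not part of TensorFlow.

```python
import importlib.util


def tensorflow_available() -> bool:
    """Return True if the tensorflow package can be located on this Python path."""
    return importlib.util.find_spec("tensorflow") is not None


if __name__ == "__main__":
    if tensorflow_available():
        import tensorflow as tf
        # A version starting with "2." means the tf.compat.v1 API is needed for Session code
        print("TensorFlow version:", tf.__version__)
    else:
        print("TensorFlow is not installed in this environment")
```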

II. Basic TensorFlow Operations

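3. Open the basic-operations.py file and write the basic TensorFlow operations code. First, import the TensorFlow module with the alias tf.

Note: the step-by-step snippets use the TensorFlow 1.x API (`tf.Session`, `tf.placeholder`). A plain `pip install tensorflow` now installs TensorFlow 2.x. There, these names live under `tf.compat.v1`, and graph mode must be enabled with `tf.compat.v1.disable_eager_execution()`. The complete code at the end of this section does both.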

```python
import tensorflow as tf
import os

# Make only GPU 0 visible to TensorFlow
os.environ["CUDA_VISIBLE_DEVICES"] = "0"
```
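The CUDA runtime reads `CUDA_VISIBLE_DEVICES` when it initializes. It is therefore safest to set the variable before TensorFlow touches a GPU, which in practice means before `import tensorflow`. A setting of "0" exposes only the first GPU. The commonly used value "-1" hides every GPU and forces CPU execution. The helper `select_gpu` below is a hypothetical convenience wrapper, not a TensorFlow function.

```python
import os
from typing import Optional


def select_gpu(index: Optional[int]) -> str:
    """Expose only GPU `index` to CUDA, or hide every GPU when index is None."""
    value = "-1" if index is None else str(index)
    os.environ["CUDA_VISIBLE_DEVICES"] = value
    return value


select_gpu(0)  # same effect as os.environ["CUDA_VISIBLE_DEVICES"] = "0"
# import tensorflow as tf  # import TensorFlow only after the variable is set
```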

4. Build the computation graph by creating two constant nodes, a and b, with values 2 and 3:

```python
a = tf.constant(2)
b = tf.constant(3)
```

5. Create a Session object and call its run method to execute the graph:

```python
with tf.Session() as sess:
    print("a:%i" % sess.run(a), "b:%i" % sess.run(b))
    print("Addition with constants: %i" % sess.run(a + b))
    print("Multiplication with constant:%i" % sess.run(a * b))
```

6. When the code is complete, right-click inside basic-operations.py and choose Run 'basic-operations' to execute the file.

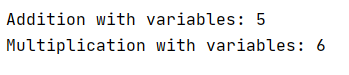

7. The output is:

8. Use placeholders (not Variables) to define graph inputs a and b. Their values are supplied only when the graph is run:

```python
a = tf.placeholder(tf.int16)
b = tf.placeholder(tf.int16)
```

9. Use TensorFlow's add and multiply functions to compute the sum and product of a and b:

```python
add = tf.add(a, b)
mul = tf.multiply(a, b)
```

10. Create a Session object and call its run method to execute the graph, feeding values for the placeholders through feed_dict:

```python
with tf.Session() as sess:
    print("Addition with variables: %i" % sess.run(add, feed_dict={a: 2, b: 3}))
    print("Multiplication with variables: %i" % sess.run(mul, feed_dict={a: 2, b: 3}))
```
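The build-then-run idea behind placeholders and `feed_dict` can be seen in a toy pure-Python imitation of a deferred computation graph. This is only an analogy and not how TensorFlow is implemented. `Placeholder`, `Op`, and `run` are hypothetical names chosen for the illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple, Union


@dataclass(eq=False)  # eq=False keeps identity hashing, so nodes can be dict keys
class Placeholder:
    name: str


@dataclass(eq=False)
class Op:
    fn: Callable[..., int]
    inputs: Tuple["Node", ...]


Node = Union[Placeholder, Op, int]


def run(node: Node, feed_dict: Dict[Placeholder, int]) -> int:
    """Evaluate `node` recursively, taking placeholder values from feed_dict."""
    if isinstance(node, Placeholder):
        if node not in feed_dict:
            raise KeyError(f"You must feed a value for placeholder {node.name!r}")
        return feed_dict[node]
    if isinstance(node, Op):
        return node.fn(*(run(i, feed_dict) for i in node.inputs))
    return node  # a plain int acts as a constant node


a = Placeholder("a")
b = Placeholder("b")
add = Op(lambda x, y: x + y, (a, b))  # building the graph computes nothing yet
mul = Op(lambda x, y: x * y, (a, b))

print(run(add, feed_dict={a: 2, b: 3}))  # 5
print(run(mul, feed_dict={a: 2, b: 3}))  # 6
```

Running `add` without feeding `a` raises an error. TensorFlow behaves the same way when a placeholder is not fed.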

11. Append the code from steps 8, 9, and 10 to basic-operations.py and run the file again. The output is:

12. Build a computation graph with two matrix constant nodes, matrix1 (1×2) and matrix2 (2×1), with values [[3.,3.]] and [[2.],[2.]]:

```python
matrix1 = tf.constant([[3., 3.]])
matrix2 = tf.constant([[2.], [2.]])
```

13. Build the matrix multiplication operation:

```python
product = tf.matmul(matrix1, matrix2)
```
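The shapes explain the result. A 1×2 matrix times a 2×1 matrix gives a 1×1 matrix, and its single entry is 3·2 + 3·2 = 12. The NumPy computation below is only a cross-check and is not part of the original code:

```python
import numpy as np

matrix1 = np.array([[3., 3.]])    # shape (1, 2)
matrix2 = np.array([[2.], [2.]])  # shape (2, 1)
product = matrix1 @ matrix2       # equivalent to np.matmul(matrix1, matrix2)
print(product.shape, product)     # (1, 1) [[12.]]
```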

14. Create a Session object and call its run method to execute the graph:

```python
with tf.Session() as sess:
    result = sess.run(product)
    print(result)
```

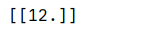

15. Append the code from steps 12, 13, and 14 to basic-operations.py, then run basic-operations.py. The combined output is:

The complete code (TensorFlow 2.x, using `tf.compat.v1`) is:

```python
import tensorflow as tf
import os

os.environ["CUDA_VISIBLE_DEVICES"] = "0"
# TensorFlow 2.x runs eagerly by default; switch to graph mode so Session works
tf.compat.v1.disable_eager_execution()

# Constant nodes
a = tf.constant(2)
b = tf.constant(3)
with tf.compat.v1.Session() as sess:
    print("a:%i" % sess.run(a), "b:%i" % sess.run(b))
    print("Addition with constants: %i" % sess.run(a + b))
    print("Multiplication with constant:%i" % sess.run(a * b))

# Placeholders, fed at run time
a = tf.compat.v1.placeholder(tf.int16)
b = tf.compat.v1.placeholder(tf.int16)
add = tf.add(a, b)
mul = tf.multiply(a, b)
with tf.compat.v1.Session() as sess:
    print("Addition with variables: %i" % sess.run(add, feed_dict={a: 2, b: 3}))
    print("Multiplication with variables: %i" % sess.run(mul, feed_dict={a: 2, b: 3}))

# Matrix multiplication: (1x2) x (2x1) -> (1x1)
matrix1 = tf.constant([[3., 3.]])
matrix2 = tf.constant([[2.], [2.]])
product = tf.matmul(matrix1, matrix2)
with tf.compat.v1.Session() as sess:
    result = sess.run(product)
    print(result)
```

III. Linear Regression with TensorFlow

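3. Open linear_regression.py, write the TensorFlow linear regression code, and import the required modules. As in Section II, the step snippets use the TensorFlow 1.x API. The TensorFlow 2.x (`tf.compat.v1`) version of the complete code is given at the end of this section.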

```python
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt
import os

os.environ["CUDA_VISIBLE_DEVICES"] = "0"
```

4. Set the training parameters: learning_rate=0.01, training_epochs=1000, display_step=50. display_step controls how often progress is printed (every 50 epochs).

```python
learning_rate = 0.01
training_epochs = 1000
display_step = 50
```
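With these values, the training loop runs 1000 epochs and prints one log line every 50 epochs. That gives 20 log lines, at epochs 50, 100, ..., 1000. The condition used later in the loop, `(epoch+1) % display_step == 0`, can be checked on its own:

```python
training_epochs = 1000
display_step = 50

# epoch counts from 0, so (epoch + 1) is the 1-based epoch number that gets logged
logged = [epoch + 1 for epoch in range(training_epochs) if (epoch + 1) % display_step == 0]
print(len(logged), logged[0], logged[-1])  # 20 50 1000
```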

5. Create the training data:

```python
train_X = np.asarray([3.3, 4.4, 5.5, 6.71, 6.93, 4.168, 9.779, 6.182, 7.59, 2.167,
                      7.042, 10.791, 5.313, 7.997, 5.654, 9.27, 3.1])
train_Y = np.asarray([1.7, 2.76, 2.09, 3.19, 1.694, 1.573, 3.366, 2.596, 2.53, 1.221,
                      2.827, 3.465, 1.65, 2.904, 2.42, 2.94, 1.3])
n_samples = train_X.shape[0]
```
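The model is a straight line, so the least-squares optimum for this data can also be computed in closed form. That optimum is a reference for the W and b that gradient descent should move toward. The sketch below uses NumPy's `np.linalg.lstsq`. It is an addition for checking results and is not part of the original tutorial.

```python
import numpy as np

train_X = np.asarray([3.3, 4.4, 5.5, 6.71, 6.93, 4.168, 9.779, 6.182, 7.59, 2.167,
                      7.042, 10.791, 5.313, 7.997, 5.654, 9.27, 3.1])
train_Y = np.asarray([1.7, 2.76, 2.09, 3.19, 1.694, 1.573, 3.366, 2.596, 2.53, 1.221,
                      2.827, 3.465, 1.65, 2.904, 2.42, 2.94, 1.3])
n_samples = train_X.shape[0]  # 17 samples

# Design matrix with columns [x, 1], so that A @ [W, b] approximates train_Y
A = np.column_stack([train_X, np.ones(n_samples)])
(W_opt, b_opt), *_ = np.linalg.lstsq(A, train_Y, rcond=None)

# Same cost as the tutorial: sum of squared errors divided by 2n
best_cost = np.sum((W_opt * train_X + b_opt - train_Y) ** 2) / (2 * n_samples)
print("W =", W_opt, "b =", b_opt, "cost =", best_cost)
```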

6. Build the computation graph. Define placeholders (not Variables) X and Y for the inputs and targets:

```python
X = tf.placeholder("float")
Y = tf.placeholder("float")
```

7. Set the model's initial weights (random values):

```python
W = tf.Variable(np.random.randn(), name="weight")
b = tf.Variable(np.random.randn(), name="bias")
```

8. Build the linear regression model pred = X·W + b:

```python
pred = tf.add(tf.multiply(X, W), b)
```

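9. Define the loss function: the sum of squared errors divided by 2n, which is half the mean squared error. The factor 1/2 cancels the 2 that appears when the square is differentiated.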

```python
cost = tf.reduce_sum(tf.pow(pred - Y, 2)) / (2 * n_samples)
```
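Written out with n = n_samples, the loss from step 9 and the gradients that gradient descent follows are:

```latex
\begin{aligned}
J(W, b) &= \frac{1}{2n} \sum_{i=1}^{n} \left( W x_i + b - y_i \right)^2 \\
\frac{\partial J}{\partial W} &= \frac{1}{n} \sum_{i=1}^{n} \left( W x_i + b - y_i \right) x_i \\
\frac{\partial J}{\partial b} &= \frac{1}{n} \sum_{i=1}^{n} \left( W x_i + b - y_i \right)
\end{aligned}
```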

10. Minimize the loss with gradient descent to find the optimal parameters:

```python
optimizer = tf.train.GradientDescentOptimizer(learning_rate).minimize(cost)
```
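In the training loop, each `sess.run(optimizer, feed_dict={X: x, Y: y})` feeds a single sample, so the optimizer takes one gradient descent step per sample. The cost still divides by 2·n_samples in that case, so each step is scaled by learning_rate/n. Under that reading, the update can be sketched in plain NumPy as follows. This is an illustrative re-implementation, not TensorFlow's code, and `half_mse` and `sgd_fit` are hypothetical names.

```python
import numpy as np


def half_mse(xs, ys, w, b):
    """Cost from step 9: sum of squared errors divided by 2n."""
    return np.sum((w * xs + b - ys) ** 2) / (2 * len(xs))


def sgd_fit(xs, ys, w, b, learning_rate=0.01, epochs=1000):
    """Per-sample gradient descent: one optimizer step per (x, y) pair."""
    n = len(xs)
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            err = w * x + b - y
            # Gradients of (w*x + b - y)**2 / (2n); both parameters use the same err,
            # i.e. they are updated simultaneously from the current point
            w, b = w - learning_rate * err * x / n, b - learning_rate * err / n
    return w, b


train_X = np.asarray([3.3, 4.4, 5.5, 6.71, 6.93, 4.168, 9.779, 6.182, 7.59, 2.167,
                      7.042, 10.791, 5.313, 7.997, 5.654, 9.27, 3.1])
train_Y = np.asarray([1.7, 2.76, 2.09, 3.19, 1.694, 1.573, 3.366, 2.596, 2.53, 1.221,
                      2.827, 3.465, 1.65, 2.904, 2.42, 2.94, 1.3])

rng = np.random.default_rng(0)
w0, b0 = rng.standard_normal(), rng.standard_normal()  # random start, as in step 7
w, b = sgd_fit(train_X, train_Y, w0, b0)
print("start cost:", half_mse(train_X, train_Y, w0, b0))
print("final cost:", half_mse(train_X, train_Y, w, b), "W=", w, "b=", b)
```

The learning rate is small and the per-sample steps are divided by n, so convergence is slow in the direction of b. After 1000 epochs the parameters may still differ noticeably from the closed-form optimum.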

11. Create the operation that initializes all variables:

```python
init = tf.global_variables_initializer()
```

12. Create a Session object with tf.Session(). A session encapsulates the state and control of the TensorFlow runtime. Run the initializer first:

```python
with tf.Session() as sess:
    sess.run(init)
```

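13. Call the run method of the session object sess to execute the graph, which trains the model. This code goes inside the with block: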

```python
    # Fit all training data
    for epoch in range(training_epochs):
        for (x, y) in zip(train_X, train_Y):
            sess.run(optimizer, feed_dict={X: x, Y: y})

        # Display logs per epoch step
        if (epoch + 1) % display_step == 0:
            c = sess.run(cost, feed_dict={X: train_X, Y: train_Y})
            print("Epoch:", '%04d' % (epoch + 1), "cost=", "{:.9f}".format(c),
                  "W=", sess.run(W), "b=", sess.run(b))
```

14. Print the cost of the trained model (still inside the with block):

```python
    training_cost = sess.run(cost, feed_dict={X: train_X, Y: train_Y})
    print("Train cost=", training_cost, "W=", sess.run(W), "b=", sess.run(b))
```

15. Visualize the final linear model. This code also stays inside the with block, because it calls sess.run:

```python
    plt.plot(train_X, train_Y, 'ro', label='Original data')
    plt.plot(train_X, sess.run(W) * train_X + sess.run(b), label="Fitting line")
    plt.legend()
    plt.show()
```

16. The complete code (TensorFlow 1.x API) is:

```python
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt
import os

os.environ["CUDA_VISIBLE_DEVICES"] = "0"

# Parameters
learning_rate = 0.01
training_epochs = 1000
display_step = 50

# Training data
train_X = np.asarray([3.3, 4.4, 5.5, 6.71, 6.93, 4.168, 9.779, 6.182, 7.59, 2.167,
                      7.042, 10.791, 5.313, 7.997, 5.654, 9.27, 3.1])
train_Y = np.asarray([1.7, 2.76, 2.09, 3.19, 1.694, 1.573, 3.366, 2.596, 2.53, 1.221,
                      2.827, 3.465, 1.65, 2.904, 2.42, 2.94, 1.3])
n_samples = train_X.shape[0]

# tf Graph Input
X = tf.placeholder("float")
Y = tf.placeholder("float")

# Set model weights
W = tf.Variable(np.random.randn(), name="weight")
b = tf.Variable(np.random.randn(), name="bias")

# Construct a linear model
pred = tf.add(tf.multiply(X, W), b)

# Half mean squared error
cost = tf.reduce_sum(tf.pow(pred - Y, 2)) / (2 * n_samples)

# Gradient descent
optimizer = tf.train.GradientDescentOptimizer(learning_rate).minimize(cost)

# Initialize the variables
init = tf.global_variables_initializer()

# Start training
with tf.Session() as sess:
    sess.run(init)

    # Fit all training data
    for epoch in range(training_epochs):
        for (x, y) in zip(train_X, train_Y):
            sess.run(optimizer, feed_dict={X: x, Y: y})

        # Display logs per epoch step
        if (epoch + 1) % display_step == 0:
            c = sess.run(cost, feed_dict={X: train_X, Y: train_Y})
            print("Epoch:", '%04d' % (epoch + 1), "cost=", "{:.9f}".format(c),
                  "W=", sess.run(W), "b=", sess.run(b))

    print("Optimization Finished!")
    training_cost = sess.run(cost, feed_dict={X: train_X, Y: train_Y})
    print("Train cost=", training_cost, "W=", sess.run(W), "b=", sess.run(b))

    # Graphic display
    plt.plot(train_X, train_Y, 'ro', label='Original data')
    plt.plot(train_X, sess.run(W) * train_X + sess.run(b), label="Fitting line")
    plt.legend()
    plt.show()
```

17. When the code is complete, right-click inside linear_regression.py and choose Run 'linear_regression' to execute the file.

18. The output is:

The complete code for TensorFlow 2.x (using `tf.compat.v1`) is:

```python
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt
import os

os.environ["CUDA_VISIBLE_DEVICES"] = "0"
# Graph mode is required for placeholders and Session under TensorFlow 2.x
tf.compat.v1.disable_eager_execution()

# Parameters
learning_rate = 0.01
training_epochs = 1000
display_step = 50

# Training data
train_X = np.asarray([3.3, 4.4, 5.5, 6.71, 6.93, 4.168, 9.779, 6.182, 7.59, 2.167,
                      7.042, 10.791, 5.313, 7.997, 5.654, 9.27, 3.1])
train_Y = np.asarray([1.7, 2.76, 2.09, 3.19, 1.694, 1.573, 3.366, 2.596, 2.53, 1.221,
                      2.827, 3.465, 1.65, 2.904, 2.42, 2.94, 1.3])
n_samples = train_X.shape[0]

# Graph inputs
X = tf.compat.v1.placeholder("float")
Y = tf.compat.v1.placeholder("float")

# Model weights, randomly initialized
W = tf.Variable(np.random.randn(), name="weight")
b = tf.Variable(np.random.randn(), name="bias")

# Linear model, half mean squared error, and optimizer
pred = tf.add(tf.multiply(X, W), b)
cost = tf.reduce_sum(tf.pow(pred - Y, 2)) / (2 * n_samples)
optimizer = tf.compat.v1.train.GradientDescentOptimizer(learning_rate).minimize(cost)

init = tf.compat.v1.global_variables_initializer()

with tf.compat.v1.Session() as sess:
    sess.run(init)

    # Fit all training data
    for epoch in range(training_epochs):
        for (x, y) in zip(train_X, train_Y):
            sess.run(optimizer, feed_dict={X: x, Y: y})

        # Display logs per epoch step
        if (epoch + 1) % display_step == 0:
            c = sess.run(cost, feed_dict={X: train_X, Y: train_Y})
            print("Epoch:", '%04d' % (epoch + 1), "cost=", "{:.9f}".format(c),
                  "W=", sess.run(W), "b=", sess.run(b))

    print("Optimization Finished!")
    training_cost = sess.run(cost, feed_dict={X: train_X, Y: train_Y})
    print("Train cost=", training_cost, "W=", sess.run(W), "b=", sess.run(b))

    # Graphic display
    plt.plot(train_X, train_Y, 'ro', label='Original data')
    plt.plot(train_X, sess.run(W) * train_X + sess.run(b), label="Fitting line")
    plt.legend()
    plt.show()
```